androidlad

Posts: 1,215

Posts posted by androidlad

9 hours ago, webrunner5 said:

I say you are wrong. Lower resolution cameras have less cumulative read noise due to fewer pixels. If there are fewer pixels they are collecting more light than an equal-sized high-pixel-count one. If not true you would never have the same light gathering, so no equal ISO or aperture to, say, an A7R. They would have completely different base ISOs. And read noise is created in the amp also. So it is not a sensor-only problem, and that's the main reason why the higher the ISO, ergo the more you turn up the amp, the more read noise.

Please educate yourself: https://www.dpreview.com/videos/7940373140/dpreview-tv-why-lower-resolution-sensors-are-not-better-in-low-light

-

20 minutes ago, webrunner5 said:

I think you are thinking of the photo side that the DPR article covered, not the video side. The A7S does have fewer pixels that are bigger. Your math is all wrong. The lower the MP count, the bigger the pixels have to be to fill the space.

Low pixel count cameras tend to be cleaner at high ISO because of the lower cumulative read noise. It has nothing to do with bigger pixels collecting more light.

However, with the latest sensor designs, this doesn't always apply anymore. The A1, for example, despite having 50MP, is slightly better in low light when shooting video than the A7S III.

-

4 hours ago, webrunner5 said:

Or have larger pixels for light gathering such as the Sony A7s series.

Larger pixels don't gather more light.

All FF sensors are the same size, 36 mm × 24 mm, so they gather the same amount of light at the same f-number and shutter speed.
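To make the cumulative read noise argument concrete, here is a minimal Python sketch. It assumes a fixed patch of sensor area receives a fixed number of photons, and that the patch is read out as `n_pixels` pixels and summed back together (roughly what a downscale does). The photon count and read-noise values are hypothetical, `region_snr` is an illustrative name, and the model ignores dark current, fixed pattern noise and everything except shot noise and read noise.

```python
import math


def region_snr(photons, n_pixels, read_noise_e):
    """SNR of a fixed patch of sensor area read as n_pixels and summed.

    Shot noise depends only on the total light (Poisson: variance == mean),
    so it is the same however the area is divided. Each pixel readout adds
    independent read noise, so the read-noise variances add up.
    """
    shot_var = photons
    read_var = n_pixels * read_noise_e ** 2
    return photons / math.sqrt(shot_var + read_var)


# Same patch of sensor area, 100 photons (low light), hypothetical read noise in e-.
print(region_snr(100, 1, 3.0))  # one large pixel
print(region_snr(100, 4, 3.0))  # four small pixels, same read noise each
print(region_snr(100, 4, 1.5))  # four small pixels with half the read noise
```

With the same per-pixel read noise, the four-pixel split comes out slightly worse in this low-light case; if each small pixel has half the read noise, the results match exactly, which is one way a newer high-resolution sensor can close the gap.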

-

Fuji X-H2S

In: Cameras

3 hours ago, TomTheDP said:

As long as there is some mode that can be used as a tripod replacement, for short periods of course, then I am good with it.

My main disappointment which will keep me from buying it is in 4k 60p it goes down to 12 bit readout vs 14 in 24-30p.

The 14-bit readout only gets you ~0.3 stops of additional DR compared to 12-bit.

Also, the 14-bit readout has 10 ms of rolling shutter vs 5.6 ms in 12-bit.
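Why extra bits buy so little DR can be sketched with a toy noise model: the noise floor is read noise plus uniform quantization noise (one LSB divided by √12), added in quadrature, and DR in stops is log2 of full well over that floor. The full well (40,000 e-) and read noise (3 e-) below are hypothetical, `dr_stops` is an illustrative name, and the model ignores that real 12-bit and 14-bit readout modes may also differ in ADC noise and speed.

```python
import math


def dr_stops(full_well_e, read_noise_e, bits):
    """Toy engineering DR: log2(full well / noise floor).

    The noise floor is read noise plus uniform quantization noise
    (LSB / sqrt(12)), added in quadrature. ADC full scale is assumed to map
    exactly onto the full well.
    """
    lsb_e = full_well_e / 2 ** bits  # electrons per digital number
    quant_noise_e = lsb_e / math.sqrt(12)
    noise_floor_e = math.sqrt(read_noise_e ** 2 + quant_noise_e ** 2)
    return math.log2(full_well_e / noise_floor_e)


# Hypothetical sensor: 40,000 e- full well, 3 e- read noise.
dr12 = dr_stops(40_000, 3.0, 12)
dr14 = dr_stops(40_000, 3.0, 14)
print(f"12-bit {dr12:.2f} stops, 14-bit {dr14:.2f} stops, gain {dr14 - dr12:.2f}")
```

With these made-up numbers the 14-bit mode gains well under one stop, because read noise rather than quantization dominates the floor; with zero read noise the gain would be the full two stops.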

-

Fuji X-H2S

In: Cameras

12 hours ago, newfoundmass said:

Maybe photographers will buy this camera, but it really seems like it's more video centric. I think they think it'll appeal to the hybrid shooter and video focused user more than photo. I could be wrong though!

X-H2S is a speed-centric camera: it has the very first stacked APS-C sensor, which is also the fastest, and that benefits both stills (action, sports, wildlife, etc.) and video.

-

Fuji X-H2S

In: Cameras

-

6 hours ago, TrueIndigo said:

I've quickly gone through the Z9 PDF user manual but can't find it -- are there film ratio marking options available in the display? Considering the headline video specs of this camera, I'm surprised if Nikon still expects you to put masking tape across the rear screen for 2.39:1 guidelines in 2022.

It's called Grid Type in Z9 settings, there's one each for stills and video:

https://onlinemanual.nikonimglib.com/z9/en/15_menu_guide_04_g11.html


-

Fuji XH-2/S

In: Cameras

The two new X-H cameras both use Sony Semicon sensors. They incorporate some of the latest technology from the IMX610 (A1), including DBI and Sigma-Delta ADC.


-

Panasonic GH6

In: Cameras

34 minutes ago, kye said:

So, can you get the 16-bit files out of the camera and see them in an NLE? Or just the 12-bit files?

There's nothing stopping them from reading the data off the sensor, changing it in whatever ways they want without debayering it or anything like that, and then saving it to a card. A talented high-school student could write an algorithm to do that without any problems at all, so it's not impossible.

When light goes into a camera it will go through the optical filters (eg, OLPF) and the Bayer filter (the first element of the colour science, as these filters determine the spectral response of the R, G, and B photosites). Then it gets converted from analog to digital, and then it's data. There's very little opportunity for colour science tweaks there. I've looked at their 709 LUT and it doesn't seem to be there either.

I'm seeing things in the colour science of the footage, but I'm just not sure where they are being applied in the signal path, and in-camera seems to be the only place I haven't looked.
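The signal path described above (colour filter array, then analog-to-digital conversion) can be sketched as a toy model in Python. It assumes an RGGB Bayer layout, treats each filter as passing exactly one of the R, G, B values (real CFA dyes have overlapping spectral responses), skips the OLPF, and quantizes a normalized 0 to 1 signal to an N-bit code. The names `mosaic` and `quantize` are hypothetical.

```python
BAYER_RGGB = (("R", "G"), ("G", "B"))  # assumed 2x2 colour filter layout
CHANNEL = {"R": 0, "G": 1, "B": 2}


def mosaic(rgb_image):
    """Keep one channel per photosite, as a Bayer CFA would."""
    return [
        [pixel[CHANNEL[BAYER_RGGB[y % 2][x % 2]]] for x, pixel in enumerate(row)]
        for y, row in enumerate(rgb_image)
    ]


def quantize(samples, bits):
    """Toy ADC: map normalized 0..1 values to integer codes, clipping at full scale."""
    max_code = (1 << bits) - 1
    return [[min(max_code, max(0, round(v * max_code))) for v in row] for row in samples]


scene = [[(1.0, 0.25, 0.0)] * 2 for _ in range(2)]  # a flat orange 2x2 patch
raw = quantize(mosaic(scene), bits=12)
print(raw)  # [[4095, 1024], [1024, 0]]
```

After this point the data is just one integer per photosite, which is why any colour science beyond the CFA's spectral response has to be applied later in the pipeline.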

It would be amazing if we were to get that tech in affordable cameras. It will give better DR and may prompt even higher quality files (i.e. 12-bit LOG is way better than 12-bit RAW).

It's not a small guy vs corp thing at all.

Most of the people pointing Alexas or REDs at something have control of that something.

Most of the hours of footage captured by those cameras will be properly exposed at native ISO, will be in high-CRI single-temperature lighting, and will be pointed at something where the entire contents of the frame are within certain tolerances (eg, lighting ratios and no out-of-gamut colours, etc).

Most of the people pointing sub-$2K cameras at something do not have total control of that something, and many even have no control over that something.

A lot of the hours of footage captured by those cameras will not be properly exposed at native ISO (or wouldn't be at a 180° shutter), won't be in high-CRI single-temperature lighting, and won't be pointed at something where the entire contents of the frame are within certain tolerances (eg, lighting ratios and no out-of-gamut colours, etc).

You really notice how well your camera/codec handles mixed lighting when you arrive somewhere where the lighting looks completely neutral, then look through the viewfinder and see this:

This was a shoot I had a lot of trouble grading but managed to make at least passable, by my standards anyway. There are other shots that I've tried for years to grade and haven't been able to, even by automating grades, because things moved between light sources.

Unfortunately that's the reality for most low-cost camera owners 😕

The difficult situations I find myself in are:

- low light / high ISO

- mixed-lighting

- high DR

and when I adjust shots from the above to have the proper WB and exposure and run NR to remove ISO noise, the footage just looks so disappointing.

Resolution can't help with any of those. I've shot in 5K, 4K, 3.3K and 1080p, and it's rare that the "difficult situation" I find myself in would be helped by having extra resolution. I appreciate that my camera downsamples in-camera, which reduces noise in-camera, and the 5K sensor on the GH5 allows me to shoot in downsampled 1080p and also engage the 2X digital zoom and still have it downsampling (IIRC it's taking that from something like 2.5K) but I'd swap that for a lower resolution sensor with better low-light and more robust colour science without even having to think about it.

They care about quality over quantity, and realise that one comes at the expense of the other.

This is literally what I've been trying to explain to you for (what seems like) weeks now.

Interesting stuff.

In that thread, the post you linked to from @androidlad says:

This idea of taking frames "a few milliseconds apart" sounds like taking two exposures where the exposure time doesn't overlap. Assuming that this is the case then yeah, motion artefacts are the downside. Of course, with drones it's less of a risk as things are often further away and unless you put an ND on it the SS will be very short, so motion blur is negligible anyway.

We definitely want two readouts from the same exposure for normal film-making.

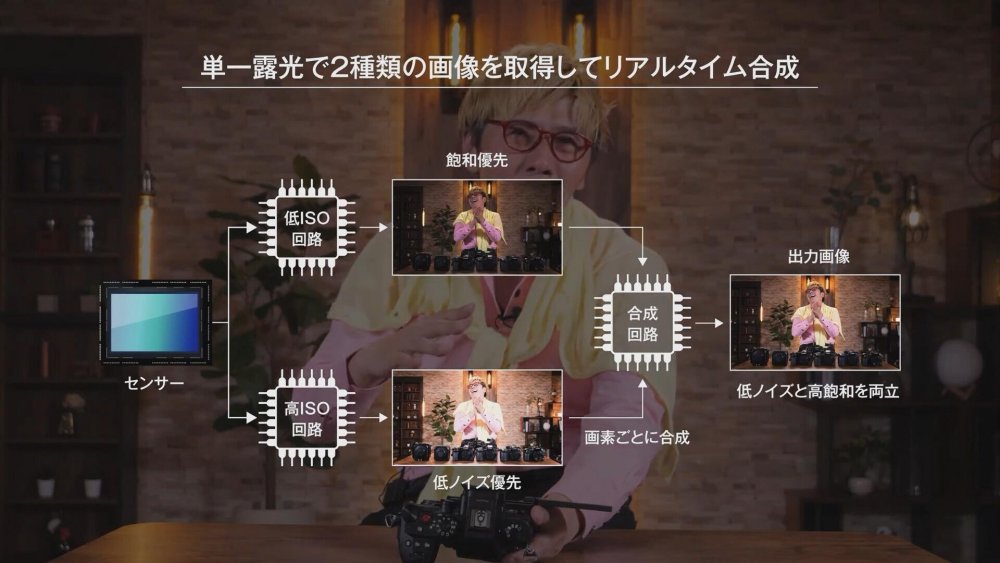

GH6 will use single-exposure dual-gain HDR.
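Because both readouts come from the same exposure, merging them is mostly a per-pixel selection with no motion mismatch to worry about. The Python sketch below is a simplified illustration, not Panasonic's actual pipeline (which the post doesn't describe): it assumes the gain ratio between the two paths is known, uses the high-gain readout wherever it is below its clip code, and falls back to the low-gain readout in the highlights. `merge_dual_gain` and its parameters are hypothetical names.

```python
def merge_dual_gain(high_gain, low_gain, gain_ratio, clip_code):
    """Merge two readouts of the *same* exposure into one linear signal.

    high_gain, low_gain: per-pixel codes from the two readouts (same length).
    gain_ratio: how much larger the high-gain code is for the same signal.
    clip_code: code at which the high-gain path saturates.
    The result is expressed in low-gain units.
    """
    merged = []
    for hi, lo in zip(high_gain, low_gain):
        if hi < clip_code:
            # Shadows/midtones: high gain keeps read noise small relative to signal.
            merged.append(hi / gain_ratio)
        else:
            # Highlights: the high-gain path clipped, so use the low-gain readout.
            merged.append(float(lo))
    return merged


print(merge_dual_gain([40, 400, 4095], [10, 100, 2000], gain_ratio=4.0, clip_code=4095))
```

A hard switch like this can show a visible seam where the two paths have different noise; real implementations typically blend across a transition region instead.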

-

Again, the root cause is indeed the X-Trans CFA. Fuji uses a demosaic algorithm for video/JPEG that prioritises speed and efficiency in a small camera body, and this leads to colour smearing. It no longer happens with X-Trans RAW stills because modern RAW processing software has much more refined algorithms for X-Trans.

-

Nice video, inquisitive as always.

This is mostly due to the nature of X-Trans: compared to traditional Bayer sensors, there are far more green photosites than red and blue, hence the less detailed Cr channel. The benefit is that it naturally reduces moire and gives slightly cleaner luminance detail (Y channel).

You won't notice this on videos shot on Fuji GFX cameras which use Bayer sensor.
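The photosite proportions behind that explanation can be counted directly from one repeating tile of each CFA. This Python sketch uses the standard 2x2 RGGB Bayer tile and a commonly cited 6x6 X-Trans layout (as used in open-source raw decoders); treat the exact X-Trans arrangement as an assumption, although the 20:8:8 green/red/blue ratio is what matters here.

```python
from collections import Counter

# 2x2 Bayer tile and a commonly cited 6x6 X-Trans tile (exact layout assumed).
BAYER = ["RG", "GB"]
XTRANS = ["GGRGGB", "GGBGGR", "BRGRBG", "GGBGGR", "GGRGGB", "RBGBRG"]


def channel_fractions(tile):
    """Fraction of photosites under each colour filter in one repeating tile."""
    counts = Counter("".join(tile))
    total = sum(counts.values())
    return {c: counts[c] / total for c in "RGB"}


for name, tile in (("Bayer", BAYER), ("X-Trans", XTRANS)):
    f = channel_fractions(tile)
    print(name, {c: round(v, 3) for c, v in f.items()})
# Bayer {'R': 0.25, 'G': 0.5, 'B': 0.25}
# X-Trans {'R': 0.222, 'G': 0.556, 'B': 0.222}
```

Red and blue each drop from 25% to about 22% of the photosites, so the red-driven Cr channel is sampled more sparsely, and irregularly, than on a Bayer sensor.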

-

Olympus OM-1

In: Cameras

5 minutes ago, hoodlum said:

According to the article OM is licensing AI-Denoise from Topaz.

In the OM software "OM Workspace" yes, but not internally in-camera.

-

Olympus OM-1

In: Cameras

It seems OM1 has really good internal NR, applied at RAW debayer level:

https://www.geh-photo.org/post/die-om-1-im-praxistest?continueFlag=143e81367b1d01290587846ff81073b9

Left EM1X, right OM1

OM1 ISO40000, yes forty thousand.

-

Please read the full article:

Key takeaways: Flare light was not an issue with the high-quality DSLR/mirrorless lenses we tested in the past, but it has become a major factor limiting the performance of recent low-cost lenses intended for the automotive or security industries. We have seen examples of how flare light can improve traditional DR measurements while degrading actual camera DR.

Our approach to resolving this issue is to limit quality-based DR measurements (the range of densities where SNR ≥ 20dB for high quality through SNR ≥ 0dB for low quality) to the slope-based DR. This works because, for patches beyond the slope-based limit (where the slope of log pixel level vs. log exposure drops below 0.075 of the maximum slope):

Contrast is too low for image features to be clearly visible.

Signal is dominated by flare light, which washes out real signals from the test chart; i.e., the “signal” is an artifact, not the real deal.

Limiting quality-based DR in this way significantly improves measurement accuracy, and perhaps more importantly, can help prevent inferior, low-quality lenses being accepted for applications critical to automotive safety or security.
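The slope-based limit described in those takeaways can be illustrated with a simplified Python sketch. This is not Imatest's implementation: it assumes chart patches ordered brightest to darkest with known linear exposure, mean pixel level and SNR in dB, computes the local slope of log pixel level vs log exposure between neighbouring patches, stops at the first patch pair where that slope drops below 0.075 of the maximum, and only then applies the SNR threshold. The function name and the chart data are made up, with the darkest patches lifted by flare.

```python
import math


def slope_limited_dr(exposures, levels, snr_db, snr_threshold_db=20.0, slope_fraction=0.075):
    """Quality-based DR (in stops) limited to the slope-based DR range.

    exposures, levels, snr_db: per-patch values, brightest patch first.
    """
    log_e = [math.log10(e) for e in exposures]
    log_p = [math.log10(p) for p in levels]
    # Local slope of log pixel level vs log exposure between neighbouring patches.
    slopes = [
        (log_p[i + 1] - log_p[i]) / (log_e[i + 1] - log_e[i])
        for i in range(len(exposures) - 1)
    ]
    max_slope = max(slopes)
    # Slope-based limit: last patch before the slope collapses (flare-dominated beyond it).
    limit = len(exposures) - 1
    for i, s in enumerate(slopes):
        if s < slope_fraction * max_slope:
            limit = i
            break
    # Quality-based limit, searched only inside the slope-based range.
    darkest = 0
    for i in range(limit + 1):
        if snr_db[i] < snr_threshold_db:
            break
        darkest = i
    return math.log2(exposures[0] / exposures[darkest])


exposures = [1, 0.5, 0.25, 0.125, 0.0625, 0.03125]  # one stop per patch
levels = [1000, 500, 250, 125, 70, 69]  # darkest patches lifted by flare
snr_db = [40, 37, 34, 31, 28, 30]  # flare even "improves" the last patch's SNR
print(slope_limited_dr(exposures, levels, snr_db))  # 4.0
print(slope_limited_dr(exposures, levels, snr_db, slope_fraction=0.0))  # 5.0, no slope limit
```

Without the slope limit the flare-lifted patch counts as usable and the measurement reports an extra stop, which is exactly the inflated DR the article warns about.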

-

Get a used TITAN X or XP from eBay: https://www.ebay.com/sch/i.html?_from=R40&_trksid=p2047675.m570.l1313&_nkw=nvidia+titan&_sacat=0

-

2 hours ago, Eric Calabros said:

These rumors have no reliable source, but...

Sony A1 also uses a Sigma-Delta ADC and consumes almost 2.8 W at 44 fps, 14-bit.

The sensor is capable of outputting 44 fps, but the A1 is limited to 20 fps at 14-bit, while the Venice 2 8K will run the sensor at full power.

-

First glimpse of Canon's true flagship mirrorless slated for 2022:

54MP, 9000 × 6000 resolution with 4 µm pixel size

Quad Pixel AF

1/450s sensor readout (2.2ms rolling shutter)

Dual-15bit Sigma-Delta ADC

Fully electronic shutter with no mechanical component (same as Z9).
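The "1/450s sensor readout (2.2ms rolling shutter)" figure is a simple reciprocal, and it also gives a rough per-row timing and skew. This Python sketch assumes all 6000 rows of the quoted 9000 × 6000 sensor are read at a constant rate within that time, and uses a hypothetical subject that crosses the 9000-pixel frame width in one second; the function names are illustrative.

```python
def readout_ms(denominator):
    """Readout time in milliseconds for a readout quoted as 1/denominator s."""
    return 1000.0 / denominator


def line_time_us(readout_s, rows):
    """Approximate time to read one row, assuming a constant row rate."""
    return readout_s / rows * 1e6


def skew_px(speed_px_per_s, readout_s):
    """Horizontal offset between top and bottom rows for a subject moving sideways."""
    return speed_px_per_s * readout_s


readout_s = 1 / 450
print(f"{readout_ms(450):.2f} ms")  # 2.22 ms
print(f"{line_time_us(readout_s, 6000):.3f} us per row")  # 0.370 us per row
print(f"{skew_px(9000, readout_s):.1f} px skew")  # 20.0 px skew
```

The same conversion gives 4 ms for a 1/250 s readout; halving the readout time halves the skew for the same motion.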


-

54 minutes ago, sanveer said:

Curiously, the fixed pattern noise that appears static across frames is something I noticed in one of the Star Wars films (one of the last two). I thought it was a bad case of adding film grain after shooting on a digital camera. Also, strangely, it was during one of the daytime shots, and not the low-light ones, like Sony explains in their reasoning for not including PDAF.

Similarly, if you look really hard you can see the "stitching lines" on some shots showing skies in Miracle on the Hudson, shot on Alexa 65, because the sensor is "stitched" using three A2X reticles.

-


The sensor is based on the IMX610 from the α1, but with 16 SLVS-EC 4.6Gbps lanes instead of 8. The sensor itself consumes over 5W of power.

There's no DRAM. The ADC operates at 14-bit with a 1/250s readout at all times (meaning 4ms rolling shutter in all recording modes).

Later paid firmware updates may unlock 8.6K 3:2 open gate up to 72fps, 8.6K 2.39:1 up to 120fps.
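A back-of-the-envelope check of why the lane count matters: compare the raw pixel payload of a mode against the link capacity. This Python sketch assumes 8.6K 3:2 means 8640 × 5760 photosites (the A1's stills resolution), 14-bit samples, and an 80% usable payload per SLVS-EC lane (an 8b/10b-style coding assumption), and it ignores blanking and packet overhead, so treat the result as an order-of-magnitude estimate. The function names are hypothetical.

```python
def required_gbps(width, height, bits, fps):
    """Raw pixel payload in Gbit/s, ignoring blanking and protocol overhead."""
    return width * height * bits * fps / 1e9


def link_gbps(lanes, lane_gbps, coding_efficiency=0.8):
    """Usable link payload; 0.8 assumes 8b/10b-style line coding."""
    return lanes * lane_gbps * coding_efficiency


need = required_gbps(8640, 5760, 14, 72)  # assumed 8.6K 3:2 open gate, 14-bit, 72 fps
print(f"needed   {need:.1f} Gbps")  # needed   50.2 Gbps
print(f"8 lanes  {link_gbps(8, 4.6):.1f} Gbps")  # 8 lanes  29.4 Gbps
print(f"16 lanes {link_gbps(16, 4.6):.1f} Gbps")  # 16 lanes 58.9 Gbps
```

Under these assumptions a 72 fps open-gate mode needs more than eight lanes can carry but fits within sixteen.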


-

8.6K 3:2 30FPS Full-Frame

8.2K 17:9 60FPS Full-Frame

5.8K 6:5 Anamorphic 48FPS Super 35

5.8K 17:9 90FPS Super 35

It shares the same 8.6K IMX610 sensor (but without DRAM) with the Sony A1 mirrorless camera.

-

DJI Mavic 3

In: Cameras

-

Atomos also needs to go fanless; it's way too audible.

Z9 did it.

Fuji X-H2S

In: Cameras
