Leaderboard

Popular Content

Showing content with the highest reputation on 11/07/2017 in all areas

-

ARRI Alexa cheap enough to own now?

AaronChicago reacted to Dave Maze for a topic

I was curious to see what used ARRI Alexas were going for, and I was shocked to see that a Classic EV model goes for $8k-$10k on average. Is this camera using the same sensor technology we have in the modern ARRIs? Is this a practical thing to get into? $10K is very doable and reasonable... and for an ARRI... gosh darn it.... Why keep comparing everything to a standard when you can just OWN the standard? https://www.ebay.com/itm/ARRI-Alexa-Classic-EV-High-Speed-Camera-TESTED-AND-EXCELLENT/302511937364?hash=item466f1dd754:g:jb8AAOSwFnJZeina

1 point

-

What I'm curious about, but still haven't found an answer to, is whether SxS adapters for SD (or XQD) cards work in an Arri Alexa. If they are compatible (I use SD cards in an SxS adapter in my Sony PMW-F3), that would be a tremendous cost saving when buying a secondhand Arri Alexa.

1 point

-

@dbp I would be happy to deal with the sluggish workflow for that DANG SEXAYYY IMAGE! I shoot 90% on sticks anyway! I'm going to be digging around for one. An Arri Alexa would be worth selling my 1DC for! Lol. Leitax makes a great Canon EF adapter for the Alexa too, which would be perfect for my CONTAX Zeiss kit; the lenses already have Leitax mounts on them. http://www.leitax.com/conversion/Cine/Alexa/index.html DANG... imagine my YouTube content shot on an ALEXA lol. MKBHD on steroids. Had a conversation with Roman Alaivi about his Alexa on Vimeo... insane.

1 point

-

I am really hoping the S3 will have a 10-bit codec. I don't want to have to switch systems.

1 point

-

Since this thread has already wandered: Jon, do you have access to Netflix or Amazon? If so, I think it will be quite easy for you to spot the difference with 4K HDR material. The Ridiculous Six was shot on film and supposedly mastered in 4K Dolby Vision. Beautiful, natural-looking imagery. Another thing to keep in mind is that your LG is upscaling HD automatically, as well as applying various detail, sharpening, contrast enhancement, etc. So unless you have turned all of that off, you won't really have a good idea of what the footage looks like. Also, another thing to think about is a BMD Mini Monitor, DeckLink, etc. combined with DR14, so you can send HDR metadata over HDMI (not to mention a video signal unadulterated by your Mac/PC/GPU).

1 point

-

People in the professional color grading world think the screens of the Atomos stuff are rubbish for actual grading and that the HDR is just a marketing gimmick, e.g. http://liftgammagain.com/forum/index.php?threads/atomos-sumo-19-hdr-recorder-monitor.8947/ I was quite interested in the monitor-only Sumo, but the specs are really not that interesting. It's not really 10-bit but 8+2 FRC, and it only does Rec709 according to the spec sheet. It's an IPS panel with up to 1200 nits, so I guess you can expect the blacks to be grays when it shows max brightness in the frame (I couldn't quickly find any data about contrast). It even says "Brightness 1200nit (+/- 10% @ center)", so I wouldn't expect great uniformity. So I'd say it's still better to get a used FSI or an LG OLED. Unrelated: my only HDR-capable device is a Samsung Galaxy S8+, and I hate how colorful the demo videos I've watched so far are. It didn't help my impression that they were in 1080p60.

1 point
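For readers unfamiliar with "8+2 FRC": FRC (frame rate control) is a temporal dithering technique in which an 8-bit panel alternates between two adjacent 8-bit levels across frames so that the time-averaged brightness approximates an in-between 10-bit level. The Python sketch below is a deliberately simplified model, assuming a four-frame cycle, no spatial dithering, and clamping at 255; the helpers `frc_frames` and `perceived_level10` are hypothetical, and real panels use their own proprietary patterns.

```python
def frc_frames(value10: int, frames: int = 4) -> list[int]:
    """Simplified 8+2 FRC model: approximate a 10-bit level on an 8-bit panel.

    Hypothetical helper for illustration only; real panels use proprietary
    spatio-temporal dither patterns.
    """
    if not 0 <= value10 <= 1023:
        raise ValueError("10-bit value must be in 0..1023")
    # Each 8-bit step spans four 10-bit steps.
    base, remainder = divmod(value10, 4)
    # An 8-bit panel cannot exceed 255, so the top 10-bit levels get clamped.
    high = min(base + 1, 255)
    # Show the next-higher 8-bit level in `remainder` out of every 4 frames.
    return [high if i % 4 < remainder else base for i in range(frames)]


def perceived_level10(frames8: list[int]) -> float:
    """Time-averaged level of the frame sequence, expressed in 10-bit units."""
    return sum(frames8) / len(frames8) * 4


if __name__ == "__main__":
    for v in (512, 513, 514, 515):
        seq = frc_frames(v)
        print(v, seq, perceived_level10(seq))
```

The point of the model is that no single frame ever shows a true 10-bit level; the extra precision exists only on average over time, which is one reason graders treat 8+2 FRC panels differently from native 10-bit ones.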

-

From the Dolby test laboratory: https://blog.dolby.com/2013/12/tv-bright-enough/ "...At Dolby, we wanted to find out what the right amount of light was for a display like a television. So we built a super-expensive, super-powerful, liquid-cooled TV that could display incredibly bright images. We brought people in to see our super TV and asked them how bright they liked it. Here’s what we found: 90 percent of the viewers in our study preferred a TV that went as bright as 20,000 nits. (A nit is a measure of brightness. For reference, a 100-watt incandescent lightbulb puts out about 18,000 nits.)..." On an average sunny day, the illumination of ambient daylight is approximately 30,000 nits. http://www.generaldigital.com/brightness-enhancements-for-displays

1 point
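To put the brightness figures quoted in this thread on the same scale as the dynamic-range talk elsewhere, they can be converted to photographic stops, where each stop is a doubling of luminance (so stops = log2 of the ratio). This Python sketch assumes 100 nits as the SDR reference white, a common SDR mastering level that is not taken from the posts; the function name `stops_above_reference` is hypothetical.

```python
import math

# Assumption: 100 nits used as the SDR reference white level (a common SDR
# mastering target); this figure does not come from the posts themselves.
SDR_REFERENCE_NITS = 100.0


def stops_above_reference(nits: float, reference: float = SDR_REFERENCE_NITS) -> float:
    """One stop is a doubling of luminance, so the stop count is log2 of the ratio."""
    return math.log2(nits / reference)


# Figures quoted in this thread (Atomos spec sheet, Dolby blog, General Digital page).
QUOTED = {
    "Atomos Sumo peak brightness": 1_200,
    "100 W incandescent bulb (Dolby)": 18_000,
    "Preferred TV peak in Dolby study": 20_000,
    "Sunny-day ambient daylight (General Digital)": 30_000,
}

for label, nits in QUOTED.items():
    print(f"{label}: {nits:,} nits = "
          f"{stops_above_reference(nits):.1f} stops above {SDR_REFERENCE_NITS:.0f} nits")
```

Under that assumption, Dolby's preferred 20,000 nits sits about 7.6 stops above SDR reference white, while the Sumo's 1,200 nits is about 3.6 stops above it.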

-

Which is probably why Canon offered CLog for the 5Div, but you have to send the camera in to get it or buy a new one that already has it installed. If I ever buy a 5Div, I won't buy the CLog version, just in case ML ever cracks it and we can get 10-12bit, 5-6K Raw.

1 point

-

Magic Lantern for 80D coming soon?

maxotics reacted to Mattias Burling for a topic

This I don't buy at all. The reason they haven't hacked, for example, the 1DC is that they don't know how. Without a firmware update file they don't know where to start. I'm willing to bet it is the same reason the 5Div isn't hacked. No one knows how.

1 point

-

You just quoted a forum member who has 4 posts on the whole forum and clearly doesn't understand how the hacking process works. Yes, there is slow progress on hacking Digic 6 cameras like the 5D Mark IV and 80D, but the developers keep hitting walls while trying to hack the newer (Digic 6) Canon cameras. They will probably get there, but not in the near future... and in about a year (I hope) we will have lots of 10-bit cameras with good codecs, which means RAW recording will no longer be as shiny as it was years ago.

1 point

-

Sony A7R III announced with 4K HDR

Trek of Joy reacted to Don Kotlos for a topic

I find the video side of the A7rIII was improved far more than the stills side. The A7sIII will only add 4K 60p, better full-frame 4K quality and maybe a 10-bit output.

1 point

-

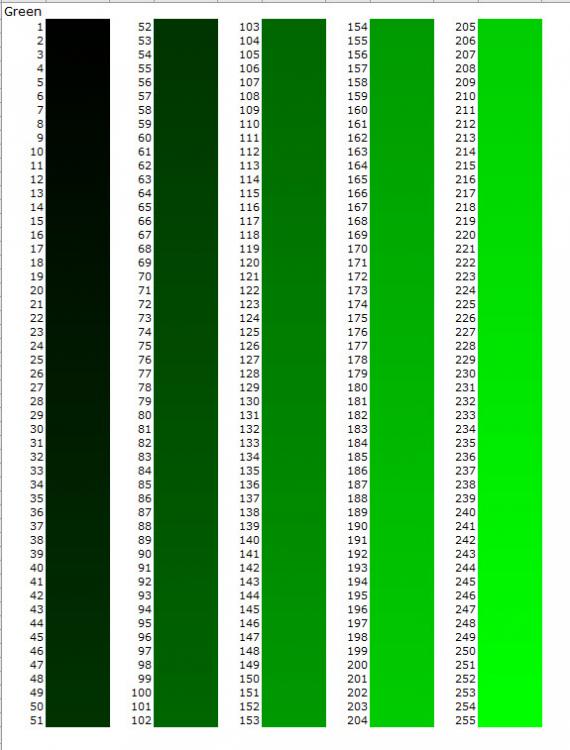

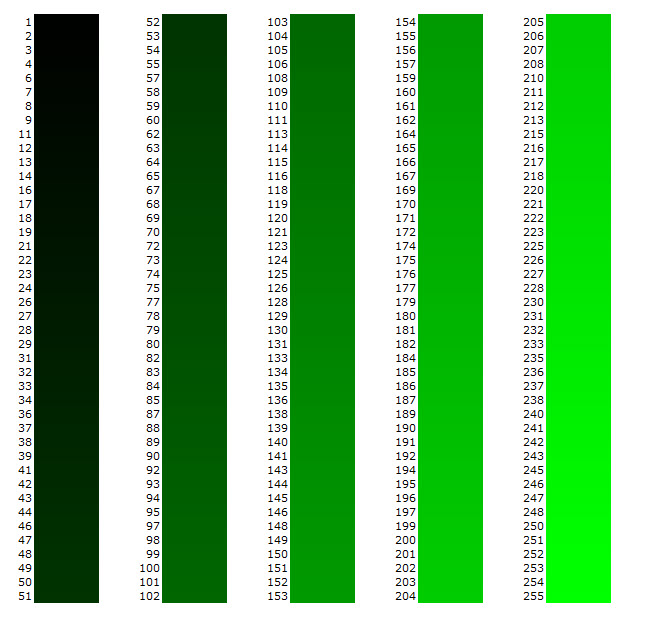

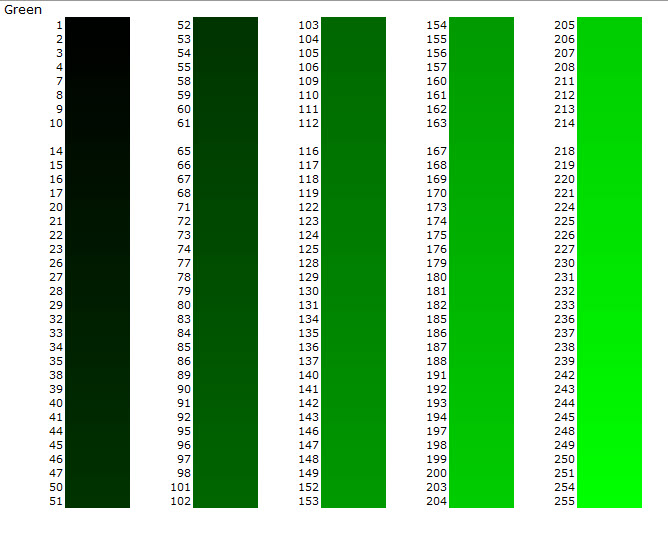

It's a taste thing, right? Trading color saturation for greater dynamic range. We certainly wouldn't want HDR if it did that, because people who favor saturation over DR would then be left with inferior images. We need both.

When I say "saturation" (and maybe someone can give me a better term), I mean the amount of color information we need to discern all colors within the display gamut. Banding is the clearest example of what I mean. As I mentioned elsewhere, if you display, say, 20 shades (saturation) of yellow on an 8-bit display with 6 stops of DR, you will see banding, because your eye can tell adjacent shades apart.

Here are some examples I created. The first is an 8-bit image with all 255 shades of green, which should render "bandless" on a 6-stop screen. I can already see some banding, which tells me that the website might recompress images at a lower bit depth. Here's a version where 18% of the colors are removed; let's call it 7-bit. And now with 32% removed; call it 6-bit. The fewer colors (saturation information) there are, the more our eyes/brains detect a difference in the scene. HOWEVER, what these examples show is that we don't really need even 8 bits to get good images out of our current display gamuts. Most people probably wouldn't notice the difference if we standardized on 6-bit video. But that's a whole other story.

How does this relate to HDR? The more you shrink the gamut (more contrasty), the less difference you see between the colors, right? In a very high-contrast scene, a sky will just appear as one solid blue. It's only as we increase the gamut that we can see the gradations of blue. That is, there must always be enough bit depth to fill the maximum gamut. For HDR to work for me, and for you too it sounds like (I believe we have the same tastes), the bit depth needs to keep up with the expansion in gamut.

So, doing some quick stupid math (someone can fix it, I hope), let's say that for every stop of DR we need 42 shades of any given color (255 / 6 stops). That's what we have in 8-bit currently, I believe. Therefore, one extra stop of DR will require 297 (255 + 42) shades in each color channel, or 297 × 297 × 297 = 26,198,073 colors. In 10 bits we can represent 1,024 shades, so, roughly, 10-bit should cover about 24 stops of DR in total (1,024 / 42); that is, with 10-bit we should be able to show "bandless" color on a screen with 14 (even 20+) stops of DR.

What I think it comes down to is that better video is not a matter of improved bit depth (10-bit), CODECs, etc.; it's a matter of display technology. I suspect that when one sees good HDR, it's not the video tech that's giving a better image, it's just the display's ability to show deeper blacks, or more subtle DR. That's why I think someone's comment about the GH5 being plenty good enough for HDR makes sense (though I'd extend it to most cameras). Anyway, I hope this articulates what I mean about color saturation.

The other thing I must point out is that, though I've argued that 10-bit is theoretically suitable for HDR, I still believe one needs RAW source material to get a good image in non-studio environments.

And finally, to answer the OP: I don't believe you need any special equipment for future HDR content. You don't even need a full 8 bits to render watchable video today. My guess is that any 8-bit video graded to an HDR gamut will look just fine to 95% of the public. They may notice the improvement in DR even though they're losing color information because, again, in video we seldom look at gradient skies. For my tastes, however, I will probably complain, because LOG will still look like crap to me, even in HDR, in many situations. 10-bit? Well, we'll just have to see!

1 point
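The back-of-envelope arithmetic in the post can be checked with a few lines of Python. This is a sketch of the poster's own simplified model (a fixed number of shades per stop, derived from 255 shades over 6 stops), not an established perceptual or standards-based model; the constant and variable names are hypothetical.

```python
# Back-of-envelope "shades per stop" model from the post (an assumption,
# not a perceptual or standards-based model).
SHADES_8BIT = 255        # post's figure; strictly, 8 bits encode 256 codes (0..255)
DISPLAY_STOPS = 6        # post's assumed dynamic range of a current display, in stops
SHADES_10BIT = 2 ** 10   # 1,024 codes per channel

# Shades available per stop of DR (integer division, matching the post's 42)
shades_per_stop = SHADES_8BIT // DISPLAY_STOPS

# One extra stop needs one more stop's worth of shades in each channel
shades_one_extra_stop = SHADES_8BIT + shades_per_stop
colors_one_extra_stop = shades_one_extra_stop ** 3  # R x G x B combinations

# Total stops 10-bit could cover at the same shades-per-stop density
stops_10bit = SHADES_10BIT // shades_per_stop

print(f"shades per stop:         {shades_per_stop}")
print(f"shades for +1 stop:      {shades_one_extra_stop}")
print(f"RGB colors for +1 stop:  {colors_one_extra_stop:,}")
print(f"stops covered by 10-bit: {stops_10bit}")
```

Under this model, 1,024 / 42 gives about 24 stops in total, i.e. roughly 18 stops beyond the 6 assumed for 8-bit. Using 256 instead of 255 for 8-bit gives the same 42 shades per stop after rounding down.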

-

Well hello there, EOSHD forum readers! I've just posted my short film, Axiomatic, to Vimeo. It was shot with an older version of the 5D3 Raw hack without the audio component (remember when we had to reload the Raw modules each time after restarting the camera?). Ah well... it still worked flawlessly, and I cannot thank all the ML developers enough for what they unlocked. 5D3 raw is simply amazing. Spanish, French and English subtitles are available for whoever needs them, and viewer discretion may be advised, as there is brief nudity, violence and coarse language. However, I tried to make everything as tasteful as possible. Hopefully you can take some time out of your busy day to view it, and please feel free to share it with any friends or contacts who might enjoy some dark Canadian cinema. Kelly http://filmshortage.com/dailyshortpicks/axiomatic/

1 point

-


Actually you can make the GH5 look very cinematic!

graphicnatured reacted to AaronChicago for a topic

1 point