Everything posted by kye

-

It's worth mentioning that with the right geometry you don't even need to tighten the clamp at all. This hook, which is designed to sit on top of a door, works because all the force is applied to one side of the door, and because the force from gravity is down, which keeps the hook securely on top of the door: Obviously the clamps we're talking about can easily rotate if kept loose, but I'm just saying that they don't need to be super tight because they're not fighting gravity, they're working with it.

-

Ok, now I am going to call you guys crazy.. Balconies are designed so that drunk people partying will be stopped by the fence/railing when they trip or get shoved towards the railing. People who fall from balconies do so because they fall over the railing, not that the railing fails! I'll take the structural integrity of something designed to hold up 100kg+ falling people over the structural integrity of an aluminium tripod with a rating of 10lb/5kg 🙂 Obviously it's important not to over-tighten the clamps, and also to ensure that the teeth or clamping surfaces aren't sharp in any way, which can easily be done by just putting a towel or t-shirt inside the clamp, but the setup only needs to hold up a GHx and lens combo, so that's not a problem. I can also arrange to put a tether around it to catch the setup if the clamp fails, but the orientation I would set it up in would put the centre of mass on the balcony side of the railing so it would tip into the balcony rather than over it anyway.

-

That makes total sense, and I guess that the lack of them out in the wild (they're radically backordered) will exacerbate current second-hand prices too. I can't justify the purchase now, so waiting until more people have taken delivery of theirs, and inevitably a bunch of people working out that it's not for them, will mean there are more around and the price might go down a bit. The way people were talking was that the editor was almost the same price as the license, so the (faulty) conclusion would be that you can get these things for almost nothing!! Alas. I think longer-term I would likely end up selling it anyway. For me, the more important aspect would be learning what techniques it's designed around and then implementing them in some other way. The only non-keyboard thing that the editor seems to have is the jog wheel, which I already have with my Beatstep Resolve conversion.

-

So, if I bought the Speed Editor and then sold the Resolve license to recoup some of the cost, how much is the difference? Has anyone looked at this? My rationale is that I want the Speed Editor but don't want to pay for it!

-

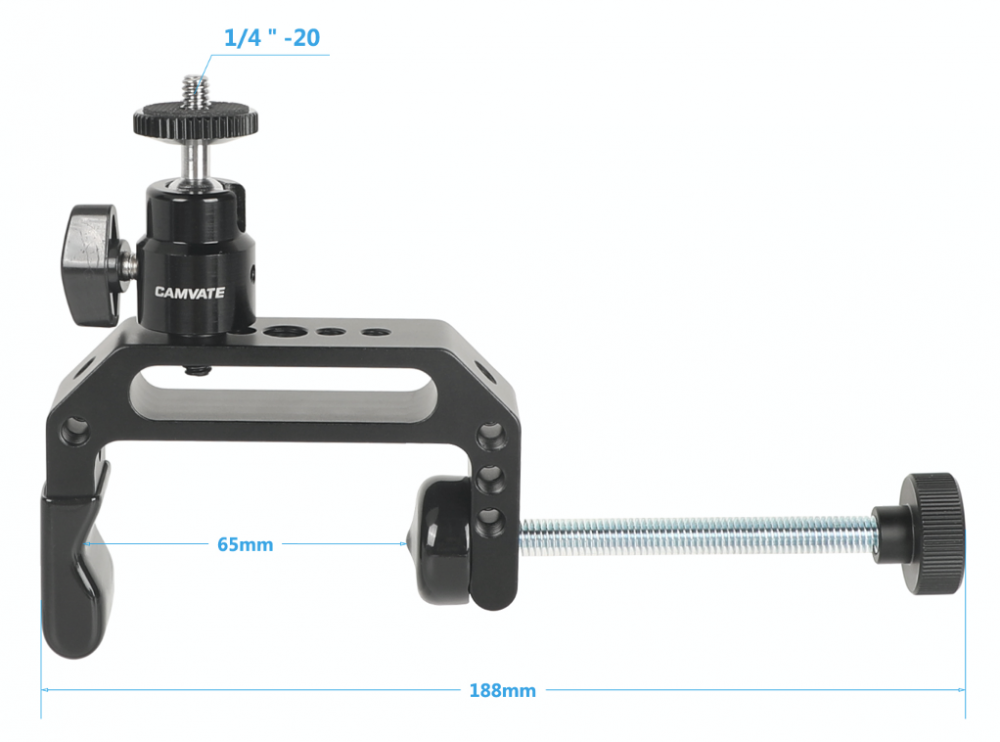

Ah ha... now I get it! You use the clamp to attach and then the head to change the orientation 🙂 Considering the cost of these things I'd be more likely to buy one and put both cameras on it rather than buy two. For that I'd probably mount a couple of arca-swiss quick-release clamps to a flash bracket and then the flash bracket to a ball head and then the ball-head to the grip head. So how do I mount a ball-head to the grip head? Is there a pin of some kind that goes in the grip head and ends in a 1/4-20?

-

I'm not convinced - the 24-70/2.8 will be as good a lens as Canon / Nikon / Sony etc can make, but they all managed to extend the zoom range by slowing the aperture by a stop. I cannot believe that the same trade-off isn't possible at a slightly shorter focal length.

-

D16 would do it! The GX85 is definitely a small package, but the D16 would fit in much more convincingly on the set of the original Star Trek, and I suggest that perhaps that's the more important criterion 🙂

-

What makes you think he hasn't already got one? ....or more? 😂😂😂

-

I am at that stage of just refining my kit with odds and ends. I think this is a good place to get to because it means that you're actually taking feedback from how you work on real projects, and you're refining your kit to be the most useful to how you work. In terms of new camera GAS, I'm thinking I should build a collection of great looking stills from videos I've shot just so that when I start going "maybe I need a...." I can just go look in that folder and remember how good the camera I already have is. That can then be a kickoff point to thinking about lights and composition and things that may actually improve my images... 🙂

-

or maybe I mount a ballhead to the end of these...

-

This one has the right geometry, but doesn't open up far enough...

-

You're paranoid! Not sure why you requested that, but anyway, moving on... 😆😆😆 Ideally I'll have two, one for my action camera and one for my second camera (likely a GH3), so spending $100 each for tripods that weigh ~1kg each doesn't really make sense, especially considering that I wouldn't use them for anything else. I've taken a tripod on long trips and never used it, and taken a large Gorillapod and only used it for time lapses mounted to a balcony rail, so this is literally the only thing I need anything for. Thanks, half the battle is knowing the right terminology. However, it does look like neither is a good fit. The problem with the Mafer style is that they don't seem to open very wide, with the max I could find being 2" which is probably fine for rigging etc but is on the smaller side for handrails, so I'd be running the risk of it just not being large enough if I got a larger handrail. The Cardellini look to solve this issue, and the vise-clamping motion seems to be perfect, but I'm not that sure the geometry quite works. If I oriented it like the below then that would work for free-floating handrails but wouldn't work for handrails capping a glass sheet, which are very common. Ie, I can't attach the clamp upright like this: on balconies that are a rail on top of a sheet of glass like this: In that situation I could clamp it so the threaded rod is horizontal, but then I'd have trouble attaching the tripod mount on the end. This clamp has the right geometry, but it's special order from B&H and the price is getting a little high at USD$54 each. This could really be achieved with a cheap hardware store clamp with an angle bracket welded to it. I think my late father-in-law had a welder gathering dust in the shed, so maybe I should fire it up and with a few dollars of parts and the worst welding job in the world I'd have something suitable. I was just thinking there would be a $7 high-impact plastic clamp on eBay that someone knew about.

-

This looks like a good option as it looks like it can clamp on flat and round things, but it's 1.2kg / 2.7lb !!! https://www.bhphotovideo.com/c/product/840831-REG/Kupo_KG500511_Large_Gaffer_Grip.html/specs

-

I'm now thinking about travelling again (but not doing it yet!!) and am looking for recommendations for a camera clamp for a balcony handrail. The context is that I often shoot time lapses from balconies and want something that can clamp a full-size camera and lens combo securely to the balcony. Some of them have a handrail, but others are just a piece of toughened glass that isn't capped off with anything. Here's some random shots of various balcony hand-rail setups..

Ideally, I'd like it to:

* be able to clamp on just glass, or a thicker handrail
* have the camera mount on one side of the clamp so I can put the camera on the balcony side of the railing so if the clamp fails the camera falls into the balcony and not over the edge
* be lightweight as I'll be travelling with it, although size isn't as much of a concern so sturdy plastic would be fine I think

I've carried gorilla pods before, but I've realised that I don't end up using them except for this, and they're big and heavy and not actually that sturdy for clamping to anything, especially as I'd like to be able to leave them unattended, even in a decent breeze, and not be afraid they're going to fall. Does anyone have any recommendations?

-

Yeah, odd that it was 2019 TV shows vs 2020 movies - not a straight comparison and sample size isn't that large.

-

Sorry - I mean FF. There's heaps of lenses starting at 24mm FF FOV, but if you want to get the 16mm FF FOV then you're stuck with 2X zooms. If you don't care about wider than 24mm FF FOV then you're covered, but the 16mm FF FOV is part of my trinity (16 / 35 / 85mm FF FOVs) and they're super common if you're a vlogger too. Even on MFT there's the 6-11, 7-14, 8-18, and 9-18... all 2X zooms. That Nikkor is so 3D compared to the Taks. I found the Taks pretty flat in their rendering too. My love goes almost exclusively to the MFT Voigtlanders now, but the Helios is a true classic that can be had for almost nothing... I've also re-discovered my Minolta 135/2.8 too, such nice rendering!!

-

Watched this recently, comparing the Alexa 65 to the Alexa Mini. Unlike typical YT hit-and-run equipment reviewers, this is a proper test done with care, rather than just waving the cameras around. To my eye the 65 has extra shallow DoF, the vignetting and barrel distortion from the lens, and greater resolution of course, but the interesting thing is that the colour science just kills the mini! I do wonder how much of the 3D rendering could be emulated by matching DoF and applying the vignetting and barrel distortion in post. ....and to those who think that 4K matters, even to Netflix, please recalibrate yourselves.... Source: https://ymcinema.com/2021/01/20/the-cameras-behind-best-netflix-original-movies-of-2020/

-

So, why are there no 16-50+ F4 lenses? Everyone makes a 16-35/2.8 and a 24-70/2.8, but lots of people slowed the aperture by a stop and made 24-105/4 and there's even a Nikon 24-120/4, but no-one seems to have slowed the aperture of the 16-35 and extended its zoom range. A 24-120 is roughly 70% longer than 24-70 at the long end, but even if they could only add 50% more range to the 16-35 that would still make it a 16-52mm lens. Then you could get an extra prime in there. For people who like to shoot wider than 24, this would be a big improvement.
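
To put rough numbers on that, here is a quick sketch in Python. It assumes "longer" means the long end of the f/4 zoom compared with the long end of the f/2.8 version, and the function names are just made up for illustration:

```python
def long_end_gain(old_long_mm, new_long_mm):
    """Fractional increase in the long end of a zoom (0.5 means 50% longer)."""
    return new_long_mm / old_long_mm - 1


def extend_long_end(long_mm, extra_fraction):
    """New long end if the existing long end is stretched by extra_fraction."""
    return long_mm * (1 + extra_fraction)


# What the existing f/4 standard zooms gained over the 24-70/2.8
gain_24_105 = long_end_gain(70, 105)
gain_24_120 = long_end_gain(70, 120)

# Hypothetical: the same kind of stretch applied to the 16-35
stretched_16_35 = extend_long_end(35, 0.5)

print(f"24-105 vs 24-70: {gain_24_105:.0%} longer")
print(f"24-120 vs 24-70: {gain_24_120:.0%} longer")
print(f"16-35 stretched by 50%: 16-{stretched_16_35}mm")
```

Measured that way, the 24-105 is 50% longer than the 24-70, the 24-120 is about 71% longer, and a 50% stretch of the 16-35 lands at 52.5mm.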

-

One of the philosophies I've adopted is that the things you can do to make bad footage look passable also help to make good footage look great, and great footage look spectacular. Luckily I have an almost endless supply of terribly-shot footage, which I shot because I didn't know what I was doing for a long time, and so I've been in my own self-made crash-course for quite some time now!

-

Oh, that's cool. Only being able to copy one node at a time is occasionally frustrating. One of the things that I am very aware of is that Resolve is the software component of an ex $100K+ hardware solution. The heritage of hardware solutions really came from tape cutting/splicing rigs for editing and things like colour timing machines: On such machines the workflow is determined by doing whatever the hell the hardware wants you to do, and the user-experience is that the engineers push the potential of the technology and the user learns to jump through whatever hoops are required to make the machine go. The reason I bring this up is that these limitations are often still hidden deep in the way that something like Resolve works. For example, a machine such as the above would be configured with the settings for the whole roll of film, then the roll would be processed. I ran into this "configure things on the input" philosophy in Resolve when I was half-way through an edit and was looking for some function in the edit page to change settings on a clip, and the answer was that I change it in the Media Pool, because (you guessed it) the workflow is designed for you to ingest and configure / code all your media before you edit. That's one example, but there will be many things that are constructed in a certain way that was relevant back in the day but is no longer required, but is kept, either because they haven't thought to change it, or because it's baked into the workflows of Company 3 etc.

-

One of the challenges with spatial NR is that it softens edges and fine detail, so if you only NR the red channel then you might get rid of most of the noise but only smear a third of the edge definition, probably a good compromise. I have no idea how the NR features in the software actually work - maybe they're doing this already. Not sure about temporal NR on ALL-I vs Long-GOP but you may find that Long-GOP might have finer and less compressed noise considering that the keyframe paints the scene and then the predicted frames that follow only have to deal with what changes, which would be mostly noise unless there was heavy movement in the scene. All else being equal, the Long-GOP would have much more bandwidth allocated to the noise and therefore it would be higher quality, and perhaps eliminated more easily as it would be closer to being random. Certainly the comparisons I made showed that ALL-I was lower quality compared to Long-GOP when they both had the same bitrate.
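
A toy sketch of the red-channel-only idea, in plain Python. The 3x3 box blur is only a stand-in for a spatial NR filter (this is not a claim about what any real NR tool does), and the tiny test image, the noise value and the function names are all invented for the example:

```python
def box_blur(channel):
    """3x3 box blur over a 2D list, averaging only the neighbours that exist."""
    height, width = len(channel), len(channel[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbours = [
                channel[j][i]
                for j in range(max(0, y - 1), min(height, y + 2))
                for i in range(max(0, x - 1), min(width, x + 2))
            ]
            row.append(sum(neighbours) / len(neighbours))
        blurred.append(row)
    return blurred


def denoise_red_only(image):
    """image is a dict of 2D lists keyed 'r', 'g', 'b'; only 'r' is blurred."""
    return {"r": box_blur(image["r"]), "g": image["g"], "b": image["b"]}


# A hard vertical edge (0 -> 100) in every channel...
edge = [[0, 0, 100, 100] for _ in range(3)]
image = {key: [row[:] for row in edge] for key in ("r", "g", "b")}
# ...plus one hypothetical noise spike in the red channel only.
image["r"][1][0] = 30

result = denoise_red_only(image)
print("red  :", result["r"][1])
print("green:", result["g"][1])
print("blue :", result["b"][1])
```

In this toy case the red noise spike gets averaged down and the red edge gets smeared, while the green and blue edges come through untouched, which is the trade-off described above.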

-

Yeah, today is the best predictor of tomorrow. ..or to put it another way, things change but not as fast as you'd like.