
Everything posted by kye

-

Let me ask the opposite... Do you think you have ever had a new idea or captured an existing idea in a truly new way?

-

🙂 Good plan. I find that if you use what you have and then see what doesn't work for you in real-world shooting, doing the projects that you do, in the way that you do them, with your own particular expectations and tastes, you'll end up spending the money on what actually matters to you. There are lots of times when someone else needs something, but that doesn't mean you should upgrade. There are also lots of people recommending extra features "just in case", which can create a kind of spec inflation that isn't based on reality, especially when people read the just-in-case recommendations and then pass them on to others with their own just-in-case inflation added on.

-

I'm looking for a compact HDMI cable for my BMMCC rig from the camera to the monitor. I have one but it's thick and inflexible. I spent a few hours searching dozens of pages of eBay auctions and other cheaper online stores, but couldn't find anything. I think I found one cable from a professional camera store, but it was something like $100 and there's no way I'm paying that for what should be a $5-10 cable. I'd prefer one with angled connectors so it's flush, rather than sticking out, but I found some 90-degree connectors that might work. Does anyone have any recommendations?

-

I agree about lock-in, but look at how many thousands (millions?) of posts there are online that basically distill down to "hi, two years ago I spent $3000 on a camera body that has 5-bajillion pixels, and now I can't sleep at night until the brand I worship with all my heart, and will fight to the death online to defend, releases a camera with 5.2-bajillion pixels, so that I can spend 150% of what I spent on my current camera body on a new camera body that will give me a 2.4% improvement over the one I already own". And if you want to talk about existential crises, then don't get me started on vintage lenses....

8-bit vs 10-bit is an interesting topic. I'm a big fan of 10-bit, and I bought my GH5 over other options specifically because it had 10-bit. However..... a large (very large) percentage of the time, that difference doesn't matter.

You can shoot in one of two ways. The first is to shoot LOG, which is best done by shooting fully manually, using technical methods to expose correctly (eg, grey cards, or even light meters), using a view LUT, probably on an external monitor, and then spending significant time in post colour grading the image to get the absolute most out of it. The second is to shoot in a 709-style colour space, where you can expose using in-camera tools like waveforms or zebras; the time spent in post is then minimal, and you're just tweaking the colour science that the camera has already given you and that you were seeing on set.

The first method will give you the greatest DR and the best results, if you know what you're doing. However, the second method can basically be paraphrased as "Nikon has optimised the exposure tools in your camera to work with the colour science that Nikon's colour scientists spent decades developing and refining". So the first one will only give you better results if you want something very different from what the Nikon colour scientists predicted you would want, or if you are a better colourist than they are.

I swallowed the hype online about shooting LOG, using LUTs and colour grading in post, and I spent years shooting and creating images that weren't as good as the default colour profiles in the cameras I had. I now shoot in 10-bit, but I use a 709-style picture profile to give me a great starting point, and I adjust from there. Almost all the arguments online about shooting 10-bit are really about shooting LOG, and more than half of the discussion about shooting LOG comes from hipster YouTubers who just want to sell you their LUT pack.

Obviously you're free to do as you choose, and there's more to a new camera than 8-bit vs 10-bit, but I would highly encourage you to dig a bit deeper into each aspect of the camera, each specification, and to really challenge the idea that you need it or that it will even help you. Shooting LOG requires colour grading in post, which takes considerable time and effort. Most professional videographers I've seen who talk about productivity and efficiency and keeping their clients happy and *gasp* running a profitable business, rather than endlessly talking online about specifications, use an 8-bit codec and a customised 709-style colour profile, and either don't colour grade at all or apply a preset look they've developed that just tweaks the image a bit. Their focus is on getting finished videos out the door, rather than shooting LOG and arguing about DR on camera forums.
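Since most of the 10-bit argument is really a LOG argument, it can help to see how many code values each stop gets when a log curve spreads a wide range of stops across the file. A rough sketch, assuming a hypothetical log curve that spreads 12 stops evenly across the full code range (real LOG curves are neither perfectly even nor use the full range):

```python
def code_values_per_stop(bit_depth: int, stops: float, usable_fraction: float = 1.0) -> float:
    """Average code values per stop, assuming a hypothetical log curve that spreads
    `stops` evenly across `usable_fraction` of the available code values."""
    return (2 ** bit_depth) * usable_fraction / stops

# Hypothetical 12-stop log curve using the full code range.
for bits in (8, 10):
    print(f"{bits}-bit: {code_values_per_stop(bits, 12):.1f} code values per stop")
```

Under these assumptions 8-bit gets roughly 21 code values per stop and 10-bit roughly 85. A 709-style profile records fewer stops and needs far less stretching in post, which is roughly why the 8-bit vs 10-bit difference matters less there.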

-

I don't know much about them, but I assume that much of that functionality (peaking, zebras, and a huge amount more) could be added using an external monitor, if not an external recorder. I know these are expensive, but the features and codecs you'd get from a good monitor/recorder would be hard for almost any camera to match. It would add size and weight, of course, but it would also mean investing in something that could benefit you several upgrades down the track.

Electronic stabilisation is almost always better done in post, because in post the software can 'see' into the future, whereas the camera can't, plus it can be tweaked from shot to shot. Also, if you're adding a monitor, and maybe other rigging, your shots will get more stable due to the extra weight.

People seem to have very strange and often illogical ideas when talking about investing in equipment, but I'd suggest that a camera body is one of the investments that lasts the shortest time, with people often re-buying their camera body every few years, whereas lenses can last many body upgrades, external recorders last until the next resolution bump, audio equipment lasts until it no longer meets your quality expectations, and lighting basically lasts forever. Oh, and none of that gets anywhere near the return on investment of educating yourself and making better content.
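The point about post stabilisation "seeing into the future" can be illustrated with a minimal sketch, assuming the stabiliser simply smooths the camera's angle with a moving average (real stabilisers are far more sophisticated). A camera can only average frames it has already captured, so its smoothed path lags behind a deliberate pan, whereas software in post can centre the average on each frame:

```python
def causal_smooth(xs, window):
    """In-camera style: average the current frame and past frames only."""
    out = []
    for i in range(len(xs)):
        past = xs[max(0, i - window + 1): i + 1]
        out.append(sum(past) / len(past))
    return out

def centred_smooth(xs, window):
    """Post style: average frames on both sides, including 'future' frames."""
    half = window // 2
    out = []
    for i in range(len(xs)):
        span = xs[max(0, i - half): i + half + 1]
        out.append(sum(span) / len(span))
    return out

# A steady pan: the camera angle increases by 1 unit per frame.
pan = [float(i) for i in range(30)]
causal = causal_smooth(pan, 9)
centred = centred_smooth(pan, 9)
print(pan[15] - causal[15])   # 4.0: the causal path trails the real pan by 4 frames
print(pan[15] - centred[15])  # 0.0: the centred path follows the pan with no lag
```

The lagging path means a past-only smoother has to choose between smoothing less or drifting off a deliberate move, which is one reason post software, with access to later frames, can do better.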

-

Absolutely. The D850 may not be the best video-centric camera, but it's a very high-end stills camera, and the video modes get the benefits of the sensor, the colour science, the full range of Nikon lenses (at least some of which you will already own) and the overall benefits of Nikon being one of the largest camera companies in the world, such as its support network. Not to mention it's free because you already own it. Free is pretty hard to beat when it means the entire budget can go to things other than the camera body.

-

Please watch this video from the D850 and then explain, in great detail, how this image isn't sufficient for your needs.... Here's the blog post outlining a bit about how it was shot (handheld with a 50mm lens): https://news.coreyrich.com/2017/08/latest-work-home-shot-d850/ Spend the money on yourself, lighting, audio, or lenses, but why upgrade?

-

Or accurate diffusion of any source that is clipped, or diffusion of any source that is out of frame... but he'd know that if he read the thread I linked to. I guess when I said "more info than you ever wanted" I was being prescient - he really didn't want the info in the thread!

-

Yes, it seems they're all just combinations of small diffusion for skin softening, large diffusion for contrast reduction, and medium diffusion for when someone in a 90s TV show dies and goes to heaven. Just kidding! The medium size is for 80s wedding photography. From their proximity on the Tiffen triangle chart, Digital Diffusion FX and HDTV FX should be similar, but I vaguely remember a comparison that showed a pretty significant contrast reduction from the HDTV that the DD didn't have. It's a pity it's not compared on the Scatter plugin website. I'll have to look around and see what I can find in terms of comparison images. I'm not afraid of lowering contrast too much, as I'll be shooting in 10-bit and can expand the contrast back in post.
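Expanding contrast in post works by stretching a narrow band of code values across the full range, so what matters is how many distinct levels survive the stretch. A simplified sketch, assuming a filter that squeezes the image into the middle half of the code range (25% to 75% of full scale) and a plain linear stretch back out:

```python
def distinct_levels_after_stretch(bit_depth: int, low: float, high: float) -> int:
    """Stretch every code value in [low, high] (fractions of full scale) to the
    full range and count how many distinct output codes are actually used."""
    max_code = 2 ** bit_depth - 1
    lo_code = round(low * max_code)
    hi_code = round(high * max_code)
    used = set()
    for code in range(lo_code, hi_code + 1):
        stretched = (code - lo_code) / (hi_code - lo_code) * max_code
        used.add(round(stretched))
    return len(used)

for bits in (8, 10):
    print(f"{bits}-bit: {distinct_levels_after_stretch(bits, 0.25, 0.75)} levels after stretching")
```

In this model the 8-bit file ends up with 128 levels spread over 256 output codes (gaps that can show as banding), while the 10-bit file still has 512, which is more than an untouched 8-bit image.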

-

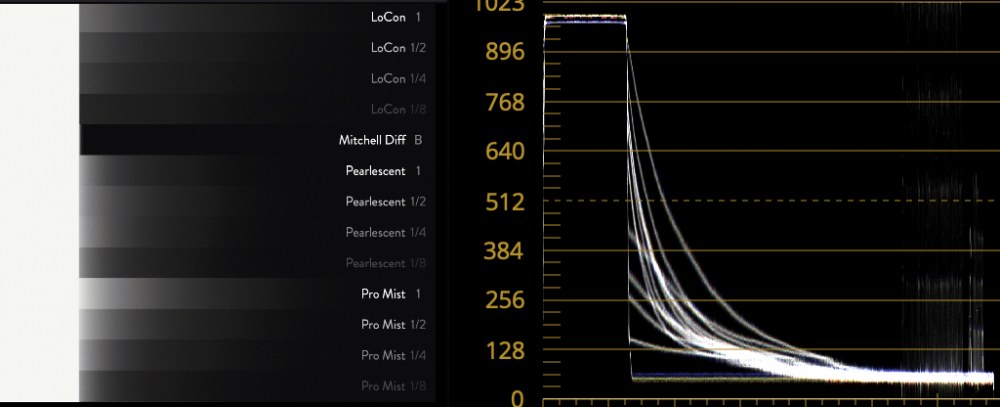

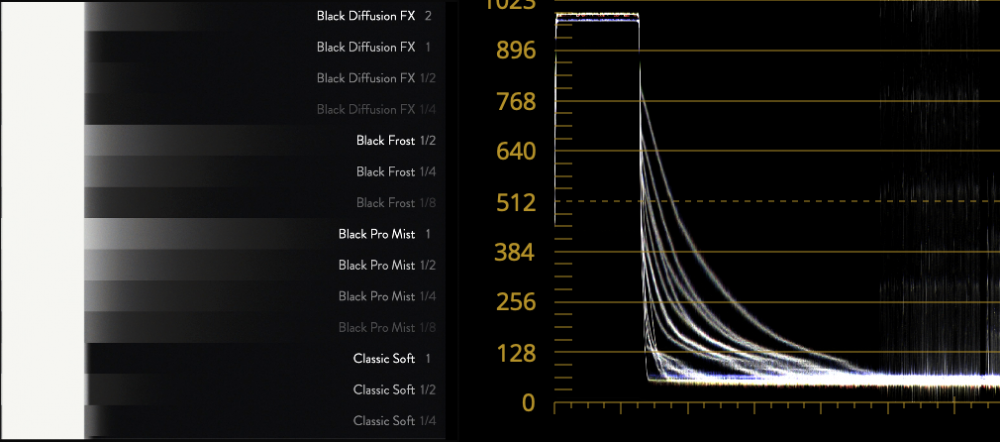

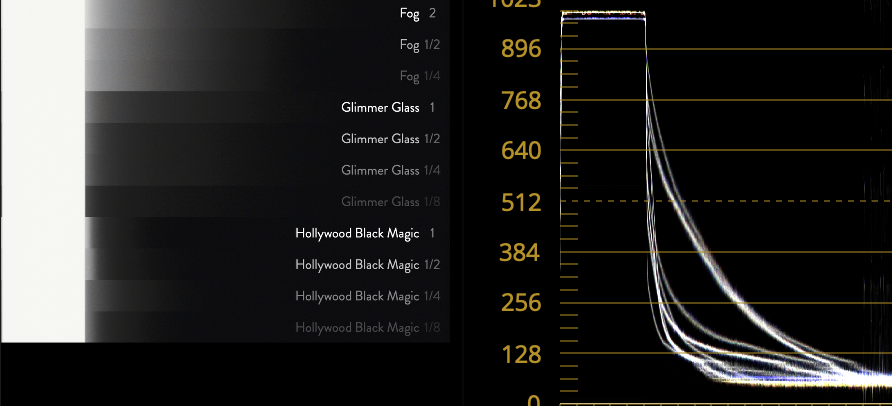

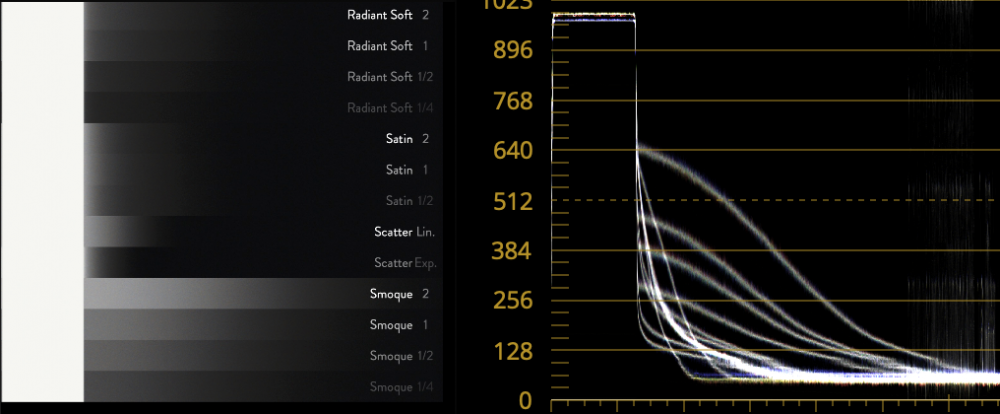

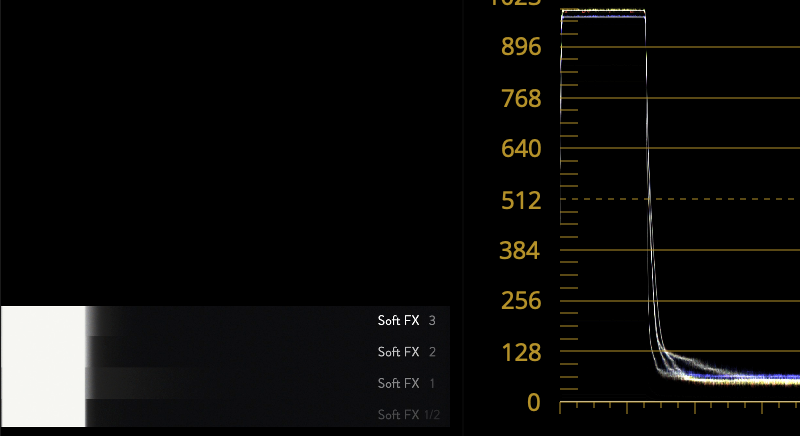

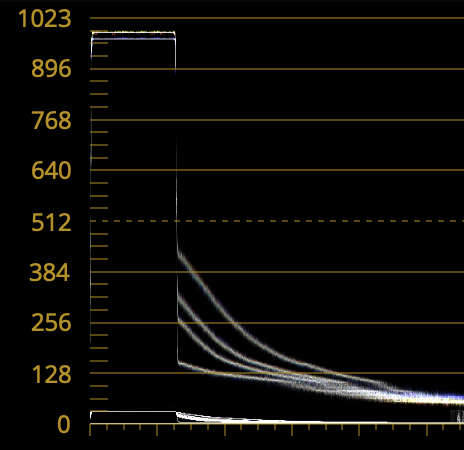

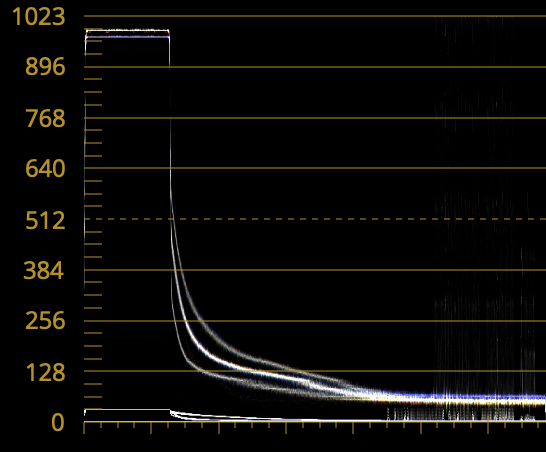

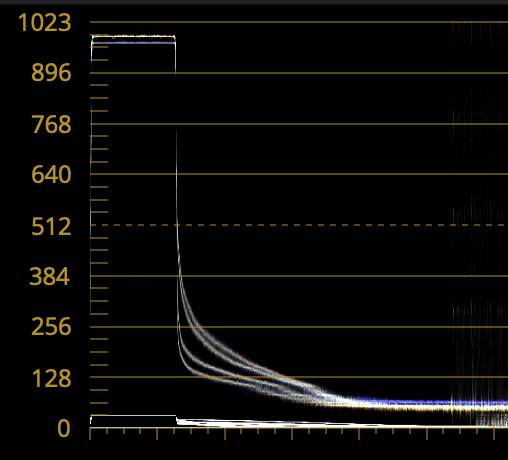

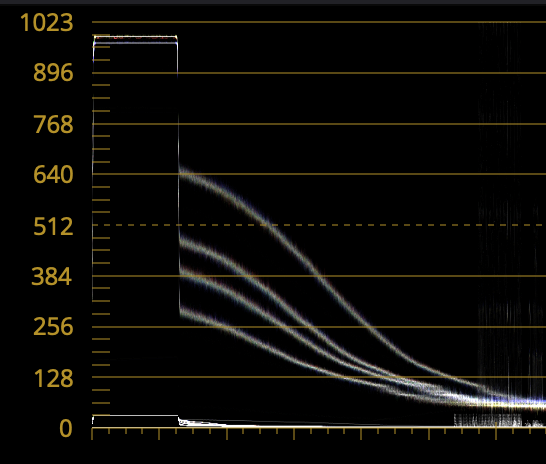

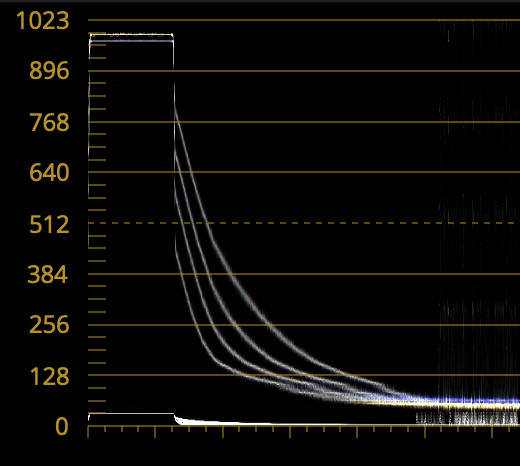

I've watched a bunch of tests, and decided that what I want is something that spreads the light the furthest. To put it another way, I want the edges of the frame to be lifted, and the area near the light source not to be lifted much more than the edges. This is a useful video for seeing the BPM filters in a range of situations: For me, the BPM 1/8 filter was too much near the highlights and too little at the edges of frame.

So, we can look at that Scatter plugin and see what it thinks the filters are doing, for example: That kind of view can show us the "response" of each filter type. Here's the rest of them in each strength: Very useful.

Using a Power Window, we can isolate the ones that look interesting. This is the LoCon set: The 1/8 appears to scatter as far as the stronger ones, but obviously with less intensity. This is the Glimmer Glass set: Also quite wide-spreading, but the diffusion is gathered nearer to the highlight and drops off a bit faster. Radiant Soft set: Also wide-spreading, but slightly less than the LoCon. And Smoque: And for reference, here's Black Pro Mist 1/8 - 1:
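The "edges lifted, but not much more near the source" criterion can be expressed as a ratio. A toy sketch, assuming (hypothetically; this is not how the Scatter plugin models filters) that each filter scatters a fraction of a highlight's light with an exponential falloff, and measuring distances in fractions of the frame width:

```python
import math

def scatter_lift(distance: float, spread: float, strength: float) -> float:
    """Hypothetical scatter profile: light lifted at `distance` from a highlight,
    for a filter of given `strength` and characteristic `spread` width."""
    return strength * math.exp(-distance / spread)

def edge_to_near_ratio(spread: float, strength: float = 1.0,
                       near: float = 0.05, edge: float = 0.5) -> float:
    """Lift at the frame edge relative to the lift right next to the source.
    Closer to 1.0 means the diffusion is spread more evenly across the frame."""
    return scatter_lift(edge, spread, strength) / scatter_lift(near, spread, strength)

for spread in (0.05, 0.2, 1.0):
    print(spread, round(edge_to_near_ratio(spread), 3))
```

In this model `strength` cancels out of the ratio, which matches the observation that a weaker filter in a set can scatter as far as the stronger ones, just with less intensity: the spread, not the strength, decides how evenly the frame is lifted.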

-

Looks like there are other players throwing their offerings into the (lucrative?) body cap lens market... It didn't sound cheap though.

-

Thanks, this is all very useful. I was thinking that I would want something that combines contrast and resolution reduction without halation, but I'm not so sure now. Perhaps my main challenge is that it would be for shooting in available light, which means that the sun and other bright sources may well be either in frame, or out of frame but still hitting the filter. Something like a contrast filter, with its very wide spread of light, would have a radical effect if the sun was hitting the filter, even if it was a long way out of frame. I suspect these filters, and the people who want to emulate them in software, all assume that every scene will have controlled lighting with no significant light sources in or outside of frame. That's where the halation filters come in. They spread the light quite widely, but nowhere near as widely as the contrast ones do, so the frame won't be blown out when the sun is a long way out of frame.

I'll have to do more research and work out what that special something is that I'm seeing. Interestingly, when I look at some of the filters I get that "ooh, it doesn't look like video any more" effect, but the image doesn't seem to look any less like video as the strength of the filter increases. So in that sense, maybe what I want is the filter that gives this "not video" effect with the least amount of diffusion, so that when I'm shooting in golden hour the sun won't just blow out the whole frame, and I don't have to muck around with lens hoods.

Your comments about the filter being in front of vs behind the lens are interesting too, considering that I may use this on a zoom lens, so obviously that would change the strength of the effect, or perhaps more accurately, the size / radius of the effect in relation to the size of the frame.

Ultimately, all the ones we're talking about (Digital Diffusion, HDTV, Smoque) had that magic without really impacting the image much otherwise, except that I'm having trouble finding samples shot outside where flare from the sun is a factor. I'll have to look into them more and see if I can extrapolate what they might look like from what I can find at sunset and the relative strength and spread from that Scatter plugin.
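On the zoom lens question, a simplified geometric sketch, assuming a filter in front of the lens deflects light by a fixed angle and the lens maps that angle to a position on the sensor (ignoring distortion, and ignoring that real filters scatter over a whole range of angles). The 2-degree scatter angle below is hypothetical; 17.3 mm is the approximate width of a Micro Four Thirds sensor:

```python
import math

def halo_fraction_of_frame(scatter_angle_deg: float, focal_mm: float,
                           sensor_width_mm: float) -> float:
    """Halo radius as a fraction of half the frame width for a front-mounted filter."""
    # A ray deflected by angle theta lands roughly f * tan(theta) from where it
    # would otherwise have landed on the sensor.
    offset_mm = focal_mm * math.tan(math.radians(scatter_angle_deg))
    return offset_mm / (sensor_width_mm / 2)

# Hypothetical 2-degree scatter on a Micro Four Thirds sensor (about 17.3 mm wide).
for focal in (12, 25, 60):
    print(f"{focal} mm: {halo_fraction_of_frame(2, focal, 17.3):.2f} of half-frame width")
```

In this model the halo grows in proportion to focal length relative to the frame, so zooming in from 12mm to 60mm makes the same filter's effect about five times larger in the frame.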

-

Funny, I looked for the part where they increase the DR of your camera in post, and I couldn't find it. I consulted the laws of physics, who seemed dubious but referred me to their friend AI. AI said they're working on it, but it would be easier just to buy a contrast reduction filter and use it while shooting.

-

Funny you should mention that, but I watched the 38-minute Tiffen demo video last night (it was riveting - I won't spoil the ending if you haven't watched it..) and I was thinking that I might be more interested in the areas further away from the halation corner, towards lowering contrast and resolution. On first review, the Digital Diffusion, HDTV FX, Black Satin and Smoque were of the most interest, with the filters in the halation corner seeming to push the image too far very quickly, whereas the others seemed to add a certain something but then not fall off a cliff when the filter was stronger. My criterion was whatever makes the unfiltered one look cheapest / most video / most digital, but without making the image quality look like it has gone funny. I'm particularly interested in the contrast corner, as it increases the effective DR of your camera, which is something that we'd almost all appreciate.
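How a contrast filter can raise effective DR can be sketched with a crude model, assuming (hypothetically) that the filter redistributes a fraction `k` of the light evenly across the frame as veiling glare. The lifted shadows compress the scene's brightness ratio so more of it fits inside the camera's range; the filter does not create any extra detail. The scene values are made up for illustration:

```python
import math

def stops_after_veil(shadow: float, highlight: float, mean: float, k: float) -> float:
    """Scene brightness range in stops after a hypothetical low-contrast filter
    that spreads a fraction k of the light evenly as veiling glare."""
    lifted_highlight = (1 - k) * highlight + k * mean
    lifted_shadow = (1 - k) * shadow + k * mean
    return math.log2(lifted_highlight / lifted_shadow)

shadow, highlight, mean = 1.0, 2.0 ** 14, 100.0  # hypothetical 14-stop scene
for k in (0.0, 0.02, 0.05):
    print(k, round(stops_after_veil(shadow, highlight, mean, k), 2))
```

Most of the compression comes from lifting the shadows, which is also why a scene with a bright average level (the sun in or near frame) gets washed out much more by the same filter.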

-

Go read the thread again. You missed some things.

-

Not quite, for many reasons which we've gone through already. This thread has more info than you ever wanted to know about emulating a Tiffen BPM filter in post.

-

The more I try to get a higher-end / less video-looking image, the more I keep coming back to filters. We've all heard about the Tiffen Black Pro Mist filters, but there's a whole world of them out there, Tiffen and other brands. I'm curious who uses filters, for any reason really, but particularly to counter the video look.

-

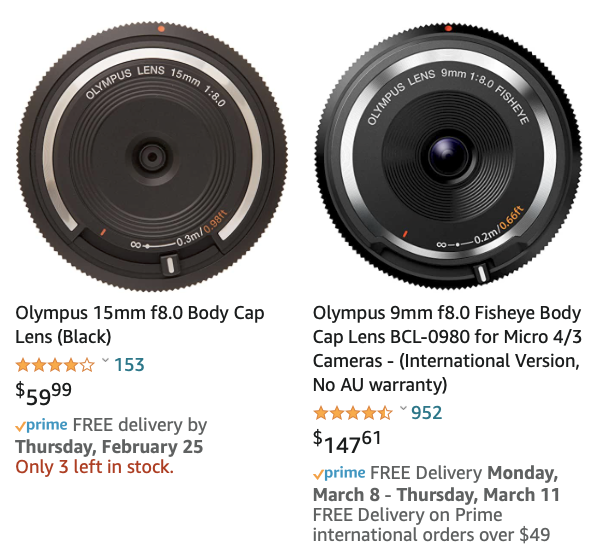

Yeah, they're kind of gimmicky, and I guess Oly wouldn't want the pixel peepers buying them thinking they're "real" lenses and then trashing them online. Expectation management, I guess. Canon did the same thing with the XC10 - when it launched it was on their website in the cinema camera section, but very quickly it moved to some other random section. That makes sense, both the 15 being more common and also the simpler construction making it cheaper. It's a 30mm-equivalent FOV, so much closer to the focal lengths that are easier to design and build, like a nifty fifty etc. Wider lenses seem to be far less common, and if you go deep into C-mounts and coverage, the difficulty was always at the wide end. There are lots of cine zooms from the big names that are 50-250+ or other ridiculous ranges, which just leave me wondering why they didn't go for a more 24-70-type range that covers the middle ground, but wider lenses are more difficult and expensive to make and to correct all the issues etc. Why only those lenses? Are you just thinking that lenses longer than 25mm won't be well stabilised, or is it a weight issue? Maybe some of the newer Chinese lenses might be light enough from being cheaper plastic construction? Or maybe they protrude too much to balance?
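The "30mm equivalent" figure is just the focal length multiplied by the sensor's crop factor. A minimal sketch, assuming a Micro Four Thirds crop factor of 2.0 (the true value is close to, but not exactly, 2):

```python
def equivalent_focal_length(focal_mm: float, crop_factor: float = 2.0) -> float:
    """Full-frame equivalent focal length (field of view) for a cropped sensor."""
    return focal_mm * crop_factor

print(equivalent_focal_length(15))  # 30.0: the 15mm body cap lens
print(equivalent_focal_length(9))   # 18.0: the 9mm body cap lens
```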

-

Yeah, imagine if they did.. Meanwhile, I'm trying to actually unpack what it is that makes an image great, rather than which camera has the highest numbers. No wonder I'm confused and having a hard time about it. Those that don't know, talk, and those that do know, don't. It's easier to swim downstream I guess.

-

Not sure why, but there's quite a difference in price.... Maybe the "3 left in stock" means that's not a normal price, or maybe it's that the 9mm is the international version and looking at the delivery dates it obviously ships from overseas. Commerce and the foibles of markets are strange sometimes, although they gave us lenses like the Helios that are crazily cheap for what they are, so it's not all bad!

-

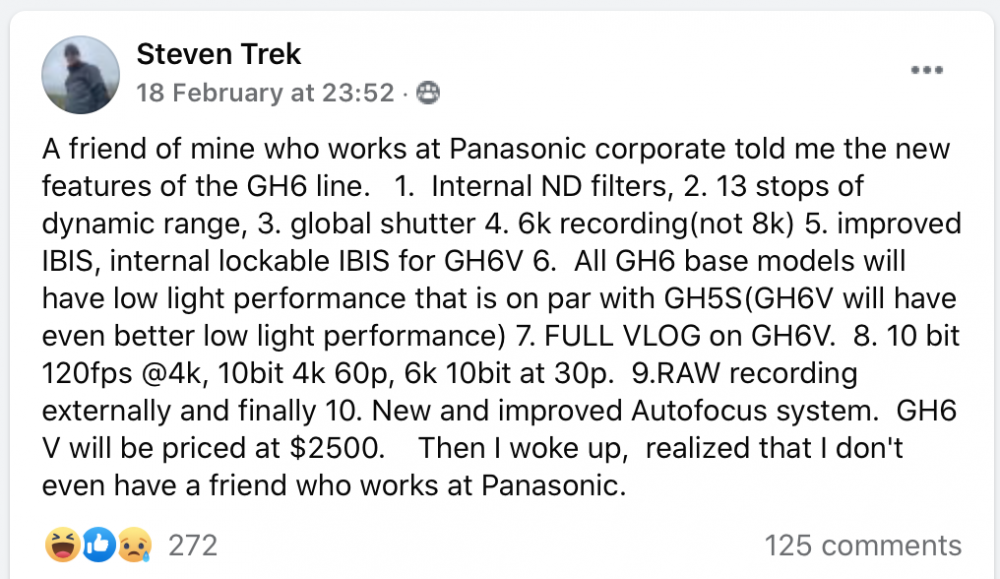

Is this where we post rumours? Excellent... Here's a GH6 rumour from the GH5 FB group that everyone might find useful.

-

Yeah, all the discussions I've read about people buying lens sets to rent out were about either EF or PL, so that seems to be where the industry is at.

-

Totally agree, they should be restricting classes to camcorders in order to teach story, narrative, lighting, composition, and the things that make good movies and TV. YouTube is busy teaching people everything about film-making that doesn't matter, like how to make 'cinematic' videos, when unfortunately all that does is train people to make videos that are sequences of epic B-roll with no real content. The presence of the latter makes the former even more important!

-

Sounds to me like you're not aiming high enough. I'll leave you to your comfort zone....

-

Commenting on the highlighted sections... It's not about being an idiot - it's about asking questions about things that you don't understand. You and I can tell that this isn't a handheld camera in the sense that it is specialised for the task, but people who don't know this will get distracted and confused by it, and a conversation between people who know it and people who don't will just be confusing, as readers won't be able to tell who is right and who isn't. Another way to say this is that it's not a problem if you already understand the topic. That's great, if all you do is talk to people about things you already understand.

I'm different. I spend a good amount of my time trying to learn things, things where I am the idiot who doesn't know things. On dozens of occasions I've asked questions and had the threads completely derailed by confusion about words and phrases, and by people with differing skill levels not communicating effectively with each other. It's not a theoretical phenomenon; it's even cost me money. I've bought expensive books or pieces of equipment because they got mentioned somewhere in some confusing-as-hell thread. I got them and they didn't help. Later, after I had struggled through learning this stuff the hard way (have a look at how many tests I do myself - I do this partly because it's not possible to get clear answers about this stuff online), I revisited those confusing conversations, re-read them, and could now see who knew what they were talking about and who didn't. Unfortunately, I had already spent the money and the time, and gone through the confusion, while I was being an idiot (which is what you have to be to learn something new), thinking that these things might help me.

Saying that terminology doesn't matter because only idiots can't tell is basically saying you don't care about learning, and that anyone who dares to read about new material is an idiot.

Well, I'm an idiot, but the real idiots are the people who never try to learn something new.