
Everything posted by kye

-

Yes, AI is a real wildcard. I see three fundamentally different groups when it comes to generative content. The first is professionals who create material for the general public, or various niches of the public. This is where AI will have incredible impacts. The second is professionals who create for their clients directly. These are people like wedding photographers, where the client is the audience. This has been debated, but I think that there will still be a market here. If I did something and wanted a record of it, I would want the final images to be of me, not AI generated content that looks like the people I know might have looked during the thing that actually happened. The third is people creating for themselves, where there is no client or money changing hands. This is every amateur, every personal project from professionals, etc. The goal is to have a final result that this person created. Amateur photographers take photos and print and hang the best ones, not because they're the best photos ever taken, but because they took them themselves. Personally, I'm in the last category and I am completely resigned to the fact that my videos will never be great, will never attract a significant audience, will never be regarded as important, etc, but that's not why I do it, so in that sense AI is no threat to me at all. I do understand that people are all in different segments of the industry and have very different perspectives for very good reasons.

-

A phone is always a great second camera in a pinch... but if there's budget, it's hard to look past the GX85 or LX10. They're comparatively small, especially if you fit the GX85 with one of the pancake zoom lenses. Jeez, it's lenses the whole way isn't it. As sensor size goes up, lens size goes up exponentially... Maybe you should rent an MFT system? I understand that lots of the wildlife is best seen at dawn? Things can be pretty still then, so that works in your favour. Not sure how things go at dusk as the sun shouldn't have been heating things directly for a while and temps could even out a bit. But if you're shooting big cats sitting lazily in trees during the middle of the day it'll be heat shimmer galore. Yeah, these old lenses can be a bit beat-up sometimes. The GH5 does a great job with stabilisation, but there's no getting around the fact that you're trying to hand-hold an 800mm FOV lens, or trying to use it on a crappy tripod where the only thing "fluid" about the head on it is the words in the product description!

-

Wonderful images... great stuff!

-

This is my most recent finished edit. I wrote the music for this too. I've shared it before, but some might not have seen it. Shot on the trip I did to Seoul last August where the wife and I got sick and spent most of our time in the hotel. OG BMMCC + 12-35mm F2.8 + TTartisans 50mm F1.2. Graded in Resolve with heavy use of the Film Look Creator tool. Music written in Logic Pro.

-

This looks incredible! Great images and colour, and I really like the music and edit too. It really is a different world down there isn't it...

-

That's a hell of a lens! I have a Tokina 400mm F5.6 permanently on my GH5 now to act as a telescope because I looked into buying one and it was cheaper and more fun to buy a super-telephoto lens! It's not super-sharp wide open but in daylight you can just stop down, plus anything that is quite far away suffers from heat haze anyway, so the sharpness of the air is the limiting factor. I've thought about going on safari for years but have never actually gone. My thinking eventually led me to the idea of having two bodies, one with a very long lens on it, and the other one with a very short zoom on it so you can get shots of when the monkeys start stealing food out of your van, or the elephants ram you. My impression from social media is that these things are practically guaranteed to happen. I have the PanaLeica 100-400mm on my "when I'm a millionaire" list as it seems it would be perfect for things like a safari where you never know how far away the subjects are going to be.

-

Ouch!! My GH7 (with battery, card, 14-140mm, and vND) is just over 1.1kg. The 12-100mm is just a hair under 300g heavier, so the GH7 + 12-100mm combo would actually be a hair above 1.4kg by the time it's fully functional, and my setup doesn't even include any audio equipment, so that's also something to take into consideration. I walked around Pompeii carrying the GH5 + Voigtlander 17.5mm + Rode Videomic Pro (1.4kg) in my hand for several hours, raising it up when I saw something I wanted to shoot. My wrist was sore for several days afterwards, just from having the weight on it for that long. It might be something you'd get used to, but having to train so you have the strength and stamina to carry a camera around seems a bit much to me! I agree. The high-ISO performance is actually quite impressive too. For low-light I have the 9mm F1.7 with CrZ and if I want longer range than that I have the 12-35mm F2.8. Probably the only other lens I would get for super-low-light shooting is the PanaLeica 15mm F1.7 because it's small and fast and being a Leica lens should be nice and sharp wide-open so the CrZ mode should be quite usable with it.

-

This seems like a simple question, but the more I think about it, the less simple it gets. Let's start out with the seemingly obvious answer - it looks like Super-16 because the sensor is literally a S16 sized sensor. End of thread, thanks for coming, byeeeee! Here are some thoughts suggesting it looks more like S35, or at least more than S16. Some are good arguments, some aren't, but summed up I think they're hard to dismiss. It appears sharper than S16, a lot sharper. Without getting overly technical, S35 has around 4K resolution, but the level of contrast on the fine details is quite low, and it's well known that by the time you print and distribute a 35mm film it really only looks like about 2K once it's projected in cinemas. This is perhaps the biggest argument for me - the P2K just looks like cinema did in the 90s. I know this isn't comparing a 35mm neg scan with the P2K files, but virtually all the memories of 35mm film that most people would have are from movies shot and distributed on film, not from viewing modern film scans. Lenses are much sharper now too, adding to it. S16 lenses were often very vintage! We have speed boosters, much faster lenses, and much wider lenses now. One of the looks of S16 was longer focal lengths and deep DOF, but if we were to use the P2K like we would use any other camera, it would be with speed boosters and faster lenses which would have much shallower DOF. The wider lenses we have now would be much sharper and faster too. So the lens FOV, lens DOF, and sharpness combinations would all be much more like S35 was, and perhaps even exceed it. How it's used would be much more modern. The framing, movement, lighting, locations and subjects also play a role in 'placing' a medium. This has probably changed less than the above arguments, and the things that any of us might shoot are more likely to still resemble things that I would associate with S16 (like FNW and TV and low-budget projects). 
I'm curious to hear thoughts from others. I've been reviewing my equipment and got to the P2K and thought "oh, it's a pocketable S16 camera" but my brain immediately added "that looks like 90s movies" and then I realised that these two things don't align!

-

Yes, it's the AF that makes me think of manual lenses on the GF3. For stills it's a fully featured camera, but for video it's auto-everything* and so having an AF lens on it is a pain because the CDAF will hunt occasionally. (* actually I recorded some clips with it last night and discovered it keeps the current WB setting - how odd that's the only thing it will let you lock down!) If you don't already own the Olympus body cap lens then perhaps the "7Artisans 18mm f/6.3 Mark II" might be a better choice as it's cheaper and faster than the Olympus.

-

Indeed! Actually, the killer combo for the GF3, if we think of it like a tiny vintage film camera, is when it's paired with the Olympus 15mm F8 body cap lens. It is truly tiny.... In a sense it's an incredibly synergistic pairing, because it gives a 30mm FOV, which is wide enough to make any micro-jitters pretty minimal (especially if you add gate weave in post) and it's sharp, so the softness is just limited by the GF3, and it's deep DOF which fits with the 8mm look. Without an ND you're also using the shutter to expose, which I understand is also how 8mm cameras worked? However, perhaps the killer aspect of it is the way you would use it. You'd never use this as your main setup, so this would be a carry-everywhere low-stakes camera for having fun with. It would be what you pull out when being silly with friends, or filming random things that aren't so formal. In a way, that's how people might have used an 8mm camera back in the day, because they weren't inundated with video and didn't have the media savvy we all have now, so they would have just pointed their home movie cameras at whatever was happening. It's even got a lever that closes it for use in pockets, but it also works as a manual focus adjustment and close focus is something like 30cm / 12 inches which might even get a little bit of background blur (I can't recall) so it's quite versatile. The challenge is that the F8 aperture means it's basically no good after sunset or indoors, so that's the weakness. Apart from that, this is perhaps the most likely setup I would use this with. There's an F5.6 version from a different manufacturer that is tempting, but re-buying it for only one extra stop is a bit hard to swallow. Anyway, here's a video I shot with this combo quite some time ago.... I can't remember how I graded that, but I think I used a film emulation plugin that added a lot of softening in post, so don't take that as the limits of its resolving power. 
It also shows a lot of rolling shutter, so maybe the strategy would be to have it on a strap around your neck and pull that tight when shooting to stabilise the camera a little. There is something about the extreme lack of technical performance that makes my brain think "well, this isn't going to win awards for literally anything, so ignore all the rules and just shoot and have fun!"

-

The elephant in the room is Resolve. As I have discussed and demonstrated in my "New travel film-making setup and pipeline - I feel like the tech has finally come of age" thread, over the last decade Resolve has gotten more feature-rich, but more importantly, it has become HUGELY easier to use and get good images. People now have a lot more knowledge about colour grading tools and techniques, that's for sure, but things like the Film Look Creator enable you to use a single node, you set your input and output colour spaces, and then you can adjust exposure / WB / saturation / contrast and all sorts of other things in the same tool. You don't even need to apply a film look at all... just select the "Blank Slate" preset, which sets it to have no look at all, and you can still use all the tools to adjust the image without having to worry about colour management at all. Any improvement in your post-processes is a retroactive upgrade to your camera, your lenses, and all the footage you have already shot. Colour grading is such a deep art that I think the average GH5 user back in the day was probably extracting a third of the potential of the images they'd shot, if that, simply because they didn't know how to colour grade properly. I'm not being nostalgic about the GH5 either, the same applies for any camera you can think of. There are reasons to upgrade your camera, for sure, but most of the reasons people use aren't the right reasons, and they'd be better off taking the several thousand dollars a camera upgrade would cost, taking unpaid leave from their job, and improving their colour grading skills instead.

-

Thanks! and yes, I also like those particular shots too. Some time ago I invested in a M42-MFT speed booster and since then looked almost exclusively for M42 lenses, except for telephoto lenses where a speed booster isn't required. Vintage FF lenses don't normally get wider than 28mm, at least the ones that don't cost much, and at 28mm the difference between an M42 lens with my SB and an FD lens (for example) without one is a 40mm FOV vs a 56mm FOV. I know I shot the above images without a SB, but mine really steers my buying habits towards that system. I have now fully converted my setup to native AF MFT lenses (14-140mm, 12-35mm, 9mm, 14mm) so I now need to work out what I will use my MF and vintage lenses for. When I shot those images the IBIS stabilised the image but not the flares, so the video files aren't really usable. This means that if I want to shoot with very vintage lenses I need to shoot without IBIS and physically stabilise the camera, either going for a shaky image and embracing the aesthetic, or going for a more stable image and using a heavier setup / tripod / both. Thinking about turning off IBIS and going for a more vintage look, my thoughts turned back to my GF3. So I compared the softness of the image from my GF3 to the softness and grain of film, and depending on the amount of movement and detail in the image it's somewhere between being a Super-8 camera and a Super-16 camera. I am still pondering this information, as I'm not really sure what I would shoot with a S16-like camera and vintage lenses, but I definitely feel some attraction to this concept. Also, there is something super-cool looking about this setup! GF3 + SB + Tokina RMC 28-70mm F3.5-4.5 + vND... giving a FF equivalent of 40-100mm F5-6.4. This is quite similar to lots of S16 zooms back in the day too. For example the S16 Meteor 5-1 17-69mm F1.9 lens is equivalent to 49-198mm F5.5, etc. 
Part of me is very interested in finding a larger bulkier zoom and really leaning into the form-factor, but I couldn't find any around, and even if I did I'm not sure what they'd cost and if they'd be worth it to me (considering I don't even know what I'd use this for!) This is just wonderful... the trees have a painterly look that sort of makes them feel a bit hyper-real and a bit dream-like at the same time. Great stuff!
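For anyone wanting to check the equivalence numbers in this post, here is a rough sketch of the arithmetic. The 0.71x speed booster magnification and the 12.52mm Super-16 gate width are my assumptions, they aren't stated above, and the function name is just made up for illustration.

```python
# Rough full-frame equivalence sketch. BOOSTER and S16_WIDTH_MM are assumptions,
# not figures taken from the post.
FF_WIDTH_MM = 36.0
MFT_CROP = 2.0             # nominal MFT crop factor
BOOSTER = 0.71             # assumed speed booster magnification
S16_WIDTH_MM = 12.52       # assumed Super-16 gate width
S16_CROP = FF_WIDTH_MM / S16_WIDTH_MM


def ff_equivalent(focal_mm, f_number, crop_factor, booster=1.0):
    """Return (equivalent focal length, equivalent f-number) in FF terms."""
    scale = crop_factor * booster
    return focal_mm * scale, f_number * scale


# 28mm M42 lens with the speed booster vs a 28mm lens adapted without one
with_sb = ff_equivalent(28, 3.5, MFT_CROP, BOOSTER)
without_sb = ff_equivalent(28, 3.5, MFT_CROP)

# Tokina RMC 28-70mm F3.5-4.5 on the GF3 with the speed booster
tokina_wide = ff_equivalent(28, 3.5, MFT_CROP, BOOSTER)
tokina_tele = ff_equivalent(70, 4.5, MFT_CROP, BOOSTER)

# Meteor 5-1 17-69mm F1.9 on Super-16
meteor_wide = ff_equivalent(17, 1.9, S16_CROP)
meteor_tele = ff_equivalent(69, 1.9, S16_CROP)

for label, (focal, stop) in [
    ("28mm with SB", with_sb),
    ("28mm without SB", without_sb),
    ("Tokina wide end", tokina_wide),
    ("Tokina long end", tokina_tele),
    ("Meteor wide end", meteor_wide),
    ("Meteor long end", meteor_tele),
]:
    print(f"{label}: {focal:.0f}mm F{stop:.1f} (FF equivalent)")
```

With those assumed values the results land within a millimetre or so of the rounded figures quoted above.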

-

I'd argue that this kind of testing is actually necessary to understand how things behave. Over the years I have tested a lot of things and it's amazing how many things that "everyone knows" do not stand up in even the most basic tests, but continue to be myths because no-one bothers to even look. Aristotle claimed that women have fewer teeth than men, which is not true, but he obviously never actually looked to see if he was right - despite being married multiple times where he could easily have tested his claim at any time. No, not mixed up, but the 12-35mm has a shallower DOF and so you have to know where in the image to look to compare sharp details in the focal plane. This is the unsharpened cropped image: This is the 12-35mm image: This is the sharpened cropped image: The sharpening is perhaps a little over-correcting, but the thin edges are still slightly blurred in comparison to the proper image from the 12-35mm. This is where it is important to know how to read the results of a test. This comparison of the zoom to the crop matched FOV but not DOF, and while I probably could have zoomed in using the 12-35mm and also stopped down at the same time to keep DOF the same, the lens sharpness would have been reduced so it wouldn't have been a fair test. To get around that I should have tested using a flat surface like a resolution chart or a brick wall. The problem with going that route is that now we're no longer testing anything close to real-life, and no longer answering questions about what will and won't work in real shooting. The test wasn't "what percentage of resolving power is lost using the CrZ function?"... it was "is the CrZ function usable for shooting with cropped lenses?". Realistically I shouldn't have included the 12-35mm optical zooms at all, I should have just cropped in using the CrZ function and left the images to be judged on their own merits in isolation, the same way that any project shot using the CrZ function would be. 
This is the danger of pixel-peeing - it distracts from the only thing that actually matters - the image. The cosmicar really is a gem! There's a reason that cinematographers have relentlessly driven up the price of vintage lenses over the last decades, and why modern lens manufacturers are designing and releasing brand new lenses with vintage looks, and manufacturers are even creating new mechanisms to control the amount and type of vintage looks with custom de-tuning functions.

-

I was just poking around in the menus and noticed there is an option where you can switch between Full and Pixel:Pixel, so that's the same as the ETC mode on the GH5. It looks like you can use this with any resolution. Also, you can record C4K in Prores RAW, which is a 1:1 sensor readout, so exactly a 1.41x, or a horizontal crop factor of 2.934 from FF (the GH7 horizontal crop factor is 36/17.3=2.0809). The bitrates are a bit heavy though at either 1700Mbps or 1100Mbps and it's Prores RAW so you can't import it directly into Resolve and will need to transcode with a third party utility. This thing has so many options, and the more I poke around in it, the more it feels like a cinema camera in the body of a MILC.
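If you want to check the crop factor numbers, this is roughly how they fall out. The 5776 pixel sensor width is my assumption for the GH7 (it isn't stated above), and the variable names are just for illustration.

```python
# Crop factor of a 1:1 (pixel-for-pixel) C4K readout, as a rough sketch.
FF_WIDTH_MM = 36.0
MFT_WIDTH_MM = 17.3        # sensor width figure used in the post
SENSOR_WIDTH_PX = 5776     # assumed GH7 horizontal pixel count
C4K_WIDTH_PX = 4096        # C4K frame width

# Crop of the full sensor relative to full frame
native_crop = FF_WIDTH_MM / MFT_WIDTH_MM

# Extra crop from reading only a 4096-pixel-wide window of the sensor
one_to_one_crop = SENSOR_WIDTH_PX / C4K_WIDTH_PX

# Combined horizontal crop factor relative to full frame
total_crop = native_crop * one_to_one_crop

print(f"native crop:     {native_crop:.4f}")
print(f"1:1 C4K crop:    {one_to_one_crop:.2f}x")
print(f"total from FF:   {total_crop:.3f}")
```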

-

@PannySVHS I've now tested the Crop Zoom (CrZ) mode in 1080p. This is the first test, and I exposed for the sky (which it thought was the right thing to do) which meant that the plants were a bit low, so I ended up bringing them up a little in post. The Prores HQ is great at retaining noise and so there's quite a bit visible despite me having shot this at base ISO 500. I've found that ETTR is definitely recommended if you want a more modern looking cleaner image. I also used the 12-35mm lens at F4.0 for all images as that's where it's the sharpest. First is comparing the C4K Prores HQ vs 1080p Prores HQ (on a 1080p timeline): Next we compare the CrZ vs zooming with the lens. I have prepared these images in sets of three. The first is the CrZ image, the second is zooming with the lens, the third is the CrZ image again but with sharpening added. This allows you to compare both CrZ images directly with the 'proper' one, as the more zoomed CrZ images did look a little soft in comparison when viewed at 300%. Around 14mm (1.16x): Around 18mm (1.5x): Around 25mm (2.08x): Once I got those images into Resolve and looked at them I decided to re-shoot it with a better exposure. So I chose a different framing that meant the sky wasn't influencing anything. However, I didn't realise that where I was standing was going in and out of the sun, so some shots were washed out and I had to compensate for it in post, adjusting contrast/sat/exposure/WB to match. Tests are never perfect but are enough to give a good idea of what's going on, and in real use where there is no A/B comparing going on no-one would ever spot it anyway. There's also a slight difference in exposure between the C4K and 1080p modes too, which is a bit odd. I imagine it's due to changing the sensor mode. I compensated for that in all these tests too. 
C4K Prores HQ vs 1080p Prores HQ (on a 1080p timeline): Around 14mm (1.16x): Around 18mm (1.5x): Around 25mm (2.08x): I am actually rather encouraged by these results, as my previous test was in low-light and I did it on something with much sharper edges and that showed differences I'm not really seeing here. However, it's not really surprising that the GH7 did this well, as even with a CrZ of 2.08x it's still reading an area of the sensor around 2776 pixels wide. I say "around" that wide because there is a slight crop when you compare the native 5.8K mode with the native C4K, 4K, and 1080p modes, but I think the 2.08x crop will still be oversampled from the sensor by a good amount. The other thing I noticed was that I couldn't adjust the CrZ function while I was recording, the button just didn't do anything. I'm not sure if that's because I have it assigned to a button and that there might be some other way to engage it while recording. Maybe through the controls that are used to control powered zoom lenses, not sure. Anyway, it looks pretty darn good to me, and the grain actually reminds me of the OG BM cameras which are quite noisy at native ISOs too (and also lots of seriously high-end cinema cameras too).
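As a rough sanity check on the oversampling point, here is a small sketch of how wide the sensor readout is at each CrZ amount in 1080p. It assumes a 5776 pixel wide sensor (my assumption, and it ignores the slight extra crop of the video modes mentioned above), and the function name is hypothetical.

```python
# How many sensor pixels feed a 1080p frame at a given Crop Zoom amount.
SENSOR_WIDTH_PX = 5776     # assumed GH7 horizontal pixel count
OUTPUT_WIDTH_PX = 1920     # 1080p frame width
BASE_FOCAL_MM = 12         # wide end of the 12-35mm used in the test


def crz_readout(target_focal_mm, base_focal_mm=BASE_FOCAL_MM):
    """Return (zoom factor, readout width in px, oversampling ratio)."""
    zoom = target_focal_mm / base_focal_mm
    width_px = SENSOR_WIDTH_PX / zoom
    return zoom, width_px, width_px / OUTPUT_WIDTH_PX


for focal in (14, 18, 25):
    zoom, width_px, oversample = crz_readout(focal)
    print(f"{focal}mm: {zoom:.2f}x crop, ~{width_px:.0f}px wide, "
          f"{oversample:.2f}x oversampled for 1080p")
```

Anything with an oversampling ratio above 1.0 is still being downscaled rather than upscaled, which fits with the 2.08x results holding up.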

-

Thanks! 14-140mm vs 12-100mm is really about different preferences. The most important factor to me is that you won't get the Dual IS stabilisation with Olympus lenses, whereas you will with the Panasonics. You can never really be sure what you'll use and what you won't until you have it, and I was surprised to find that I actually use the 140mm quite a lot, and it's actually usable thanks to the Dual IS.

-

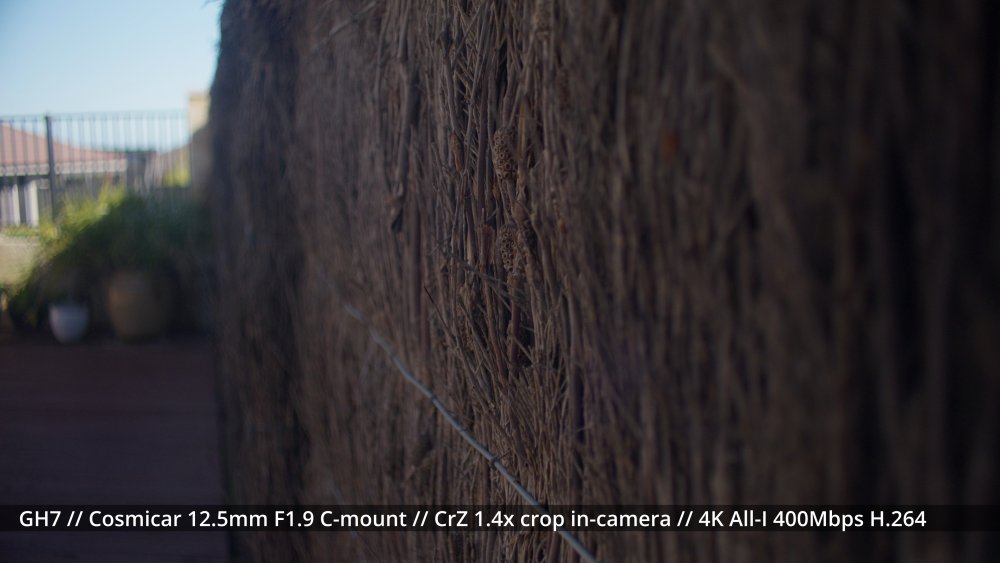

The 9mm I tested is the Panasonic Leica 9mm F1.7, I'm not aware of a 9mm F1.4 - maybe you're thinking of the Leica 12mm F1.4? Let me see if I can further tempt you!! I have done some tests (images below) but found the following:
- You can use the Crop-Zoom function (CrZ) to go up to 1.3x in C4K and up to 1.4x in 4K resolutions
- There is no 4K option in Prores, only C4K
- If the sensor was cropped to be a 1:1 readout, it would be a 1.4x in C4K and a 1.5x in 4K, but the CrZ mode stops just short of these amounts. I suspect that they have limited it so that it is always downscaling, even if just slightly.
Test shots. First set are with the S-16 Cosmicar 12.5mm F1.9 C-mount lens. These are all on a 4K timeline, so you can really pixel-pee if you want to. I didn't have quite enough vND to have it wide open on all the shots, so some are wide open but some are stopped down to F2.8. Now, I switched from the 4K to the C4K, which meant I had slightly less crop available and you can just start to see the edges of the image circle. I suspect your mileage would vary depending on what lens you were using. The Cosmicar is pretty wide, so if you were using a long focal length you'd probably get no vignetting at all. This should also give a comparison between the 4K H.264 and the C4K Prores HQ. Now we switch lenses to the 12-35mm and stopped down to F5.6 so we can compare the CrZ crop to a non-cropped image. This is cropped to 1.3x using the CrZ function in C4K Prores HQ: and this is without any CrZ and using the 12-35mm to zoom in to match the FOV: I didn't shoot any clips this morning comparing the CrZ mode in 1080p, but I can also shoot a test for this if you're curious. I had a closer look and discovered you can't change the zoom amount, which seems to be stuck at 3x zoom. I'd say that it is resolving enough for focusing and I used it with the Cosmicar in the above test. It's the normal story of using peaking and rocking the focus back and forth to find the sharpest spot.
At least I'd say that if you can't use it to manually focus then the problem isn't the punch-in feature but some other issue!

-

The 2nd and 3rd are the most extreme, but I think the third-last (with the plant and green chair) is the best as it shows the image being sharp but also having a painterly quality to it. It depends if you're interested in photography or videography. People seem to love lenses to be enormously distracting in photos but for video they are often way too much, like this lens was for this subject. These are frame grabs from a C4K Prores file on a 1080p timeline. In scenes without a strong light-source that blooms, it is just a lower contrast softer lens. From memory, it sharpens up substantially at F4, but if that's what you want then you may as well use a kit lens!

-

Went for a wander in the rain over the weekend with the GH7 and this lens: I applied some filmic colours and a bit of grain, but the halation / bloom / softness / flares are all the lens. Just remember, the less you pay for a lens, the more fun it is.... and this lens is a lot of fun.

-

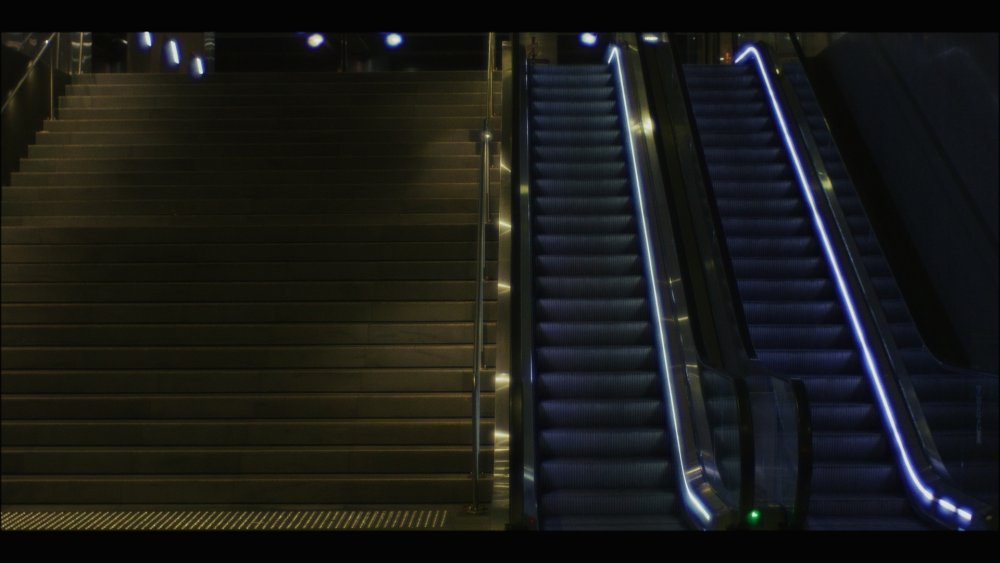

Sharpness seems very natural to me, although I am not at the level of pixel peeing as others around! What I can say is that the Prores feels like Prores from a cinema camera. So the files edit like butter, the grain is well captured and not removed / crunched, etc. I've done quite a lot of low light high-ISO testing in the last few weeks and even up to ISO 12,800 the footage cleans up in post using temporal NR, which wouldn't work if the compression killed all the noise. Punch-in focussing is available during recording, and pops up automatically if you touch the focus ring on a native lens, and has a custom amount of zoom. I'd assume it's the same as previous cameras where you have an option to give you a punch-in box in the middle of the monitor, or for the whole monitor to show the punched-in part. The focus peaking was also active within and outside the punched-in part of the screen. The in-camera digital zoom is changed from previous models, and significantly improved at that. It's quite different now. Let's say I have my 9mm lens fitted. I hit the button I have mapped it to (it's called Crop Zoom "CrZ") and it activates the feature, showing me the current focal length (9mm) and there are a bunch of ways to get it to smoothly zoom in and out, displaying the current equivalent focal length as it goes (10mm, then 11mm, etc). The function is integrated into the zoom controls for the powered zoom lenses too, so I think you can zoom in and it will zoom the lens in as much as it can and then (if enabled) it will keep zooming in with the digital zoom. I thought the idea was it will keep zooming in until it gets to a 1:1 sensor read-out and then won't go any further, but the manual just lists some rather arbitrary zoom amounts. With my 9mm lens, if I shoot with the C4K mode it will go to 11mm, but on the 1080p mode it will zoom in to 24mm. 
In my tests I've found that the in-camera cropped images are free from artefacts, and I'd even zoom in/out during recording using it if I felt the need to. I'd happily use it for S16 cropping, or any other cropping you wanted. Perhaps the only caveat is that if you wanted to crop more than the 1.3x it will do in C4K, or 1.4x in UHD, then you have to use the 1080p mode, and that mode seems to have a slightly different look to the images, a bit more like the OG BM cameras in that it looks like a lower-resolution sensor readout. It's got a bit of that lower-resolution more sharpening look to it, rather than a higher-res-downscaled look to it. It's subtle, but it's there. It's still high-quality, but just compared to the 4K modes it's noticeable. I've been doing lots of tests for my next ballooning trip, and these include low-light testing. I figured I'd take my 14-140mm zoom for when the light is sufficient, and I'll take my new 9mm F1.7 as my ultra-wide, but was wondering if the 9mm could be my low-light non-wide lens as well. I did two tests. The first test was an ultra-low-light test. I tested:
- GX85 with TTartisans 17mm F1.4 manual prime at F1.4
- GX85 with TTartisans 17mm F1.4 manual prime at F2
- GH7 with 9mm F1.7 at F1.7 (shot in C4K and cropped to be 17mm FOV in-post)
- GH7 with 9mm F1.7 at F1.7 (shot in 1080p and cropped in-camera to be 17mm FOV)
- GH7 with 12-35mm F2.8 at F2.8 and 17mm
- GH7 with 14-140mm at 17mm
- GH7 with TTartisans 17mm F1.4 manual prime at F1.4
- GH7 with TTartisans 17mm F1.4 manual prime at F2.0
- GH7 with Voigtlander 17.5mm F0.95 manual prime at F0.95
- GH7 with Voigtlander 17.5mm F0.95 manual prime at F1.4
- GH7 with Voigtlander 17.5mm F0.95 manual prime at F2.0
I reviewed all of them with just a 709 conversion, with NR/sharpening, and with tonnes of NR/sharpening.
This is a test of lots of things being traded-off against each other, as the slower lenses all needed a higher ISO, and the 9mm was sharp wide-open and brighter but also pulling from a smaller sensor area, but I didn't upload to YT so it's not a full pipeline test. The result was that the Voigtlander won, the TTartisans at F2.0 was good, the 12-35 was good, but the 9mm was still acceptable and waaaaaay better than the GX85 + TTartisans wide open (which was what I shot the previous outing with and I found to be disappointing - the combo of the TTartisans at F1.4 combined with the GX85 ISO6400 was just a killer combo). I also tested the 9mm F1.7 wide-open vs the 12-35mm F2.8 stopped down to F4.0 against each other in good lighting and native ISO and using the 1080p in-camera zoom to match focal lengths. I reviewed all of them with just a 709 conversion, with NR/sharpening, and with NR/sharpening put through my FLC pipeline (which includes softening the image slightly and adding grain). I didn't upload it to YT either, so it's not a full-pipeline test but was a good indicator of it. I found that the 9mm zoomed to 12mm was equivalent to the 12-35mm, at 18mm it was noticeably softer, and at 24mm it was really noticeable and getting into vintage territory. I can post some stills if you're really curious.

-

Think about selling it and see how you feel. If you don't have a negative reaction to the thought, and it's not a practical choice, then sell it. If you do have a negative reaction to the thought of selling it, then think about how much you might get for it and what you could do with that money. Then think about swapping the camera for those other things and see which gives more excitement. Ultimately, if you're not shooting for money, then you're doing it for enjoyment, so ignore the specs and go with what would bring you the most happiness. Thanks! Not sure if I said this above, but my approach for the GX85 shots was to just apply enough of the film simulation in the FLC to get rid of the "video" look in the files, which I think was only about a third. The GX85 has a strong look to begin with, so I didn't need to add much to the colours. After many years working out colour grading tools and techniques across lots of cameras I've worked out how to get images to not look so digital, but now I can do whatever I want with them, I have to now work out what I want! It's a work in progress as everything is. Prores V-Log on the GH7 is an absolute joy. I have been doing some low-light testing over the last week or so in anticipation of my next balloon adventure, and I compared using a 17mm lens with using my new 9mm lens to get a 17mm FOV (firstly by shooting C4K Prores 422 and cropping to 17mm FOV in post, and also by shooting 1080p Prores HQ and cropping to 17mm in-camera) and even then, at ISOs of 5000 or more, the results were still not like the normal results from cheap cameras. In the grade it feels like footage from any cinema camera I've tried - the controls all feel great and the image responds how you want it to without colour shifts etc. Hell, I'm in groups where there are guys dealing with FPN from their RED cameras at base ISO that is worse than this camera has at ISO3200. Absolutely! There is something magical about this combo. 
I don't know what, but it's a joy to use and the files just seem to have something special to them. Dual GX85 bodies is a great way to go actually, and saves time in changing lenses all the time. Great stuff!!

-

I did a bunch of testing some time ago comparing them, and they were less different than I thought they would be, and the CineD and Natural seemed to have the same latitude. I'm not sure if somewhere along the line I got confused between Natural and Standard though, so that might be something to test. Years on, the conclusion I've come to about colour profiles is that if you're going to colour grade in post with any kind of sophistication (and now with the Film Look Creator tool and Resolve colour management we have incredibly sophisticated tools) then it probably doesn't matter which profile you use. If I was limited to basic tools then I might just use CineD and be done with it. It's just preference really. The vND I'm using is the "K&F Concept 58mm True Color Variable ND2-32 (1-5 Stops) ND Lens Filter". They offer one with a larger range, but it isn't the True Colour one so I suspect it has more colour shifts. I've been really happy with it and my tests didn't show any colour shifts. I asked some professional cinematographers for advice and they said this was the cheapest one they'd recommend, so I suspect this is entry level. The NiSi ones were also recommended, but they're significantly more expensive. As always, do your own research, but if you're curious I might have my tests somewhere.
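On the ND2-32 naming, the number is the light reduction factor, so the stops are just the base-2 log of it. A quick sketch (the function names are mine, purely for illustration):

```python
import math


def nd_stops(nd_factor):
    """Stops of light reduction for an ND factor (ND2 = 1 stop, ND32 = 5 stops)."""
    return math.log2(nd_factor)


def nd_transmission(nd_factor):
    """Fraction of light the filter lets through."""
    return 1.0 / nd_factor


for factor in (2, 4, 8, 16, 32):
    print(f"ND{factor}: {nd_stops(factor):.0f} stops, "
          f"{nd_transmission(factor) * 100:.1f}% transmission")
```

So the ND2-32 range works out to the 1-5 stops in the product name.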

-

From what you've said I would strongly suggest you use Manual mode and customise the dials individually (like @ac6000cw posted above), with ISO ("Sensitivity" in the manual) on one dial and Aperture on the other. This would give you immediate control over the ISO (which you should only raise once the lens is wide open and there isn't enough exposure) and Aperture. The advantage is that the exposure doesn't change automatically during the shot, which is almost always something you want to avoid. Like I explained earlier - you don't want the exposure going up and down when bright objects come in and out of frame in the background.

In situations where you do want the exposure to be adjusted during the shot, for example if you're moving from a light location to a dark one, or if lights get turned on/off, you can adjust these things with the dials. This will result in the exposure suddenly changing during the shot, rather than it gradually transitioning (as auto-ISO will do) but this is actually an advantage in post instead of a disadvantage. This is because if you need to adjust exposure during a shot then in post you can just chop the clip up into a few pieces (on the exact frames that the exposure changed when you changed the dials) and can automate it from there. The challenge you have when using auto-ISO is that every shot where the exposure is drifting up and down will need to be adjusted with curves to compensate for what the camera did, and this can take literally hours.

I shot a rodeo once using auto-ISO and a guy fell and the bull went over him, nearly treading on him, and so my framing went: him on top of the bull, pan down to him on the ground under the bull, him on the ground after the bull has run off. The exposure was all over the place as the elements in the frame changed and the camera "helped" me with exposure. 
The exposure automations I had to use to create an exposure that looked like nothing happened were complicated and took me literally hours. Had I shot it using one exposure then I could have just used a single exposure automation to bring up the exposure, which would have only taken minutes, and even if I'd adjusted aperture or ISO during the shot it would only have added a few minutes in post to chop it up and adjust each segment individually. As you saw from my stress tests above, there is a lot of latitude in the files, so I can't imagine many situations where you'd want to change exposure during the shot.

I used to be a full-auto shooter, and shot like that for years, listening to people online about how doing things manually was better. Now that we have better tools in post, I have fully switched to manual shooting as I've been through the pain of adjusting things in post to compensate for the camera wandering around.

Just looked. I have -5 Contrast, -5 Sharpness and -5 NR, and 0 Saturation. There is a whole topic about why I have set these the way I have, but that's what I recommend.

5600K makes things look like what they actually look like. I shot for years using auto-WB and just couldn't make things look natural in post, they always looked like something was off in some way. This solves that issue. I found that when shooting in available light, even doing a custom WB on a grey-card gives a worse result than just using 5600K. I'd encourage you to set it to 5600K and carry the camera around with you in a pocket for a day with the smallest lens you can find and just take a 1s clip of every location you can find. Then pull them all into your NLE and see how they look. You might be surprised at how well it works across all the different situations. If you can, take it to some night markets where vendors are selling food from vans and people are selling low-value items. 
You will find the most incredible variety of ultra-low-quality lighting imaginable, as every vendor will buy the cheapest LED lights they could find at the time. Trying to "correct" for these lights will be futile, but the footage should still be a representation of the environment you shot in, even if it won't look like a beauty commercial. A note on testing... I shoot in similar situations to those you have mentioned, and I have come to the conclusions I have come to via lots of experience and an incredible amount of testing. Testing is so important to getting good results because so often you are convinced of something and then do a test to verify your opinions and find that the results are radically different than what you were expecting. So many people online are full of opinions that are so easily proven to be false with only a few minutes of real-life testing. I'm not sure if you've come across any cinematographers doing latitude testing of a cinema camera, but it's very telling that cinematographers (whenever possible) will do camera and lens and lighting tests prior to shooting a TV series or movie, and it seems like no-one doing videography or photography does these or talks about them. Hell, I was looking at a lens the other day and couldn't even find anyone who published test images at different apertures to see how the lens performed wide open vs stopped down. I found lots of photography bloggers who published lots of images and had lots to say, but testing? Nah.... Professionals test, amateurs guess.

-

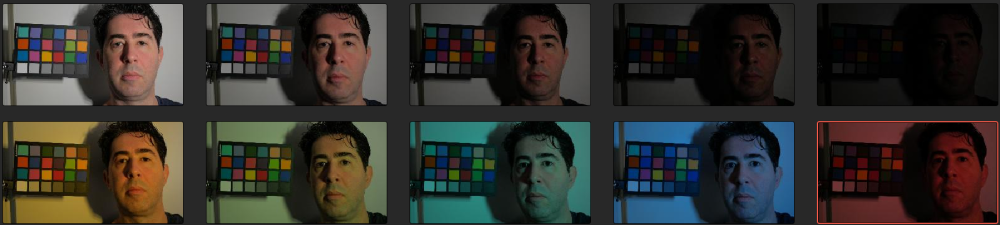

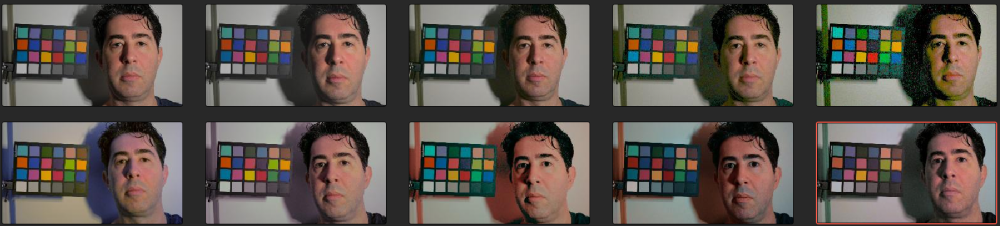

I forgot that for exposure I also use Zebras to show what is clipped. To expand on my exposure strategy, I try to expose to the right (ETTR) while making sure that the only things being clipped are things I'm ok with being clipped. If the DR of the scene is significant, you have a decent ability to bring up the image in post, so underexposing high-DR scenes can be a good strategy. This might sound silly, but when shooting in available light, having the sun clipped is just fine, at night having lights clipped is fine, etc. The GX85 image can always be brought up in post, but you can't bring it down if something is clipped.

I have a good thread on testing the GX85 here. Here is an example of the torture test I did with exposure and WB. These are the test images SOOC: Note that these are horrifically wrong in exposure and WB. This is a torture test after all! After correction: Small adjustments in exposure or WB are completely possible in post.

-

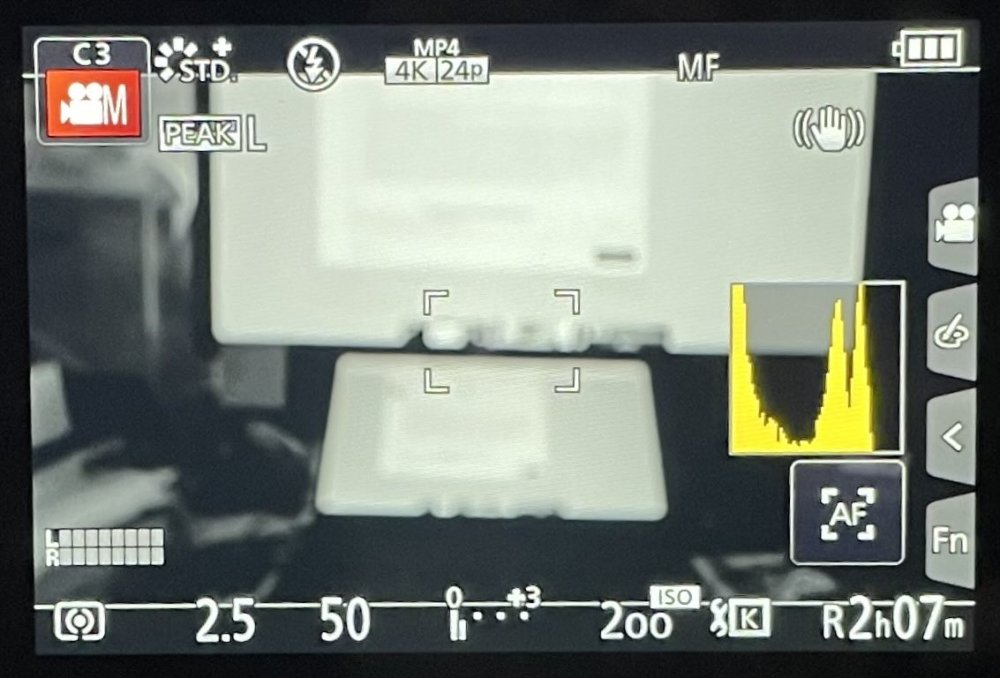

Congratulations on your purchase! I have the GX85, which apparently is very similar to the GX80, but you might notice small differences, so maybe not everything I say below will apply to you. I shoot run-n-gun fast-paced stuff so probably very similar to the challenges you are facing. Here is how I set up and use my GX85. This is the screen, showing lots of handy info. Going from top right, here's what I do.

I use the custom modes to store different configurations. In this case, it's in C3 and that is fully-manual. You can't set the mode (ie, PASM) once you've created the custom mode; you need to create the custom mode with the right PASM mode. To do this you choose the PASM mode that you want to use using the top dial, then save that configuration to the custom mode with the "Cust Set Mem" function, and then you can change to that custom mode using the top dial and further customise it.

I use the standard profile. I've done lots of testing and the standard profile is the most flexible if you're going to further tweak the colours in post. If not, feel free to choose whatever mode you like.

I use focus peaking and have set it to a custom button to switch between the high and low sensitivities. I use this in combination with making the display black and white so the peaking is more visible.

No flash.

4K 24p.

I've set up back-button focus. This means the camera is in MF mode, but I have configured the AE/AF Lock button to enable AF while you hold it down. The way I shoot with an AF lens is to push in that button, see what it focuses on (which is obvious because of the peaking) and then I release the button, then I hit record. This means that the focus doesn't change during the shot. This means the focus isn't hunting around all over the place, it's not focusing on the person's hand or on the person that walks in between you and your subject, it doesn't focus on the background if they move in frame, etc. 
It won't follow them if their focus distance changes, which can ruin some shots, but my experience is that AF jumping around ruins more shots than the subject moving does. Plus, if the subject moves slightly the aesthetic of them being slightly out of focus for a bit is far less objectionable than the AF jumping around for no reason.

IBIS is enabled. IBIS gets a lot of criticism but if you stand still and hold the camera as still as you can then the IBIS will simply help you to be more stable and the jitters and jello effects can be reduced entirely. Shooting in fast situations means that tripods and monopods are often too slow and cumbersome, but if you try and emulate a tripod by using IBIS (or better yet, combine IBIS with OIS from a stabilised lens) then you can easily get very stable hand-held shots that with a tiny bit of stabilisation in post can be perfectly locked off without any artefacts at all.

I set my AF to be in the middle of the frame, which combined with back-button focus is really fast and usable. Even if you do the photography thing of putting the subject in the middle, doing AF, then setting up your composition, it all works perfectly.

I expose using the histogram. Exposure is a big topic, but I have done extensive testing and have concluded the following. In the Standard profile, you can do quite significant changes to exposure and WB in post, even with simple tools, if you keep the exposure in the middle. The limits are that if something is clipped then it's clipped (of course!) and if it's in the noise floor then it's also gone. Apart from that, you have lots of flexibility.

Audio meters show the levels. I use auto-levelling, but audio isn't really a big part of what I do and if you only shoot short clips like I do then any variation in level that it introduces isn't going to be much over a short clip, and it saves more shots by adjusting itself than it ruins. You can always set it to manual if you like.

Aperture and shutter speed. 
I always use 1/50s when I shoot manually. Adjust as you see fit, depending on if you want to expose with shutter speed and not use a vND. Personally, I find that not having the exposure going up and down randomly is a good thing, and adjusting the shutter speed with the dial is just as painful as adjusting a vND. If you're using a manual lens then you can just set the camera once, and then all your controls are on the lens (vND, aperture, focus) so that's a really nice way of working. Lighting doesn't change that much, especially in daytime exteriors, so it's not a big deal. I've swapped to a high quality 2-5 stop vND and it's got enough range for daytime if you're willing to stop down a bit during the brightest bits. In busy outdoor situations you don't want to blur the crap out of the background anyway, so stopping down is actually more relevant than isolating subjects to the point where the shot could have been taken anywhere.

The exposure meter is sometimes useful, but it's dumb. For example if you're shooting a person and a white van drives past in the background it thinks that you should change the exposure. Obviously that's dumb because you're shooting the person and not the van.

ISO200 = base ISO. This camera doesn't have great high-ISO performance, so stick to base ISO when you can.

WB = 5600K. I shoot exclusively in this mode. After using auto-WB for many years, I've come to realise that while different lights appear different with a fixed WB, things look like what they are. During the day things look right, sunset looks very warm but looks right, fluorescent lights look green but that also looks right. I rarely change WB in post now, and if I do it's to even out any tiny variations between shots just to polish the final video.

My final piece of advice is to get a native zoom lens. Either the 12-35mm F2.8 or 14-140mm F3.5-5.6 are great, but the 14-42mm kit lens is also very capable and not to be underestimated. 
In complex situations you are often restricted in where you can move to, and will often want to zoom in to control your compositions. Having AF speeds up your shooting substantially, especially when you might only have seconds to get rolling when you see a moment about to happen. Happy to discuss further if you have questions, and I recommend searching around here, I've been posting lots of stuff over the years, including lots of tests and sharing what I have learned. Also happy to talk about strategies for shooting coverage, etc. Once you buy the gear and learn the settings you can make a video. It's what's in front of the camera and what's behind the camera that determine how good that video will be.
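On the point above about a 5-stop vND being enough for daytime if you stop down a bit, here is a rough back-of-envelope check in Python. It leans on the "Sunny 16" rule of thumb (in full sun, f/16 at a shutter of 1/ISO seconds is roughly right), which is my assumption for the sketch and not something I measured, and the function name is made up. Real scenes will vary, so treat the numbers as ballpark only.

```python
import math


def nd_stops_needed(iso: float, shutter_s: float, f_number: float) -> float:
    """Rough stops of ND needed in full sun, using the Sunny 16 rule of thumb.

    Assumption: correct exposure in direct sun is f/16 at 1/ISO seconds.
    Anything slower or wider than that lets in extra light the ND must remove.
    """
    sunny16_shutter = 1 / iso
    # Extra light from a slower shutter: one stop per doubling of exposure time
    shutter_stops = math.log2(shutter_s / sunny16_shutter)
    # Extra light from a wider aperture: two stops per halving of the f-number
    aperture_stops = 2 * math.log2(16 / f_number)
    return shutter_stops + aperture_stops


# Base ISO 200 and 1/50s, as in the settings described above
for f in (16, 8, 5.6, 4, 2.8):
    print(f"f/{f}: about {nd_stops_needed(200, 1 / 50, f):.1f} stops of ND")
```

Under that assumption you need around 2 stops at f/16, 4 at f/8 and 6 at f/4, which lines up with a 5-stop vND coping in bright sun as long as you're prepared to stop down to somewhere around f/5.6.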