Posts: 8,051


Everything posted by kye

-

It's funny, but I look at "what if" scenarios for lenses about once a fortnight. I've repeatedly considered getting an EF-MFT speed booster and never found a reason for it: the EF lens options never met the parameters of whatever it was that I was contemplating doing, so I never bought one.

On the other hand, my M42-MFT speed booster remains in continual use. Every time I 'tidy up' my lenses and put the ones I don't use into a box, this one always seems to stay out for one reason or another. I always seem to be learning something about images or shooting, and will look at my lens collection from a completely new perspective, and the MFT system and M42 system (for adapting FF lenses) always seem to be the winners.

-

The Blazar Mantis is an interesting lens, and it does reduce the weight from 1.3kg down to 800g, but the SB+50/1.8 is only 350g, so that's pretty hard to beat. Plus the Mantis is probably 20-50x more expensive!

The Lensbaby Double Glass has a replaceable aperture, but it's a 50mm F2.8, so on MFT it's equivalent to a 100mm F5.6. That's pretty slow compared to the Voigt+Sirui combo, which is equivalent to a 68mm F1.5, or the SB+50/1.8, which is equivalent to a 71mm F2.6.

I'm actually quite impressed at how much stretch there was, and how consistent it was, with the insert at the rear of the lens. I'm guessing this is where a 3D printer would come in perfectly, as I could rapid-prototype a completely custom insert for it. No matter, I'm sure I can do a reasonable job with some cardboard and a sharp knife.
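As a quick check on the equivalence figures above, here's a minimal sketch of the arithmetic in Python. It assumes a 2.0x crop factor for MFT, a 0.71x speed booster (the magnification isn't stated in the post, but it matches the 71mm figure), and that an anamorphic adapter divides the horizontal equivalent by its squeeze factor. The function and parameter names are made up for illustration.

```python
MFT_CROP = 2.0  # assumed Micro Four Thirds crop factor relative to full frame


def ff_equivalent(focal_mm, f_number, crop=MFT_CROP, reducer=1.0, squeeze=1.0):
    """Full-frame equivalent (focal length, f-number) for horizontal field
    of view and depth of field.

    reducer: focal reducer magnification, e.g. 0.71 (assumed) for a speed booster
    squeeze: anamorphic squeeze factor, 1.0 for a spherical lens
    """
    scale = crop * reducer / squeeze
    return focal_mm * scale, f_number * scale


setups = {
    "Lensbaby Double Glass 50/2.8": ff_equivalent(50, 2.8),
    "Voigt 42.5/0.95 + Sirui 1.25x": ff_equivalent(42.5, 0.95, squeeze=1.25),
    "Speed booster + 50/1.8": ff_equivalent(50, 1.8, reducer=0.71),
}

for name, (focal, f_number) in setups.items():
    print(f"{name}: {focal:.0f}mm F{f_number:.1f}")
```

Under those assumptions the three setups come out at 100mm F5.6, 68mm F1.5 and 71mm F2.6, matching the numbers quoted in the post.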

-

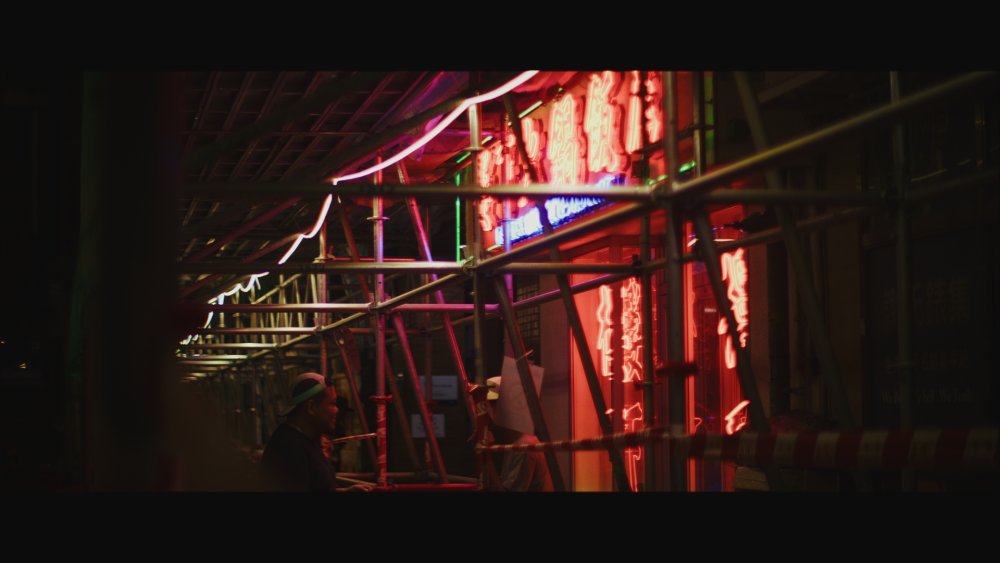

I shot a lot with the GH7 + Voigt 42.5mm F0.95 + Sirui 1.25x adapter while in China and Hong Kong and loved the setup, both for the images and for the ergonomics and use, but the combo is heavy. The glass is 1.3kg and with the GH7 the rig is over the 2kg mark (4.4lbs). While I felt like a total bad-ass wielding the rig, and the coolness factor of shooting street scenes with hand-held anamorphic is inarguably off the charts, it wasn't perfect. The bokeh isn't that stretched from a 1.25x squeeze factor, and it does exhibit a certain amount of swirl, so I was wondering if there was a spherical alternative that would be considerably less weight to drag around the world.

This is a first experiment in that direction: GH7 + M42-MFT Speedbooster + Meyer-Optik Görlitz 50mm F1.8, but with two small additions:

Yes, these are small cutouts of the sticky part of a post-it note stuck directly to the rear element of the glass. These sit between the lens and speed booster. I've tried putting cutouts onto the front of the lens (on a spare UV filter) and the results are underwhelming. This, however, looks incredible and is much more consistent in the shape of the bokeh throughout the frame. Obviously the shape isn't ideal, as I should round it slightly and especially round the corners, but as a proof of concept, this looks promising.

More complex scenes:

Of course, these are open-gate images, and this is definitely bokeh that deserves widescreen....

and with the best grade I could manage in the Mac image Preview tool, we get this:

There is definitely something here. More to come for sure.

-

Love this. I remember as a teenager I used to translate everything to CDs - how many albums I could buy with the money I was evaluating.

+1. My own experience of buying from Japan on eBay is similar to this. There are always examples of scammers / criminals / misrepresentation / bad behaviour from every culture. I think that it's precisely because the Japanese have such a good reputation for this that the few examples of misrepresentation that have happened get blown up and repeated far more than they might from other countries.

I've bought quite a number of lenses that were cheap because they had fungus or haze or some other issue, and I consistently found with the Japanese listings that when I received the lenses and really examined them (especially against a strong light source) the issues I discovered were almost always completely described by the eBay listing. I suspect I might have had one surprise from a Japanese listing where something was misrepresented, but the level of deception was what you'd sensibly assume to be true on every other listing from any other place basically. From everywhere else, "buyer beware" is just being sensible.

-

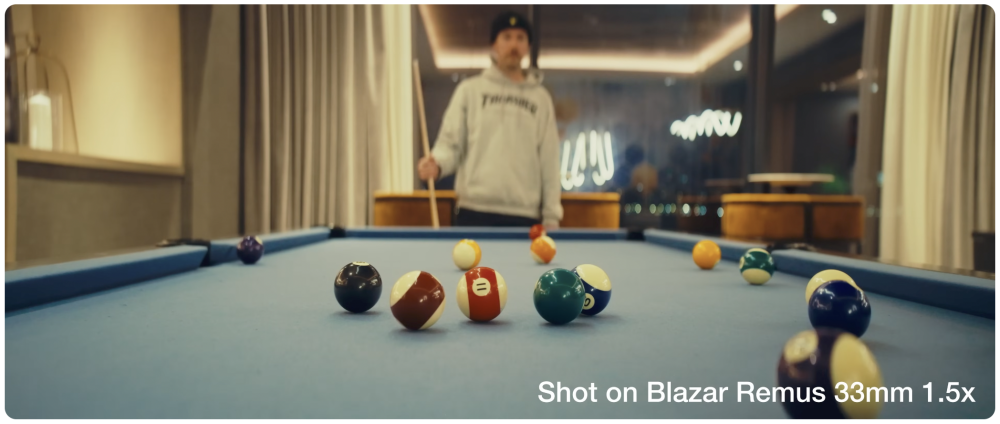

I agree, the Remus seemed to more consistently have vertical bokeh. 2x squeeze must just be incredible though.

Thanks! I think things like this are a really practical way to get more flexibility with your equipment, because you work out what you can emulate in post and can then focus on buying things that have the rest of your requirements, rather than everything you want. Then you just do some tests on dialling in what you like, save it as a preset, and you're good to go.

You do have to consider the practicalities of these things. I went out with my GH7 >>> Voigt 42.5mm F0.95 >>> Sirui 1.25x setup last night and while it's hand-holdable, it's not something I'd want to be much heavier, and it's definitely a challenge to hold perfectly still, even with the GH7's anamorphic IBIS turned up to 11. One thing I noted last night was that you can pull focus on the Sirui with one finger, so with my right hand on the grip and my left palm-up under the middle of the rig taking the weight I can still pull focus with my thumb or index finger.

I really might.

Maybe that's why I shouldn't!

I've got a cache of images from this trip, and I've got some vintage equipment I want to test when I get home (and I might have ordered "a few" new lenses in the last few days off eBay) so I'll be looking to do a bit of a deep dive into this stuff in the coming weeks/month.

Here's a few more recent stills. This place is intoxicating. Most of the shots have so many layers that without the movement you can't tell what anything is, so these are some of the simplest compositions.

-

To further elaborate on what I was saying about matching some elements in post, here's an attempt to bring the Sirui closer to the look of the B&H. It's not perfect but it's definitely closer. I've played with contrast / levels / colours / diffusion. The only tools used should be available in all NLEs:

* Lift Gamma Gain wheels
* Log wheels
* WB controls
* Contrast control
* Curves
* Added a Gaussian Blur at 36% opacity for the diffusion / halation / glow effect

B&H Original:

Sirui (graded to match):

Sirui Original:
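For anyone wondering what the blur-at-36%-opacity trick does to the pixels, here's a rough sketch in plain Python on a single row of values. It assumes the blurred copy is mixed over the original with a normal (linear) blend, which is my reading of "36% opacity" and not a statement about what any particular NLE does internally. The blur radius (`sigma`) is a made-up value, as the post doesn't give one.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalised 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, sigma=2.0):
    """Gaussian-blur one row of pixel values, repeating the edge pixels."""
    radius = max(1, int(3 * sigma))
    kernel = gaussian_kernel(sigma, radius)
    last = len(row) - 1
    out = []
    for x in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            src = min(max(x + k - radius, 0), last)  # clamp to the row
            acc += w * row[src]
        out.append(acc)
    return out


def diffuse(row, opacity=0.36, sigma=2.0):
    """Mix the blurred row over the original at the given opacity."""
    blurred = blur_row(row, sigma)
    return [(1 - opacity) * o + opacity * b for o, b in zip(row, blurred)]


# A single bright pixel on black, standing in for a small highlight.
highlight = [0.0] * 10 + [1.0] + [0.0] * 10
glow = diffuse(highlight)
```

The highlight keeps most of its peak (the 64% that comes from the untouched original) but picks up a soft skirt from the blurred copy, which is why the image still resolves detail while looking less sharp.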

-

Just watching this video here, which is about the Sirui Ironstar 35mm T1.9 1.5x lens, and am amazed at how different the looks are:

This has loads of vertical bokeh:

and yet, this has triangular horizontal bokeh (I think it's called Coma?):

I mean, seriously:

Then he compares them to the Blazar Remus, which seems to have vertical bokeh that even gets more prominent towards the edges rather than less so:

and then compare that to a swirly bokeh image cropped to a broadly similar aspect ratio, where the bokeh on the very edges looks similar:

It's definitely not the same, but if it wasn't sharpened to within an inch of its life it might be a broadly similar aesthetic when put into a more normal scene.

Or this crazy combo of Helios 44-2 and Blazar 1.5x adapter, which seems to have horizontal streaking on the edges rather than vertical:

I'm beginning to think the bokeh is a lie, or potentially randomly generated!

-

Great shot of the cows. Like Mercer said, it's a window into chaos, and great use of the fence.

I'm finding myself leaning heavily into the more vintage aspects of late: vignetting / CA / heavy film look / etc. I'm not sure why, and I'll probably go too far and then pull back later once I've explored the territory. Perhaps the thing I like most about anamorphic is the non-circular bokeh, as it gives a surreal feeling - our eyes just don't do that, so it reinforces that feeling that movies happen in a parallel reality near ours rather than in the same one we live in.

I find that the level of anamorphic characteristics visible in these lenses varies greatly between shots. For example this one shows quite a bit of it.

and then this one seems to show almost none, including the complete lack of horizontal flares.

In fact, despite shooting in crowded streets for quite a few hours over a few outings, I don't think I saw a single horizontal streak in the camera or footage. I didn't stand in the middle of the road while oncoming traffic approached, but the conditions sure had their fair share of almost direct LED lights set against very dark surroundings.

Even in this image, where the almost-white billboard was just below clipping and the sky is very, very low, there are no streaks... (on the GH7 the DR is so good I can almost always protect the highlights and still pull things up in the grade)

@QuickHitRecord for reference the above shot was with the Voigt stopped down as I'd forgotten my vND filter, so that's how sharp the Sirui 1.25x can be, and this is how soft it can look too (not softened in post):

It's definitely a rabbit hole, but one I am very curious about in terms of the images. It's interesting to compare the aesthetic to the test I posted previously of a very degraded spherical setup, which in some ways is very similar and in other ways is very different.

Loving this thread and looking forward to doing more testing when I get home.

-

The "Jennette (GH5 + Kowa 8Z Anamorphic)" footage might be quite sharp actually, but the lighting is very soft (despite being quite directional), the grade of the video is very low contrast, the stream on YT is 1080p, and who knows what their export from their NLE was like. Have a pixel peep at the shot at 20s and see what you think. It seems like there's very little funk going on with that shot compared to more vintage glass. For example, compare it to some of the older lenses on ShareGrid: https://www.sharegrid.com/quadplayer Like the Hawk V-Lite '74, the Todd AO, etc, choosing the 50mm wide open option.

In your two shots, B&H vs Sirui, don't discount the impact that Halation / Bloom / Glow can have on a shot - the resolution stays the same but the sharpness is reduced, along with the perception of sharpness, because of the reduction in local contrast beyond the pixel level.

Yeah, you can always reduce resolution in post but you can't increase it (despite all this AI trickery trying to do this). Don't be afraid of blurring the image either. I suggest you make a list of all your NLE's effects that can soften / blur / diffuse / etc, then take a couple of example shots and apply each of those effects in turn and just see what looks you can get from these tools. It's work, but if you do it properly and label and export the images you will only have to do it once, and you might find that you can get a cheaper and more flexible lens and use some effects in post to get the look you want.

Also, don't forget that if you don't set the taking lens to exactly infinity then the focus of the taking and anamorphic elements won't ever be aligned, so things near the focal plane will either be blurring vertically or horizontally or a combination of both. Do this subtly and it will just knock off the sharpness of the focal plane but keep the rest of the image basically the same.

-

Great thread BTW. Modern sterile images are incredibly boring.

-

Here's a quick test from the Voigtlander 42.5mm F0.95 and Sirui 1.25x showing the overall quality and how it behaves with differing apertures. The Voigt is sharp when stopped down, but not when wide open. The colour shifts are from the Voigt.

F2.0

F1.4

F0.95

Here's an image from the other night to get a bit of a flavour. I've sharpened it quite a bit in post.

I've shot with this combo on my current trip and really like it, but it's really heavy and so I've been thinking about alternatives for getting a similar look. I'm starting to think of this as a two-part challenge: the first part is things that can only be done optically, like the bokeh (size, shape, CA, etc), and the second part is things that can be influenced in post (especially the softness of the focal plane). In this sense, I'm looking for glass that will give me the right bokeh, and I can then degrade the image in post using softening, vignetting, distortion (barrel / pincushion), CA (of the whole image) etc.

I'm surprised at how much the bokeh swirls:

The fact that swirly bokeh is just anamorphic bokeh at the sides of the image, and the wider the aspect ratio you end up using in the final video the more you're cropping off the top and bottom where the swirl goes from vertical to horizontal, makes me think that a very swirly spherical lens with a wide crop might be a passable alternative.

I will be investigating my vintage fast ~50mm collection on SB when I get home. These seem the easiest way to get soft images with character without huge weight and complexity and cost.

-

How dirty are you looking for? My Sirui 1.25x can look pretty dirty if you put it on a softer taking lens.

-

Not a clue, I just saw that there were some products available. A quick search reveals that the usual suspects offer them (Moment, Neewer, Smallrig, etc). Lots of them (or all of them?) will have their optics manufactured in the same factory, so they might all be similar optically. In which case, you're choosing form-factor (for example the Moment one is square and doesn't seem to have filter threads so doesn't look like it supports a vND) and perhaps the case / system that goes with that, and also price of course.

-

Interesting idea and seems like it might work well for some situations. I personally always use my phone in a drop-proof case and never take it out (as that just means the fit of the case gets loose and it lessens the protection) but the idea of a clip-on / clamp-on one seems sensible. I'd imagine that we'll see a number of third-party accessories come out for the 17 over the coming months. When I got mine there were only Apple cases available to be delivered immediately and the delivery dates for the rest were weeks away.

I took the GH7 >> Voigtlander 42.5mm F0.95 >> Sirui 1.25x anamorphic adapter out for a spin in the markets last night and the images were great. I'm not sure how much of that was due to the anamorphism of the lens, and how much it was just due to it not being pristine, but maybe that might be worth the extra fuss.

-

The more I use and grade my 17 Pro, the more I think that I'll work out a compact vND setup for it. The primary job for my phone is shooting ultra-fast with the default app in ProRes Log, but the footage is so good that it would be great to be able to put on a vND, swap to the BM Camera app, and then shoot manually with a 180-degree shutter.

In the default app, where it's exposing with the shutter, the only limitations I'm noticing are the short shutter speeds and the stabilisation in difficult situations (like when you're tired, hot and sweating, blood-sugar is up and down, etc). The stabilisation is actually really good in the longer focal lengths; it's the 13mm and 24mm ones that are the challenges, as you get the edges flopping around a bit.

I'm also wondering what the 1.5x anamorphic adapters might be like for this. To get a vND setup for it you have to get a dedicated case and a custom vND for that case (which aren't cheap), so if you're going to be spending decent money on these things anyway then maybe getting a case and anamorphic lens and then using a standard vND that fits the lens might not be that much more costly. I have no idea if the lenses are good, if they're worth it, or how easily they work with the 1x / 2x camera and the 4x / 8x camera, as the mount would have to move if you wanted to use it with the 4x / 8x camera.

I've seen a few 1.5x adapters, which would make the 24 / 28 / 35 / 48mm normal camera into a 16 / 19 / 23 / 32mm camera. The other camera would go from being 100 / 200mm to 67 / 133mm. Perhaps the best combinations in that bunch would be the 32mm and 67mm ones, perhaps with the 23mm (or 19mm) as very wide options for specific situations.
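The adapter numbers above are just the focal lengths divided by the squeeze factor, and the 180-degree shutter rule is similarly simple, so here's a small Python sketch of both. The helper names are hypothetical, and the 24 and 25 fps frame rates in the comments are only examples, not something stated in the post.

```python
from fractions import Fraction


def with_adapter(focal_lengths_mm, squeeze=1.5):
    """Horizontal-equivalent focal lengths behind an anamorphic adapter,
    rounded to the nearest millimetre."""
    return [round(f / squeeze) for f in focal_lengths_mm]


def shutter_time(fps, angle_deg=180):
    """Exposure time in seconds for a given shutter angle and frame rate."""
    return Fraction(angle_deg, 360) / fps


main_camera = with_adapter([24, 28, 35, 48])  # the 1x / 2x camera options
tele_camera = with_adapter([100, 200])        # the 4x / 8x camera options

# A 180-degree shutter exposes for half the frame interval,
# e.g. 1/48s at 24 fps or 1/50s at 25 fps (example frame rates).
exposure_24 = shutter_time(24)
exposure_25 = shutter_time(25)
```

Those slow-ish exposure times are the reason a vND comes into it: in daylight the shutter can no longer be shortened to control exposure.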

-

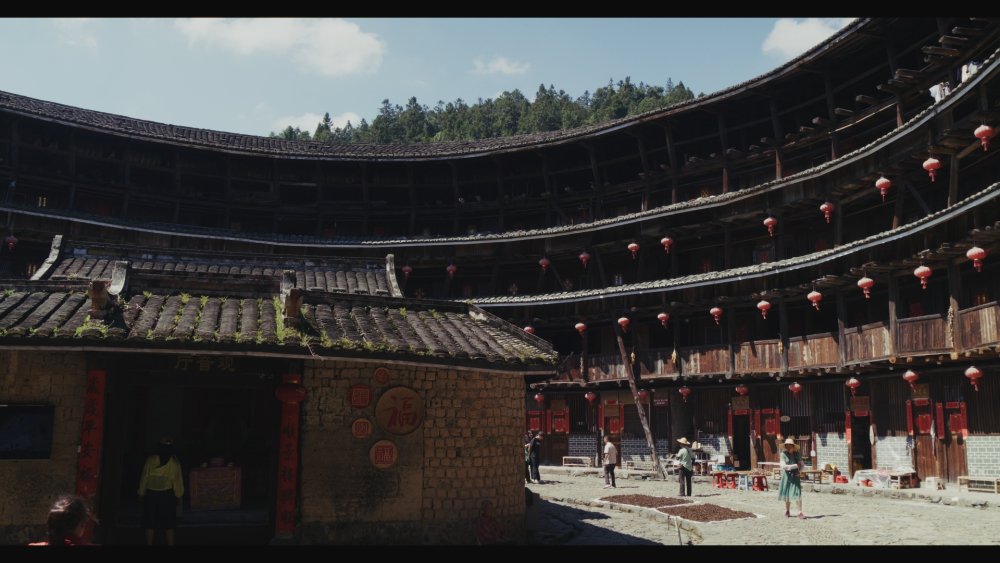

Tianluokeng Tulou clusters, Fujian Province, China.

These buildings have a very thick outer wall of earth and a 3-5 storey inner wooden structure that houses dozens of families. The structure is designed to be stable during earthquakes and secure against bandits. The oldest of the ones we visited was built in 1796.

These are just with a quick grade, mostly Resolve Film Look Creator. The DR in the scene is extreme, and while all the required info is in the files, I'm going to have to go heavy on the power-windows when I grade these properly.

Grabs from GH7 + 14-140mm zoom.

Grabs from iPhone 17 Pro shooting ProRes Log with default app.

The ProRes HQ Apple Log files grade really nicely, have heaps of DR, and are great to work with. The DR isn't quite as much as the GH7, but it's more than enough for these scenes. These were graded at a different time to the above GH7 shots so probably don't match.

All-in-all, the iPhone well and truly punches above its weight when you take into account its pocketability, the size of the sensor, and the incredible range in focal lengths. Imagine how much you'd have to pay to get a lens that can do 13-200mm FF equivalent FOV and has exposure levels between F1.78 and F2.8 across the whole range.

-

Another frame grab showing a bit more DR. Same Resolve + FLC workflow, but no grain added, so what you see below is the noise and compression artefacts from the ProRes.

Default exposure:

-2 stops to see where the clipping point is:

+2 stops to look at the shadow detail:

Very serviceable, especially considering this was shot from the window of a moving train (the OTHER reason why rolling shutter matters).

-

I started this thread by talking about the GH7, but I think the iPhone 17 Pro has also come of age (for me at least). My goal for using this is to keep it in my pocket, be able to shoot super quickly using the default camera app, and focus on the compositions and capturing the events in front of the camera while it does all the auto-everything required for a good quality capture.

First impressions and thoughts from a few weeks of using it.

* 4K ProRes HQ files in Apple Log 2 look great and are a joy to work with in Resolve (see examples below)
* All the lenses seem to work well, and even up to the 8x 200mm they are completely usable hand-held; if you lean your hand against something the 8x is almost locked-off
* It records 6 channels of audio, and they appear to all be independent and available in the NLE (see image below), which might(?) be useful in difficult situations where there's wind noise in one channel, or one channel clips, etc.
* While recording ProRes Log the default camera app shows you the log image and doesn't have an option to apply a LUT, so although it's a great way to be sure you're recording log, it's hardly ideal. Hopefully they fix this in an update.

Audio channels in Resolve:

Some frame grabs from out the hotel window in HK. Bear in mind these were shot with the default camera app, through multiple layers of tinted glass, and have had a film emulation grade put on top of them.

1x 24mm camera:

8x 200mm camera:

with a bit of sharpening:

with too much sharpening (unless you're a "cinematic Youtuber"):

1x 24mm camera (ignore the reflections in the window):

8x camera:

8x camera with sharpening:

and in terms of DR / latitude, here's the 1x image brought up ~2.5 stops:

I haven't tested it in low-light yet, but to me, all this essentially means that the camera is sufficiently technically capable that I can shoot with it without feeling like the technical factors are overly restrictive.
This is incredibly impressive, given that even cameras like the GH5 needed you to pay attention to their limits in some situations. For the first time I feel like if something happened to my 'real' camera and all I had to capture a trip is my phone I wouldn't feel like I'd stuffed up. This is the first trip I haven't packed a backup camera body, which has given me the ability to pack a couple of extra lens options, thus 'upgrading' the GH7 rig as well.

-

Correct (Proper) Color Management Settings in Premiere Pro?

kye replied to BlueBomberTurbo's topic in Cameras

If no-one is able to assist here, might be worth asking in the relevant sub-forum on liftgammagain.com where the colourists all hang out. Of course, you might get an answer that's more technical than you'd like...

-

The way I've used it in the past is there's a DCTL that will convert to/from OKLab. The pain is that it assumes you're in LogC3, so I have to do a CST before/after to get from/to DWG/DI, which I use as my timeline colour space. My next steps were to look at the licensing for the OKLab DCTL, and work out how to just go directly from DWG/DI to OKLab in my DCTL. I'd assume I'd just need to combine the matrices for DWG->LogC3 and the LogC3->OKLab together into one step, but I'm not sure if that's right.

The way I'd do it is to implement a function that can be applied to the LAB variables that is stronger in the target areas than the others, or only applies in the target areas. A simple way is to say something like: `if(A<0) { A=0; } if(B<0) { B=0; } X = (A * B);` Then use that as a mask where the transformation is multiplied by X, so if X=1 the full effect is applied and if X=0 no effect is applied.

What that will do is leave unaffected the three quarters of the colour circle where either A or B is negative, and for the quarter where they're both positive it will start at zero on the edges and then ramp up. This will ensure nothing in the image breaks, because every adjacent colour before the operation remains adjacent afterwards.

That will create an abrupt change in slope at the edges of the mask though, so you could (for example) square the mask before using it, so values of X that are very low will be vanishingly low and the surface created will be a smooth curve. A bit more sophistication can make the transitions at the top (near 1) smooth too.

The above is a transform that targets the A=B vector, but if you calculate Hue and Sat from the A and B values, then you can start to combine all sorts of values together, like having the value target a hue with a certain range and a saturation with a certain range, and then squaring that to target a location on the A-B plane. You can localise the intensity further by cubing X, or better yet, apply a variable factor which shifts X closer to 1 before it gets squared or cubed, so you can adjust it to the strength you want. While you're developing these things I'd map the variables to sliders in the plugin, so you can tune them, and when you find good values just hard-code them.

People have been calling for many years to be able to change the colour space of lots of controls, the way you can with tools like the Colour Warper in the spiderweb view. It would make grading so much easier if you could just set the colour space of the Saturation knob to be OKLab and be done with it, etc.
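To make the mask idea above a bit more concrete, here's a minimal sketch in Python (not DCTL, so it would need porting before it could run in Resolve). It follows the clamp, multiply, then square recipe from the post. The `full_at` normalisation is my own assumption: OKLab a/b values are small, so the raw product never gets near 1, and something has to define where the mask reaches full strength. All function and parameter names are hypothetical.

```python
import math


def quadrant_mask(a, b, full_at=0.04, power=2):
    """Mask that is 0 unless both a and b are positive, ramping up from
    the axes and shaped by `power` (2 = squared, 3 = cubed).

    full_at: value of a*b at which the mask reaches 1 (assumed; expose
             this as a slider while tuning)
    """
    x = max(a, 0.0) * max(b, 0.0)  # same as clamping A and B at zero
    x = min(x / full_at, 1.0)      # normalise so 1 means full effect
    return x ** power              # flatten the ramp near zero


def apply_masked(original, adjusted, mask):
    """Blend towards the adjusted value by the mask amount."""
    return original + mask * (adjusted - original)


def hue_sat(a, b):
    """Hue angle in degrees and saturation (chroma) from a and b."""
    return math.degrees(math.atan2(b, a)), math.hypot(a, b)


# Colours outside the positive quadrant are left alone...
untouched = apply_masked(0.5, 0.8, quadrant_mask(-0.1, 0.2))
# ...while a colour well inside it gets the full adjustment.
full = apply_masked(0.5, 0.8, quadrant_mask(0.2, 0.2))
```

Because the mask falls to zero continuously at the quadrant edges, neighbouring colours get nearly the same amount of adjustment, which is the "nothing breaks" property described above. The hard clamp at 1 is the remaining rough spot that the "bit more sophistication" would smooth out.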
