-

Posts

8,051 -


Everything posted by kye

-

The iPhone 17 Pro selfie camera has a square sensor, and when using the default camera app there's a button that swaps between it recording a 9:16 video and a 16:9 video. I don't know if you'd rate it as a "real" camera or not though!

-

I referenced a video previously from Cam Mackey, which explains it (with real examples and a bit of drama too of course). Based on the video and also from some chats I've had with a friend who runs his own studio specialising in commercial work, the main points are:

- If you're shooting on location for a brand, there are often lots of things in the background you want to blur out (other brand logos, construction, etc). Backing up or going to a wider lens means the background comes more into focus, which means you need a wider or faster lens to get the same background defocus, potentially meaning you have to sacrifice optical quality (which commercial clients don't like), hire/buy expensive lenses, and potentially deal with much heavier setups (bad if you're using them on a gimbal/rails/etc).
- Vertical deliverables will often need extra vertical space for logos and text to be added, so for commercial clients you need to deliver more height than you would normally include for horizontal video, because the images aren't used in the same way.
- In physical shops, you'll often see TVs mounted vertically showing ads, and you can walk right up to them. If you're cropping into a 16:9 and then that client is viewing the end result on a vertically mounted 4K TV that is almost as tall as an adult, you want your images to have as much resolution / sharpness as you can get, because the last thing you want is your client saying "why are our ads all fuzzy compared to our competitors? how much did we spend on this campaign?"
- You often don't have space to back up, especially considering that lots of corporate and brand content will be shot on location, and corporate especially is often shot in tiny conference rooms etc where you want as much space as possible to pull the subject away from the background for some separation (blur) and also to make lighting easier so there's less spill on the background.
- 90% of clients are expecting both horizontal and vertical deliverables.

The divide in this debate is between people shooting for themselves who don't deliver vertically, and people shooting for brands who have to. It's easy to think the only people shooting vertically are influencers, but for professionals it's the brands driving the demand (or really it's the people sitting on the train holding their phones vertically and scrolling).

If you do back up, your horizontal FOV is now going to include more at the very left/right edges of frame, so either you have to crop the landscape deliverables or you need to make sure the extra FOV is visually suitable for including in the frame.

-

Don't forget that there is a silent army of people who are making work for clients. I am in a number of private groups with professionals (shooting corporate, advertising/PR, etc) and things like open gate are absolutely critical for those amongst them doing commercial work. I thought that Cam's video on open gate was actually really good and explained it well. Basically every camera argument is people saying "I definitely need the things I use, and no-one needs the things I don't use".

-

Absolutely. I am a huge proponent of doing tests.

- Pick a lens and go out and shoot with it for a day, edit, grade, and export it, then watch it over and over again for a week or so and see how it makes you feel. Do it again. Do it with a different camera. Do it with a different technique.
- Pick a sequence of 5 shots and then shoot that sequence with several lenses, edit the sequences together and then compare them.
- Shoot a latitude test (the same shot, with each take at a different exposure) and then work out how to grade them in post to match the shots. This will check if you have your colour management set up correctly, and will also show you the limits of your camera.
- Take a series of shots and lower the saturation on all of them, and duplicate the shots many times in a timeline. Then test every method you can find to raise the saturation again, label and export them. Over a week or so look at them, compare them, see how each makes the footage feel, makes you feel.
- Take the same series of shots as above, but try every LUT, try every method to add contrast, every way to apply a tint, every way to change WB, every way to add a split tone, every way to sharpen, every way to soften, every way to grade.
- Test every look.. film emulation, VHS emulation, etc. Test every resolution. Every way to add grain. Every way to reduce noise. Every aspect ratio.

The goal is to learn how to see.
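For the latitude test described above, the matching step can be sketched numerically. This is a minimal sketch, assuming the footage has already been converted to scene-linear values by colour management (where one stop is a doubling); the function name and the 0.18 mid-grey reference are illustrative assumptions, not anything from a particular grading tool.

```python
def matching_gain(offset_stops: float) -> float:
    """Linear gain that brings a shot exposed `offset_stops` over (+) or
    under (-) the reference back to the reference exposure."""
    return 2.0 ** (-offset_stops)


MID_GREY = 0.18  # assumed scene-linear reference value

for stops in (-3, -2, -1, 0, 1, 2, 3):
    # What the camera would record for mid grey, ignoring clipping and noise.
    recorded = MID_GREY * 2.0 ** stops
    restored = recorded * matching_gain(stops)
    print(f"{stops:+d} stops: gain x{matching_gain(stops):.3f} -> {restored:.3f}")
```

If the colour management is set up correctly, shots corrected this way should line up in the mid-tones, and what remains different (noise in the underexposed takes, clipping in the overexposed ones) is the camera's limit showing.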

-

This is one of the 485 reasons why I look at all the latest FF cameras and just shrug then go back to my MFT cameras. Life without camera GAS is a very different experience..

-

Lighting is critical - without it the files are just blackness. The debate about artificial vs natural light is a large and complex one, but the fundamental is that you must learn to understand light and its various qualities. Soft vs hard, directional vs flat, etc. Especially what it does to the human face, assuming your work includes people. Perhaps a good exercise is to practice shooting light rather than shooting the physical objects that it hits. Take your phone and go out and just shoot as many different types of light you can. Do half-a-dozen photo walks with this as the only subject and you'll leapfrog the majority of photographers.

-

Looks like you can just change the URL of the map camera link to get previous months.. https://news.mapcamera.com/maptimes/2025年10月-新品・中古デジタルカメラ人気ランキング/ https://news.mapcamera.com/maptimes/2025年9月-新品・中古デジタルカメラ人気ランキング/ etc.
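The URL pattern above can be sketched as a small helper. This assumes the pattern seen in the two example links (year, unpadded month, fixed suffix) holds for other months, which is untested; `ranking_url` is a hypothetical name for illustration.

```python
BASE = "https://news.mapcamera.com/maptimes/"
SUFFIX = "-新品・中古デジタルカメラ人気ランキング/"


def ranking_url(year: int, month: int) -> str:
    """Build a monthly ranking URL following the pattern of the example links.

    Assumption: the month is not zero-padded (the examples show 10月 and 9月).
    """
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    return f"{BASE}{year}年{month}月{SUFFIX}"


for month in (10, 9, 8):
    print(ranking_url(2025, month))
```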

-

Benro are coming out with drop-in e-ND (electronic ND filter kit)

kye replied to Andrew - EOSHD's topic in Cameras

I had the vague impression that LCD panels had some element of polarisation to them, but maybe that's not true. However, even if it was true, the fact you haven't noticed any probably means the polarisation isn't changing direction as the strength varies. I do a lot of tests where I match different shots in post, things like latitude tests etc, and if I use a vND to control exposure then I'll end up with two shots where the subject is the same WB/exp but the sky or the level of reflections in water/glass will be completely different, which ends up being the polarisation from the rotating element of the vND being a different angle between the two shots. -

Benro are coming out with drop-in e-ND (electronic ND filter kit)

kye replied to Andrew - EOSHD's topic in Cameras

Interesting product. I couldn't see from the images how it is powered, but it looks like maybe it has a USB-C port on top, so maybe it's rechargeable that way. I always assumed that such a thing would sit between the camera and lens (like those adapters with drop-in filters) and could be powered by the mount, but obviously this couldn't do that. If we assume its total range is ND3-64 then that makes it ~1.5-6 stops. Not a bad range - I use a 1-5 stop vND when out and about and find it has enough range for most run-n-gun conditions. I gather e-ND filters are much better than vNDs with colour accuracy and not messing with the image too much (e.g. with polarisation etc)? -
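The ND3-64 estimate in the reply above follows from stops being the base-2 logarithm of the ND factor. A minimal sketch, assuming "ND3" and "ND64" are filter factors (light divided by 3 and by 64) rather than optical densities:

```python
import math


def nd_factor_to_stops(factor: float) -> float:
    """Convert an ND filter factor (e.g. ND64 = 1/64 of the light) to stops."""
    return math.log2(factor)


for factor in (3, 64):
    print(f"ND{factor}: {nd_factor_to_stops(factor):.2f} stops")
```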

A very good YouTube channel by British Photographer Harry Borden

kye replied to Andrew - EOSHD's topic in Cameras

Same, started watching a few months ago. -

A primer on the fundamentals might be useful as a refresher too...

-

Motion cadence will be impacted by every step in the image pipeline from the sensor onwards. The way to think about this analytically is that anything that influences the quality or quantity of the motion blur within each image will impact the motion cadence, as will anything that lets the contents of any frame influence the surrounding frames. So it will be influenced to some degree or other by:

- shutter speed
- sensor modes (RS, etc)
- in-camera NR
- in-camera compression (codec, especially bitrate and IPB vs ALL-I, etc)
- processing in the NLE (NR, sharpening, colour grading, noise, etc)
- NLE export compression (codec, especially bitrate and IPB vs ALL-I, etc)
- streaming platform processing (NR, sharpening, colour grading, noise, etc)
- streaming platform compression (codec, especially bitrate and IPB vs ALL-I, etc)
- viewing device refresh rate vs video FPS mis-matches
- viewing device motion smoothing settings
- ambient viewing conditions and screen view angle
- etc

I think of there being three dimensions within video:

- There are individual-pixel things that impact each part of the frame in isolation, like temp, tint, exposure, contrast, saturation, etc.
- There are spatial things where one part of the frame influences other parts of the frame, like softening, sharpening, halation, bloom, NR, etc.
- There are temporal things where parts of one frame are influenced by the content of other nearby frames, like temporal NR, grain algorithm, etc.

We talk a huge amount about the first category, we talk anecdotally about the second one but no-one is really tackling it as a whole, and the third one is rarely even discussed.
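The three categories above can be illustrated with a toy example. This is only a sketch with made-up numbers: the "clip" is three frames of five luminance values with a bright object moving one pixel per frame, and the three functions are simplified stand-ins for exposure gain, softening and temporal NR, not how any real NLE implements them.

```python
# Toy clip: 3 frames, each a single row of 5 luminance values (made-up data).
clip = [
    [0.1, 0.1, 0.9, 0.1, 0.1],
    [0.1, 0.9, 0.1, 0.1, 0.1],
    [0.9, 0.1, 0.1, 0.1, 0.1],
]


def per_pixel_gain(frame, gain):
    """Per-pixel: each output depends only on the same input pixel."""
    return [value * gain for value in frame]


def spatial_blur(frame):
    """Spatial: each output depends on neighbouring pixels in the same frame."""
    blurred = []
    for i in range(len(frame)):
        window = frame[max(0, i - 1): i + 2]
        blurred.append(sum(window) / len(window))
    return blurred


def temporal_average(frames, index):
    """Temporal: each output depends on the same pixel in nearby frames."""
    window = frames[max(0, index - 1): index + 2]
    return [sum(values) / len(values) for values in zip(*window)]


middle = 1
print("per-pixel:", per_pixel_gain(clip[middle], 2.0))
print("spatial:  ", spatial_blur(clip[middle]))
print("temporal: ", temporal_average(clip, middle))
```

In the temporal result the moving object is smeared across all three positions it occupied, which is the kind of frame-to-frame influence that changes how motion reads.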

-

I think there's an echo in here....

-

Canon EOS R6 Mark III and Sony a7 V compared - Canon better specs but...

kye replied to Andrew - EOSHD's topic in Cameras

Cheap zoom lenses (and especially kit-zooms) are often the BEST lenses. I wrote a whole thread on it here including examples and comparisons, but the summary is:

- They're cheaper than almost any alternative
- They're flexible and very fast to use, because zooming is always faster than changing primes
- They improve your edits because you can get greater coverage and variety of shots in the same amount of time / setups
- They are in the "sweet spot" between being too sharp and looking clinical and being too vintage, but their aberrations are often actually very aligned with the qualities of vintage lenses people want, just dialled down to a modest amount
- They have smaller apertures so are easier to focus / less prone to focus errors and don't need as much ND in brighter situations
- They often have native AF and are kept updated with firmware updates
- They often have OIS
- Those with variable apertures are much closer to being constant DOF, where the more you zoom in the smaller the aperture gets, counteracting the effects of the longer focal-length, which makes your footage more consistent

They don't get a lot of love online, but that's because most of the discussion online is about the things that are THE MOST of something (the sharpest, the newest, the biggest, the most expensive, etc) and being cheap and good is only really attractive to people who actually shoot in the real world and where a happy middle ground is desirable. My most used lens is a variable aperture zoom lens, despite me owning many much "better" lenses. -

This video from @Tito Ferradans has some info on it, but he doesn't show everything needed so you might need to watch a bunch of YT videos and search to see if other people have shared how they do it.

-

I sympathise deeply - well done for getting through it. I shot a number of trips with a nice camera + smartphone + action camera, and only in the last few months did I shoot my first trip where every camera had colour management support.

Matching footage from multiple older/budget cameras that don't have log profiles and colour management support is perhaps one of the hardest challenges in colour grading. Not only are the colours and gammas a challenge, but mismatched sharpness, NR and compression artefacts can also make clips stand out from each other. I've lost count of the number of times I spot a 1-2s clip from an action camera spliced into a high-end production based solely on how much sharper it is than the rest of the footage, which could all be avoided with a simple low-strength small-radius blur to take the edge off.

Obviously ultra-low-budget docos are often subject to using whatever cameras are available at the time, and docos often need to use more rugged cameras like action cameras etc to survive different conditions, not to mention dealing with footage from other sources.

To me, a camera has to be pretty bad or pretty ill-suited to make it worthwhile to break consistency with the other material already shot. As much as you can, pick a camera, pick a few lenses (or just one lens), do your due diligence to get familiar with them, and then just get on with it. It's only when you can see past the camera and the lens and the filters that you'll be able to see what you're pointing the camera at.

-

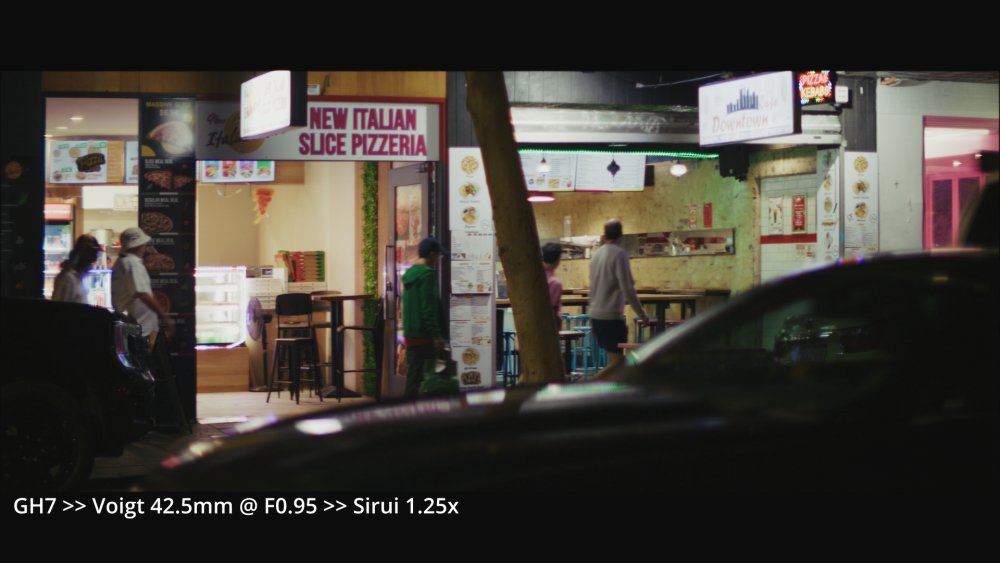

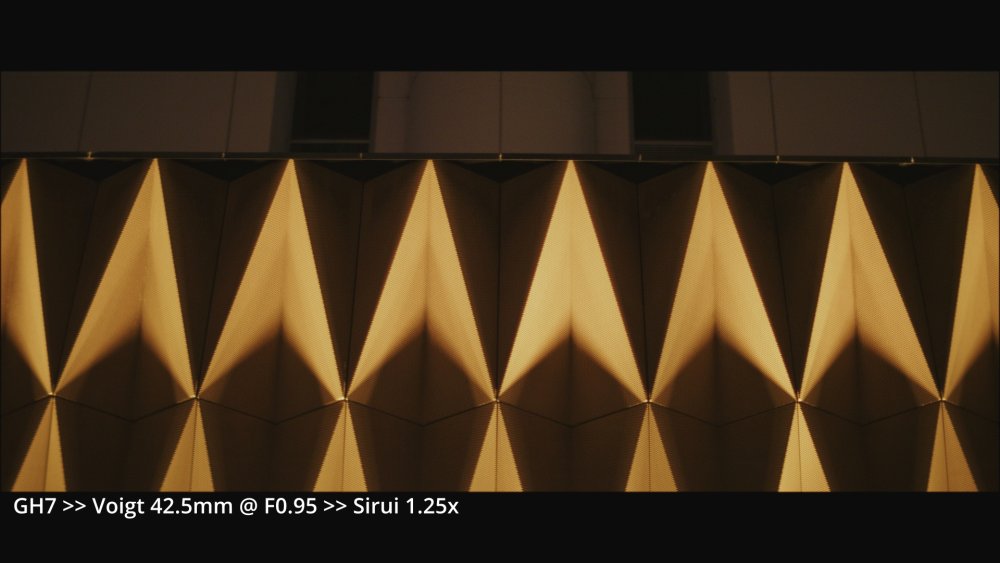

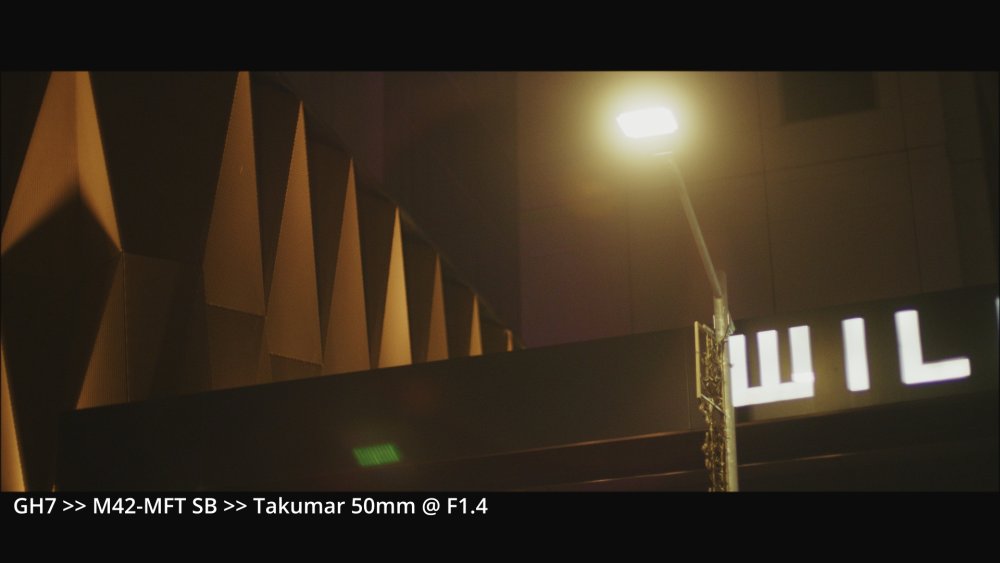

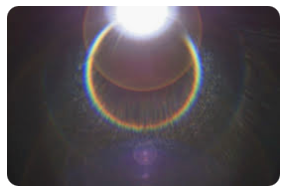

My Takumar 50mm F1.4 arrived and I took it out to compare it with the Voigt 42.5mm F0.95 with Sirui 1.25x adapter setup. The Voigt+Sirui is equivalent to 68mm F1.5 and the Takumar+Speedbooster is equivalent to 71mm F2.0, so a bit longer and a bit slower.

In order to avoid changing lenses all the time, I did a little lap with the Voigt+Sirui, then did another lap with the Takumar and re-created the compositions from the first lap (as well as grabbing other shots too). These images have had a film-emulation applied, and have had exposures matched, but no other changes, so things like contrast and WB etc should be the same.

My impressions:

- Voigtlander is softer at F0.95 than the Takumar at F1.4
- I haven't compared them with the Voigt stopped down, but I'd probably rather get a bit more subject separation than sharper images.
- The extra sharpness of the Takumar makes the focus peaking a lot easier to use when using the screen (using the EVF you can see what is in focus, but on the monitor you need peaking on)
- The focus ring on the Takumar is a lot looser (not that damped but not too loose either), but of course it's backwards
- The extra width of the Voigt is apparent. It makes me wonder if the Speedbooster is a little less boosting than I claimed.
- The Sirui has a lot of coma on the edges (stretching light sources horizontally) so the bokeh seems more horizontal than vertical on lights, whereas the Tak seems to have a zoom-blur sort of effect which is a bit odd
- The Tak has slightly lower contrast (hard to see but I noticed it when trying to match exposures)

In addition to those, I also grabbed these with the Takumar:

Swirly bokeh! and it's not a Helios!!

It has a nice halation/glow but the ghosts from the light sources are real. This is something I am thinking a lot about because I find them to be very distracting.

Seems sharp at the edges at closest focus.

The bokeh is confusing - sometimes with bubbles and sometimes not.

I actually find that the response to light sources in this lens is very complicated - sometimes it stretches along the spokes of a wheel and other times along the rim of the wheel.

Also something I noticed but isn't really visible in stills is that light sources pointed straight at the camera have a flare that's like a loop, like this, which can look pretty distracting too.

My conclusions are:

- The focus being lighter and peaking being more obvious makes it a bit easier and faster to use, so much so that I changed my process to where I'd adjust the vND, then hit record and then focus in the 2s it takes the GH7 to begin recording
- The size and weight difference is significant and I found I was able to shoot people from a lot closer with it than the Sirui
- The small size and weight mean it's a no-brainer to pack on trips where I'm reluctant to pack the Voigt/Sirui combo

Next is to test it with an oval insert to get some stretched bokeh. As a proof of concept it certainly looks promising.
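The "a bit longer and a bit slower" comparison in this post can be put into numbers. This is a minimal sketch that follows the post's own convention of applying the 2x MFT crop, the 0.71x speed booster and the 1.25x horizontal squeeze to both focal length and F-number; the function names are illustrative, and the anamorphic figure is a horizontal-only equivalent.

```python
import math

MFT_CROP = 2.0  # Micro Four Thirds crop factor relative to full frame


def ff_equivalent(focal_mm, f_number, speedbooster=1.0, squeeze=1.0):
    """Full-frame horizontal equivalent focal length and F-number."""
    factor = MFT_CROP * speedbooster / squeeze
    return focal_mm * factor, f_number * factor


def stops_between(n_fast, n_slow):
    """Difference in stops between two F-numbers (light scales with 1/N^2)."""
    return 2 * math.log2(n_slow / n_fast)


voigt_focal, voigt_f = ff_equivalent(42.5, 0.95, squeeze=1.25)
tak_focal, tak_f = ff_equivalent(50, 1.4, speedbooster=0.71)

longer_pct = (tak_focal / voigt_focal - 1) * 100
slower_stops = stops_between(voigt_f, tak_f)

print(f"Voigt+Sirui: {voigt_focal:.0f}mm F{voigt_f:.1f}")
print(f"Takumar+SB:  {tak_focal:.0f}mm F{tak_f:.1f}")
print(f"Takumar setup is {longer_pct:.1f}% longer and {slower_stops:.1f} stops slower")
```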

-

Interesting lens - I missed it when I was looking around, but like I said, 75mm is a bit long. I'd have to pair it with an adapter to make it a bit wider!

-

What lenses would you suggest? The GH7 combo is equivalent to a 68mm F1.5 but I can't find anything with a shallower DOF without it being too long. The fastest things I can find are 75mm F1.4 lenses that are a bit longer than I'd like, or 50mm F0.95 lenses but they're deeper DOF than this. You'd think it's a no-brainer with FF but I couldn't find anything without also adding adapters. I was hoping I'd missed something!

-

It's quite easy to focus. Physically it's well damped and optically it's really sharp so the limitation is in how sharp the taking lens is and what sort of monitoring and peaking you have on the camera.

Minimum FD is around 60-something cm, which I think is what the spec says. IIRC I tested it on the Voigt 17.5/0.95 and didn't really notice differences between that and other lenses, although I'm not doing much close up stuff.

Yep, why stop down? 😄

Test shots here: More shots here: and here:

The GH7 is a dream to use. The only "downsides" are it's not FF, it's physically large/heavy/chunky, it doesn't have a 1.25x anamorphic de-squeeze (so I use the 1.33x one which works fine), and the punch-in focus assist only goes to 6x. That's literally everything I can think of.

That would only let me focus closer, wouldn't it? I don't find close focus to be an issue with it, just looking for something with shallower DOF.

My Takumar 50mm F1.4 arrived today, so I'll be playing with that.
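On the de-squeeze point above, a quick calculation suggests why monitoring a 1.25x adapter with the 1.33x setting "works fine". This is a sketch under two assumptions not stated in the post: that the recording is 16:9, and that the camera's 1.33x setting is exactly 1.33.

```python
LENS_SQUEEZE = 1.25       # Sirui adapter squeeze factor
MONITOR_DESQUEEZE = 1.33  # de-squeeze setting used for monitoring (assumed exact)
RECORDED_ASPECT = 16 / 9  # assumed recording aspect ratio

true_aspect = RECORDED_ASPECT * LENS_SQUEEZE          # correct de-squeezed shape
preview_aspect = RECORDED_ASPECT * MONITOR_DESQUEEZE  # shape shown on the monitor
stretch = MONITOR_DESQUEEZE / LENS_SQUEEZE            # horizontal over-stretch

print(f"correct aspect: {true_aspect:.2f}:1")
print(f"preview aspect: {preview_aspect:.2f}:1")
print(f"preview is {(stretch - 1) * 100:.1f}% too wide")
```

A preview that is only a few percent too wide is easy to frame with, as long as the final de-squeeze in post uses the lens's real 1.25x factor.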

-

On my last trip I shot with the GH7 >> Voigtlander 42.5mm F0.95 >> Sirui 1.25x anamorphic adapter, and was really taken with the images, which remind me of 90s/00s cinema. But the setup was big, heavy, and didn't have as much shallow DOF as I wanted as I was shooting a lot of compositions at a distance without having many/any things in the foreground.

Optically it's equivalent to a 68mm F1.5 on FF. A good horizontal FOV, although it's a 'between' amount and I would go wider and crop rather than going tighter.

Physically it's large and heavy, weighing 2.1kg (4.6lb), and with the 82mm front gave me very little stealth factor, further justifying shooting street with such a long focal length. Despite me shooting in busier areas and stopping when I first come upon a composition, people clocked me very frequently.

In terms of the brief, I think that I want:

- similar horizontal FOV (H-FOV)
- shallower DOF
- similar softness (the Voigts are nicely soft wide open, taking off the digital edge beautifully)
- funkier bokeh, preferably stretched consistently vertically rather than swirly / cats-eye
- smaller / lighter
- not thousands of dollars

I'm pondering how to get there, I've figured a few potential pathways...

Vintage speedbooster - GH7 >> Speedbooster >> ~50mm F1.4

I already own a M42-MFT speed booster, which combined with a ~50mm F1.4 lens would give a 71mm F2.0 FOV. This is slightly deeper DOF but is only AUD200 or so, and when combined with an oval insert, can give great bokeh. This is a proof-of-concept shot with a M42-MFT speed booster and a 50mm F1.8 lens with a couple of bits of paper stuck to the rear lens element:

This is definitely a vintage / funky approach, but isn't so fast. This leads us to the elephant in the room, which is that while MFT is excellent at a great many things, very shallow DOF isn't really one of them. We are using a 0.71x speed booster already but need to decrease the crop-factor further.

Vintage speedbooster + wide-angle adapter - GH7 >> Speedbooster >> ~50mm F1.4 >> Wide-angle adapter

If we add a 0.7x wide-angle adapter (WAA), we end up with a crop factor of 0.994, which is essentially FF. This seems promising as TTArtisan noticed that everyone-and-their-dog wanted a longer telephoto lens to go with their M42 Helios, and gifted us an M42 75mm F1.5 swirly bokeh master. Combined with the SB + WAA that gives us a 75mm F1.5 which is a bit longer than I'd want, but is interesting.

BUT, and it's a big but (I cannot lie)

Spherical wide-angle adapters seem to be universally rubbish. I bought two ultra-cheap WAA and they were rubbish (when shot with a fast lens wide-open anyway) which is to be expected, but recently I snapped up a Kodak Schneider Kreuznach Xenar 0.7x 55mm adapter, which should be a fine example of the breed, and it was also pretty rubbish. Certainly, more 'vintage' than I am looking for. Subsequent research led me to conclude that people stopped making these adapters once the mirrorless revolution happened and people stopped using fixed-lens camcorders. I'd be happy to be proven wrong.....

However, there is one type of wide-angle adapter that is available with modern optical standards, and that's the anamorphic ones, which leads us to...

More anamorphic - Blazar Nero 1.5x anamorphic adapter

This is probably my ideal anamorphic adapter in many ways (but one) as it's smaller and lighter than the Sirui, isn't quite as sharp (I don't mind) and has more squeeze to boot. If I use it on my 42.5mm Voigtlander lens it widens the HFOV compared to the Sirui (not ideal) and also doesn't change the DOF. If we combine its 0.667x HFOV boosting with my 0.71x speed booster we get a crop factor of 0.95 - wider than FF! So, combined with that 75mm F1.5 TTArtisan lens, that's a 71mm F1.4 equivalent. Compared to the 68mm F1.5 we started with that's only a slight improvement in DOF, and only a slight improvement in size and weight, but we've paid the better part of AUD2000 to do it.

...and for that kind of money, we can just buy a FF camera.

Go Full Frame - but what to buy??

Remembering our original goal, the option that stood out to me was the Lumix S9. It's small, has a flippy screen, and is within consideration at around AUD1500 used. The OG S5 is similarly priced and I hear it has some good mojo. There might be others too. Ideally, I'd go with a smaller body, as if I'm paying this much for a new system, starting with a GH7-sized body seems counter-productive.

The S9 is very similarly sized to my GX85 and here's a comparison of sizes... [GH7 + Voigt 42.5/0.95 + Sirui] vs [GX85 + SB + 50mm F1.8]:

The S9 and GX85 weights are similar too - those setups are 2110g vs 800g, so the GH7 rig is more than 2.5x the weight.

In terms of lens options, this is a world I am unfamiliar with, but considering we've just blown most of our budget on the body (and spare batteries etc) lenses can't cost too much. Considering I am inclined towards cheap/funky/vintage MF lenses, I figure the options include things like:

- Vintage 50mm F1.4 lenses (like a Takumar) on a dumb adapter
- The swirly 75mm F1.5 TTArtisan on a dumb adapter
- The 7artisans 75mm F1.4 in L-mount

These aren't shallower DOF at all! FF is a lie! (I kid.. well sort-of anyway)

Even if I spring for more expensive options like a 50mm F0.95 that still has deeper DOF due to the shorter focal length, and it doesn't look like F0.95 lenses for FF are affordable for anything other than 50mm.

If I start adding serious weight again with things like a Sirui 150mm T2.9 1.6x and then attach my 1.25x to it, I'd end up with a 150mm T2.9 2x anamorphic lens, which has an HFOV equivalent of 75mm T1.45, but the combo weighs almost as much as my GH7 rig does in total! No wonder the replacement series from Sirui were made from carbon fibre!

Perhaps the only real jump in shallower DOF is to combine FF with an F1.4 prime and an anamorphic adapter, like FF + MF 85/1.4 + Sirui 1.25x adapter which would give an HFOV equivalent of 68mm F1.1, which is definitely faster. If this was the S9 then it would be smaller and lighter, but is still 75% of the weight and most of the size of my GH7 rig.

But I suspect there are better more 'inventive' options. I want cheaper and lighter and I'm willing to 'pay' for it in image quality (actually I'd PREFER less sharp glass with more funk) so there have got to be other paths too.

One I can see is to continue the speed-boosting pathway with a L-mount speed booster like 0.71 EF-L / FD-L / PL-L / NIK-L and then pair it with a ~100mm FF lens that might have a greater than FF image circle and not only get a shallower DOF but also get to see some funk at the edges (or even the actual edge of the circle which might be really cool). Unfortunately vintage 100mm F1.4 lenses don't seem to be common or cheap.

My current leanings are to accept defeat and just go with the [GH7 >> M42-MFT SB >> oval cutout >> vintage 50mm F1.4 Takumar] option, which gives a 71mm F2.0 FOV, and only costs a couple of hundred dollars.
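The pathways in this post all come down to multiplying a chain of factors (sensor crop, speed booster, wide-angle or anamorphic adapter) and applying the result to both focal length and F-number. A minimal sketch of that arithmetic, using only the figures quoted in the post; the setup labels and the `equivalent` helper are illustrative, and anamorphic figures are horizontal-only equivalents.

```python
from math import prod

MFT = 2.0            # MFT crop factor relative to full frame
SPEEDBOOSTER = 0.71  # M42-MFT speed booster
WAA = 0.7            # spherical wide-angle adapter
NERO = 1 / 1.5       # Blazar Nero 1.5x squeeze, as a horizontal widening factor
SIRUI = 1 / 1.25     # Sirui 1.25x squeeze, as a horizontal widening factor

# label: (focal length in mm, F-number, chain of multipliers)
setups = {
    "GH7 + Voigt 42.5/0.95 + Sirui 1.25x": (42.5, 0.95, [MFT, SIRUI]),
    "GH7 + SB + 50/1.4": (50, 1.4, [MFT, SPEEDBOOSTER]),
    "GH7 + SB + 75/1.5 + 0.7x WAA": (75, 1.5, [MFT, SPEEDBOOSTER, WAA]),
    "GH7 + SB + 75/1.5 + Nero 1.5x": (75, 1.5, [MFT, SPEEDBOOSTER, NERO]),
    "FF + 85/1.4 + Sirui 1.25x": (85, 1.4, [SIRUI]),
}


def equivalent(focal_mm, f_number, multipliers):
    """Return (combined factor, FF-equivalent focal length, FF-equivalent F-number)."""
    factor = prod(multipliers)
    return factor, focal_mm * factor, f_number * factor


for label, (focal_mm, f_number, multipliers) in setups.items():
    factor, eq_focal, eq_f = equivalent(focal_mm, f_number, multipliers)
    print(f"{label}: x{factor:.3f} -> {eq_focal:.0f}mm F{eq_f:.1f}")
```

Laying the options out this way makes the post's conclusion easy to see: most pathways land within a fraction of a stop of the original 68mm F1.5, and only the full-frame plus anamorphic combination moves the F-number meaningfully.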

-

Seems like another hidden cost of the internet's obsession with AF. The more everyone screamed about it from the rooftops the less that manufacturers cared about anything else. The worst thing about the camera industry is the BS that the online communities prattle on about. Now we have clinical lenses and megadollar-megapixel cameras that fill up your card in 10s flat with 8K 60p RAW and require all kinds of Film Emulation in post to get rid of the sensation that digital scalpels are being hurled into your eyeballs when you look at the footage. No wonder vintage lenses have never been more in-demand. ...or vintage point-and-shoot cameras or digicams for that matter.

-

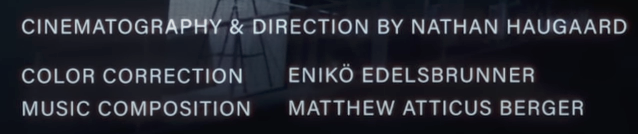

No reason that the iJustine channel can't publish a great video..... Well done to the film-making team that created it: Strange that there's no credit for editing. Maybe that was also Nathan. Well done to Apple for creating a phone that can produce results like this when used competently. Any YouTuber with the desire and sufficient resources should be able to replicate such a thing. It is a nice example of what the tech can do when used correctly by competent film-makers. The night shots were pretty good. I've found it to have impressive DR and low-light capabilities, but this was slightly more than I was anticipating. Those areas of Tokyo are probably quite well lit though, and I bet there was a healthy dose of NR in post for select shots. The results are still good though.

-

I certainly like the combination of vintage lenses with modern anamorphic adapters. In some ways the images might be similar to the modern lenses with vintage anamorphic adapters. It's certainly much more accessible..

Please do, and make sure to post it here so I'll find it - I get the sense that there might be a lot of tricks to get the most from it.

I pulled up Buyee and a quick search for "50mm F1.8 m42" revealed quite a number of things:

- Lots and lots of lenses that are many hundreds of dollars
- It's got the search engine from Amazon, not the one from ebay... I would categorise its approach as a "vibe search".. like "that's a lens right? ok, sure.. here are a bunch of lenses - knock yourself out!" It ranked the Takumar 28mm F3.5 surprisingly high up in the list, considering that further down in the list were quite a lot of Takumar 55mm F1.8 m42 lenses (which match 2 of my search words instead of the 28/3.5 which matched ZERO of my keywords!).
- Damn, Takumar made a lot of 55mm F1.8 lenses

I've been buying vintage audio valves/tubes from all over the world since the early 00s and have found the Japanese to be the hardest market to buy from, especially as buying vintage stuff likely means dealing with older vendors who are less likely to be interested in dealing with language barriers, international postage, customs, etc. Back then it was pretty common for us to search the net, or forums, or even business directories etc, or just see something pop up on ebay and we'd contact the seller and then buy all of their stock from them. Most of the time we couldn't even get the Japanese vendors to reply to our emails.

-

Going back to the GH7, one thing that surprised me on the trip was the GH7 + Voigtlander 42.5mm F0.95 + Sirui 1.25x anamorphic adapter combination. When I saw that the Sirui was under USD300 / AUD500 I was stunned as anamorphic was something that I had dismissed as simply being inaccessible to me - too expensive / difficult / complicated. I ordered it immediately. When my tests revealed it was quite happy paired with the Voigtlander F0.95 primes shot wide open, I decided to take the 42.5mm on the trip with me as a creative experiment. The FF horizontal equivalent for the 17.5mm and adapter is 28mm F1.5, which is interesting but I'm not a huge fan of the 28mm FOV, so I chose the 42.5mm lens to pair with it, which gives an equivalent of 68mm F1.5. It's a longer lens for street shooting, but will give me some distance to work with (useful for a rig that is as large as this combination) and will give some great shallow DOF too. Here are some sample frame grabs from the night markets in Xiamen Island, China. When I used it in Hong Kong I found the focal length really came into its own. There were so many layers and so much movement, the best shots are just a confusing mess without the motion that helps you identify what is going on. Here are some more minimal frames. I have pushed the grade in these very heavily. Loads of contrast and vignetting and a strong application of Film Look Creator too. The Voigtlanders are soft wide-open too, adding to the look. IIRC these images were shot with the lens stopped down a bit (I'd forgotten my ND filter!) so it can be quite well behaved. It has sent me down a rabbit hole of looking at how to get a more vintage S35 / FF look. More on that later. 
My mini-review of the Sirui is this:

- It's very affordable
- It's large and heavy, but build quality feels very good and seems to have tight tolerances
- It's sharp
- It doesn't flare much at all, even shooting in the streets at night I only saw flares on a few occasions when the headlights of a car hit the lens just right
- The focusing mechanism is a joy, I used one finger to focus it for a lot of the time I was using it
- The bokeh is surprisingly cats-eye / swirly, and doesn't have that strong a vertical stretch (at 1.25x it's only a mild squeeze factor so that makes sense)
- It has a bit of coma with bright lights

If you like what you see above, I'd recommend it. I started off thinking that my bag was very heavy and not taking this combo next trip would be a good way to lighten my luggage a bit, and on the trip home was thinking that I'll take it everywhere and just pack fewer clothes.