
Everything posted by kye

-

Nice! What formats and focal lengths is it compatible with? And what camera and taking-lens combos are you planning to use with it?

-

My take on the situation is that I'm super-happy with the GH7. It basically does everything I want, and apart from ultra-sharp, ultra-shallow DOF, it does pretty much everything FF does. It does low-light very well, and only trails FF cameras in low light because they have gotten crazy good.

-

I went with the GH7 as I'm video-first and need the heat management etc. The G9ii is an incredible camera though. There's a whole thread about it here: https://www.eoshd.com/comments/topic/90374-panasonic-g9-mark-ii-i-was-wrong/ Do you have either one?

-

It was released in mid-2024 🙂 Definitely a strong camera though. I expect mine to be useful for many years, and TBH, I haven't felt jealous over a new camera release since buying it.

-

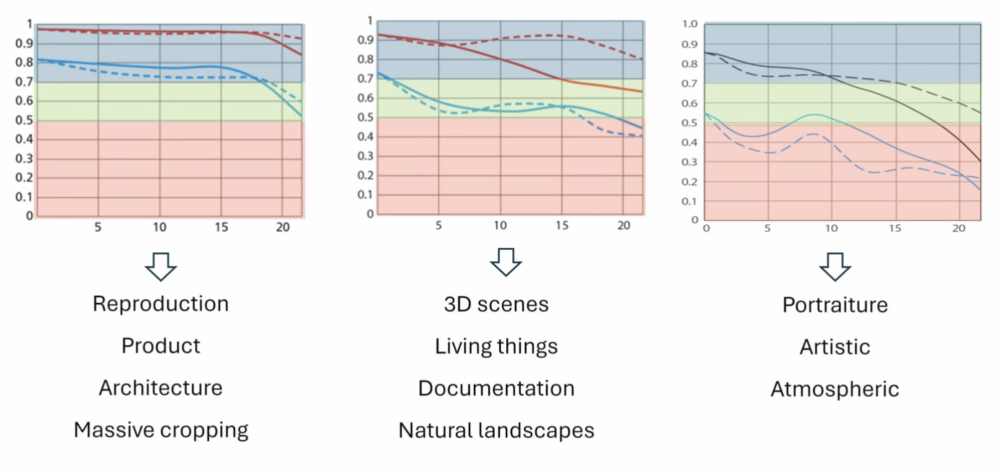

For anyone interested in understanding a bit more about the relationship between technical measurements and aesthetic experiences, this video is very interesting. Perhaps the challenge is that many people believe there's a golden zone of sharpness, softer than clinical glass but sharper than poorly performing vintage glass, but as there's very little qualitative data, it's hard to know how a given lens performs. The video gives a non-technical primer on MTF charts and discusses what potential uses there are for different levels of performance, culminating in this chart. I particularly like this approach because the thinking goes well beyond "good vs bad" lenses and takes the much more mature approach of "the right tool for the job". This is an example of this kind of thinking from the video: Recommended viewing if you want to go beyond "I like this lens" and "I don't like that lens"!
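For anyone who wants the one-line maths behind an MTF chart: the usual definition of the modulation (contrast) of a line pattern is (Imax - Imin) / (Imax + Imin), and the MTF at a given spatial frequency is the modulation in the image divided by the modulation of the target. A minimal Python sketch; the intensity values are made-up illustrative numbers, not measurements of any lens.

```python
def modulation(i_max: float, i_min: float) -> float:
    """Michelson contrast of a line pattern: (Imax - Imin) / (Imax + Imin)."""
    return (i_max - i_min) / (i_max + i_min)


def mtf(object_max: float, object_min: float, image_max: float, image_min: float) -> float:
    """Fraction of the target's contrast that survives through the lens at one spatial frequency."""
    return modulation(image_max, image_min) / modulation(object_max, object_min)


# Hypothetical example: a pure black/white target (1.0 / 0.0) that the lens renders
# as light grey / dark grey (0.75 / 0.25) at some line frequency.
print(mtf(1.0, 0.0, 0.75, 0.25))  # 0.5, i.e. 50% of the contrast retained
```

An MTF chart plots this ratio against spatial frequency (and position across the frame): "clinical" glass keeps it high at fine detail, while softer glass lets it fall away sooner.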

-

I find it incredible that people talk about switching bodies / systems all the time without really considering the wider ecosystem of lenses and accessories. Hell, I've stayed within the MFT system, and whenever I get a new MFT body there are still all these extras that I end up being surprised by, which inflate the price by 10-15%. If I was re-buying lenses then it would double/triple/quadruple the cost. I have no idea what the economics of lenses are, but I wouldn't be surprised if the camera body is now a loss-leader and the lenses are where all the profit is.

-

Well, we've gotten drastically better pixels, but because everyone has been screaming incoherently about wanting sharper images, the manufacturers took the extra performance, kept overall image quality about the same, and made the pixels smaller so there are more of them. Everyone said they wanted a camera that could match the 2.5K Alexa, but because there were more people screaming for resolution than screaming for quality, the industry took its improvements and gave us mediocre 4K cameras, then more improvements and we got good but not great 5K downsampling cameras, then more improvements and we got quite good 6K cameras, and since then the flagship bodies have given us 8K / 12K / 17K cameras with pixels that are close to rivalling the 2.5K Alexa. So ARRI released the Alexa 35, and now there's a 4K ARRI camera that absolutely smashes the 8K / 12K / 17K flagship cameras. It's a complete myth that cameras aren't getting better. They're getting better by leaps and bounds, but almost all those gains have been "spent" on smaller pixels / higher resolution. If that hadn't been the case, you'd probably have had every other feature you've ever wanted by now.
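To put rough numbers on "smaller pixels": on a sensor of fixed width, pixel pitch is just the width divided by the number of horizontal photosites, and the light each pixel collects scales with its area, i.e. with the pitch squared. The sketch below uses a hypothetical 28 mm-wide sensor and illustrative pixel counts; these are assumptions, not the specs of any real camera.

```python
def pixel_pitch_um(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate pixel pitch in micrometres (ignores gaps between photosites)."""
    return sensor_width_mm * 1000.0 / horizontal_pixels


def light_per_pixel_ratio(pitch_a_um: float, pitch_b_um: float) -> float:
    """How much more light pixel A gathers than pixel B, assuming light scales with area."""
    return (pitch_a_um / pitch_b_um) ** 2


# Hypothetical 28 mm-wide sensor read out at "2.8K" vs "8K" (illustrative counts only).
coarse = pixel_pitch_um(28.0, 2800)  # 10.0 um
fine = pixel_pitch_um(28.0, 8000)    # 3.5 um
print(coarse, fine, round(light_per_pixel_ratio(coarse, fine), 2))
```

In this made-up case each of the smaller pixels collects roughly an eighth of the light, so sensor improvements first have to close that gap before per-pixel quality can move forward.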

-

Good points. The way I see it, there's a toxic feedback loop of consumerism, hype, marketing, and release cycles. The skepticism and criticism around this are justified, but the forgotten ingredient in this whole picture is us - the people paying attention. Without us, the whole thing falls flat. I would suggest the uncomfortable truth is that the people caught up in the drama of it are either making money from it (manufacturers, dealers, influencers, etc) or are desperately trying to buy their way into making nicer images. I will be the first to admit I did this. I tried to buy gorgeous images by swallowing the myth that Canon colour science was the answer, then that 4K was the answer, then that shallow DOF was the answer. The truth was that even if someone had handed me an Alexa LF I'd still have made awful-looking images. Sure, there are people making great work who want to upgrade their equipment from time to time and dip into the chaos briefly, but once they've made their decision and bought something that works for them, they tune out again. These people are spending their time on lighting tutorials, getting better at pre-production and planning, learning how to improve their edits, etc. They're not watching reviews and talking online about the colour subsampling of the 120p modes of the latest 12 cameras that are rumoured to come out in the next 17 minutes. My advice to you is this - if you feel like this, then take a break from the industry and try to remember why you got into this in the first place. I'll bet it wasn't because you found a deep love for reading spec sheets!

-

"my mood tanks and it bleeds into the set" is a great way to express what I was thinking. I might have to steal your wording! I've had cameras I've loved to use and ones I always felt like I was struggling against, and it's definitely something that can be difficult to quantify. I suspect it's that we each have a range of priorities and preferences, and after getting used to the equipment and learning how it impacts the whole pipeline from planning through delivery and perhaps even into repeat business, the feeling we get is perhaps representative of how well it aligns with our individual preferences. It's easy to compare specs and pixel pee images, but there are lots of things that can be a complete PITA that don't show up on the brochures or technical tests. When reading your original post it felt like you want to go with the C50 and are trying to talk yourself into it / justify it. One thing that I think is underrated is the idea of the quiet workhorse. A camera that is a professional tool, does what you need without fuss, and doesn't have a lot of fanfare. For me that was the GH5 (although the colour science and AF weren't great) and now the GH7. These sorts of cameras don't grab headlines, but the fact that they're quiet workhorses rather than outlandish divas means you're able to move past the tech and concentrate on what you're shooting and the quality of the work. Canon have a very solid reputation in this regard - there's a reason they ruled the doc space for decades. One other thought.. if you don't have one already, consider buying a nice matte box. It'll help to stabilise the rig and will also make you look more impressive to clients!

-

I think you've been looking at the camera industry too long. We operate in a marketplace where people offer goods and services and if people want to purchase them they do, and if not, they don't. There are reasons why Governments might incentivise or subsidise various industries or products or behaviours, but I don't think any of these apply to cameras. The only other situation that is an exception is if something starts to become a necessity, like clean water or reliable electricity supply, and more recently now internet access is getting into this territory. When this happens then efforts might need to be made to ensure that these things are accessible. I very much doubt anyone is arguing that high-end mirrorless cameras are a human right, in which case they should just be traded like all goods, where they're subject to the laws of demand and supply. You can't get your house painted for $50 because paint and labour costs more than that. You can't buy a car for $9 because no-one has worked out how to make them for anything remotely like that price. You can't buy a super-car for $10000 because the market has valued them significantly above that.

-

Two thoughts from me. If you close your eyes and imagine each scenario, how does each of them make you feel? What is never really talked about is that if you feel like you're having to argue with or strong-arm your equipment then you'll be in a bad mood, which isn't conducive to a happy set, good creative output, or just enjoying your life. I think people dismiss this, but if you're directing the talent then it can really matter - people can tell if you're in a good mood or distracted or frustrated etc, and people tend to take things personally, so your frustrations with the rig can just as easily be interpreted by others as you not being happy with their efforts. The odd little technical image niggle here or there won't make nearly as much difference as enjoying what you do vs not. When it comes to IBIS vs Gyroflow vs EIS etc, it's worth questioning whether more stabilisation is better. For the "very dynamic handheld shots" having a bit more camera motion might even be a good thing if it is the right kind of motion. Big-budget productions have chosen to run with large shoulder-mounted camera rigs, and the camera shake was pleasing and added to the energy of the scene. Small amounts of camera shake can be aesthetically awful if they're artefacts from inadequate OIS + IBIS + EIS stabilisation, whereas much more significant amounts of camera shake can be aesthetically benign if they come from a heavier rig without IBIS or OIS. If more stabilisation is better, maybe it would be better overall to have a physical solution that can be used for those shots? Even if there aren't good options for those things, maybe the results would be better if those shots were just avoided somehow? In today's age of social media and shorts etc, having large camera moves that are completely stable is basically a special effect, and maybe there are other special effects that can be done in post that are just as effective but much easier to shoot?

-

Good to hear you got a solution that works for your (very challenging) shooting requirements - that's what truly matters! Low-light is now the main limitation of the high-end MFT line-up. The GH7 sacrifices having a dual-base-ISO in favour of the dual readouts and the DR boost that architecture gives. I shoot uncontrolled external locations in available light, which means low-light performance is a consideration for me too, but the GH7's performance is enough for my needs. I suspect the low-light capabilities of MFT would be described as "Very Good to Excellent", but the latest FF cameras now have low-light capabilities that would be described as "Absolutely Incredible", and so MFT lags by comparison. You can't cheat the laws of physics! It wasn't that long ago that cameras weren't really usable above ISO 1600 or 3200, so things have advanced very quickly. Suggesting that you "need" to shoot weddings at ISO 25,600 would have been considered a joke, and saying you were serious would have started arguments and gotten you banned as a troll! Personally I think "if today's cameras can't do it then you don't need it" is a silly perspective, because it implies that there aren't any new situations or circumstances that are worth recording, and obviously that's just plain ridiculous. I wonder how the GH7 compares to the original A7S. The difference might be smaller than you'd think.
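The ISO numbers above are easier to compare in stops, since each doubling of ISO is one stop. The same log2 arithmetic gives the usual rule of thumb for sensor size: at the same framing and f-number, total light gathered scales with sensor area, so a crop factor of 2 (MFT vs FF) costs about 2 stops. A minimal sketch; treating MFT as exactly a 2x crop is a simplification.

```python
import math


def stops_between_isos(iso_low: float, iso_high: float) -> float:
    """Each doubling of ISO is one stop."""
    return math.log2(iso_high / iso_low)


def sensor_size_stops(crop_factor: float) -> float:
    """Light deficit vs full frame for equal framing and f-number: area scales with crop^2."""
    return 2 * math.log2(crop_factor)


print(stops_between_isos(1600, 25_600))  # 4.0
print(sensor_size_stops(2.0))            # 2.0
```

So going from "unusable above ISO 1600" to "weddings at ISO 25,600" is a 4-stop jump, while the sensor-size rule of thumb puts MFT roughly 2 stops behind FF, which is the physics being referred to.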

-

Where did Mattias Burling go? Youtube channel is gone.

kye replied to John Matthews's topic in Cameras

I remember a quote from around the time that Facebook started having issues with people passing away - "in 80 years there will be 800 million dead people on Facebook". People don't really think about social media channels having an end, and so when they do end, people are often confused. It would be great if there was specific functionality for such things, like automatically turning off comments on all videos etc, but we haven't really worked through the related issues as a society yet, so there isn't really a common understanding of what we even want to happen when people move on.

-

MFT has been dead for decades now - everyone who hasn't been living under a rock for the last 10 years knows this. What people don't know is that due to a quirk in quantum physics and the way that time works, MFT was actually dead before it was invented. This means that my GH7 and GX85 and OG BMPCC and BMMCC never existed, don't exist, and when MFT finally "dies" somehow will disappear from my house. I bet you even think the earth is round... some people are just too much!

-

Nice! The other thing to consider when testing ISO and noise in the final image is the delivery part of the pipeline. If I shot in two different modes and then processed them differently in my NLE, I might be able to tell the difference between them in my NLE. But no-one except you is watching your footage in your NLE, so you'll be exporting it, probably to h264 or h265, and you might not be able to tell the difference between them at this point. If you're going to be uploading them to a streaming service, then that service will decompress, process (NR, sharpening, who knows what else) and then brutally re-compress it. Lots of things are visible in the NLE and are completely gone or mangled beyond recognition in the final export or stream.

-

Just make sure you're testing the options in the full image pipeline, so comparing finished 709 grades. So many people only test one part of the pipeline and ignore the rest. I haven't really experimented much with the AF on the GH7 as I'm used to the AF on the GX85 etc, and I tend to use manual lenses in lower light. AF is very difficult to test as well, and @Davide DB has posted before about how lens-dependent it can be too. Maybe there are AF tests online? Playing peekaboo with your camera seems a popular camera reviewer pastime!

-

I'm not sure how this would translate, but my GH7 does far better when I raise the ISO to get a proper exposure in-camera vs shooting underexposed and raising the exposure in post. For some reason the shadows are quite noisy, even at native ISOs. This is shooting in C4K ProRes, so it's not a codec issue.

-

Just a note to say that it would probably be worth doing some tests ahead of the event. Situations like this involve many variables, and people often don't consider all of them because they don't do any methodical tests. You are assuming that the AF will work differently between different picture profiles, but I would suggest the AF operates on the image before the picture profile is applied, so it shouldn't matter... but, once again, you should test this to confirm. Another thing to consider is whether you can push the shutter angle to 270 degrees or even 360 degrees. If it's a worship setting then making the footage seem a bit more surreal might be appropriate, and you can get another half or full stop of exposure this way. You should also test NR in post - it's not ideal, but it might give a better result overall considering none of your scenarios are within the camera's ideal operating range. I've done a lot of shooting with cameras at/beyond their capabilities, and when you're pushing things you're trading off the drawbacks of each strategy.
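For the shutter-angle suggestion, the arithmetic is: exposure time per frame is (angle / 360) / frame rate, so opening up from 180 degrees to 270 degrees gains log2(1.5), a bit over half a stop, and 360 degrees gains a full stop. A quick sketch, assuming 24p:

```python
import math


def exposure_time_s(fps: float, shutter_angle_deg: float) -> float:
    """Per-frame exposure time for a given frame rate and shutter angle."""
    return (shutter_angle_deg / 360.0) / fps


def stops_gained(new_angle_deg: float, base_angle_deg: float = 180.0) -> float:
    """Extra exposure, in stops, from opening up the shutter angle."""
    return math.log2(new_angle_deg / base_angle_deg)


for angle in (180, 270, 360):
    t = exposure_time_s(24, angle)
    print(f"{angle} deg at 24p: 1/{1 / t:.0f} s, +{stops_gained(angle):.2f} stops")
```

The trade-off is longer motion blur on every frame, which is where the "more surreal" look comes from.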

-

Digital zoom is definitely an underrated feature of these higher-resolution cameras. On my GH5 I used the 2x punch-in on my 17.5mm F0.95 to get 35mm and 70mm FOVs, and on my GX85 I used it with the 14mm F2.5 pancake lens to get 31mm and 62mm FOVs in a pocketable form-factor. The crop function on the GH7 is different and a bit more restrictive. You get continuous zooming, but only to the point where the resolution you've chosen is at/near a 1:1 crop into the sensor. So, if you've got the 14mm lens on there and you're shooting in C4K, you enable the feature and it pops up a box on the screen saying "14mm", and you can zoom in more and more by pushing or holding a button, and it goes 14-15-16-17mm, but it won't let you go further. If you're in 1080p mode then it goes from 14mm to 38mm. Conveniently, if you disable the mode then it goes back to 14mm, but if you re-enable it then it returns to whatever zoom you were at previously, so it's easy to set a zoom level you like and then jump in and out of that FOV. My testing didn't indicate any IQ issues with it, in 24p mode anyway, so I think it's probably downscaling from a full sensor read-out. Not only is it really good for getting more FOVs from primes, but it's also great for extending the long end of your zooms too.
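The GH5 FOV figures above come from multiplying the focal length by the MFT crop factor (2x) and then by the digital zoom. The sketch below reproduces those numbers. The GX85 figures (31mm / 62mm from a 14mm lens) imply an extra mode crop of about 1.1x; that is an inference from the numbers, so it is left as a parameter here. The GH7-style limit (zoom stops at roughly a 1:1 sensor readout) is modelled as readout width / output width, using a hypothetical 5760-pixel readout width that is an assumption, not a GH7 spec.

```python
def ff_equivalent_focal(focal_mm: float, crop: float = 2.0, digital_zoom: float = 1.0,
                        mode_crop: float = 1.0) -> float:
    """Full-frame-equivalent focal length after sensor crop, mode crop and digital zoom."""
    return focal_mm * crop * mode_crop * digital_zoom


def max_lossless_zoom(readout_width_px: int, output_width_px: int) -> float:
    """Zoom at which the output resolution maps ~1:1 onto the sensor readout."""
    return readout_width_px / output_width_px


print(ff_equivalent_focal(17.5))                  # 35.0 (GH5, no punch-in)
print(ff_equivalent_focal(17.5, digital_zoom=2))  # 70.0 (GH5, 2x punch-in)
print(max_lossless_zoom(5760, 1920))              # 3.0 (hypothetical readout width, 1080p output)
```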

-

My advice is to forget about "accuracy". I've been down the rabbit-hole of calibration and discovered it's actually a minefield, not a rabbit hole, and there's a reason that there are professionals who do this full-time - the tools are structured in a way that deliberately prevents people from being able to do it themselves. But, even more importantly, it doesn't matter. You might get a perfect calibration, but as soon as your image is on any other display in the entire world it will be wrong, and wrong by far more than you'd think was acceptable.

Colourists typically make their clients view the image in the colour studio and refuse to accept colour notes when viewed on any other device, and the ones that do remote work will set up and courier an iPad Pro to the client and then only accept notes from the client when viewed on the device the colourist shipped them. It's not even that the devices out there aren't calibrated, or even that manufacturers now ship things with motion smoothing and other hijinks on by default, it's that even the streaming architecture doesn't all have proper colour management built in, so the images transmitted through the wires aren't even tagged and interpreted correctly.

Here's an experiment for you. Take your LOG camera and shoot a low-DR scene and a high-DR scene in both LOG and a 709 profile. Use the default 709 colour profile without any modifications. Then in post take each LOG shot and try to match it to its respective 709 image manually, using only normal grading tools (not plugins or LUTs). Then try to just grade each of the LOG shots to look nice, again using only normal tools. If your high-DR scene involves actually having the sun in-frame, try a bunch of different methods to convert to 709: the manufacturer's LUT, film emulation plugins, the LUTs in Resolve, a CST into other camera spaces and then those manufacturers' LUTs, etc.

Gotcha.
I guess the only improvement is to go with more light sources but have them dimmer, or to turn up the light sources and have them further away. The inverse-square law is what is giving you the DR issues.

That's like comparing two cars, but one is stuck in first gear. Compare N-RAW with ProRes RAW (or at least ProRes HQ) on the GH7. I'm not saying it'll be as good, but at least it'll be a logical comparison, and your pipeline will be similar, so your grading techniques will apply to both and be less of a variable in the equation.

People interested in technology are not interested in human perception. Almost everyone interested in "accuracy" will either avoid such a book out of principle, or will die of shock while reading it. The impression I was left with after reading it was that it's amazing that we can see at all, and that the way we think about the technology (megapixels, sharpness, brightness, saturation, etc) is so far removed from how we see that asking "how many megapixels is the human eye" is sort-of like asking "what does loud purple smell like?".

Did you get to the chapter about HDR? I thought it was more towards the end, but could be wrong.

Yes - the HDR videos on social media look like rubbish and feel like you're staring into the headlights of a car. This is all for completely predictable and explainable reasons, which are all in the colour book. I mentioned before that the colour pipelines are all broken and don't preserve and interpret the colour space tags on videos properly, but if you think that's bad (which it is) then you'd have a heart attack if you knew how dodgy/patchy/broken it is for HDR colour spaces.
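On the inverse-square point: light from a small source falls off with the square of distance, so the brightness gap between a near and a far subject is 2 x log2(d_far / d_near) stops. Pulling the light further back (and turning it up) shrinks that ratio. A minimal sketch with made-up distances, assuming a point-like source and ignoring bounce:

```python
import math


def falloff_stops(d_near_m: float, d_far_m: float) -> float:
    """Exposure difference in stops between two subjects lit by one point-like source."""
    return 2 * math.log2(d_far_m / d_near_m)


# Two subjects 1 m apart: light 1 m from the near subject, then pulled back to 4 m.
print(round(falloff_stops(1.0, 2.0), 2))  # 2.0 stops between the subjects
print(round(falloff_stops(4.0, 5.0), 2))  # 0.64 stops between the subjects
```

Same subjects, same spacing, but moving the source back takes the gap from 2 stops to well under 1, which is why more, dimmer sources or a brighter source further away even out the scene.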
I don't know how much you know about the Apple Gamma Shift issue (you spoke about it before but I don't know if you actually understand it deeply enough) but I watched a great ~1hr walk-through of the issue and in the end the conclusion is that because the device doesn't know enough about the viewing conditions under which the video is being watched, the idea of displaying an image with any degree of fidelity is impossible, and the gamma shift issue is a product of that problem. Happy to dig up that video if you're curious. Every other video I've seen on the subject covered less than half of the information involved.

-

I remember the discussions about shooting scenes of people sitting around a fire, and the benefit was that it turned something that was a logistical nightmare for the grip crew into something that was basically like any other setup, potentially cutting days from a shoot schedule and easily justifying the premium on camera rental costs.

The way I see it, any camera advancement probably does a few things:

- makes something previously routine much easier / faster / cheaper
- makes something previously possible but really difficult into something that can be done with far less fuss, so the quality of everything else can go up substantially
- makes something previously not possible become possible

..but the further the edge of possible/impossible is pushed, the fewer situations / circumstances are impacted. Another recent example might be filming in a "volume", where the VFX background is on a wall around the actors. Having the surroundings there on set instead of added in post means camera angles and sight-lines etc can be worked out on the spot instead of operating blind, so acting and camera work can improve.

-

I'm seeing a lot of connected things here. To put it bluntly, if your HDR grades are better than your SDR grades, that's just a limitation of your grading skill. I say this as someone who took an embarrassing amount of time to learn to colour grade, and even now I still feel like I'm not getting the results I'd like. But this just reinforces my original point - that one of the hardest challenges of colour grading is squeezing the camera's DR into the display space's DR. The less squeezing required, the less flexibility you have in grading, but the easier it is to get something that looks good. The average quality of colour grading dropped significantly when people went from shooting 709 and publishing 709 to shooting LOG and publishing 709.

Shooting with headlamps in situations where there is essentially no ambient light is definitely tough though; you're pushing the limits of what the current cameras can do, and it's more than they were designed for! Perhaps a practical step might be to mount a small light to the hot-shoe of the camera, just to fill in the shadows a bit. Obviously it wouldn't be perfect, and would have the same proximity issues where things that are too close to the light are too bright and things too far away are too dark, but as the light is aligned with the direction the camera is pointing it will probably be a net benefit (and also not disturb whatever you're doing too much).

In terms of noticing the difference between SDR and HDR, sure, it'll definitely be noticeable, I'd just question if it's desirable. I've heard a number of professionals speak about it and it's a surprisingly complicated topic. Like a lot of things, the depth of knowledge and discussion online is embarrassingly shallow, and more reminiscent of toddlers eating crayons than educated people discussing the pros and cons of the subject. If you're curious, the best free resource I'd recommend is "The Colour Book" from FilmLight.
It's a free PDF download (no registration required) from here: https://www.filmlight.ltd.uk/support/documents/colourbook/colourbook.php

In case you're unaware, FilmLight are the makers of Baselight, which is the alternative to Resolve except it costs as much as a house. The problem with the book is that when you download it, the first thing you'll notice is that it's 12 chapters and 300 pages. Here's the uncomfortable truth though: to actually understand what is going on you need a solid understanding of the human visual system (our eyes, our brains, what we can see, what we can't see, how our vision system responds to various situations we encounter, etc). This explanation legitimately requires hundreds of pages because it's an enormously complex system, much more than any reasonable person would ever guess. This is the reason that most discussions of HDR vs SDR are so comically rudimentary in comparison. If camera forums had the same level of knowledge about cameras that they do about the human visual system, half the forum would be discussing how to navigate a menu, and the most fervent arguments would be about topics like whether cameras need lenses or not, etc.

-

I think this is the crux of what I'm trying to say. Anamorphic adapters ARE horizontal-only speed boosters. Let's compare my 0.71x speed booster (SB) with my Sirui 1.25x anamorphic adapter (AA).

Both widen the FOV:

- If I take a 50mm lens and mount it with my SB, I will have the same horizontal FOV as mounting a (50*0.71=35.5) 35.5mm lens. This is why they're called "focal reducers", because they reduce the effective focal length of the lens.
- If I take a 50mm lens and mount it with my 1.25x AA, I will have the same horizontal FOV as mounting a (50/1.25=40) 40mm lens.

Both cause more light to hit the sensor:

- If I add the SB to a lens then all the light that would have hit the sensor still hits the sensor (but is concentrated on a smaller part of it), and the parts of the sensor that no longer get that light are illuminated by extra light from outside the original FOV, so more light in total hits the sensor and the image is brighter. This is why it's called a "speed booster", because it "boosts" the "speed" (aperture) of the lens.
- The same is true for the AA.

Where they differ is compatibility:

- My speed booster has very limited compatibility as it is an M42-mount to MFT-mount adapter, so it only works on MFT cameras and only lets you mount M42 lenses (or lenses that you adapt to M42, but that's not that many lenses).
- My Sirui adapter can be mounted to ANY lens, but will potentially not make a quality image if the lens is too wide / too tele or too fast, if the sensor is too large, if the front element of the lens is too large (although the Sirui adapter is pretty big), or just if the internal lens optics don't work well with it for some optical-design reason.

The other advantage of anamorphic adapters is they can be combined with speed boosters:

- I can mount a 50mm F1.4 M42 lens on my MFT camera with a dumb adapter (essentially just a spacer) and get the FF equivalent of mounting a 100mm F2.8 lens to a FF camera.
- I can mount the same lens on my MFT camera with my SB and get the FF equivalent of mounting a 71mm F2.0 lens to a FF camera.
- I can mount the same lens on my MFT camera with my AA and get the FF equivalent of mounting an 80mm F2.24 lens to a FF camera (but the vertical FOV will be the same as the 100mm lens).
- I can mount the same lens on my MFT camera with both SB and AA and get the FF equivalent of mounting a 57mm F1.6 lens to a FF camera (but the vertical FOV will be the same as the 71mm lens).

So you can mix and match them, and if you use both then the effects compound. In fact, you'll notice that the 50mm lens now gives the horizontal FOV of a 57mm lens on FF, so the MFT crop factor is almost cancelled out. If instead of my 0.71x speed booster and 1.25x adapter we use the Metabones 0.64x speed booster and a 1.33x anamorphic adapter, that 50mm lens now has the same horizontal FOV as a 48mm lens on FF, so we're actually WIDER than FF.

What this means:

- On MFT you can use MFT lenses and get the FOV / DOF they get on MFT
- On MFT you can use S35 lenses and get the FOV / DOF they get on S35 (*)
- On MFT you can use FF lenses and get the FOV / DOF they get on FF (**)
- On S35 you can use S35 lenses and get the FOV / DOF they get on S35
- On S35 you can use FF lenses and get the FOV / DOF they get on FF (*)
- On S35 you can use MF (medium format) lenses and get the FOV / DOF they get on MF (**)
- On FF you can use FF lenses and get the FOV / DOF they get on FF
- On FF you can use MF+ lenses and get the FOV / DOF they get on MF (***)

The items with (*) can be done with speed boosters now, but can also be done with anamorphic adapters, so anamorphic adapters give you more options. The items with (**) were mostly beyond reach with speed boosters alone, but if you combine speed boosters with anamorphic adapters you can get there and beyond, so this gives you abilities you didn't have before. The item with (***) could be done with a speed booster, but there aren't a lot of speed boosters made for FF mirrorless mounts, so availability is patchy, and the ones that are available might have trouble with wide lenses.

One example that stands out to me is that you can take an MFT camera, add a speed booster, and use all the S35 EF glass as it was designed (this is very common - the GH5 plus Metabones SB plus Sigma 18-35 was practically a meme), but if you add an AA to that setup you can use every EF full-frame lens as it was designed as well.
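The equivalents above can all be reproduced with one multiplier: crop factor x speed-booster factor / anamorphic squeeze, applied to both the focal length (horizontal FOV) and the f-number (DOF-style equivalence), which is the convention the numbers in this post follow. A small sketch; vertical FOV is not modelled (the squeeze doesn't change it), and 2.0 is assumed as the MFT crop factor.

```python
def ff_equivalent(focal_mm: float, f_number: float, crop: float = 2.0,
                  booster: float = 1.0, squeeze: float = 1.0) -> tuple[float, float]:
    """Horizontal-FOV FF-equivalent focal length and f-number for a lens/adapter stack."""
    factor = crop * booster / squeeze
    return focal_mm * factor, f_number * factor


setups = {
    "dumb adapter": {},
    "0.71x SB": {"booster": 0.71},
    "1.25x AA": {"squeeze": 1.25},
    "0.71x SB + 1.25x AA": {"booster": 0.71, "squeeze": 1.25},
    "0.64x SB + 1.33x AA": {"booster": 0.64, "squeeze": 1.33},
}
for name, kwargs in setups.items():
    focal, f_num = ff_equivalent(50, 1.4, **kwargs)
    print(f"{name}: {focal:.1f}mm F{f_num:.2f}")
```

Run on the 50mm F1.4 example, this gives 100mm F2.8, 71mm F1.99, 80mm F2.24, 56.8mm F1.59 and about 48mm, matching the rounded figures above.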

-

I shoot in uncontrolled conditions, using only available light, and shoot what is happening with no directing and no do-overs. This means I'm frequently pointing the camera in the wrong direction, shooting people backlit against the sunset, or shooting urban stuff in midday sun with deep shadows in the same frame as direct sun hitting pure-white objects. This was a regular headache on the GH5 with its 9.7/10.8 stops. The OG BMPCC with 11.2/12.5 stops was MUCH better but still not perfect, and while I haven't used my GH7 in every possible scenario, so far its 11.9/13.2 stops are more than enough. The only reason you need more DR is if you want to heavily manipulate the shot in post by pulling the highlights down, or lifting the shadows up, for some reason. Beyond the DR of the GH7 I can't think of many uses other than bragging rights. When the Alexa 35 came out and DPs were talking about its extended DR, it only really mattered in very specific situations. Rec709 only has about 6 stops of DR, so unless you're mastering for HDR (and if you are, umm - why?), adding more DR into the scene only gives you more headaches in post when you have to compress and throw away the majority of the DR in the image.
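For a feel of how much squeezing is involved: dynamic range in stops is log2 of the brightest-to-darkest ratio, and fitting a camera's range into a display's range means compressing it. The toy sketch below maps scene stops onto display stops with a single linear-in-stops squeeze, using the 13.2-stop and ~6-stop figures from this post. Real display transforms roll off the highlights and shadows rather than squeezing uniformly, so treat this purely as an illustration.

```python
import math


def stops_from_ratio(contrast_ratio: float) -> float:
    """Dynamic range in stops from a brightest:darkest ratio."""
    return math.log2(contrast_ratio)


def squeeze_stops(scene_stop: float, scene_dr: float, display_dr: float) -> float:
    """Toy mapping: place a scene value (in stops above the darkest) onto the display range."""
    return scene_stop * display_dr / scene_dr


print(stops_from_ratio(1024))                    # 10.0
print(round(squeeze_stops(1.0, 13.2, 6.0), 2))  # one scene stop becomes ~0.45 display stops
```

Every extra stop the camera captures makes each stop thinner on screen unless some of it is clipped or crushed, which is the post-production headache described above.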

-

In a lot of cultures these things are still very present. You only need to go online and listen to the children of immigrants talk about their struggles living in their new culture while still respecting the wishes of their parents, and these are the parents who were open-minded enough to literally move their whole family overseas. People who live completely immersed in the culture they were born in could be far more traditional than that, especially in Asian countries where there are thousands of years of history and culture. The progressiveness of modern life in western liberal democracies is also quite deceiving, as there are a great many superstitious things embedded in those places too - not a lot of skyscrapers have a 13th floor, for example. We get used to the peculiarities of the culture we live in, and find the peculiarities of other cultures odd. A fun exercise is to watch the "culture shock" videos of people moving or travelling to where you live. If the person explains their perspective well, you can get a real sense of how strange some things are, and not just different but actively backwards!

I found the image from that GH7 film to look very video-ish actually. It's odd because when I paused the trailer and studied the image, they seemed to be doing almost everything right. The only thing I could think of was that they didn't use any diffusion, whereas the vast majority of movies or high-end TV shows that have shots with bokeh will reveal they're using netting as diffusion. Those that don't may well be using glass diffusion, and that might not show in the bokeh. Maybe the difference is more than diffusion, but that's my current best guess as to why it looked like that.

I think there are different kinds of diffusion, with some looking overbearing at low strengths and others being fine at much greater strengths. The majority of movies and narrative TV will be using a decent amount of it. Maybe it's the type that you didn't care for?
I've also found diffusion filters to be almost impossible to use in uncontrolled situations, even at 1/8, which is the lowest strength available - on some shots it'll be too weak, then you turn around and a light hits the filter and now the image is basically ruined because half the frame is washed out. Maybe because of the uncontrolled conditions they just had some shots that suffered.

When I started in video I couldn't tell the difference between 24p and 60p; now I hate 30p almost as much as I hate 60p. I also couldn't tell the difference between a 180-degree shutter and very short shutters except on very strong movement. Now I am seeing the odd shot in things like The Witcher which 'flip' in my head and look like video, and I don't know why. Some people outside the industry/hobby will be able to see the difference between something shot on a phone and a cine camera, but I suspect most won't, and those that can probably don't care, because if they did they wouldn't be able to watch almost anything on social media, no home videos, nothing they record on their phone, etc. A surprising number of people will just think that a smartphone vlog looks "different" to Dune 2, rather than "worse" than it.