
Everything posted by kye

-

That's interesting - do they scale the values back into the legal range, or clip them? I haven't heard that non-broadcast distribution cared about such things.

-

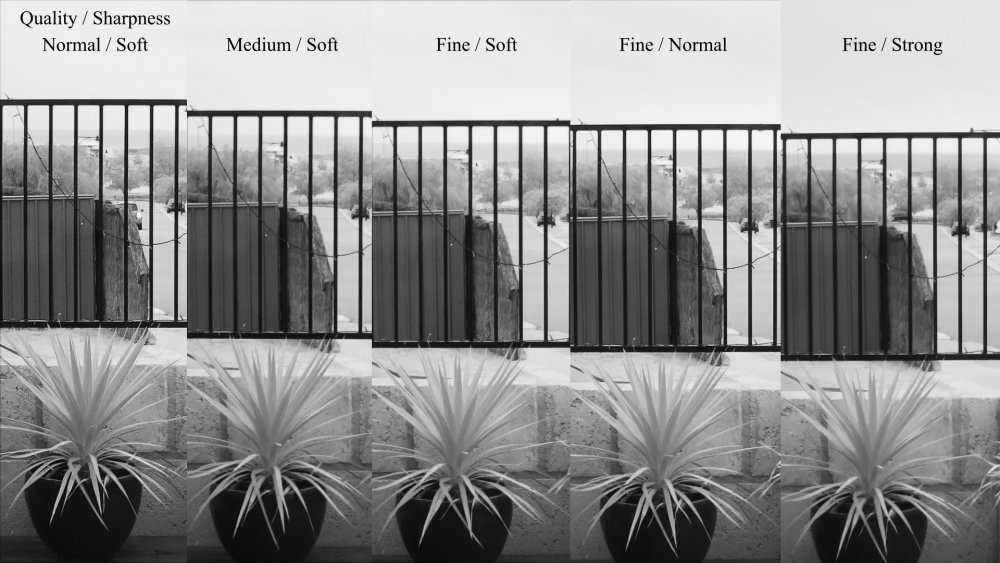

Another vignette... I'm now officially over the IR sensitivity. It makes people look, well, awful. I'm also a bit over the focal length being too long for some situations. Luckily, I have IR-cut filters on order, and I say filters because I'll need one for this lens and one for the new lens I have on order, a 2.8-12mm zoom. This will give around 20-85mm equivalent FOV, and (I think) it has a focus control, so I might be able to focus closer too, which will be fun.

I also found that the quality setting on the camera wasn't set optimally, so I did a modes test and found the best-looking settings. I also optimised a grade and saved it as a PowerGrade in Resolve, so now I can just apply it whenever I want and save time pixel-peeping in post. Here are the modes from the camera: and here's the PowerGrade applied, with each shot having the edge softening optimised to cancel out the halo from the sharpening and compression: The middle one looks best, so that's what I'll go with.

The goal of this project was to get me shooting and doing smaller projects, and it has certainly done that, so it's a success in my book.

-

@TomTheDP @BenEricson You've both listed some virtues of film (and the negative parts of digital) but didn't list the advantages of digital or the disadvantages of film. Halation, gate weave, gamma-related colour shifts, hue compression, softness, etc. are all parts of dealing with motion-picture film that don't get talked about. This is kind of what I think the problem is: we view film as being better than digital (and maybe we know that it's only better in some ways) but never talk about the ways it's far inferior. This makes "film" a proxy for "nice", and then we never get anywhere because we're not even speaking the same language. People are jumping up and down about 6K and 8K but seem to be unfamiliar with the idea that 35mm motion-picture film struggles to match 4K. No-one seems to be trying to blur their images to match film (apart from me).

-

There are many good reasons why having a physical filter is better than emulating the effect in post. I'm considering filters myself, although I have lenses with some of this effect built in, so I'm not starting with a blank canvas. As pointed out in the thread, though, lighting conditions and subject matter can make the effect very difficult to control. For example, a strong light in the frame might give you a huge amount of the effect, whereas a low-contrast shot with no light hitting the filter might show almost no visible effect. To get those two shots to match visually, you would have to use a different filter strength on each.

This is one of the advantages of doing it in post: you can adjust it afterwards, you don't need to buy every strength of filter, you can select values in between the strengths the filters come in, and you can even animate the effect so that it varies in amount within the same shot. For example, if you started on a portrait and panned to reveal a scene with the sun in it, you would want more effect at the start of the shot than at the end, and a smooth animation in post would do the trick. I suggest the best approach is likely to combine the two: get the majority of the effect with a filter while shooting, then even it out in post.
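The idea of animating the effect strength across a pan can be sketched as simple keyframe interpolation. This is a minimal, hypothetical example: `strength_at`, the keyframe values and the smoothstep easing are illustrative choices (an NLE like Resolve has its own keyframe curves), and it only computes how much of a diffusion/halation effect to apply per frame, not the effect itself.

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1], so the change has no abrupt start or stop."""
    return t * t * (3 - 2 * t)


def strength_at(frame: float, keyframes: list[tuple[float, float]]) -> float:
    """Effect strength at `frame`, eased between sorted (frame, strength) keyframes.

    Before the first keyframe or after the last one, the nearest value is held.
    """
    if frame <= keyframes[0][0]:
        return keyframes[0][1]
    if frame >= keyframes[-1][0]:
        return keyframes[-1][1]
    for (f0, s0), (f1, s1) in zip(keyframes, keyframes[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return s0 + (s1 - s0) * smoothstep(t)
    raise ValueError("keyframes must be sorted by frame")


# Hypothetical pan from a portrait (frame 0) to a scene with the sun in it (frame 120),
# following the example of wanting more effect at the start than at the end.
pan = [(0, 0.6), (120, 0.2)]
for f in (0, 30, 60, 90, 120):
    print(f, round(strength_at(f, pan), 3))
```

Easing rather than a straight line is a judgement call: it avoids a visible "kick" where the animation starts and stops.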

-

I was reading a thread on a pro colourist forum and a big debate started about how film was (mostly) awful to work with: it was hugely inconsistent, and although it was occasionally glorious, it was mostly a completely undesirable thing. This makes sense, as evidenced by the complete lack of threads where people ask how much to blur their footage to get Super-8 resolution.

This leads me to a rather odd terminology question. There's the "video look", which typically isn't regarded as desirable, but what is the other "look"? We can say "filmic", but in reality the film look consists of a whole bunch of things that mostly people aren't interested in. "Cinematic" is a great word, but it just means "like the things in the cinema", and considering that Steven Soderbergh shot Unsane on an iPhone, a term that includes everything from the Alexa 65 to the iPhone isn't really that useful.

We could attack it from another direction: we don't want that "digital" look, we want the "analog" look. This isn't really helpful either, because analog was either film, many of whose qualities we don't want to emulate at all, or analog tape. Analog tape simulation was in vogue in music videos etc., but as a special effect, because the quality of VHS or Beta, or even their higher-resolution variants, well, it wasn't great.

So, if we've only ever captured images on film, tape, and digital, and these often had very undesirable attributes, and even what got shown in the cinema wasn't always to be envied, what is it that we do want? We can describe what we want by listing the many things we don't want, like clipped highlights or digital noise, but that's not very useful. This may sound like a trivial matter of semantics, but I think there's a larger issue going on here.

I've been interested in learning about the film look, not because I want to emulate film completely authentically, but because I want to pick the good bits out of it. The problem is that any discussion of the "film look" either takes place among people who aren't experts, and so isn't useful, or involves those with deep knowledge, who will argue that film didn't have a "look" because they spent decades of their lives trying to create a single look out of pieces of film that were radically different from each other. So these conversations often go nowhere, because we don't have a good language to even frame the discussion.

I recently watched the Resolution Demo by Steve Yedlin (who shot Knives Out, Star Wars: The Last Jedi, etc.), which in Part 2 gave a few more clues about what might be going on with image quality, and specifically which aspects of image quality have which effects. http://yedlin.net/ResDemo/index.html

Another thing that 'inspired' this thread is the recent demo video of the Sony A1, which might be the most "video"-looking thing I have seen in years.... What I take from it is that whatever the hell makes an aesthetically pleasing image, it's almost entirely absent from this video.

So, I've tried a different approach and asked what makes something look good. I prefaced it with some comments about what I don't like, to provide some context (because, once again, there isn't language for this topic), but that went about as well as you'd expect, and I got replies like "it's whatever looks good to you" or "whatever is right for your project". Correct, but not helpful. Does anyone have any idea how we might start to have a conversation about this stuff?

-

IIRC, these settings relate to whether you plan to broadcast your video on TV. When TV stations process video, only a certain range of values is allowed. These are called "legal" values, and the range is a little narrower than the full range of values the camera can generate. The 16-235 (8-bit) and 64-940 (10-bit) ranges fall within those "legal" values. Computers, YouTube, and other online services probably don't care about these things, so if your videos aren't going to be broadcast on TV then it probably doesn't matter. If your videos are being broadcast on TV, contact your person at the TV station and ask them. They should be happy to tell you their requirements if it's important to them.
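To make the scale-or-clip question concrete, here's a minimal sketch of getting full-range luma code values (0-255 at 8-bit, 0-1023 at 10-bit) into the 16-235 / 64-940 legal range. Assumptions: luma only (8-bit chroma uses 16-240), a plain linear rescale, and hypothetical function names; real NLEs and encoders may round or filter differently.

```python
def legal_limits(bits: int = 8) -> tuple[int, int]:
    """Legal luma range: 16-235 at 8-bit, scaled by 2**(bits - 8) at higher bit depths."""
    scale = 2 ** (bits - 8)          # 1 at 8-bit, 4 at 10-bit
    return 16 * scale, 235 * scale   # (16, 235) or (64, 940)


def scale_to_legal(code: int, bits: int = 8) -> int:
    """Linearly remap so full-range black -> legal black and full-range max -> legal white."""
    lo, hi = legal_limits(bits)
    full_max = 2 ** bits - 1         # 255 at 8-bit, 1023 at 10-bit
    return round(lo + code * (hi - lo) / full_max)


def clip_to_legal(code: int, bits: int = 8) -> int:
    """Leave in-range values untouched and clamp anything outside the legal range."""
    lo, hi = legal_limits(bits)
    return min(max(code, lo), hi)


for code in (0, 128, 250, 255):
    print(code, scale_to_legal(code), clip_to_legal(code))
# 0 16 16
# 128 126 128
# 250 231 235
# 255 235 235
```

Scaling keeps every tonal step but shifts the mid-tones slightly; clipping keeps the mid-tones exact but flattens anything above 235 (or below 16), which is where highlight detail would be lost.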

-

Thanks Chris - yes, I think I'll just have to accept defeat and go with a small consumer solution like that. A few others mentioned earlier had slightly better geometry for me, but I think that's the general idea. I'll be running the risk that I can't mount it to whatever they have if it's slightly larger in diameter, but I guess having a clamp that's slightly bigger teleports me to a different universe where people think I'll destroy the universe if I mount an action camera on a sheet of drunk-person-proof and gale-force-wind-proof toughened glass! I also saw some magnetic mounts that looked interesting, so they might be worth a second look.

-

That's an interesting observation; maybe adding RAW would be a good strategy. The GH5 and P4K seem completely different to me, having almost opposite strengths and weaknesses:

| GH5 | P4K |
|---|---|
| IBIS | None |
| Great battery life | Not |
| All-in-one design | Needs a rig |
| Great build quality | Not |
| h264 | RAW / ProRes |
| Bitrates capped | Bitrates scale with frame rate |
| Complicated UI | Great UI |
| Hybrid | Cine |

If you look at the P4K, one of the killer features that makes people put up with the terrible battery life and other things is RAW. Giving a GH6 RAW would potentially start to capture why the P4K remains popular. With all the new cameras it seems like they're abandoning decent bitrates on resolutions other than their flagship modes, so I'm thinking the natural successor for good image quality might be a compressed RAW format that downscales from the whole sensor. These formats are ALL-I, so they're easy to edit, and you also get 12-bit colour, which is fantastic.

Maybe a GH6 that does compressed RAW, has upgraded colour science, and perhaps has other things like dual ISO would do well, because it would be a way of getting RAW with IBIS in a reliable, compact package. Obviously the biggest omission there is PDAF, but the P4K doesn't have it either, so who knows. All BM cameras have this stigma of poor AF too, yet they seem to be offering features that keep people buying.

I disagree - the A7S3 didn't get cancelled by the internet for not having 8K. I really think the A7S2 was the spiritual sibling of the GH5: both were workhorses that had sore points and weren't perfect. Sony updated the A7S2 with a camera that extended its usefulness to working pros but didn't go 8K, and the reception was very positive. Of course, who knows if the GH6 would get a warm welcome with a workhorse update, because unfortunately it would lack the PDAF and FF sensor of the A7S3.
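On the 12-bit point: each extra bit doubles the code values per channel (256 at 8-bit, 1,024 at 10-bit, 4,096 at 12-bit), which matters when a grade stretches part of the image. A rough sketch with hypothetical helper names, assuming a smooth gradient that occupies 10% of the tonal range (say, a sky); it models quantisation only and ignores compression, noise (which can dither away some banding) and log vs 709 encoding.

```python
def levels(bits: int) -> int:
    """Code values available per colour channel."""
    return 2 ** bits


def codes_in_ramp(bits: int, lo: float = 0.40, hi: float = 0.50, samples: int = 10_000) -> int:
    """Quantise a smooth ramp covering [lo, hi] of the tonal range and count the
    distinct code values it lands on. If a grade later stretches that slice across
    more of the range, these are the only steps available, so fewer codes means
    more visible banding."""
    full_max = 2 ** bits - 1
    return len({round((lo + (hi - lo) * i / (samples - 1)) * full_max) for i in range(samples)})


for bits in (8, 10, 12):
    print(f"{bits}-bit: {levels(bits)} levels, ~{codes_in_ramp(bits)} steps in a 10% ramp")
```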

-

Actually, the FF equivalent of the 12-35/2.8 is a 24-70/5.6, so the 24-70/4 version is better, and the 24-70/2.8 is a FF lens whose equivalent (a 12-35/1.4) MFT never offered. You can argue that the 12-35/2.8 is an f/2.8 FF equivalent based on exposure, even if it's not equivalent based on DoF, but even then, a FF sensor gathers so much more light that the noise performance and colour quality are probably about the same between MFT at f/2.8 and FF at f/5.6. It really irritates me how people adjust for crop factor on focal length but not on aperture, and Panasonic and Olympus even labelled their f/2.8 lenses "PRO", which is completely ridiculous.

I didn't know the 1080p was line-skipped on the newer models; that sucks. It will be interesting to see what Z-Cam or Panasonic (or maybe BM, who are always a wildcard) do with the sensor, considering the Z-Cam/BM cameras are essentially a battery, a screen, a lens mount, and a whacking great data-processing pipeline to take the data off the sensor and pump it into the card slots or USB interface as quickly as possible.
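The equivalence arithmetic behind the 12-35/2.8 to 24-70/5.6 figure, as a minimal sketch assuming a crop factor of exactly 2.0 (function names are illustrative). Multiplying the f-number by the crop factor matches depth of field and total light gathered; the per-area exposure at f/2.8 is unchanged, which is the "equivalent based on exposure" argument.

```python
import math


def ff_equivalent(focal_mm: float, f_number: float, crop: float) -> tuple[float, float]:
    """Full-frame focal length and f-number giving the same field of view and
    depth of field (and the same total light) as the lens on the smaller sensor."""
    return focal_mm * crop, f_number * crop


def stops_less_light(crop: float) -> float:
    """At the same f-number, sensor area shrinks by crop**2, i.e. 2*log2(crop) stops."""
    return 2 * math.log2(crop)


MFT_CROP = 2.0  # assumed; MFT's ~17.3 x 13 mm sensor is about 2x smaller than FF by diagonal
for focal in (12, 35):
    print(ff_equivalent(focal, 2.8, MFT_CROP))  # (24.0, 5.6) then (70.0, 5.6)
print(stops_less_light(MFT_CROP))               # 2.0
```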

-

8K open gate would be useful, and would distinguish the camera. My only objection to 8K is that if you're going to downsample, you have to do the full readout, and more pixels means more rolling shutter (RS), whereas the GH5 is actually really good with RS. It's funny that you mention both AF and bringing in more cinema-level features, considering that Hollywood is still mostly manual focus, but you're right that videographers want AF and cinematographers want more cine features.
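The "more pixels means more RS" reasoning, sketched under a simplifying assumption: rows are read one after another at a fixed per-row time. The 7.5 µs value below is hypothetical, not a spec for the GH5 or any other camera, and real sensors can change line time with bit depth or readout mode, so treat the result as a proportion rather than a prediction.

```python
def readout_ms(rows: int, line_time_us: float) -> float:
    """Time to read the frame top to bottom, assuming a fixed time per row."""
    return rows * line_time_us / 1000.0


def skew_fraction(pan_widths_per_s: float, rows: int, line_time_us: float) -> float:
    """How far the bottom row lags the top row during a pan, as a fraction of frame width."""
    return pan_widths_per_s * readout_ms(rows, line_time_us) / 1000.0


LINE_TIME_US = 7.5  # hypothetical per-row readout time
for label, rows in (("2160 rows (UHD)", 2160), ("4320 rows (8K)", 4320)):
    ms = readout_ms(rows, LINE_TIME_US)
    lean = skew_fraction(0.5, rows, LINE_TIME_US)  # a pan of half a frame width per second
    print(f"{label}: {ms:.1f} ms readout, {lean:.2%} of frame width of lean")
```

At a fixed line time, reading twice the rows doubles both the readout time and the visible lean.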

-

This thread has more info on the topic than you could ever want....

-

I don't think we'd only get 8K. Any 8K sensor would mean an update to the colour science, which Panasonic has definitely improved in other cameras since the GH5 and which I think is one of the GH5's main weaknesses. 4K120 would be pretty good and would also be a bit headline-grabbing, which is cool too. Depending on the data rates and the imaging pipeline they put in there, I wonder if it could do 8K60? 8K downsampling would be cool. It also adds the possibility of a downsampled 6K mode, which would be pretty newsworthy. I'm not sure how many of the 6K cameras are oversampling, and back in the day there was a significant IQ bump from oversampling rather than just doing a straight pixel readout.

At this point I'm not sure what it would take to be revolutionary. If you take the R5 and A7S3 and imagine something that would blow them out of the water and have people coming back to MFT, what would it have to be? Not only do I not think it's possible to deliver that, I'm not even sure that if it did, it would connect with the market. Imagine it had a ridiculous configuration like 16K, 8K60, 4K240, 2K600, internal RAW, triple native ISO, eyelash AF for people, animals, insects and amoebas, 12-bit h266 444, and an 8-hour battery life... who would sell their R5 or A7S3? It's still MFT in a FF hype world. Would people really abandon FF because "I need 16K" or "My clients require 4K240" or "I have to film at f11 at night under a new moon with no lights"?

My hope for the GH6 was for it to be a solid upgrade but keep its workhorse status. We're all about those specs here, but other GH5 forums I'm part of online are much more focused on the work and how to get good results with it. Many shoot in 1080p (gasp!) because they've never once had a client ask for 4K, etc. I suspect those people would consider FF's larger sensor more valuable than 2K600 or whatever.
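On whether it could do 8K60: a crude first proxy for how hard the imaging pipeline has to work is pixels per second. A sketch assuming UHD-style 3840×2160 and 7680×4320 frames; it ignores bit depth, binning, line-skipping and the codec, all of which matter in practice.

```python
FRAME_SIZES = {"4K": (3840, 2160), "8K": (7680, 4320)}  # assumed UHD-style frame sizes


def gigapixels_per_second(mode: str, fps: int) -> float:
    """Raw pixel throughput the pipeline has to handle for a given video mode."""
    width, height = FRAME_SIZES[mode]
    return width * height * fps / 1e9


for mode, fps in (("4K", 60), ("4K", 120), ("8K", 30), ("8K", 60)):
    print(f"{mode}{fps}: {gigapixels_per_second(mode, fps):.2f} Gpx/s")
# 4K60: 0.50 Gpx/s
# 4K120: 1.00 Gpx/s
# 8K30: 1.00 Gpx/s
# 8K60: 1.99 Gpx/s
```

By this measure 8K30 is the same load as 4K120, and 8K60 is twice that.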
Which I guess is a way of saying that beyond a certain point, people stop caring about specs because they're 'good enough'. I think the pack is probably good enough for most, so going further doesn't really help much, and what people will pay for is an upgrade that is meaningful, which leads me to.... My logic for that thread was that now we're about to get a $6K medium format camera that is 'good enough' for most people (either internally or with 4K ProRes RAW), we can get a massive increase in sensor size, which is more valuable to me than 4K120 or 8K. In a sense, my issues with the GH5 are minor enough that even if they fixed everything wrong with it, the upgrade probably wouldn't be worth it for me.

I've recently worked out that the difference between videographers and film-makers is that videographers have to please many future clients who may demand 4K or request radical changes in post, so their priority is to be as flexible as possible, which means buying cameras with high resolution and frame rates and sharp lenses. Film-makers only have to please one client at a time; if there are particular requirements they can hire equipment without losing money, and if they have a good enough reputation or are independent, they only have to please themselves. As someone who only shoots my own projects, this means my camera purchase only has to please me, and I'm not interested in 'what-if' questions about pleasing others. I seriously doubt that anyone is buying the A7S3 because they have their own needs for 600Mbps 4K ALL-I or 4K120 422 10-bit. Rather, I think they're buying these cameras because they don't know what future projects will require and want to cover their bases and never have to turn down work.

-

I always thought that Panasonic had four options:

- Treat the GH line like a pony and teach it more tricks, by going 8K / RAW / <spec goes here> .... this is the path of the R5
- Treat the GH line like a 'workhorse' and improve the things that currently get in the way .... this is the path of the A7S3
- Treat the GH line like it's dying and do a half-assed update that can be cheaply manufactured, hoping enough people will buy one
- No GH6

Personally, I don't mind if it goes 8K, because the GH5 is 5K and I shoot 1080, so going from 2.5x oversampling to 4x oversampling isn't a large difference. A new sensor means we'll also get new colour science, and that's one of the biggest weaknesses of the GH5 IMHO. A new sensor might mean a new AF technology, but who knows. Dual ISO could be on the table too, which would be fantastic.

Sadly, the Panasonic way seems to be releasing the camera with only crappy codecs and adding the good ones later through firmware. This means the high-bitrate and high-bit-depth modes aren't there at launch to share in the hype, firmware updates don't get any hype, and I wouldn't buy it until it has what I need, unlike the S5, which might never get those upgrades. Still, it would be a shot in the arm for the MFT system and would protect people's investment in lenses and accessories for some time, which seems to have been a concern for some. Does anyone have any info on the sensor? @androidlad perhaps?
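The oversampling ratios for 1080 output can be checked with a couple of lines. A sketch assuming nominal widths of 5,120 px for "5K" and 7,680 px for "8K" (not a specific sensor's readout); the linear ratio is the kind of figure quoted above (about 2.7x rather than the round 2.5x), and squaring it gives how many photosites feed each output pixel.

```python
def oversampling(sensor_width_px: int, output_width_px: int = 1920) -> tuple[float, float]:
    """Linear oversampling ratio, and photosites per output pixel (the ratio squared)."""
    linear = sensor_width_px / output_width_px
    return linear, linear ** 2


# Assumed nominal widths; output is 1080p (1920 px wide).
for label, width in (("5K", 5120), ("8K", 7680)):
    linear, area = oversampling(width)
    print(f"{label} -> 1080p: {linear:.2f}x linear, {area:.1f} photosites per output pixel")
# 5K -> 1080p: 2.67x linear, 7.1 photosites per output pixel
# 8K -> 1080p: 4.00x linear, 16.0 photosites per output pixel
```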

-

I'm not sure what the chances are for that - potentially lower than you might think. Consider the options:

- The new models are impressive and expensive.... "it's the end of the world - $6K cameras have doomed civilisation"
- The new models are impressive and affordable.... "today our company is closing its doors due to low unit profit of recent releases and the failure of the compact camera market"
- The new models are modest upgrades and affordable.... "WTF that's not impressive - WORST CAMERA EVER"
- The new models are modest upgrades and expensive.... "what the absolute @#$$"

Personally, I think the GH5 is actually very close to perfection, so a modest upgrade addressing its weaknesses, even just some of them, would justify its existence. The hype train that seems to expect new cameras to be spectacular won't let the "we've taken a solid performer and given it a solid incremental update" message gain traction. These days things are either spectacular or despicable, and no logic or common sense can cut through the mania.

-

Video will never be a niche. The demand for video is on the rise, but that never seems to get mentioned when the units-shipped graphs come out each year. It's on the rise for people shooting with smartphones, but also for people who want better than they can produce themselves. The professional videographers who continually bang on about how the latest equipment is the absolute bare minimum and last week's camera is trash paint a picture of an unending supply of people who want 6K advertisements for their laundromat, but the problem is that those people don't want to pay enough for it. I agree. Smartphones are eating the market for cameras that will take fewer than 10,000 images before being left in a drawer. Prices might not have come down in an absolute sense, but what you get for your money is growing steadily, likely in rough alignment with Moore's Law. If the obsession with 4K and 6K and 8K and 12K calmed even slightly, people would realise that the price of a camera that can deliver an image of X quality is dropping steadily.

-

Why Do People Still Shoot at 24FPS? It always ruins the footage for me

kye replied to herein2020's topic in Cameras

I find my exposures typically change from shot to shot because, unless I'm waiting for something to happen, I typically shoot for the edit and know what shot I'm aiming for and when I've got it.

When I bought the GH5 I had shortlisted an Olympus, as it had better stabilisation, and also the A73 for its great AF. I was a huge fan of good autofocus and knew that AF was the Achilles heel of the GH5, but decided to test myself and "prove" that manual focus wasn't for me. So I shot a few short test projects using MF, and not only did I find it 10 times easier than I thought it was going to be, I found that I liked the aesthetic. It's imperfect in a very human way, rather than making the robotic or plain awful mistakes that the tech makes. I also remembered, and stopped instantly forgetting, the times when AF does a great job of focusing on the wrong thing and ruins a shot. I don't think AF is at a level yet where I would rely on it, because as imperfect as MF is, I NEVER EVER EVER find myself focusing on something I didn't want to focus on 🙂 Yes, manual focusing isn't the fastest thing in the world, but the results are usable in the edit. I regularly see people online using manually focused shots that are far from perfect, but it's rare for anyone to show a shot that the AF got wrong, mostly because it's not nice aesthetically. I know cameras now have an AF speed setting, but I always find that if I'm seeing an AF pull focus, it's either too fast or too slow.

In terms of the GH5, I have it dialled in and I know it well now. I feel like I've finally learned it. I'm invested in lenses, and even bought two new lenses (Laowa 7.5/2 and Voigtlander 42.5/0.95) in late 2019 that I haven't even had the chance to use on a real trip yet! I don't need shallower DoF, because my lenses are fast enough, and I get high-bitrate 10-bit ALL-I 1080p.
You're right that the colour science is a weak point, but it's passable and my grading skills are getting better, so I don't mind. However, I think this is the biggest thing that would tempt me to upgrade. It won't be any time soon, and it may very well be to a Medium Format camera, skipping these tiny "full frame" cameras lol. I'm not sure which Canon FF camera you're talking about with "good results", but I'm not aware of anything that shoots 10-bit internally except perhaps the R5? One day Canon might make a FF camera to rival the GH5 at a similar price point, but we're not there yet! I've spent many frustrating hours grading the 8-bit footage from the XC10, and the 10-bit footage from the GH5 is absolutely spectacular in comparison, practically from a different universe. I see videos of people grading Alexa footage and pushing UMP footage around, and it moves smoothly without a hint of breaking, and the GH5 footage always gives me that same feeling when I'm grading it. I've tried to break it and failed. The Canon 8-bit footage I've shot and graded works fine in perfect conditions, but given any difficulties at all it broke before it even looked normal, let alone having latitude to push or pull the footage to adjust compositions in post.

-

I love it when people claim to speak on behalf of an entire worldwide industry, but let's introduce some science... Here is the table of required sample sizes for a given margin of error. Ignore the blue box, someone else put that there. We want to look at the bottom left corner: to be 95% confident we're within 5% of what we say, we need 384 samples. So, for those saying Easyrigs are common, please provide photographic evidence of 384 films using an Easyrig. For those saying they're not common, please provide time-lapse videos of 384 productions showing the DoP over the full shoot schedule not using an Easyrig. I look forward to your responses. On a separate note, I'm thinking of buying a new Canon cinema camera to replace my GH5 for my family's home videos, which I shoot handheld, and I was wondering if it will have IBIS or if I will need to buy an Easyrig. Thanks.
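The 384 figure in tables like that most likely comes from the standard formula for estimating a proportion, n = z² · p(1 - p) / e², with z ≈ 1.96 for 95% confidence, e = 0.05, and the worst case p = 0.5. A small sketch, assuming a large population and simple random sampling; tables often print 384, while rounding up gives 385.

```python
import math
from statistics import NormalDist


def sample_size(confidence: float = 0.95, margin: float = 0.05, p: float = 0.5) -> float:
    """Sample size for estimating a proportion in a large population:
    n = z**2 * p * (1 - p) / margin**2, with p = 0.5 as the worst case."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    return z ** 2 * p * (1 - p) / margin ** 2


n = sample_size()
print(round(n, 1), math.ceil(n))  # 384.1 385
```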

-

Yeah, but can it shoot 8K without overheating? Getting the shot is overrated.... it's all about that sweet sweet megapixel count, everyone knows that.

-

Yeah, I suppose. I guess from the outside it just looks like they're all fawning over whatever looks cool at any given moment, with the guiding principles of 1) MORE=more, 2) MOAAR!!!!, 3) See #1, and 4) How high do you think I can count? It seems odd to think that the hype would go against the more-is-more principle, but I guess the golden rule is the golden rule. Interesting, and makes sense. I wonder what a setup with the 2X anamorphic adapter looks like, assuming the optical path will work? I kind of liked the look of the ground glass. It had way too many issues across the whole frame, but the texture in the centre was lovely.

-

I agree with your summary from the perspective of a stills-first shooter. You're right that FF lenses and the speed booster lend huge support to a new system, which is hugely important commercially. It will be interesting to see if FF ends up being the sweet spot. When something is bigger-is-better, it tends to only be better because everything you've seen is below the 'best' size, or it turns out that although the thing keeps getting better with size, other things take over in importance. No-one is going to carry around an 8x10 sensor camera even if the image was beyond belief, except on crazier projects, like that movie where they hand-held the IMAX film camera(!). You're right that it will depend on the other manufacturers getting in on the act, collectively PR-ing people to death and making them upgrade. I can also understand Fuji using this as a strategy. My question is really "if I have to change from MFT, and bigger is better, then why would I settle for FF if there are better options?" If there were a MF camera with the features of the A7S3 and lenses available, I think the camera trendies would be plastering it all over YT.

-

Why Do People Still Shoot at 24FPS? It always ruins the footage for me

kye replied to herein2020's topic in Cameras

Cool technique and I can see why you are able to use aperture to set exposure. Not a lot of folks on this board seem to understand that a lens closes down. My overall philosophy is 1) get the shot, and 2) make it as 3D as possible. Making it as 3D as possible explains many / most of the things that are done on controlled big-budget sets, and as I don't do some of those things because of (1), I overdo the rest, but just by a little. I prefer to shoot with a DoF similar to my eyes, but just a little shallower to help with depth. Even on bright sunny days we still see blur in backgrounds, so that's how I use the aperture. In low light I will open up completely, and although that's a radical thing with fast lenses, it looks natural because our eyes open up in low-light conditions. I shoot in public in uncontrolled situations, so it's nice to be able to separate the subject from the environment to create focus. For example: Excuse the colour grading, they are ungraded or only a quick job. You can definitely overdo the shallow DoF thing. Philip Bloom's film shot in Greece with the GFX100 had DoF that was too shallow for my tastes, so I'm not a bokeh fiend or anything. Sometimes you want to take everything in (once again, basically ungraded):

My thoughts about DoF are that it's about the story you want to create. It's about controlling what the focus of the shot is. Sometimes situations are so crowded that the frame would be chaos, and it's difficult to tell who the subject is or where you're meant to be looking. Obviously that's the main job of composition, and to an extent DoF is about how 'deep' your composition is. Very shallow DoF says "that other stuff back there isn't important" and deep DoF says "everything here is important". It's a creative tool, which is why I don't want to be forced to use it to control exposure.
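If aperture is reserved for depth of field, exposure has to come from shutter speed, ISO or ND. For reference, a sketch of the standard shutter-angle relation, exposure time = (angle / 360) / fps, assuming 24 fps purely for illustration. At 24 fps a whole frame lasts 1/24 s (about 0.042 s), and 270° lets in roughly 0.58 of a stop more light than 180° while adding more motion blur.

```python
import math
from fractions import Fraction


def exposure_time(shutter_angle_deg: int, fps: int) -> Fraction:
    """Exposure time in seconds: the open fraction of each frame interval."""
    return Fraction(shutter_angle_deg, 360) / fps


def stops_between(angle_a: int, angle_b: int) -> float:
    """Extra light (in stops) at angle_a compared with angle_b, at the same frame rate."""
    return math.log2(angle_a / angle_b)


print(exposure_time(180, 24))             # 1/48
print(exposure_time(270, 24))             # 1/32
print(round(stops_between(270, 180), 2))  # 0.58
```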
Exposing with SS isn't my preferred aesthetic, but the number of times I only just get a shot of the kids doing something funny means that shaving every second off the reaction time really counts. I've even taken the first two frames of a shot (or the first two in focus) and used Optical Flow to create a kind of moving snapshot of the moment, because frame #3 of the clip showed the smiles fading, or the kids noticing the camera, or something else coming into play, and the moment was clearly gone. Literally, I got the shot, but had I been 0.08s later, I'd have missed it. This has happened more times than I can count, and often they're the best moments. I don't know about you, but I can't set a variable ND to correct exposure in under 0.04s. When a camera can use auto-ISO and an auto eND to set exposure, I will set the shutter angle to 270 and leave it there forever. I say 270 instead of 180 because I slightly overdo the things I can control in order to compensate for the things I have no control over, like lighting conditions, or sometimes even my filming location (like if I'm stuck in my seat on a tour bus / boat / etc).

-

You're right that photographers were all about FF, but remember that photographers lusted after Medium Format and didn't buy it because it was far too expensive and too slow, with slow AF and slow burst rates (or no burst at all), etc. The fact that we have a MF camera coming out at HALF the price of previous MF models, and that it's usable in real-world conditions instead of just being a 'studio camera', well, that changes things. I think photographers lust more for megapixels and sharper lenses than for FF, so if they had to choose between FF and MF I think they'd choose MF in a heartbeat. Of course, that involves changing systems, so it's not going to happen quickly, and the price may have to come down before the majority of the stills market starts thinking of it as an accessible option. So yeah, I think people didn't talk about MF because it was viewed as unattainable, but now that's changing, we might see the lust start to emerge.

They are slow to change, and rightly so. If the image were the only thing that mattered, they'd change quickly, but as you know, it's not the most important thing on a film set, and it takes time to understand the new lenses and the new colour science and all that stuff. Plus, it's not like shooting with an Alexa is a terrible default option!

I agree that it will be quantifiable, but we haven't quantified it yet, and I've dug pretty deep into this stuff. I think people don't know. It's probably some specific combination of things, which of course makes it that much more difficult to zero in on. In the meantime we only have our aesthetic impressions to go on. There are quite a lot of subjective accounts from highly respected people that larger sensor sizes and certain lenses or lens designs have a certain X-factor, which is really one of the main attractions of MF and the purpose of this thread.
My entire philosophy of the image has changed over the last few years as I've gone on this journey. I started out with the philosophy of getting a neutral, high-resolution, high-bitrate image shot with sharp lenses and then degrading it in post to give the desired aesthetic. What I learned along the way is that:

- resolution does matter, and I want less of it, not more, so now I shoot 1080p
- DoF has a much larger impact on the aesthetic than I thought, so now I shoot with wider-aperture lenses (sometimes, but not always, used at larger apertures)
- bit depth and bitrate are highly important, so now I shoot 200Mbps 1080 at 10-bit in a 709 profile (not log)
- halation, flares, and contrast can't be simulated very well in post, so now I try to use lenses with a less clinical presentation in these areas

In short, I worked out what is more important and what is less important, and what I can and cannot do convincingly in post. Therefore, I moved these things to be done right in-camera. If medium format becomes accessible for video (it's not in my price range yet!) then that's another thing I'll be able to shift from trying to do in post (but not knowing how, as your comment about not knowing what is going on outlines) to doing in-camera and having it baked in to begin with.
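For a sense of what a 200Mbps setting means in data terms, a quick sketch of the arithmetic, assuming a constant bitrate and decimal units (1 GB = 10^9 bytes) and ignoring audio and container overhead: 200 Mbps is 25 MB/s, or 90 GB per hour of footage.

```python
def gb_per_hour(mbps: float) -> float:
    """Decimal gigabytes per hour for a constant video bitrate in megabits per second."""
    bytes_per_second = mbps * 1_000_000 / 8
    return bytes_per_second * 3600 / 1_000_000_000


print(gb_per_hour(200))  # 90.0
```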

-

Absolutely, and that's why I am even tangentially interested in the format. My expectation of a camera is that the 'rig' is the camera body, an SD card, a lens, an on-camera mic, and a wrist strap. Then I put a couple of spare batteries and a couple of other lenses in my bag and I'm off for an 18-hour day, during which I shoot anything and everything that piques my interest. In those circumstances my phone would take better images than a Phase One, because my phone would suit the conditions and the Phase One would be a PITA.

I'm looking at it from the perspective of video-only, and also from the perspective of getting good-enough quality from a portable package. For me, shooting with an external monitor / recorder is a downside, as it makes the rig larger and heavier, requires more complex power solutions, adds messy cables, and creates unwieldy file sizes. The A7S3 internal codecs are good enough for me (actually they're radically more than what I'd need, but luckily it has high-quality 1080p and ALL-I codecs). I just saw that the GFX100 can do 1080p at 400Mbps, so I guess that's fine for my purposes. In a sense, the more integration they build into these cameras, the less usable they'll make them with things like focus peaking and exposure tools, which isn't a good thing. Anyway, it's good to have the option of external RAW, but keeping good internal quality should remain a high priority.

I meant that with a 100MP sensor it's odd that it can only do 4K. Considering the hype has moved to 6K and 8K, which are now established standards, you'd think that offering these would be a 'home turf' advantage of MF. If you think about MFT, doing 6K or 8K means developing new sensors and dealing with all kinds of new issues, but MF was already the king of high resolution, so you'd think that these things would be playing to the strengths it already has.

Ok, that makes sense. I guess I see the crop factor as both a strength and a weakness.
It's great if it can use FF lenses, but that also means that the sensor isn't much larger than FF. A hypothetical 6x4.5 camera as a competitor wouldn't be able to use FF lenses, but would have a huge sensor-size advantage over FF, so it would easily be worth the trouble. I guess that brings us to...

For me, MFT has the advantage of being what I already have lenses for. FF is the thing that is now good enough, has a larger sensor, and has heaps of lenses and overall support. MF represents moving away from what I already have, and from where all the lenses are, but you'd do it for the mojo. Considering that the GFX is only a little bit larger than FF, yet is the best camera you've ever seen that lens on and is almost good enough to use the M-word, maybe a 645 sensor would be crazy good and worth all the 645 lens shenanigans that would be required. To me, a format that is only a little bit better than FF seems to be skimping on the thing it really has going for it. Now, of course, there are limits. I'm not going to be lining up at the camera store to buy an 8x10 camera for shooting my travel films, but MF needs to offer something significant over FF to really make it worth the hassle of that transition.

@mercer is talking about a certain X-factor that can occur with larger sensor sizes. I've been trying to chase down what this might be, and you're right that it's not FOV or DoF, but it's important to know that the math doesn't explain everything that's going on with sensors and lenses. I've tested a lot of lenses in controlled conditions, and when you do these tests you start to see differences that have no readily available explanation. An example of this is the Takumar lenses, which render images that are noticeably flatter and less 3D-looking than other lenses, and this is under controlled conditions with everything else being equal: same focal lengths, same apertures, same lighting, same camera position, etc.
It's something that the Takumars are known for. The question is, if the background is equally blurry, how is the perception of 3D space different? I've been looking at this question for years and haven't come up with anything, except that I've seen it enough times to know that something is going on. Sensor size can have a similar effect: some things just look more 3D than others. Not sure why, it just does. This is one of the attractions of larger sensors. See @BTM_Pix's comments above about the Contax lens looking better on this camera than on any other camera he's seen it on. Why would this be the case? Who knows. I've played with things like this, and these effects hold up even if you decrease the resolution, bitrate, and even colour depth, and even if you make the images B&W, so I can't readily find an explanation for it.

FF only took a few years to 'catch up' to where MFT was, and the MF cameras we're talking about aren't that far away from FF in terms of sensor size. Certainly they're a lot closer to FF than FF was to MFT. If there is market demand, which is debatable considering FF still has a lot of hype and many haven't moved from MFT or S35 to it yet, MF could 'arrive' in a few years.
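The format-size comparison above can be put in rough numbers. This is a minimal sketch assuming nominal dimensions (MFT 17.3x13.0mm, FF 36x24mm, a GFX-style 43.8x32.9mm sensor, and the nominal 56x41.5mm gate of 6x4.5 film standing in for the hypothetical 645 camera); real active areas vary by model. It computes the diagonal crop factor and the area ratio relative to FF.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Format:
    name: str
    width_mm: float
    height_mm: float

    @property
    def diagonal(self) -> float:
        return math.hypot(self.width_mm, self.height_mm)

    @property
    def area(self) -> float:
        return self.width_mm * self.height_mm


# Nominal dimensions (assumptions; exact active areas differ between cameras).
MFT = Format("Micro Four Thirds", 17.3, 13.0)
FULL_FRAME = Format("Full frame", 36.0, 24.0)
GFX = Format("GFX-style 44x33 MF", 43.8, 32.9)
F645 = Format("645 (6x4.5 film gate)", 56.0, 41.5)
FORMATS = [MFT, FULL_FRAME, GFX, F645]


def crop_factor(fmt: Format, ref: Format = FULL_FRAME) -> float:
    """Diagonal crop factor relative to ref (values below 1 mean larger than ref)."""
    return ref.diagonal / fmt.diagonal


def area_ratio(fmt: Format, ref: Format = FULL_FRAME) -> float:
    """How many times larger fmt's area is than ref's."""
    return fmt.area / ref.area


if __name__ == "__main__":
    for fmt in FORMATS:
        print(f"{fmt.name:<24} crop {crop_factor(fmt):.2f}x  area {area_ratio(fmt):.2f}x FF")
```

With these assumed dimensions, the GFX-style sensor has roughly 1.67x the area of FF and the 645 gate roughly 2.7x, while FF has roughly 3.8x the area of MFT.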

-

Why Do People Still Shoot at 24FPS? It always ruins the footage for me

kye replied to herein2020's topic in Cameras

I don't see a difference between 24p and 30p. Or at least, to me 30p doesn't have the same look that 60p has. I figure the only way to get the SS you want is with an ND. You can have a variable ND, or you can use fixed NDs and then vary your aperture (assuming it's declicked) to fine-tune it. The reason I say that is because there is very little tolerance for variation in SS if you want some motion blur in the frame.

To put it in context, let's say you're aiming for a 180-degree shutter. Obviously a 170-degree shutter would still be fine, but where are the boundaries of the aesthetic? Some say that a 360-degree shutter gives too much blur, but let's say that we're ok with it. Steven Spielberg famously used a 45-degree shutter on Saving Private Ryan because he wanted the aesthetic to be jarring and he wanted the audience to be able to see the bits of people's bodies splattering everywhere when things exploded. Let's say you don't want to go this far, and so a 90-degree shutter is our limit. That gives a usable range of 90 to 360 degrees.

Take the sunny-16 rule: for outdoor exposures in bright sun at ISO 100 you'd typically have a 1/100s exposure at f/16. No-one trying to avoid an ND will want to shoot at f/16, so let's say they're going to be shooting at more like f/4. Opening up from f/16 to f/4 lets in 4 extra stops of light that we have to get rid of with the SS, so that's an SS of 1/1600. That's a 180-degree shutter only if we're shooting 800fps. This is absolutely nowhere near what we need for 24fps, 30fps, 60fps, or even 120fps, so changing from 24p to 30p because you don't want to use an ND doesn't work. Using a graduated ND won't take 4 stops off the brightest part of the image, so that doesn't work. Polarisers won't work either. Maybe you never shoot in bright sunlight, sure. For me, I realised that I would need NDs that went from something like 6 stops to zero, and even then I'd still need to dial them in every time and miss a bunch of my shots. So I just abandoned using one.
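The sunny-16 arithmetic can be checked with a short sketch. It assumes the rule exactly as stated (1/ISO at f/16 in bright sun), counts each halving of the f-number as two stops, and uses the standard relation shutter angle = 360 x exposure time x frame rate. The helper names are illustrative only.

```python
import math


def stops_gained(f_from: float, f_to: float) -> float:
    """Stops of extra light from opening up from f/f_from to f/f_to.

    Light through the lens scales with 1/N^2, so the difference in stops
    is log2((f_from / f_to) ** 2) = 2 * log2(f_from / f_to).
    """
    return 2 * math.log2(f_from / f_to)


def sunny16_shutter(iso: float, f_number: float) -> float:
    """Exposure time (s) in bright sun per the sunny-16 rule.

    Base exposure is 1/ISO at f/16; every stop gained by opening up
    halves the exposure time.
    """
    return (1.0 / iso) / 2 ** stops_gained(16, f_number)


def shutter_angle(exposure_s: float, fps: float) -> float:
    """Shutter angle in degrees: fraction of each frame interval exposed, times 360."""
    return 360.0 * exposure_s * fps


def nd_stops_for_angle(exposure_s: float, fps: float, target_deg: float = 180.0) -> float:
    """Stops of ND needed to stretch exposure_s out to target_deg at fps.

    Returns 0 when no ND is needed (exposure already at or beyond the target).
    """
    target_s = target_deg / (360.0 * fps)
    return max(0.0, math.log2(target_s / exposure_s))


if __name__ == "__main__":
    ss = sunny16_shutter(iso=100, f_number=4)  # 1/1600 s
    print(f"Shutter: 1/{round(1 / ss)} s")
    for fps in (24, 30, 60, 120, 800):
        print(f"{fps:>4} fps -> {shutter_angle(ss, fps):6.1f} deg, "
              f"ND for 180 deg: {nd_stops_for_angle(ss, fps):4.1f} stops")
```

Under these assumptions, 1/1600s is a 180-degree shutter at 800fps but only about 5.4 degrees at 24fps, and holding 180 degrees at 24fps would take roughly 5 stops of ND.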
I used to use a fixed one on my XC10 because, as a cinema camera rather than a hybrid, it couldn't do a short enough SS to expose properly during the day with the aperture wide open. I will happily go back to using an ND when they implement a built-in eND that is controlled by the camera automatically, like the Sony cinema cameras have. That way I control the aperture because it's a creative tool, I control the SS because it's a creative tool, and the camera controls the ND and the ISO to get the full range of exposure values, because neither ND nor ISO is a creative tool.

-

I shoot handheld and don't have problems with pans without a subject, but they're not really a big part of what I shoot, especially now that I have a 15mm-equivalent lens, so landscapes etc. don't require much panning. The issue with 24p panning is that the 180-degree shutter obscures the detail, but that doesn't bother me as I'm shooting with auto-SS for exposure, so normally very short shutter speeds. I'm aware it makes the video less cinematic, but not having to use an ND means I get about 20-30% more usable shots. Much of the time I see something happening and only just get the camera going and in focus, and in the edit I end up using the first 2-3 seconds of the clip, so if I had to manually expose with an ND then I would have missed the moment.

I chose the GH5 because of the 10-bit internal recording and IBIS. Even now, if I was re-buying my whole setup I would still consider the GH5 the best option due to the 200Mbps 4:2:2 ALL-I 1080p 24p and 60p modes downscaled from 5K, the IBIS, the fact it's much lighter than things like the S1H, the fact that I focus manually so don't care about AF, and the MFT crop factor giving me a 2X zoom on long lenses, which I use for filming sports in the 120p mode. Is it the best camera available? No. Is 4K60 its only defining factor? No way. It is still considered a workhorse today, and the GH5 Facebook group I'm in has 37k+ members and a steady stream of people buying a GH5 for the first time and asking questions as they familiarise themselves with the camera, or asking advice about buying a GH5/GH5S as a second or third camera for their setups. The group is interesting in that it seems to be full of people who are shooting things, posting their work, and are still very excited about the benefits the camera provides.