Everything posted by kye

-

I'm picky because it's just misleading. People went completely bananas when the P4K was released about it not fitting in a pocket, also because that was misleading. I think that over time words get used, then misused, then appropriated, and then they become meaningless and just confuse people. Think about the word 'cinematic'. Once upon a time it used to mean something, but now, any time there is a conversation about film, someone who thinks the word cinematic means something will use it, the people who know it doesn't mean anything will then talk about the word, the whole conversation goes sideways, and the only thing that people learn is that conversations on the internet are frustrating and go nowhere.
In terms of this being a "handheld" camera, sure. According to what criteria? We used to talk about cameras that were particularly good at stabilisation, but now apparently the criteria are that it is light enough to hold and accepts OIS lenses. Great, so now the phrase "handheld" means every camera under 3kg made in the last decade. The next time that someone who shoots handheld in extreme situations, where great performance is really required, asks a question, they will be met with a sea of numpties who think that handheld means basically any camera on earth. If that person shoots in a situation where the P6KPro wouldn't cut it and a camera with excellent IBIS / OIS integration is genuinely required, then they are going to have to wade through the people who don't understand that OIS doesn't truly distinguish a camera. Effectively it just creates noise and makes it more of a hassle to have real conversations about things.
The way that I would summarise a camera like this would be "you CAN use it for handheld work, but I wouldn't call it a handheld camera", and this kind of statement represents some nuance and context. Unfortunately when the manufacturer themselves calls it such, it's just one more thing getting in the way and not being helpful.
I'm happy to drop the subject and not hijack the thread, but it's kind of equivalent to Apple calling the latest iPhone a 'cinema' camera and then forums like this being full of random Apple fanboys who fill up every thread discussing cinema cameras arguing with people about why the iPhone should be on their shortlists, because "it's a cinema camera too"!

-

That doesn't make the camera a hand-held camera, it makes those lenses handheld lenses. Name a camera that isn't a handheld camera then. It's like saying that my dining room table is a cocktail table because it is compatible with cocktail making equipment. By that logic my dining table is also a boat table, because:

-

Literally, the first thing underneath the name of the camera is: HANDHELD..... I carefully read the page looking for IBIS, but no mention of it. So, it's handheld for the same reason that any other camera under 3kg is handheld. Excuse me while I sip my handheld coffee, contemplate all my handheld lenses and handheld memory cards and type this on my handheld laptop.
I'd like to see them create the GH5 successor. It's an entirely different market, but within reach. People loved the GH5 for video because of: IBIS; reliability, no overheating, and battery life; good codecs; anamorphic and slow-motion modes; the flippy screen; the MFT lens mount, which can adapt most other lens mounts; being compact and light for hand-held and gimbal use; and the low price for the features (at the time).
The BM cameras meet quite a lot of these, but for many including myself, the P4K is a mixture of things I don't want/need and the absence of things I use all the time. I own a Micro, their cheapest current camera, and it's nothing like the GH5 in terms of usability - apart from being cheap it has almost nothing in common with the GH5 or how I shoot for that matter. I'm aware that the GH5 is a hybrid, but I suspect many users don't care about stills, and besides, BM don't seem to understand that the P6KPro isn't a hybrid. I think there's room in their lineup for a more video-centric camera that fits into the above spec, and it wouldn't be cannibalising much of their existing customer base, as not many GH5 fans could use a P4K, and not many P4K fans could use a GH5. There seems to be an entire market segment of wedding videographers, music video creators and social media influencers who are faced with the choice between a camera that can shoot how they want (reliable in the field and fast to work with) and one that can shoot what they want (RAW and high-bitrate/bit-depth codecs), but there aren't many cheap offerings that span this gap. These people are doing comparisons like A7S3 vs Komodo vs C70 and the like. 
These comparisons always have the tone of Lamborghini vs Hummer vs Mercedes S-Class - all great performers but for vastly different tasks and if these things are being directly compared then there is something wrong. Oh, and nothing is remotely affordable. This seems like a gap in the market to me.

-

I'm here all week - tell your friends!

-

Something that comes to mind is their expectations about the colour grade. It's one thing to re-use compressed files, but if you have to change WB or levels significantly to be able to intercut footage from different finished pieces then the footage can fall apart pretty easily. If your edit isn't really jumping around between lots of differently sourced footage then it wouldn't be a problem, for example if each scene was from the same source, but if you're telling a non-linear story or something like that then it might get very crunchy very quickly, and then be something that is worse than their expectations.

-

Oh, and it makes the camera look pretty small, and highly pocketable due to the lens having almost no thickness:

-

Interesting write-up and I completely agree with your ethos of less being more, as long as the footage is good enough. Depending on the situation I have different levels of tolerance for how big (and therefore how intrusive) the camera can be. I've used everything from a naked GoPro to my various DSLR + Rode VMP sized rigs, and less is absolutely more. I've been tossing things up lately about getting a third Go Shoot camera to complement the SJ4000 action camera and GF3 + 15/8 lens cap combos. The driving force is that the SJ4000 30p footage looks so thin you need those butcher's chainmail gloves to edit it, and the GF3 footage is 25p and nicer but still not that great, and the camera isn't easy to use, with basically no controls in video at all and no focus assists or anything. Anything that is bigger than the GF3 had better be absolutely killing it in the IQ department to justify its size, but I've had some ideas so watch this space.
Yeah, I'm with you. I alluded above to being willing to sacrifice a little size for a huge bump in IQ and have some plans. I know that when I get the 10-bit footage from the GH5 into Resolve it's just a dream to edit and all my frustrations with camera whatevers all go away and I forget that RAW or 6K or 8K or 14 stops of DR or whatever even exists. Beautiful tools are beautiful to use throughout the whole production and post-production process.
No vignetting for me either... a few test shots converted from RAW 4000x3000 stills:
It's really quite a modern lens, with high contrast and flare resistance, and while it isn't tack sharp, it's probably sharper than the 100Mbps bitrate of the average 4K codec. I'm seeing a blur of about 2 pixels on the RAW 4K images, and most lenses don't get much sharper than that, especially for the USD$47 this cost me, brand new:

-

I think you forgot to put a lens on that camera! 😆😆😆
Going back to your previous comment about the gimbal 'floaty' look, I'm not a fan of it either, but that doesn't mean I'm against gimbals. They have a bunch of looks that don't give the 'floaty' aesthetic. You can get a quick static shot while keeping mobile, where they eliminate the micro-jitters of hand-holding but don't add motion. They can do reasonable pan/tilt shots if you stand still and just pan/tilt the camera. They can do reasonable crane shots if you're standing still or have it on a long pole and there is nothing close to the camera to give away the fact your motion might not be in a smooth path. They can be programmed to move the camera, e.g. slow movement for time lapses, or even just a slow pan or tilt, and this works well when attached to a solid object rather than being held. It's a matter of using them wisely and knowing what things cause that look and what doesn't.
No vignetting I could see - very nice! I also like flowers. I thought @noone had put it on 2x digital zoom, which should be an MFT crop exactly. Maybe something else was going on filming a monitor so close? Not entirely sure. Either way, I'm fine with it. Those pics even have some background separation which is pretty cool.
I've wanted one of those lenses for years for street photography and one of the cool things people talked about was that you learned to pre-focus by touch because the control was so tactile, and because of the large DoF small errors in focus distance weren't really a problem.
I've also found lenses to be heaps of fun. With MFT you can adapt lots of lenses but they're all so long with the MFT crop, and it seems that in the earlier days there weren't the wider lenses that are around now. For example, I struggle to find a C-Mount TV zoom that's wider than 50mm or so (FF equivalent FOV). I think vintage lenses would be even more fun if I had a FF mirrorless camera to play with them on.
Was the wedding a paid gig? 
I can really see how such a small camera would be great for such an occasion - the feeling of freedom and ability to really move quickly. I find that when I talk to people who use full-size cameras, maybe in a rig of some kind, and I talk about cameras that are smaller than a full-size body and how they let you move faster, most people don't get it. I don't know if they don't understand that smaller cameras are more freeing, or that it's possible to be more free than they currently are with their "lightweight run and gun" GH5 / 18-35 / shotgun mic on sticks setup. I even notice the difference between P&S sized cameras and an action camera. I shot a project on just a GoPro Hero 3 black at an event (it was a personal project as a present to a friend) and the camera was so portable that I was getting angles you wouldn't attempt with a normal setup. It was atrocious in low light though, and I had to work really hard in post to make it work, but my friend was really happy with it. Here it is... Something like the RX100 with its low-light capabilities would be lots of fun to work with!

-

This statement is behind every piece of frustration that basically anyone has ever had about the files a camera delivers. Unless the camera can write a RAW stream in every mode that the sensor supports, then that's the entire problem. You're frustrated by lack of open-gate functions in FF cameras, I'm frustrated with my sub-$100 action camera that applies dreadful sharpening and NR to its 2MP sensor and then writes the files in abysmal quality 15Mbps h264, the P2K became a cult camera for writing RAW 1080p internally, the GH5 got hype for the 5K open-gate modes and 4K 400Mbps ALL-I modes, the P4K got hype for RAW 4K60 internally, ML has people voiding their warranties on cameras to get higher quality video to the card, etc etc. Taking the image from the sensor and not stomping on it before writing it to the SD card is basically the only thing we care about and talk about.
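To put rough numbers on how hard some of those modes "stomp" on the image, one crude yardstick is encoded bits per pixel per frame. This is only a sketch: `bits_per_pixel` is a made-up helper, and the frame sizes and frame rates below are my assumptions for illustration (the bitrates are the only figures taken from the post). It also ignores the efficiency difference between long-GOP and ALL-I encoding.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average encoded bits available per pixel per frame."""
    return bitrate_bps / (width * height * fps)

# Assumed modes: frame sizes and frame rates are guesses for illustration only.
action_cam = bits_per_pixel(15e6, 1920, 1080, 30)   # 15Mbps h264, assumed 1080p30
gh5_all_i = bits_per_pixel(400e6, 3840, 2160, 25)   # 400Mbps ALL-I, assumed UHD 25p

print(f"action camera: {action_cam:.2f} bits/pixel")
print(f"GH5 ALL-I:     {gh5_all_i:.2f} bits/pixel")
```

Under those assumptions the ALL-I mode has roughly eight times as many bits to spend on each pixel, before even considering bit depth or in-camera sharpening and NR.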

-

Interesting - a good amount of vignetting on MFT sized sensor readout. All I can say to that is... EXCELLENT! I may end up slightly compensating for that in post, but maybe not. We'll see. In theory, mine arrives tomorrow 🙂 What was it about the RX100V that you most enjoyed? How is the ZV1 as a replacement? Do you mean modifying a GoPro? with something like a backbone mod? I was looking for c-mount lenses and came upon this which I thought was an interesting concept: https://cmount.com/product/c-mount-modified-sony-rx0-3-lens-atomos-shogun-inferno-4k-recorder-package/ Thanks Leslie! I'm not sure that taking up abseiling is a substitute for travel, but thankfully I have enough toys at home to keep me from getting desperate and grabbing some string and jumping off buildings 😛

-

Popped down the beach and caught a half-decent sunset... GF3 and Voigtlander 17.5mm lens. It only has a full-auto-everything video mode, so I used the old trick of setting it to manual mode for stills, selecting base ISO, 1/50 SS, then dialling in the exposure with vND/aperture then hitting record and letting it automatically choose what I want it to choose. It's soft as hell due to the low bitrate of the GF3, the lack of focus assists, and the fact that it was windy and I forgot to take a jumper so my mild hand-shake created the need for stabilisation in post 🙂 Most FUN... not most useful! I don't yet own a glass balcony clamp, but buying one will just add to the enjoyment of easing lockdowns and being able to actually go, well, anywhere. Very nice!

-

I worked out that I have three different purposes for cameras: my family trips / events, camera testing and learning, and Go-Shoot. Go-Shoot is the most fun one, and I'm wondering why, but I suspect it has to do with the fact that nothing is at stake. The event isn't significant enough for there to be any consequences if I botch the whole thing and there's no edit, or even any footage at all. Thinking about the psychology of it, if there's something at stake then the mood changes and you have to "stop horsing around" and "let me concentrate on this" and "it's time to get serious now" etc... Obviously on projects you can be having fun in-between shots when the pressure is off, but you'd have to be pretty darn skilled to be super-relaxed while also nailing the shot. I think it's when you can do whatever you want that it becomes fun, like in Austin Powers...

-

I've ordered the Olympus 15/8 lens-cap-lens to put on my GF3 as a larger (and better quality) rig for my "go-shoot" project. My Go-Shoot project is the inspiration for pushing forwards with the action camera setup, and is basically to get out of the house, film something, edit it, and publish it, just to do little experiments and to get more practice with editing and develop my editing style more. I'm really looking forward to the Oly 15/8 and GF3 combo, because the GF3 doesn't have manual controls in video mode and the 15/8 is by far the smallest manual lens around, which will give me MF and take away aperture control from the camera.

-

Their PR ahead of announcing the UMP12K was pretty low-key so who knows! BM seem to be a random number generator with their features, with each camera kind of being like the first camera from a startup company trying to fill a little gap they've found in the market. They could either do something sensible like creating a new camera that fits into an existing lineup and uses the same lens-mount and accessories etc, or they could go wild with another one-off offering, who knows. It could just as easily be a medium format 20K "pocket" camera with a PL mount, or a 6K 1" sensor C-Mount action/crash "cinema" camera.

-

You need IBIS if you want smooth footage from a 180 shutter. IBIS / OIS stabilises the camera DURING the exposure, EIS stabilises AFTER the exposures have been made. Imagine doing a jittery pan at night. With no stabilisation at all you will have a sequence of frames that aren't all aligned horizontally, and in each frame you will have motion blur that goes left to right with some little wiggles in it. If you had IBIS / OIS on then you will have a sequence of frames that are all aligned horizontally, and in each frame you will have motion blur that goes left to right without any up and down wiggles in it. If you used EIS only you will have a sequence of frames that are all aligned horizontally, but in each frame you will have motion blur that goes left to right with some little wiggles in it. EIS is spectacular for action cameras because they use SS to expose and so in good lighting have basically no motion blur, but as soon as you have motion blur at all then IBIS or OIS becomes necessary for properly smoothed motion.
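Here is a toy model of the point above, in case numbers help. It is a sketch under loud assumptions: the hand-shake is a made-up sine wave (`shake_px`), IBIS is treated as perfect, and EIS is treated as a pure per-frame re-alignment. None of this reflects how any real camera implements stabilisation; it only shows why re-aligning frames after capture cannot remove wobble that is already baked into the motion blur.

```python
import math

def exposure_time(fps: float, shutter_angle: float = 180.0) -> float:
    """Seconds the shutter is open per frame; 180 degrees at 25p gives 1/50 s."""
    return (shutter_angle / 360.0) / fps

def shake_px(t: float, amplitude_px: float = 4.0, freq_hz: float = 12.0) -> float:
    """Hypothetical vertical hand-shake (in pixels) at time t: a plain sine wave."""
    return amplitude_px * math.sin(2 * math.pi * freq_hz * t)

def frame_result(frame: int, mode: str, fps: float = 25.0, samples: int = 50):
    """Return (frame_offset_px, blur_wiggle_px) for one frame.

    frame_offset_px: how far the whole frame sits from where it should be.
    blur_wiggle_px:  vertical wobble baked into that frame's motion blur.
    """
    start = frame / fps
    duration = exposure_time(fps)
    # Sample the shake across the time the shutter is open.
    offsets = [shake_px(start + duration * i / (samples - 1)) for i in range(samples)]
    offset = sum(offsets) / len(offsets)
    wiggle = max(offsets) - min(offsets)
    if mode == "none":
        return offset, wiggle   # misaligned frame, wobbly blur
    if mode == "eis":
        return 0.0, wiggle      # frame re-aligned after capture, blur unchanged
    if mode == "ibis":
        return 0.0, 0.0         # idealised: shake cancelled during the exposure
    raise ValueError(f"unknown mode: {mode}")

for mode in ("none", "eis", "ibis"):
    offset, wiggle = frame_result(3, mode)
    print(f"{mode:>4}: frame offset {offset:+.2f}px, wiggle in blur {wiggle:.2f}px")
```

Shorten the exposure (a smaller `shutter_angle` passed through to `exposure_time`) and the wiggle shrinks for every mode, which is why EIS looks fine on action cameras in good light.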

-

@tupp I give up. You obviously think that working for years and learning the names of all the clamps is required for anyone to exercise any judgement or awareness of safety and that I'm an idiot because I think I might know things when I can't because I've never worked as a grip or learned the names of the clamps. I wonder how much you could possibly know (beyond knowing the names of the clamps) if you fail to understand that the universe is literally maths and physics, and I'm also wondering why you haven't come up with literally thousands of photos of those little camera clamps sitting in the middle of piles of shattered glass - there is no shortage of images of people using them that way. I could debate this forever, but your method of assessing judgement or practical ability seems to be one-dimensional and I don't fit that narrow definition, and am never going to because I don't aspire to the same career path as yours, so how about this: you have fulfilled your legal and ethical duty to tell me (and anyone else reading this thread henceforth) to never even look at tempered glass until I am the god of all grips (and can name all the clamps), and I will go ahead and use the judgement I have and when it all inevitably comes crashing down just like you have predicted I will not hold you responsible.

-

Raspberry Pi Releases an Interchangeable-lens Camera Module

kye replied to androidlad's topic in Cameras

Does anyone know if anyone is working on the h264 modes for this setup? The default was typical low-bitrate stuff, but maybe there's a chance of a higher bitrate 10-bit ALL-I mode?

-

Recently I've been playing around with modifying a sub-$100 action camera, and have been having more fun than I have in a long time. What is the most fun piece of equipment or rig that you have had, or used? Why? What made it fun? Post some pictures! Here's my "cinematic beast" with a few M12 lenses in front of the GH5 for scale: and here's the most recent video I shot with it: Shooting with this thing is so much fun because no-one takes a camera the size of a box of matches seriously, and assumes that the lens is really wide (it's about a 60mm equivalent FOV so it's really not that wide) and the only controls you get are on/off, record/stop, and where you aim it. What has put a smile on your face?

-

A few thoughts:
I try to always be respectful of items (regardless of whether they're mine or not) and clamping force would be something I'd be very aware of, both for the possibility of breaking something and also leaving teeth-marks on things. (As an aside, when I was young I bought a second-hand car that had the options pack which included a leather steering-wheel, and I took it somewhere once and I think they had clamped a clipboard to the steering wheel because it had little chew-marks in the leather at top dead-centre of the wheel.. I saw it every time I drove the car and it made me so mad that they'd damage my car like that!)
My inclination would be to apply as little clamping force as possible - sufficient to make sure it wouldn't fall - and I'd always try to use sufficient padding to ensure there wasn't a grain of sand or something concentrating the force (thus my comment earlier that you were very critical of).
I would also try to put the centre of gravity on the inside of the railing so that should anything fall it would be towards safety instead of towards other people's heads, but I can understand the concept of centering it to eliminate torsion.
In terms of a safety line, any tips on something that's easy and practical? Fishing line tied to a chair perhaps, or a bottle filled with water? Or something else?
Be careful being critical about people knowing or not knowing the terminology of something - I may not know the terminology of clamps, but I have postgraduate level physics and math, and it seems to me that this is a physics problem, not an "I can name all the clamps" problem.

-

@elgabogomez @Django The controlled tests I have seen all show that 35mm motion picture film can't beat 4K and IMAX motion picture film does better but still isn't as good as a 6K sensor like the Alexa LF. Did you watch the Resolution Demo that I linked in my first post? I know it's long, but it might have more real information in it than years of reading forums. I'm curious if you can link to any demos that show, under controlled conditions, direct comparisons between motion picture film and digital cameras. Or any kind of calibrated resolution test would also be good. Sadly, everything else other than controlled tests are worthless for actually being able to understand what is going on. The more I think about this stuff, the more I think that sharpness is the problem. Reality has infinite resolution and no sharpness. Both digital and film images obviously have less resolution than reality, but for some reason film doesn't look objectionable but digital often does. Of course, RAW video, or very high quality codecs, also don't have this objectionable quality. This all leads me to think that it's about sharpness and not resolution. Resolution is fine - reality has so much of it that if it was at all objectionable then it would be awful to look at, but it's not. I agree, however I still think we have a massive blind spot. I don't think that anyone in this thread is interested in applying Gate Weave to their images, and I'd suggest that some of the people here talking about how film is desirable don't even know what it is. This proves exactly my point, that our one-sided rose-coloured-glasses view of film is getting in the way of a useful conversation about what is great about film and what is not, what is great about digital and what is not, and what might have been great about tape and what wasn't. 
Until we start breaking down the various attributes of each then we're going to have discussions where someone less educated in the medium talks about "the film look" as a proxy for everything nice about an image, and the people who have deep knowledge about what is really going on react in ways that don't make any sense to the newcomer, and the discussion never makes any progress. If we had a different phrase, then maybe it would create space in these conversations to discuss the good parts of every medium without the newcomers inadvertently implying they love gate weave.
Actually, I think the cinematic vs video look argument hasn't been argued well at all, which is kind of the point of this thread. The state of the debate is this:
People almost universally claim to like the colour and DR rendition of good film stocks.
People almost universally claim to like the colour and DR rendition of well-shot and well-graded Alexa images.
A huge amount of technical knowledge accumulated over decades went into developing film stocks.
A huge amount of technical knowledge about film and digital accumulated over many years went into developing the ARRI colour science.
The few people who have demonstrated the ability to flawlessly match digital to film do so with Alexas, are able to do so after years of personal testing and research, talk about making complex adjustments that are often not known about or discussed in the public domain, and use specialist and even custom written software tools to do so.
So I'd say that a better summary of "cinematic vs video" is that film-like colour and DR are an attribute of "cinematic" and only a selection of people on earth know how to do that. ...and good luck if you "only" have Resolve.
Are you talking about blurring images or softening contrast? The BPM filters soften contrast but don't blur. 
Vintage lenses often do both, with the lens optical tolerances accounting for the resolution of the lens (blur) and the coatings accounting for the macro and micro contrast of the image, which is actually called halation.
Fascinating how different the colour reproduction is on those images, even with two coming from the same film stock. Funny how no-one in this thread has mentioned that they're chasing images that vary in colour reproduction from shot to shot like film can do.
The point of this conversation (and these forums in general I believe) is to try and get smarter at getting better results with more modest equipment. Anyone can just say "oh well, if you want good colour then go buy the Alexa 65... byeeeeee" but it's not really of much help.
Any ideas about what BM did in the new U12K that improved the image and got it closer to film-like? I'm a big fan of trying to learn by picking apart good examples of things. For example, I bought a BMMCC just to be able to experiment with the colour science as it has a good reputation. A few questions for thought around the U12K:
Does it keep its magic at lower resolutions? If so, it's not the resolution creating the magic.
Does it keep its magic when blurred? If so, it's not the sharpness creating the magic.
Does it keep its magic when sharpened? If so, it's not the lack of sharpening that is creating the magic.
You get the idea....
Do you do this just to make them look nicer? Or for a particular aesthetic effect?
I know what you're saying but I'm going to disagree with the way you outlined it. There are two competing sets of priorities, one set that makes an image with the organic aesthetic, and one set that is all about resolution and high frame rates and other specs. I think these priorities only conflict when a person doesn't know enough about what is going on. 
For example, in this thread I've linked to evidence showing that film has quite limited resolution, and yet we've heard from others that film has heaps of resolution. For those that understand how much resolution film really has, then there is no conflict, they aren't tempted by the allure of increased resolution because they know that the aesthetic they desire doesn't have that resolution. Other parameters have different status, for example, film has almost infinite bit-depth, all the way through the entire signal chain, so having greater bit depth is in alignment with a more organic aesthetic. Knowing which parameters are which is the point of trying to have these conversations. Any modern camera that jiggles the image around slightly from frame to frame or subtly varies the exposure from frame to frame would have to take this crown, considering these are attributes of film that no other camera is replicating. That conversation is the one that inspired this one. Another reason that we should move beyond film being a proxy word for "good". Is anyone asking if the kids will stop liking good images because they never saw film in a theatre? No, that's ridiculous. Therefore, good does not equal film. Are there desirable attributes that film has, yes absolutely, but many aspects of film are only desirable because of association and nostalgia.

-

That's interesting - do they scale the values back into legal, or clip them? I haven't heard that non-broadcast distribution cared about such things.
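For anyone wondering why the distinction matters, here is a minimal sketch of the two options. I'm assuming 8-bit luma with the usual nominal "legal" range of 16-235, and the function names are mine for illustration; I don't know what any particular distributor actually does.

```python
LEGAL_MIN, LEGAL_MAX = 16, 235  # assumed 8-bit nominal luma range

def clip_to_legal(value: int) -> int:
    """Hard-clip: anything outside the legal range is flattened to the limit."""
    return max(LEGAL_MIN, min(LEGAL_MAX, value))

def scale_to_legal(value: int) -> int:
    """Rescale full range 0-255 into 16-235, keeping relative differences."""
    return round(LEGAL_MIN + value * (LEGAL_MAX - LEGAL_MIN) / 255)

for v in (0, 240, 250, 255):
    print(f"{v:>3} -> clip {clip_to_legal(v):>3}, scale {scale_to_legal(v):>3}")
```

Clipping throws away the detail in the out-of-range values (240 and 250 both end up at 235), whereas scaling keeps them distinct at the cost of shifting the contrast of the whole image.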

-

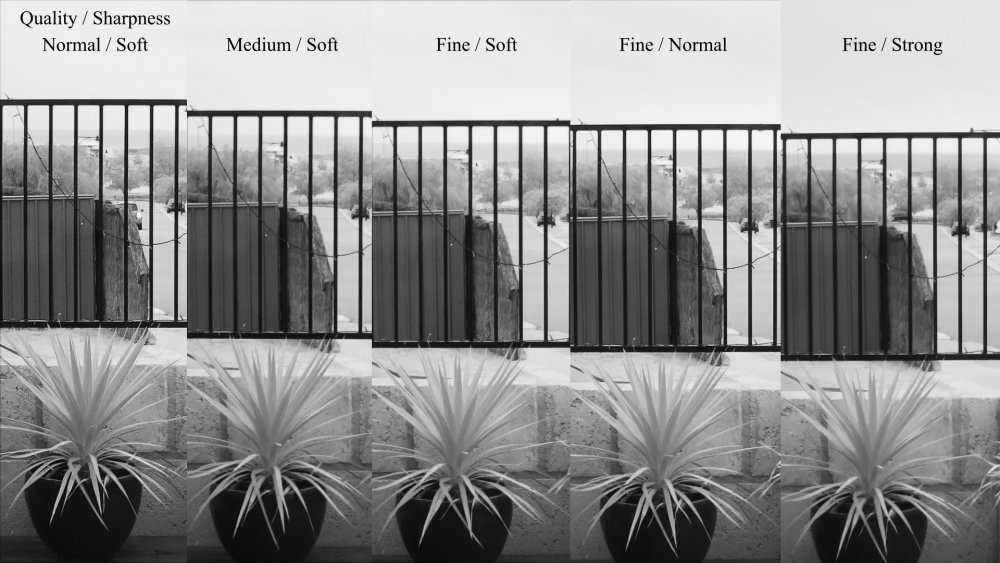

Another vignette.. I'm now officially over the IR sensitivity. It makes people look, well, awful. I'm also a bit over the focal length being a bit too long for some situations. Lucky, I have IR Cut filters on order, and I say filters, because I'll need one for this lens and one for the new lens I have on order, a 2.8-12mm zoom lens. This will give around 20-85mm equivalent FOV, and (I think) has a focus control, so I might be able to focus closer too, so that will be fun. I also found the quality setting on the camera was not set optimally, so did a modes test and found the best looking settings, and also optimised a grade and saved it as a Powergrade in Resolve, so now I can just apply it whenever I want and save time pixel-peeping in post. Here's the modes from the camera: and here's the powergrade applied, with each shot having the edge softening optimised to cancel out the halo from the sharpening and compression applied: The middle one looks best, so that's what I'll go with. The goal of this project was to get me shooting and doing smaller projects and it certainly has done that, so that's a success in my book.
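For reference, the equivalent FOV figures for the zoom are just focal length times crop factor. The crop factor below is an assumption: I've backed out roughly 7.1 from the 20-85mm figures quoted above rather than from a measured sensor size, so treat it as approximate.

```python
ASSUMED_CROP_FACTOR = 7.1  # inferred from the 20-85mm figures above, not measured

def equivalent_focal_length(focal_mm: float, crop_factor: float = ASSUMED_CROP_FACTOR) -> float:
    """Full-frame equivalent focal length for the same field of view."""
    return focal_mm * crop_factor

wide = equivalent_focal_length(2.8)
tele = equivalent_focal_length(12.0)
print(f"2.8-12mm is roughly {wide:.0f}-{tele:.0f}mm full-frame equivalent")
```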

-

@TomTheDP @BenEricson You've both listed some virtues of film (and the negative parts of digital) but didn't list the advantages of digital or the disadvantages of film. Halation, gate weave, gamma-related colour shifts, hue compression, softness, etc are all parts of dealing with motion-picture film that don't get talked about. This is kind of what I think the problem is - we view film as being better than digital (and maybe we know that it's only better in some ways) but never talk about the ways it's far inferior. This leads "film" to be a proxy for "nice" and then we never get anywhere because we're not even talking the same language. People are jumping up and down about 6K and 8K but seem to be unfamiliar with the idea that 35mm motion-picture film struggles to match 4K. No-one seems to be trying to blur their images to match film (apart from me).

-

There are many good reasons that having a physical filter is better than emulating the effect in post. I'm considering filters myself, although I have lenses that have some of this effect built-in so I'm not starting with a blank canvas. As pointed out in the thread though, lighting conditions and subject matter can make the effect very difficult to control. For example, if you had a strong light in the frame then you might get a huge amount of the effect, compared to a shot with low contrast and no light hitting the filter which might have almost no visible effect. Therefore, to get the two shots to match visually you would have to swap the strength of the filter on each shot to get an end result that looks the same. This is one of the advantages of doing it in post, because you can adjust it afterwards, and don't need to buy every strength of filter, and you can even select values in between the ones that the filters come in, or even animate the effect so that it varies amount in the same shot. For example if you went from a portrait shot and panned to show a scene with the sun in it then you would want more effect at the start of the shot than at the end, and a smooth animation would do the trick in post. I suggest that the best approach is likely to combine the two, getting the majority of the effect using a filter while shooting, but evening it out in post.
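As a rough illustration of the "animate it in post" idea, this is the kind of eased ramp between two strengths that a keyframed effect boils down to. It's a sketch only: the strength values, frame numbers and function names are hypothetical, and it isn't tied to how Resolve or any other tool implements keyframes.

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on 0..1, clamped outside that range."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)

def strength_at(frame: int, start_frame: int, end_frame: int,
                start_strength: float, end_strength: float) -> float:
    """Effect strength at a given frame, easing between two keyframes."""
    t = (frame - start_frame) / (end_frame - start_frame)
    return start_strength + (end_strength - start_strength) * smoothstep(t)

# Hypothetical shot: start on the portrait (more effect), pan to the sun (less).
for frame in (0, 25, 50, 75, 100):
    print(frame, round(strength_at(frame, 0, 100, 0.8, 0.2), 3))
```

The same function also covers the "in between" strengths that you can't buy as a physical filter.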

-

I was reading a thread on a pro colourist forum and a big debate started about how film was (mostly) awful to work with and mostly it was hugely inconsistent and although it was occasionally glorious, it was mostly a completely undesirable thing. This makes sense, as evidenced by the complete lack of threads where people are asking how much to blur their footage to get the Super-8 resolution. This leads me to perhaps a rather odd terminology question - there's the "video look" which typically isn't regarded as desirable, but what is the other "look"? We can say "filmic" but in reality the film look consists of a whole bunch of things that mostly people aren't interested in. "Cinematic" is a great phrase, but that just means "like the things in the cinema" and considering that Steven Soderbergh shot Unsane on an iPhone, having a phrase that includes everything from the Alexa 65 to the iPhone isn't really that useful. We could attack it from another direction - we don't want that "digital" look, we want the "analog" look. This isn't really so helpful either - analog was either film, which we don't want to emulate many of the qualities of it at all, or analog tape. Analog tape simulation was in-vogue in music videos etc but used as a special effect, because the quality of VHS or Beta or even their higher-resolution variants, well, it wasn't great. So, if we've only ever captured images on film, tape, and digital, and these often included attributes that were very undesirable, and even what got shown in the cinema wasn't always to be envied, what is it that we do want? We can describe what we do want by listing the many things we don't want, like clipped highlights, or digital noise, etc etc, but that's not so useful. This may sound like a trivial matter and just semantics, but I think there's a larger issue going on here. 
I've been interested in learning about the film look, not because I want to emulate film completely authentically, but because I wanted to pick the good bits out of it. But the issue is that any discussion of the "film look" either takes place with people who aren't experts and therefore isn't useful, or it involves those who have deep knowledge, but will argue that film didn't have a "look" because they spent decades of their life trying to create a single look out of pieces of film that were radically different to each other. Thus, these conversations often go nowhere because we don't have a good language to even frame the discussion. I recently watched the Resolution Demo by Steve Yedlin (who shot Knives Out, Star Wars The Last Jedi, etc) which in Part 2 gave a few more clues about what might be going on with image quality and specifically which aspects of image quality have which effects. http://yedlin.net/ResDemo/index.html Another thing that 'inspired' this thread is the recent demo video of the Sony A1 which might be the most "video" looking thing I have seen in years.... What I take from this is that whatever the hell makes an aesthetically pleasing image, it's almost entirely absent in this video. So, I've tried taking a different approach, and tried asking what makes something look good. I've prefaced it with some comments around what I don't like, in order to provide some context (because once again there isn't language for this topic) but that went about as well as you'd expect, and I got replies that included "it's whatever looks good to you" or "whatever is right for your project" etc. Correct, but not helpful. Does anyone have any idea how we might start to have a conversation about this stuff?