Everything posted by kye

-

One issue I see a lot is that photo cameras completely incapable of decent video look like DSLRs, hybrid MILCs like the GH5 which are workhorses look like DSLRs, and small form-factor cinema cameras look like DSLRs, so because they all look the same, people compare them. The GH5 is a fundamentally different shooting experience than BM cameras for example. Something like the GH5 is built for run-and-gun situations out in the world far away from civilisation. It's weather sealed so can withstand wet / heat / dust / etc. It's got IBIS so can be hand-held with great results. It has long battery-life so with a dozen batteries may even be fine for a week away from AC, has higher-quality consumer codecs so can record lots of footage onto reasonably priced media, and it's an all-in-one solution that only needs an external sound source for professional audio. The P4K is the complete opposite. It's not weather sealed. It doesn't have IBIS so you need a tripod or rig. It has terrible battery life, so a dozen batteries is more like a day shooting than a week. It has high-bitrate professional formats that need large storage solutions for long duration clips. It's not an all-in-one solution at all - to get the flexibility of the GH5 in practice you need to have a rig that has an external monitor, external power solution, external storage SSD, and that needs to be on a tripod or shoulder-rig etc. The GH5 is designed to go out into the world and to capture the world by working around the unique foibles of the world. The P4K is designed to have the world come to it and to capture the world by having the world work around the foibles of the camera. The GH5 is basically the perfect solution for the kind of film-making that I do; the P4K is an absolutely terrible solution for what I do. Most people don't shoot in situations that make them understand how completely different the two are from each other, and because they look alike, they get compared way too directly with each other.
I agree. Your point about an ambassador is a good one too; MFT isn't winning the marketing game. Let's hope that Sony has set a precedent with the A7S3 in terms of going for 4K but doing it better and more reliably and fixing the niggles, and that Panasonic can do the same with a GH6. Probably the biggest enemy we have to objectivity is confirmation bias. So when someone spends thousands of dollars on a camera they become very invested in thinking they made the right choice, so they will argue about it on the internet with other people. Fights about sensor size or camera brand aren't about cameras, they're about fighting to maintain the illusion that we make good decisions and are in control of our lives. I'm really hoping that Panasonic don't start playing the games of crippling part of their camera line to protect another part of their camera line. In a sense Panasonic were a great challenger to the status quo as they didn't have a huge cine line to protect, thus the GH5 wasn't really a threat to the EVA in terms of loss of sales.

-

Awesome. I'm willing to wait for something great. Quick decisions are often bad decisions, and the GH5 was made so much better in firmware updates rather than up front, but I don't think the current market really responds to that. I can see Panasonic waiting a little bit longer, getting the extra modes configured and tested, and then coming out with an absolute cracker of a camera. The video I posted above talks about using the 5K sensor in the GH5 to de-squeeze in-camera to get 10K or 8K files SOOC, so even if they don't go for an extra-resolution sensor but up the image processing, they can still get out larger resolution files by de-squeezing in-camera. There is also a whole spectrum of algorithms for upscaling, such as Resolve's Super Scale function, as well as other image processing functionality that might be useful, so going that route might yield a spectacular upgrade.

-

Who knows what Panasonic will do. I finally found the video I've been looking for though.. the GH5 was almost an 8K HDR camera. See below (linked to the right time-stamp): Given that Panasonic seems to have been trying to get the maximum out of the hardware possible, if they simply updated the GH5 to include all 2020 parts instead of 2016 parts and took the same "what can we possibly get out of this hardware" approach, it would truly be something to see. Considering that in some ways Sony has made a huge statement with the A7S3 that it's ok to make a camera that 'just works' and isn't about chasing outright pixel counts or clickbait marketing headlines, the GH6 could be a 20MP dual-ISO version of the GH5 with a beefed-up image processing pipeline so we could have things like 4K60 10-bit HLG, 1080p240 10-bit, with the updated colour science from the S1H, with less rolling-shutter, etc. Even just those upgrades would be spectacular. I'm still championing the idea of an internal eND that can be managed by the camera for auto-exposure. Combined with ISO, it would allow a fixed shutter angle and aperture while the camera adjusts exposure from full-sun to darkest low-light.

-

Blackmagic casually announces 12K URSA Mini Pro Camera

kye replied to Andrew - EOSHD's topic in Cameras

I understood you were referring to the editing workspace, and so was I. The modern NLE has many conventions - if someone completely new to video came in and designed their own it would look completely different. Even things like calling folders "bins" because of the physical objects used to store and manage strips of celluloid is part of those conventions. And in the context of things being easy to understand and useful, it's great that they do all look similar. I also thought they were obvious, but it wasn't something really being talked about so I posted it. I don't agree that it will only be a few years before BM is huge, but they're definitely making the right decisions to grow and become a bigger player.

-

Yep - nothing wrong with that image! Nice.

-

@EduPortas yeah, on the internet if you find something then you have to bookmark it, or even archive it to your own storage. If you don't do it at that time then you'll never find it again! It certainly does look like a very compact setup: It makes sense as Herzog is on a mission to get the most X content he can, where X is in the realm of crazy / entertaining / ridiculous / cutting / insightful / shocking / etc. Considering that people are intimidated by equipment and basically stop acting naturally given the slightest reason, you'd want a setup that was so fast that you could always keep up and small enough that the people can forget that they're being filmed. Sacha Baron Cohen went to some extreme lengths to get people to feel comfortable while being filmed during Who Is America? and I think what is remarkable in that show is that he managed to get such open responses while people were obviously on set and being interviewed with lights and cameras and the whole setup.

-

@Towd The difference between 24p and 23.976 is one frame every 41.6 seconds. If you have your NLE set to "nearest frame" then that means it won't jump until about 20.8 seconds into a clip (half a frame of drift), which is probably fine for me and my edits. However, if you have your timeline set to some kind of frame interpolation, where you're doing slow-motion shots that aren't a direct ratio (speed-ramps or using the algorithms to create new frames in-between the source ones) then your 23.976 clip is going to trigger those algorithms and the first frame will be the first frame of the clip, but every frame from then on will be algorithmically generated as being between two frames in the source clip. The way around that is to set the timeline/project to "nearest" but then engage the algorithm for specific shots, but that's a bit of a PITA. I'm fine to change to NTSC mode, and I guess that's what I should do. Funny how you convinced me to shoot 24p and now I'm switching away from it again 🙂 I don't care about PAL or NTSC or whatever - I haven't been able to receive TV or radio in my house for over a decade, all content is data via the internet. Whenever people ask me about something on TV I normally reply "do they still have that?" just to be cheeky - to me it's all just data. These days the frame rate of a video has about as much connection to the frequency of the AC coming out of my power sockets as the bit-depth of a JPG image is connected with the phases of the moon. I guess that if you're involved in the industry then it's probably still a big deal though, and maybe there's a bunch of hardware processing digital TV signals that are all hard-coded or spec'd for a certain frame rate. I genuinely have no idea what refresh rates my TV runs at though. We have a smart 4K TV with Netflix and Amazon apps but no clue what the settings are - when we got it I went through the menus to turn off the image auto-magic enhancement destruction features.
Before that we had a dumb FHD TV with a Roku media box with Netflix and other apps, and before that we used the Xbox with those apps. I always set the TV to native resolution but I never paid much attention to the refresh rates though. Considering that TVs are just computers now and they're all made in the same factories I wonder if the content is all region unspecific and maybe the apps are all written to handle whatever frame rates the content is in? TBH it makes as much sense as talking about NTSC mode it is! Thanks for your help. Back to the original question though.... Does this mean that the motion cadence question is now moot? Or just moot for the GH5? I have a BMMCC which can shoot uncompressed RAW, and can do a test with that if it will help.
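For anyone wanting to check the 24p vs 23.976 drift figures, here is a rough Python sketch of the arithmetic. It uses the nominal 23.976 figure from the post and, as an assumption about what cameras actually record, the exact NTSC-style rate 24000/1001. The function name is only illustrative.

```python
from fractions import Fraction


def seconds_per_frame_of_drift(rate_a, rate_b):
    """Seconds until two frame rates have drifted apart by one whole frame."""
    return 1 / abs(Fraction(rate_a) - Fraction(rate_b))


# Nominal figure as written in the post.
nominal = seconds_per_frame_of_drift(Fraction(24), Fraction("23.976"))

# Exact NTSC-style rate (assumption: the camera records 24000/1001 fps).
exact = seconds_per_frame_of_drift(Fraction(24), Fraction(24000, 1001))

print(f"one frame of drift (nominal): {float(nominal):.2f} s")
print(f"one frame of drift (exact):   {float(exact):.2f} s")

# With nearest-frame conforming, the first visible jump would be expected
# at roughly half a frame of drift.
print(f"half a frame of drift:        {float(nominal) / 2:.2f} s")
```

Either way the drift is one frame roughly every 41.7 seconds, so short clips on a nearest-frame timeline should rarely show it.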

-

@Dustin start a new thread... no one around here is short of an opinion and we do love to spend other people's money!! 🙂

-

Blackmagic casually announces 12K URSA Mini Pro Camera

kye replied to Andrew - EOSHD's topic in Cameras

My understanding of FCPX is that it's pretty well designed for editing, which was the part that Resolve was lacking prior to v12, so fan or not, it makes sense to go with established conventions. People aren't aware of how many conventions have been set, but there are lots. Imagine if every screen in a program had the menu options somewhere different instead of them staying in the same place, or every dialog box had the buttons in a different location or different order rather than OK/Cancel. Or someone abandoned the File - Edit - View - .... - Window - Help style menu structure and went with their own one. I remember custom software like that, and every time you clicked something you had to stare at it like you were trying to understand a treasure map! Standards are either created by corporate committees or by people copying the good things from each other, and I know which one tends to work out in the best user experience!

-

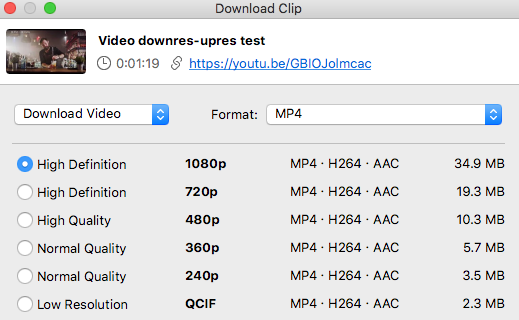

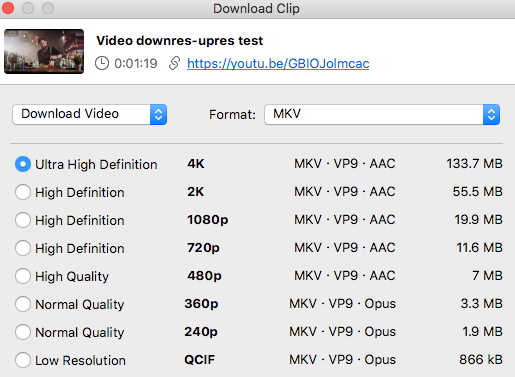

Actually, when I was downloading the video again, I got two choices for the 1080 mode, so I downloaded both, but I only ended up analysing the higher bitrate one (which would be what people without VP9 capability would be limited to). Here are the two modes; notice the different file sizes for the same resolutions between the two modes:

-

Another thing to think about with the GH5 is that the mode you shoot in impacts the zoom factor that the Extended Teleconverter (ETC) mode gives you. It's 1.4x in 4K mode, but is 1.7x in 3.3K mode, and 2.8x in 1080p. That's another plus for the 3.3K mode, as 1.7x would give me a bit more reach at the long end of the three primes I carry - the longest is the 42.5mm and with the 1.7x it would be a FF equivalent of 145mm (42.5 x 2 for MFT x 1.7), which is quite a bit of reach. If I wanted more like a 1.4x then I can just crop in post, as that's within the limits of hiding something in post with a bit of sharpening to compensate.
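The reach numbers are easy to tabulate. A small Python sketch, using the ETC factors quoted in the post and the usual 2x Micro Four Thirds crop factor; the dictionary and function names are made up for illustration.

```python
MFT_CROP = 2.0  # Micro Four Thirds crop factor relative to full frame

# ETC zoom factors per recording mode, as quoted in the post.
ETC_FACTOR = {"4K": 1.4, "3.3K": 1.7, "1080p": 2.8}


def ff_equivalent_mm(focal_mm, mode):
    """Full-frame equivalent focal length with ETC engaged in the given mode."""
    return focal_mm * MFT_CROP * ETC_FACTOR[mode]


for mode in ETC_FACTOR:
    print(f"42.5mm in {mode} ETC: {ff_equivalent_mm(42.5, mode):.1f}mm FF equivalent")
```

For the 42.5mm in 3.3K mode this gives 144.5mm, which matches the roughly 145mm figure above.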

-

You raise an excellent point about 60p in 1080 and getting the 10-bit. How do I set that on the camera? I have the latest firmware (2.7, only released very recently) and I'm in 24Hz cinema mode, and when I go into the menus there are only 24p modes available, and the 1080 10-bit mode does not have VFR as a valid option - only the 8-bit modes allow it. IIRC I had that mode on 25Hz PAL but not in 24Hz mode. I see it's available in PAL or NTSC modes. Do I have to change system frequency and restart the camera? Or should I be in NTSC mode and be shooting 23.98fps to go with my 24p from my other cameras?? Won't the sync between 24p and 23.98 fail every two seconds or so? If I have to swap between system frequencies that's a PITA if I want to just grab a quick shot.. (and by quick, I mean 5 seconds to change modes rather than 50 seconds). These camera modes and frame rates are doing my head in.

-

Blackmagic casually announces 12K URSA Mini Pro Camera

kye replied to Andrew - EOSHD's topic in Cameras

Interesting take from Alex Jordan about the 12K and what it means for the industry. TL;DR: BM made a 12K camera that was editable on a laptop because they make both the cameras and the NLE, so can create their own file formats. No-one else has both, so this is BM's strategic advantage over all other manufacturers, who have to co-operate in order to innovate like this.

-

Yeah, the sun was in and out of clouds during the tree test, not the best but it is what it is. I'm kind of having a change of heart with side-by-side tests too. If you can only tell the difference between two modes in a side-by-side test but can't take a collection of shots from one and a different collection of other shots from the other and tell the difference then will you really notice if a film is shot on one vs the other? I don't think so. No worries about 24p. I've now completely changed over. My iPhone only had 24p and 30p, like the PAL countries don't exist. I thought that my Sony X3000 only had 25p, but it turns out that if you set it to PAL then it only has 25p, but if you set it to NTSC then it has 24p and 30p. I guess that cinema is only done by NTSC countries..... Maybe I should buy a bunch of world maps and mail them to every company in Silicon Valley, they seem to be unaware there is a world out here. I'm also not seeing much difference between the different modes, even with the ProRes export. Maybe I'm blind, but there it is. On the back of this I'm tempted to use the 3.3K mode as it's a sweet spot in the middle of the highest bit-rate (for overall image quality), the least resolution (for processing strain on pushing pixels around), and ALL-I for being able to be usable in post. The effective bit-rate is only 300Mbps because a 16:9 crop of a 4:3 frame only includes 75% of the total pixels. If I used it then I'd have 3.3K timelines for lower CPU/GPU loads in editing and just export at 4K for upload to YT, which would slightly soften the resolution like the Alexa does when going from its 3.2K sensor to 4K files.
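The 75% / 300Mbps figure can be checked with a few lines of Python. This is only a sketch: it assumes the encoder spreads its bits evenly across the 4:3 frame, which a real codec won't do exactly, and the function names are invented for illustration.

```python
from fractions import Fraction


def crop_fraction(source_aspect, target_aspect):
    """Fraction of the source pixels kept when cropping to another aspect ratio.

    Cropping only ever trims one dimension, so the kept fraction is the
    ratio of the narrower aspect to the wider one.
    """
    lo, hi = sorted([Fraction(source_aspect), Fraction(target_aspect)])
    return lo / hi


def effective_mbps(bitrate_mbps, source_aspect, target_aspect):
    """Bitrate 'spent' on the cropped area, assuming evenly spread bits."""
    return bitrate_mbps * crop_fraction(source_aspect, target_aspect)


kept = crop_fraction(Fraction(4, 3), Fraction(16, 9))
print(f"pixels kept by a 16:9 crop of 4:3: {float(kept):.0%}")
print(f"effective bitrate of the 400Mbps mode: "
      f"{float(effective_mbps(400, Fraction(4, 3), Fraction(16, 9))):.0f}Mbps")
```

That still leaves the cropped 3.3K mode with more bits per frame than the 200Mbps 1080p mode, under the same even-spread assumption.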

-

Yes, not old by artist standards, but old digitally. I guess in the context of the DSLR revolution, and perhaps now the mirrorless cinema camera revolution?, we can 'go back' in some regards but stay modern in others. I'm not really talking about anyone going back to a huge ENG camera with belt-mounted battery pack and Betamax to get their 240p fix. Do you have links to Herzog's comments? I searched youtube a bit and couldn't find an account or which videos he might have been commenting on. It would be interesting to read them - he's not short of an opinion that's for sure, but they're often piercing and highly relevant.

-

GH5 modes test here:

-

GH5 stress-test of the various modes is here: The case for going back to 1080 is strong.

-

Ok, here's the test. Video tests the best 24p GH5 modes.

Modes:
1080p 422 10-bit ALL-I 200Mbps h264
3.3K 422 10-bit ALL-I 400Mbps h264 (4:3 cropped)
C4K 422 10-bit Long-GOP 150Mbps h264
C4K 422 10-bit ALL-I 400Mbps h264
5K 420 10-bit Long-GOP 200Mbps h265 (4:3 cropped)

Tests:
Motion stress-test x 2 (beach and tree)
Motion cadence test
Skintone and colour density test

All shots in HLG profile. The export file (that I uploaded to YT) is here: https://www.sugarsync.com/pf/D8480669_08693060_8967657 It's C2K ProRes (LT I think) at ~87Mbps and 1.17GB. I can export a C4K version if there is enough interest. I went with C2K as people make feature films in 1080p ProRes HQ, so if we can't tell the difference between GH5 modes in ProRes LT then what are we even talking about? 🙂

An interesting observation from editing this was that during rendering, the 1080p mode was fastest (at around 30fps), and the 3.3K and 4K ALL-I modes were next at around 18fps, followed by the 4K Long-GOP around 13-15, then the 5K h265 at about 5fps. I don't have hardware h265 decoding so that probably explains the 5K mode, but why is the Long-GOP codec slower when it's a straight sequential export? If I was playing the file backwards or seeking then I understand that ALL-I has the advantage, but in a straight export I don't understand why it would be slower. Regardless, it was something I noticed. Also, in editing, the 4K Long-GOP files aren't that nice to work with, but the ALL-I files are great, playing forwards and backwards basically without hesitation, on my 2016 Dual-Core MBP laptop. Something to consider.
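To put those render speeds in perspective, here is a rough Python sketch that converts them into multiples of real time on a 24p timeline. The 14fps value for 4K Long-GOP is an assumed midpoint of the 13-15 range quoted above, and the helper names are illustrative only.

```python
TIMELINE_FPS = 24  # the tests above are all 24p

# Approximate render speeds quoted in the post, in frames per second.
# 14 is an assumed midpoint of the "13-15" range for 4K Long-GOP.
RENDER_FPS = {
    "1080p ALL-I": 30,
    "3.3K / C4K ALL-I": 18,
    "C4K Long-GOP": 14,
    "5K h265": 5,
}


def realtime_factor(render_fps, timeline_fps=TIMELINE_FPS):
    """Render speed as a multiple of real time (1.0 means real time)."""
    return render_fps / timeline_fps


def render_minutes(timeline_minutes, render_fps, timeline_fps=TIMELINE_FPS):
    """Minutes needed to render a timeline of the given length."""
    return timeline_minutes * timeline_fps / render_fps


for mode, fps in RENDER_FPS.items():
    print(f"{mode}: {realtime_factor(fps):.2f}x real time, "
          f"{render_minutes(10, fps):.1f} min per 10 min of timeline")
```

On these numbers only the 1080p mode renders faster than real time on this laptop, and the 5K h265 mode takes nearly five times the running length.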

-

I do feel a bit bad about it because I got it and haven't shot with it yet. In a sense it's all dressed up with nowhere to go! It's my first true cine camera, so that's taking some adjustment too 🙂 The goal is actually to understand it. To understand its colour science, to understand its resolution, to understand its motion cadence. Many would be amazed at some of the things that I've found when replicating it. What it does to skin tones for example. It pushes around the colour space in ways that shouldn't even work, let alone actually look good. The BMCC was compared very favourably to the Alexa (as in, it held up!) and there's no way I'm getting an Alexa to play with, so this is a kind of close-second for a nowhere-near price. BM know what they're doing with colour, and comparing the colour charts, and some other things I've done that I don't think I've shared yet, there is some crazy sh*t going on in there. Once I have learned its secrets, which it's not giving up so easily BTW even after I've matched it almost exactly a couple of times, then I can integrate them into my workflow if I like, so it adds another set of techniques that I can apply if I want. I typically shoot projects on my phone, an action camera, and the GH5, and I have to match them all, so the more I know about colour the better off I am. In terms of this thread, I "went back" to use the Micro as a reference for colour science and motion cadence, because it delivers colour a lot less accurately than modern cameras. Sony wins the accuracy race for colour science, so we should have learned by now that accuracy isn't automatically better.

-

I haven't done that yet, but it's on the list. I haven't even really shot anything with it yet, except some colour tests. Disappointing I know, but covid cancelled all my travel plans, my work went through the roof, and the family wore PJs all day and didn't want to be filmed lol. I was contemplating the GH5 to BMMCC conversion project and was thinking that a more practical pinnacle would be to shoot a bunch of random things with each camera (not A/B of the same thing, just random shots) and grade the GH5 with the BMMCC characteristics and just put up a video saying shot A, shot B, shot C, and then put out a poll asking if people could tell which was which, with options as Definitely BMMCC, maybe BMMCC, not sure, maybe GH5 and definitely GH5, and see if people could pick the shots easily or not. If they couldn't I'd declare victory. The rationale is that when we watch something we're not given another version of the same thing shot on some other camera, so side-by-side tests aren't really needed for a successful imitation, only for a perfect replication.

-

How many times do you have to stop a shoot in order to change lenses because of the 4K60 crop? How many times are you shooting 120p and find that the 1080p image quality is an issue in post? How many times have you picked up and turned on your camera, or been looking at the files in your NLE and thought "gee, I really wish this brand had more market share"? I wouldn't be so sure that they've maxed it out. The GH5 received incremental updates for years afterwards, and they made the original firmware look puny in comparison. I look at the current crop of cameras and don't feel at all left behind - 4K 400Mbps 422 10-bit ALL-I internal h264 without any crop compared to the 24p modes isn't that much lower than the current offerings. In fact, the GH5 wasn't even maxed out. There was talk within Panasonic of a firmware update to have a higher resolution than 5K (maybe 8K?) coming out of the camera - I saw this in an excerpt of an interview with a Panasonic staff member, but had a quick look and couldn't find it again just now. I suspect it would have been de-squeezing the anamorphic modes in-camera. It has the capability to write 400Mbps to the card, and it has h265 capability, so an 8K 2.35:1 or 2.66:1 400Mbps h265 mode may very well have been possible. In 2017.
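The 2.66:1 figure falls straight out of the de-squeeze arithmetic. A small Python sketch, assuming a 4:3 anamorphic recording mode and a 2x squeeze lens (the squeeze factor isn't stated in the post, so treat it as an assumption); function names are illustrative.

```python
from fractions import Fraction


def desqueezed_aspect(recorded_aspect, squeeze):
    """Aspect ratio after stretching the recorded frame horizontally."""
    return Fraction(recorded_aspect) * squeeze


def width_fraction_kept(full_aspect, delivery_aspect):
    """Share of the de-squeezed width kept when side-cropping to a narrower ratio."""
    return Fraction(delivery_aspect) / Fraction(full_aspect)


# Assumption: 4:3 anamorphic mode with a 2x squeeze lens.
wide = desqueezed_aspect(Fraction(4, 3), 2)
print(f"de-squeezed aspect: {float(wide):.3f}:1")

# Cropping the sides down to 2.35:1 keeps most of the de-squeezed width.
kept = width_fraction_kept(wide, Fraction(235, 100))
print(f"width kept for 2.35:1: {float(kept):.1%}")
```

So under those assumptions a 2.66:1 output uses the whole de-squeezed frame, while 2.35:1 would be a side crop of it.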

-

This conversation seems to have wandered into OLD = BAD = DISTORTED and NEW = GOOD = PRISTINE and I think that in many ways this is fundamentally wrong. Yes, a 1080p sensor creates a lower resolution image than reality, which has infinite resolution, but with the greater resolution cameras there are often sacrifices made along the way. Motion Cadence is one of the things that I am thinking of. Items such as a global shutter don't automatically come with a higher resolution camera. In this sense, the more modern stuff is worse. I'm perhaps one of the biggest fans of 'doing it in post' on these forums, but I'm yet to read anywhere about how you can improve motion cadence in post. As someone who chose very early on to get a neutral capture and process heavily in post I've been consuming every camera review, grading breakdown, lens review, technical white paper, codec quality analysis, etc with the single question in mind of "how do I do this in post?" and I have found that most of the time the various qualities of image that are being talked about are degradations of one kind or other, but not all of them. I discovered with my Canon 700D that you can't remove the Canon Cripple Hammer in post, and I 'went 4K'. The XC10 was 4K and high-bitrate (and had those Canon colours!!) but I discovered that you can't simulate a larger aperture (convincingly) in post (yet), you also can't simulate a higher bit-depth in post, and you can't simulate great low-light in post either. I bought the GH5 but had to go to Voigtlander f0.95 lenses to get the low-light and DoF flexibility that I wanted, but even then the lenses are a little soft wide-open and while you can sharpen them up a little in post to do a quick-and-dirty cover up for a couple of shots, you can't simulate higher resolution or higher contrast lenses in post either. Don't succumb to oversimplification - newer is better in ways that sell cameras but not in every way that matters to the connoisseurs amongst us.

-

In a sense yes, and in a sense no. Things like the OG Pocket probably don't make sense commercially any more, but @Geoff CB gets value from his F3 because it's more professional in form-factor than most alternatives at that price point, rather than more finicky in form factor. Yes, I agree about that, although many would say that the motion cadence of some of the older cameras bests some of the new cameras. Certainly shooting RAW on the OG Pocket is nicer than most h264 codecs, and would be more gradable, so if we take character to mean 'distortions' then actually compressed codecs lose out and RAW is actually less distorted as it's got higher bit-depth and less/no compression distortion.

-

I just watched this video by Of Two Lands, where he talks about why he bought an OG BMPCC / P2K in 2020. Some of his reasons were sentimental, as he owned one early in his career and it worked well for him, but he also mentioned that a lot of people are going back to shooting S16, or are getting sick of a super-clear image, or don't want the 'crutches' of AF or slow-motion and actually want the challenges and limitations of an older fully-manual camera. We also had the P4K firmware update that added a S16 crop mode, the Ursa 12K camera has a S16 crop mode, and @JimJones argued that the new Ursa might attract purchasers purely for its S16 mode: @crevice argued that the BMMCC still matters, and received much agreement. When the P4K first came out there was a lot of anticipation of getting a 4K BMPCC but then disappointment that the image wasn't the same, and that it looked too modern and too clean and too high resolution. After all this I wondered what it was about the image from these cameras that attracted so much praise. I even heard that the BMMCC was a "baby Alexa" due partially to its colour science, but also in other ways too, such as motion cadence, resolution and codec support. I bought one and tried emulating its image with my GH5 (a project which is still ongoing): I had a theory that this interest in lower-sharpness (and to some extent low resolution) cinema was a nostalgic thing amongst those who grew up with cinema being celluloid rather than digital acquisition and distribution, but the "cinematic / film / organic / LUT / hipster" crowd is getting younger and more interested rather than less, so I wonder - is this a thing? Are people feeling a pull to go back? ....to go back to S16? ....to go back to MF? ....to shooting slower and more deliberately?

-

Why is no-one talking about the most scandalous part of this leaked image.... that the screen says SONY on it, but it's only the right way up when it's in selfie mode. The new A7SIII. Vlogging camera to the stars.