-

Posts

8,047 -

Everything posted by kye

-

This is interesting / surprising. Do you mean that the Colour Space Transform is wrong? I have found with the "cheaper" cameras (XC10, GH5) that it's unclear which colour profiles they align with, and there are no clear answers online. I just assumed that the C-Log in the XC10 would be the first version and that the colour space would be Canon Cinema Gamut, but I guess not. It was billed as being completely compatible with footage from the other cine line cameras, so the two could be processed together, although @jgharding mentioned above that this wasn't the case in reality.

I'm not against grading it manually, so that's not an issue. I've been wondering what the best approach is to grading flat footage. I've got about half-a-dozen projects in post at the moment with XC10 "C-Log", GH5 "HLG", GoPro Protune, and Sony X3000 "natural", which are all flat profiles with no direct support in CST / RCM / ACES.

I was thinking one of my next tests would be to grab some shots and grade them using:

- LGG
- Contrast/Pivot
- Curves
- CST to rec709 then LGG
- CST to rec709 then Contrast/Pivot
- CST to rec709 then Curves
- CST to Log-C then LGG
- CST to Log-C then Contrast/Pivot
- CST to Log-C then Curves
- etc?

to try and get a sense of how each one handles contrast and the various curves. I was hoping to get a feel for each of them to understand which I liked and when I might use each one.

I suspect that curves will be the one, as I will be able to compress/expand various regions of the luma range depending on the individual shot. For example, I might have two shots: one where there is information in the scene across the luma range and I would only want gentle manipulations, and a second where the foreground is all below 60IRE and the sky is between 90-100IRE, in which case I might want to eliminate the 60-90IRE range and make the most of the limited gamma of rec709, bringing up the foreground without crushing the sky.

What's that saying... "I was put on earth to accomplish a number of things - I'm so far behind I will never die". I have sooooo much work to do.

-

It's a bit more complicated actually, which tripped me up. You're right that it's "-intra" for h264, but the h265 conversion doesn't accept that - it just ignores it, thus my question. You want "-x265-params keyint=1" for the h265 conversion.

For example:

You can tell it's working because when you run it, it shows:

The comparison command to render the SSIM is:

I didn't look at this specifically (and now I don't have to since you did!) but it makes sense. In terms of an acquisition format, the question of prores vs h264/5 doesn't really come into question that much if your h264 option is below ~150Mbps, which is where I went down to. Prores Proxy is 141Mbps and people don't really talk about using that in-camera, so in a sense that's below the conversation about acquisition.

It should scale perfectly, if you take the approach of bits-per-pixel. Prores bitrates for UHD are exactly 4x that for FHD. Thus, I would assume that you can simply translate the equivalences. I might do a few FHD tests just to check that logic, but I can't see why it wouldn't be true.

I have two remaining questions:

- How do I get Resolve to export better h264, for upload to YT?
- Is Resolve's Prores export that good? The h264 outputs didn't seem to be anywhere near ffmpeg, so maybe the Prores export is weak too?

I'll have to return to this and investigate further. I wonder if there's a way I can configure it to be better than 420 and 8-bit too.
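
For anyone wanting to try the all-intra h265 encode and the SSIM comparison, here is a rough Python sketch that just builds the two ffmpeg command lines. It is not necessarily the exact command used in these tests: the file names and the 250M bitrate are placeholders, and it assumes an ffmpeg build with a 10-bit capable libx265. The only part taken from the post is "-x265-params keyint=1".

```python
import shlex


def hevc_all_intra_cmd(src, dst, bitrate="250M"):
    """Build an ffmpeg command for a 4:2:2 10-bit all-intra h265 encode.

    keyint=1 tells x265 to make every frame a keyframe (a GOP of one frame).
    The bitrate default is a placeholder, not a recommendation.
    """
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx265",
        "-pix_fmt", "yuv422p10le",   # 4:2:2 chroma, 10-bit
        "-b:v", bitrate,
        "-x265-params", "keyint=1",  # all-intra
        dst,
    ]


def ssim_cmd(encoded, reference):
    """Build an ffmpeg command that prints the SSIM of encoded vs reference."""
    return [
        "ffmpeg", "-i", encoded, "-i", reference,
        "-lavfi", "ssim",            # ssim filter compares the two inputs
        "-f", "null", "-",           # discard the output, keep the log
    ]


if __name__ == "__main__":
    # Hypothetical file names, purely for illustration.
    print(shlex.join(hevc_all_intra_cmd("reference.mov", "h265_intra.mov")))
    print(shlex.join(ssim_cmd("h265_intra.mov", "reference.mov")))
```

Printing the commands rather than running them makes it easy to eyeball the arguments before committing to a long UHD render.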

-

Unconventional choice, but my most used lights are:

1) The sun
2) Halogen work light from hardware store (for lens tests)
3) I own an Aputure AL-M9 which is super handy too

Mostly it's available lighting for me though, so the sun but also practicals at night.

-

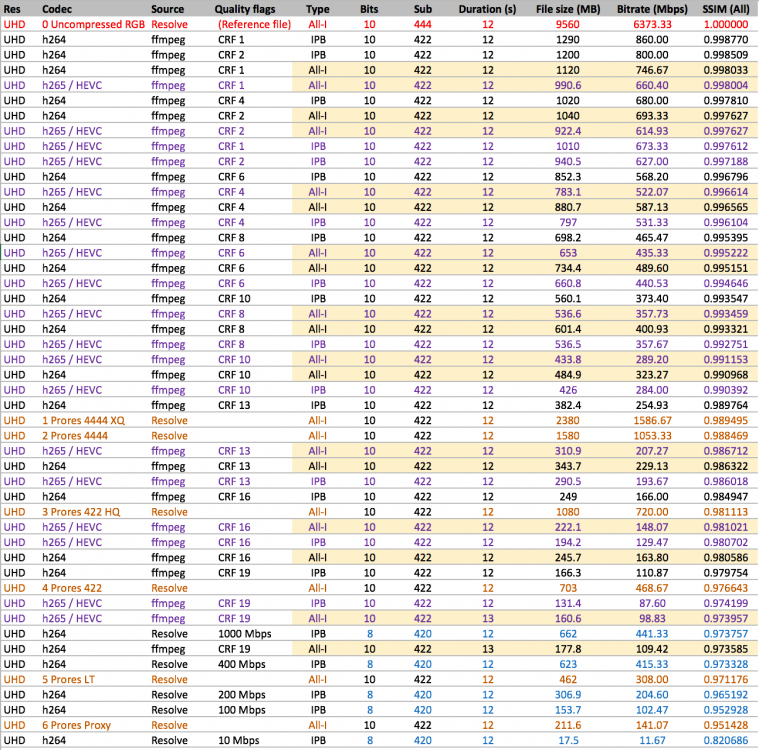

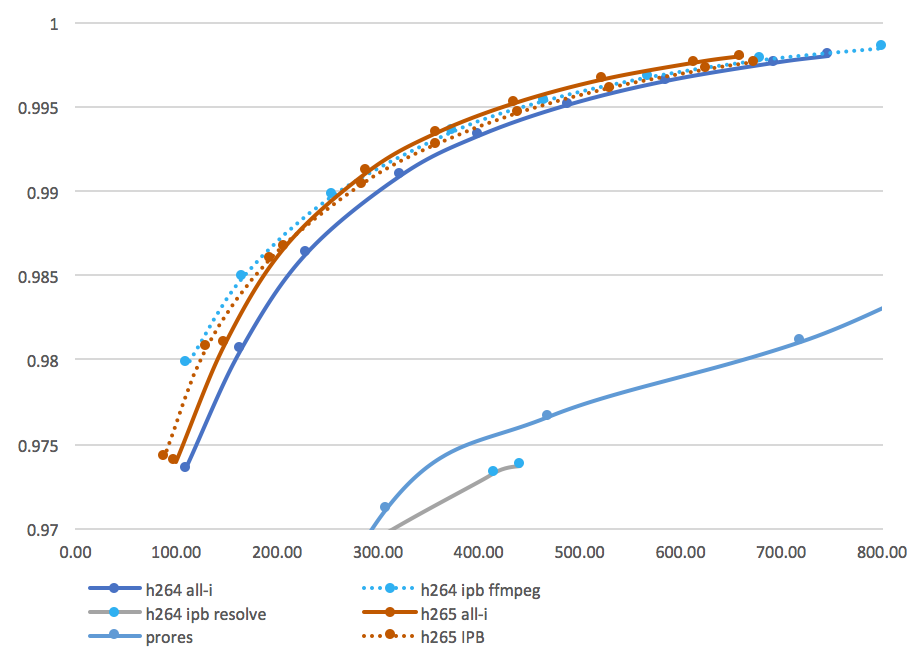

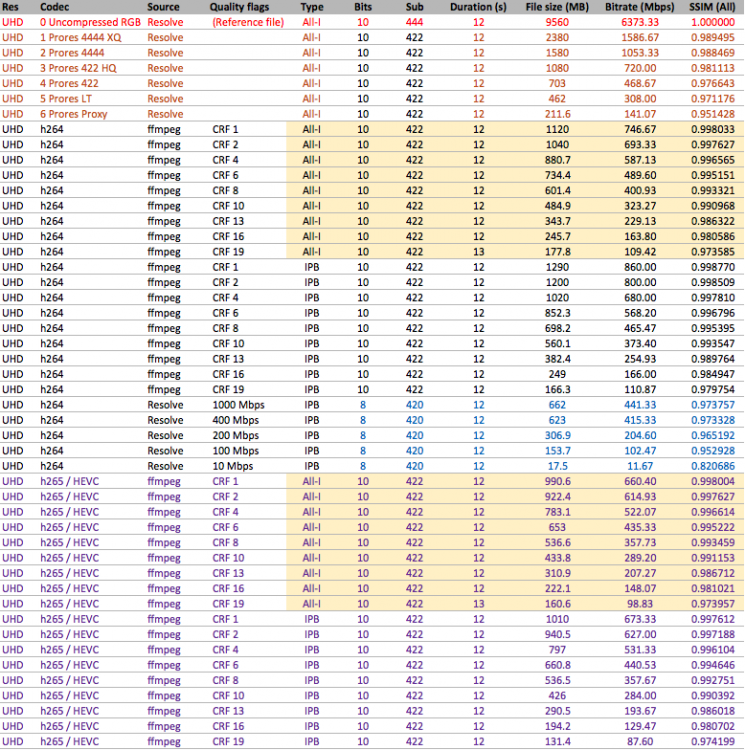

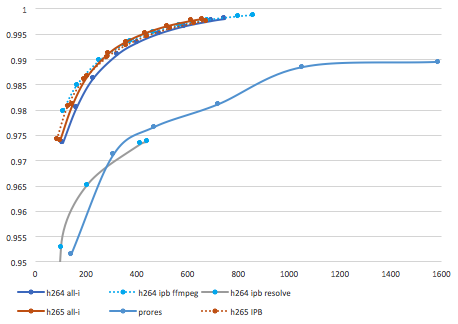

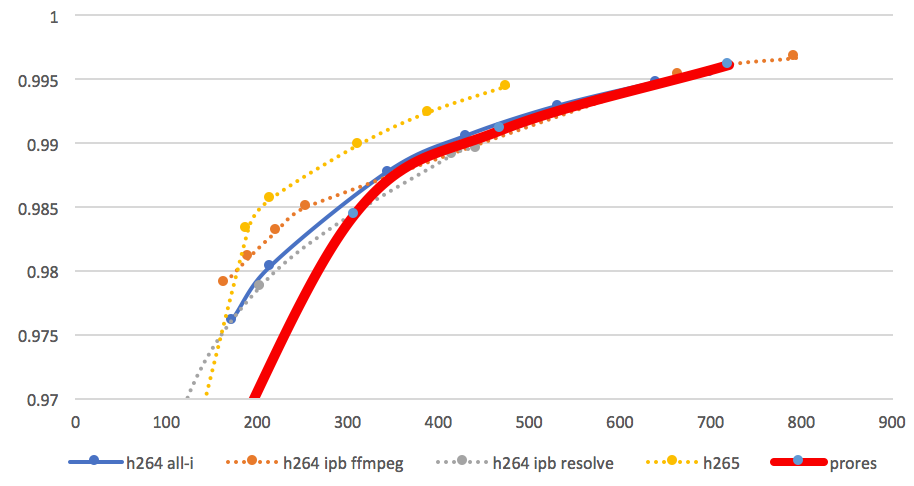

Ok, here's the second attempt..... and graphed:

So, it looks like:

- UHD Prores 422 HQ from Resolve can be matched in quality by ffmpeg h264 422 10-bit ALL-I at around 170Mbps, and a similar bitrate for h265, which is about a 4X reduction in file size
- UHD Prores 4444 and 4444 XQ from Resolve can be matched in quality by ffmpeg h264 422 10-bit ALL-I at around 300Mbps, and around 250Mbps for h265, which is about a 3-4X reduction in file size
- There doesn't appear to be a huge difference between h264 and h265 all-i efficiencies, maybe only a 10-15% reduction

Happy to answer any questions and to have the results challenged. Let's hope I didn't stuff anything up this time 🙂
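
As a sanity check on the "about 4X" and "3-4X" figures, here is a small Python sketch of the arithmetic. It assumes the Prores numbers quoted elsewhere in these posts (176Mbps for 1080p Prores HQ, 1061Mbps for UHD Prores 4444) and the assumption that UHD is 4x the FHD bitrate; none of this is a new measurement.

```python
# Prores bitrates quoted elsewhere in these posts (Mbps).
PRORES_HQ_1080P_MBPS = 176
PRORES_4444_UHD_MBPS = 1061

# Assumption: UHD Prores bitrate is 4x the FHD bitrate (same bits per pixel).
PRORES_HQ_UHD_MBPS = PRORES_HQ_1080P_MBPS * 4


def reduction(prores_mbps, h26x_mbps):
    """How many times smaller the h26x file is at matched quality."""
    return prores_mbps / h26x_mbps


if __name__ == "__main__":
    print(f"HQ vs 170Mbps h264:   {reduction(PRORES_HQ_UHD_MBPS, 170):.1f}x")
    print(f"4444 vs 300Mbps h264: {reduction(PRORES_4444_UHD_MBPS, 300):.1f}x")
    print(f"4444 vs 250Mbps h265: {reduction(PRORES_4444_UHD_MBPS, 250):.1f}x")
```

The 4444 XQ bitrate isn't quoted anywhere in these posts, so it's left out rather than guessed.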

-

Thank you! That worked like a charm - unlike the h265 intra encoding, which worked exactly the same as the non-intra encoding. The SSIM comparison between an intra encode and a non-intra encode revealed an SSIM of 1.000000. 😂😂😂😂

Now to work out how to encode 10-bit intra. You already need to have a different binary for h265, who knows what support for intra it has, or doesn't have. The commentary I read was that the h264 and h265 modules have the same arguments, but obviously there are differences.

I had previously considered buying a Mac Mini as a desktop plus a low-spec laptop, and the T2 chip caught my eye in that scenario. I have since realised that although the 13" MBP I'm buying is only quad core and the Mac Mini is 6-core, the Mini's cores are slower and are only 8th generation (IIRC), whereas the 13" MBP's are 10th generation, and that basically makes up the difference.

I'll be getting the T2 chip regardless, and combined with my decision to go back to 1080p and use the 200Mbps ALL-I mode on the GH5 it should cut like butter. The question then becomes how it handles the footage from the Sony X3000, which is 100Mbps h264 IPB, and from my phone, which is h265. I was wondering if I should render those to Prores HQ 1080 and then grade from there, or just stick with the original files. I guess the T2 chip might mean I keep the originals of those files. Interesting times.

-

Nice colours! It needs more saturation though - see below reference image!

-

No idea. I guess bigger is better for low-light, but bigger requires bigger optics, so there would be a limit. MFT seems like it might be around that size, but who knows.

-

I don't know about you, but I'm increasingly finding that feature to rather miss the mark. Not only has math stopped being my friend ("hi great to see you, wow, when was the last time I was in town, it was..." "STOP! don't do it!" "... 22 years ago at that bar!") but Facebook now intermittently:

- Shows me pictures of me with my ex
- Tells me that it's my mum's birthday, reminding me that she's dead
- or reminds me of friendships lost, etc etc...

There's a quote that I found particularly amusing.. in about 60 years there will be 800 million dead people on FB.

Agreed. We're nowhere near the end of this thing. We'll know it's actually starting to get desperate when the bottom falls out of the real-estate market. I don't know about you in NZ, but here in AU the market doesn't tend to drop, it just stagnates. That's partly because people just refuse to sell and take the loss, and partly the constant bullish marketing by real estate folks, but it does go down when people are forced to sell because they can't cut elsewhere in their living expenses to make up the difference.

-

Screw it. Goodbye 4K, hello 1080p.

Why? I can either:

- Spend hundreds of dollars replacing my 2x256GB SD cards with 2x512GB UHS-II SD cards (to retain similar recording times)
- Shoot 400Mbps 4K 16:9 or 3.3K 4:3 10-bit 422 ALL-I

Or:

- Spend that money instead on upgrading the SSD in the MBP upgrade I'm about to do, so I can edit files SOOC
- Shoot 200Mbps 1080p 10-bit 422 ALL-I
- Completely eliminate the proxy workflow I've had to use, with all the rendering and re-conforming timelines between proxy and original SOOC footage
- Colour grade on 25% as many pixels as UHD, giving me either 4X fps in playback, or 4X processing ability for the same performance
- Use Resolve's Super Scale feature when rendering for 4K YT uploads (this looks pretty good after all......)
- Use the digital zoom function of the GH5 instead of cropping in post (this is for when I don't have a long enough lens on the camera - the digital zoom looks like it applies to the 5K sensor readout, so isn't a crop into the 1080p downscaled image, but an adjustment of the downscale that is already occurring)

All else being equal, h264 ALL-I codecs are slightly better than Prores. Prores HQ for 1080p is 176Mbps and the best 1080p mode on the GH5 is 200Mbps. Both are 10-bit, 422, and ALL-I, so all else is equal. So, if 1080p Prores HQ is good enough for feature films then that mode should be good enough for me, where I'm only publishing to YT or watching locally on TVs.

The last footage I was involved with shooting that got projected came from a PD150, so the big screen doesn't factor in my world that much lol.
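
The card-size and pixel-count trade-off above comes down to simple arithmetic, sketched here in Python. It assumes decimal gigabytes and ignores audio and filesystem overhead, so the minutes are ballpark only.

```python
def recording_minutes(card_gb, bitrate_mbps):
    """Approximate minutes of video that fit on a card.

    Assumes decimal units (1 GB = 8000 megabits) and ignores audio
    and filesystem overhead.
    """
    card_megabits = card_gb * 1000 * 8
    return card_megabits / bitrate_mbps / 60


UHD_PIXELS = 3840 * 2160
FHD_PIXELS = 1920 * 1080

if __name__ == "__main__":
    print(f"256GB at 200Mbps: {recording_minutes(256, 200):.0f} min")
    print(f"256GB at 400Mbps: {recording_minutes(256, 400):.0f} min")
    print(f"512GB at 400Mbps: {recording_minutes(512, 400):.0f} min")
    print(f"1080p pixels as a fraction of UHD: {FHD_PIXELS / UHD_PIXELS:.2f}")
```

Doubling the bitrate halves the recording time per card, which is why staying at 200Mbps on the existing 256GB cards gives the same recording time as 400Mbps on 512GB cards.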

-

Haters gonna hate.... That's top 5% of the most sensible things ever said on a camera forum. Nice to see a departure from the "based on one video I'm going to buy it / I'll never buy it" all-or-nothing sentiments 🙂

-

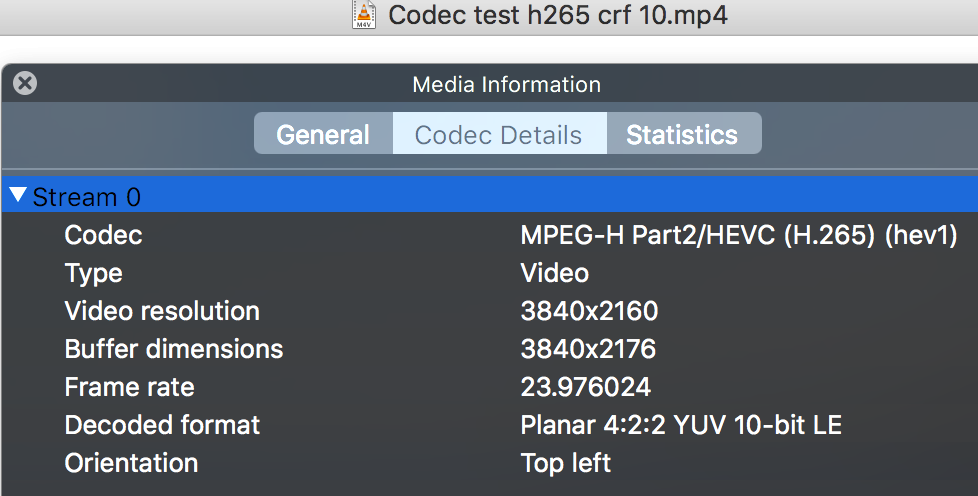

Does anyone know how to confirm that my h265 files are ALL-I?

On the h264 files the ALL-I ones have this from ffprobe:

"Stream #0:0(und): Video: h264 (High 4:2:2 Intra) (avc1 / 0x31637661), yuv422p10le, 3840x2160 [SAR 1:1 DAR 16:9], 745951 kb/s, 23.98 fps, 23.98 tbr, 24k tbn, 47.95 tbc (default)"

and the h264 IPB have:

"Stream #0:0(und): Video: h264 (High 4:2:2) (avc1 / 0x31637661), yuv422p10le, 3840x2160 [SAR 1:1 DAR 16:9], 860083 kb/s, 23.98 fps, 23.98 tbr, 24k tbn, 47.95 tbc (default)"

but the h265 files I rendered with -intra as an argument have this:

"Stream #0:0(und): Video: hevc (Rext) (hev1 / 0x31766568), yuv422p10le(tv, progressive), 3840x2160 [SAR 1:1 DAR 16:9], 350312 kb/s, 23.98 fps, 23.98 tbr, 24k tbn, 23.98 tbc (default)"

which seems to suggest they're progressive, but I definitely used the -intra flag. I even converted another one and changed the order of the arguments in case that was stuffing things up.
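
One way to check this directly is to ask ffprobe for the picture type of each frame and confirm they are all "I". As far as I know, the "progressive" in that stream line describes the scan type (as opposed to interlaced) and says nothing about GOP structure, so it can't answer the question either way. The Python sketch below builds the ffprobe command and parses its output; the helper names and the 120-packet limit are my own choices.

```python
import subprocess


def frame_types_cmd(path, max_packets=120):
    """Build an ffprobe command that prints one picture type per frame."""
    return [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",
        "-read_intervals", f"%+#{max_packets}",  # only read the first packets
        "-show_entries", "frame=pict_type",
        "-of", "csv=p=0",
        path,
    ]


def is_all_intra(ffprobe_output):
    """True if every reported frame is an I-frame (and at least one exists)."""
    types = [
        line.strip().rstrip(",")  # some builds add a trailing comma
        for line in ffprobe_output.splitlines()
        if line.strip()
    ]
    return bool(types) and all(t == "I" for t in types)


def check_file(path):
    """Run ffprobe on a real file; needs ffprobe on the PATH."""
    result = subprocess.run(
        frame_types_cmd(path), capture_output=True, text=True, check=True
    )
    return is_all_intra(result.stdout)
```

An IPB file will show a mix of I, P and B lines, so even the first couple of seconds of frames is usually enough to tell the two apart.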

-

Voted none because it depends on what you're doing. Buying new equipment should be a last resort.... and if you're asking which is going to be a better upgrade for you, then you don't know enough about what you're doing to get much value for money from whatever you buy anyway. So, my advice is to spend that money on the people closest to you in your life and spend a year shooting real projects without buying anything new, making a list of all the issues, challenges, and compromises you ran into along the way. Then in a year's time you can go spend money to solve whatever problems you have that weren't solved in that year by learning stuff for free and practicing with your equipment.

-

I'd say it's on its way. IIRC the GH5 didn't get the ALL-I or 400Mbps codecs until firmware updates, so it might be on the cards for this one too. As an MFT user it doesn't really impact me, my GH5 shoots the same modes today as it did before this was leaked. But otherwise, this is great stuff. Panasonic pushing the envelope benefits us all in the long-term.

-

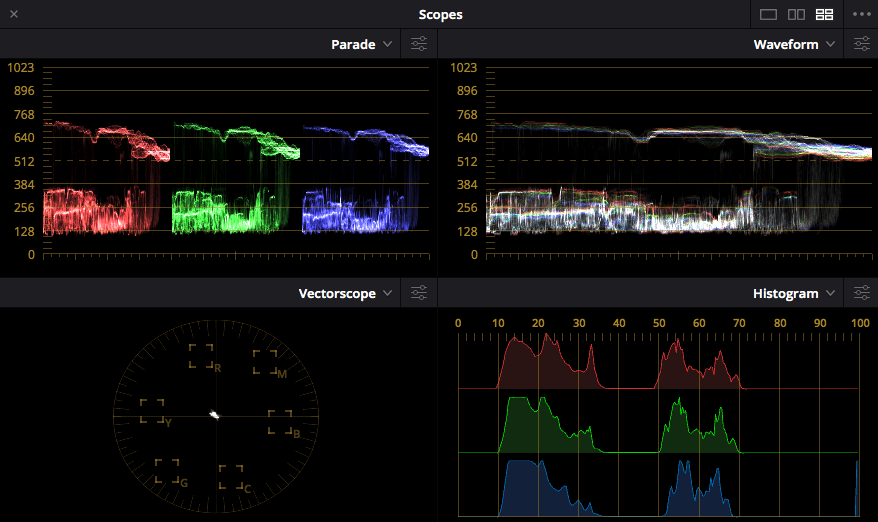

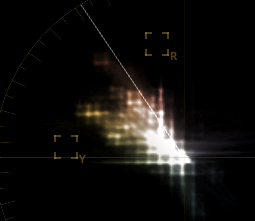

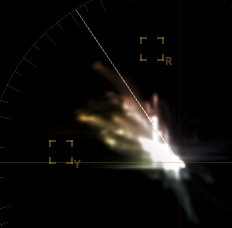

"Tag Team, back again
Check it to wreck it, let's begin"

Just as predicted by Tag Team in their 1993 epic hit "Whoomp! There it is!" about recording in 8-bit with LOG profiles, I'm back with the XC10 footage, getting wrecked once more.

Take this delightful image of a cluster of lovely buildings on the Cinque Terre in Italy:

As you can see, the combination of an overcast day and the C-Log profile makes for a pretty flat image:

So, we adjust WB, levels and some saturation, and get this lovely starting point:

Problem is, look at the noise! Perhaps our 8-bit file doesn't do a good job with a log profile - let's look at the vectorscope:

Yes. This is real. We talk about colour density and thickness of image.... well, this isn't it.

Luckily, Resolve is the love child of a Swiss Army Knife, a tank, and Monet, so with a little Temporal NR and a touch of sharpening, we can pull this phoenix out of the ashes. Now look:

Obviously I have more work to do on this shot - a lot in fact - but I'm learning. Grading with this camera is a learning experience, I just wish it wasn't like being pushed into the deep end at gun-point.

-

You're right, assuming you're talking about using these things as intermediaries, but if you've got a camera that doesn't have Prores (like most prosumer cameras) then you don't have that choice. OR, you do have that choice, but the option involves adding an external recorder for many hundreds of dollars, plus all the extra size, weight, and complexity of additional battery types, chargers, etc.

With things like the P4K/P6K and others making Prores more affordable now, I suspect that many will be tempted towards one camera or other because "Prores is a professional format and h26x is a consumer / delivery format" but I wanted to test if that really did matter. After all, the only comparisons I could find were that h265 was 100x better than Prores 4444, which is obviously ridiculous.

I'm about to update my ageing MBP and am looking forward to the better h265 support that will come with a new OSX and latest Resolve (I can't upgrade Resolve until I update OSX and there's a limit to how new your OSX version can be on a given hardware setup, so I'm kind of stuck in the mud until I upgrade my hardware).

I just uploaded a test file to YT right now. H264 10-bit All-I. Worked just fine. ffprobe reports:

I've found YT to be pretty good with input file formats.

Absolutely. I wouldn't advocate for h264/h265 as intermediaries at all. DON'T DO IT KIDS!! 😂😂😂

No worries. Personally, I find that doing a few hours / days of testing is far more effective than doing hours / days of googling (which often does not lead to the truth) or, worse still, hanging out online and hearing from people who just repeat misinformation, so that you end up making a bad decision that either wastes many hundreds / thousands of dollars or means you have to deal with lower quality footage for months / years until you realise that you were misled. I've wasted thousands on equipment I don't use and also spent years shooting with bad settings or flat-out with the wrong equipment because of misinformation gathered online - even from EOSHD, although it's definitely been better than average for camera forums.

I also find that posting the results forces me to do everything properly as I will be explaining it, and it's a bit embarrassing if you get something wrong, so that's motivating too! Of course, I stuffed up my reference file above, and am re-rendering and re-evaluating all the modes again... such is life and learning 🙂

I'd really like to be doing perceptual quality testing, as we look at our footage with our eyes and brain, not our statistical analysis software, but typically this involves having massive double-blind tests, which are beyond my ability to perform, so I lean on metrics like SSIM, which are reliable and repeatable and comparable. Does Prores at a given SSIM have a different feel than h264 at the same SSIM - you'd imagine so. At this point I'm still very satisfied with my testing though, as at least I have some idea now of what is what.

I would imagine that cameras would increasingly offer h265 10-bit ALL-I codecs, and I think that's a good move. h265 is better than h264 (more efficient, giving either better quality at identical bitrates or same quality at lower bitrates) and ALL-I formats render movement nicer and are dramatically easier to deal with in post.

One thing I am very conscious of is that you can go two routes for your workflow.

The first is to render high quality intermediaries and abandon your SOOC files. For this you'll typically choose a friendly ALL-I codec, which trades decoding load for high data volumes, requiring an investment in large and fast storage. You will edit and grade and render from these intermediaries.

The second is to render low quality intermediaries, which you can use for editing, but then revert back to the SOOC files for grading and rendering. This is my workflow and I use 720p "Prores Proxy" which cuts like butter on my ageing MBP and also fits neatly on the internal SSD for editing on the go.

The challenge with the second workflow is that you can't really grade on the proxy files, and you definitely can't do things like post-stabilisation or even smooth tracking of power windows. This means that you're back to the SOOC files for grading, which means that you can't play the graded footage in real-time. This compromise is acceptable for me, but not for professional people who will have a client attend a grading session where they will ask for changes to be made and won't want to wait for a clip to be re-rendered before being able to view it.

Having SOOC footage that's ALL-I significantly helps with performance of grading from SOOC footage, but it's why professional colourists have five-figure (or even six-figure) computer setups in a sound-proof cupboard and run looooong USB and HDMI cables out to their grading suite. Anyone who balks at the cost of the Mac Pro for example has never seen someone do complex colour grades with many tracked windows on 8K footage live in front of a client.

Anything that cannot be explained simply isn't sufficiently understood. I'll get there, I promise 🙂

-

Hot damn! I knew you were smart, but wow..... Hmmm, I wonder if my wife hates space travel.

-

In my initial run the Resolve ones were from the original timeline, but all the ffmpeg ones were from my 422 reference file, which I have now replaced with a real reference file. I'll start re-running the conversions again. I'm thinking I'll do h264 IPB, h264 ALL-I, h265 IPB, h265 ALL-I, all in 10-bit to begin with. The 1% of file size seemed fishy, but there isn't much out there, and people do a lot of comparing h264 and h265 but not against Prores. Considering how good ffmpeg is compared to Resolve I'm now wondering if I should export a high quality file from Resolve and then use ffmpeg to make the smaller one. Of course, I publish to YT so probably not, though.

-

Plus lens choices for low light are getting better all the time too. There's never been a better time to be in the market for a large aperture prime - they get faster, and better at those larger apertures, all the time. I remember someone talking about there being three different kinds of value: value you get from the utility of something, value you get from owning something (bragging rights, etc), or the other one I can never remember which is when you hate opera but take your wife because she likes it and making her happy is something you value.

-

That makes sense. I thought you were saying that you own a lens you dislike because it's all wrong but you were persevering with it regardless! And yeah, if you're into Zeiss Otus primes, then high performance optics should be a plus not a negative 🙂

-

That makes sense. Rendering a new reference file now. I'll test a few of the other ones I did before to see if they score differently.

-

I just rendered a Prores 4444 and a Prores 4444 XQ from Resolve and while the file sizes are much larger, they get a lower SSIM score than Prores HQ. Any ideas why that might happen? They're not radically lower, so I don't think that I've stuffed it up or that there's a technical error somewhere.

-

Thanks.

- Reference file is "Uncompressed YUV 422 10-bit"
- Prores files from Resolve are "yuv422p10le"
- h265 IPB are "yuv422p10le"
- h264 from ffmpeg are "yuv422p"
- h264 intra from ffmpeg are "yuv422p"
- h264 from Resolve are "yuv420p"

I'm guessing then that the h264 are all 8-bit? And it looks like Resolve outputs h264 as 420, but ffmpeg as 422?

Makes sense that you downscaled to match your workflow. I didn't, but considering that we're comparing cameras that have UHD Prores HQ with ones that have UHD 400Mbps 422 10-bit h264 All-I with ones that have UHD 100Mbps 420 8-bit h264 IPB, it seems a reasonable comparison. For me this comparison is the missing piece of the puzzle, as when you buy a camera you're seldom presented with the option of shooting Prores or h264, as cameras typically choose one or the other.

My motivation was really to work out how good the GH5 modes are. People say "I shot a feature film that premiered at Cannes in 1080p Prores HQ" but what does that actually mean?

For example, Cinemartin claimed that h265 is 100x as efficient as Prores 4444 (which Andrew reported on here along with some other news outlets, presumably before anyone could get their hands on it to actually check). According to this excellent resource at frame.io, Prores 4444 HD is 264Mbps and UHD is 1061Mbps. If the above claim was true then h265 at 2.7Mbps for HD or 11Mbps for UHD would be better than Hollywood master files. Does that sound even remotely plausible? Why would people be talking about bitrate? My phone records at many times that rate!

The image quality obviously doesn't bear this out, but unfortunately if you google "h265 Prores" you don't really get much else, so that's why I was motivated to do this test myself.
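
The arithmetic behind that sanity check is short enough to spell out. This Python sketch uses only the two frame.io Prores 4444 figures quoted above and the claimed 100x factor; the function name is just for illustration.

```python
# Prores 4444 bitrates quoted above (Mbps).
PRORES_4444_MBPS = {"HD": 264, "UHD": 1061}

CLAIMED_FACTOR = 100  # "h265 is 100x as efficient as Prores 4444"


def implied_h265_mbps(prores_mbps, factor=CLAIMED_FACTOR):
    """h265 bitrate that would match Prores quality if the claim held."""
    return prores_mbps / factor


if __name__ == "__main__":
    for name, mbps in PRORES_4444_MBPS.items():
        print(f"{name}: {implied_h265_mbps(mbps):.2f} Mbps")
```

That gives roughly 2.6Mbps for HD and 10.6Mbps for UHD, in line with the rounded 2.7 and 11 figures above.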

-

Any RAW file will require de-bayering in order to compare to anything else. The only way to avoid softness from de-bayering is to downscale.

This isn't a completely pure stress-test, but neither is real footage. Most lenses aren't pixel sharp, and our love of bokeh, motion blur and camera stabilisation of any kind ensures that much of the image is blurry and doesn't move that much from frame to frame. I have done stress testing of codecs before but it makes it more of a theoretical exercise rather than a practical real-world test.

How can I tell if the files are 420 or 422? I haven't got Resolve handy right now, but VLC gives me some info:

Is it the decoded format line I'm interested in? I'm skeptical as I really want the encoded format, not the decoded format, but maybe it's just badly labelled?

I'd share the files, but the source files are currently up to 22GB, so would take forever to upload and basically no-one would download them. I've done things like this in the past and the download counter just sits at zero after a week of uploading at my end.
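
On the 420 vs 422 question, ffprobe's pix_fmt string is probably the easiest thing to read: something like "ffprobe -v error -select_streams v:0 -show_entries stream=pix_fmt -of csv=p=0 file.mov" prints just that field. Here is a small Python sketch for decoding the common planar YUV names into chroma subsampling and bit depth. It only handles names of the yuv4xxp form; anything else raises an error rather than guessing.

```python
import re


def describe_pix_fmt(pix_fmt):
    """Decode names like 'yuv422p10le' into (subsampling, bit depth).

    Planar YUV names with no bit-depth suffix (e.g. 'yuv420p') are 8-bit.
    Only the common yuv4xxp / yuvj4xxp family is handled here.
    """
    match = re.fullmatch(r"yuvj?(4\d\d)p(\d+)?(le|be)?", pix_fmt)
    if match is None:
        raise ValueError(f"unrecognised pixel format: {pix_fmt}")
    subsampling = ":".join(match.group(1))  # '422' -> '4:2:2'
    bits = int(match.group(2)) if match.group(2) else 8
    return subsampling, bits


if __name__ == "__main__":
    for fmt in ("yuv420p", "yuv422p", "yuv422p10le"):
        print(fmt, describe_pix_fmt(fmt))
```

So "yuv422p" is 4:2:2 at 8-bit, "yuv422p10le" is 4:2:2 at 10-bit, and "yuv420p" is 4:2:0 at 8-bit.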

-

Here's the graph of what I've done so far:

I haven't done any h265 all-i tests yet, although I'm not sure if there are any cameras that use this? I'll also do Prores 4444 and 4444 XQ for completeness, assuming Resolve has them.

I guess the only news is that h265 is better than h264 or Prores, but it's not 2x better, at least not at this end of the curve. We're in serious bitrate territory here, so not what these h26x codecs were designed to do.

Let me know if there's anything else you want me to test while I'm set up for it.