-

Posts

8,051 -


Everything posted by kye

-

I have a full thread about how shooting 1080p is often sufficient, assuming your camera is good enough. There is a good argument to be made for shooting 4K in order to get good enough quality out of your camera, as some cameras' 1080p isn't that great. But so far all of the 8K smartphone videos I've seen unfortunately don't really make the grade when compared with decent 1080p.

-

WOW. Those zoom shots are fantastic! How is the video on these marvellous devices? Have you been testing that too? Or is it still not good enough to be taken seriously yet?

-

It could be due to Panasonic's shift to full-frame, or it could be a combination of other factors. By other factors, what I mean is:

The GH4 was revolutionary, being one of the first affordable cameras to have 4K. The GH5 was revolutionary in many ways, but especially in delivering 4K60 and 10-bit internal. If the GH6 is to uphold the reputation of the GH line then it would need to seriously step up and take on the competition, which leads me to...

The competition being all over the place. The A7s3 release was delayed from the normal release schedule and, if rumours are true, was pulled from its launch event at the last minute because Sony weren't sure it would really hit the spot, leaving a huge fanfare for some lenses. The R5 came from nowhere with 8K, after a strange release of the EOS R which seemed to be a big release but was underwhelming and quickly got a big discount. BM released the P6K, a "successor" to the P4K with a S35 sensor and an EF mount, which confused the heck out of everyone. BM then released the 12K UMP which was so left-field it was from a parallel dimension. So all in all, releases have been all over the place and unpredictable, because the market has been in the shadow of...

The promise of 8K for the Olympics, and now it's delayed because of...

COVID. No-one knows what impact COVID is going to have on the film-making market, especially the low-budget segment where MFT is more prevalent, so the best time to release a camera is a pretty tricky thing to gauge. You'd have to be pretty courageous as an exec to pull the trigger on a huge production and release run during a pandemic, and especially one for an MFT camera because of the...

Trend towards full-frame and even larger than FF with some "medium format" options in a few examples. This is a tremendously upsetting dynamic when S35 ruled the cinematography world for so long, and MFT only had a 1.4x crop compared to S35, whereas now it is considered a 2x crop at least. This makes MFT seem less palatable to an increasingly fanciful and fickle marketplace, where there is more and more focus on the flashy toys and less and less focus on steady workhorse cameras, and even the "quiet market" of content creators is getting tempted by the allure of affordable RAW, which comes with all the...

Legal trickiness of patents and licensing agreements and other big companies all playing chess with their lawyers at our expense.

FF is but one element of a tornado of uncertainty.

-

Funny. I've also tested the telescoping version of that lens on the Micro. It's a little too long for my tastes, and definitely not fast enough, but having IS at the wide end is very useful considering that most wider primes don't have IS. The 14mm/2.5 is a great pairing for the Micro too, but lacks the IS. Of course, my favourite lens for the Micro at this point is probably the Laowa 7.5/2 as it has a horizontal FOV equivalent to a 22mm lens. Throw on a 2.66:1 crop and it's not a bad wide-aspect all-rounder. Of course, if I was going to use it for shooting real projects, I'd probably buy the Voigtlander 10.5/0.95, which would be equivalent to a FF 30mm f2.7.

-

Nice looking model for your test shots @BTM_Pix.. almost as good as when it wears a c-mount lens 🙂

-

They do seem very similar. It would be great to see MTF charts on the Meike lenses though, to confirm. The Meike lenses certainly have excellent performance, but they're very slow. T2.2 is probably around F2, which in DoF is equivalent to F4 in FF terms, which isn't that fast in terms of background separation. If you're just interested in the exposure value, then T2.2 might be fine.

The way I see the Meike / Veydra lenses is as a set of cine lenses that has traded getting a little bit more sharpness for being one or two stops slower than the other contenders. It's easy to see the Veydras as very sharp because of the myopic tradition of only talking about how sharp lenses are when wide open, but it's not that hard for other lenses to get almost that kind of result when stopped down from their widest apertures. Sometimes the improvement when stopping down is absolutely radical:

I suggest you compare the graphs from the Veydras (as a proxy for the Meike) with the graphs of the other MFT lenses that are around:

https://www.lensrentals.com/blog/2018/01/finally-some-m43-mtf-testing-25mm-prime-lens-comparison/

https://www.lensrentals.com/blog/2018/03/finally-some-more-m43-mtf-testing-are-the-40s-fabulous/

https://www.lensrentals.com/blog/2012/05/wide-angle-micro-43-imatest-results/

https://www.lensrentals.com/blog/2012/05/standard-range-micro-43-imatest-results/

Not suggesting that the Meike aren't a good option, but just make sure you're aware of how they compare to their competitors before you hit the Purchase button!
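If anyone wants to check the equivalence arithmetic, here's a rough sketch. It assumes the usual 2x MFT crop factor and treats T2.2 as roughly f/2, as in the post above. The function name and the 25mm focal length are just made up for illustration.

```python
def full_frame_equivalent(focal_mm, f_number, crop_factor=2.0):
    """Return the (focal length, f-number) that gives a similar field of view
    and depth of field on full frame. The default crop factor of 2.0 is the
    commonly quoted MFT figure and is an assumption here."""
    return focal_mm * crop_factor, f_number * crop_factor


# Hypothetical example: a 25mm lens at roughly f/2 (about T2.2) on MFT.
focal, aperture = full_frame_equivalent(25, 2.0)
print(f"25mm f/2 on MFT is roughly {focal:.0f}mm f/{aperture:.0f} on FF for FOV and DoF")
```

This only covers field of view and depth of field. For exposure, T2.2 is still T2.2 regardless of sensor size.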

-

There's a saying in business that "a rising tide lifts all boats" - it means that when times are good everyone does well, and the implication is that it's only when the going gets tough that you see who was running a solid company and who was only profitable because the going was easy. Between the death of compact cameras due to smartphones and now covid, the tide is lowering and will continue to lower for some time, as the economic impacts of covid will not go away quickly. Unfortunately, business is not only about customer satisfaction, it's about money. History tells us that companies who work on hype and market protectionism and take advantage of market distortions can be successful, and often are. The camera industry is gradually being disrupted and I'm not sure that product quality will be the defining factor in who survives and who doesn't. It should also be mentioned that many cameras already out there are "good enough" to provide a steady stream of content in difficult market conditions. In that sense, content creators can choose to pay their bills instead of buying new equipment, so from that perspective the manufacturers are kind of last in line, so to speak. Unless you break your equipment, you can just choose not to upgrade.

-

Are they the same formula? In the previous video (comparing the Meike with the Veydra) he notes that the Meike has sacrificed the close focus distance to eliminate focus breathing, which would lead me to believe that there is at least some difference in the design, possibly only element spacing, but maybe more. Here's the part I'm talking about:

Nevertheless, I think it's reasonable to suggest that the Meike performance is excellent. The comparison to the Zeiss CP2s is interesting, because it really depends on what you're looking for. My experience is that there are five schools of thought when choosing lenses:

1. Choosing lenses that offer resolution but have flattering (ie, lower) levels of micro-contrast as this is flattering on skin texture. This is a significant force in high-end cinematography with the classic lenses being very popular in rental houses.
2. Choosing lenses that are very sharp and very neutral in order to be combined with optical filters like diffusion filters or nets to get a flattering level of micro-contrast in-camera but also have it be adjustable, whereas with lenses it is much less so. This is also popular in larger budget productions.
3. Choosing lenses that are very sharp and very neutral to get the cleanest image out of the camera in order to process it in post with diffusion and other image tuning effects. I've heard of this in rare instances where someone knows the power of their preferred colourist and is willing to invest more time and cost in post because of the fine-tuning possibilities.
4. Choosing lenses that are very sharp and very neutral to get the cleanest image out of the camera because the aesthetic is suitable for the project. This is sometimes the case in larger budget productions, but is more the norm in lower budget productions like documentaries where the emphasis is on the content and the capturing is meant to have a more neutral tone.
5. Choosing lenses that are very sharp and very neutral to get the cleanest image out of the camera because that's what stills photographers obsess over and the rationale of the person hasn't progressed beyond the idea that film-making is just taking many photographs very quickly. I find this to be the dominant mindset on internet forums.

Of course, it's more complicated than just resolution and micro-contrast, but those seem to be the two dominant factors that drive most of the decision-making. I also noted in the big comparison that I did that some of the more revered lenses had higher resolution than average when wide-open and lower resolution than average when stopped down, making them more consistent over their aperture range than the average lens, which is much softer wide open and much sharper when stopped down.

-

How big a diffuser are you looking for? There's a type of parabolic reflector where you put the light-source in the middle facing away from the subject (and directly into the reflector) and that should make the light source the size of the reflector. Like this kind of style: They fold up like umbrellas and so are pretty portable. I'd imagine they come in a range of sizes: Unless you're looking for something smaller? The idea could easily be applied to whatever size you like if you're after an on-camera solution like @BTM_Pix suggested:

-

It's beginning to sound like some of you are getting triggered by this whole thing. If the various investments and work-arounds of shooting 4K are worth the additional investment for your particular situation or emotional attachments then go for it.

The history of mankind is more of a cosmic wonder than I thought.. just think of it, all the people that can't live without 4K would have died all through history up until only a few short years ago!

This is beginning to get tedious. Please do yourself a favour and do a little experiment.. shoot a 1080p BRAW clip and then shoot a 4K BRAW clip, put each of them on a native resolution timeline and then load each of them up with effects until your computer can't keep up. Compare how many effects the 1080p timeline got to with the number that the 4K timeline got to. I look forward to the results of your test and the proof that the 4K timeline, processing 4x the number of pixels, takes the same processing power as the 1080p timeline, with a quarter the number of pixels.

This thread is about 4K vs 1080p, not the very specific situation and particular camera selections that you happened to make.

Cool. If you render a 1080p master file then upscale it to 4K and upload that to YouTube then we can compare the numbers and see if your 6K timeline is that much better than having downscaled to a 1080p timeline and then upscaled the result to 4K YT. I look forward to comparing them.
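For anyone who wants the pixel arithmetic behind the "4x" figure, here's a quick sketch. The exact frame sizes are assumptions (UHD for 4K, and 6144x3456 for 6K, which is one common 6K size), and it says nothing about how any particular effect actually scales. It only counts pixels per frame.

```python
# Frame sizes are assumptions: "4K" is taken as UHD, "6K" as 6144x3456.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
    "6K": (6144, 3456),
}


def pixels(name):
    """Pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


base = pixels("1080p")
for name in RESOLUTIONS:
    ratio = pixels(name) / base
    print(f"{name}: {pixels(name):,} pixels per frame, {ratio:.1f}x 1080p")
```

If an effect's cost grows roughly with the number of pixels it touches (an assumption, but a common one), these ratios are a first guess at how much harder each timeline is to process.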

-

When I first started working in the tall buildings in the city, I was really taken aback and concerned when hearing the next rumour about how big company X was about to downsize by 600 people, and big company Y was going to outsource its blah department. This was in the wake of the GFC, and bonuses were only a month or two salary at best! After three years I realised there are always rumours and that disaster is always looming. It takes more than whispers to cut through the noise for me. Covid is putting pressure on the whole world, there will be blood in the water, but it's not there yet.

-

You're confusing the processing power required for decoding the image from the original codec with the processing power required for doing effects once it's been decoded. RAW, and BRAW especially, is a radically easier codec to work with than AVCHD. I'm guessing you don't put enough effects onto your files to really slow things down. Try putting your BRAW on a 12K timeline and apply a bunch of OFX plugins and see how far BRAW gets you then 🙂 I'm not arguing that 4K is no use, I'm just saying that it has hidden costs. It's a bit of a stretch to say that mentioning media size and encoding time covers things like the concerns of noise management from extra fans. It sounds like your 4K workflow doesn't have any hidden costs because you've already paid for them. Think about what the cheapest system that could do what you do with 1080p BRAW would look like - probably a 2012 laptop - which is close to being free at this point, so in that sense the entire cost of your whole editing setup is the "hidden" cost you have already paid. It's like trying to tell someone that there are extra costs involved in driving 400 km/h and the person saying to you "I don't know what you're talking about, I just get in my Bugatti and do it, so it totally costs the same!"

-

Most of the time I just go into Resolve and look to see what stuff is called or whatever, but I don't have the free version so can't reference it 🙂

-

I've seen that before... years ago actually. When it was written 🙂 Seriously though, I looked through that and multiple nodes weren't mentioned, so I wasn't sure. I find Blackmagic aren't the greatest about keeping the documentation up to date and easily findable.

-

Which bit isn't correct? I've never been able to get a clear idea about which restrictions there are on the free version. If the free version has multiple nodes, then that's pretty awesome, and makes it a lot more useful.

-

It's the whole workflow. Just off the top of my head:

* Having to buy larger cards for capture
* Spending time changing media in the field
* Creative energy spent worrying about media management
* Extra batteries (if higher resolutions take extra power, not sure) and worrying about batteries going flat
* Having to wait longer for media to transfer to storage
* Having to transfer more cards to storage
* Having to pay for more storage
* Having to scale up when a drive gets full, eg, having to manage multiple drives and adjust backups and figure out extra media management protocols due to having more drives, or having to go to an expensive NAS style solution when you get more drives than the simple and cheaper docks can handle
* Having to buy larger editing SSDs for holding footage because the project is larger
* Having to buy a more powerful computer to play footage smoothly, or time spent waiting for proxies to render is longer
* Having to buy a more powerful computer / GPU to process the footage
* Having interruptions to your workflow when you max out your hardware with effects and transitions and the NLE can't play realtime anymore, or having to spend more time working with caches to pre-render those heavy computational sections of a project
* Having your creativity limited by sticking to processing options within your hardware performance (did you know Resolve has some time-stretching algorithms that use AI? and that's just one effect, there will be more)
* Having to wait longer for projects to render
* Having to wait longer for files to transfer during delivery and/or having to buy better/more internet to cope with the increased sizes
* Having to wait longer for backup cycles and media management tasks further down the line

There's even the minor stuff that probably isn't that much, but includes extra cost of electricity, extra cooling, extra effort or creative drain dealing with the extra noise in the studio due to more fans or faster spinning of fans required for cooling, etc etc etc

As a quick attempt at naming some stuff how was that?

-

I have bolded the most significant word in your post... "almost" 😂😂😂 I suspect that YouTube might make some kind of allowance for making 1080p videos better in 1080 than 4K videos are, as there's kind of a relationship between upload resolution and who watches stuff. People that have channels with nothing to do with aesthetics aren't likely to chase the highest resolutions. There will be exceptions of course, but as a general principle I think it holds up. I expect that @fuzzynormal probably does see a difference. I can see a difference, and once I learned where to look and what to look for, I think I could probably see that difference even if there weren't comparable shots. Perhaps the most interesting thing about this thread is that there wasn't consensus about if it was visible, or for those who had a preference, which one was even best. I think it's one of those things where being right doesn't necessarily align you with popular opinion, and it may not actually give you any kind of advantage in the end anyway. By the time we factor in all the overheads, which may be hidden for many people at this point but are real nonetheless, I think that for many 4K isn't worth it. That's easy.. we'll all need to go on a prolonged campaign to blow up my channel and get it to a million subs, then I promise I'll do the test 🙂

-

I agree that a 4K upload watched at 4K is WAY better than a 1080p upload watched at 1080p. Absolutely. The challenge with uploading 6K and 8K is that people won't watch at 6K or 8K, they'll watch at 4K or 1080p. It's kind of like me saying that you're going to love my new video because I shot it in 25K, and you have to watch it in 25K or you'll miss out. Not going to happen. At some point you have to optimise your viewing experience for the people watching, rather than for the best possible experience if a viewer sells their car to set up a system. For many that's people watching in 4K, for most it's people watching in 1080p, or even 720p.

-

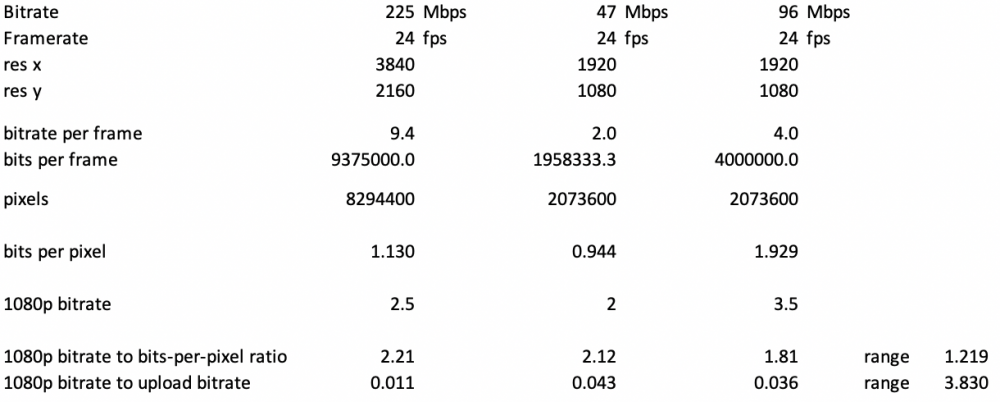

1) yes, 2) not necessarily. The 4K file was uploaded at over twice the bitrate of the better 1080p upload, yet the 1080p stream from it had only 70% of the bitrate. It might be that a 4K reference file gives a slightly better IQ per bitrate stream, but that 'bump' is competing with a 70% bitrate, and considering we're talking FHD at 2.5Mbps - I'd think the bitrate would win.

No. The 4K file was uploaded at over twice the bitrate of the better 1080p upload, yet the 1080p stream from it had only 70% of the bitrate. Judging from this, the logical conclusion is that more bitrate at the same resolution helps, and the same bitrate at a higher resolution HURTS. I suspect that YT might be looking at bitrate-per-pixel, which would explain why the result from the 47Mbps 1080 was closer to that of the 225Mbps 4K file.

Let's summarise that again, but looking at bitrate-per-pixel:

Notice that the bits-per-pixel matches much more closely than the absolute bitrate.. still some variation, but much closer.
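If you want to redo the bitrate-per-pixel sums yourself, here's a small sketch using the upload and stream figures from my test. It assumes the 4K file is UHD (3840x2160) and ignores frame rate, so "bitrate-per-pixel" here just means upload bits per second divided by pixels per frame. The function and variable names are made up for illustration.

```python
# (label, width, height, upload Mbps, resulting 1080p stream Mbps)
# The 3840x2160 frame size for the 4K upload is an assumption.
UPLOADS = [
    ("4K 225Mbps", 3840, 2160, 225, 2.5),
    ("1080p 47Mbps", 1920, 1080, 47, 2.0),
    ("1080p 96Mbps", 1920, 1080, 96, 3.5),
]


def bitrate_per_pixel(width, height, mbps):
    """Upload bits per second divided by the number of pixels in one frame."""
    return mbps * 1_000_000 / (width * height)


for label, width, height, upload_mbps, stream_mbps in UPLOADS:
    bpp = bitrate_per_pixel(width, height, upload_mbps)
    print(f"{label}: {bpp:.1f} bits/s per pixel -> {stream_mbps} Mbps 1080p stream")
```

On those numbers the 4K upload has nearly five times the absolute bitrate of the 47Mbps upload but only about 1.2x the bitrate-per-pixel, which lines up much better with the 2.5Mbps vs 2.0Mbps streams. Three uploads is a tiny sample though, so treat it as a hint rather than proof of what YT does.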

-

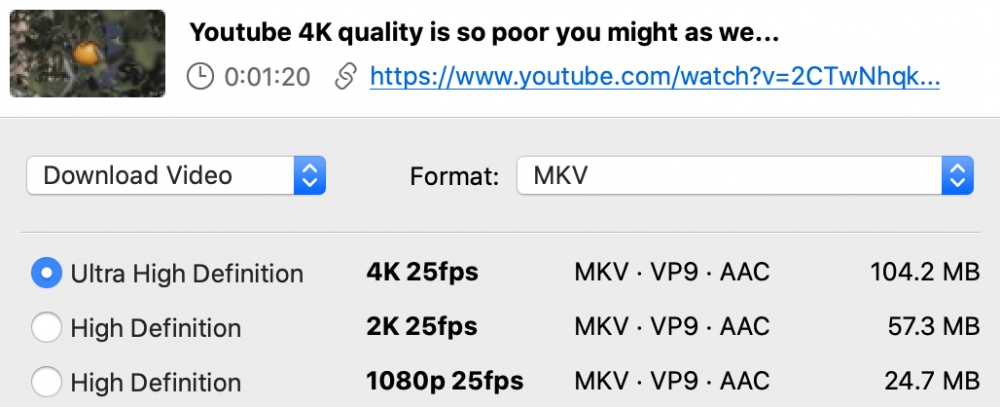

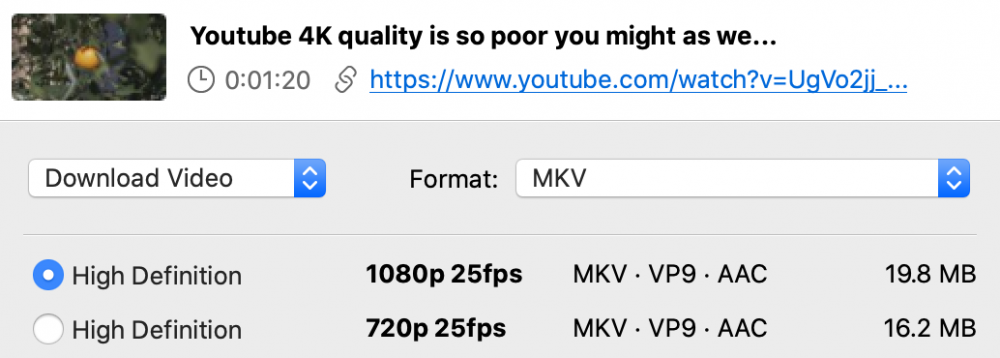

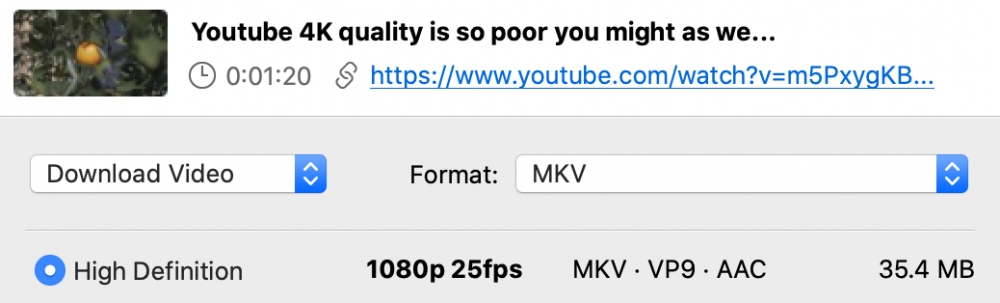

Here are the videos if you are curious to pixel peep... 4K 225Mbps: 1080p 47Mbps: and 1080p 96Mbps:

-

Here's some data...

Original 4K upload 225Mbps:

1080p upload 47Mbps:

1080p upload 96Mbps:

So:

* 4K at 225Mbps upload gives a 1080p stream at 2.5Mbps
* 1080p at 47Mbps upload gives a 1080p stream at 2.0Mbps
* 1080p at 96Mbps upload gives a 1080p stream at 3.5Mbps

I'm willing to call that conclusive.. Uploading at a higher resolution is not the answer to people watching in lesser resolutions.

-

@Neumann Films @SteveV4D @fuzzynormal I think this is actually a myth. I don't think that uploading a video at 4K gives you any better quality when viewing the 1080p stream from YT. I'm doing a test right now, but I've previously looked at the 1080p stream across multiple videos, and the average bitrate for the 1080p stream was basically the same when the video was uploaded at 4K or at 1080p. What will get you a better looking image is uploading at a higher bitrate. So if you upload 1080p at 25Mbps and 4K at 100Mbps then the 1080 stream from the 100Mbps file will be better, but not because it was in 4K. People seem to still be very confused by YT....

-

I think it depends on what you're trying to achieve. A video showing test shots of brick walls, charts, high DR scenes, and scenes where a colour gradient is pushed in post to expose any banding would be very useful to a technical film-maker, but would be useless to the expressive film-maker who wants to see the aesthetic potential of the camera. Likewise, an emotional and dreamy creative piece where the composition is an interpretive dance between cast and crew can tell a creative everything they need to know about the texture and refinement of expression that the camera possesses in pure potential, and would tell the technical operator very little. The older I get the more I realise that people dramatically underestimate how different we all are to each other. Put more simply, what doesn't work for you might be useful for someone else.