Everything posted by kye

-

Which BMPCC? 2K? 4K? 6K? They're all different sensor sizes..... you need to be specific.

-

They'll likely stick with DFD, and while it is painful now, it is the future. PDAF and DPAF are mechanisms that tell the camera which direction and how far to go to get an object in focus, but not which object to focus on. DFD will eventually get those things right, but will also know how to choose which object to focus on. I see so many videos with shots where the wrong object is perfectly in focus, which is still a complete failure. This is from the latest Canon and Sony cameras. Good AF is still a fiction being peddled by fanboys/fangirls and bought-and-paid-for ambassadors.

Totally agree - as a GH5 user I'm not interested in a modular-style cinema camera, and if I were, I'd pick up my BMMCC and use that, with the internal Prores and uncompressed cDNG RAW.

It might sell well. The budget end of the modular cine-camera market is starting to heat up, and as people get used to things like external monitors (for example to get Prores RAW), external storage (like SSDs on the P4K/P6K) and external power (to keep the P4K/P6K shooting for more than 45 minutes), modularity won't be as unfamiliar as it used to be. Everything being equal, making a camera that doesn't have to have an LCD screen (or two if it has a viewfinder) should make the same product cheaper to manufacture.

-

Is the DSLR revolution finished? If so, when did it finish? Where are we now? It seems to me like it started when ILCs with serviceable codecs became affordable for mere mortals. Now we have cinema cameras shooting 4K60 and 1080p120 RAW (and more) for the same kind of money. Is this the cinema camera revolution? What is next? Are there even any more revolutions to have (considering that we have cameras as good as Hollywood did 10 years ago)?

-

This video might be of interest in matching colour between different cameras. He doesn't get a perfect match by any means, but it's probably 'good enough' for many, and probably more importantly is a simple non-technical approach using only the basic tools.

-

Oh, the other thing I recommend is to test things yourself. I've proven things wrong in 5 minutes that I believed for years and never heard anyone challenge or question. Most of the things that "everyone knows" online are pure BS, and the ratio of information to disinformation is so small that if someone is disagreeing with the majority of people, then the majority is probably wrong and maybe the minority right. Oh, and if someone tells you something is simple, they just don't understand it enough.

-

YouTube 4K is barely better than decent 1080p. Here's a thread talking about this very topic:

In terms of why your 200D 1080p is soft, that's a Canon DSLR, and nothing to do with 1080p.

My advice is this:

Watch a bunch of videos ON VIMEO to see what cameras are really capable of in 1080p - you'll be amazed. If you decide you want/need 4K then so be it, but do yourself a favour and try to actually look at images instead of brand names. And yes, "4K" is a brand name - it's just how the manufacturers of TVs marketed it to people to get them to replace their perfectly good 1080p TVs. Most movie theatres have 2K projectors, so lots of marketing was needed to get people to buy a TV that has 4 times as many pixels as a movie theatre.

Forget about Canon, or be willing to pay the Canon Hype Tax. The internet is full of people who think that Canon is the king and everything else is second class. These people are fools who don't know how to tell if a camera is any good or not, so they just check what brand it is and then go hang with the people they know will make them feel better. Canon has great colour science - so do most other brands. Canon has great AF - so do many other brands. Canon cripples their products because the fanboys and girls will buy whatever they're selling anyway.

Go to the ARRI website, the RED website, and the BM website, download their sample clips and have a look at how plain they are. Try to colour grade them and see what you get. This should show you that the glorious images that you are seeing online from the cameras that you're lusting after, the Canon ones especially, are due to the skill of the operator in post, rather than the manufacturer who designed the camera.

My journey started with me wondering why my 700D 1080p files looked so bad and thinking I needed Canon colour science and 4K to get good images. I've now deprogrammed myself and use neither Canon equipment nor 4K, but I've spent a lot of money on glass. Good luck.
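As a rough check on the "4 times as many pixels" comparison in the post above, here is a minimal sketch. The resolutions are assumed standard values (UHD 3840x2160, Full HD 1920x1080, DCI 2K 2048x1080) and are not taken from the post, and the function names are purely illustrative.

```python
# Pixel dimensions as (width, height).
# These are assumed standard values, not figures from the post.
FULL_HD = (1920, 1080)
UHD_4K = (3840, 2160)
DCI_2K = (2048, 1080)


def pixel_count(resolution):
    """Total number of pixels in a (width, height) frame."""
    width, height = resolution
    return width * height


def pixel_ratio(a, b):
    """How many times more pixels frame `a` has than frame `b`."""
    return pixel_count(a) / pixel_count(b)


if __name__ == "__main__":
    print("UHD vs Full HD:", pixel_ratio(UHD_4K, FULL_HD))
    print("UHD vs DCI 2K: ", pixel_ratio(UHD_4K, DCI_2K))
```

Under those assumptions a UHD TV has 4 times the pixels of a 1080p TV and 3.75 times the pixels of a DCI 2K projector, so the "4 times" figure is a fair approximation either way.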

-

I've heard that VFX is a different thing entirely, and that you want RAW and as high a resolution as possible. The RAW is because you want to pull clean keys from green-screen footage without having to battle compression that blurs edges etc. The resolution is so that the tracking is as accurate as possible, so that when you composite 3D VFX into the clip the VFX parts are as 'locked' to the movement of the captured footage as possible - VFX tracking has to be sub-pixel accurate so that the objects appear like they are in the same space as the footage. Screening in 2K is probably an advantage as well, as it would mean that there is a limit to how clearly the VFX will be seen, so in that sense 2K probably covers up a bunch of sins, like SD (and then HD) hid details in the hair/makeup department work that higher resolutions exposed.

-

@Neumann Films I posted it here because this thread is about the poor quality of the YouTube codec, rather than any commentary about the R5 or the economics or artistic value of vlogging. We all have opinions on a range of matters, but I just thought it was an interesting example, considering that Matti's audience is tech / image / camera centric, and yet even on such a channel a workflow upgrade / resolution downgrade wasn't really noticed. Not even by Matti, who even the nay-sayers suggest would be heavily preoccupied with his social media engagement and his comments section's opinions on various camera products.

For reference, I watch his content on a 32" UHD display, in a suite calibrated to SMPTE standards (display brightness is 100 cd/m², ambient light is ~10% of that luminance with a colour temp of 6500K, and viewing distance within the sweet spot of viewing angle, etc). Maybe some are watching on a phone, but not everyone - one of the first things those who are caught up in the hype of things like Canon CS / 4K / cinematic LUTs / 120p b-roll etc would acquire is a big TV and/or 4K computer monitor. I know that because when I got into video that's one of the first things I did, as that was the prevailing logic online, and I definitely wasn't the only one. Threads like this one are part of my journey of un-learning all that stuff the internet is full of and which is often flat-out wrong.

For anyone delivering via a platform other than YouTube it's a different story. Of course, not that different if you do a little reading about how many features have been screened in 1080p in theatres without the film-makers getting a single comment about resolution, but that's a different thread entirely 🙂

-

Matti Haapoja got the Canon R5. Matti Haapoja shot in 4K and 8K. Matti Haapoja's computer absolutely choked. Matti Haapoja went back to 1080. Apparently, no-one noticed.

-

Agreed. Like everything in film-making, it's great if you can make it look nice, but if it gets in the way of the story or content, then it's wrong. I remember a wedding photographer talking about taking group photos once, and how it is critical to get everyone in focus in a group shot, which can be tricky with a large group as the people at the edges are a different distance away. They also mentioned that the most important people at a wedding are the bride/groom, but the second most important people are the oldies, as it's very common that "the last nice photo of grandma" was taken at a wedding, so making sure to get them in focus should be a huge priority. It's easy to forget, and make the film equivalent of a cake made entirely of icing.

-

The more I pull colour science apart, the more I realise that companies like BM and Nikon have colour science either just as good as Canon or within a tiny fraction, and also that ARRI colour science isn't perfect and there are things about it I don't care for. I know that this will get me ejected from the 'colour science bro club' but I don't care about being popular and fitting in, I care about colour science and good images.

-

There's a solution to that - it's called Manual Focus. ....and if you upgrade to Manual Focus, then basically Panasonic cameras are best-in-class! Seriously though, probably the biggest challenge with the AF crowd is that they think that PDAF / DPAF are near perfect and that DFD is unworkable. The real situation is that neither is fit-for-purpose yet. AF will be great once it reliably focuses where the director wants it to be focusing, does so with the right transition speed, and doesn't pulse when it gets there.

-

Having something that was continuous would be great, like the Sony one, and the JVC and FS5 ones. It's not that difficult a thing to implement technically, so it's cool that some manufacturers did it.

The thing to watch is that when your digital zoom is more than a 1:1 crop you're actually upscaling in-camera, and the quality falls off a cliff at that point. I tried 2x, which is a 1.35x downsampled image, but the 4x is a 1.5x upsample, so it's taking about 1.3K and upscaling it to a 2K image, which does not look good! Really, the proof is in the resulting image, so just give it a go and see how your camera performs.

Absolutely, you're zooming into the noise and the softness of your glass. Given that most lenses sharpen up when stopped down a bit from their widest aperture, you may find that a general rule-of-thumb might be to stop down a bit when using the zoom function.

When you're keeping the digital zoom in the range where it's still downsampling then you do get the NR benefits of rescaling. We saw that downscaling a 5.2K image to 4K gave a decent improvement on things like noise, and that's only a 1.35x downscale (on the GH5), so it doesn't take a huge amount to smooth out the noise, assuming that the noise is pixel-sized, rather than the Canon noise that spans multiple pixels for some unknown reason.

-

I've recently "discovered" some of the benefits of using the in-camera digital zoom. These won't apply to everyone, but it's worth considering and I don't hear anyone talking about it.

Let me illustrate by taking my GH5 as an example, but the principle applies much more widely, especially as cameras go towards 8K and beyond.

The GH5 has a sensor that's 5184 pixels wide. When you're shooting 4K, the camera downsamples the 5.2K to 4K, giving a higher quality image due to the benefits of oversampling. The benefits of oversampling are well known, and many cameras have this.

The GH5 also has an ETC mode, which essentially does a 1:1 crop into the middle of the sensor. This is a common feature across manufacturers. If you use the ETC function in 4K, you get an additional crop of about 1.35x, and if you use the function in 1080p then you get a 2.7x crop. Both of these modes are shooting 1:1, so you get a tighter FOV, but the image is no longer oversampled, so the quality goes down.

Enter the digital zoom. In 1080p mode, the GH5 allows a 2x and 4x digital zoom. The 2x digital zoom is less than the 2.7x 1:1 zoom from the ETC mode, so (assuming that the camera's image pipeline is designed well) the resulting 1080p image should be taken from the middle 2.6K pixels and downsampled to 1080p. In other words, the 2x digital zoom is a way to punch into the sensor but still keep the quality of an oversampled image.

This principle applies any time the digital crop is less than the crop of going to a 1:1 area on the sensor for whatever resolution you're shooting in. The Sony Clear Image Zoom comes to mind here, where (I think?) you can zoom in by a lesser amount than the 4K 1:1 crop (which is something like 1.5x?). Perhaps other manufacturers have similar functionality too.

Of course, the lower the resolution you're shooting, the more likely this will be available on your camera. For those still shooting 1080p this is worth looking at. For those shooting 4K, this will increasingly be useful as sensors creep up towards 8K and beyond.

For me though, I've now abandoned the idea of having to buy an 85mm prime, because I can simply do a 2x digital zoom with my 42.5mm lens and get the same FOV and (basically) the same image quality, and if I happen to have my 7.5mm lens on and want to quickly grab a 15mm FOV shot then I know I won't be sacrificing quality to get one. Had I known that this feature delivered such good results I may have actually bought different lenses, so it's not a trivial thing, and it's worth giving a quick go if your camera supports it.
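The digital zoom arithmetic in the post above can be sketched as a few lines of Python. This is only a rough model: it assumes the camera takes a centred crop of the sensor and rescales it to the output width in one step, it assumes output widths of 1920 (1080p) and 3840 (4K), and the function names are made up for illustration. Only the 5184-pixel sensor width comes from the post.

```python
def scale_factor(sensor_width_px, output_width_px, zoom):
    """Sensor pixels per output pixel (horizontally) at a given digital zoom.

    > 1.0: the crop is still downsampled (oversampled image)
    = 1.0: 1:1 crop, like an ETC-style mode
    < 1.0: the camera has to upscale the crop
    """
    crop_width_px = sensor_width_px / zoom
    return crop_width_px / output_width_px


def max_zoom_without_upscaling(sensor_width_px, output_width_px):
    """Largest digital zoom that still reads at least 1:1 from the sensor."""
    return sensor_width_px / output_width_px


def zoomed_focal_length(focal_length_mm, zoom):
    """Focal length giving the same FOV as `focal_length_mm` at `zoom`."""
    return focal_length_mm * zoom


if __name__ == "__main__":
    GH5_SENSOR_WIDTH = 5184  # from the post
    HD_WIDTH = 1920          # assumed 1080p output width
    UHD_WIDTH = 3840         # assumed 4K output width

    for zoom in (1, 2, 4):
        factor = scale_factor(GH5_SENSOR_WIDTH, HD_WIDTH, zoom)
        kind = "downsample" if factor > 1 else "upsample" if factor < 1 else "1:1"
        print(f"1080p at {zoom}x zoom: {factor:.3f} sensor px per output px ({kind})")

    print("Max 1080p zoom before upscaling:",
          max_zoom_without_upscaling(GH5_SENSOR_WIDTH, HD_WIDTH))
    print("Max 4K zoom before upscaling:",
          max_zoom_without_upscaling(GH5_SENSOR_WIDTH, UHD_WIDTH))
    print("42.5mm at 2x zoom frames like:", zoomed_focal_length(42.5, 2), "mm")
```

With these assumptions the 2x zoom in 1080p reads 2592 pixels across and is still a 1.35x downsample, while the 4x zoom reads only 1296 pixels and has to be upscaled by roughly 1.5x. Whether a real camera actually processes the crop this way is something to confirm by shooting a test.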

-

Makes sense. Getting a 2.8 that is sharp from f2.8 up would probably have been more expensive. Of course, if you're cropping into the image circle then you're really only interested in the performance of the lens in the middle of its image circle. Unfortunately that's data that only places like Lensrentals publish, and not for that many lenses, as it's obviously very laborious to gather. I ended up with the Voigtlander 42.5mm f0.95 prime. Not sharp wide open, but almost as sharp as the competition when stopped down to their widest apertures, and it can be used wide-open in a pinch. Subjectively a bit of sharpening goes a long way to matching things, especially if you're degrading the image in post as well with halation or other softening effects.

-

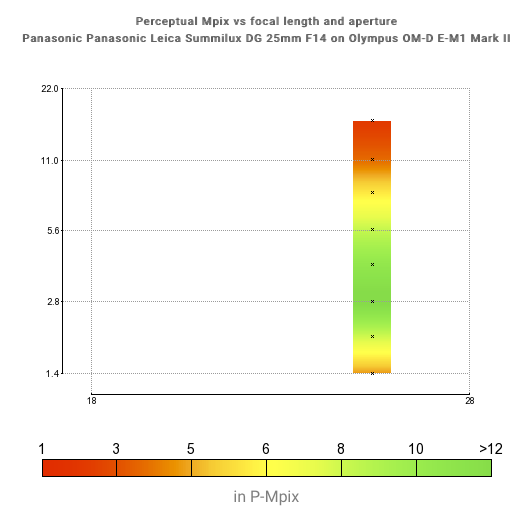

lol, the only place I didn't look was in the thread title! 😂😂😂 There's a pretty well established principle that lenses sharpen up when you stop them down the first couple of stops. As mentioned before this has exceptions, but those exceptions seem to be just that, exceptions. DXOMark has a lot of interesting lenses and you can look at the graph of sharpness (perceptual megapixels) vs aperture to see how much it sharpens up when stopped down. Typically they look like this: Where wide open they aren't as good as when closed down a couple of stops. So in that sense, the target aperture really matters, as the best way to get a sharp image at f2 might be to buy an f1.2 lens. The exception is normally much slower lenses that have the same kind of optical performance as the above, only don't open the aperture as much.

-

Absolutely. I have f0.95 primes, so you're preaching to the choir here!

Actually, they didn't. Or at least, when I just re-read this thread five times looking for it, I couldn't find it!

True. The issue I took, and thus my slightly sarcastic reply, was that this is viewed far too simplistically by people. Take two hypothetical lenses, one f1.4 and the other f2.8. Let's say that the f1.4 one isn't so sharp wide open, but the f2.8 lens is. The traditional, one-dimensional thinking is that if you want sharp images then the f2.8 lens is the one to go for because "the f1.4 is soft wide-open but the f2.8 lens isn't", end of story, and mostly, end of how deep the person's knowledge is about the subject. The problem with this thinking is that the f1.4 lens might be sharper at f2.8 than the f2.8 lens is, but the sharp-wide-open one-dimensional thinkers don't go that far.

-

Why? f4 lenses are sharp wide open, and that was your criterion. People often make the mistake of judging lenses wide open, but the problem is that not all lenses have the same largest aperture. Sometimes the best way to get a lens that's sharp at a particular aperture is to get a lens with a wider aperture than your desired one.

-

This seems to be the download page from Cinematography.net: https://cinematography.net/raw-and-exr-camera-rushes.html I haven't downloaded anything from there yet, but will check it out. I'm also unsure of the licensing, so if you explore these then make sure you're clear on that before proceeding.

-

I have consistently failed to make my own footage as good as I was hoping for, so have decided to try my hand at grading the best footage available, the sample shots from the manufacturers themselves. I've done this in the past but got sidetracked, but am now back on it. I figure that until I can make ARRI or RED footage look good, there's no point criticising my own footage - maybe the footage is fine and it's my grading skills that are solely to blame.

My previous attempts, however short, made me realise a few things:

* flat ungraded footage from any camera looks dull
* there was a lot more noise in the RED and ARRI footage than I was expecting - ie, A LOT more

Overall, the biggest surprise was how average the footage looked. Since then I've learned a bunch and hopefully will have more success. Anyone want to join me?

* ARRI footage is here: https://www.arri.com/en/learn-help/learn-help-camera-system/camera-sample-footage
* RED footage is here: https://www.red.com/sample-r3d-files
* Komodo footage from Seth Dunlap is here: https://www.dropbox.com/sh/cfxdizrgt3aa4ml/AADPxF6XZIs2_uB7Xt_piHUya?dl=0
* BM UMP 12K footage is here (underneath the "Generation 5 colour science" heading): https://www.blackmagicdesign.com/products/blackmagicursaminipro
* BM Pocket camera footage is here: https://www.blackmagicdesign.com/products/blackmagicpocketcinemacamera/gallery
* Sony Venice frames here: http://www.xdcam-user.com/tag/footage/ but I couldn't find any official footage for download

I've downloaded footage from https://raw.film before too, so that might be worth checking out.

As a reality check, I'm also pulling in the ML footage I shot back when I was playing with it on my Canon 700D, and also the test shots I've been shooting with the BM Micro Cinema Camera. I suspect that the footage I shoot is much better than I think, and that I'm much worse at colour grading than I think.

If anyone is interested in joining me that would be fun, and if there are other places to download well shot sample footage that would also be very interesting.

-

Maybe it's just marketing. The FF enthusiasts / myopians will look at this and have their confirmation bias renewed and will be left with the impression that Panasonic understands them, sees FF as being the superior sensor size ordained directly by God, and that Panasonic is the brand to get. The MFT enthusiasts will look at this, be encouraged by the frame-rates and remember the track record of the GH line, and hold their opinions for when we see the IQ. Then the GH6 can be released and the MFT enthusiasts will see that the IQ is excellent. The FF myopians won't even look at "toy camera" reviews and will be none the wiser. ....and everyone gets what they want and Panasonic lived happily ever after. *roll credits*

-

Is this just fantasy? Lockdown in pandemic No escape from reality...

-

The fundamental challenge you have is the one that we all have - there's no perfect camera. The second, very unfortunate, challenge you have is the 'simple' task of having a small, light-weight camera that can keep up with your kid and how you live your life. What this translates to, and why I put 'simple' in quotes, is that you want a camera that:

* is small and pocketable, likely implying a smaller sensor
* has autofocus that can keep up with a small child (otherwise known as 'world-class' - watch some AF tests if you're unsure of this)
* can do that AF in the lighting that you find yourself in, which after the sun goes down will be extreme low light
* has the kind of IQ that you deem acceptable

Basically, the above is a very difficult set of requirements, unlikely to be met by anything, so you'll have to choose which things you are willing to compromise on. Parents getting a camera for pics of their kids running around think it seems like a reasonable request, but in reality it's kind of like saying you want continuous eye-AF at f1.4 in pitch-black. Cameras are getting way better than they used to be, but we're not there yet.

-

See if you can borrow one (or buy it with a return option) and do a bit of a stress test. I say this because I have a friend who is into stills and has an old DSLR that he hates lugging around, but he's repeatedly tried going on trips with only the latest iPhone and has kept going back to the DSLR. His reason.... none of the iPhone photos are good enough for him to print and put on the wall. The moral of the story is that the best of the convenient options may still not be good enough, and you might be better off trading some of the convenience in order to get something that will work for you. In the end we only remember the footage, not the camera gear, so as long as the equipment is as minimal as it can be for the required results, and as long as it's minimal enough that you actually take it and use it, then that's the way to go. You haven't talked about what focal lengths you're interested in using, and how you actually shoot. A MILC and pancake prime isn't a bad option and I've done that as a (barely) pocketable option in the past to great results.

-

Very impressive. Almost didn't watch it as the thumbnail doesn't do it any kind of justice whatsoever!