Posts: 8,047


Everything posted by kye

-

What's VP? Virtual Productions? Is that for green-screening and VFX?

-

Test it and see. It's free and you'll know how it sounds.

-

From the April 2023 edition of Film and Digital Times, page 42: https://www.fdtimes.com/pdfs/free/120FDTimes-Apr2023-150.pdf and later on... It also showed that the BMMCC were put in fireproof boxes. BMMCC and P4K on a €30,000,000 film shot in 2021 that screened on IMAX. It just goes to show that these cameras are still relevant and in active use in productions larger than anything being discussed on here.

-

Thanks, that's good to know. My main focus for this is the GX85, which doesn't have a mic input, so the internals will have to do. I also shoot a lot with my phone now, but I'm not sure how I would integrate wind covers there. I looked at external mics for it, but they're not super pocketable, so I'm still pondering that one.

-

Nice... subtle, but these have good shape. The WanderingDP examples are typically a lot darker than you'd want for corporate work. Maybe that's what you were thinking of 🙂

-

To elaborate a bit more on the lighting discussion, I heard about shooting on the shadow side from Wandering DP, who does excellent cinematography breakdowns on YT: https://www.youtube.com/@wanderingdp/videos I went through a phase of binging his videos. I couldn't find a good self-contained example of him talking about it, but look at the thumbnails of the videos on his channel and you'll see that in most of them you are looking at the shadow side of the subject's face (just look at their nose). I highly, highly recommend his stuff if you haven't seen it. He's obviously a working pro, and his videos contain just as much talk about how to light so you can work faster and deal with the sun moving throughout the shoot day as they do about how to make things look nice (and the two actually go together rather than competing with each other).

-

Nice. Production design is cool, and the skulls as the drums made me lol.

-

Definitely agree about shooting into the darker side of the subject's face, as @PPNS suggests, if you have control of the lighting etc. I'm also wondering, @hyalinejim, if you could get the fidgeters to stand right next to a desk so they had their thighs/belly against the edge? Maybe that would stop them moving around so much, and it would also help keep them in focus 🙂

-

People should be recording in whatever is the highest quality they can, absolutely. My point was that it's at least 44.1kHz 16-bit, which absolutely creams video!

These look ok, but my question with these things is how well the adhesive pads work. I've bought a cheap no-name variation of these, and the back of the fabric was a loose mesh, which is about the worst surface you could imagine for sticking anything to with (what is essentially) sticky-tape, and I can't see anything about these being any different. That loose weave is how all faux fur seems to be manufactured, and the images of these things seem to indicate they're no different. Even their product page indicates that the 30 stickies only give it 30 uses, rather than it being a robust solution. If I bought these for my in-camera mics, I wouldn't want to have to re-install them every time I pick up the camera. https://www.rycote.com.au/products/micro-windjammer-30-uses I'd be happy to be proven wrong though.

I'm wondering if multiple layers of a thinner material might be a better bet, if I alternated layers of fabric and adhesive tape. I bought a roll of 3M double-sided tape so I could custom-cut the shape to have a large sticky area, and hopefully keep things in place for more than one or two uses.
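To put rough numbers on "creams video", here is a back-of-envelope comparison of bitrates. The formats are assumptions for illustration: CD-style 44.1kHz 16-bit stereo PCM for audio, and UHD 8-bit 4:2:0 at 25p recorded at 100Mbps for video (the GX85's 4K bitrate mentioned in a later post).

```python
# Back-of-envelope bitrates; the formats below are illustrative assumptions.


def pcm_bitrate(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels


def raw_video_bitrate(width: int, height: int, bit_depth: int, fps: float,
                      samples_per_pixel: float = 1.5) -> float:
    """Bits per second of uncompressed video (1.5 samples/pixel models 4:2:0 chroma)."""
    return width * height * samples_per_pixel * bit_depth * fps


audio_bps = pcm_bitrate(44_100, 16, 2)                 # CD-style stereo PCM
raw_video_bps = raw_video_bitrate(3840, 2160, 8, 25)   # UHD, 8-bit 4:2:0, 25p
recorded_video_bps = 100_000_000                       # a 100Mbps in-camera codec

print(f"Audio, uncompressed: {audio_bps / 1e6:.2f} Mbps (1:1)")
print(f"Video, raw:          {raw_video_bps / 1e6:,.0f} Mbps")
print(f"Video, recorded:     {recorded_video_bps / 1e6:.0f} Mbps "
      f"(~{raw_video_bps / recorded_video_bps:.0f}:1 compression)")
```

On those assumptions the audio is about 1.4Mbps with no compression at all, while the video has to be squeezed by roughly 25:1 to fit into 100Mbps.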

-

I'm also wondering this. I plan on doing some tests to work out what the best solution is, but I haven't done them yet so can't speak to results. I do think, however, that the dead cat style is likely hard to beat because it's sort of got two 'modes': when there's no wind, the fur sticks up in random directions and lets sound in without much resistance, but when there's a gust of wind, the wind pushes the fur flat and that forms a barrier against the gust. Unless something has that kind of behaviour, it's likely to block more sound when there's no wind and block the gusts of wind less when they happen. However, if you are looking for a smaller solution with good performance, I suspect that some sort of hybrid with multiple layers of different materials might be a good solution, as the manufacturers are likely to have just gone with size rather than trying to engineer a more complex, higher-performing compact solution.

-

10s is positively glacial! Wow..... Yeah, those are all so much more compact than the Rode VideoMic Pro... that's what I ended up buying years ago, and it works well, but its size and shape are definitely behind those ones above. It's nice to see that progress is being made. The other thing to take into account is the shape of the chassis that they put the mic capsule in. The more directional mics are more directional because the body is longer, and the holes along the sides create an acoustic chamber where sound arriving from the sides gets cancelled out. If it isn't designed properly, the chassis can give the capsule a funny sound, even with the same electronics... acoustics is a very complicated topic!
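The side-hole cancellation described above is usually modelled as an "interference tube". A minimal sketch of the idealised textbook model, assuming a lossless tube, a continuous row of side slots and plane waves (real mics add acoustic resistance and much more), shows the key trade-off: a longer body rejects side sound down to lower frequencies.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C


def tube_response(freq_hz: float, angle_deg: float, tube_length_m: float) -> float:
    """Relative pickup (0..1) of an idealised interference tube.

    A slot at distance x from the capsule delays its sound by x * (1 - cos(angle)) / c
    relative to on-axis sound. On-axis everything arrives in phase; off-axis the
    delays are spread evenly over 0..T and partially cancel, giving |sin(wT/2) / (wT/2)|.
    """
    theta = math.radians(angle_deg)
    spread_s = tube_length_m * (1.0 - math.cos(theta)) / SPEED_OF_SOUND
    half_phase = math.pi * freq_hz * spread_s  # equals w*T/2
    if half_phase == 0.0:
        return 1.0
    return abs(math.sin(half_phase) / half_phase)


for length_cm in (5, 20):
    gains = ", ".join(f"{tube_response(f, 90, length_cm / 100):.2f}" for f in (250, 1000, 4000))
    print(f"{length_cm:>2}cm tube, 90 degrees off-axis, 250Hz/1kHz/4kHz: {gains}")
```

In this idealised model the off-axis rejection depends on frequency times tube length, so a 20cm body starts cancelling side sound at a much lower frequency than a 5cm one, and any tube does little at low frequencies.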

-

Great to hear you've found something that works, that's the end goal after all. Just to comment on the above for anyone else reading who might be curious: matching the EQs of mics isn't that difficult in practice and doesn't have to be perfect, just good enough that nobody notices. The tonal balance of any given audio source is much more likely to be affected by the acoustic environment the sound is in, and by the location and pickup pattern of the mic in that environment. You can make a piano sound thin, dreamy, harsh, velvety, full, grating, resonant, damped, etc etc etc just by moving the microphone and a blanket around the room it's in. Mic-ing up a piano is one of those skills that takes decades of experience to do at the level of a well-respected studio engineer. I once rented a piano and spent six months making recordings with it and the same mic, so I'm aware of this. From a technical standpoint, audio is stunningly higher quality than video (if that's your reference point): it's uncompressed, 16-bit (or more), and has over 44,000 samples per second. In professional audio settings audio can get EQ'd again and again and again, and no-one is fragile about it. The internet is practically a desert when it comes to deep knowledge about what audio quality actually is and how things actually work, and the level of understanding most 'reviews' show is lower than that of most YouTubers who think colour grading is buying the right LUT. I mean, there is knowledge out there, but finding it (and discerning what's correct and what's not) requires skills almost equivalent to being able to live off the land in an actual desert: you have to know what you're looking for and where to look. EQ is practically the last thing you should judge quality on, because it's the first thing you can fix in post, at basically zero cost in terms of audio quality.
The only real barriers to EQing something to sound how you want are access to decent-quality plugins (although the basic ones keep getting nicer) and the skill to use them. Learning to EQ something to get the sound you like is roughly equivalent to learning what a log profile is and how to convert it to 709. Anyway, rant over. EQ is one of the first and most fundamental audio production skills, and it shouldn't form the entire basis of how you judge equipment, let alone the entire skillset of the reviewers you listen to.
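To show why EQ is nearly "free" in post: a peaking EQ band is just a second-order (biquad) filter. This sketch uses the well-known RBJ "Audio EQ Cookbook" peaking formulas, which are not necessarily what any particular NLE or plugin uses internally, and the frequency, gain and Q values are arbitrary examples. In this design a cut with the same frequency and Q is the exact inverse of the boost, so boosting and then cutting returns the original signal to within floating-point error.

```python
import cmath
import math


def peaking_eq(f0_hz, gain_db, q, fs_hz):
    """Biquad coefficients (b, a) for a peaking EQ, RBJ 'Audio EQ Cookbook' form."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    b = [1 + alpha * amp, -2 * cos_w0, 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * cos_w0, 1 - alpha / amp]
    # Normalise so the leading denominator coefficient is 1
    return [x / a[0] for x in b], [x / a[0] for x in a]


def apply_biquad(b, a, samples):
    """Filter a list of float samples (transposed direct form II)."""
    z1 = z2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + z1
        z1 = b[1] * x - a[1] * y + z2
        z2 = b[2] * x - a[2] * y
        out.append(y)
    return out


def gain_at(b, a, f_hz, fs_hz):
    """Magnitude response of the filter in dB at a single frequency."""
    z_inv = cmath.exp(-1j * 2 * math.pi * f_hz / fs_hz)
    h = (b[0] + b[1] * z_inv + b[2] * z_inv ** 2) / (a[0] + a[1] * z_inv + a[2] * z_inv ** 2)
    return 20 * math.log10(abs(h))


b, a = peaking_eq(5000, 3.0, 1.0, 48000)  # +3dB at 5kHz, Q=1, 48kHz audio
for f in (100, 5000, 15000):
    print(f"{f:>5} Hz: {gain_at(b, a, f, 48000):+.2f} dB")
```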

-

I'm not up with the latest on external recorders like the Zoom units, but I found them to have several disadvantages when I looked at them in the past:

- They are self-powered, so they require separate charging setups, which is another thing to manage and another thing that can go wrong.
- Would you put their line-out into the camera, or record internally on the recorder to an SD card? The former is good, but the latter creates extra media files to manage, and they will need to be synced in post. You also potentially need to turn on two devices, hit record on two devices, and stop two devices. I don't know if they're smart enough to sync power and record/stop somehow.
- They are large, and are one step closer to making you look like a 'pro'. I don't know how and where you film your family, but I spend a lot of time filming in private places like museums, art galleries, amusement parks, aquariums, etc, and you run the risk of getting flagged by security for needing permission etc.

Of course, the sound quality would be great, and they'd have the power to drive two separate mics, so you could have two Rode Video Micro style mics mounted to it, for example.

In terms of it sticking out and interfering with the viewfinder, that's actually a common issue with powered hot-shoe mics like the Rode VideoMic Pro. I don't know of any hot-shoe-to-hot-shoe brackets that move the hot-shoe forward, but if the Zoom just takes a normal 1/4-20 screw then you could make a little adapter plate from a flash bracket. I've done this before using an aluminium flash bracket, using one of the screw holes for the 1/4-20 and then gluing an adapter to it, which in your case would be the hot-shoe attachment.

-

I'd say you should film a few test clips around the house with the options you already have and then see how audible they are when you put some music over the top. The other option is to remove the mic from the hot-shoe when you put it into your bag, which will add a little to the time of taking it out and hitting record, but that might not be that much of a barrier depending on how you shoot.

-

lol about an S5ii and 18-50 being a small setup, but it's all relative. It certainly means you are less sensitive to the sizes of the small mics that people are talking about, so that's to your advantage.

In terms of EQ, it's really up to your preferences. You're not going to get an 'accurate' frequency response, and you'd want one about as much as you'd want 'accurate' colour science anyway. My general thoughts about EQ (and I'd be keen to hear what @IronFilms thinks here too) would be:

- You might want to tame any particular problems in the frequency response if they bother you when listening to the sound, although with any half-decent mic this is unlikely to be an issue. If you can't hear any issues then you don't have any, so don't worry.
- You can sort of think about EQ-ing the human voice in three areas: the lowest area is how much body there is to the voice (think about how men with deep voices who work in radio sound), the middle part is the "normal" part of the voice we think about when thinking about most people's voices, and the higher parts are the harsh sounds in 's', 't', and 'p' sounds (the hissy 's'-type sounds are called 'sibilants'). In terms of these three areas, you basically EQ them to your tastes. You can divide the frequency range into as many areas as you want, but those are the most basic.
- Just play with whatever EQ plugins or adjustments you have access to and work out which knobs sound like what. Audio can get really technical, but you don't need to know the tech to work out how to get a sound you like.
- Context matters when mixing the sound on a final video. You might be adding music or ambient sounds to a video (and I'd encourage this, as sound design is a big part of the experience of enjoying a film/video), so the EQ of sounds or voices might be tweaked to ensure they can be clearly heard and understood in the final mix.
If you have music then it's normal to lower the volume of the music whenever anyone speaks (called 'ducking'), but if, for example, you EQ the voices to have a bit more in the higher frequencies, they can 'cut' through the music a bit more, which means you don't have to reduce the music volume as much. An alternative is to EQ the music a bit, lowering the frequencies in the middle (where the human voice is) so that the voices don't have to compete against the music in those frequency ranges as much.

The best way to learn is to just play with it and trust your ears. Unless you have a significant hearing impairment, just go with what you hear; there is no right and wrong. Getting a second opinion on something can be useful too.

My audio workflow for my travel/home videos is this:

1. Edit the video to a basic rough cut.
2. Work out what music I'm going to have (my videos are usually music-heavy and dialogue-light).
3. Adjust final edit points to align to the timing of the music. It's not about always cutting on the beat, it's about making the cuts and the music feel like they fit together... but a cut on a beat draws extra attention and can be a great way to emphasise a certain moment or shot in the video, so go with what feels right. There's a school of thought that says you should edit to music even if you don't use it in the final project, just because it will give your footage an element of timing that is desirable.
4. Mute the music tracks and mix the ambient sound / dialogue from the clips so it's broadly coherent. This typically includes matching volumes of clips across edit points, crossfading just the audio to soften the transition where there are sudden changes in background noise (like cutting from somewhere quiet to somewhere loud) or in the nature of the ambient sounds, and removing any audio issues like clicks or pops (or people talking in the background), etc. This is where you might apply some EQ to individual clips or the whole audio track.
   I keep the ambient sound from all clips turned on, even if there's nothing there in particular. For some reason it's audible in a subconscious way even underneath music. It makes the visuals feel more real and less disconnected.
5. With the music still muted, I'll add in more ambient sounds. This is as simple, and cliché, as adding wave sounds and seagull sounds for clips at the beach, etc. Obviously if there is already a ton of traffic noise in your captured audio then you don't need to add more, but you could try adding the sound of roadworks or other things you associate with the city too. Unless you're crusading for absolute truth in the arts, this isn't a literal work, it's a creative and emotional work, so you can embellish it as you wish. Ambient sounds increase the emotional impact of the visuals you have captured. Make the video sound the way you want people to feel when they watch it. There's a decent argument to be made that a home video should be true to the emotional experience of the events, not to the objective aspects of those events. This is how I try to make my videos anyway.
6. Then I un-mute the music, adjust levels on the tracks, and review the whole mix, adjusting anything that stands out as being wrong or too obvious etc. It's a good idea to review the mix on headphones as well as on one or two sets of speakers, just to ensure it's not optimised for one output device.

That process might seem really involved, but it can be as easy as putting some music on an additional track and listening through a couple of times, stopping to tweak here or there.

I shoot with audio in mind now too, and will record buskers' music on location to use in the edit, or will record other ambient sources if I think they'll be useful. It's also worth remembering that shots that got cut in the editing process might have good audio that can be used.
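Two of the steps above, ducking the music under speech and crossfading ambient audio across an edit, are simple gain automation under the hood. A minimal sketch on plain lists of float samples, assuming 48kHz audio; the threshold, duck depth, hold time and smoothing values are hypothetical starting points, and an NLE's own ducking and crossfade tools will be more sophisticated (proper envelope followers, look-ahead, etc).

```python
import math


def duck_music(music, voice, threshold=0.05, duck_db=-10.0,
               hold_samples=24000, smoothing=0.999):
    """Simple ducking: pull the music down while the voice track is active.

    The voice counts as 'active' when |sample| > threshold. The duck is held for
    hold_samples afterwards (about 0.5s at 48kHz) so the music doesn't pump
    between words, and the gain glides towards its target (one-pole smoothing)
    so there are no clicks.
    """
    duck_gain = 10 ** (duck_db / 20)
    gain, hold, out = 1.0, 0, []
    for m, v in zip(music, voice):
        if abs(v) > threshold:
            hold = hold_samples
        elif hold > 0:
            hold -= 1
        target = duck_gain if hold > 0 else 1.0
        gain = smoothing * gain + (1.0 - smoothing) * target
        out.append(m * gain)
    return out


def equal_power_crossfade(a, b, fade_samples):
    """Join clip a to clip b, overlapping the last/first fade_samples samples.

    cos/sin gain curves keep the combined power constant, which suits two
    unrelated ambiences (e.g. a quiet room cutting to a busy street).
    """
    n = min(fade_samples, len(a), len(b))
    tail = a[len(a) - n:]
    faded = []
    for i in range(n):
        t = (i + 0.5) / n * (math.pi / 2)  # sweeps 0..pi/2 across the overlap
        faded.append(tail[i] * math.cos(t) + b[i] * math.sin(t))
    return a[:len(a) - n] + faded + b[n:]
```

The equal-power curve is the usual choice for unrelated ambiences; with two copies of the same material it produces a bump of about 3dB in the middle, where a linear fade would keep the level constant.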
I can recommend a source of free music if you're uploading to social media; even if you're publishing private/unlisted videos, they still scan them and apply copyright claims etc.

I find this to be true, although being able to hear your subject is paramount too.

Despite the ability to record in eleventy-billion-K resolution, the latest cameras are still mostly limited to two-channel recording, which I find to be stupid, as it means you have to choose between having stereo audio or having a safety track. A Mid-Side (M+S) configuration allows you to adjust anywhere between a 180-degree stereo pickup and a mono directional mic using only two channels, but the mics are rare and software support for it is limited, and this doesn't solve the safety-track problem. I've forgotten to adjust levels on a few occasions, and even with a safety track I've clipped the living daylights out of something, so it happens. A camera that could record a mono mic plugged into the stereo input at two levels, as well as recording the internal stereo mics, would be a perfect solution and would cost little extra to manufacture. But I'm guessing we'll get 2-channel cameras that can record twelvety-billion-K next, rather than addressing what is a pretty straightforward requirement.

The Sennheiser MKE440 is a compelling option, if you don't mind the size and price. I've had thoughts of trying to run two Rode Video Micros in stereo with an adapter, but I just never got around to it. The MKE440 is probably your best bet, that I know of anyway.
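For anyone curious what "adjusting between stereo and mono" means with Mid-Side: the decode is just sums and differences, with the side signal scaled by a width control. This sketch uses the standard L = M + S, R = M - S decode (some tools also scale by 0.5). The second function sketches the dual-level safety-track idea; it is a deliberately naive sample-by-sample patch that assumes both channels carry the same mic at a fixed, hypothetical -12dB offset, whereas a real workflow would switch over whole phrases with crossfades.

```python
def ms_decode(mid, side, width=1.0):
    """Mid-Side to Left/Right.

    width=0 gives the mid mic alone (mono, directional), width=1 gives the
    standard L = M + S, R = M - S decode, and values in between narrow the image.
    """
    left = [m + width * s for m, s in zip(mid, side)]
    right = [m - width * s for m, s in zip(mid, side)]
    return left, right


def merge_safety_track(main, safety, safety_offset_db=-12.0, clip_level=0.999):
    """Patch clipped samples in `main` with the quieter safety track, gain-matched.

    Assumes both channels carry the same mic and differ only by a fixed gain offset.
    """
    makeup = 10 ** (-safety_offset_db / 20)  # e.g. +12dB to line up with the main track
    return [s * makeup if abs(m) >= clip_level else m
            for m, s in zip(main, safety)]
```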

-

A few thoughts...

- The Rode Video Micro or V-Mic D4 Mini etc can be good options. Watch comparison videos to get real-life examples of how they compare, especially their relative output levels, as you want to get the most signal out of the mic so you can turn down the internal preamps.
- The shock mounts are usually bulky and fragile, especially for putting into a camera bag. I've seen alternative mountings (for example using adhesive velcro), and there might be a compact solution that fits into the hot-shoe.
- You should test the internal mics, as they're often higher quality than you think.
- People seem to think that the EQ of a microphone is what defines its quality (just listen to how people talk when comparing mics), but it's one of the easiest things to change in post, so don't fall for that.
- Be aware that AI can now clean up audio sources to basically eliminate all the background noise and retain only the voice (Resolve now has this built-in).
- One thing you can't fix in post is directionality. Early in my journey in video I recorded the kids riding go-karts around the track, and while I was recording I thought the video would contain audio of their karts going around the track, but when I got home I found it mostly contained the person sitting behind me in the stand talking about how her hairdresser had broken up with her boyfriend or whatever.
- The dead-cat can be swapped for a trimmed-down foam microphone cover designed for large shotgun mics (from eBay) to create a much more slimline profile.

Happy to elaborate on any of these so just ask 🙂

-

Great stuff! I've done a few trips to places with reefs etc and have filmed a bit underwater (GoPros), and it's definitely not as easy as it looks, with many challenges you wouldn't anticipate if you have only shot above the surface before. I was freediving so couldn't spend much time at depth, but one trick I found super useful was to find something interesting, like a coral with fish swimming in and out of it, and place the camera down there so that the coral was framed nicely in the foreground, then swim up and leave it there. The fish all take a little while to relax and then start coming out and looking at this new thing that has appeared in their world. I was using PVC pipes as my "tripod", as they are just press-fit and can be adjusted and re-arranged while out on the reef, and because they're white the fish think they might be food (meat), so they are rather curious. PVC is only slightly more dense than water, so a small rig made mostly of PVC will sink slowly but place very little weight on whatever you put it on. By the time the fish are starting to explore my rig I've swum back to the surface and around in a large arc, so I'm now in the background of the shot, swimming on the surface towards the camera. The shot you get is a stationary tripod shot with curious fish and me swimming toward the camera in the background. Then I dive down and collect the camera. I'm not sure how practical something like that would be with much larger rigs like yours, but it seemed to work well for me.
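To put the "sinks slowly, places very little weight" point into numbers: underwater, buoyancy cancels the weight of the displaced water, so what's left is proportional to the difference in density. The densities below are assumed typical round figures (real products vary), and the sketch assumes the pipes are open and flood, so only the wall material counts.

```python
# Typical densities in g/cm^3 (assumed round figures; real products vary)
WATER = {"fresh": 1.000, "sea": 1.025}
MATERIALS = {"rigid PVC": 1.38, "aluminium": 2.70, "stainless steel": 7.9}


def submerged_weight_fraction(material_density: float, water_density: float) -> float:
    """Fraction of an object's dry weight it still 'weighs' when fully underwater.

    Net downward force is proportional to (material density - water density),
    so dividing by the material density gives the fraction of dry weight left.
    Flooded hollow pipes count as solid wall material here.
    """
    return (material_density - water_density) / material_density


for name, rho in MATERIALS.items():
    frac = submerged_weight_fraction(rho, WATER["sea"])
    print(f"{name:>15}: {frac:.0%} of its dry weight in seawater")
```

On these figures a PVC rig keeps only about a quarter of its dry weight in seawater, compared with well over half for an aluminium one.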

-

I'd also suggest a background that is a distant hue from the puppet, and then just use a key of that hue to create the mask. It doesn't have to be the exact opposite of the puppet, just distinct from it. Tools like the keyer in Resolve can pick out quite specific hues. Just be careful not to let any light bounce off the background onto the puppet, or you'll have an unnatural halo of the background colour on the puppet.
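A minimal sketch of why a distinct background hue makes the selection easy, using Python's standard `colorsys` module on a list of RGB pixels. It produces a hard on/off matte with a hypothetical 20-degree hue tolerance and a minimum-saturation cutoff; Resolve's qualifier works differently and gives soft edges, so treat this only as an illustration of the principle.

```python
import colorsys


def hue_key_mask(pixels, key_hue_deg, tolerance_deg=20.0, min_saturation=0.25):
    """Return a matte: 0.0 where a pixel matches the background hue, 1.0 elsewhere.

    `pixels` is a list of (r, g, b) tuples in 0..1. Hue distance wraps around
    the colour wheel, and low-saturation pixels (greys, whites) are never keyed
    because their hue is unreliable.
    """
    mask = []
    for r, g, b in pixels:
        h, _lightness, s = colorsys.rgb_to_hls(r, g, b)
        diff = abs(h * 360.0 - key_hue_deg) % 360.0
        diff = min(diff, 360.0 - diff)  # shortest way round the wheel
        keyed = s >= min_saturation and diff <= tolerance_deg
        mask.append(0.0 if keyed else 1.0)
    return mask


# Green background keyed out; a red puppet and a grey prop survive.
print(hue_key_mask([(0.0, 1.0, 0.0), (1.0, 0.0, 0.0), (0.5, 0.5, 0.5)], key_hue_deg=120))
```

The further the background hue is from every hue in the puppet, the wider the tolerance can be without eating into the puppet, which is the point of choosing a distant hue.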

-

This is mostly true. I subscribe to the 'subtractive' model of editing, where you start with all your footage and then remove the parts you don't want, making several passes, and then end with a slight additive process where I pull in the 'in-between' shots that allow it to be a cohesive edit. I'm aware there's also the 'additive' model, where you just pull in the bits you want and don't bother making passes. Considering my shooting ratios (the latest project will be 2000 shots / 5h22m, likely to go down to something like 240 shots / 12m, so either 8:1 or 27:1 depending on how you look at it) and the fact that I edit almost completely linearly (in chronological order of filming), I might be better off with an additive process instead. I've also just found a solution to a major editing challenge, and am gradually working out how I'll include it in my editing style.

If you're not deeply attuned to the subtleties of the image (as I know some people are) then it's quite feasible to match footage across cameras, and even to manipulate the image in post to emulate various lenses, at least to the extent that it would be visible in an edit with no side-by-side comparisons. The fact that a scene can be edited together from multiple angles that were lit differently and shot with different focal lengths from different distances says a lot about how much we can tolerate things not matching completely. I had a transformative experience when I started breaking down edits from the award-winning travel show Parts Unknown (as that was what most closely matched my subject matter and shooting style).
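The two shooting ratios quoted above come from counting either shots or running time. As a quick check (the 240 shots / 12m are the post's estimates, not final figures):

```python
def shooting_ratio(captured: float, used: float) -> float:
    """How much material was captured for each unit that makes the final edit."""
    return captured / used


by_shots = shooting_ratio(2000, 240)           # shots captured vs shots used
by_minutes = shooting_ratio(5 * 60 + 22, 12)   # 5h22m of footage vs a 12m edit

print(f"By shot count: {by_shots:.1f}:1")
print(f"By duration:   {by_minutes:.1f}:1")
```

That gives 8.3:1 and 26.8:1, which round to the 8:1 and 27:1 in the post.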
I discovered a huge number of things, with some key ones being:

- Prime (which streams high-enough-quality 1080p that grain is nicely rendered) showed clearly that the lenses they used on many episodes aren't even sharp to 1080p resolution, with visible vintage lens aberrations like CA etc.
- They film lots of b-roll in high frame rates and often use it in the edit at normal (real-life) speed, which means it doesn't have a 180-degree shutter, and yet it still wins awards, even for cinematography.
- Many external shots have digitally clipped skies.
- Most shots are nice but not amazing, and many of them are compositions that I get when I film.
- The colour grading is normally very nice and the image is obviously from high-quality cameras.

This made me realise that the magic was in their editing. When I pulled that apart I found all sorts of interesting sequences, and especially interesting use of music etc. But what was most revealing was when I then pulled apart a bunch of edits from "cinematic" travel YouTube channels and discovered that while the images looked better, their editing was so boring that any real comparison was simply pointless. This was when I realised that camera YouTube had subconsciously taught me that the magic of film-making was 90% image and 10% everything else, and that this philosophy fuels the endless technical debates about how people should spend their yearly $10K investment in camera bodies. Now I understand that film-making is barely 10% image, and that, to paraphrase a well-known quote, if people are looking at the image quality of your edit then your film is crap. When you combine this concept with how much is possible in post, I find it baffling that people spend dozens/hundreds of hours working to earn money to try to buy the image they like, and dozens/hundreds of hours online talking about cameras, without taking even a few hours to learn basic colour grading techniques.
It's like buying new shoes every day because you refuse to learn how to tie and untie the laces, and the shop does that for you when you buy some.

Absolutely, that's a great way of putting it! My consideration is now what is 'usable', with the iPhone wide-angle low-light performance being one of the only sub-par elements in my setup, which, of course, is why IQ is Priority 4.
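The point about high-frame-rate b-roll used at real-life speed can be made concrete with the effective shutter angle: the fraction of each displayed frame's duration that was actually exposed, times 360 degrees. The frame rates and shutter speeds below are assumed examples, not what Parts Unknown actually used.

```python
def effective_shutter_angle(exposure_s: float, playback_fps: float) -> float:
    """Shutter angle as perceived on playback: exposed fraction of each displayed frame x 360."""
    return 360.0 * exposure_s * playback_fps


# Assumed example: b-roll shot at 60fps with a 180-degree shutter (1/120s),
# then conformed to real-time speed on a 24fps timeline (frames are dropped).
print(f"60p b-roll at real-time speed: {effective_shutter_angle(1 / 120, 24):.0f} degrees")
# A native 24p shot with a 1/48s shutter, for comparison.
print(f"Native 24p at 1/48s:           {effective_shutter_angle(1 / 48, 24):.0f} degrees")
```

So footage like that plays back with roughly a 72-degree shutter, noticeably crisper motion than the 180-degree "rule", and audiences (and award juries) evidently don't mind.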

-

I'm into the edit from my last trip. Putting everything on the timeline results in a 5h22m sequence. Resolve interprets still images as a single frame, so they aren't padding out the edit time. My first pass, where I pull in just the good bits of the clips, got it down to 1h35m. The way I edit is to use markers to separate locations and sequences within a location, which lets me organise the footage (Resolve isn't great if you're shooting on multiple cameras without timecode, so things are often out of order). For example, we went to an aquarium and saw the otters getting fed, saw the sharks getting fed, etc. In a sense, each of these is like a little story, and for each one I have to establish the scene, then have some sort of progression in the sequence that addresses the "who was there", "what did they do", and "what happened" sort of questions. I've identified over 40 location markers and over 40 sequence markers within those locations. Some locations only had one sequence, but others were full day-trips and had a dozen separate sequences. That's over 80 stories! My next steps are to work out which stories get cut completely, then to confirm the overall style of the edit. My challenge is always how to get from one location to another and establish the change. I typically shoot lots of clips when walking, on buses / trains / taxis, etc for this purpose. As it was South Korea, known for K-pop, K-dramas, and super-Kute things (their reality TV has lots of overlays like question marks when people are confused or exploding emojis when people are surprised), I'm contemplating a super-cute style with lots of overlays, perhaps using animated title cards that show where we are and what we're doing. This would be an alternative way of establishing the location. I could even have little pics of who was there (sometimes the kids came with us and sometimes not).
Then I'll identify the best shots from each sequence, which other shots are required to tell the story, and then cull the rest. Normally that shrinks the timeline significantly again.
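Getting footage from multiple cameras without timecode into one chronological order before placing markers can be sketched outside the NLE. This is a hypothetical helper, not a Resolve feature: the clip names, dates and the per-camera clock offset are invented for illustration, and it groups clips by gaps between start times only (clip durations are ignored).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Clip:
    name: str
    camera: str
    start: datetime  # creation time as recorded by that camera's clock


# Hypothetical per-camera clock corrections (e.g. worked out from one moment
# both cameras filmed); here the GX85 clock is assumed to run 3m12s fast.
CLOCK_OFFSETS = {"GX85": timedelta(minutes=-3, seconds=-12), "iPhone": timedelta(0)}


def corrected_start(clip: Clip) -> datetime:
    return clip.start + CLOCK_OFFSETS.get(clip.camera, timedelta(0))


def group_into_sequences(clips, max_gap=timedelta(minutes=20)):
    """Sort clips from all cameras onto one clock, then split wherever the gap is long."""
    ordered = sorted(clips, key=corrected_start)
    groups = []
    for clip in ordered:
        if groups and corrected_start(clip) - corrected_start(groups[-1][-1]) <= max_gap:
            groups[-1].append(clip)
        else:
            groups.append([clip])
    return groups


clips = [
    Clip("P1000123.MP4", "GX85", datetime(2023, 1, 1, 10, 5, 0)),
    Clip("IMG_4501.MOV", "iPhone", datetime(2023, 1, 1, 10, 0, 30)),
    Clip("P1000124.MP4", "GX85", datetime(2023, 1, 1, 14, 30, 0)),
]
for i, group in enumerate(group_into_sequences(clips), start=1):
    print(f"Sequence {i}: {[c.name for c in group]}")
```

Each resulting group is a candidate location or sequence marker; the gap threshold is a judgement call that depends on how you shoot.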

-

It is a shame, but the fact that it's a 709-style profile seriously helps the 8-bit. If it were an 8-bit log profile then you'd be stretching it for a 709 grade, but that isn't the case with these cameras. The iPhone is a 709-style profile and is 10-bit. That's (very roughly) equivalent to a 12-bit log profile... very nice!

The more I use the GX85, the less I find it wanting, TBH. In some ways this would be a good option, but I really, really appreciate the smaller size of the 14mm and the 12-32mm (at least when it's not in use). I found that I would "palm" the camera when carrying it around. This really helped me avoid attracting undue attention and protected the camera from bumps in crowds etc, but kept it at the ready when needed. I could do this with a longer lens, but it makes the camera significantly larger and makes pocketing it a lot more challenging. I wouldn't really miss the zoom range above 32mm when walking around: 32mm is 70.4mm FF-equivalent when combined with the 2.2x crop factor of the GX85's 4K mode, and as I edit in 1080p I could punch in to 141mm FF-equivalent. When I was using the 14mm f2.5 I punched in using the 2x digital zoom all the time and didn't notice any loss of image quality at all. Maybe there would be a small loss in low light, but I never noticed it in the final footage where there wasn't a direct A/B.

The GX85 only has a 1.1x crop into the sensor for the 4K mode, and the 1080p mode doesn't have any crop at all. I haven't really experimented with the 1080p modes, TBH, as I wanted the 100Mbps bitrate of the 4K mode. I do wonder how much would be supported if we had full access to all the modes supported by the chip. Obviously the chip in the GX85 can be given a 4K read-out, can apply a colour profile, can do whatever NR and sharpening is done to a 4K file, and can compress a 4K output file at 100Mbps. To imagine that it might be able to compress a 1080p image at 100Mbps isn't that far-fetched. Who knows what else might be available on the chip: ALL-I codecs, 10-bit, etc.
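The focal-length arithmetic above as a small sketch: the 2x Micro Four Thirds crop and the extra 1.1x crop in the GX85's 4K mode come from the post, and the 2x punch-in assumes a UHD file placed on a 1080p timeline with no upscaling.

```python
import math

MFT_CROP = 2.0             # Micro Four Thirds sensor vs full frame
GX85_4K_EXTRA_CROP = 1.1   # additional sensor crop in the GX85's 4K mode


def ff_equivalent(focal_mm: float, *crops: float) -> float:
    """Full-frame-equivalent focal length after applying each crop factor in turn."""
    return focal_mm * math.prod(crops)


# Punching in from a UHD (3840px wide) file to a 1080p (1920px) timeline
# without upscaling allows up to a 2x crop.
PUNCH_IN = 3840 / 1920

for lens_mm in (14, 32):
    native = ff_equivalent(lens_mm, MFT_CROP, GX85_4K_EXTRA_CROP)
    print(f"{lens_mm}mm: {native:.1f}mm FF-equiv in 4K mode, "
          f"{native * PUNCH_IN:.0f}mm FF-equiv punched in for 1080p")
```

For 32mm this gives the 70.4mm and 141mm figures above; the 14mm works out to about 31mm native and 62mm punched in.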

-

Nice edit. Simple, but mostly friction-free. The dialogue was free-flowing too, so perhaps the subject was relaxed and concise, or you made some nice edits. Either way it's a good thing. Being able to interview properly is a skill I know I don't have!

-

With one-handed cameras are you now taking stills and video of the same moment simultaneously? I only do video but often feel the desire to be getting multiple FOVs simultaneously (beyond what the tech can do with cropping in post). Just a week ago I was on a pier at night taking shots of the waterfront buildings and their reflections in the water when the people just near me started letting off a small hand-held firework, which was juuuuuust too wide a shot to fit into the frame on the GX85 + 14mm combo I was shooting with (~30mm equivalent), so while continuing to record with the GX85 I pulled out my iPhone and tried to also capture the scene with that camera with my other hand. Naturally, I screwed up the iPhone footage and almost screwed up the GX85 shot too because I wasn't keeping the frame steady and it was already not fitting in the frame. I haven't found that file in post yet (I'm mostly through pulling selects from the 5h22m of footage from the trip), and I didn't review it that night because we were leaving the next day and I started to pack instead of taking time to review my dailies. When we're all shooting with 1000Mbps 8K cameras then I'll be able to put on a super-wide and then crop a 1080p frame for the normal and mild-tele in post, but until then I can't get all three from the same file.

-

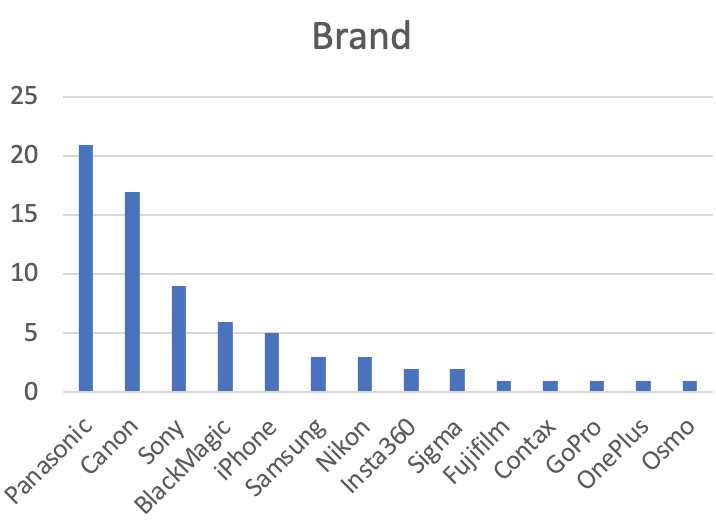

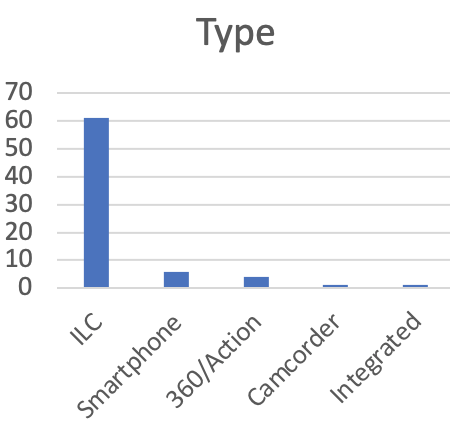

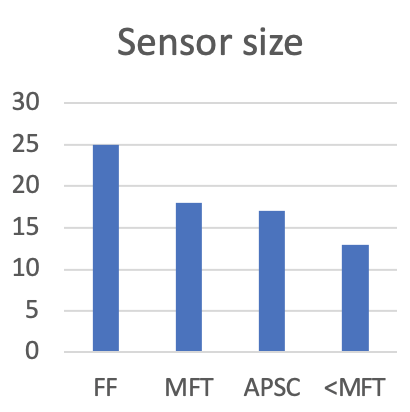

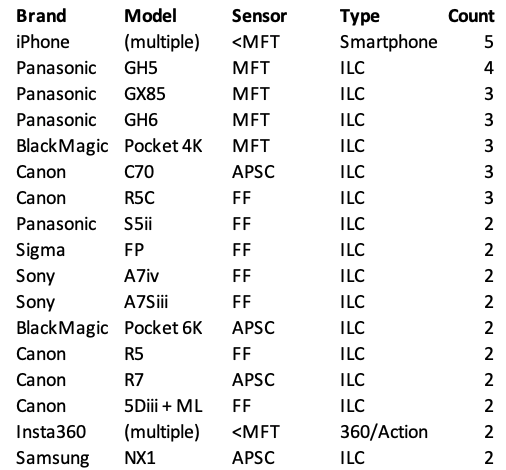

Current totals and some very shallow analysis. Not much overlap between what is used and what is discussed, and not much respect for what people are actually using.

-

This leaves me with the best setup being either the iPhone by itself, or the iPhone + GX85, which means that the iPhone can do the wide and the GX85 can do the normal and tele FOVs. The 14mm is good when combined with the 2x digital zoom, but still lacks some flexibility. When I got home I put on the 12-35mm f2.8 zoom, and while it's far more flexible, going a bit wider and hugely longer, the additional size puts it half-way to the size of the GH5 and severely hurts Priorities 1 and 2. This left me with a choice:

- iPhone wide + GX85 + 14mm
- iPhone wide + GX85 + 12-32mm f3.5-5.6
- iPhone wide + GX85 + 12-35mm f2.8
- iPhone wide + GX85 + a wide zoom like the 7-14mm or 8-18mm

The question was whether the iPhone wide camera was good enough in low light. I reviewed my footage, and basically it's good enough indoors in well-lit places like shops or public transit, and good enough in well-lit exteriors like markets, but very, very borderline on less-well-lit streets at night. For my purposes I'll live with it for the odd wide and will lean heavily on NR, which will let me have a longer lens on the GX85 and extend the effective range of the whole setup. I did a low-light test of the iPhone, GX85 and GH5 and found that the GH5 and GX85 have about the same low-light performance, the iPhone normal camera is about the GX85 at f2.8, and the iPhone wide is about the GX85 at f8. Considering that iPhone-wide/f8 was very borderline, if I aim for something like f4 or better then the lens should be fast enough in most situations I find myself in. I don't really find myself wanting shallower DOF than the 14mm f2.5, and could even have less without much downside. This means that a zoom lens is not out of the question, and the 12-32mm pancake seems a very attractive option. It's larger than the 14mm when it's on and extended, but when it's off it's basically the same, and it would give a huge bump in functionality.
I took a number of timelapses on the trip too, and having a zoom would make these much easier as well. I'm contemplating the 45-150mm as well, but we'll see.
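The aperture comparisons above can be expressed in stops, since the light gathered scales with 1/N² for f-number N. The reference point is the rough "iPhone wide is about GX85 at f8" result from the low-light test; the 12-32mm values assume its published f3.5 (12mm) to f5.6 (32mm) maximum apertures, and marked f-numbers are rounded (f5.6 is really about f5.66), so the results are approximate.

```python
import math


def stops_between(f_slow: float, f_fast: float) -> float:
    """Stops of extra light at f_fast compared with f_slow (same shutter speed and ISO).

    Light gathered scales with 1 / N^2 for f-number N, so the difference in
    stops is log2((f_slow / f_fast) ** 2).
    """
    return 2 * math.log2(f_slow / f_fast)


IPHONE_WIDE_EQUIV = 8.0  # iPhone wide ~ GX85 at f8, per the low-light test above

for label, n in [("14mm f2.5", 2.5), ("12-32mm at 12mm, f3.5", 3.5),
                 ("f4 target", 4.0), ("12-32mm at 32mm, f5.6", 5.6)]:
    print(f"{label:>22}: {stops_between(IPHONE_WIDE_EQUIV, n):.1f} stops brighter "
          f"than the iPhone-wide equivalent")
```

On these numbers the f4 target is two stops better than the borderline iPhone-wide case, and the 12-32mm at its long end is about one stop better.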