-

Posts

8,047 -


Everything posted by kye

-

Yes! I definitely agree with you - there must be optimisations that can be made. Please Panasonic!!

-

I recommend doing a proper WB. I've seen a bunch of side-by-side tests where they set both cameras to the same Kelvin WB and then nothing matched. I have no idea why the cameras wouldn't all match the WB, but it looks like they never do. I'd suggest trying a custom WB on a grey card and then trying the comparison again?

-

Interesting and comprehensive analysis of GoPro from an economics / business point of view. It's 43 minutes long, but is in plain English and well constructed. It's interesting how they've managed to stabilise in the last few years and are now segmenting their lineup, which seems to be a strategy that protects them from smartphones and other market pressures. Pity they didn't do that a decade ago...

-

Actually, the XH2S and P4K are great options when combined with the LUTs/powergrades from Juan Melara: https://juanmelara.com.au/products/bmpcc-4k-to-alexa-powergrade-and-luts https://juanmelara.com.au/products/x-h2sfuji-to-alexa-powergrade-and-luts Juan is a working DP and colourist, and is one of the most knowledgeable colourists online, especially when it comes to matching complex transformations. I've bought from him before and had good experiences. The A/B results speak for themselves too. Here's the Fuji A/B shots, and the P4K ones match even better.

-

My year 11 English teacher decided that there wasn't enough life education in school, so he told us stories, and let us sleep in class, but still taught us what we needed to pass the tests. One recurring theme he'd tell us stories about was how he'd get away with buying hifi equipment and smuggling it into his house without his wife finding out. The most memorable one was how he got away with buying a pair of large subwoofers, which were about 1 meter/yard cubed each. Here's the procedure he recommended:

- Buy them, but have the store hold them until you can arrange a good delivery time
- Subtly arrange for your wife to be away overnight (e.g. concert tickets for her and a friend to something she'll like but you won't and then a "girls night" stay at a hotel afterwards)
- Arrange to have the subwoofers delivered as soon as she's gone
- Put them in the corners of the room
- Cut some plywood to cover the sides of the subwoofers that aren't against the wall, so it's not visible
- Cover over the ply with a nice tablecloth and have it go all the way to the floor
- Put a pair of matching fancy trays and a pair of plants your wife likes and put them on top
- When your wife comes home, tell her you bought her some plants, if she asks about the boxes, just say they're boxes but change the subject
- After a few months, remove the false boxes and adjust the cloths so they don't interfere with the subwoofers, and wire up the subwoofers
- Only use the subwoofers on very low and only when she isn't home
- After another few months, you can start using the subwoofers while she is home, but only very very subtly
- If she ever notices, say they've been there for years and act surprised she doesn't remember - immediately start talking about their specifications and history of the company so that she is eager to change the subject

I enjoyed his English classes.

-

HA! You don't hear the colourists saying they can match the lesser cameras to the Alexa in post! The first thing they will say is about managing your expectations. The second thing will be about managing your expectations. The third thing might not be about that, but also might still be.... I have no idea what your situation is, but it might not be a bad strategy to just buy a set of matching cameras and then insist on using them on every job - you'd likely lose work because you're putting conditions on things but the final quality of the projects you shoot would go up. Then you could focus on optimising your craft rather than struggling with random camera after random camera on each job, making your work improve over time and making you more desirable...

-

The footage will look highly processed, even if you shoot ProRes, unless you shoot Apple Log. It's not the codec that disables all the processing, it's Apple Log. I'm pretty sure that means you can shoot Apple Log in h265 and get an image that isn't over-processed, without the huge file sizes, and you can record internally rather than needing an SSD etc.

-

It's all slightly relative too - I recall a video that Toneh made where he tested a bunch of cameras to see what level of exposure they got at the same ISO setting, and the results were that there was quite a lot of variation despite them all being tested in controlled conditions - IIRC the differences were more than a stop different across the range of cameras.

-

LOL. That can be taken two ways.. I'd suggest it's a subtle hint rather than a comment on the cameras! In discussions with colourists where the subject comes up, opinions I've seen range from "results are limited by the quality of the footage" through to "these cameras shouldn't ever have existed"!!

-

Ah, I missed that. Any idea what ISOs they correspond to? I'm guessing one is three stops above the other, and this document here lists some cameras' sensitivities and ISOs, so maybe 500 and 4000?
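For anyone wanting to check the stop arithmetic behind that guess, here is a minimal sketch. Each stop is a doubling of sensitivity, so three stops is a factor of 8. The function names are made up for illustration, and the 500 / 4000 pair is only the guess from the post, not a confirmed spec.

```python
import math


def iso_after_stops(base_iso, stops):
    """ISO reached by moving `stops` stops up (or down, if negative) from base_iso."""
    return base_iso * 2 ** stops


def stops_between(iso_low, iso_high):
    """Number of stops separating two ISO values (each stop is a doubling)."""
    return math.log2(iso_high / iso_low)


# The guessed pair from the post: 500 and 4000 (an assumption, not a spec).
print(iso_after_stops(500, 3))
print(stops_between(500, 4000))
```

So a lower ISO of 500 and a gap of three stops would indeed land on 4000.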

-

Actually, here's my nomination for best-bang-for-buck camera nowadays - the Blackmagic Micro Studio Camera 4K G2 just announced by BM. Here's why:

- It's tiny, and fits the entire ecosystem of the previous Micro models, including battery type (Canon LP-E6)
- It can record BRAW up to DCI 4K60 to USB-C.... so you don't need a big expensive external recorder, you can use any old HDMI monitor you like!
- BM specifies 13 stops of DR, the same as all their previous cinema cameras, and up from the 11 stops they quoted for the previous Micro Studio camera
- It has AF (it won't be fast, but the previous BMMCC didn't have AF, and the OG BMPCC one was quite useful)
- It's $995!

So, it's smaller and cheaper than the P4K, but appears to only have one native ISO. Interesting.

-

I was saying that, based on the lengths that product placement and cross-marketing and stealth / undercover marketing will go to these days, but points raised since then have dissuaded me from that perspective. The world is chaotic and sometimes things happen by accident, but the online camera ecosystem sure is eating it up! To be honest, despite me being critical of Sony for many aspects of their business and product strategies, and of the Sony fan-boys/fan-girls for being so one-sided and so loud ad nauseam (and frequently in the face of reality) the image that they're extracting from the sensors in the FX3 and FX30 is impressive, and I definitely respect the form factor for being small but also practical / reliable. Of course, from the company that has a virtual monopoly over sensor production, they'd be crazy not to make their products get the best from them and actively block other companies from getting access to the best results (or at least making them pay a lot for that level of performance).

-

Interesting. It did a pretty good job then. The thing I noticed first was the skin tones. This is what I always look at, and is most important. The one on the left has those nutty tan and brown hues and the one on the right has 'porange' (pink & orange) and red tones. The hues of the skin tones are the most important thing for me in images. I also noticed the differences in the green/cyan area top-right, which has greens on the left but none on the right. BUT, the skin tones are so important that they're the entire ballgame if you're cutting an interview between two angles. A really quick and dirty way to match skin tones when they're so far apart is to just rotate the hue of the entire image. This sounds like a brutal thing to do to an image, but it will never break the image, and we are so sensitive to skin tones that a rotation that makes a huge change to the skin tones is imperceptible on everything else in the image. If anything in the background does go off then you can easily do a Hue-vs curve adjustment on it. Hue curves are much more prone to inaccuracies and to stressing the image, so it's far preferable to rotate the hue of the whole image to suit the skin tones and then do the Hue curves on the background, rather than the other way around. This assumes that you've correctly white-balanced and exposed the cameras, and are doing colour management and transforms properly of course.
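To make the whole-image hue rotation concrete, here is a rough sketch in plain Python. It assumes a simple HSV model via the standard library's `colorsys`, which is not necessarily how any particular grading tool implements its hue control; the function names and the sample pixel values are invented for illustration.

```python
import colorsys


def rotate_hue(rgb, degrees):
    """Rotate the hue of one RGB pixel (floats in 0..1) by `degrees`."""
    r, g, b = rgb
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Hue is stored as 0..1, so convert degrees and wrap around the circle.
    h = (h + degrees / 360.0) % 1.0
    return colorsys.hsv_to_rgb(h, s, v)


def rotate_image_hue(pixels, degrees):
    """Apply the same hue rotation to every pixel: a global, uniform change."""
    return [rotate_hue(p, degrees) for p in pixels]


# Hypothetical sample values: a skin-ish tone and a neutral grey.
skin = (0.80, 0.55, 0.45)
grey = (0.50, 0.50, 0.50)

# A small negative rotation nudges the skin tone towards red/pink.
shifted = rotate_image_hue([skin, grey], -5.0)
print(shifted)
```

Because every pixel gets the same rotation, nothing is selected or keyed, which is why this kind of change is much gentler on the image than a Hue-vs curve. In this model, neutral pixels such as the grey have no saturation, so they come out unchanged.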

-

Yeah, and the C200s are smaller so maybe easier to wrangle? If you have two of them, it might even mean having two setups ready to go and not having to re-rig in the middle of a shoot perhaps?

-

Test video - GX85 with Standard profile and 12-35mm F2.8 wide open. A few shots were ISO 400 or 800 towards the end. SOOC: After grade: Despite being fully manual, the shots had significant colour variation. Not sure if it was the vND or what, but colour management is critical because it gives you the ability to adjust WB and exposure in post on 709 footage.

-

That would be interesting, but wouldn't it compete with their A6xxx line too much? Sadly, what you're talking about would actually make a half-decent vlogging camera. I suspect Sony is too spec-focused to do such a thing. Rather than remove resolutions and frame rates they'd rather just remove reliability.

-

Isn't that sort of the point though - the people in the fishbowl getting spammed are the people who might actually buy it, whereas the people outside our fishbowl who aren't reading these things aren't likely to buy it at all, so aren't worth trying to market to. I think it's a good example of well executed market segmentation.

-

So the old RED cameras don't get any credibility anymore? Wow.. considering how great the image is, and considering RED was never perceived as a consumer brand, it's a pretty fickle attitude! However did you guess?!?! ❤️❤️❤️ Actually, after recent releases, the second hand market for them might have gone vertical!

-

True, but they're gradually chipping away at it, and sometimes even in the right directions....

-

Continuing the discussion about overall size of total rig, I just found an interesting lens for APS-C - the Sigma 18-50mm F2.8.. it's interesting because it's got a fixed aperture and is small for an APS-C lens. Apparently it's a common lens for minimalist travel etc, when paired with the (very small) 11mm F1.8 prime for the ultra-wide / vlogging end.

-

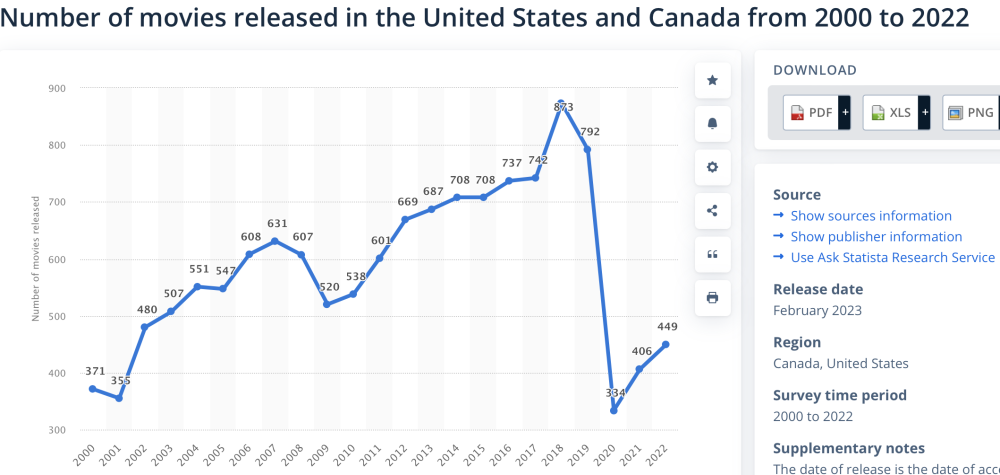

It was marketing. If it was shot on FX6 there would be zero press, but look at the social media frenzy that this film is enjoying - including this thread. There are normally multiple feature films released per day, but how much press are you reading on photography / videography websites about any of the others? https://www.statista.com/statistics/187122/movie-releases-in-north-america-since-2001/

-

Did you grade the FX30 to match the Alexa, or the other way around? I see differences, but they're subtle and not significant. IIRC it was Steve Yedlin that talked about the idea of the "cut back" test for camera matching. Ie, if you were to have a shot from camera A, then another shot, then CUT BACK to camera B, would you notice that A and B were different. I think this is a good test because in real film-making you don't normally cut directly between two cameras showing the same scene from the same angle, so there is some distance there, and so in A/B testing you shouldn't cut directly between the same scene and angle because it's too stringent a test. Yes, in theory you would cut between two angles of the same scene, but in lots of film-making you'd be changing the lighting setup between filming those angles, so getting a perfect match isn't relevant in these situations either.

-

I'd challenge your concept about worth.. all cameras are tools to do a job, and maybe that job is to create footage or to provide nostalgia or demonstrate taste or status, but they're all outcomes. I'd suggest that the Red Raven would excel at most of these. If you lust after the name then it would do that, if you wanted to demonstrate taste or status then it does that, and if you want to create footage with it, holy hell does it do that..... I am not really an expert about the Red ecosystem or what all the cool kids are doing, but it looks pretty good to me. To a certain extent it seems like Red is a bit like Apple, in that you're only cool if you have the latest model and because no-one wants yesterday's model they're all undervalued. For USD2K, you could make some incredible wedding or corporate videos or beauty or branding videos, and if you knew what you were doing you could make some incredible money doing that with an image that good.

-

I know of at least one group of professional colourists who are profiling some of these cameras themselves because the available information isn't sufficient to do proper transforms. You might think all the information is there, but it's not. I don't know enough about colour spaces to know why the information that's been provided isn't sufficient, but if it was then these transforms would have been built already and the professional colourists wouldn't be having to grade these files manually, or build their own transforms for them.

-

The A7CR sensor is ~9500 pixels wide, so at 3x it's only looking at an area ~3170 pixels wide, so almost 4K but not quite. The elephant in the room is that it's a 40mm - 120mm, instead of the wider range on the others. Of course, the Sony 24mm F2.8 lens is also very small and the same math would apply to zooming with it. The level of detail visible is due to the processing of the data from the photosites, not the number of them. I do really like the idea of having a high-resolution sensor and cropping digitally to emulate a longer focal length, as I have repeatedly mentioned when talking about the 2x digital zoom on the Panny cameras, but ultimately I want all the flexibility I can get out of focal lengths. My dream setup now is the GX85 and 14-140mm lens. At only a little larger this setup is still 4K at 10x and isn't missing the 24-40mm zoom range either. Your FF setups are approaching SD at these zoom levels.... I have also found that when cropping into a lens you magnify all the aberrations of that lens. I'm comparing quite optically compromised variable aperture zooms with your (likely) highly tuned and very sharp primes, but when the image is blown up to 3x size then I'm not sure which would win - zooms are compromised but not necessarily that compromised. I also realise these comparisons don't take into account the low-light performance of the camera, the AF, DoF capabilities, and lots of other advantages of full-frame, but believe me when I tell you that users of smaller-sensor cameras are all very very aware of these compromises, but size is of such priority that it takes precedence. One of the things that I find hugely ironic is that if I had $10K to spend on a camera, the GX85 might still be the best choice. If I had $100,000? Still the GX85. If I had $1,000,000? Maybe I'd call up Panasonic and ask for them to CUSTOM MAKE me a camera! Seriously, when size is your primary limitation, your options really are very limited.
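The crop arithmetic above can be sketched in a few lines. This assumes the approximate ~9500 pixel sensor width quoted in the post and a UHD width of 3840 pixels; the function and constant names are just for illustration.

```python
UHD_WIDTH_PX = 3840  # width of a UHD "4K" frame


def cropped_width(sensor_width_px, zoom):
    """Width in pixels of the sensor area used at a given digital zoom factor."""
    return sensor_width_px / zoom


def equivalent_focal_length(focal_mm, zoom):
    """Focal length that a digital crop of `zoom` emulates."""
    return focal_mm * zoom


def covers_uhd(sensor_width_px, zoom):
    """True if the cropped area still has at least UHD width without upscaling."""
    return cropped_width(sensor_width_px, zoom) >= UHD_WIDTH_PX


sensor_px = 9500  # approximate figure from the post, not an exact spec

for zoom in (1, 2, 3, 10):
    width = cropped_width(sensor_px, zoom)
    print(zoom, round(width), equivalent_focal_length(40, zoom), covers_uhd(sensor_px, zoom))
```

At 3x the crop is roughly 3170 pixels wide, just short of UHD, and a 40mm lens behaves like a 120mm. At 10x only about 950 pixels remain, which is where the "approaching SD" comment comes from.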