
Posts posted by kye

-

-

5 minutes ago, jhnkng said:

I am hugely excited for this camera, but having owned both the Pocket V1 and the Micro, I’m a touch wary about what else I have to buy to make the camera actually work through the day. Whether it’s a ton of batteries AND enough chargers to be able to charge them all without me having to wake up at 3am to do a battery swap, or an OLPF for the Micro, there’s always *something else* that needs to be purchased. I’d love Blackmagic to sell an LP-E6 fast charger that can be powered off USB-C PD, especially if it can be run off a USB-C PD compatible power bank (like ones that can charge USB-C laptops), so I can put a spent battery into the charger and keep it in my bag through the day. Being able to rotate 3-4 batteries for all day location shooting rather than having to manage 8 batteries makes a HUGE difference for multiday shoots.

Is this what you want?

https://www.amazon.com/gp/product/B00QGJ91A4/ref=oh_aui_detailpage_o02_s00?ie=UTF8&psc=1

It seems that it takes the same batteries as the XC10, and I have bought and tested the above and it seems to work ok.

-

5 minutes ago, webrunner5 said:

It just proves to me how cheap you can really make a damn good camera in this day and age.

I disagree. Canon made many damned good cameras (once their potential is unlocked with ML) and at very reasonable prices.

The BMPCC is still excellent value for money, but not because BM managed to make hardware for that price, it's because they were willing to unlock the potential of the hardware.

-

10 hours ago, mkabi said:

I'm sure people at RED going - WTF???? 4K/60p RAW at $1295????

They won't be the only ones!!

-

8 hours ago, Don Kotlos said:

I didn't see that correlation anywhere, but yes I agree that a "good" 1080p is more than enough. But unfortunately with most cameras in this price segment (other than BM and GH5/s) to get a good 1080p means you have to shoot at 4K and convert to 1080p in post.

Absolutely, and to expand on that, I think it's a sliding scale. If the 1080p is great (eg, BMPCC) then use it straight out, and if the camera/codec is rubbish then you need to downscale 4K to 1080p for it to be of good quality, but there are cameras in the middle where the quality is sufficient to shoot 3K or 2.5K and downscale to 1080p with good results. In these cases there is room to crop into the image a bit and still have a good 1080p delivery. Cropping isn't everyone's cup of tea, but it allows refinement of composition, and also things like (light) stabilisation in post without really hurting the 1080p, so it makes things a bit more usable and is relevant artistically.
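To put rough numbers on that crop headroom, here's a minimal sketch. It assumes a 1920-pixel-wide 1080p delivery and treats "2.5K" and "3K" as 2560 and 3072 pixels wide; those are hypothetical example widths, since real cameras vary. It shows how far you can punch in before dropping below 1080p, and how many pixels are left on each side for reframing or stabilisation.

```python
DELIVERY_WIDTH = 1920  # 1080p delivery width in pixels


def max_punch_in(source_width: int, delivery_width: int = DELIVERY_WIDTH) -> float:
    """Largest zoom factor before the crop drops below delivery resolution."""
    return source_width / delivery_width


def spare_pixels_per_side(source_width: int, delivery_width: int = DELIVERY_WIDTH) -> int:
    """Horizontal pixels left on each side of a centred 1080p crop,
    usable for reframing or for a stabiliser to move the frame around."""
    return (source_width - delivery_width) // 2


# Hypothetical source widths: "2.5K", "3K" and UHD.
for width in (2560, 3072, 3840):
    print(width, round(max_punch_in(width), 2), spare_pixels_per_side(width))
```

A 2.5K source gives roughly a 1.33x punch-in (320 spare pixels per side), while UHD gives a full 2x.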

-

1 hour ago, Kisaha said:

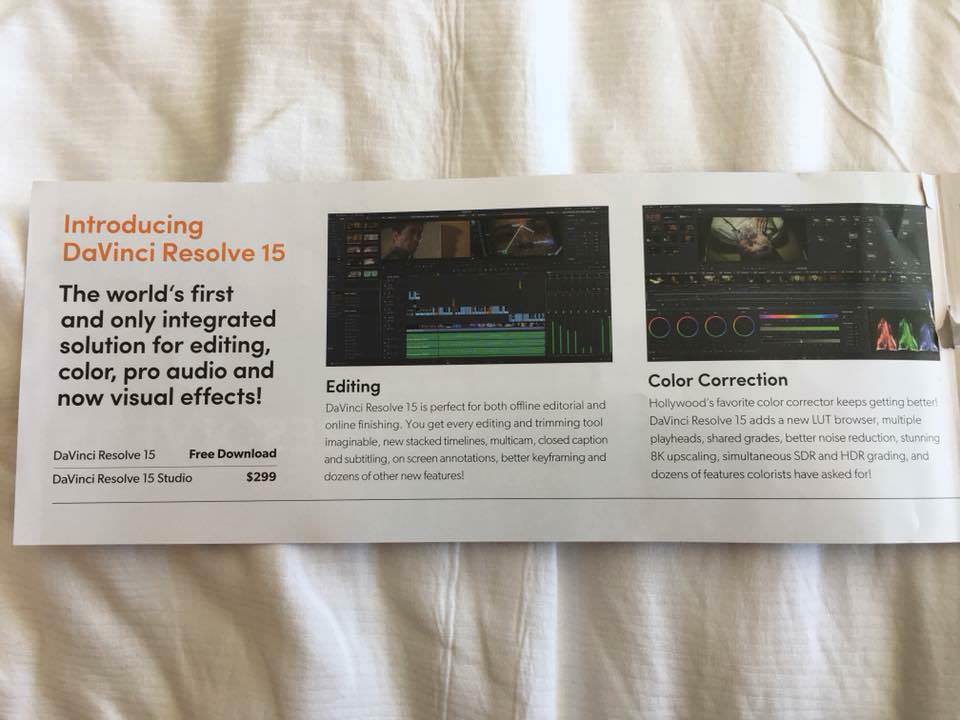

There is a new Adobe version, which I haven't download yet (I have paid dearly updating Adobe apps before! huge problems and late deliveries, almost non deliveries, because of them) and I read that this is a major release (but that's what they said for previous ones too!). Audio was an issue in previous Resolve editions. Izotope is top, I am not sure anyone can reach that, or should (as it is a very specialized program, and it is good to have those!).

We will see, I will try the 15 when it is available with a small project in the near future. Adobe disappoints more times than not.

My impression is that Resolve 15 will be an all-in-one that features a colour module at v15, an editor at v3, Fairlight at whatever version it is but at integration v2, and Fusion at whatever version it is but at integration v1. I chose it for myself because I don't need (or even understand) most of the features that a pro editor would want or need, but I do want much more sophisticated colour capabilities (I view the colour module as Adobe Lightroom for video with full tracking).

If you're someone that wants or needs video editing features of a mature editing platform then I'd be careful switching to Resolve and make sure you do your research first. It's about strengths and weaknesses. Also, be really specific with researching what particular features you use - it might work great for most things but if the things you rely on are buggy or whatever then you won't have a good experience.

I do believe, however, that Resolve is the future. I have heard from multiple others that Resolve is improving many times faster than the other options, and while I've only been a user since v12.5, in that time they've added Fairlight and Fusion, so even in that short period they're moving incredibly quickly. I can also attest to it getting less buggy over time. Resolve v12.5 used to crash outright on me about once every 30-60 minutes of use (it saves regularly, so normally it's not a big deal), and it would start acting funny and need restarting quite a bit too. v14 almost completely eliminated the crashes, but it still goes funny every now and then, with hotkeys suddenly not working and all manner of other things just not doing what you'd expect.

It's definitely a work in progress, but to give you an idea about what life in Resolve is like, when I saw Adobe release the Lumetri colour panels I immediately thought "is that all?", then I saw the reaction from YouTubers and laughed out loud. That upgrade is laughably minute. It's the right stuff for sure, and very usable, but I was using things in Resolve that Lumetri doesn't have within the first 20 minutes of having installed Resolve, and use them on most grades. It's like people getting excited over being given a hotkey for splitting clips.

-

2 minutes ago, anonim said:

I'd say - by large margin it is star of the show. For 300$ - if Resolve's technicians somehow manage to integrate Fusion's engine without mid-step of transcode, it is not just obvious Adobe killer, but and Flame also.

It's only $300 if you're not a Resolve license holder already. If you are, then you are likely to get free upgrades for a long time (maybe forever). Resolve users who bought their license at v8 can still use it on v14. I bought mine as a v12 license, but it was second hand, so who knows.

In a way I don't even mind a transcode, just having it integrated would be wonderful. I'm new enough to the game that this whole idea of round-tripping seems like word processing by choosing fonts and colours and page layouts in individual applications.

8 minutes ago, jonpais said:

@kye Simultaneous SDR and HDR grading? I wonder how that works. hmmm

I'm the last person who would know, but perhaps they're talking about ACES support? I'm not sure how much you know about ACES, but in theory you capture footage on whatever devices you have and run each through an input profile to bring it into the ACES colour space; then you grade however you like, and finally you run it through whatever output profile you like, for example Rec.709 or Rec.2020. Of course, you never actually see what the ACES colour space looks like, because you're always monitoring through an output profile suited to your monitor. IIRC they say that if you grade on a Rec.709 monitor and then output with a Rec.2020 profile, it will take the dynamic range and colour space and squeeze it to fit the output space. I say squeeze because ACES images are stored as 16-bit floating point, with a gamut that encloses every colour the human eye can see and far more dynamic range than any display, so everything is a squeeze from there. I think they designed it that way so that it can keep pace with future technology advancements.

I've looked into ACES a little as a way to try and match my different cameras, but most of them don't have profiles yet.
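The "grade once, output to whatever profile you like" idea can be sketched in a few lines. This is a simplified stand-in, not ACES itself. Real ACES uses its own primaries and Output Transforms that tone-map and gamut-map. Here the working space is assumed to be linear Rec.2020, the grade is a hypothetical one-stop push, and out-of-gamut values are simply clipped, which is the crudest possible "squeeze". The matrix is the ITU-R BT.2087 linear Rec.709 to Rec.2020 conversion, rounded to four decimals, and it is inverted numerically to go the other way.

```python
# ITU-R BT.2087: linear Rec.709 RGB -> linear Rec.2020 RGB (rounded to 4 dp).
M_709_TO_2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def mat_vec(m, v):
    """Multiply a 3x3 matrix by an RGB triple."""
    return tuple(sum(m[row][col] * v[col] for col in range(3)) for row in range(3))


def invert3(m):
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return (
        ((e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det),
        ((f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det),
        ((d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det),
    )


M_2020_TO_709 = invert3(M_709_TO_2020)


def grade(rgb):
    # Hypothetical grade: a one-stop exposure push on scene-linear values.
    return tuple(2.0 * c for c in rgb)


def clip(rgb):
    # Crude "squeeze": real output transforms tone-map and gamut-map instead.
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)


def render(rgb_2020, target):
    """Grade once in the working space, then convert for each deliverable."""
    graded = grade(rgb_2020)
    if target == "rec2020":
        return clip(graded)
    if target == "rec709":
        return clip(mat_vec(M_2020_TO_709, graded))
    raise ValueError(f"unknown target: {target}")


print(render((0.0, 0.4, 0.0), "rec2020"))  # saturated green fits in Rec.2020
print(render((0.0, 0.4, 0.0), "rec709"))   # out of Rec.709 gamut, so it gets clipped
```

The grade is written once. Only the final conversion differs per deliverable, and a very saturated Rec.2020 green has no exact Rec.709 equivalent. That is why the output side needs some form of squeeze.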

-

2 hours ago, anonim said:

This is probably the highlight of the entire show for me (and we don't even know most of what is being announced yet)..

This is awesome for a number of reasons:

- My workflow focuses on capturing cleanly and then working hard in post to create the artistic parts, so this is an upgrade to probably the largest component of my workflow

- It's free

- It now includes Fusion, which apparently has much better trackers than Resolve, which for my stabilisation requirements (which are crazy) will be fantastic

- Did I mention it's free?

- It has all sorts of stuff I wouldn't know to ask for. Like "Multiple playheads".. ummm, what?

-

What's that thing where the hot-shoe mount normally goes? Is it a 1/4-20 hole? Or some kind of sensor?

My impression is that it looks like quite a functional camera, and for those that run-and-gun, might be a body that doesn't need as much rig added to it, which would make it a bit more low-key which is great for not attracting attention.

Something I've seen a lot on YT and with photographers is that once a camera goes above a certain size, you basically can't film in public, as 'public officials' hassle you for film permits.

-

21 hours ago, Castorp said:

Apples reasons for developing their own solutions for the entire chain has a lot to do with reliability. Their reliability comes from controlling all the bits and making sure they work together.

The sum is a system that is greater than the sum of its parts because there is coherent intention throughout.

I think it’s a bit cynical to presume it’s all about “locking in” it’s users.

When I was transitioning to Mac I had a number of different things that I wanted to link together between Apple and non-Apple providers. Every issue I had throughout my transition was an Apple/non-Apple incompatibility or clash. We are talking about things like Google calendars, invitations from Outlook/Exchange, messaging, etc. Almost any time I wanted to access a service with my Apple and non-Apple devices it was either that it wouldn't work on the Apple (but the service worked on most of the other brands, so the non-Apple supplier was compliant with standards etc), or if the issue was that the non-Apple device I owned couldn't access the Apple service then it was because Apple had locked them out.

When I made the final changes to all-Apple those problems basically went away, and not only that but I got a bunch more shared functionality than I had originally been trying to achieve.

With very few exceptions, when it comes to Apple-provided features my iPhone and MBP have the same functionality (messaging, making and receiving phone calls, calendars, contacts, FaceTime, mail, Safari bookmarks and history, notes, etc), but to this day I have to use a third-party app to accept meeting invitations from Outlook.

Tell me how they aren't preferencing their own ecosystem above others when the ability to make and receive phone calls on a laptop (or my Apple Watch) is more important than receiving Outlook meeting invitations...

It's a bit like saying that it's cynical to say that the banks are happy to take your money in error, but when you ask around the mistakes are almost always in their favour.

-

8 hours ago, Trek of Joy said:

After moving to FCPx my workflow has sped up considerably.

When I did my PC v Mac analysis the software didn't come into it - I didn't change software at all. I did a big analysis of what I wanted from a computer, including listing all software applications. It surprised me, but almost every piece of software I use is available on both platforms.

The analysis didn't really involve much difference on the hardware side either (I was looking at laptops), and the decision came down to integration. For example, my entire family uses Apple's Messages (iMessage) and there's no client for Windows, which makes communicating with them more difficult. Calendar integration is one of those things that *should* work but that I've had issues with in the past. Etc. I literally decided on PC v Mac based on ecosystem alone.

8 hours ago, Robert Collins said:

In my experience, PC v Mac threads never really die until people end up debating the benefits of Linux

Linux is excellent, but I've passed the stage in my career where I want to spend time being a systems administrator as well as a user. This was one of the main things with MS. MS makes you spend time as a sysadmin because things just don't work, Linux makes you spend time as a sysadmin because there's a huge learning curve in getting used to everything. There's still a learning curve for MS and Mac but I've already climbed those and don't wish to climb another.

Besides, OS X has Unix at the back-end, and whenever I find myself choked by Apple's "our way or the highway" attitude I just pull up a terminal window and implement a work-around. Most of the benefits and none of the sysadmin-ness.

-

19 minutes ago, webrunner5 said:

Sony has been about as big of a horses ass as any of them for goofy standards. They have become a bit more Normal lately, but this proprietary stuff just sucks for the average Joe, Jose' out there.

Most of the big names are doing it.

The strategy when you want to get on top is to use your advantage of being smaller, with less technical 'heritage' to hold you back, and to invest some of your potential profits in a better value-for-money product to grow your market share.

Then once you're at the top, the strategy becomes to push the boundaries of anti-competitive laws in order to squash the little people trying to steal your market share. Freeze them out by technology licensing, do deals with them and then refuse to pay and bankrupt them in court, or if they have patents then buy them out and shelve the tech. Lots of strategies, and lots of hours spent by smart people getting paid by the big bully to keep control of the playground. To badly paraphrase Captain Jack: "There is only what a <company> can do and what a <company> can't do".

-

38 minutes ago, Juxx989 said:

they would really have to fumble this in a Epic lengedary fashion for me not to have is before years out.(most likely a lot sooner)

I'd suggest that people not buying one because they fumbled it is less likely than people not buying one because other, more tempting options also appear. There's lots going on in this price range (when you consider all the support equipment required, they're up against lots of other, seemingly more expensive, setups).

-

I moved to Apple from PC / Android and I did so knowing that I was paying more for a given level of hardware performance.

What I am getting, however, is a higher level of personal performance, and this is what I am paying extra for.

The amount of time I spent in the PC world struggling to get Microsoft Windows to properly use the BrandA driver for the BrandA chip on the motherboard with the BrandB driver for the BrandB plug-in interface card to connect to the BrandC device I want to use... On Mac I spend time working on my work, not on trying to get my computer to do what I tell it.

My dad was in charge of the PCs for a large educational institution and once had the pleasure of ordering a custom-built server (~$10k worth of high-end hardware). After a month of not being able to make it work, he sent it back to the distributor. Luckily the distributor was happy to take it back, as the school bought large amounts of equipment from them. The server wouldn't complete an OS installation. It was a known issue, and the manufacturers of the RAID controller, the HDD and the motherboard were all blaming each other for why the combination didn't work.

At Apple, if something doesn't work, someone gets yelled at and told to fix it, which works because they control all the moving pieces.

You will notice that Apple vs PC articles that recommend PC over Mac often point out that hardware performance (ie, MFLOPS or certain chipsets) is cheaper on PC, and that for every good feature a Mac has there's a PC with a better specification, etc. The flaws in this logic are that CPU speed is not workflow speed, and that Laptop A might have a better screen and Laptop B a better battery life, but you can't buy a laptop with the screen from A and the battery from B.

Articles that recommend Mac over PC often talk about how 'things just work', which unfortunately isn't as true with the last few OS X versions as it used to be, but I sure as hell don't spend time on forums reading about manufacturers blaming each other for why my mouse won't work.

In case I sound like an Apple fanboy, I'm really not. Apple are monopolistic corporate criminals who are large enough to exploit the weaknesses in international taxation law to basically shaft every country they do business in. Doing that would justifiably put any of us in jail in a matter of minutes, but they're big enough to get away with it. The reason I buy their stuff is that they suck slightly less than the alternatives.

-

I see this from a slightly different point of view.

The main "pro" that I see is that it has the potential to improve workflows for those who are already using the compatible equipment (FCPX and external recorders) or are willing to change their workflow to do so.

The main "con" that I see is that this is quite a deliberate move on Apple's behalf to further separate themselves and their ecosystem from being globally compatible. As someone who is now an Apple user (MBP, iPhone, iPads) but didn't used to be (PC, Android), I became acutely aware of how Apple will deliberately do things to restrict global compatibility and drive people to an all-Apple ecosystem. My disdain for Apple is significant, and perhaps only surpassed by my disdain for Microsoft, who are also masters of playing this game, which is one of the main reasons I changed platforms.

It's not the creation of a new codec that is the risk here; it's that it makes it more likely that other, more open codecs will not receive as much attention or support. We have all suffered as innocent bystanders in 'format wars', from Blu-ray v HD DVD to VHS v Beta and before that with Quad etc. Some of us have also suffered at the hands of a feature that promised interoperability but was unusable due to some kind of bug, which the manufacturer unfortunately never fixed, and we lost months or years waiting and hoping that it would be rectified.

Manufacturers are out for themselves and will shaft the customer as much as they can get away with, while still gaining sales by being the 'least worst'. Take Canon and ML as an example of what was possible vs what was delivered.

-

5 hours ago, jonpais said:

I don’t know this guy, but for some reason, he’s offended that I’m retired and living in Vietnam. He ridicules my work and contributions behind a veil of anonymity. He belittles a dozen or more other forum members for adding useful comments to the subject. He has the audacity to instruct the founder of the website how to run his business. And rather than sharing information, he has spent most of his time since joining EOSHD attacking other members, either for their age, their level of expertise, or because of their interest in still photography.

I tend to see people as either contributing to a conversation (be it information, questioning, humour, etc) or not contributing (either time-wasting, or being negative, driving agendas, etc).

The best response to those not contributing is no response. I think there was actually some science done on this, but I'm not 100% sure. The problem is that we are always so tempted to reply that the urge often gets the best of us. Another wonderful symptom of the human condition!

The still you posted above looks great Jon, is this part of a short or feature, or camera test of some kind? I particularly liked the combination of saturated blues and desaturated browns, as well as the key lighting (Rembrandt lighting IIRC?).

-

7 hours ago, kidzrevil said:

I only shoot 14 bit. The 2-4 bits you chop off has a massive amount of data in them. The most I’ll do is convert the 14 bit file to a 12 bit cdng

Where/how do you see the data in those 2-4 bits? I mean, is it highlights, shadows, saturation, 'thickness' of image...? I compared bit depths and couldn't see any difference, but I also don't know where to look.
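One place the extra bits might show up: in a linear raw encoding, each stop down halves the signal, so it also halves the number of code values describing that stop. The sketch below counts code values per stop. It assumes a purely linear encoding with no black-level offset. Real raw files have a black level and noise, so treat this as a rough illustration and not a description of ML RAW's exact format.

```python
from typing import List


def codes_per_stop(bits: int, stops: int = 8) -> List[int]:
    """Code values available in each stop below clipping, for a purely
    linear encoding with no black-level offset (a simplifying assumption)."""
    counts = []
    top = 2 ** bits  # one past the highest code value
    for _ in range(stops):
        bottom = top // 2  # one stop down = half the linear signal
        counts.append(top - bottom)
        top = bottom
    return counts


for bits in (10, 12, 14):
    print(f"{bits}-bit:", codes_per_stop(bits))
```

Every extra bit doubles the code values in every stop. In the brightest stops even 10-bit has hundreds of codes, though, so the difference would mostly be expected deep in the shadows, or after big pushes in the grade, where 10-bit is down to a handful of codes per stop.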

-

1 hour ago, cantsin said:

- 1" 4K 400 ISO 12-stop sensor from the Micro Studio/URSA Broadcast + 1" 1080p 800 ISO 14-stop sensor from the old Pocket/Micro Cinema in swappable sensor+mount units, improved 4k sensor in the future.

- 24-30fps 4K raw (because of cooling and bandwidth constraints), higher framerates in 1080p (and sensor crop).

- ProRes Raw + all other conventional ProRes codecs + CinemaDNG in different quality/compression levels.

- Likely a 4K DNG stills mode.

- Active MFT mount (as before).

- LP-E6N battery like the Micro Cinema Camera, short battery life in 4K.

- Fixed screen of mediocre quality (cost-cutting measure as with the previous Pocket), no touchscreen (as indicated by the three function buttons on top of the camera).

- False color in addition to focus peaking and zebras, maybe a display option for anamorphic desequeezing.

- SD card for storage, requiring 300 MB/s Sandisk Extreme Pro cards for 4K raw recording.

- Full-size, robust HDMI port (like the Micro, unlike the old Pocket).

- Mediocre audio, bad preamps, bad in-camera mic (as with all BM cameras).

- No OLPF, UV/IR filtration still required.

- Maybe a Bluetooth interface + smartphone/tablet camera control app.

- Firmware will be similar or the same as the URSA Mini camera OS, with the same improved user interface.

- Larger housing with better grip, maybe some clever design to improve handheld shooting.

- Price at no more than $1500.

So, in short, a camera that is excellent when it is used as one third of a cine setup, but not a good standalone performer...?
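The SD-card bullet in the quoted list can be sanity-checked with simple arithmetic. This is a minimal sketch assuming a DCI 4K frame (4096 x 2160), 12 bits per pixel and 24 fps. Those are hypothetical example values, not confirmed specs. The sketch also ignores metadata, audio and container overhead.

```python
def raw_data_rate_mb_s(width, height, bits_per_pixel, fps, compression_ratio=1.0):
    """Approximate raw video data rate in megabytes (10**6 bytes) per second,
    ignoring metadata, audio and container overhead."""
    bits_per_frame = width * height * bits_per_pixel
    bytes_per_second = bits_per_frame / 8 * fps / compression_ratio
    return bytes_per_second / 1e6


# Hypothetical example: DCI 4K, 12-bit, 24 fps.
print(round(raw_data_rate_mb_s(4096, 2160, 12, 24), 1))       # uncompressed
print(round(raw_data_rate_mb_s(4096, 2160, 12, 24, 3.0), 1))  # 3:1 compression
```

Uncompressed, that works out to about 318 MB/s, which is already above the 300 MB/s card figure. So some form of compression would presumably be needed for 4K raw to SD.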

-

8 hours ago, kidzrevil said:

@kye the workflow before compression may be non destructive but you are still shifting a lot of data around. These transforms exaggerate noise and other artifacts in the image. Its better to use floating point transforms than the hardcoded transformations in LUT’s for color space conversions. Davinci Resolve Color Management & ACES are floating pointing operations that are far superior to LUT’s especially when matching cameras. Filmconvert does a good job too but I’ve found variations in their profiles.

I did a bit of reading before my previous reply and I didn't get a straight answer on LUTs vs floating point algorithms. The logic on LUTs was that they have two main problems: a lack of data points, and potential issues in-between the data points.

The thread that I read included people saying that even a LUT with thousands of data points is still the equivalent of a curve with only ten control points on it, so in terms of matching the foibles of a sensor put through a camera's internal profile transforms, it is potentially going to have a bit of error.

The second issue, about what happens in-between the points, isn't that interpolation isn't possible (with all the variations of linear / quadratic / polynomial / etc functions available). It's that there's no consistency between standards, so although your program might do a good job of interpolation, who knows what the software the LUT was designed in was doing, or whether they match. This would become much more important the fewer data points the LUT has.

What I took from that was that RCM and ACES are OK and LUTs are a question mark. In the end the proof is in the pudding, so it just comes down to whether you prefer the final result. When I think about this stuff I get enthusiastic about taking some time to reverse-engineer what a particular LUT or transform is doing so I can learn from it, but then life happens and my energy fades... one day I might get around to it.
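The "lack of data points" problem can be seen with a 1D stand-in. A 3D LUT with 17 points per axis holds 17**3 = 4,913 entries, yet along any single axis it only samples the curve 17 times. That is probably what the "thousands of data points, ten control points" comment was getting at. The sketch samples a hypothetical gamma curve at N points and linearly interpolates between them. Linear is only one of several schemes, and real software may use trilinear or tetrahedral interpolation in 3D. It then measures the worst-case error against the exact floating-point curve.

```python
from typing import List

GAMMA = 2.4  # hypothetical transfer curve standing in for a camera transform


def curve(x: float) -> float:
    return x ** (1 / GAMMA)


def build_lut(size: int) -> List[float]:
    """Sample the curve at `size` evenly spaced points on [0, 1]."""
    return [curve(i / (size - 1)) for i in range(size)]


def apply_lut(lut: List[float], x: float) -> float:
    """Linear interpolation between the two nearest LUT entries."""
    x = min(max(x, 0.0), 1.0)        # the LUT's input domain is [0, 1]
    pos = x * (len(lut) - 1)
    i = min(int(pos), len(lut) - 2)  # index of the lower neighbour
    frac = pos - i
    return lut[i] + (lut[i + 1] - lut[i]) * frac


def max_error(size: int, samples: int = 10_000) -> float:
    """Worst-case difference between the LUT and the exact curve."""
    lut = build_lut(size)
    return max(abs(apply_lut(lut, s / samples) - curve(s / samples))
               for s in range(samples + 1))


for size in (17, 33, 65):
    print(f"{size}-point axis ({size ** 3} entries in a 3D cube): "
          f"max error {max_error(size):.4f}")
```

The error is zero at the sample points and largest between them. For this curve it is concentrated near black, where the curve bends most sharply. Denser LUTs shrink the error, but a floating-point transform evaluates the exact function instead.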

I haven't forgotten my homework.

-

27 minutes ago, JordanWright said:

Yeah swappable mounts make sense as it could potentially use the Ursa mini pro mount

There is logic to this as there is convenience in a manufacturer providing compatibility across their range of cameras. Not only does it mean that on set you can have the A-cam and B-cam (and C-cam?) arrangement sharing lenses etc, but it also means that when someone buys into a system at the lower end there's less friction for them to upgrade within the system.

I have no idea what compatibility there is within their current range, but this would be an opportunity to further align things.

-

54 minutes ago, kidzrevil said:

@kye sheesh thats a lot of transforms for 10 bit low res footage. Your end result looks great but I challenge you to get the look you want in 3 nodes ! You can do it !!! Your 10bit footage will look a lot better trust me. You should only transform your color space once in your grading chain

Challenge accepted!

The workflow comes from here.

I'm currently trying to get a workflow that allows camera matching between all my setups and either this setup with the Colour Space Transform plugin or potentially ACES transforms seem like the best candidates. Unfortunately I haven't found profiles for ML RAW, iPhone, or Protune, so I'm still left to rely on my (modest) grading abilities.

In terms of colour space transforms, don't confuse them with LUTs, as they have advantages; not clipping the data is one of them. The above suggests that they are lossless transformations, so they don't degrade signal quality (and Resolve has a very high bit depth internally).
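Here's a minimal sketch of the "not clipping" advantage, under stated assumptions. The encode/decode pair is a hypothetical pure-gamma curve standing in for a colour space transform computed in floating point. `lut_encode` stands in for a LUT whose input domain is 0 to 1. Many LUT files define such a domain, but whether a particular LUT clips depends on the file and on the software applying it.

```python
GAMMA = 2.4  # hypothetical encoding curve


def encode(x: float) -> float:
    """Floating-point transform: defined for any non-negative value,
    including 'super-whites' above 1.0."""
    return x ** (1 / GAMMA)


def decode(y: float) -> float:
    """Exact inverse of encode."""
    return y ** GAMMA


def lut_encode(x: float) -> float:
    """Hypothetical LUT with a [0, 1] input domain: values outside it are
    clamped before lookup (interpolation error ignored to isolate clipping)."""
    return encode(min(max(x, 0.0), 1.0))


highlight = 1.8  # a highlight brighter than the nominal 1.0 white point
print(decode(encode(highlight)))      # recovered by the inverse
print(decode(lut_encode(highlight)))  # clipped to 1.0
```

The floating-point round trip gets the 1.8 highlight back. The clamped path returns 1.0, so any detail above white is gone before the next node even sees it.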

-

51 minutes ago, Kisaha said:

Beware, maybe a new XC camera with EOS M mount, is around the corner (possibly not!) with touch dual pixel AF and all the good Canon things, and usual 8bit codecs!

An XC update could add another contender into this space for sure.

One thing that I think is a big deal that people aren't really talking about in the context of new cameras is what ML RAW is doing for existing Canon cameras.

I had the 700D and wanted better video quality, so I was looking at the XC10 / BMPCC / RX10 / etc, but now my new 700D + ML RAW + Sigma 18-35 + Rode VideoMicro setup has really changed how I think about that camera. In some ways it's far surpassed the BMPCC: not quite in image quality, but definitely in battery life and sound quality. And with ML RAW Crop mode, my 18-35 is also an 87-169mm (full-frame equivalent) without a loss of resolution and without having to cart extra lenses around (although the 18-35 is as large and heavy as two lenses!).
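The 87-169mm figure can be reproduced with simple arithmetic. This sketch assumes a 1.61x APS-C crop factor, a 5184-pixel-wide sensor, and a 1:1 crop window 1728 pixels wide, matching the "ML RAW 1728" setting mentioned elsewhere in these posts. Treat all three as illustrative assumptions and not confirmed ML specs.

```python
def ff_equivalent_mm(focal_mm, crop_factor, sensor_width_px, recorded_width_px):
    """Full-frame-equivalent focal length when recording a 1:1 pixel window
    that is narrower than the full sensor width."""
    window_crop = sensor_width_px / recorded_width_px  # extra crop from the window
    return focal_mm * crop_factor * window_crop


# Assumed values: 1.61x APS-C crop, 5184-px sensor, 1728-px 1:1 window.
for focal in (18, 35):
    print(focal, "mm ->", round(ff_equivalent_mm(focal, 1.61, 5184, 1728)), "mm")
```

Because the window is read 1:1 from the sensor, the extra 3x reach comes without upscaling. That is why it costs no resolution relative to the recorded size.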

I have no idea if it's possible for ML, but if they could make a module that did full-sensor readouts (5K video) and saved compressed files with variable bitrates, that might take it into another league again.

-

Mini Slider

In: Cameras

38 minutes ago, Ty Harper said:

Looks like Edelkrone's finally did it: https://edelkrone.com/products/motion-box

Holy wow.. that is a KILLER product. Technology is moving forwards in leaps and bounds, it really is incredible!

I don't need one, I don't need one, I don't need one, I don't need one, I don't need one, I don't need one, I don't need one, I don't need one...... *mutters*

-

23 hours ago, elgabogomez said:

I’m not defending that clip, first time I saw it I abandoned it halfway. But it’s not clipped, not blown, not over sharpened, yes the skin tones are not handled nicely but that’s the grade not the camera. The lack of “cinematic “ in that video is not technical, is just not saying much and it’s not even a camera test that brings much to the conversation. So my point is that cinematic look needs as much dynamic range as pancakes need butter...

I recently watched an interview with a DoP talking about equipment and he basically said that his preference is that the equipment not impart anything on the 'look' of the film, and therefore he chooses equipment that will accurately capture what is put in front of it. Therefore it was about operating the camera like a technician, choosing lenses that are sharp with minimal distortion, and faithfully executing the direction of the others on set who are artistic, like director etc. His view was that the look of a film is created by the stuff in front of the camera and what is done in post.

I've just spent about 20 minutes trying to find the link and FML I can't find it.. I consume too much from too many sources!

He did mention that some people like using vintage lenses and creative filters etc, and didn't criticise that approach. I must admit that I personally find this perspective makes sense. I've looked at things like the Tiffen Mist filters and decided that I can do a 'good enough' emulation of them in post (which led me to include the Glow OFX plugin in my workflow), with the added benefit that the effect isn't baked into the footage, so I can tweak it in post to get it how I like rather than being stuck with what the filter gives me.
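To show roughly how a post glow can stand in for a diffusion filter, here is a generic bloom: threshold the highlights, blur them, and add them back. This is one common way such effects are built. It is not how Resolve's Glow OFX plugin is implemented, and the function and parameter names are hypothetical. It works on a single row of linear luminance values to keep it short.

```python
from typing import List


def glow(row: List[float], threshold: float = 0.8, radius: int = 2,
         strength: float = 0.5) -> List[float]:
    """Generic bloom on one row of linear luminance values."""
    n = len(row)
    # 1. Keep only the energy above the threshold.
    highlights = [max(v - threshold, 0.0) for v in row]
    # 2. Box blur: average each highlight value with its neighbours.
    blurred = []
    for i in range(n):
        window = highlights[max(0, i - radius):min(n, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    # 3. Additive blend: the glow spreads around bright areas only.
    return [v + strength * b for v, b in zip(row, blurred)]


row = [0.1] * 5 + [1.0] + [0.1] * 5  # one bright pixel in a dark row
print([round(v, 3) for v in glow(row)])
```

Because it runs in post, threshold, radius and strength all stay adjustable per shot, which is the flexibility a physical mist filter can't offer.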

-

11 minutes ago, kidzrevil said:

@kye looks really good !! What kind of grain are you using ? Filmconvert or an overlay ?

Thanks! That comment means a lot coming from you

At the risk of providing too much information, the total workflow was:

- ML RAW 1728 10-bit

- MLV App (Mac) ---> Cinema DNG Lossless

-

Resolve Clip Node 1

- WB

- Basic levels

-

Resolve Timeline Node 1

- OpenFX Colour Space Transform: Input Gamma Canon Log ---> Output Gamma Rec.709

-

Resolve Timeline Node 2

- Noise reduction (Chroma only - the Luma noise in RAW is quite pleasant)

-

Resolve Timeline Node 3

- Desaturate yellows (Hue vs Sat curve)

- Desaturate shadows + highlights (Lum vs Sat curve)

-

Resolve Timeline Node 4

- Slightly desaturate the higher saturated areas (descending Sat vs Sat curve)

-

Resolve Timeline Node 5

- OpenFX Colour Space Transform: Input Gamma Rec.709 ---> Output Gamma Arri LogC

- OpenFX Colour Space Transform: Input Colour Space Canon Cinema Gamut ---> Output Colour Space Rec.709

-

Resolve Timeline Node 6

- 3D LUT - Rec709 Kodak 2383 D65

-

Resolve Timeline Node 7

- Sharpen OFX plugin

-

Resolve Timeline Node 8

- Film Grain OFX plugin: Custom settings, but similar to 35mm grain with saturation at 0

-

Resolve Timeline Node 9

- Glow OFX plugin

-

Resolve Fairlight settings

- Master channel has compressor applied to even out whole mix

-

Render settings:

- 3840 x 1632

- H.264 restricted to 40000Kb/s
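For anyone planning storage or uploads, those render settings translate into simple numbers. This sketch assumes "Kb/s" means kilobits per second with 1 kb = 1000 bits, and counts the video stream only.

```python
def mb_per_minute(bitrate_kbps: float) -> float:
    """Video stream size in megabytes (10**6 bytes) per minute, assuming
    1 kb = 1000 bits and ignoring audio and container overhead."""
    bits_per_minute = bitrate_kbps * 1000 * 60
    return bits_per_minute / 8 / 1e6


width, height = 3840, 1632
print(f"aspect ratio {width / height:.2f}:1")        # about 2.35:1
print(f"{mb_per_minute(40_000):.0f} MB per minute")  # 300
```

So the 3840 x 1632 frame is a roughly 2.35:1 scope crop of UHD width, and at 40,000 kb/s each minute of video is about 300 MB.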

I credit the overall architecture of the grade to Juan Melara - I cannot recommend his YouTube channel enough.

To those starting out, in case that looks like a stupid amount of work, it's fast as hell once you save the structure in a Powergrade. Once I'd converted to CinemaDNGs the whole edit process only took a couple of hours including music selection.
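As a rough model of why a saved node structure is so quick to reuse, here is a Python sketch of a serial node chain. It is not Resolve's API. The node functions and values are hypothetical, and real nodes operate on whole images on the GPU. It shows that each node feeds the next in order, and that a saved list of nodes can be reapplied to any clip, which is the idea behind a Powergrade.

```python
from typing import Callable, List, Tuple

RGB = Tuple[float, float, float]
Node = Callable[[RGB], RGB]


def white_balance(gains: RGB) -> Node:
    """Per-channel gain (hypothetical stand-in for a WB node)."""
    return lambda rgb: tuple(c * g for c, g in zip(rgb, gains))


def saturation(amount: float) -> Node:
    """Blend each channel towards Rec.709 luma: 1.0 = unchanged, 0.0 = grey."""
    def node(rgb: RGB) -> RGB:
        r, g, b = rgb
        y = 0.2126 * r + 0.7152 * g + 0.0722 * b
        return tuple(y + (c - y) * amount for c in rgb)
    return node


def run(nodes: List[Node], rgb: RGB) -> RGB:
    """Apply nodes in order, like a serial node tree."""
    for node in nodes:
        rgb = node(rgb)
    return rgb


# A saved chain reused on every clip (hypothetical values).
my_powergrade: List[Node] = [white_balance((1.05, 1.0, 0.95)), saturation(0.85)]
print(run(my_powergrade, (0.4, 0.3, 0.2)))
```

Order matters because each node only sees the output of the one before it. That is also why swapping two nodes in a real grade can change the result.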

2018 - The year of the VDSLRs?

In: Cameras


I agree.

I do wonder if there's a hidden third niche of people who shoot home videos.

The reason I think this niche might exist is that, as a consultant, I regularly see maybe one in twenty office workers in a workplace who own a 5D Mark III and bought it because they're interested in taking pictures of their kids. They often buy a 'proper camera' when they first start a family. Having made friends with a lot of these people via photography, I also realised that most of them don't post family pictures online anywhere at all, so it would be very difficult for anyone who doesn't work alongside them to even know these people exist.

I wonder how many of them are shooting video now? We probably can't tell. Most vloggers aren't parents yet, but it seems like those that are don't show their kids online (eg. Casey Neistat) but I find it impossible to believe that a man that can edit video like him wouldn't have been making home videos as well.

In terms of their buying habits I wonder if they're a different market.. I'm in this market and I do notice that my buying habits aren't quite the same as others on here who are doing film work for the consumption of others rather than themselves.