Everything posted by kye

-

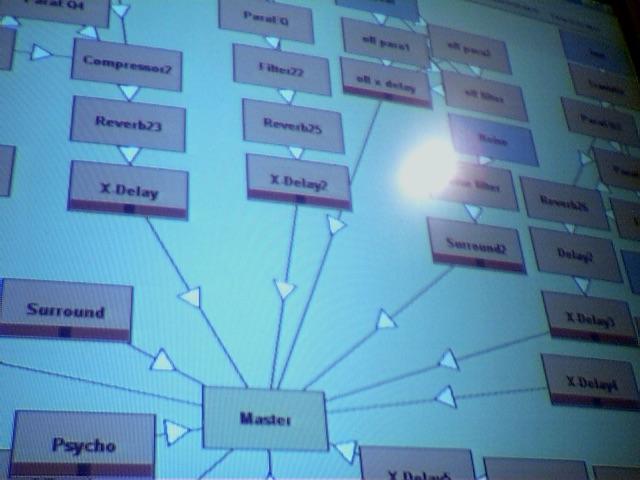

Interesting. I didn't realise that they were keeping Fusion as a standalone product, although that might not be the long-term direction. I know that in terms of integrating or modifying software significantly, certain bits are a lot easier to get working than other bits. Maybe the roadmap is to gradually add in the bits that Resolve hasn't enabled yet, or maybe not, who knows.

Yeah. I found it interesting the BM guy searched the forum to see how many times it was mentioned, so maybe they do try and respond to users. I kind of got the impression that they do, but also they have a strong strategy - integrating an NLE, Fairlight, and now Fusion into Resolve wouldn't have been them responding to forum users! It was also interesting the BM guy said that it wasn't necessary, and someone countered by pointing out that 8K support also wasn't necessary.. touché!

Agreed. I noticed a huge difference in crashing between 12.5 and 14, so I think someone really pushed for it to get more reliable. It went from crashing about every 20 minutes to maybe every 8 hours of use for me, so that's significant. One thing I can kind of sense is that a lot of the niggling bugs have been around for many versions, so I think they might be the harder-to-fix issues that come from mashing things together, rather than ones isolated in a single module. Things like the "I get no audio" bug, which seems to be fixed by anything from muting and unmuting in each panel to changing system output device or messing around with the database, and which has been with us since v12.5 and probably before that even.

I agree it's big and blocky. I alternate between using it on a 13" laptop and using the same laptop with a UHD display. It's better on the larger display, but it's definitely not as customisable as would be ideal. I think this is due to the heritage of it being around for a long time, just like most other long-lived software packages that look old and inflexible.
In a sense this is something that also runs through the film industry too, so much of the processes, techniques, terminology, etc is rooted in out-of-date technologies or previous limitations that are no longer present. This is really a symptom of what happens when you take a challenge (make a film), break it up into the steps (shoot, develop, edit, test-screen, picture lock, sound, colour timing, distribute) and then when the technology changes you update each part individually but not the way that it was broken up in the first place. The things that the film industry talks about as being revolutionary seem completely ridiculous when viewed from an outside perspective, like the director working with the colourist to create a LUT that was used on-set to preview the look as it's shot, or being able to abandon the idea of the picture-lock by being open to making changes to the edit if the audio mixing discovers any improvements that could be made. These are obvious no-brainer things if you haven't gotten used to those limitations over time.

In terms of Fairlight I think that's the case too. Having a mixing desk approach made sense when audio equipment was all analogue, but it's always seemed restrictive to me.

Here's a screenshot of the free modular software-only tracker that I was writing music with about 20 years ago. Every blue box was an audio generator and every orange one was an effect. Every arrow had a volume control and every module had an unlimited number of inputs. Every module could be opened up and adjusted with sliders for each parameter. Every slider could be automated. Anyone could develop new modules and share them as .DLL files. As computers got more powerful you could have more modules. We got to the point where it became difficult to work with because you couldn't adjust the size of the boxes on the screen and so you'd have stacks of boxes on top of each other.
I used to write tracks like the above where there was a separate effects chain for every instrument, except things like compressors where you want them to drive the whole mix. My friend used to connect every module to every other module just to see what they sounded like, and he'd get these amazing textures and tones. He was a big fan of Sonic Youth and apart from writing electronic music with me he also used to play guitar and integrate samples from that, and later on we got into glitch and heavy sampling. I think I would have gone insane if I'd been trying to write music limited to the architecture of a mixing desk, or couldn't just right-click and bring up a new module.. what do you mean every reverb instance costs $1000?????

-

Screw PT Barnum. I think you're drinking the Trump/MAGA Kool-Aid - that both the past is better than the present, and that somehow we can get there by copying random aspects of it regardless of causality.. or logic.

Makes sense. Any technique is valid as long as it supports the end goal.

-

Now you're just shit stirring! So much to comment on. So I'll shit-stir back...

"He lives and dies with a 70-200mm, 100-400mm lens."
Have you ever filmed anything inside a house? If you had, you'd realise that a 70mm+ lens is only good for videos where the voice-over starts with "Has acne haunted you all your life?"

"Philip Bloom seems to do ok using tele lenses"
No he doesn't. He loves getting shallow DOF with his camera tests, which are exclusively shot at long focal lengths because he's shooting people (or cats) without their permission. When he makes a real film he uses whatever focal lengths are appropriate, and for the B-roll goes to great pains to choose angles where there's foreground, like putting the camera on the ground or peeking out from behind foliage or posts or whatever.

"but all these fast lenses are I think unessential"
Using red in painting is unessential. Film-making is unessential. So are clothes, ice-cream, and sports of any kind. If we're going to live like that then let's all just live in caves - you go first.

"30, 40, 50 years ago when movies were the I think the best"
You're right, I got it all wrong! It was the lenses that made cinema of the 70s/80s/90s greater, not the availability of cheap VFX, the 2-second attention span of modern society, or the fact that the movie on the big screen is competing with Instagram, Twitter and instant messaging.

"Kool Aide all those fast Chinese lens makers are pushing" // "wonky looking lens"
Rent some expensive fast glass - you'll be surprised! Any lens gets sharp when stopped down two stops, which means an f0.95 lens gets sharp at f1.9, when typical lenses are still blurrier than when they just woke up.

Besides, don't people think the modern look is too sharp? I know you do - you think that camera lenses were what made classic cinema better than Michael Bay, in which case, lenses being a little soft when wide open is just what the doctor ordered.

Besides, are you aware of the changes in DOF with focal length?
If I have a 50mm lens at f4 and want to have the same DOF with a 24mm lens, to match a shot, you know you need faster than f1 for the same DOF at the same distance, right? What about if I need to add a bit of 3D to a super-wide landscape shot?
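For anyone who wants to check the 50mm/24mm numbers, here's a rough sketch in Python. It assumes the common approximation DOF ≈ 2·N·c·s²/f², which only holds when the subject is much closer than the hyperfocal distance. The function names and the 0.03mm circle of confusion are my own illustrative choices, not anything from a particular DOF calculator.

```python
def total_dof_m(focal_mm, f_number, distance_m, coc_mm=0.03):
    """Approximate total depth of field in metres.

    Uses DOF ~= 2 * N * c * s^2 / f^2, valid when the subject is much
    closer than the hyperfocal distance. coc_mm (circle of confusion)
    is an assumed full-frame value.
    """
    s_mm = distance_m * 1000.0
    return 2.0 * f_number * coc_mm * s_mm ** 2 / focal_mm ** 2 / 1000.0


def matching_f_number(focal_a_mm, f_number_a, focal_b_mm):
    """F-number on lens B that gives the same approximate DOF as lens A
    at the same subject distance (same sensor, same circle of confusion)."""
    return f_number_a * (focal_b_mm / focal_a_mm) ** 2


# 50mm at f4, matched on a 24mm lens at the same distance
n_24 = matching_f_number(50, 4.0, 24)
print(f"24mm needs roughly f/{n_24:.2f}")

# Sanity check at an assumed 2m subject distance: both should agree
print(total_dof_m(50, 4.0, 2.0), total_dof_m(24, n_24, 2.0))
```

Under that approximation the 24mm comes out at roughly f/0.92, i.e. faster than f1, because the matching f-number scales with the square of the focal length ratio.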

-

Odd, I clicked on the link from this thread and it worked. I also pasted it into a browser that wasn't logged in as me on there and it worked too. I'll paste it again - maybe this works? https://forum.blackmagicdesign.com/viewtopic.php?f=23&t=47367

No need to buy a Fusion license, it's built into Resolve now with v15, so you get that with your existing license.

The downside to Resolve is bugs. They're moving so fast to add features (or integrate entire packages!) that they have some lingering bugs and others that crop up randomly. I'd say the Fairlight audio issues are with the interface, and wouldn't render out from the Deliver page, but I could be wrong. I've been using it since v12.5 and have experienced a few annoying issues, but nothing in the exported files.

I watched the two Fairlight guides that BM recently released (there weren't any good free resources before that) (link, link) but TBH I didn't see anything in there that was surprising to me. I've used a few DAWs before, and am familiar with the traditional architecture of how a mixing desk works as well as how a normal mix and a mastering session would be constructed, so I guess that's why it seems pretty straight-forward. I kind of was surprised that there wasn't more to it, because when I first heard about it over a decade ago, having a multi-track recorder with infinite channels and built-in effects, dynamics, para EQ, etc would have been pretty mind-blowing.

It's also worth noting that the screen layout of Resolve is quite flexible and you can expand, contract, minimise and hide panels as you like, but the controls to do those things aren't immediately obvious so there is a belief out there that you can't customise it at all.

I guess it depends on how you work and what your preferences are. If you're just interested in the audio equivalent of the high-quality but generic and bland Canon 2.8 zooms then Fairlight is probably fine.
If you're the kind of person who has 28 different parametric EQ plugins because each has a different tone then obviously it's not a good match. In audio there is a spectrum that people work across, running from absolutely pristine audio reproduction at one end (where the goal is to get the audio from the room published with as little damage as possible) to creative mayhem at the other (where no-one cares what went into the microphones as long as the final product is great). Music writing typically extends further into the more drastic end of processing than straight film audio, so that's going to push the tools in different directions.

For me, I'm more interested in taking the sounds I've recorded, cleaning them up if required (thus buying these iZotope plugins), combining them in a way that supports the visuals, both in a literal sense for dialogue, but also in an emotional sense with ambience, volume automations, music, etc, and then getting them exported. Good audio should be effortless and not draw attention to itself - so that's what I tend to use the tools for. Having one set of generic tools (EQ, dynamics, and volume automations) works just fine in that sense as I'm not really creating, I'm editing. And of course, having it integrated makes a huge difference, as my brain can't deal with picture-lock before I start thinking about audio! There's nothing wrong with either - if you're working on a Hollywood blockbuster then you'll want to be doing sound design in a much more creative way, adding loads of foley and FX to really push the output in the right direction.

-

Cool. Any examples you can post?

-

What kind of projects do you do?

-

Yeah, if there's no point to it then that's when it's distracting and lowers the quality of the output rather than adding to it. Faster lenses are sharper, although we can apply the same logic - are you chasing sharpness because it adds to the dramatic context of the project, or just because "it's cool"? There's a long history of photographers pursuing sharpness because if you want to make a large print and have it seem lifelike then resolution matters. In moving images I don't think that this automatically translates. Using shallow DOF for no reason is just as bad as using deep DOF for no reason in my book. Of course, big budget productions have the luxury of making sure the background is relevant to the plot, so having a deeper DOF doesn't automatically add in unrelated elements to a storyline.

-

Do you aspire to the "more is more" style of Michael Bay, or the wealth of Michael Bay? Either one is fine - no judgements from me - just curious if the style is the goal, or a means to an end.

-

True, but only being able to get shallow DOF outside or in a warehouse isn't always practical. Buying lenses is kind of like buying a set of paints. If you buy a slow lens then you're missing some colours, and you might want to use them sometimes. Of course, if you buy a fast lens and get all the colours, you'd be stupid to only use the extra colours you don't get with a slower lens. Buy all the colours and then use the ones that make the best painting.

-

It does look like a good program. You're right that Resolve doesn't support non-48kHz outputs yet. This thread was interesting: https://forum.blackmagicdesign.com/viewtopic.php?f=23&t=47367 Of course, if you compare $399 for Fairlight with $60 for Reaper then it doesn't look so good, but there's more to Resolve than just the Fairlight page.

-

Thanks @Towd - that's useful. I didn't think about the ETC mode being unavailable. I guess you can just crop in post, although that would be a slight shift in how I work so I'd have to get used to it. In terms of being too sharp, I'm curing that with lenses - like many people do. Although if you're delivering in 1080 I'm not sure how much that really matters, I haven't done much testing to see, and for me it doesn't matter that much for my projects. I love the combination of small form-factor, image quality, and that it's a workhorse not a diva. Very few cameras can claim all three.

-

You could be right, but those shots don't seem to be good examples to prove your point. The first two shots are two-person shots and in both the closer person is similarly out-of-focus, it's just that the Indy shot has a closer background and the Iron shot's background is further away. The background isn't important in either shot, so how obscured it is doesn't matter. In the second two single shots, what matters is how related the character is meant to be to the background. The Indy shot shows the character in the setting more strongly, whereas the Iron shot shows the character disconnected from the setting, perhaps for dramatic reasons and perhaps not.

The thing about DOF discussions is that saying "DOF is great and looks lovely" or "DOF is a cheat and is overused and was never used in cinema" are both completely missing the main point of DOF, and that is that it is an artistic device used by the film-maker to control the scene. If you want to isolate a subject then you blur the background, which is true from an optical perspective but also from a dramatic perspective. They famously used very shallow DOF in The Handmaid's Tale to give it a claustrophobic sense, in accordance with the dramatic context, and the fact you couldn't see that far or get a wider perspective was also in line with the dramatic context of the world of the main character. DOF should be used to communicate and reinforce the dramatic context of the story.

I make home videos as a hobbyist and it seems like I'm the only one here talking like fast lenses are a tool rather than a toy. It's like people think that buying a fast lens means you have to use it wide open the whole time.....

-

What compromises for video do you think they're making to get good photo quality? What does the G9 do better for photographs? What about timelapses?

-

Here are some of my impressions...

Simple compositions, often with a single subject centred in the frame

Lots of closeups, often with shallow DoF, both for product shots but also headshots

Camera movement combined with speed-changes, often synced to the music

Another thing I noticed is that your style is kind of 'loose'. What I mean is that a less relaxed / more up-tight style would only do movement with fixed sliders and tripods, wouldn't be comfortable getting as close to people, wouldn't use different speeds on the same shot, wouldn't use the fancier transitions as editing punctuation, wouldn't use the odd fisheye shot. It's like you've taken a more boring style and turned it up a couple of notches, but haven't abandoned the fundamentals. Nice work!

-

No no no @kaylee.. your style as a film-maker, not as a forum-user ???

-

This sure is a pretty complicated topic. I get that if you shoot in ProRes it is applying a log curve of some kind which shifts the middle point more than the highlights and shadows. A variant of BMD Film perhaps. But I'm still confused about RAW - is the RAW signal still in linear? Sensors see in linear, so if they're somehow changing it then surely that's no longer RAW?

-

Yeah, I've been on maybe a dozen no-budget film sets, probably all 6-12 person crews, and it's nothing like making films by yourself. Being a Youtuber might half-qualify you for being a runner, but that's barely a qualified position anyway, so....

-

Yeah, and let's talk about something other than equipment

-

If you showed your entire catalogue to a bunch of strangers, what defining characteristics would they identify in your work? Do you like your style? What makes you do things like that? How did you learn? And for bonus points, what one characteristic would you want to add to your style if you could just snap your fingers and have it? For me, I don't think I have a good answer as my style is still changing and developing, but I think I have pretty good composition, both in terms of framing but also moving the camera around to get the best angles. I make home videos and they are mostly set to music without much dialogue, kind of like moving photographs, and I think I do a good job of editing with music and incorporating that rhythmical element. I like my style broadly, but I'm still working on defining and refining it and each project gets better quite substantially I think. I learned composition from doing stills photography for years, starting as a way to document my vacations and then incorporating street photography as a way to "practice" while at home. In terms of editing to music I have written electronic music for many years, and so in a way I am familiar with how to break down a song in a rhythmic sense and kind of program the visuals like a beat, with some shots lasting a single beat, some two beats, some four, and also knowing when to go off the beat, how to shake things up from looking mechanical, in the way that you might perform a solo over the top of some rhythm elements and not always stick rigidly to tempo or timing. If I could learn a style I think it would be making dialogue-driven films, as in a way I'm kind of afraid of using dialogue because I'm not as comfortable with it, especially as my videos are kind of highlight reels and dialogue snippets might not make sense when edited back-to-back with each other, and so not being as familiar with that is kind of limiting. Even things like narrating would be good to learn how to do. Your turn!

-

Thanks. I also got the impression that the 5K modes are h265 and the 4K modes are h264. This is a big deal for me: from what I read, h265 gets similar image quality at about half the bitrate of h264, so going from 150Mbps h264 to 200Mbps h265 is more like the quality of 400Mbps h264. In terms of the 422 vs 420 it's definitely a down-side, but it's offset by the extra bitrate, codec efficiency, and slightly extra resolution, so it should result in a net gain overall. I'll have to do some trials with it. The 30p limit doesn't faze me, I'm shooting mostly in 25p now unless something is obviously slow-motion material. Apart from the benefit of HLG, it also helps in low-light. Once you've cropped and downscaled the image, is the overall "look" the same as the 4K modes?
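To spell out the bitrate arithmetic, here's a tiny Python sketch. The 2x efficiency figure is just the "about half the bitrate" rule of thumb from what I read, not a measured value, and the function name is made up for illustration.

```python
def h264_equivalent_mbps(h265_mbps, efficiency_ratio=2.0):
    """Rough h264 bitrate with similar quality to a given h265 bitrate.

    efficiency_ratio is an assumed rule of thumb (h265 needing about half
    the bitrate of h264); real results vary with content and encoder.
    """
    return h265_mbps * efficiency_ratio


current_h264 = 150.0                        # 4K 10-bit mode, Mbps
equivalent = h264_equivalent_mbps(200.0)    # 5K mode at 200Mbps h265

print(f"200Mbps h265 is roughly like {equivalent:.0f}Mbps h264")
print(f"That is about {equivalent / current_h264:.2f}x the current 150Mbps mode")
```

It ignores the 422 vs 420 difference and the extra pixels in the 5K frame, so treat it as a ballpark only.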

-

I've been reading about the 5K Open Gate mode and wondering if I should be using that instead of normal UHD? I'm currently shooting the 4K 10-bit 150Mbps mode in HLG (I only have a SanDisk 95MB/s UHS-I card so can't shoot 400Mbps). I understand that the 200Mbps 5K mode will give an increase in resolution and bitrate, and will allow me to re-frame in post, which are all desirable features, but are there any hidden downsides to using this mode? What hassles or catches are there? I read that the 5K mode is h265, but is the 4K mode h264, or h265?

-

Isn't that what John does in the original video?

-

I don't know about messing things up, but I recall someone on other forums saying that sometimes YT people get hired on a film set and IIRC they said that they are pretty useless overall because YT is just so different to a large set and how it works.