Posts posted by ND64

-

-

5 hours ago, Eric Calabros said:

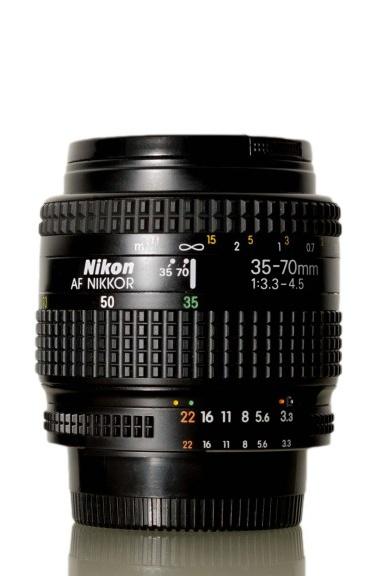

This cheap lens was discontinued two decades ago, a lens that even Ken put in his list of the 10 worst Nikon lenses! I put it on a D7500, an almost-6K DX sensor, equivalent to an 8K FF sensor, and resolution was pretty good. Sure, you'll find many optical shortcomings in these things, but that's not all because they are old! They were designed for film, which has a slight curve, not for perfectly flat digital sensors, so corners are softer than they looked in 1980. AND they were designed to be compact, suitable for FM2-style cameras. When you prioritize size, you have to make compromises in optics.

Here is a frame from a 4K file from the D7500 (which has a heavy crop).

And a 100% crop. Remember the mini star?
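For anyone who wants to check the "almost 6K DX, equivalent to 8K FF" arithmetic, here is a rough sketch. It assumes the D7500's full-resolution still width of 5568 pixels and a nominal DX crop factor of 1.5 (my assumption; the exact figure is slightly higher), and it simply asks how wide a full-frame sensor with the same pixel pitch would be. The function name is made up for illustration.

```python
def ff_equivalent_width(dx_width_px: int, crop_factor: float = 1.5) -> int:
    """Width in pixels of a full-frame sensor with the same pixel pitch.

    crop_factor=1.5 is a nominal value assumed here for Nikon DX.
    """
    return round(dx_width_px * crop_factor)


d7500_width = 5568  # D7500 full-resolution stills are 5568 x 3712
print(ff_equivalent_width(d7500_width))
```

The result lands a bit above 8000 pixels across, which is where the "8K FF" shorthand comes from.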


-

-

-

If the adapter works flawlessly, they don't have to rush lens making. They are ready to release a ridiculously compact 500mm f/5.6 for the F mount, and I bet they have already made its AF motor mirrorless-compatible. The same is true of Canon (they even want to release an EF-mount mirrorless camera, which won't have the lack-of-lenses issue at all).

Besides, unlike Sony, Nikon, Canon and Sigma have full control over the whole glass-making process. Though their capacity is not very stretchable, they have been doing this business from A to Z for decades. So if they really want to deliver a dozen new lenses in a relatively short time, they can. Don't expect Canikon to accept help from third parties as Sony did. That would be embarrassing for two big guys that call themselves optics companies!

-

I was thinking about the complexity of our visual system, which recognizes such small differences without any chart.


-

20 minutes ago, IronFilm said:

Yes, I expect we'll see 20+ Z mount lenses from Third Parties within a year. Easily, for sure!

9 of them will be Sigma Art, IF they can reverse-engineer the Z mount.

-

-

A square sensor costs a lot more... we can't expect that from a trillion-dollar company... you know?

-

On 8/1/2018 at 5:03 PM, MdB said:

Nope. I know exactly what they 'design'. Go ask Phase One or Hasselblad who aren't too 'proud' to tell the truth

They're so "humble" that they intimidate reviewers:

https://petapixel.com/2018/08/01/my-drama-with-hasselblad-bullying-and-their-latest-comparisons/

-

7 hours ago, MdB said:

No, that's an assumed benefit. It's actually considerably less than that. With a greater loss in high ISO

It's only "assumed" for trolls. For photographers, it's real. And there is no photographic "loss" at high ISO. Actually, D850 noise has slightly higher quality.

7 hours ago, MdB said:

According to whom?

According to the lack of knowledge about Nikon's advantages, even among its loyal customers. According to their near-invisibility on social media.

7 hours ago, MdB said:

there is nothing in the article that points specifically to Nikon actually having much say about anything in the 'design' process.

Oh, you needed a copy of the circuit design to evaluate! Well, they can't give it to you.

14 hours ago, Robert Collins said:

14 hours ago, Eric Calabros said:

Or possibly not....

https://petapixel.com/2017/03/22/sony-keeps-best-sensors-cameras/

Oh, certainly yes. Sony Semiconductor is way larger than Sony Imaging, and they don't say no to their high-volume-ordering partner in favor of their imaging division. Business facts don't care about your feelings.


-

17 hours ago, MdB said:

And nor can you in the extended DR. Plus the A7R III has greater DR at the same ISO. Who really cares?

With ISO 64 you can expose for an extra 2/3 of a stop without clipping highlights. That's a "real" benefit.
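A quick sketch of where the 2/3 of a stop comes from, assuming the comparison is against a base ISO of 100 (my assumption, the post above doesn't name the baseline) and the usual simplification that the exposure needed to clip highlights scales inversely with ISO. The function name is only for illustration.

```python
import math


def stops_between(iso_low: float, iso_high: float) -> float:
    """Exposure difference in stops between two ISO settings."""
    return math.log2(iso_high / iso_low)


# Assumed baseline: ISO 100 versus the D850's ISO 64
extra = stops_between(64, 100)
print(f"{extra:.2f} stops")
```

The ratio 100/64 works out to a little under two thirds of a stop, so "2/3" is a fair rounding.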

16 hours ago, Nikkor said:

Do you guys hold stock options at Sony?

If they had stock, they would know Sony Semiconductor is completely independent from Sony Imaging and has a strategic relationship with Nikon.

6 hours ago, MdB said:

Why does every Nikon fanboy think their company is so much more special than Phase One or Hasselblad? Because the marketing dept wants them to feel that way.

Funny thing is, Nikon's marketing is the worst in the entire camera/optics industry. They let Dave visit their never-before-visited R&D rooms, but what Dave wrote is just his reaction to what he experienced. Simple as that.

-

11 minutes ago, MdB said:

This comes at the expense of high ISO noise. For some people one is an advantage and for others the other. That doesn't make a single clear cut 'better' sensor.

Read noise at ISO 6400: A7R3: 1.05 e-, D850: 1.2 e-

You can't see this difference in images.
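A rough way to see why, as a sketch only: treat total noise as photon shot noise plus read noise added in quadrature, and compare the signal-to-noise ratio for the two read noise figures above. The signal levels (100 and 4 electrons per pixel) are hypothetical values I picked to stand in for midtones and deep shadows; the function names are made up.

```python
import math


def total_noise(signal_e: float, read_noise_e: float) -> float:
    """Shot noise (sqrt of signal) and read noise combined in quadrature."""
    return math.sqrt(signal_e + read_noise_e ** 2)


def snr_gap_db(signal_e: float, rn_a: float, rn_b: float) -> float:
    """SNR advantage in dB of read noise rn_a over rn_b at a given signal."""
    snr_a = signal_e / total_noise(signal_e, rn_a)
    snr_b = signal_e / total_noise(signal_e, rn_b)
    return 20 * math.log10(snr_a / snr_b)


# Hypothetical signal levels in electrons per pixel
for signal in (100, 4):
    print(signal, round(snr_gap_db(signal, 1.05, 1.2), 3), "dB")
```

Under these assumptions the gap stays well below 1 dB even in the deep-shadow case, because shot noise dominates.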

-

A D850 pixel stores 10,000 more electrons than an A7R3 pixel. So ISO 64 is not just a gimmick number that anybody can add to the spec list. It's an indication of a state-of-the-art readout circuit that is capable of handling such a massive amount of electrons. Also, Sony's advantage is not their fab devices and tools (they're far behind Samsung, TSMC and GloFo in node size anyway); their advantage is their huge asset of IP. AND some of that IP was bought from Nikon, like PDAF and some Exmor goodies.

-

Well, TowerJazz has several fabs.. if they can make sensors for Panasonic, they certainly can supply Nikon, which orders in much higher volume.

-

On 7/4/2018 at 3:20 PM, Andrew Reid said:

I am excited about this one, could be very popular with us if they get video right.

However I am not sure why the rumour states 25MP and 45MP, when current tech from their supplier Sony is 24MP and 46MP. Seems like an odd move to use different sensors with 1MP difference.

NR sources usually report numbers a bit higher or lower than they actually are, mostly because they have early access to the technical spec list rather than marketing materials. The total number of pixels is not the same as the "effective" pixel count.

However, I think at least one of these two sensors is going to be made by TowerJazz.

-

5 hours ago, Mokara said:

They cancelled the DL series because they were having manufacturing difficulties and the delays meant that it would have been effectively obsolete relative to the competition by the time it arrived.

They said they cancelled it because it would not be as profitable as they expected at that price range.

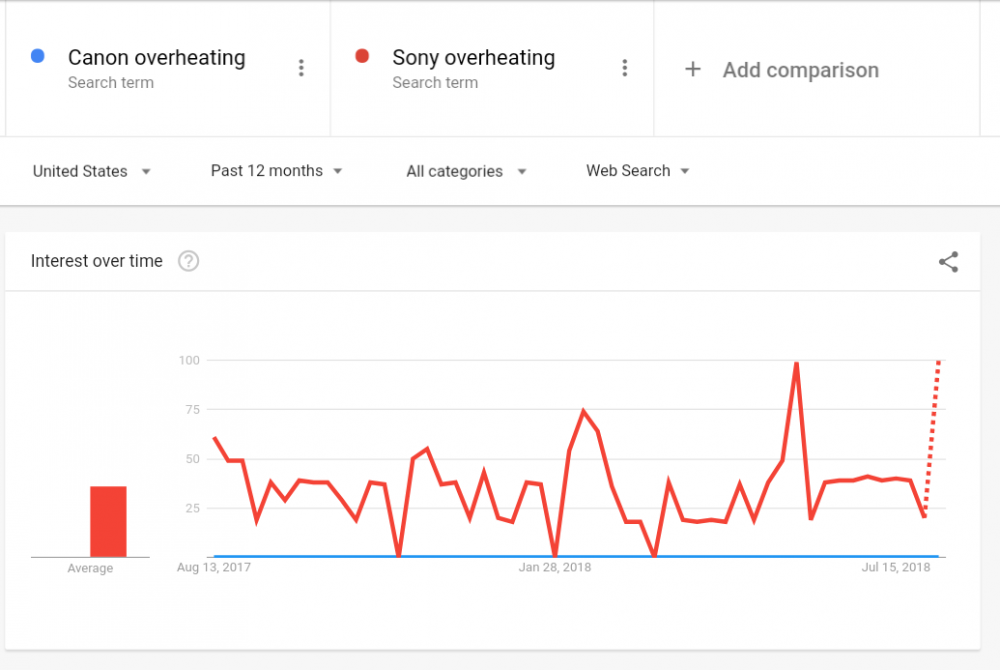

5 hours ago, Mokara said:

Overheating in cameras is due to the processor, not the sensor

Even with your logic, stacked sensors are the bottleneck, since there is a processor AND a DRAM right behind them!

5 hours ago, Mokara said:

They probably did an analysis of the exif data on publicly posted images and concluded that almost no one uses the wide apertures anyway, while longer focal lengths predominate

Give customers an 18-50mm lens and see what happens in the EXIF data of publicly posted images.

-

Now I understand why Nikon cancelled the DL. If you can't make a modern premium compact and yet keep it under $1000, you'd better forget it.

-

Thanks a lot bro

-

DJI Ronin S

In: Cameras

This is the result of having direct communication with your customers (the Japanese makers should take note). Yes, it's bulky, but in this case bulk is not necessarily a negative thing for me.

With these developments in the stabilizer industry, I bet camera makers will ditch IBIS eventually. It's a costly, complicated mechanical thing that also exacerbates the heat issues.

-

OR they can deliver BOTH solutions, a Z mount Df-style mirrorless AND a D750 successor without a mirror, and see which works better in the long term.

But let's face the facts. The problem legacy lens owners have is not really about mount compatibility. The problem is the AF motor in their lenses. All mirrorless systems use contrast detection (CD) as the main AF or as a complement to phase detection (PD), and you know DSLR lenses suck at CD. They are designed to move heavy glass in a few big steps, not to make many small back-and-forth movements.

-

-

The top dogs in DR have only 12 stops if you count the "usable" ones. So for today's sensor tech, anything beyond 14-bit is just wasting storage space. If they could manage to reach 15 stops or so, then we could talk about 16-bit, but that sensor would need the most noise-free pixels ever invented to achieve that.

http://www.photonstophotos.net/Charts/PDR.htm#Hasselblad X1D-50c,Nikon D850
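The bit-depth argument can be put as a small sketch, assuming a linear raw encoding where each stop halves the signal, so an N-bit file can span at most about N stops. The helper name is made up, and the 12 and 15 stop figures are the ones from the post, not measurements of my own.

```python
def spare_bits(bit_depth: int, usable_stops: float) -> float:
    """Bits left over after covering the usable dynamic range.

    Assumes linear encoding: N bits span at most about N stops.
    A negative result means the file cannot hold the sensor's range.
    """
    return bit_depth - usable_stops


for bits in (14, 16):
    for stops in (12, 15):
        print(f"{bits}-bit, {stops} stops: {spare_bits(bits, stops):+} bits")
```

With 12 usable stops a 14-bit file already has headroom, and only the hypothetical 15-stop sensor would overflow 14 bits and need 16.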

-

On 4/7/2018 at 5:17 PM, Geoff CB said:

Medium format color in a small camera.

What's special about medium format color, and why do you think it's not achievable with 14-bit raw?

Samsung. Out of new ideas to copy

In: Cameras


I don't use the fake bokeh effect, and most of the time I use my phone's camera just for documentation, not for taking memorable photos. I also don't play games, and when I do, I play 2D ones.

What does the Note 9 do for me that my current smartphone can't? Nothing.