-

Posts

7,566 -



Everything posted by kye

-

Another tip is to tell people to hold the camera really still for 10s. I once gave a camera to some friends during an event to film and when I looked at the footage afterwards I realised that everyone waved it around like they were watering the lawn - I ended up pulling stills from it as the camera only settled onto anything for 3-4 frames. I was mad until I remembered that I did that the first few times I filmed anything. It seems to be a common thing when people hold a camera for the first time.

If you do get vertical video then you can use several shots side-by-side in a Sliding Doors kind of way, and I've deliberately shot vertically on my phone on a travel day for the express purpose of using this layout. I used it to show the same location over time, so I showed the shots simultaneously instead of via jump-cuts, and I also did a bunch of shots out of the window of the moving train to show the diversity of the landscape. This works for fancy b-roll, but if you get a speaking part then maybe put that one in the centre, use the audio from that one, and have the ones on the sides as b-roll.

Definitely get people good mics. I've used the Rode ones that plug into the microphone port on a smartphone and they're pretty good, but IIRC the cheap knock-offs are almost as good and much cheaper.

You can use the opportunity to get creative shots too. The trick to take away from this video (which is a bit of a one-trick video) is that the camera was mounted to something stable and had the subject in a consistent location in the frame without much movement.

I think you're in danger of each person taking footage that is all the same - one person might only take landscape shots from far away and another might only take close-ups. Some advice about getting lots of different shots (far away, close up, from above, from down low, etc) and making them interesting would help. I suspect that people will want to shoot good footage but won't know how. Think of how many beginners say things like "I would never have thought of doing that".

It's also dangerous to give people too many instructions, so I'd suggest only giving them the top ones. Maybe something like:

- hold your phone horizontally
- point the microphone at the person talking
- hold it steady and count to 5 before hitting stop
- get lots of different shots
- make them interesting

Good luck! If you're given enough footage you can make something useful; shooting ratios are a thing and b-roll doesn't have to be super related.

-

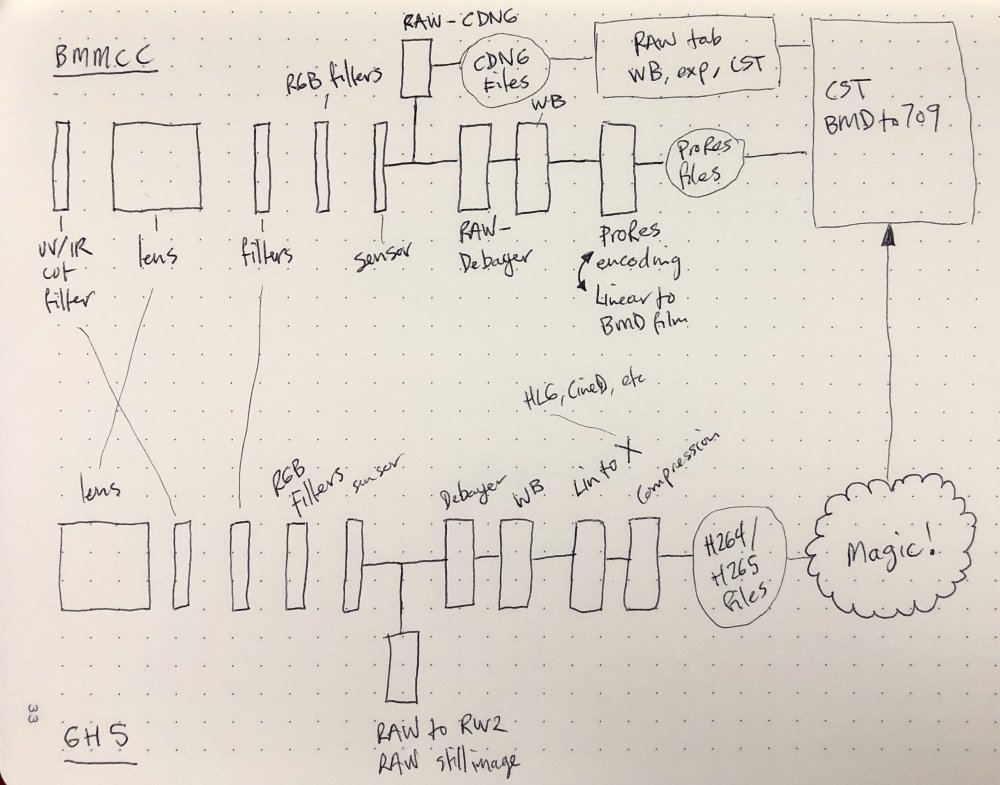

Ok, I think I've got my head around a few things. The unknowns are:

- UV filter
- Lenses
- RGB filters on sensor
- WB image processing on GH5
- Colour profile (3D LUT) on GH5
- Compression

UV filter and lens I have eliminated by testing the UV filter and 14mm lens vs the 14-42mm lens without UV filter. These are within ~1% of each other, and as I am not going to get within 1% in my matching, I can eliminate these as variables between the cameras.

RGB filters on the sensor I can work out the transformation for by matching a RAW still image from the GH5 to an uncompressed RAW DNG from the Micro.

Compression is separate to colour so I can treat that separately via resolution tests.

WB image processing on the GH5 and the colour profile will take some doing, but here's the plan. The camera applies the RGB filters, then on top of that applies the WB, then on top of that applies the colour profile. So, what I have to do is to correct the colour profile, then the WB, then convert the RGB filters from the GH5 to the Micro, and then I will have a GH5 image in Linear that matches the Micro image in Linear.

So, firstly I work out the RGB filter adjustments (via a 3x3 in Linear). Then I construct the following:

<colour profile compensation> ----> <WB compensation> ----> <RGB compensation>

At first I will know the third, but not the first and second. The trick is separating them, so I can shoot the same image in two different WB settings. If I make it so that one of the shots has the right WB and one has the wrong WB, then one has a WB adjustment built in and one does not, and I can work out how to make the colour profile compensation so that it works for both WB settings. This should separate the WB adjustment from the colour profile compensation, and should give an adjustment that will work regardless of the WB on the clip.

Hooray for year 10 algebra!
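
The ordering of the plan above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: WB is modelled as a per-channel gain and the sensor filter difference as a 3x3 mix, both in Linear, and the colour profile is stood in for by a plain power curve (the real profile is unknown and is probably a 3D LUT). Every name and number here is hypothetical and not measured from either camera.

```python
# Hypothetical stand-ins; none of these numbers come from the GH5 or the Micro.
GAMMA = 2.2                     # placeholder for the unknown colour profile
WB_GAINS = (1.8, 1.0, 1.5)      # per-channel WB gains assumed to be applied in camera
GH5_TO_MICRO = (                # assumed 3x3 mix between the two sensors' RGB filters
    (0.90, 0.08, 0.02),
    (0.05, 0.90, 0.05),
    (0.02, 0.10, 0.88),
)


def camera_encode(sensor_rgb):
    """Assumed forward model: sensor RGB -> WB gains -> colour profile."""
    balanced = [c * g for c, g in zip(sensor_rgb, WB_GAINS)]
    return [c ** (1.0 / GAMMA) for c in balanced]


def undo_profile(rgb):
    """Invert the placeholder profile curve (back to Linear)."""
    return [c ** GAMMA for c in rgb]


def undo_wb(rgb):
    """Divide out the per-channel WB gains."""
    return [c / g for c, g in zip(rgb, WB_GAINS)]


def mix3x3(rgb, matrix):
    """Apply a 3x3 RGB mixer to one linear RGB triplet."""
    return [sum(matrix[r][c] * rgb[c] for c in range(3)) for r in range(3)]


def gh5_to_micro_linear(encoded_rgb):
    """Compensate in the reverse order of how the camera applied things."""
    return mix3x3(undo_wb(undo_profile(encoded_rgb)), GH5_TO_MICRO)


sensor = [0.20, 0.35, 0.10]
recovered = undo_wb(undo_profile(camera_encode(sensor)))
print(recovered)                                   # close to the original sensor values
print(gh5_to_micro_linear(camera_encode(sensor)))
```

The point of the sketch is just the order of operations: the last thing the camera applied is the first thing to be undone.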

-

I'm now more confused. I think this is the system we're dealing with:

The challenge is that on the Micro I am shooting RAW and so the only colour variable is the RGB filters on the sensor, but with the GH5 the variables are (something like) the RGB filters on the sensor, the WB setting, and then the colour profile, which is likely to be a 3D LUT with all sorts of unknowns in there.

A realisation that's useful is that I can take a RAW still image on the GH5 and that should allow me to do a straight 3x3 adjustment in Linear to match them, without having to worry about the WB and 3D LUT that's on the video files. I should also be able to lock the GH5 down on a tripod and take a series of RAW images and video files, and then I can try to use the RAW image as a reference to isolate the WB+3D LUT from the RGB filters on the sensor, although this doesn't seem to help that much as the 3D LUT is where all the nasties are.

I suspect that:

- the RGB filter adjustment is a 3x3 RGB mixer adjustment in Linear
- the WB adjustment is an adjustment of the levels of RGB in Linear
- the 3D LUT is all sorts of wonkiness

I've also worked out that I can use my monitor as a high DR and high saturation test-chart generator. It won't be calibrated, but it shouldn't matter because the entire idea of this conversion is that any light that goes into either camera should result in (broadly) the same values after my conversion, so absolute calibration shouldn't matter.

I've also realised that this is a mixture of black-box reverse-engineering where there are a few black-boxes and we can take guesses about how each is likely to work. Of course, Resolve isn't a black-box reverse-engineering tool, and it's very difficult to get pixel-perfect comparison images between the two cameras, so that's kind of what makes it a challenge.

I'm wondering if I should be converting things in reverse order, peeling the onion as it were, so doing the opposite of the 3D LUT, then the WB, then the RGB filter.

I've also realised that I should switch to the Cine-D profile instead of HLG, because although it gives a slight reduction in DR, it will enable the conversion to work on >30p footage too.
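
If the RGB filter difference really is a 3x3 mixer in Linear, as suspected above, then it can be estimated from matched colour patches with ordinary least squares. The sketch below is a minimal pure-Python version, assuming each patch is the mean linear RGB of the same chart region as seen by each camera. The patch values and the "true" matrix are made up for the demonstration, and the function names are hypothetical.

```python
def solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_3x3(src_patches, dst_patches):
    """Least-squares 3x3 matrix M such that M @ src is close to dst per patch."""
    # Normal equations: (S^T S) row_k = S^T d_k, one solve per output channel.
    sts = [[sum(p[i] * p[j] for p in src_patches) for j in range(3)]
           for i in range(3)]
    rows = []
    for k in range(3):
        std = [sum(p[i] * q[k] for p, q in zip(src_patches, dst_patches))
               for i in range(3)]
        rows.append(solve3(sts, std))
    return rows


# Made-up demonstration data: a known mixer applied to a handful of patches.
true_mix = [[0.90, 0.08, 0.02], [0.05, 0.90, 0.05], [0.02, 0.10, 0.88]]
gh5 = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (0.5, 0.5, 0.5), (0.2, 0.7, 0.1)]
micro = [[sum(true_mix[r][c] * p[c] for c in range(3)) for r in range(3)]
         for p in gh5]

fitted = fit_3x3(gh5, micro)
for row in fitted:
    print([round(v, 4) for v in row])
```

With real footage the patches will be noisy, so more patches spread across hue and brightness should give a more stable fit than the five used here.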

-

I am a hobbyist and I was a big champion for AF, now I'm a big champion for MF. What changed for me was:

- I thought MF would be way harder to get right than it actually is (although I don't shoot in the most difficult situations)
- I also thought that having something perfectly in focus at all times was required
- I was upgrading and it came down to the A7iii vs GH5 and the only significant downside of the GH5 was poor AF, so I did a few experiments with MF and discovered that I actually like the look of MF as it makes things more human and suits the style I prefer

I think that there are situations when AF is great (doing those gimbal shots where you run around the bride/groom at a wedding for example) but I suspect that a lot of people new to this just haven't put in the work to discover how good they are at MF and what the aesthetic really looks like. Plus, Hollywood gets shots OOF all the time.

-

@mercer very nice image - deeper colours than your normal grades but it sure works!

-

It's not personal. For me it's about trying to reduce the myths that mislead people, like "MFT lenses are soft wide open" and "FF lenses are all sharp wide open". There are MFT lenses with way more than 2K resolution wide open, and the P4K shoots RAW 4K so there's no limitation on the codecs either.

-

This conversation started with "MFT isn't as good as FF because MFT doesn't have wide lenses that are sharp enough wide open" and now we're here talking about how softness is something that is actively sought after by the folks that make the best images around. Apart from cropping or doing green screening, it sounds like your point is that MFT can't be used to make deliberately softened 2K output files because it doesn't have >30MP lenses, which just doesn't make sense.
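
For a sense of scale, here is the pixel-count arithmetic behind the 2K vs >30MP comparison, as a small Python sketch. The frame sizes are the standard ones; treating a lens "MP" figure as directly comparable to a frame's pixel count is a simplification made only for this comparison.

```python
# Standard frame sizes in pixels (width, height).
FORMATS = {
    "1080p (often called 2K)": (1920, 1080),
    "DCI 2K": (2048, 1080),
    "UHD 4K": (3840, 2160),
    "UHD 8K": (7680, 4320),
}


def megapixels(width, height):
    """Pixel count of a frame, in millions of pixels."""
    return width * height / 1_000_000


for name, (w, h) in FORMATS.items():
    print(f"{name}: {megapixels(w, h):.1f} MP")
```

A 2K deliverable is roughly 2 MP per frame, so a 30MP figure is more than ten times what the output file can hold.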

-

Get them to film something too. A 5 minute film made from footage shot on the trip would be a great project!

-

I appreciate that this is part of a much larger subject, but I think you're still not getting this. There is a perspective that many people take, which assumes that higher resolution is better, and that back in the day people chose the highest resolution lenses that they could and it was just the tech that limited that. This is where you (and others) are fundamentally disconnected from the high-end of cinema - the point of me showing you the article on netting is that people are actively going out of their way to reduce the sharpness of fine detail - which is a more technically accurate way of saying that people are reducing resolution. Deliberately. They're actually taking what is on offer currently and paying to soften it. They even used to do that in the days of film too, back when the technology had lower resolution. Even those older lenses were too sharp! This is a fundamental lack of understanding - when people say "I want my video to be cinematic" and then they say "I can't do X because it's not sharp enough" they are arguing against themselves. Part of the cinematic look is the lower resolution. If you're still unconvinced this is a real thing, then here's an article talking about Knives Out and Star Wars: The Last Jedi and how some/all of these were shot on digital but were processed (including halation, which is a particular type of softening of fine detail) on purpose. https://www.polygon.com/2020/2/6/21125680/film-vs-digital-debate-movies-cinematography You cannot possibly think that they didn't have enough budget to do whatever they wanted, and could have shot it in RAW 8K with $100k lenses, so I hope this demonstrates that this is a serious thing that Hollywood actually does. The people who are talking about resolution and sharpness are in a different world than this, looking in lustfully at the images and then insisting on doing things that will take them further away from the look they want rather than closer to it. 
But they don't know, because they're only talking to the stills photographers online.

-

Not ideology.. we're all chasing an image that's as high-end or highest quality as we can. The issue that we have here on this forum is that we are very out-of-touch with what techniques are used to get the images that we admire, from large budget productions, or from classic films anyway. There is a lot of stuff that isn't spoken about online, and it kind of exists outside of the internet, or at least is hidden behind paywalls. It's easy to spend a long time consuming content and interacting with people online without ever really knowing that this stuff exists, especially now with social media 3.0 actively creating echo-chambers.

In order to move out of the realm of ideology completely, let's look at some data. I'm going to assume that you know how to read an MTF chart, since you seem to value resolution so highly. If you don't then I'd highly recommend you look into them, they're invaluable.

One thing you may not be aware of however, is that lenses don't have a "resolution". To say one lens is 14MP and another is 16MP is an oversimplification to the point that it's misleading. A lens blurs detail, which essentially has a softening effect, lowering the contrast of that detail. Yes, there does come a point where if the detail is fine enough then the lens has lowered the contrast of that detail to zero, but the transition from it being very sharp (ie, high contrast) to very soft (ie, low contrast) is actually a progressive one. MTF charts show this softening effect by measuring contrast at varying line pairs per mm (lp/mm), and obviously the higher the lp/mm you use to measure, the less contrast a given lens will have. This is an important distinction, as we will see later.

Amongst the most popular workhorse cinema lenses are the Zeiss CP.2 primes.
Here is how they measure: https://www.lensrentals.com/blog/2019/06/just-the-cinema-mtf-charts-zeiss-cine-lenses/

Here is one of the MTF charts:

This indicates that with fine detail they have very low levels of contrast, ie, low resolution.

Here's a chart from the Xeen testing article: https://www.lensrentals.com/blog/2019/05/just-the-cinema-lens-mtf-charts-xeen-and-schneider/

This is even lower resolution again.

Let's compare a 50mm Zeiss CP.2 to a high resolution lens:

The Zeiss is obviously inferior in resolution terms to the other lens by quite some margin. Also, that other lens happens to be an MFT lens.

So why are cinematographers happy with such poor resolution? Well, there are two factors that I can see. One is that digital video has been low resolution historically, and the other is that cinematographers actually like lower contrast lenses and will go to reasonable lengths to lower it. Remember what I said above: a "lower resolution" lens is actually a lens that has relatively lower contrast on finer details than another lens.

Have a read of this article: https://www.provideocoalition.com/the-secret-life-of-behind-the-lens-nets/

This article talks about how cinematographers are deliberately putting netting / fabric between the lens and the camera, in order to lower the contrast of fine detail. ie, to lower resolution. Some examples from that article...

A close-up with no net:

Black sparkle mesh:

Silver sparkle mesh:

Cropping is indeed a thing now, especially on lower budget productions where multiple cameras or multiple takes with different focal lengths are beyond the budget, and in this case, people may now move to controlling image softening in post. Film halation effects also do this. To go one step further, softer light sources do this, especially for skin texture, which is where the desire for lower contrast is typically expressed.
In photography there is a very strange set of behaviours:

- Insatiable desire to get the highest resolution lenses and the highest resolution camera and the highest quality image format (RAW) in order to capture the finest details, then....
- Get the model to put on makeup (which lowers the contrast on larger skin features like lines, wrinkles, etc - it's called "concealer" for a reason)
- Cart around ridiculously huge soft boxes in order to smooth the skin (lowering the level of contrast across all detail sizes)
- Now we go into Photoshop and we:
  - dodge/burn with a brush (lowering the contrast of medium sized details)
  - paint over skin areas with filters that lift shadows, or similar (lowering the contrast of all skin details)
  - and then potentially we do frequency separation, where we literally separate the frequencies, which correspond to small, medium and large detail, and then basically eliminate medium sized detail

What is left after all this contrast reduction (lowering resolution) seems to be that we have the model's eyes and hair rendered in glorious detail, but skin at detail levels approaching 720p with a Petzval lens.

I'm not sure about you, but in the context of all that, I've kind of lost the logic as to why we need a wide angle lens that is 36MP wide open (8K resolution). They do exist, but go have a look at DXOMark and tell me how many lenses there are that exceed 30MP that anyone here can afford.
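
To make the "progressive" loss of contrast concrete, here is a rough Python sketch that blurs a sine-wave test pattern and measures its contrast at a few spatial frequencies. It assumes a plain box blur as a stand-in for a lens, which is a gross simplification of real optics, and the blur width and frequencies are arbitrary illustrative values.

```python
import math


def blurred_contrast(cycles_per_mm, blur_width_mm, samples=400):
    """Michelson contrast of a sine target after a box blur of the given width."""
    period_mm = 1.0 / cycles_per_mm        # simulate exactly one period
    step = period_mm / samples
    taps = max(1, round(blur_width_mm / step))

    def target(x):
        # Full-contrast sine pattern swinging between 0 and 1.
        return 0.5 + 0.5 * math.sin(2 * math.pi * cycles_per_mm * x)

    values = []
    for i in range(samples):
        x0 = i * step
        # Average the target over a blur window centred on x0.
        window = [target(x0 + (t - (taps - 1) / 2) * step) for t in range(taps)]
        values.append(sum(window) / taps)
    return (max(values) - min(values)) / (max(values) + min(values))


BLUR_MM = 0.02  # hypothetical blur width, not a measured lens property
for freq in (10, 20, 40):
    print(f"{freq} cycles/mm: contrast {blurred_contrast(freq, BLUR_MM):.2f}")
```

The same blur leaves coarse detail nearly untouched while fine detail fades gradually, which is the behaviour an MTF chart is plotting: there is no single cut-off "resolution", just contrast falling away as the detail gets finer.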

-

Last night I confirmed that the Panasonic 14mm f2.5 and the Panasonic 14-42mm kit lens are very similar in colour, so are close enough to be considered identical, which means I can shoot with both the GH5 and the Micro at the same time. This is really important as it means that I can shoot clips for comparison in rapidly changing lighting, instead of having a gap where I have to move the lens between cameras and the light might change during that delay. In order to accomplish this, I've just spent / wasted about an hour finding a rig that allows both cameras to be usable at the same time, where I can access everything on both, and can be tripod mounted or hand-held.. Behold the ugliness! I've also worked out a bunch of lens combinations that will have the same FOV where the "good" lens is sometimes on the GH5 and sometimes on the Micro. My plan is that once I've matched the colour and resolution we can get to testing mojo, and for that I want to be able to eliminate the factor of the lens, and I'll also be doing some blind (and anonymous) tests to see which people prefer, so having a rig that I can shoot with easily will be critical. As such, I had to work out all the combinations of lens filter thread sizes and making sure that every lens on both could get a variable ND and the Micro would always have the IR /UV cut filter too, so all those are now ordered. That was another hour of my life I'll never get back! I've also been thinking about what Juan said and I think I've wrapped my head around it and now have a plan.... 😈😈😈

-

AWB is what I shoot, and I think it just depends on how close a match I really want. The more difference I can tolerate the more flexible the inputs will be.

What kind of footage would you like?

Ha ha ha.. no, not easy at all. But that's half the fun isn't it? I don't like easy challenges.

Thanks for the tips, I'm basically doing this on what I've learned from messing around in Resolve and watching your LUT re-creation videos a million times! Would you suggest literally doing the corrections in linear? What tools would I use - my understanding is that the LGG wheels are in rec709 and offset is in log, but I don't know which would be that useful in linear?

-

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

kye replied to Andrew Reid's topic in Cameras

I just watched the entire season of I Am Not OK With This in one sitting... please pass on my congratulations to your brother - it was great stuff! I highly recommend everyone else checks it out, it's very well done. Looking forward to your doc too!

The other point about lenses and sharpness is also very strange, as in higher end professional cinematography there are three groups:

- Those who deliberately chase softer lenses for how they render detail in a more flattering way
- Those who chase a clean modern resolution (but then use cinema lenses which are actually very soft in comparison to photography lenses - I suggest people go look at the actual data and do the research)
- Those who truly chase high resolution lenses and use high resolution sensors

The last segment is by far the minority. I get it that if you're looking for a hybrid camera then you'll want images so sharp that you need those metal mesh gloves that butchers use just to pick up the SD card, but for cinematography, anyone who talks about sharpness is really just showing how disconnected they are from the cine industry in general. And ditto for autofocus.

-

It's interesting that the people above that are saying "I use FF, but don't judge me because I tried m43 and couldn't get around the issues" and then they go ahead and list a bunch of details about MFT and just get half of them wrong.... No wonder they couldn't get MFT to work for them - they fundamentally didn't understand it. I get that everyone shoots differently, it's when they say "FF is better because it takes photos, but MFT just makes pig sounds and steals your CC details" that I get a bit annoyed. Then again, I should account for this being the internet and just expect that idiots will just hang around waving their opinions around..

-

I realise that I might not have gotten the WB perfect on the outside shots - I set it to the outdoor preset instead of doing a custom WB, so I'll have to have a look at that. I am curious about what effects getting the WB wrong actually has, apart from 'screwing it up' which we all knew. I shoot auto-WB due to the situations that I shoot in so having a transform that is resilient to WB inaccuracies would be useful to me. Of course, emulating the Micro and getting a decent transform are two separate things, but still. Also remember that if I do it then I'll have proven you wrong, but if I fail to do it then you haven't been proven right, it's just that my attempt failed to prove you wrong

-

Juan just posted in his P6K to Alexa thread that he can do dawn to dusk with one transform, which makes sense to me. I also disagree with the statement from @Deadcode that you can't do it in a single LUT, and that ACES is the way, because it makes me wonder what ACES is, if it's not a single transform? and even if we take it that it's not a single transform, that would mean that the camera is changing the colour depending on circumstances, which to be properly processed would mean that the transform would have to know what colour the camera was using. I just need more diverse data. I have halogen, I can get natural light in a range of conditions, and I have the Aputure AL-M9 which claims a CRI of 95+, which will be good enough to use as a check, even if not as a source to match against.

-

Ah, ok.. I had forgotten that they were so different, so that makes sense. I can always build a different conversion for daylight. I'd just need to film the requisite reference frames. It seems odd that this would be the case though - essentially you just have a red sensor which receives light over an exposure and converts it to a digital value, and the same with green and blue. If they happen to be inside or outside doesn't change this basic principle. I've been thinking that the way I processed v0.4 and v0.5 isn't the best way, as it doesn't involve a 3x3 or a YUV matrix, which is how I've seen lots of LUTs and film emulations reverse-engineered, and I would imagine is similar to how the Micro colour science acts too - it exhibits certain things that make me think that those things might be going on. Right - next attempt is to film some daylight reference shots and try to build a conversion that works across both.

-

If anyone wants a laugh, check this out. This is what happens when you put the GH5 and Micro side-by-side and film outside. This is the exact same transformation as the above, which seems to match pretty well, but when you take it outside...... ........not so much!! Ha ha ha.. This is after setting the GH5 to WB of full sun, and carefully WB in post on both shots. Other images are all screwed up by about the same amount. The only variable here that is in play is that I had the Panasonic 14mm f2.5 lens on the Micro and the Panasonic 14-42 kit zoom on the GH5, so in theory there could be colour shifts, but both were set to F8 so were far away from whatever shifts might happen when they're wide open. I guess if this stuff was easy then everyone would do it. lol.

-

This is the grade applied to the GH5 200Mbps 1080 mode - I'm interested to hear opinions on the resolution vs the 4K mode above. GHMM 1080 normal exposure:

-

Version 0.5 Have added in compensations for matching at low-levels and highlights. These happened with some loss of fidelity in the mids, but we're probably well into nit-picking territory here. I've added the ISO noise and someone in a PM said the Micro had more detail, so I've also removed the blur that I had on the GH5, so it's now a straight downscale to 1080. I've also decided that it's now called GHMM. If things don't have a cool name then google says they don't exist. BMMCC +2 stops: GHMM +2 stops: BMMCC normal: GHMM normal: BMMCC -2 stops: GHMM -2 stops: There are a few hues where the brightness is quite a bit off, but these required very strong and very specific adjustments, which are likely to break real-world images, so I've kind of limited my grade to smoother adjustments. There's still some riskier adjustments around the skin tones, but we'll see how well they go in real life in the future.

-

Very interesting. I wish there was some kind of standard for testing these things, so that it would be obvious what characteristics various lenses have and what filters might be similar. They'd be easy enough to design tests for, just the effort of doing so and then spending the money to build the database.

-

And before that, people got excited when there was film fast enough to close down the aperture to have deep DoF shots.... in full daylight! There was a deep DoF fad where everyone wanted to be able to create deep DoF shots. Many famous movie scenes were shot where action unfolded in the foreground, mid-ground and background simultaneously, and it was all in focus! Imagine the possibilities!!

Perspective is great - it's just that we need so much more of it than we think we do.

I like to do my own experiments and actually try things out, and probably the greatest thing I have learned during all my years of experimenting is that half the stuff that "everyone knows is true" is actually complete BS.

Aristotle, who was (and still is) one of the greatest philosophers of all time, wrote some of the earliest works on biology, which were later printed and distributed widely. One of the things he wrote was that women had fewer teeth than men. The interesting thing about this isn't that he was wrong, it was that it was so easy to check, but for whatever reason, it wasn't done. More so than that even, was that people never questioned it, despite being able to easily check it within a few minutes of the question being posed.

The volume of things we think we know but are wrong about is only eclipsed by the things that we don't know, and the things we don't even know to ask about.

-

I find these conversations to be hilarious.. I suspect that no-one in this thread, or on these forums perhaps, actually knows enough to compare FF to crop sensors. Comparing FF vs MFT at a given FOV and DoF and exposure value is a complex picture, and no-one here is actually including all the variables. There are lots of people on here that don't even understand that you have to apply the crop factor to the aperture value but not the exposure value! No-one has even mentioned the GH5S dual-ISO yet - can anyone here speak with any actual experience of the impacts on DR and low-light ability between the A7iii and the GH5S when partnered with equivalent lenses? What about the P4K or P6K? What about the difference between the dual-ISO P6K shooting RAW vs the A7iii shooting compressed video? What about now? What about the relative noise levels in the shadows once we downscale the 6K RAW images onto a 4K timeline? Still feeling confident in these broad sweeping statements are we? What about when we apply enough NR to the 4K downscale of the 6K RAW images from the P6K to match the smoothing effects of the A7iii compression? Still feeling like an authority on the subject? Take a reality pill and a big step back people....
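
The crop factor point above (apply it to the aperture for DoF, but not for exposure) can be written out as a tiny Python sketch. It assumes the nominal MFT crop factor of 2.0 and the usual equivalence rule of thumb; the function name and the example lens are hypothetical, and it deliberately ignores everything else in the comparison (dual-ISO, codecs, NR, downscaling).

```python
MFT_CROP = 2.0  # nominal crop factor of Micro Four Thirds relative to full frame


def full_frame_equivalent(focal_mm, f_number, crop_factor):
    """Full-frame focal length and f-number giving the same FOV and DoF.

    The crop factor scales focal length (for FOV) and f-number (for DoF),
    but the f-number used for exposure is left unchanged.
    """
    return {
        "equiv_focal_mm": focal_mm * crop_factor,
        "equiv_dof_f_number": f_number * crop_factor,
        "exposure_f_number": f_number,
    }


# Example: a 25mm f/1.4 lens on an MFT body.
result = full_frame_equivalent(25, 1.4, MFT_CROP)
print(result)
```

So a 25mm f/1.4 on MFT frames and renders depth like a 50mm at about f/2.8 on FF, while still exposing as f/1.4.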

-

People are never satisfied. Mostly that's because they want something that they're not willing to put the work into getting. I have the GHAlexa LUT from Sage and I'm still doing this. I think having a LUT doesn't actually help a persons film-making all that much unless they were doing everything really well except colour, in which case that will significantly improve their weakest link. Putting any LUT on uninspiring footage won't help at all, and buying a LUT without understanding what it's doing only gives you the choice of using it or not using it, which isn't actually that useful if you don't know how to colour grade yourself and can adjust shots to get the most out of the different lighting in each shot.