Posts: 970

hyalinejim's Achievements

Long-time member (5/5)

Reputation: 1k

-

hyalinejim reacted to a post in a topic:

Chat: Films, art and cinema


-

hey! i was wondering if the LUT you created for this is still available - the Drive link seems to be broken.

many thanks!

original post:

-

I made a colour accurate LUT for the S5II and it's available here:

https://lumasweet.com/shop/p/lumasweetlifelikes5ii

The LUT in the post you mention was probably an early version of this.

-

-

hyalinejim reacted to a post in a topic:

Why FF is the digital age hybrid king?


-

hyalinejim reacted to a post in a topic:

Shallow DOF jumped the shark?


-

hyalinejim reacted to a post in a topic:

Let's bring back the good, old-fashioned camcorder of the 1990-2000s, but with modern specs.


-

hyalinejim reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

hyalinejim reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

hojomo reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

PannySVHS reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

This is probably what Adobe were trying to do with the Lumetri colour panel, to provide Lightroom-like controls for video users. The only problem is that it can't tell what the input is, so its behaviour is nothing at all like Lightroom, unfortunately. I'm not sure if this is related or not, but I remember there was a plugin called Logarist (which I never used). The idea was that you tell it what kind of log you're using and then its behaviour is consistent across cameras.

-

Michael S reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

PannySVHS reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

kye reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

kye reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

hyalinejim reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

While I agree that it's very pleasant to grade a project where all clips have the same exposure level, I've found that I really like the ACES CC curve - because it's perfectly straight! This makes matching exposure a doddle. I just need to move the whole waveform up or down to match one shot with another.

Again, if I could slap on a LUT and walk away I absolutely would - I would even burn it in! But for real world shooting it's incredibly difficult to nail exposure every time. And by "nail" I mean get the exposure that looks best to me. The topic of how to expose correctly is a rabbit hole when shooting outside of the studio: angle of the grey card to the light source, how to use a reflective meter correctly, worrying about K constants etc. Even when shooting negative film, there is always room for adjusting the brightness of the image in the printing or scanning process to compensate for "errors" in exposure.

For me, with digital video, the ideal solution is to shoot log, adjust the signal in ACES CC space to balance all clips, and send that to a nice conversion. This is a slightly different approach to what @kye suggests in the first post, I think, which is to not accept a given conversion as is, but to grade each clip to taste after the conversion. Instead, I have a conversion that I feel is nice enough without further tweaking, but I am using ACES to digitally re-work the signal in such a way that it's very similar to what you would get if the scene were brighter/darker, high-key/low-key, warmer/cooler. In this way it's actually very similar to shooting RAW photographs and using a RAW converter. And it allows for conscious decisions such as ETTR strategies, as well as compensating for errors. So my post work involves a brightness slider, a contrast slider and a colour wheel. And that's it!
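To show why a straight log curve makes exposure matching so easy, here is a rough sketch in Python. It uses the published ACES CC (ACEScc) encoding formula; the function names and the `shift_exposure` helper are made up for illustration and are not taken from any grading tool. On the straight part of the curve, one stop of exposure is a fixed offset of 1/17.52, so moving the waveform up or down by that amount is the same as doubling or halving the light.

```python
import math

def lin_to_acescc(lin: float) -> float:
    """Encode a scene-linear value with the ACEScc log curve."""
    if lin <= 0.0:
        return (math.log2(2.0 ** -16) + 9.72) / 17.52
    if lin < 2.0 ** -15:
        # Small toe region near black; everything above it is a pure log2 line.
        return (math.log2(2.0 ** -16 + lin * 0.5) + 9.72) / 17.52
    return (math.log2(lin) + 9.72) / 17.52

# On the straight part of the curve, one stop is a constant offset.
STOP = 1.0 / 17.52

def shift_exposure(acescc_value: float, stops: float) -> float:
    """Hypothetical helper: move an ACEScc value up or down by N stops."""
    return acescc_value + stops * STOP

grey = lin_to_acescc(0.18)         # 18% grey
one_stop_up = lin_to_acescc(0.36)  # the same grey with twice the light

print(grey, one_stop_up, shift_exposure(grey, 1.0))
```

The offset version and the "twice the light" version land on the same value, which is the whole appeal: a plain offset behaves like an exposure change made at the time of shooting.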

-

kye reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

PannySVHS reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

MrSMW reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

If I was able to nail exposure and white balance in every shot I wouldn't need any tweaking other than contrast. But I don't think anyone expects there to be a magic transform that doesn't require some small bit of work. We also want to leave ourselves a bit of flexibility in deferring some decisions until post.

-

kye reacted to a post in a topic:

Simple colour grading > Camera colour science (Why you should learn to colour grade)


-

Lol! I have used just one LUT for every single client job I've done over the last 5 years. It emulates the colour response of slightly overexposed Portra 400 film. I pop it on and adjust brightness, contrast and white balance in ACES space before the LUT. It takes 15 to 30 seconds to do most clips, and makes matching shots a breeze. I've made a new version for myself every time I get a new camera, so technically it hasn't been just one LUT, it's been three. But it does the same thing for each camera.
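For anyone curious what "brightness, contrast and white balance before the LUT" might look like as arithmetic, here is a minimal sketch. It is not the actual node tree or plugin settings, just one plausible way to do it on an ACES CC (ACEScc) encoded RGB triplet. The `adjust` function, its parameter names and the choice of 18% grey as the contrast pivot are all assumptions made for illustration.

```python
STOP = 1.0 / 17.52   # one stop on the straight part of the ACEScc curve
MID_GREY = 0.4136    # approximate ACEScc value of 18% grey

def adjust(rgb, exposure_stops=0.0, contrast=1.0,
           wb_stops=(0.0, 0.0, 0.0), pivot=MID_GREY):
    """Hypothetical pre-LUT adjustment on a log-encoded RGB triplet.

    exposure_stops: same offset on all channels (brightness).
    wb_stops:       per-channel offsets (white balance); an offset in log
                    is a gain in linear light.
    contrast:       slope around the pivot, so the pivot itself never moves.
    """
    out = []
    for value, wb in zip(rgb, wb_stops):
        value = value + (exposure_stops + wb) * STOP
        value = (value - pivot) * contrast + pivot
        out.append(value)
    return tuple(out)

# Example: lift a clip by half a stop, warm it slightly, add a bit of contrast.
pixel = (0.40, 0.41, 0.43)
graded = adjust(pixel, exposure_stops=0.5, contrast=1.1,
                wb_stops=(0.1, 0.0, -0.1))
print(graded)
```

The result would then be fed into the LUT unchanged, so the look stays the same from clip to clip and only the three controls vary.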

-

hyalinejim reacted to a post in a topic:

THE Big Question


-

hyalinejim reacted to a post in a topic:

Canon Highlights?


-

hyalinejim reacted to a post in a topic:

Why Christopher Nolan uses a flip-phone


-

Yes, I agree. I used that little APS-C one on a bunch of interviews and ended up blurring the footage.

-

Too late to add an edit, so here it is: Yes, you're right, in development you can adjust the contrast and density (exposure) of the negative. But ECN-2 is a fixed process, there's a recipe to follow. Let's say you didn't have enough light and knew you underexposed the scene. You would have to send an instruction to the lab to push that whole reel 1 stop or whatever. Now, bear in mind that this is my understanding. I could be talking out my ass here!

-

@TomTheDP I think there is a "second exposure" when the negative is exposed to the print film. This is the origin, I seem to remember reading, of the term colour timing - you can control the brightness by adjusting the duration of the exposure. So if you had overexposed your scene to get cleaner shadows, let's say, you would now underexpose it to the print film, I imagine. I guess it was also possible to introduce colour filtration at this stage to correct for "white balance". I'm basing all this on half-recollections of things I read years ago, so take it with a pinch of salt. But certainly when you expose a negative to photographic paper, this is how it's done. I've done a bit of that and it's fun!

-

I went through a phase of trying to find the perfect cheap (non-L) EF zoom to use for film photography. I was looking for something small and relatively lightweight. When I tested them on digital they were all kind of horrible, specifically in terms of veiling flare, which I dislike quite a bit. I guess I am a fan of more contemporary coatings. But it is fun shopping for lenses!

-

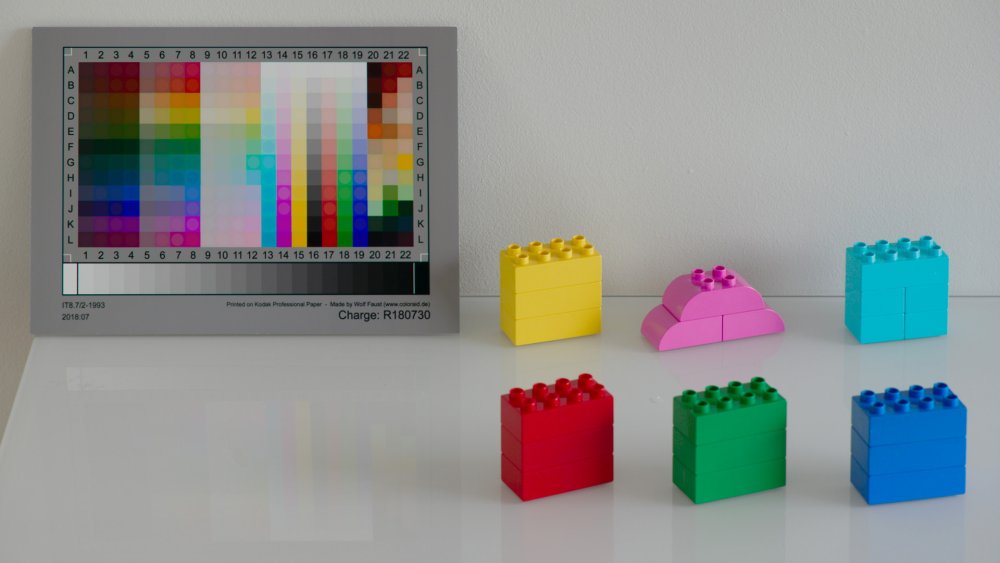

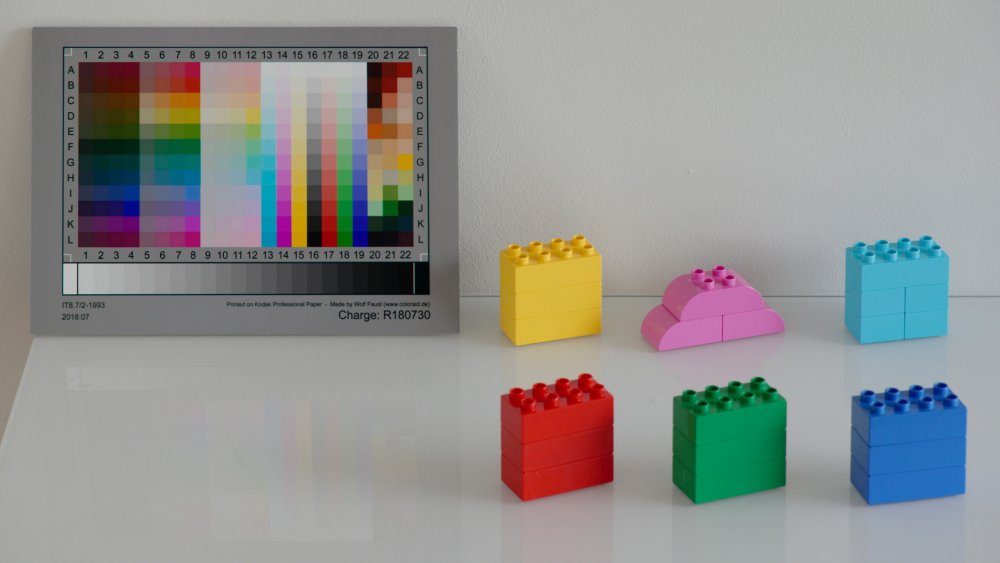

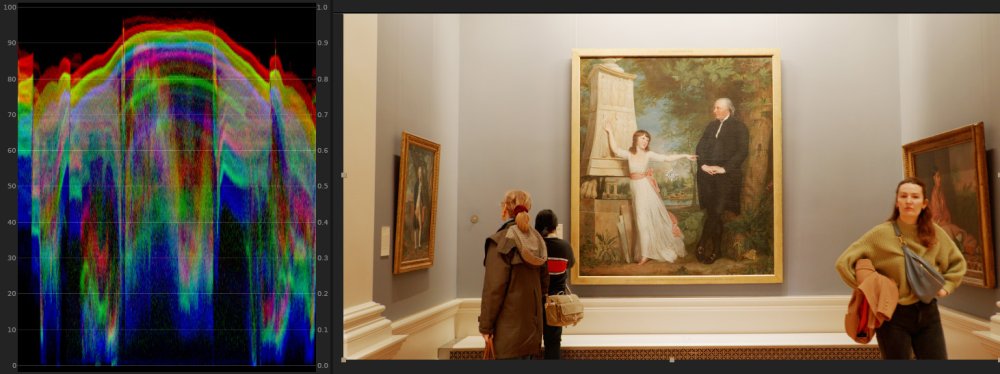

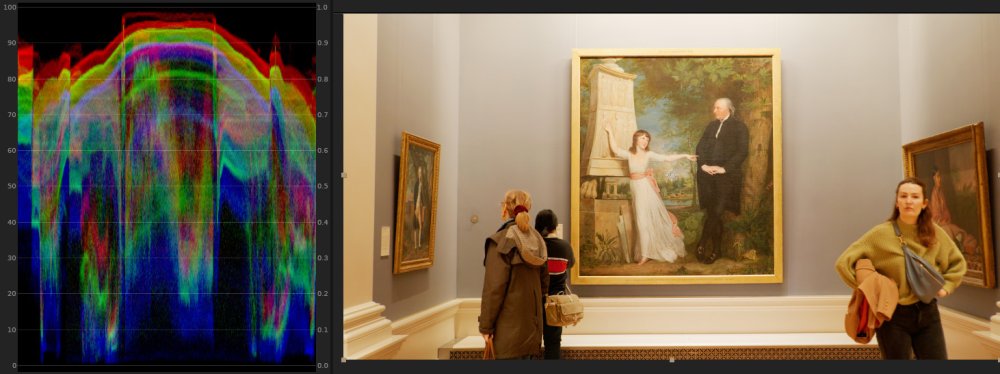

Panasonic S5 II (What does Panasonic have up their sleeve?)

hyalinejim replied to newfoundmass's topic in Cameras

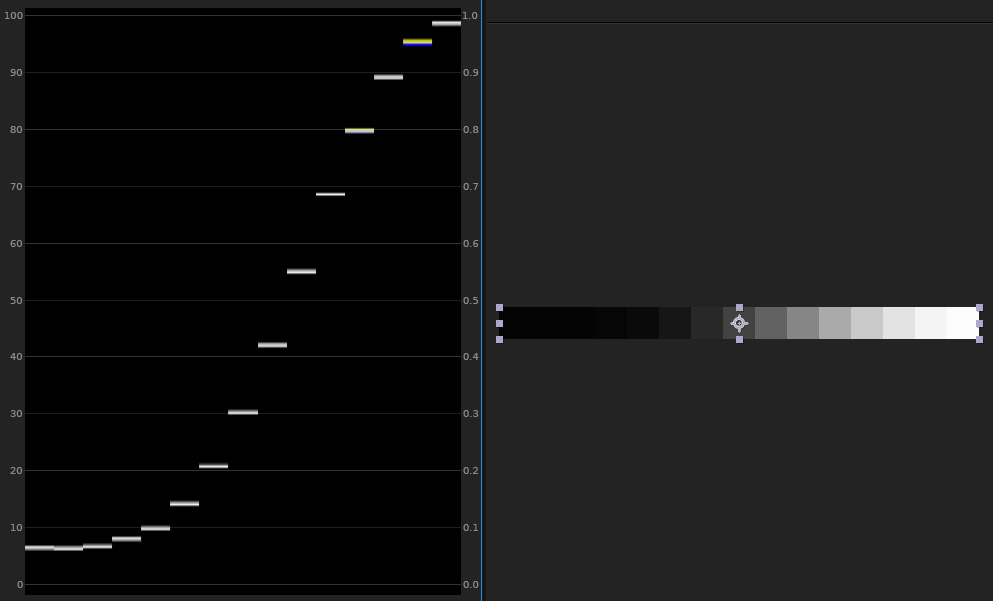

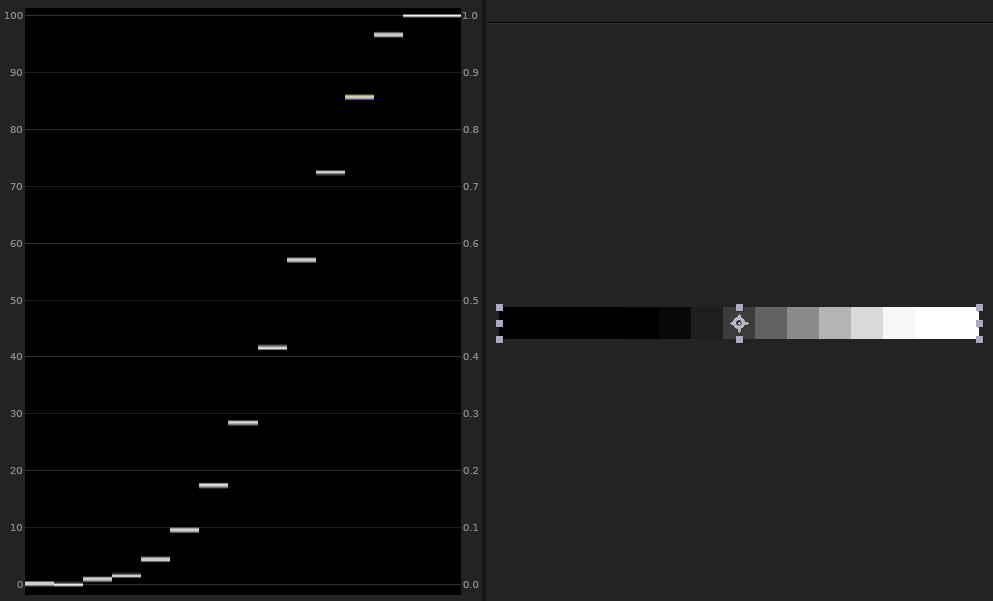

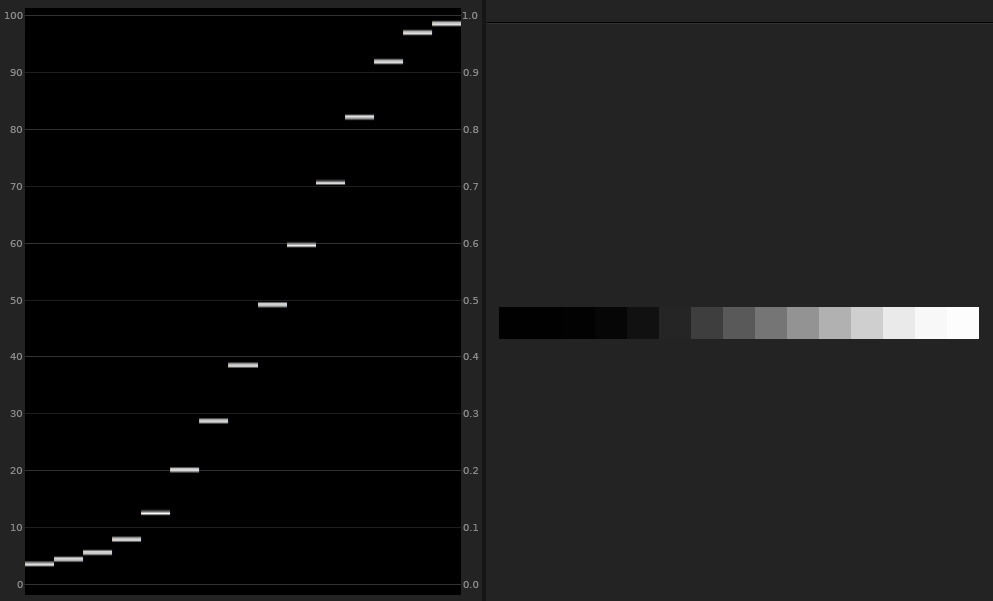

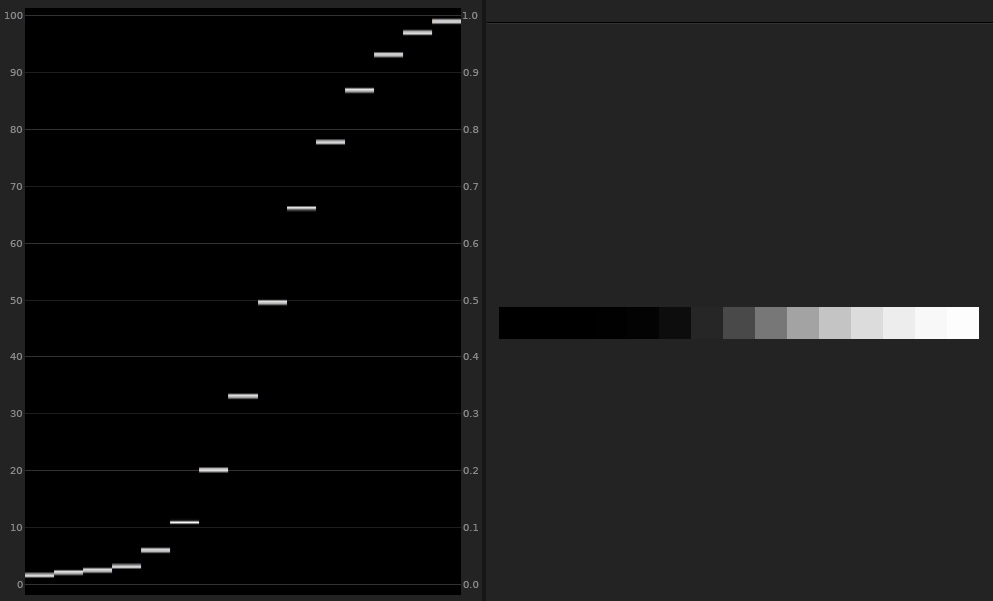

Lol, yes it was! It started off as me saying to myself "Oh, I suppose I might as well knock up an accurate colour LUT for my new camera, should probably take an hour or two", but it ended up taking a little bit longer than that!

It's an interesting idea that the V35 to V709 LUT is primarily designed for monitoring. It certainly works very well in that regard when it comes to colour because, as you say, there's very little clipping or over-saturation. But I always found the curve a bit weird for these reasons:

- Middle grey stays at 42 IRE. But middle grey for Rec709 is 49 IRE.
- The shadows are lifted. It looks OK on a Rec709 display, i.e. dark areas do look dark. But on an sRGB display you can never get true black without pulling the shadows down after the LUT.
- Panasonic says that "Output is in legal range only" but if you convert the output from legal to data levels then the shadows are properly dark, but the highlights go into the superwhites, which is annoying.

I had a good long think about what kinds of curves to supply with my conversion. I settled on:

- Panasonic's default curve (for those that are used to it)
- No curve at all (for those who want to create their own)
- A flat curve to just de-log the footage but keep highlight and shadow detail (for those who like to tweak exposure and contrast after the LUT. Middle grey = 49 IRE)
- A really nice, strong filmic curve (for those who like to tweak exposure and contrast before the LUT. Middle grey = 49 IRE)

LumaSweet FLAT:

LumaSweet FILM:

Yes, without any gamut mapping or saturation control the more saturated colours get ugly fast. Here I've put the reference values of the chart as an overlay of little circles. If you can see them there's a discrepancy.

Here's ACES V-gamut to Rec709 colour:

LumaSweet Lifelike colour:

So my conversion handles highly saturated colours pretty well, straight off the bat.
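The two curve points above (middle grey sitting around 42, and what happens when legal range is expanded to data levels) can be checked with a few lines of Python. This is only a sketch under stated assumptions: the V-Log constants are the ones I understand to be in Panasonic's published V-Log formula, the legal range is taken as the usual 10-bit 64 to 940, and the function names are invented for the example. It says nothing about what the V709 LUT itself does internally.

```python
import math

def vlog_encode(lin: float) -> float:
    """V-Log OETF (constants assumed from Panasonic's published formula)."""
    cut1, b, c, d = 0.01, 0.00873, 0.241514, 0.598206
    if lin < cut1:
        return 5.6 * lin + 0.125
    return c * math.log10(lin + b) + d

def legal_to_data_10bit(code: float) -> float:
    """Expand 10-bit legal range (64-940) to data/full range (0-1023)."""
    return (code - 64.0) * 1023.0 / 876.0

# 18% grey in V-Log comes out at roughly 42% of the signal range.
print(vlog_encode(0.18))

# Legal black and legal white map to the ends of the data range...
print(legal_to_data_10bit(64), legal_to_data_10bit(940))

# ...so anything the LUT outputs above legal white ends up past 1023.
print(legal_to_data_10bit(1019))
```

If the LUT leaves middle grey where V-Log put it, that lines up with the 42 figure quoted above, and any output above code 940 has nowhere to go after a legal-to-data expansion except out of range.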
Even compared to Panasonic's V709, it is usually better at preventing very bright or saturated colours from slipping off the waveform in the shadow areas, and almost as good in the highlights:

Panasonic V709 colour

LumaSweet Lifelike colour

-

If the lens doesn't communicate the focal length metadata to the camera body (which I guess this won't as it's an old lens) then you need to enter it manually. For a zoom, it's a nuisance as you would need to re-enter the focal length every time you change it. There's a way to save a number of lens presets. So you could save 40 and 80 (if you're only going to use those two). Then there might be a way to assign the lens preset menu to a custom button. I'm not sure as I don't have my camera with me right now. But there would still be a few button presses involved. If you were planning to use the lens at the same focal length for the whole shoot then it would be no problem. But switching from one to the other and back again might slow you down.

-

That starting point I posted about in the S5II thread is designed to give reality-accurate colour, but for my own work I use a film emulation. Over the last few years I've profiled around a dozen different films, both negative and slide film. The best of these for the commercial work that I do is Portra 400, which is kind of the world's favourite negative film anyway. Since the GH5, through the GH6 and now the S5II I've used this emulation for colour and contrast.

For me it's more convenient to slap on a LUT (a good one though!) rather than having to figure out how to grade each project, scene or clip from scratch. Then, the only grading I need to do is to make adjustments to brightness, contrast and colour balance before the LUT, perhaps saturation sometimes. The engineers at Kodak spent years researching and improving their film stocks to give us a really nice colour palette. It takes a fair bit of time and effort for me to make an emulation that works well. But once it's made I can fly through the grading process in post, which is very useful when there's a deadline, and get great colour.

It's very rare that I would need to do intermediate or advanced grading techniques like adjusting individual hue ranges or isolating colours, although of course it's very useful to know how to do those things. I think ever since the days of VisionColor (I might be misremembering the name), a picture profile for the 5DII, I really liked the idea of finding a colour transformation I like for the camera I use and sticking to it.

-

I don't think that's quite right. If you follow the manufacturer's recommendations for development then you should get what you see on the data sheet ie: 16 stops for Vision 3 or whatever amount it is they're claiming, I forget the number. Do you mean the transfer from the neg to the print stock? The print stock would have a much lower dynamic range, because it's for projection, but so does your monitor. You compress the stops for the output device, in both cases.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

hyalinejim replied to newfoundmass's topic in Cameras

The problem is that there is only one conversion, the V35 to V709, but it's not accurate for all cameras because there are different sensors in them. I don't know if it was even accurate for the V35! Ideally, Panasonic would either calibrate the V-Log colour for each model so that it matches the input that the LUT expects (there is an internal transform from RAW to V-Log), or else create a custom conversion that's specific to each model. If you want as accurate a conversion as possible for the S5II or S5IIx then LumaSweet Lifelike will do that job.

From what I've seen of other cameras (GH5 and GH6) they're all significantly different from each other, and there's no real pattern to it, other than they all share bright, desaturated blues (though not to the same extent). Reds are all over the place between the three models. People complained about magenta skintones on the GH5. The GH6 has much brighter skintones (which looks nicer) but it's too far towards orange to be accurate. The hue of S5II skin is fairly accurate but it's so dark as to cause blotchiness.

The only scope Panasonic has for calibrating colour across models under their current system is in the internal RAW to V-Log conversion for each camera. They're stuck with the problem of varying sensors (or colour filter arrays, IR filter, or whatever other hardware is causing the colour variations). They're stuck with a single conversion LUT. I guess the only thing they can tweak is the matrix that converts RAW colour to V-gamut. This is just a grid of numbers and is a very general kind of correction. Its effect on colour can be a bit like herding cats. And I would guess that this is why the colour varies so much between models. I'd love to know if it's the same problem with Canon and Sony, or whether their implementations of log are a bit more consistent.
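To illustrate the "herding cats" point about matrices, here is a small Python sketch. The matrix values are completely made up and have nothing to do with Panasonic's real RAW to V-gamut matrices, which I have no knowledge of. The only thing it is meant to show is that a 3x3 matrix is a global correction: a coefficient changed to fix one colour moves other colours too.

```python
def apply_matrix(matrix, rgb):
    """Multiply a 3x3 colour matrix by a linear RGB triplet."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in matrix)

# Hypothetical tweak: push reds a little harder.
# Each row sums to 1.0 so neutral greys are left alone.
TWEAKED = (
    (1.10, -0.10, 0.00),
    (0.00,  1.00, 0.00),
    (0.00,  0.00, 1.00),
)

grey = (0.5, 0.5, 0.5)
red = (1.0, 0.0, 0.0)
skin = (0.8, 0.5, 0.4)    # rough, illustrative skin-like colour
green = (0.0, 1.0, 0.0)

for name, colour in [("grey", grey), ("red", red),
                     ("skin", skin), ("green", green)]:
    print(name, apply_matrix(TWEAKED, colour))
```

Grey stays put and red gets the intended boost, but the skin-like colour shifts as well and pure green picks up a negative red value. There is no way to move one patch with a matrix alone without dragging others along, which is the kind of job a LUT or a more local correction handles better.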