KnightsFan

Member · Posts: 1,382


Everything posted by KnightsFan

-

You should be able to send audio from one of the Camera Outs on the DR-60 to the microphone jack on the NX1 via an aux cable. However, when I tried this once I got horrible noise, so your mileage may vary. For syncing, I usually read the name of the audio file off the DR-60 screen aloud. That way the camera's scratch audio tells me which audio file goes with which video file. You could also write the filename on a whiteboard or clapperboard and hold it in front of the camera, but I've found it's easier to have that identifier in the camera's internal audio than in the video image. Whichever works for you. You should also make a loud clap to give yourself a sync point. In post, it's usually easy, but time-consuming, to sync audio and video manually. Alternatively, there are several automatic tools. Premiere and Resolve both have built-in features where you select an audio file and a video file and they sync them automatically. I've found they work some of the time. PluralEyes also seems to be a popular tool, though I've never used it.
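Automatic sync tools generally work by matching waveforms: they slide one track against the other and look for the offset where the two line up best. I don't know how Premiere, Resolve, or PluralEyes implement this internally, so the following is only a minimal brute-force cross-correlation sketch, with made-up sample data standing in for a clap recorded on both the DR-60 and the camera. The function name `best_offset` is hypothetical.

```python
def best_offset(reference, other, max_lag):
    """Return the lag (in samples) that best aligns `other` with `reference`.

    A positive lag means events appear `lag` samples later in `other`.
    Brute-force cross-correlation: O(len(reference) * max_lag).
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, sample in enumerate(reference):
            j = i + lag
            if 0 <= j < len(other):
                score += sample * other[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Made-up data: a clap (spike) at sample 3 on the recorder, sample 7 on the camera.
recorder = [0, 0, 0, 1.0, 0.2, 0, 0, 0, 0, 0]
camera = [0, 0, 0, 0, 0, 0, 0, 0.9, 0.3, 0]
print(best_offset(recorder, camera, max_lag=6))   # 4
```

Dividing the lag by the sample rate (e.g. 48000 samples per second) gives the offset in seconds. A practical tool would need a faster correlation method for long files, and this sketch ignores clock drift between the two devices.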

-

I read that it's a limitation of the physical design of the E-mount. The mount diameter is very small, so lenses can't project a large enough image circle to allow sensor movement without vignetting. Source: https://petapixel.com/2016/04/04/sonys-full-frame-pro-mirrorless-fatal-mistake/
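The geometry behind this can be sketched quickly: a sensor that shifts off the optical axis pushes one corner farther out, so the lens has to cover a larger image circle. The shift values below are hypothetical, and this says nothing about the actual E-mount throat or any real stabilization system; it only shows how the required circle grows with sensor movement.

```python
import math

def required_image_circle_mm(sensor_w, sensor_h, shift_x=0.0, shift_y=0.0):
    """Diameter of the image circle needed to cover a sensor whose center is
    displaced by (shift_x, shift_y) mm from the optical axis."""
    half_w = sensor_w / 2 + abs(shift_x)
    half_h = sensor_h / 2 + abs(shift_y)
    return 2 * math.hypot(half_w, half_h)

centered = required_image_circle_mm(36, 24)          # full-frame diagonal, about 43.3 mm
shifted = required_image_circle_mm(36, 24, 1, 1)     # hypothetical 1 mm shift on each axis
print(round(centered, 1), round(shifted, 1))
```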

-

I got one of these Talentcell power banks: https://www.amazon.com/Talentcell-Rechargeable-6000mAh-Battery-Portable/dp/B00MF70BPU/ref=sr_1_1?ie=UTF8&qid=1532111479&sr=8-1&keywords=talentcell+6000+mah It powers my F4 for several days of shooting on a single charge. It can simultaneously provide power and charge from an AC outlet, which saved me once when I forgot to charge it the night before. I have my battery mounted to the top of the F4 with a custom clamp, but you could probably use a cell phone tripod adapter to hold it on the F4. You could also use gaffer tape if all else fails.
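For a rough idea of runtime from a power bank, divide the stored energy by the average draw. This is a back-of-the-envelope sketch: the 12 V nominal rating, the 85% conversion efficiency, and the 3 W load are all assumptions, not measured figures for the Talentcell or the F4.

```python
def runtime_hours(capacity_mah, nominal_voltage_v, load_w, efficiency=0.85):
    """Rough runtime estimate: stored energy (Wh) x conversion efficiency / load (W)."""
    energy_wh = capacity_mah / 1000 * nominal_voltage_v
    return energy_wh * efficiency / load_w

# Assumed: 6000 mAh at a 12 V nominal rating (72 Wh) and a made-up
# 3 W average draw. Neither figure comes from a spec sheet.
hours = runtime_hours(6000, 12, 3.0)
print(f"about {hours:.0f} hours")
```

With these made-up numbers the bank lasts a couple of long shoot days; plug in your own measured draw to get a real estimate.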

-

Exactly. I can just do away with the USB cable clamp so that I can unplug the cable when necessary to avoid the glitch, but that's a lot less sturdy. @iamoui Thanks for the suggestion! I considered that early on, but I like powering both the monitor and the camera (and any other accessories) from the same power bank. Furthermore, the battery grip would add extra height, which throws off the balance of my rig. If this glitch can't be solved, I'm satisfied with a non-permanent USB cable that I can detach when necessary, and eventually I hope to figure out a way to make it quick-release. But it would certainly be ideal if there were a way to make the setup I shared in the photo work properly.

-

I see, makes sense! Yeah, don't leave any long HDMI cables dangling about. If you're in a shop and don't need to move the monitor much, I think 7" won't be too large. Personally, I might even favor the suggestion of a PC monitor, or an HDTV that you can see from across the room. Is there any chance you can get audio meters and peaking out of your GH5? As for the monitors you've listed, my experience with cheaper monitors is that pixel-to-pixel never works with 4K. The Bestview pretty clearly states that it DOES, but I assume that both Feelworld monitors do NOT, despite what any YouTubers say. The Feelworld monitors I've used will zoom in, but they zoom in on the downscaled image, not the original 4K one, so the zoom is pretty useless for checking focus. So the Bestview seems to be the best for judging focus, though it's pricey. Maybe you could ask a seller whether UHD 23.976 and 29.97 are supported? It does seem very odd if not. Between the Feelworlds, the main difference seems to be the number of custom buttons, which really doesn't make an $80 difference to me. I usually leave focus peaking on. I switch false color on and off regularly, but tbh I don't regularly toggle any other features.
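A toy one-dimensional model shows why zooming into a downscaled image doesn't help with focus: once neighboring pixels have been averaged together, the finest detail is gone, and enlarging the result cannot bring it back. A true pixel-to-pixel mode instead shows a window of the original 4K samples. The averaging and pixel-repeat functions here are hypothetical stand-ins for whatever scaler a given monitor actually uses.

```python
def downscale_2x(row):
    """Average neighboring pairs, like fitting a 3840-wide line onto a 1920-wide panel."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]

def zoom_2x(row):
    """Nearest-neighbor 2x zoom: every pixel is simply repeated."""
    return [value for value in row for _ in range(2)]

def crop_1to1(row, start, width):
    """Pixel-to-pixel view: a window of the original samples, unchanged."""
    return row[start:start + width]

# The finest detail a 4K line can hold: alternating dark and bright pixels.
uhd_line = [0, 1] * 8   # 16 samples standing in for 3840

zoomed = zoom_2x(downscale_2x(uhd_line))[:8]
one_to_one = crop_1to1(uhd_line, 0, 8)
print(zoomed)       # all 0.5: the detail is gone
print(one_to_one)   # 0, 1, 0, 1, ...: the detail survives
```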

-

I, too, switched from 7" to 5" because the large screen was unwieldy. However, the size was not an issue for locked-off tripod shots; it was only a problem for handheld work or those occasional really tight spaces. I could see 7" being perfect for a one-man show, where I assume you'll be using a tripod most of the time. However, if you ever do another project with a crew or handheld shots, I think the 5" will be more useful. Either way, what about using a long HDMI cable and bringing the monitor with you, rather than leaving it on the camera? You can just set it down out of frame when you're ready to roll.
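The size difference is bigger than the diagonals suggest, because screen area goes with the square of the diagonal. A quick check, assuming both panels are 16:9 and ignoring bezels and housing:

```python
import math

def screen_area_sq_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Viewable area of a flat panel with the given diagonal and aspect ratio."""
    diagonal_units = math.hypot(aspect_w, aspect_h)
    width = diagonal_in * aspect_w / diagonal_units
    height = diagonal_in * aspect_h / diagonal_units
    return width * height

ratio = screen_area_sq_in(5) / screen_area_sq_in(7)
print(f"{ratio:.2f}")   # 0.51, i.e. (5/7)**2
```

So a 5" screen has roughly half the glass of a 7" one at the same aspect ratio.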

-

There are two USB modes, Mass Storage and Remote Access. In Mass Storage mode, the camera will lock up if a USB cable is plugged in (such as a permanent right-angle adapter...). It's in Remote Access mode that I get the HDMI glitch. True, the batteries last a long time compared to many other cameras. But 90% of my shots are on a tripod with room for a massive battery, so it's a choice between changing batteries every two hours or never having to worry about batteries.

-

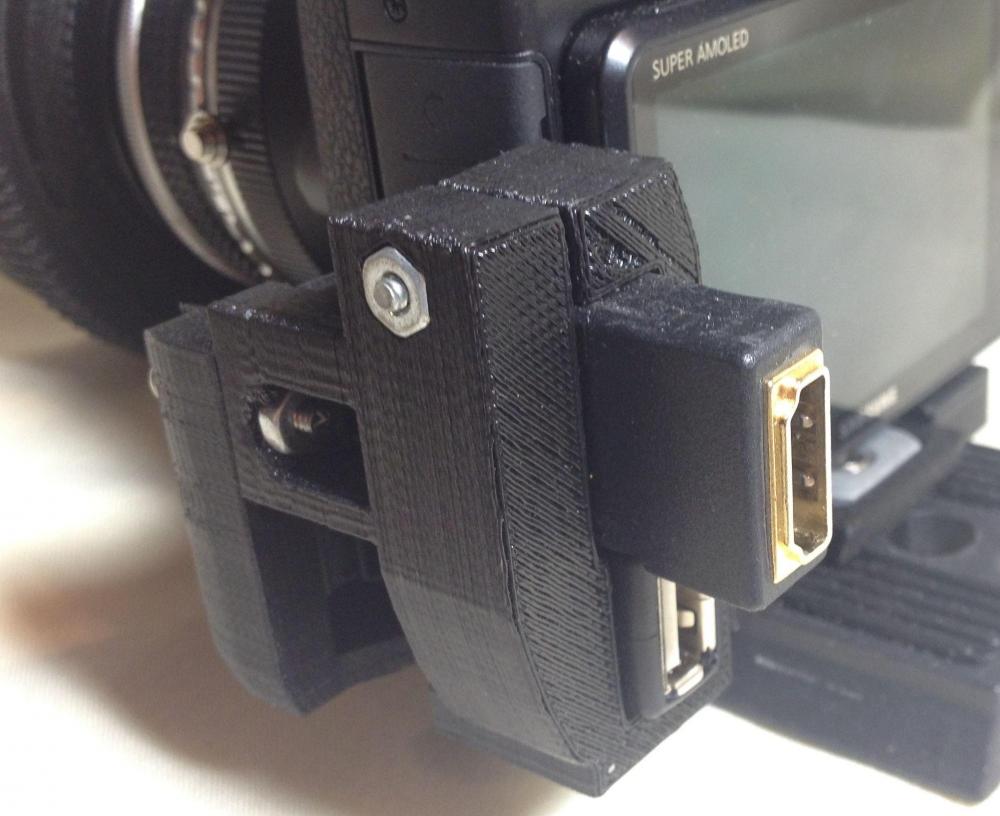

One of the biggest annoyances about the NX1 for me is that HDMI output and USB recharging barely work together. If anyone else has overcome this issue, please let me know.

Essentially, if a USB cable is attached to the NX1 but the other end is not attached to a power bank when the camera is powered on, the HDMI output will not work at all.

My ideal setup is to power the camera from a TalentCell power bank, which also powers my monitor (fantastic solution, btw: it runs all day and can even be tethered to an AC outlet). To that end, I 3D printed a clamp that holds both an HDMI and a USB adapter. It's sturdy and great for cable management. However, if I power on the camera without first attaching the USB cable to the power bank, the HDMI port will not output any signal at all. For example, if I power on the camera as seen above, with right-angle adapters in place that go nowhere, there is no way to get an HDMI signal out of the camera; I have to power off, attach the USB cable to my power bank, and then power the camera back on.

Pretty much any other combination works perfectly. If the camera is powered on without the right-angle adapters in place, there are no issues. I can even disconnect the USB cable after powering on the camera, and HDMI will continue to work. It really just seems to depend on whether there is a USB cable to nowhere at the moment the NX1 is switched on. Has anyone else encountered this or, even better, overcome it?

-

I'm not saying it looks like film grain, but digital noise seems fine to me before compression.

-

Compression also hurts digital noise. Noise from uncompressed Raw files looks great; it's only after compression that it becomes video-y.

-

Liliput A5, or any other cheap 5" or smaller competitors?

KnightsFan replied to IronFilm's topic in Cameras

@Timotheus No, it does not have an anamorphic mode.

-

Forgot to mention one more pro: almost no latency with a 1080p signal. It's only noticeable at all on very fast whip pans. I haven't tried a 4K signal yet.

-

I got the A5 in a rush (after finding out at the last minute that the Ninja 2 does not work with the NX1), and have been shooting with it every day for the past two weeks. I'm pretty happy with it. Regarding the discussion of brightness, it's adequate. I've had the brightness at 50% and it's viewable outside for framing and peaking, though it's no match for an EVF. All in all, I would totally recommend it to anyone on a budget who doesn't want a massive 7" monitor. But make sure your batteries work with it before relying on it!

Here are my pros and cons, loosely in comparison with the Feelworld FW760 that I used to use.

Pros:

- Smaller but still 1080p. 5" vs 7" is a huge difference, and it also has thinner bezels and a thinner body, so it's actually much smaller.
- MUCH lighter, which makes my setup more balanced.
- HDMI out.
- Mounting points on three sides.
- The screen is fantastic. Focus peaking works very well.
- It remembers settings through power cycles. The FW760 would reset everything whenever it powered down.

Cons:

- The battery plate is finicky. It's not a problem for me since I power everything from a power bank (I didn't even own any batteries for my FW760). It will not work with a Wasabi LP-E6, but it will work with an Ipax LP-E6, even though BOTH of those batteries power a 5D3 perfectly.
- Plasticky. I think the FW760 felt a little sturdier, but not by much. However, the mounting points seem secure and it's not going to fall apart in your hands. It's about the build quality you'd expect at this price.
- Only one customizable button.
- False color has no color scale on the side, so you have to guess which IRE each color corresponds to.
- The 1/4-20 threads are too shallow. I had to put a few washers on my friction arm's thread.
- The hot shoe mount that comes with it is junk.
- It would have been really nice to have one of these on the back (I had one on my FW760 and used a lock washer on the friction arm to keep it from accidentally rotating): https://www.amazon.com/SmallRig-Camera-Threaded-Monitor-Accessories/dp/B01NCK79G2

-

I'm not 100% sure I'm following you. If you mean that the focus throw of the lens is too short to pull focus accurately, that is entirely due to the construction of the lens. You can give the same optics a focus throw of 5 degrees or 300 degrees.
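A rough way to see this: the optics fix how far the focusing group has to travel between infinity and closest focus, while the helicoid (or cam) fixes how much ring rotation that travel takes. The sketch below uses the thin-lens approximation and a simple linear helicoid; the 50 mm focal length, 450 mm closest focus, and pitch values are hypothetical, and real internal-focus lenses are more complicated.

```python
def extension_mm(focal_mm, object_distance_mm):
    """Extra lens-to-sensor travel needed to focus at object_distance_mm,
    relative to infinity (thin-lens approximation: f**2 / (u - f))."""
    return focal_mm ** 2 / (object_distance_mm - focal_mm)

def focus_throw_deg(focal_mm, closest_mm, pitch_mm_per_turn):
    """Ring rotation from infinity to closest focus for a simple helicoid
    that advances the optics pitch_mm_per_turn per full 360-degree turn."""
    return extension_mm(focal_mm, closest_mm) / pitch_mm_per_turn * 360

# Same hypothetical optics (50 mm, closest focus 450 mm from the lens), two helicoids:
for pitch in (25.0, 7.5):
    print(pitch, round(focus_throw_deg(50, 450, pitch)))   # 90 and 300 degrees
```

The optical travel (6.25 mm here) is identical in both cases; only the thread pitch changes the throw.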

-

No, we were talking about pixel vignetting, which is caused by pixels being recessed. Oblique rays of light get occluded by the "rim" of the pixel, and the farther a pixel is from the center of the image, the more oblique the rays that strike it. Thus, more light is occluded at the corners, which causes vignetting. My thought was that if you simply take a design and scale it down, none of the angles will change. So the light rays passing through a 50mm on full frame would have the same angles as those through a 25mm on MFT, if measured at corresponding points on the sensors. Now, I just did a back-of-the-envelope calculation that implies I was wrong, since we are comparing at equivalent DOF, which interestingly makes the aperture diameters equal. (Maybe that's obvious to all of you, but I found it interesting.) But I don't know enough about optics to be sure that any of my thought process is correct!

True! That is why Andrew compared a 17.5mm f0.95 to a 35mm f2.0. Halving the focal length requires an aperture two stops wider for equivalent depth of field.
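Both the equal-diameter observation and the two-stop rule can be checked with the f-number definition N = f/D (focal length over entrance pupil diameter). A minimal sketch; it only covers aperture arithmetic and says nothing about pixel vignetting itself:

```python
import math

def entrance_pupil_mm(focal_mm, f_number):
    """Entrance pupil diameter from N = f / D."""
    return focal_mm / f_number

def stops_between(fast_n, slow_n):
    """Exposure difference in stops; light per unit area goes as 1 / N**2."""
    return 2 * math.log2(slow_n / fast_n)

mft_pupil = entrance_pupil_mm(17.5, 0.95)   # about 18.4 mm
ff_pupil = entrance_pupil_mm(35.0, 2.0)     # 17.5 mm
print(round(mft_pupil, 1), round(ff_pupil, 1))
print(round(stops_between(0.95, 2.0), 2))   # a bit over 2 stops
print(stops_between(1.0, 2.0))              # the exact crop-factor-2 case: 2.0
```

The pupil diameters come out nearly the same, and f0.95 is slightly faster than the exact half-f-number (f1.0) that a crop factor of 2 would call for.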

-

I'm probably wrong; I'll need to read up more on lens design. It was an intuition based on the fact that scaling an object doesn't change any angles, but again, I'm not sure it's right. I hoped someone could explain why it was right or wrong. @Brian Caldwell That's how I understood you before, thanks for clarifying!

-

Exactly. So my intuition was that a 17.5mm f0.95 on MFT would have roughly the same angle of incidence as a 35mm f2.0 on FF, thus making pixel vignetting a non-issue when comparing the two. @Brian Caldwell has given me a few more things to read up on, thanks! Though, if I understand you correctly, the obliquity only darkens the edges of the bokeh, and will not have a large effect on the part that is in-focus?

-

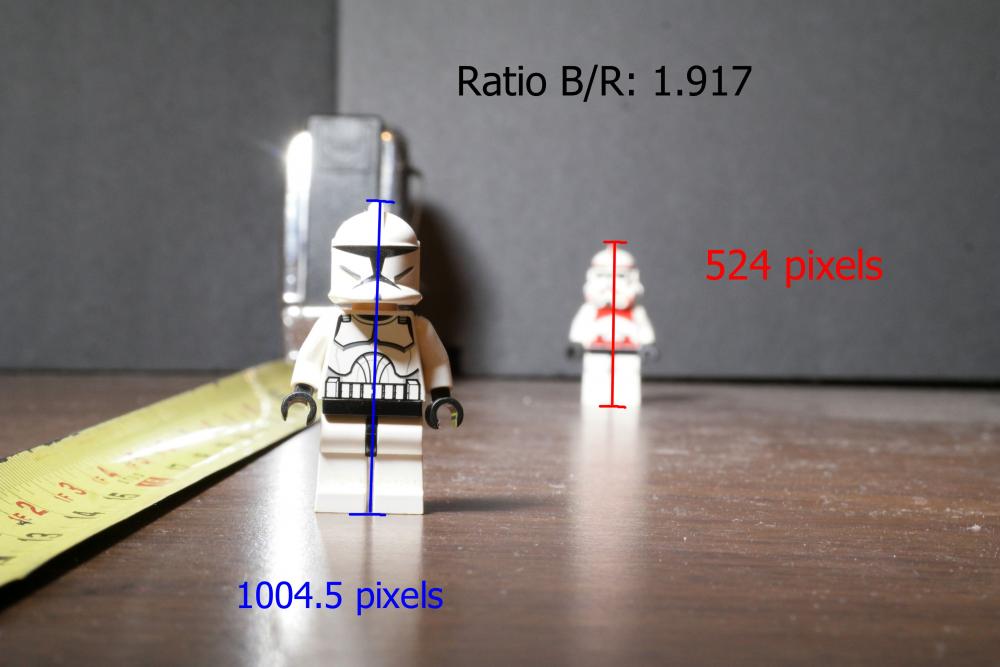

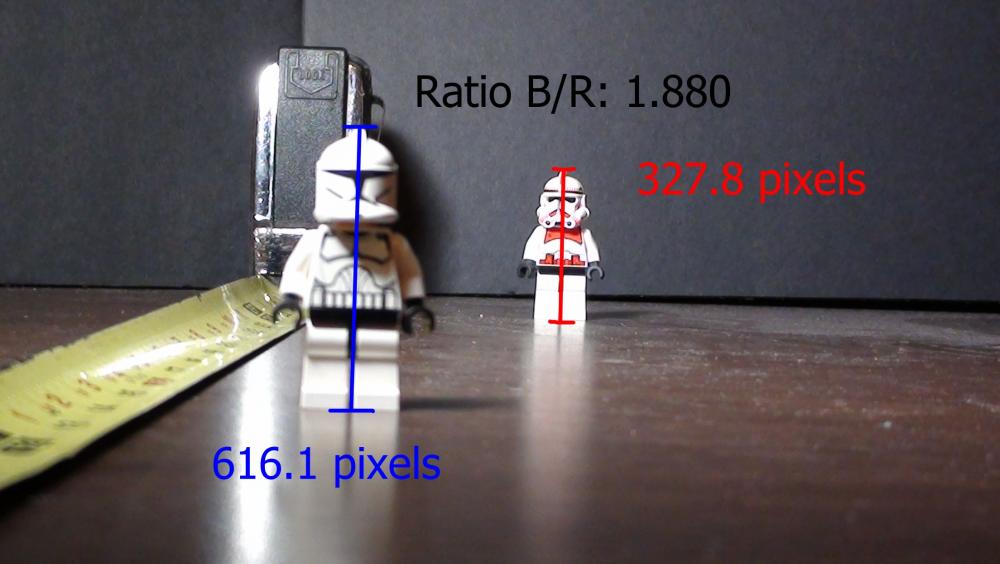

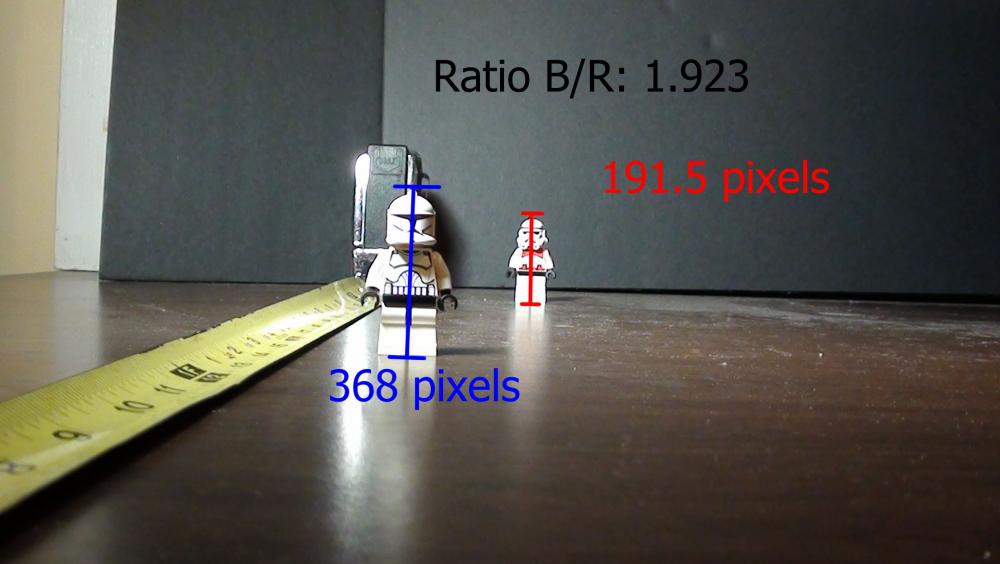

@blondini No, perspective distortion is affected only by the distance from the camera to the subject. No matter what sensor/lens combination you use, if the camera and the subjects don't move, the ratio of the sizes of two subjects in the image will remain the same.

I did a quick and dirty test to illustrate. It's a little imprecise (the camcorder would NOT focus on the guy in front...). For all three images the camera is in the same place. I suspect the small discrepancies in the ratio (2.2% error) are mainly due to moving parts inside the camcorder when it zooms, which changes its actual distance from the subject. But this is an easy thing to test yourself.

First image: a 4mm lens on a 1/4 type sensor.

Second image: a 55mm lens on an APS-C sensor.

As you can see, the ratio of the figures is the same. You could even use a wider lens and the ratio remains, because the distance has not changed:

Third image: a 2mm lens on the 1/4 type sensor.

Quote from Wikipedia:

Yes, it would. As long as the camera is in the same place, the size of the plane relative to the people will remain the same regardless of the lens or sensor. If you don't believe me or my Legos, go try it yourself!
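The same point falls out of a simple pinhole projection: the image size of a subject is proportional to focal length divided by distance, so the focal length cancels when you take the ratio of two subjects. The sketch uses hypothetical subject sizes and distances, not measurements from the Lego test, and ignores effects like a zoom mechanism shifting the lens position.

```python
def image_height_mm(focal_mm, subject_height_mm, distance_mm):
    """Pinhole projection: height of the subject's image on the sensor."""
    return focal_mm * subject_height_mm / distance_mm

# Hypothetical scene: two 40 mm tall figures, 500 mm and 1500 mm from the camera.
ratios = {}
for focal in (2, 4, 55):
    near = image_height_mm(focal, 40, 500)
    far = image_height_mm(focal, 40, 1500)
    ratios[focal] = near / far

print({f: round(r, 3) for f, r in ratios.items()})   # 3.0 for every focal length
```

A different sensor size only changes how much of that projected image is recorded, so it doesn't affect the ratio either.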

-

Same reason why there is less vignetting on a center crop from a full frame image. Angle of incidence becomes more oblique the farther from the center of the image.
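In a simplified model where the chief ray comes from the exit pupil, the angle of incidence at a point on the sensor is atan(distance from center / exit pupil distance). With the same lens, a center crop simply stops at a smaller distance from center. The 60 mm exit pupil distance is a hypothetical value, and sensor microlenses complicate the real picture.

```python
import math

def incidence_angle_deg(image_height_mm, exit_pupil_distance_mm):
    """Chief-ray angle (degrees) at image_height_mm from the sensor center,
    for an exit pupil exit_pupil_distance_mm in front of the sensor."""
    return math.degrees(math.atan(image_height_mm / exit_pupil_distance_mm))

EXIT_PUPIL_MM = 60.0                       # hypothetical lens
ff_corner = math.hypot(36, 24) / 2         # full-frame corner, about 21.6 mm
crop_corner = math.hypot(17.3, 13.0) / 2   # Four Thirds-sized center crop, about 10.8 mm

ff_angle = incidence_angle_deg(ff_corner, EXIT_PUPIL_MM)
crop_angle = incidence_angle_deg(crop_corner, EXIT_PUPIL_MM)
print(round(ff_angle, 1), round(crop_angle, 1))   # roughly 20 and 10 degrees
```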

-

@horshack If I'm not mistaken, pixel vignetting is entirely due to angle of incidence on the sensor. Smaller sensors have less oblique angles of incidence at the corners. I could be wrong, but I bet that pixel vignetting won't be a factor in this comparison. I'd love to see evidence either way, though.

-

I would get a motherboard with more PCI slots. They can be used for many expansions, including GPUs, I/O ports, capture cards, WiFi cards, and more. I would also get a case with lots of large fans. I've got something like three 120mm and two 140mm fans, which keeps it cool enough to prevent the loud GPU fans from kicking in. Lots of large fans => low RPM => less overall noise.
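The fan affinity laws give a rule-of-thumb version of this: airflow scales with rpm times diameter cubed, and sound power level changes by about 50*log10 of the speed ratio plus 70*log10 of the diameter ratio. These laws assume geometrically similar fans, which PC fans often aren't, so treat the numbers as a rough sketch; the 1500 rpm starting point is hypothetical.

```python
import math

def rpm_for_same_airflow(rpm_small, d_small_mm, d_large_mm):
    """Affinity law: airflow ~ rpm * diameter**3, so a larger fan can move
    the same air at a lower rpm."""
    return rpm_small * (d_small_mm / d_large_mm) ** 3

def sound_change_db(d1_mm, rpm1, d2_mm, rpm2):
    """Approximate change in sound power level going from fan 1 to fan 2."""
    return 70 * math.log10(d2_mm / d1_mm) + 50 * math.log10(rpm2 / rpm1)

def split_airflow_db(n_fans):
    """Same total airflow from n identical fans instead of one: each spins at
    1/n the speed (-50*log10(n) dB each), and n similar sources add +10*log10(n) dB."""
    return -50 * math.log10(n_fans) + 10 * math.log10(n_fans)

# Hypothetical: a 120 mm fan at 1500 rpm replaced by a 140 mm fan moving the same air.
rpm_140 = rpm_for_same_airflow(1500, 120, 140)
print(round(rpm_140), round(sound_change_db(120, 1500, 140, rpm_140), 1))   # 945 -5.4
print(round(split_airflow_db(2), 1))                                        # -12.0
```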

-

@Andrew Reid Yeah, "directly related" isn't the correct wording, but we mean the same thing. As sensor size increases, you can either increase resolution with the same pixel size, or increase pixel size at the same resolution. You said so yourself in your disclaimer at the end: "For example you can have higher megapixel counts because there’s simply more real-estate on the chip surface to add more pixels." That is why I said "assuming similar tech." The a7r3 has better tech. I should have specified photosensitive area rather than simply "surface area"; that would perhaps have been clearer.

Wait, you just posted an article about how the 17.5mm f0.95 is comparable to the 35mm f2.0. If you don't have to change both numbers, why aren't you saying the 17.5mm is comparable to a 35mm f0.95?

But anyway, I'm just explaining Northup's argument. He claims there is misleading marketing material on manufacturers' websites. idk if that's true, but his crop factor equivalence works for the comparison he's making, despite being poorly worded and convoluted.

-

Assuming we're talking about the same video, he was saying that manufacturers were erroneously marketing their lenses with equivalent focal lengths but not equivalent apertures, like marketing a 14-42 f2.8 as a 28-84 f2.8. His point was that IF you change one number, you have to change the other. And he did specify that he was talking about equivalent depth of field; his title card for that section is "Aperture & Depth of Field." He was using ISO to talk about exposure, which he explained early in the video. In his logic, once you compensate for exposure with ISO, you have to compensate with aperture as well. It is roundabout logic, but it does account for the lower light-gathering power of a smaller surface area, and thus the need for more gain (and thus more noise or lower resolution) to reach the same ISO.

True, but the light-gathering power of the sensor is directly related to its area. The SNR of a smaller sensor will be lower than that of a larger sensor (assuming similar tech), given the same image scaled down. Hence the f0.95 doesn't actually have any low-light advantage over the f2.0 if you look at the system as a whole.
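The equivalence arithmetic can be written out directly: multiply both focal length and f-number by the crop factor, and compare sensor areas to see the difference in total light collected. A sketch assuming the commonly quoted 17.3 x 13 mm Four Thirds dimensions and the round crop factor of 2 used in the marketing example:

```python
import math

FULL_FRAME_MM = (36.0, 24.0)
FOUR_THIRDS_MM = (17.3, 13.0)   # commonly quoted nominal dimensions

def crop_factor(sensor_mm, reference_mm=FULL_FRAME_MM):
    """Ratio of sensor diagonals."""
    return math.hypot(*reference_mm) / math.hypot(*sensor_mm)

def full_frame_equivalent(focal_mm, f_number, crop):
    """Focal length and f-number giving the same framing and depth of field on full frame."""
    return focal_mm * crop, f_number * crop

def total_light_stops(big_mm, small_mm):
    """Extra total light the bigger sensor collects at the same f-number and shutter speed."""
    return math.log2((big_mm[0] * big_mm[1]) / (small_mm[0] * small_mm[1]))

crop = 2.0   # the round figure used in the 14-42 example
print(full_frame_equivalent(14, 2.8, crop), full_frame_equivalent(42, 2.8, crop))
print(round(crop_factor(FOUR_THIRDS_MM), 2))                       # about 2.0
print(round(total_light_stops(FULL_FRAME_MM, FOUR_THIRDS_MM), 2))  # about 1.94
```

So a 14-42 f2.8 on Four Thirds frames and renders depth of field like a 28-84 f5.6 on full frame, and the roughly two-stop area difference is the light-gathering gap that the extra gain has to make up.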

-

This is not true. If it were, photos of a total solar eclipse wouldn't work on different size sensors.

-

I may be misunderstanding, but it seems you want to transcode each original clip to a smaller file, which you might use at a later date to make new edits? Unfortunately, every time you transcode, you will lose some quality. Since the only way to avoid losing quality is to keep the originals, it's up to you to decide how much you can lose and still have it be acceptable. So the question is, how much space do you want to save? Do you want the clips to take up half the space? A quarter? In general, you will want to use a space-efficient codec with the highest bitrate you can afford. A 50 Mbps H.265 file will most likely be indistinguishable from the original. If I were you, I'd run a few tests with different encoding options and then compare the smaller files to the originals to find the size/quality trade-off you like.
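To put numbers on the space question, file size is roughly bitrate times duration. A sketch that ignores audio and container overhead and assumes a constant bitrate; the 200 Mbps original is a hypothetical figure, not your camera's actual bitrate:

```python
def file_size_gb(bitrate_mbps, duration_s):
    """Approximate size in decimal gigabytes of a constant-bitrate stream."""
    return bitrate_mbps * duration_s / 8 / 1000

def space_saved(original_mbps, target_mbps):
    """Fraction of storage saved by re-encoding at target_mbps."""
    return 1 - target_mbps / original_mbps

# Hypothetical: ten minutes of footage, original at 200 Mbps, re-encoded at 50 Mbps.
print(file_size_gb(200, 600), file_size_gb(50, 600), space_saved(200, 50))   # 15.0 3.75 0.75
```

Variable-bitrate encodes will land above or below these figures depending on the footage, which is another reason to test with your own clips.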