KnightsFan

Posts posted by KnightsFan

-

-

This is cool. I was rooting for an F2 with 32 bit float on 2 XLR inputs and their new Zoom modules, more of a little brother to the F6. But this is a nice, cheap alternative to the Track E. I wonder if you can pair multiple F2's with the app and record them at the same time, maybe even in conjunction with the F6 which also connects to an app? Otherwise I can't see this being a useful replacement for lav mics, if each one needs to have the record button hit on each device individually. More useful for vloggers than filmmakers it seems.

-

@herein2020 Ouch. What hardware/OS do you have? I'll try it eventually and see if I have better stability. I guess the real headline should be that this is a beta, and if I recall correctly the 16 beta started off pretty unstable as well and went through many public iterations before coming out of beta.

-

Wow, I was not expecting this to have so many new and useful features. Useful to me:

- collaboration with the free version: I have studio, but it would be beneficial to allow others to hop in and view the timeline

- The color warper looks incredible

- Fairlight improvements. We'll have to see how usable it is. Thus far I've disliked Fairlight, but it would be very nice to get all my post work into the same program. Reaper is very hard to beat, but I'll have to try out the new Fairlight features.

- H264/H265 proxies. If I can dial the bitrate and resolution way down, we can have native proxies that don't take up a ton of disk space. Previously I made ~1Mb/s proxies with ffmpeg, but Resolve doesn't play particularly nicely with outside proxies. Going native might solve those problems

- Effects templates

- Audio waveform in Fusion--awesome! I'd love to also be able to see the timeline output on the Fusion page, I didn't see anything about that anywhere though.

- Custom vector shapes. Would have been really useful for a project I did last weekend, and will come in handy once or twice per year.
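For anyone wanting to try the outside-proxy route mentioned above, here is a minimal sketch of how a ~1 Mb/s H.264 proxy command for ffmpeg could be assembled. The function name, the `_proxy` file suffix, the 540-line height, and the 96k audio bitrate are all assumptions for illustration, not the settings actually used for those proxies.

```python
from pathlib import Path


def build_proxy_command(source, proxy_dir, video_bitrate="1M", height=540):
    """Return an ffmpeg argument list for a small H.264 proxy of `source`.

    Hypothetical helper: the output naming and default values are assumptions.
    """
    source = Path(source)
    output = Path(proxy_dir) / f"{source.stem}_proxy.mp4"
    return [
        "ffmpeg",
        "-i", str(source),
        "-vf", f"scale=-2:{height}",  # keep aspect ratio, force an even width
        "-c:v", "libx264",            # software H.264 encoder
        "-b:v", video_bitrate,        # target average video bitrate
        "-c:a", "aac",
        "-b:a", "96k",
        str(output),
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so this works without ffmpeg.
    print(" ".join(build_proxy_command("clips/A001.mov", "proxies")))
```

The list can be handed to `subprocess.run(cmd, check=True)` once ffmpeg is installed. Whether Resolve relinks cleanly to files made this way is exactly the open question in the bullet above.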

-

I also got a Z Cam E2M4, though somewhat unwillingly if I'm honest. I got it for the image quality, frame rate options, and wireless control. If such a thing existed, I might have gotten a DSLR-shaped E2*. However, the benefit of the boxy shape is it's easier to balance on a gimbal, and I do intend to swap out the mount for a turbomount at some point, which is a useful modularity. I also really appreciate the NPF sled, which lasts forever without the bulk and extra accessories for V mount. The other aspect that I really like are the numerous 1/4-20 mounts. I generally use the camera pretty bare, but I have a NATO rail on either side and can slip on handles in 2 seconds for a wide, stable grip. A DSLR would need a cage for that.

Most of the annoyances with DSLR-shaped cameras come down to photo-oriented design rather than non-modular design. Lack of easy, locking power connectors, lack of timecode, fiddly HDMI D ports, incomprehensible menus, lack of NDs--all could be solved while maintaining a traditional DSLR shape, and some cameras do come along with some of them from time to time.

On the other hand, cameras like the FS7 and C100 are packed with nice features, but I really don't use any of them apart from ND's and they just make for obnoxiously large bodies that are even harder and more expensive to use. My perspective though is from narrative shoots where we spend more time on rehearsals and lights than anything else, so we're never concerned with setup times for the camera.

*before anyone mentions the GH5S, the E2's image is much nicer in my opinion and has way more video perks.

-

@hyalinejim I don't disagree with anything you said. But I do think that the difference between the two images you posted is very subtle to the point that without flipping back and forth, neither one would really stand out as "thicker". That's why I'm saying thickness is mostly (not entirely, but mostly) about the colors in the scene, as well as of course exposure and white balance. There's a definite improvement between the pics, but I don't think that it makes or breaks the image.

On the other hand I think the colors in my phone pics went from being stomach-turningly terrible to halfway decent with just a little water.

Another way to put it, is I don't think you'd get a significantly thicker image out of any two decent digital/film cameras given the same scene and sensible settings. You can definitely eke small gains out with subtle color adjustment, and I agree with your analysis of what makes it better, I just don't see that as the primary element.

-

Quick demo of the effect of water on image thickness. Just two pics from my (low end) phone cropped and scaled for manageable size. These may be the worst pictures in existence, but I think that simply adding water, thereby increasing specularity, contrast, and color saturation makes a drastic increase in thickness. Same settings, taken about 5 seconds apart.

-

-

31 minutes ago, kye said:

Thicker? It makes it look like there was fog on the water 🙂

I can't match the brightness of the comparison image though, as in most well-lit and low-key narrative scenes the skin tones are amongst the brightest objects in frame, whereas that's not how my scene was lit.

Yes, I think the glow helps a lot to soften those highlights and make them dreamier rather than sharp and pointy and make it more 3D in this instance where the highlights are in the extreme background (like you said, almost like mist between subject and background).

I agree, the relation between the subject and the other colors is critical and you can't really change that with different sensors or color correction. That's why I say it's mainly about what's in the scene. Furthermore, if your objects in frame don't have subtle variation you can't really add that in. The soft diffuse light coming from the side in the Grandmaster really allows every texture to have a smooth gradation from light to dark, whereas your subject in the boat is much more evenly lit from left to right.

36 minutes ago, kye said:

The skintones I'm used to dealing with in my own footage are all over the place in terms of often having areas too far towards yellow and also too pink, and with far too much saturation, but if you pull the saturation back on all the skin then when the most saturated areas come under control the rest of the tones are completely washed out.

I assume you're also not employing a makeup team? That's really the difference between good and bad skin tones, particularly in getting different people to look good in the same shot.

-

@kye I don't think those images quite nail it. I gathered a couple pictures that fit thickness in my mind, and in addition to the rich shadows, they all have a real sense of 3D depth due to the lighting and lenses, so I think that is a factor. In the pictures you posted, there are essentially 2 layers, subject and background. Not sure what camera was used, but most digital cameras will struggle in actual low light to make strong colors, or if the camera is designed for low light (e.g., A7s2) then it has weak color filters which makes getting rich saturation essentially impossible.

Here's a frame from The Grandmaster which I think hits peak thickness. Dark, rich colors, a couple highlights, real depth with several layers and a nice falloff of focus that makes things a little more dreamy rather than out of focus.

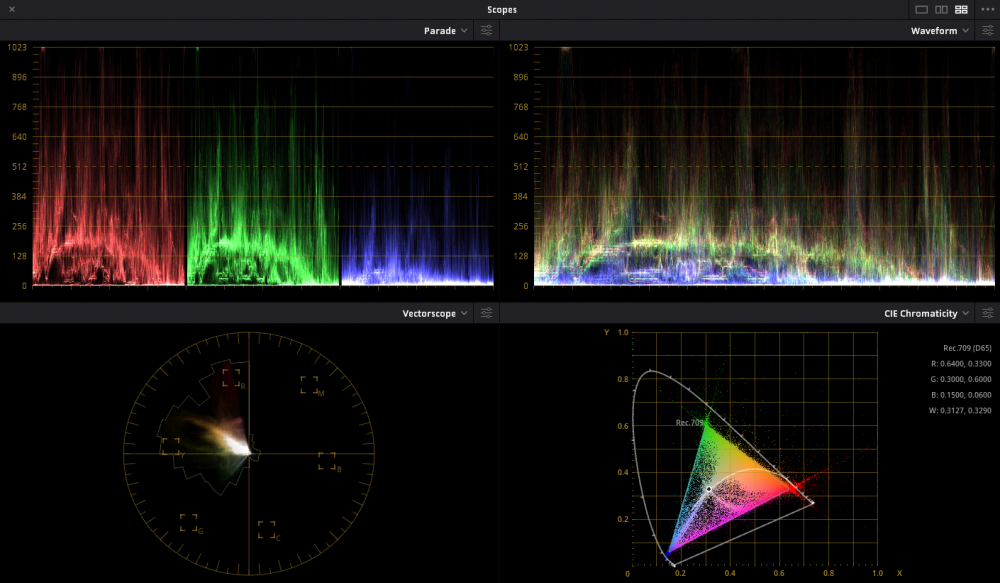

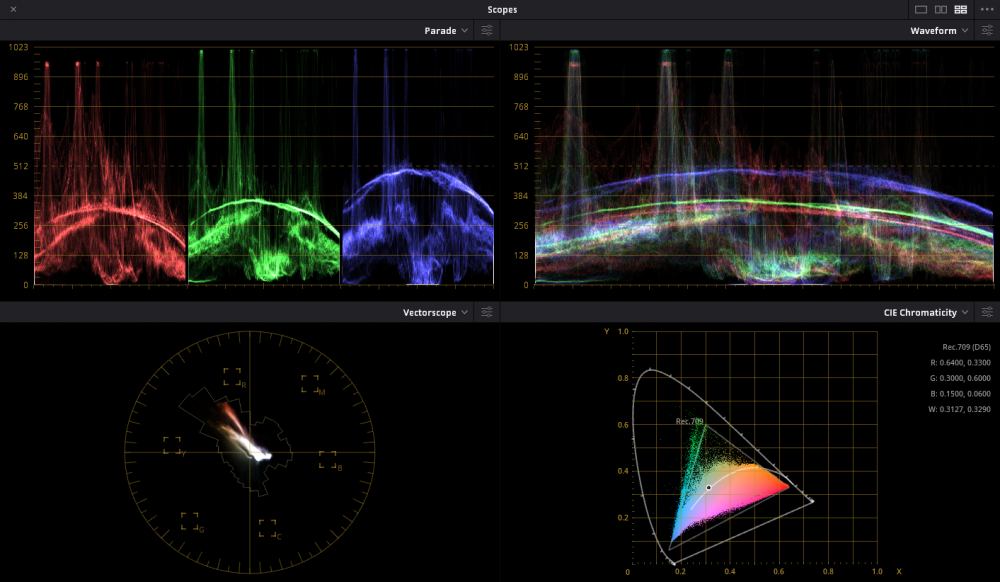

And the scopes which clearly show the softness of the tones and how mostly everything falls into shadows.

For comparison, here's the scopes from the picture of the man with the orange shirt in the boat which shows definite, harsh transitions everywhere.

8 hours ago, kye said:

That was something I had been thinking too, but thickness is present in brighter lit images too isn't it?

Maybe if I rephrase it, higher-key images taken on thin digital cameras still don't match those higher-key images taken on film. Maybe cheap cameras are better at higher-key images than low-key images, but I'd suggest there's still a difference.

Perhaps, do you have some examples? For example that bright daylight Kodak test posted earlier here

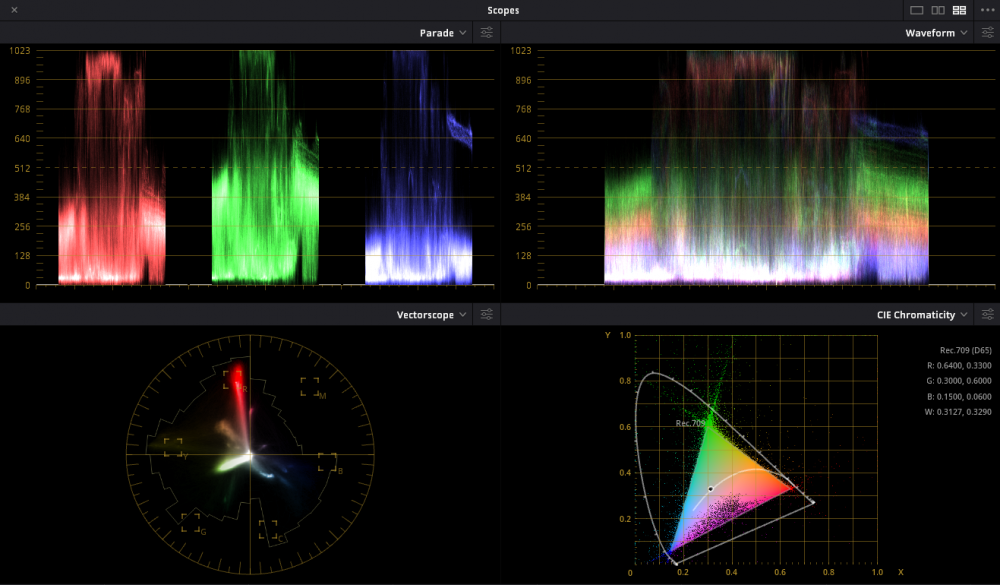

Has this scope (mostly shadow though a little brighter than the Grandmaster shot, but fairly smooth transitions). And to be honest, I think the extreme color saturation particularly on bright objects makes it look less thick.

-

@tupp Maybe we're disagreeing on what thickness is, but I'd say about 50% of the ones you linked to are what I think of as thick. The canoe one in particular looked thick, because of the sparse use of highlights and the majority of the frame being rather dark, along with a good amount of saturation.

The first link I found to be quite thin, mostly with shots of vast swathes of bright sky with few saturated shadow tones.

The Kodachrome stills were the same deal. Depending on the content, some were thick and others were thin. If they were all done with the same film stock and process, then that confirms to me that it's mostly what is in the scene that dictates that look.

1 hour ago, tupp said:

We have no idea if OP's simulated images are close to how they should actually appear, because 80%-90% of the pixels in those images fall outside of the values dictated by the simulated bit depth. No conclusions can be drawn from those images.

I think that's because they are compressed into 8 bit jpgs, so all the colors are going to be smeared towards their neighbors to make them more easily fit a curve defined by 8 bit data points, not to mention added film grain. But yeah, sort of a moot point.

-

5 hours ago, tupp said:

Then what explains the strong "thickness" of terribly framed and badly lit home movies that were shot on Kodachrome 64?

Got some examples? Because I generally don't see those typical home videos as having thick images.

5 hours ago, tupp said:

Unfortunately, @kye's images are significantly flawed, and they do not actually simulate the claimed bit-depths. No conclusions can be made from them.

They're pretty close, I don't really care if there's dithering or compression adding in-between values. You can clearly see the banding, and my point is that while banding is ugly, it isn't the primary factor in thickness.

-

I've certainly been enjoying this discussion. I think that image "thickness" is 90% what is in frame and how it's lit. I think @hyalinejim is right talking about shadow saturation, because "thick" images are usually ones that have deep, rich shadows with only a few bright spots that serve to accentuate how deep the shadows are, rather than show highlight detail. Images like the ones above of the gas station, and the faces don't feel thick to me, since they have huge swathes of bright areas, whereas the pictures that @mat33 posted on page 2 have that richness. It's not a matter of reducing exposure, it's that the scene has those beautiful dark tonalities and gradations, along with some nice saturation.

Some other notes:

- My Raw photos tend to end up being processed more linear than Rec709/sRGB, which gives them deeper shadows and thus more thickness.

- Hosing down a scene with water will increase contrast and vividness for a thicker look. Might be worth doing some tests on a hot sunny day, before and after hosing it down.

- Bit depth comes into play, if only slightly. The images @kye posted basically had no difference in the highlights, but in the dark areas banding is very apparent. Lower bit depth hurts shadows because so few bits are allocated to those bottom stops. To be clear, I don't think bit depth is the defining feature, nor is compression for that matter.

- I don't believe there is any scene where a typical mirrorless camera with a documented color profile will look significantly less thick than an Alexa given a decent colorist--I think it's 90% the scene, and then mostly color grading.
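To put rough numbers on the bit depth note above, here is a small sketch that counts how many integer code values land in each stop, assuming a purely linear encoding. That is an assumption for illustration only: real camera log and gamma curves redistribute code values toward the shadows, so this is the worst case rather than a model of any particular camera or of the simulated images in this thread.

```python
def codes_per_stop(bit_depth, stops):
    """Count integer code values in each stop of a linear encoding.

    Index 0 is the brightest stop (the top half of the range); each stop
    below it spans half the range of the one above, so it gets half as
    many code values.
    """
    upper = 2 ** bit_depth
    counts = []
    for _ in range(stops):
        lower = upper // 2
        counts.append(upper - lower)
        upper = lower
    return counts


if __name__ == "__main__":
    print("8-bit: ", codes_per_stop(8, 6))   # [128, 64, 32, 16, 8, 4]
    print("10-bit:", codes_per_stop(10, 6))  # [512, 256, 128, 64, 32, 16]
```

Under that assumption the sixth stop down gets only 4 code values at 8 bits versus 16 at 10 bits, which fits with banding showing up in the dark areas first.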

-

I don't know what all the negativity is about, this looks pretty good to me. Worse specs than a Z Cam E2, but you gain Panasonic Brand (brands aren't my thing but brands are worth real money), SDI, timecode without an annoying adapter, and you can use that XLR module if you want. Plus it takes SD cards instead of CFast. If Z Cam didn't exist I'd get this for sure.

-

I don't have super extensive experience with wireless systems. I've used Sennheiser G3's and Sony UWP's on student films, and they've always been perfectly reliable. The most annoying part is that half of film students don't know that you have to gain stage the transmitter...

Earlier this year I bought a Deity Connect system, but it couldn't transmit a signal 2 feet, so I RMA'd it as defective. Real shame as they get great reviews and are a great price. What I can say is their build quality, design, and included accessories are phenomenal. I very nearly just bought another set, but my project needs changed and I got a pair of Rode Wireless Go's instead.

I've been quite happy with the Go's for the specific use case of working in a single studio room. For this project, having 0 wires is very beneficial--we're clipping the transmitters to people and using the built-in mics--so they are great in that sense. If your use case is short range and you aren't worried about missing the locking connector, you can save a lot of money with them. I will say I wouldn't trust them for "normal" films as the non-locking connector is a non-starter no matter the battery life and range. Though I think they will still be useful as plant mics, they are absolutely tiny!

-

9 hours ago, kye said:

I have bolded the most significant word in your post... "almost" 😂😂

I'll amend the statement... "encoders universally do better with more input data." If you keep the output settings the same, every encoder will have better results with a higher fidelity input than a lower fidelity input.

Therefore, if YouTube's visual quality drops when uploading a higher quality file, then it is not using the same output settings.

-

25 minutes ago, kye said:

1) yes, 2) not necessarily.

The 4K file was uploaded at over twice the bitrate as the better 1080p upload, yet the 1080p from it was only 70%.

It might be that a 4K reference file might give a slightly better IQ per bitrate stream, that 'bump' is competing with a 70% bitrate, and considering we're talking FHD at 2.5Mbps - I'd think the bitrate would win.

My second fact wasn't about whatever YouTube is doing, I was just stating that encoders almost universally do better with more input data. So if in YouTube's case the quality is lower from the 4K upload, then they must be encoding differently based on input file--which would not be surprising actually.

So I have no idea what YouTube is actually doing, I'm just explaining that it's possible @fuzzynormal does see an improvement.

-

I haven't used YouTube in many years, but here are two facts:

1. YouTube re-encodes everything you upload

2. The more data an encoder is given, the better the results

While the 1080p bitrate is the same for the viewer whether you uploaded in 4k or HD, it's possible (but not a given) that any extra information that YouTube's encoder is given makes a better 1080p file. Though my intuition is that the margin there is so small, uploading in 4k will not give any perceptible difference for people streaming in 1080p.

-

With the handheld footage and moving subjects focus is lost quite a bit. The shot of the orange for example I see no difference between all 3 since the orange is moving in and out of focus. But on that first shot of the plant, which is relatively still, A is the clear winner.

B and C are pretty close, but I do think that one shot has C losing ground.

32 minutes ago, kye said:

Would you suggest a shot with lots of stuff moving? That's not that easy to find, although I guess the ocean would be pretty good for lots of random motion at a far focus distance.

I'd suggest shots that are similar to what you actually use since those will be most useful. I'm on a tripod most of the time, which is where you'll see the most difference between 4K and HD especially with IPB compression.

-

-

For me A > B > C. C looks the worst to me but mainly because that shot at 1:08 looks digitally sharpened which was visible in a casual viewing. I wouldn't have noticed any other differences without watching closely. I'm not sure how matched your focus was between shots but in the first composition A seems a little clearer than B which is a little clearer than C, could easily be focus being off by just a hair though.

-

30 minutes ago, Ty Harper said:

Thanks for the insight. I know my cpu will be a potential bottleneck eventually but I'm not computer tech savvy enough to know what that breaking point would be (at 2 1080TIs, 3?). I'll prob never invest in a Hackintosh again... I'm also not interested in shelling out $2K for something newer.... but $400-$800 on an extra gpu, another SSD.... seems feasible....

If the CPU is bottlenecking, then money you spend on a new GPU will be wasted until the bottleneck is resolved. Same with the SSD--if that's not working at its max currently, then you can spend all the money in the world and it won't help at all. I bring up the CPU because I had a similar CPU and GPU, and upgrading the CPU made a world of difference.

If you're set on upgrading your GPU, you might want to look at the upcoming 3000 series cards before buying another 1080. The 3070 was announced at $499 for a release in October with over twice the CUDA cores as the 1080 at a TDP of 220W, which is much less than the TDP of two 1080's. Plus it will work even in software that doesn't specifically use dual GPU's.

-

First, if you're editing high-bitrate footage from an external HDD, make sure that's not the bottleneck. A 7200 RPM drive reads at roughly 120 MB/s, and even two uncompressed 14-bit HD raw streams would go over that.
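The arithmetic behind that claim can be sketched as follows. The frame rate is not stated above, so 24 fps is an assumption, as is one tightly packed 14-bit sample per photosite (Bayer raw) with no audio or metadata overhead.

```python
def raw_stream_mb_per_s(width, height, bits_per_sample, fps):
    """Data rate in MB/s (10**6 bytes) for uncompressed single-sample raw video."""
    bits_per_frame = width * height * bits_per_sample
    return bits_per_frame * fps / 8 / 1_000_000


if __name__ == "__main__":
    one_stream = raw_stream_mb_per_s(1920, 1080, 14, 24)
    print(f"one stream:  {one_stream:.1f} MB/s")      # about 87.1 MB/s
    print(f"two streams: {2 * one_stream:.1f} MB/s")  # about 174.2 MB/s
```

One stream fits under 120 MB/s with some room, but two at once need about 174 MB/s, so a single spinning drive would fall behind under these assumptions.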

I have a GTX 1080 and use Resolve Studio. Earlier this year I upgraded from an i7 4770 to a Ryzen 3600, and got an enormous performance boost when editing HEVC. So while the decoding is done on the GPU, it's clear that the CPU can bottleneck as well. When I edit 4K H.264 or Raw my 1080 rarely maxes out.

Overall I'd be pretty surprised if you need another/a new GPU for basic editing and color grading. Resolve Studio is a better investment imo than a second 1080.

-

What type of editing do you do (VFX, color, etc)? Do you currently use Premiere or Resolve Lite? What parts need performance increases?

-

@kye Have you done any eyeballing as well? Interesting how ffmpeg's ProRes does better than Resolve's. I expected the opposite, because when I did my test, HEVC was superior to ProRes at a fraction of the bitrate, which doesn't seem to be replicated by your measured SSIM. I wondered if my using ffmpeg to encode ProRes was non-optimal. I didn't take SSIM recordings, and I have since deleted the files, but some of the pictures remain in the topic. From your graph, it seems impossible that HEVC at 1/5 the size of ProRes would have more detail, but that was my result. ProRes, however, kept some of the fine color noise in the very deepest shadows, far darker than you can see without an insane luma curve. I wonder if that is enough to make the SSIM drop away for HEVC, as it's essentially discarding color information at a certain luminance?

(Linking the topic for reference--in this particular test I used a Blackmagic 4.6k raw file as source so you can reproduce it if Blackmagic still has those files on their site)
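For anyone repeating the comparison, one way to get an SSIM figure is ffmpeg's `ssim` filter, which compares two video inputs and logs a summary when it finishes. Below is a minimal sketch that only assembles the command. The helper name and file names are hypothetical, and it assumes both files are already rendered video of the same resolution and frame count, so a raw source would first need exporting to a high-quality intermediate. It is not necessarily how the SSIM numbers in this thread were measured.

```python
def build_ssim_command(encoded, reference):
    """Return an ffmpeg argument list that measures SSIM of `encoded` against `reference`.

    Hypothetical helper: ffmpeg decodes both inputs, runs the ssim filter on
    the two video streams, and discards the output frames via the null muxer.
    """
    return [
        "ffmpeg",
        "-i", str(encoded),
        "-i", str(reference),
        "-lavfi", "[0:v][1:v]ssim",  # first input is compared against the second
        "-f", "null", "-",           # write nowhere; only the log matters
    ]


if __name__ == "__main__":
    # Print the command rather than run it, so this works without ffmpeg.
    print(" ".join(build_ssim_command("test_hevc.mp4", "reference.mov")))
```

Since SSIM is reported per plane as well as overall, comparing the chroma values separately from luma might help show whether discarded shadow color noise is what drags the HEVC score down.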

5 minutes ago, kye said:

Exporting some trials now. It occurs to me that the previous way I was exporting was Quicktime -> h264 so I'm trying MP4 -> h264 which has more arguments.

Good call, I've read that MP4 is better than MOV. Not sure if it's true, but worth checking.

DaVinci Resolve 17

In: Cameras

Posted

I have an AMD CPU so might get different results. But my guess is that if it's crashing with H265 files then it's something with the GPU, and since I also have Nvidia it might be the same story.

That sounds like they're heading the right direction. One reason I don't use Fairlight is that the UI was so slow and sticky.

Haha, I sure hope not! At least Blackmagic is honest about it being a beta.