-

Posts

8,050 -

Everything posted by kye

-

Not at all. Their AF will ruin your life. Not a single Panasonic camera has ever created a single frame where a single item was in focus. Not even if you filmed in a forest - it would pick a focus distance where no single trunk, branch, twig or leaf was in focus. It's so bad that it's probably the cause of global warming and religious extremism!

-

I agree they have an image problem. My point was that it's based on ignorance, which cannot be cured.

-

I meant the old P6K, as I was responding to @PPNS saying "dont really get the point of the p6k anymore since the release of the pro anyway, i just would've discontinued it". Now with the new P6K being the same size as the Pro, yeah, that size advantage is gone. I heard someone say that you don't need the internal NDs if you prefer to use external ones, potentially as they might be higher quality than the internal ones (I think they were referring to people who use filters in a matte box). FrameVoyager also said they had problems with their P6K Pro NDs breaking because the camera was dropped by luggage handlers, so the NDs are another moving part that can fail. Seems like a typical camera industry thing: you get some upgrades from the previous model, which combined with the same price point makes it "better" value for money, and you pay for it with downsides that you wouldn't have wanted and that aren't welcome for a range of users. That's basically how the camera industry rolls, right?

-

I'm reminded of Werner Herzog saying that his cinematographers need to know their equipment, and especially their camera/lens, so well that they could shoot without looking through the viewfinder and still know the framing. It was his minimum criterion for a cinematographer to be considered competent IIRC. It is one of the things that they talk about with street photography and using only a single prime lens - you get to the point of not needing to look because you already know the lens and the compositions you'd get. ...and yes, the grass is always greener with other cameras, but apart from resolution and increased DR, the 5D ML combo is right at the top of what is available, basically regardless of price.

-

They must rectify the chatter based on nothing? Good idea. Once they've done that they can teach the governments of the world how to rectify conspiracy theories, the science community how to rectify things like climate denial and creationism, sociologists how to rectify extremism and fundamentalism, etc. There's probably a lot of money in that, but even if it turns out there isn't, we'd still be left with a world that makes more sense, so that's probably a reasonable runners-up prize 🙂

-

In the best conditions I think the images from the 5D with ML are right up there with the Alexa. No, it's not equal, but within the 5D's DR limitations, it really is an excellent image. Canon sensors and colour science are famous for good reason.

-

Absolutely. That takes the count to two people with calm, reasonable, and grounded-in-experience opinions about Panasonic AF. Still dozens to go. I've never said that AF wasn't useful, wasn't valid, or was somehow lesser than manually focusing. My issue is with people who endlessly suggest that the AF isn't usable in any situation by anyone ever (or simply jump straight to implying that Panasonic washing machines and microwave ovens are all going to evaporate since the quality of the AF on the first version of the GH5 will somehow bankrupt the multinational consumer brand's entire existence).

-

The non-pro still has a number of advantages over the Pro model - size being a significant one I think. Of course, you could make the case that once a camera is as large as the P4K it doesn't matter if it's 50% larger, and there's some logic to that, but you're really getting into the territory where you can't hand-hold it indefinitely. I use a GH5/lens/mic hand-held and it's borderline for being able to hand-hold it indefinitely and on larger shooting days I've ended up with sore wrists the next day. Sure I'm not a professional camera operator so I don't have the strength there, but I'm also a relatively physically capable person so I'd say I'm somewhere in the middle. For me the major issue with the 6K range is that it's not a mirrorless mount. I understand that you can adapt m42 lenses to EF, but there are a number of DSLR lens mounts that aren't compatible, so it kind of eliminates maybe half or two-thirds of all FF/S35 vintage lenses from consideration. I like having MFT where I can just assume there will be an adapter and sensor coverage and perhaps even a speed booster option.

-

Yeah, you kind of assume it'll be less, but getting any info on the DR of the video mode of early cameras seems to be impossible when I've gone looking. Having a few stops less certainly isn't ideal, even to emulate lesser film stocks, but I do think there's sufficient scope for getting a nice amount of contrast. Good points and you're right, although DR is getting better with each new sensor generation. Smartphones are getting smarter through taking multiple exposures in super-quick bursts which works a lot of the time (but not all the time) and of course are benefiting from improvements in sensor tech too. I do think there's a point where things are enough though. One of the main challenges I have found is fitting in the stops of DR that you get from a modern camera into the limited DR of 709 - it's a question of what parts of the luma range to throw away and/or which to compress, and in making those decisions you're deciding about which things should have contrast and which won't. I guess part of my point with this thread is that the lower DR from 'worse' cameras forces you to choose that when filming, and also makes you have a "full" amount of contrast with most scenes, which I think is a fundamental aspect of nice images, except in very-low DR scenes of course.

-

Welcome to the club - these things are great little cameras!! What sort of subjects are you going to use it for, and what lenses etc are you planning on using? I bought mine to be a second/time-lapse/backup camera to my GH5 for travel and for at-home for my "Go Shoot" project which is just trying to get out of the house and go shoot little adventures / days out / etc. In terms of lenses, I think there's a few ways I'm intending to use it. The first is with the 15mm F8 lens-cap lens for something truly portable but this only works outside during the day, or the 14mm F2.5 for a small but more capable setup. The second is with a modern zoom for flexibility of getting shots as they present themselves, like my 12-35mm f2.8 or the 12-60mm f2.8-4 which I'd love to buy (for the extra reach). The third is to go with primes and shoot more intentionally, like the 7.5mm F2, 17.5mm F0.95, 50mm F1.4 trio. The fourth is to go a bit vintage with a single prime, like a 28mm F2.8 with a speed booster (making it a 43mm FF equivalent lens). The fifth is to go for a vintage zoom, using my Tokina 28-70mm F3.5-4.5 with speed booster (making it a 43-110mm F5.5-7 FF equivalent lens). I do like the idea of using vintage glass on it because it softens the 4k which is a bit sharp for my tastes. The idea of all the various lens options etc was to make me excited to go out and shoot but also to use the outings as a test-bed for styles of shooting and lens choices and experimentation in editing and colour grading etc. The only way to get good at making films is to make films, and to make lots of them, and to try stuff and learn from what works and what doesn't. The Cine-D hack is really good too - if you haven't got that in there already it's highly recommended. The CineD profile is actually quite reminiscent of the colours that an Alexa would give you. Not the same, of course, but they both push a 'correct' image in the same sorts of directions and do the same sorts of desirable colour things. 
If you're not keen on the hack, the Natural profile is actually quite similar to the CineD profile in that it has a different flavour but is still employing the same nice colour treatments that the CineD and Alexa colour science do, and makes a great base to tweak in post if you're keen to customise the colours.
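For anyone wanting to check the full-frame equivalents quoted above, here is a rough sketch of the arithmetic. The 0.71x speed booster factor and the roughly 2.2x total crop in 4K are assumptions on my part (neither number is stated in the post); they are simply values that land close to the quoted 43mm and 43-110mm F5.5-7 figures. The function name `ff_equivalent` is made up for illustration.

```python
def ff_equivalent(focal_mm, f_number, booster=0.71, crop=2.2):
    """Return (focal length, f-number) as full-frame equivalents.

    booster and crop are assumed values, not taken from the post.
    Both focal length and f-number scale by the same combined factor.
    """
    factor = booster * crop
    return focal_mm * factor, f_number * factor


# The 28mm F2.8 prime and both ends of the 28-70mm F3.5-4.5 zoom.
for focal, stop in [(28, 2.8), (28, 3.5), (70, 4.5)]:
    eq_focal, eq_stop = ff_equivalent(focal, stop)
    print(f"{focal}mm F{stop} -> {eq_focal:.1f}mm F{eq_stop:.1f} FF equivalent")
```

Swap in your own booster and crop numbers if your setup differs; the point is only that the same factor applies to both the focal length and the aperture.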

-

What if I sew a pillowcase onto the chest part of my jumper?

-

Yeah, there's lots to talk about in there, but I think the reality is that it's just different. Digital highlights are spectacular quality until they clip and quality goes instantly to zero, whereas film has a rolloff that just gets lower and lower quality, almost forever. Sure, you can bring the highlights down a couple of stops and there isn't nothing there, but try exposing skintones at 5 stops over and then bring them down by 5 stops - that will show that film doesn't have the DR of an Alexa, and not in a particularly kind way either! Shadows for both are similar for film and digital in that they both descend into a noise floor, and for that you have to take some sort of signal:noise ratio as the threshold, but of course we're aware that this can be gamed and that the practical and aesthetic attributes of this don't line-up to the maths of testing software and the 'enthusiasm' of camera PR departments. My point was that by the time you take a 10-stop digital image and effectively flatten the top couple of stops into a rolloff and the bottom couple of stops into a rolloff then you're not going to be missing much. Sure, film might have had another few stops compressed into the highlight rolloff and maybe you could recover a bit of stuff from them, but when presenting that rolloff in a final grade where it's up close to 100IRE it's barely-perceptible data with almost zero contrast, so any differences between the two in that final image aren't going to be that visible. I'm also aware that Kodak went on an all-out offensive in defence of film as digital was taking over and they were fighting for their lives. I remember going to a lecture by a Kodak technician in about 1995 and it was just 90 minutes of them talking about how film had more DR and that it meant you didn't have to light as accurately and so it paid for itself. He may as well have just got onto his knees on the stage and begged us to keep using film so they wouldn't go bankrupt. 
I remember leaving the lecture and just thinking 'but the tech will continue to get better' - it was almost embarrassing. Besides, rec709 broadcast had a similar DR and that's what we have seen for our entire lives apart from HDR and movie theatres.
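To put some rough numbers on the clip-versus-rolloff point, here is a toy sketch. The curve is purely illustrative (a linear section up to a knee, then an exponential shoulder that approaches white) and is not a model of any real film stock, camera, or grade; the knee value and the function names are assumptions for the example.

```python
import math


def hard_clip(x, white=1.0):
    """Digital-style highlight: untouched until it clips, then nothing."""
    return min(x, white)


def soft_shoulder(x, knee=0.8, white=1.0):
    """Rolloff-style highlight: linear below the knee, then an exponential
    shoulder that approaches white without ever reaching it."""
    if x <= knee:
        return x
    span = white - knee
    return knee + span * (1.0 - math.exp(-(x - knee) / span))


# Scene values at white, one stop over, and two stops over.
for scene in (1.0, 2.0, 4.0):
    print(f"scene {scene:.1f}: clip {hard_clip(scene):.4f}, "
          f"rolloff {soft_shoulder(scene):.4f}")
```

With this curve the values one and two stops over white both end up within a tiny fraction of the white level, so the extra stops are technically retained but carry almost no contrast, which is the same argument as above about rolloff data sitting up near 100IRE.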

-

Cool - one person did their own testing and evaluations in their own particular scenarios with their own lenses. Only about 50 more critics left to see if they're talking from experience or hype 🙂 I mean, I shoot MF so I really don't care, but it's pretty obvious that most critics are remembering the AF of the GH5 v1 firmware and haven't actually fact-checked themselves in about half-a-dozen cameras and dozens of firmware updates.

-

Is it a small battery though? Or is it a normal sized battery with an enormous and very bright screen? The 6K Pro has a 1500 nit 5 inch FHD touchscreen display - with any other camera that would be a separate monitor and would require a separate battery (or two). You can't get something for nothing, despite how much we want to!

-

I have vague memories of seeing people attach a separate video camera to the rig so that they'd have a recording of what was 'in the can'. Later film cameras had analog video out feeds, didn't they? I thought they split the light and had a separate video sensor in there for monitoring and recording. Lots of benefits to having a recording (of whatever quality) for the delay (maybe several days/weeks) before getting your developed film back. I know big budget features would be using the studio's overnight lab to watch dailies the next morning, but if you didn't have that access sometimes it was a long wait.

-

The fundamental problem is that no camera can see into the future, whereas stabilisation in post can look at the whole clip and do things at one point in a clip that anticipate something that happens further into the clip. It's why the cameras with heavy stabilisation have a delay in the display - they are literally delaying what they show you so that they are able to 'see into the future' a tiny bit. If it was in-camera and didn't have a huge delay (eg, no more than half a second) then it would be so inaccurate that it wouldn't be worth much.
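A toy illustration of the look-ahead point, using a simple moving average as a stand-in for the smoothing (real stabilisers are far more sophisticated, and the function names and window sizes here are made up for the example). The camera path is a sudden move at frame 10: the causal filter can only react once the move has happened, while the look-ahead filter starts easing into it a couple of frames early.

```python
def trailing_average(path, window):
    """Causal smoothing: each frame only sees itself and earlier frames."""
    out = []
    for i in range(len(path)):
        start = max(0, i - window + 1)
        chunk = path[start:i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def centred_average(path, radius):
    """Non-causal smoothing: each frame also sees `radius` future frames."""
    out = []
    for i in range(len(path)):
        start = max(0, i - radius)
        chunk = path[start:i + radius + 1]
        out.append(sum(chunk) / len(chunk))
    return out


# Camera position per frame: still for 10 frames, then a sudden move.
path = [0.0] * 10 + [10.0] * 10
causal = trailing_average(path, window=5)
lookahead = centred_average(path, radius=2)

for frame in range(7, 13):
    print(frame, causal[frame], lookahead[frame])
```

In this sketch a camera doing the look-ahead version live would have to hold back its display by `radius` frames, which is the delay described above.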

-

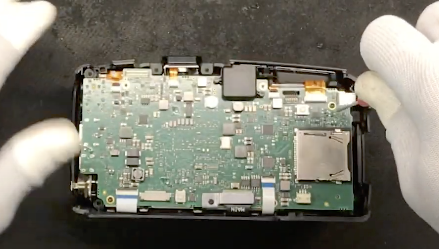

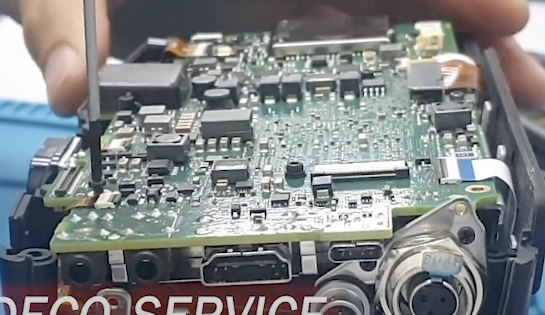

My understanding is that there's a ton of work in designing a PCB, and it looks like their main PCB is large and potentially has almost everything on it (I haven't studied it though). The images above show the 4K PCB and the 6K PCB. Anyway, making the 6K the same as the 6K Pro (notice how the 6K is now the same size as the 6K Pro?) would give them economies of scale and synergies, while being able to configure the other things (NDs, brighter screen, etc) to be different. It makes sense as a commercial move for them in terms of pricing and manufacture. I've had the sense through their seemingly random previous camera models that they were leaping from one design to the next perhaps based on what was cheap and available at the time. This meant that every camera was largely a new design, making them more prone to QC issues. Seems this update is aligned to that perhaps. Pity it wasn't a Komodo-style box design though. The BMMCC still has no replacement, and nothing even remotely close in terms of size, so I'm still holding out hope for a box camera. If it has a usable top display I'd consider it.

-

Great news indeed. I've seen some of the recent stabilisation done from camera gyro data (was it from a Sony camera?) and the stabilisation was truly truly impressive, and I'd know because I shoot handheld and have spent a lot of time on some tricky shots trying to stabilise them. I've developed techniques that I haven't found anyone else online even talking about so I'm pretty sure I'm quite far down the rabbit hole. Finally, an out of body experience that doesn't require 'self medication' and a hangover!

-

Please do share some G6 footage! I bought the GF3 for stills images and it didn't disappoint... it was an MILC that shot 12MP RAW images, and was actually pocketable with the 14mm f2.5 lens. Think of the hype around the P4K when it came out, when all it did was the same thing, only it shot those RAW images continuously and recorded sound at the same time. Ironically it was bested by the Canon 700D - the only camera I still own that I don't shoot with anymore, despite it being Canon colour science and the largest sensor I own! Being good for stills sure doesn't automatically translate to being good with video, that's for sure.

-

That was part of my motivation... to completely obscure the camera with treatments in post. We all focus on the camera, debating the fine points of the limits of camera features, and then only take advantage of a tiny taste of what is possible in post-production. It's like shopping all over the world for the finest ingredients, then taking them into the kitchen and boiling the crap out of everything. Will overcooked fine ingredients taste better than overcooked cheap ingredients? Probably, but it's hardly the potential that existed. We're all running around shopping for ingredients and steadfastly refusing to learn to cook, or for those that do know their way around a kitchen, passing up the opportunity to learn to cook well.

-

Good luck with that! At this point I think half the people on these forums should list "Panasonic AF hater" on their CV under "Personal interests". Besides, all it takes is a quick look around to see all the flat-earthers, plandemic believers, and moon-landing / holocaust deniers, and you realise that it's more of a miracle that anyone is remotely sensible about anything at all!

-

Yeah, it's about having the highlight/shadow rolloffs and blur/grain. The OIS on the iPhones really is phenomenal - I wasn't ninja-walking and don't have particularly steady hands and even the walking shots were pretty good. I don't think I stabilised anything in post either, which would have over-stabilised things - the walking shots were already a bit too gimbal-like for my tastes. Having a nice 10-bit codec on a phone would make such a difference - ProRes would be spectacular.

-

I'd imagine it would make you slow down and be more deliberate? I remember when I was shooting stills there was a heavy "anti-chimping" sentiment, which mostly overlaps in rationale.

-

Assuming you're still reading this thread, I'd suggest the following: ignore all the tiny pieces of technical detail that the tech-obsessed and argument-prone contributors have shared in this thread and return to first principles.

First principle - get your shots in focus. Nothing else matters if a shot is out of focus. If you're shooting kids or anything else that isn't under control then either get phase-detect AF or learn to manually focus. I shoot similar to you and manually focus - it's a skill and requires some practice but it's do-able. Getting shots in focus with the best auto-focus cameras is also a skill that requires practice.

Second principle - get a nice looking image - whatever that means for you. The nicer the camera the more likely it will require colour grading, and most people can't colour grade to save their own lives, let alone create lovely images. Once again, this is a skill that can be developed, but making lovely videos requires (literally) a dozen or more skills, so you just might not have it in you to learn to colour grade as well as edit, mix sound, master, learn NLEs, manage media, etc etc. If you don't want to learn to colour grade then you're going to rely on the picture profiles from the camera and that will potentially limit the dynamic range and other image attributes, sometimes quite significantly.

Third principle - get the first two right and then buy the camera and then don't look back. Go learn about the other dozen skills. Highly skilled people can create feature film quality results with any of the cameras you're talking about, so there's a snowflake's chance in hell that the camera will be the limitation; it will be your level of skill.

Fourth principle - if you get to here let me say this again. The camera doesn't matter.
The only people that will tell you otherwise are camera nerds (like here in this forum), camera manufacturers (who want your money, over and over again), and camera influencers (who want the views and royalties and commissions and manufacturer kickbacks etc). Seriously. Buy the camera that will get your shots in focus and then move on.