slonick81

Members
Posts: 40
Reputation: 28
Achievements: Member (2/5)

-

Hmmm... Maybe it's an option, but I feel uneasy about it in many ways. Anyway, thank you for the kind words, it really helps and matters these days. But let's not test Andrew's banhammer itch with political threads. I'd be glad to chat on that topic anywhere else, but let's keep this thread about graphics and effects.

-

Yes, this is true, and it's obvious from my profile on Upwork. I'm taking the current situation into account, but I'm trying to find someone who doesn't care.

-

Thank you! Frankly speaking, I have no idea about worldwide rates for such jobs. If I can cover my current living expenses (basic food, internet and a cheap apartment), that will already be a huge success for me. But here I'm just trying to keep myself in shape and to do something good for the community. Sure, I have been trying to get something on Upwork, but with no luck since September. I applied to the kind of projects I'm used to working on (movies, music videos, commercials) and got no response, which is understandable. I also tried to get simple small jobs where people are looking for someone to clean up or key a shot or two, or to make some trivial graphics and motion design, and got no response either, which puzzled me greatly. Maybe something crucial was missing from my proposals? Thank you for recommending Fiverr, I'll try applying there.

-

Can't get enough work these days, so my skills may be useful here. I can do green screen keying, cleanups/object replacement, tracking, basic 3D modelling and other stuff around such topics. Nothing fancy, I'm not a world-renowned CG artist, but I have some skills and experience. Here is my eclectic reel: https://disk.yandex.ru/i/V9USqVPwl0DDOg (it's accessible in Georgia, so I assume it's accessible in other countries, too).

The rules are:
1. You give me one shot/clip at a time, and I do it for free.
2. I get the right to publish it here and on my YouTube channel, and to use the resulting clip and the creation process as a demonstration of my skills and for self-promotion to other people and organizations.
3. You can use the result of my work as you wish, as long as it doesn't contradict rule no. 2 (or at least inform me if the situation changes).

Of course, there may be clips I won't be able to do, and I'll do my best to point that out right at the start. Also, I'm still trying to earn a living, so there may be a delay before I can do your clip (and I'll tell you about it). So if you have a complicated case in a burning-ASAP situation, it may be better to contact an established CG facility on a commercial basis. In general, I'm trying to build up a portfolio and maybe get in touch with new customers, but at the moment I just don't want to get rusty. Ask questions if you have any. Chat on the topic (CG/VFX) is also welcome in this thread.

-

Usable. You may miss small details, but overall framing is good and distinct until you catch a direct sun reflection. I still have this annoying bug with converting DNGs, so I'd like to know whether it's a general Poco X3 problem or just a quirk of my device.

-

It has the PDAF that half of the forum here was raving about. And this sensor is most likely going into the GH6, too. Real-life performance is still something to check. I was hoping Jordan would say a word or two about it, but no clues yet.

-

The original image is even more natural and neutral; I was adding some contrast to the highlights and shadows to see if there is any banding or other issues besides the expected noise. The "processed" look is easily achievable by applying contrast, strong NR and a strong unsharp mask with a radius of about 1.5-2 px. The lens itself is somewhat soft (it's a $200 phone), and it's impossible to wipe it clean of grease every time you take it out of your pocket (I clean it with a dedicated cloth, but it's still not ideal: sometimes you can guess the direction of the last cleaning stroke from the light streaks :)

Well, I still think there is an exposure shift in the compressed image; it should have retained at least some detail of the building sign. The thing is, everything goes harsh and crude in the highlights really fast: color, gradations, details.

I'm really pleased to notice that Mirsad Makalic (the author of Motion Cam) is working hard on improving the app. A lot of the issues I mentioned have been addressed: you can set your storage location at any time, the story about 900-frame chunks is history (this part has been completely reworked), the focus slider is much more usable, the RAW video buffer can be bigger, and there is an indicator of how full it is during capture... I mean, join in: your input may be valuable, and you have a chance to shape the app for your needs.
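A rough illustration of the sharpening part of that "processed" recipe (contrast and NR are left out): a minimal unsharp-mask sketch in Python over a grayscale image stored as a list of rows with values in 0..1. Editors define "radius" differently; here it is assumed to be the sigma of a Gaussian blur, and the function names are hypothetical, not taken from ACR, Resolve or Motion Cam.

```python
import math

def gaussian_kernel(sigma):
    """1D Gaussian kernel, truncated at 3 sigma and normalized to sum to 1."""
    r = max(1, int(math.ceil(3 * sigma)))
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-r, r + 1)]
    s = sum(k)
    return [v / s for v in k]

def blur_1d(row, kernel):
    """Convolve one row with the kernel, clamping indices at the edges."""
    r = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = min(max(i + j - r, 0), n - 1)
            acc += w * row[idx]
        out.append(acc)
    return out

def gaussian_blur(img, sigma):
    """Separable Gaussian blur: blur rows first, then columns."""
    k = gaussian_kernel(sigma)
    rows = [blur_1d(row, k) for row in img]
    cols = [blur_1d(list(col), k) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]

def unsharp_mask(img, sigma=1.75, amount=1.5):
    """Sharpened = original + amount * (original - blurred), clipped to 0..1."""
    blurred = gaussian_blur(img, sigma)
    return [
        [min(1.0, max(0.0, p + amount * (p - b))) for p, b in zip(prow, brow)]
        for prow, brow in zip(img, blurred)
    ]
```

On a hard edge this produces the dark and bright halos (undershoot and overshoot) that read as the "processed" phone look, while a flat area passes through unchanged.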

-

Some DR tests on rare sunny winter days: raw images vs. native camera app video recording. No detail enhancements for raw (no NR, sharpening, dehazing and such), just shadows/highlights and gamma curve adjustments in ACR to get a better idea of what's going on. All shots are at native resolution, 1:1, no scaling. I'm still not confident about the exposure settings because of the high contrast of the live feed. I tend to check the overall image with auto exposure on and then dial in manual settings to get a nearly identical-looking picture. Native video is shot in auto mode. In terms of contrast, it actually represents well the image you see in live view when shooting raw.

So, as you can see, there is no big difference in DR (like you might expect when comparing raw and "baked-in Rec.709" footage from a cinema camera). The "bridge" scene got a bit overexposed in video compared to raw; I have a feeling it was possible to bring back the overexposed building in the back with a minor exposure shift without killing the shadows. What makes the difference for me is the highlight rolloff: it is natural and color-consistent, just look at the snow in front in the "hotel" shot and the sun spots at the top of the "bridge" shot. Shadows are more manageable for me, too. Yes, there is a lot of chroma noise, but it's easily controlled with native ACR/Resolve tools; luma noise is very fine and even, so you can balance shadow detail against noise suppression to your taste.

But the most prominent difference is due to zero sharpening. Just check small details like branches, especially against a contrasting background. It's not DR-related, but I can't avoid mentioning it. Is raw a necessity for such a result? Dunno. Maybe no postprocessing, a log profile and 10-bit 400 Mbps h26x instead of 8-bit 40 Mbps would give comparable results. If you have a phone capable of such recording modes, I would love to see a comparison.
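For a sense of scale behind "10-bit 400 Mbps instead of 8-bit 40 Mbps": a back-of-the-envelope sketch in Python of the code values per channel and the average bit budget per frame. The 25 fps rate is an assumption for illustration, and real encoders spread bits unevenly across frames, so these are averages only.

```python
def code_values(bit_depth):
    """Number of distinct code values per channel."""
    return 2 ** bit_depth

def bits_per_frame(bitrate_mbps, fps):
    """Average encoded budget per frame, in megabits."""
    return bitrate_mbps / fps

# Compare the two recording modes mentioned in the post (25 fps assumed).
for depth, rate in [(8, 40), (10, 400)]:
    budget = bits_per_frame(rate, 25)
    print(f"{depth}-bit {rate} Mbps: {code_values(depth)} values/channel, "
          f"{budget:.1f} Mbit (~{budget / 8 * 1000:.0f} KB) per frame")
```

Ten times the bitrate means roughly 2 MB per frame instead of about 200 KB, and 10 bits give four times as many code values per channel to spread the same tonal range over.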

-

Wow! Tested on my Poco X3. TL;DR: barely functional but promising.

Settings: apart from RAW10<->RAW16, nothing has much effect on performance. "Raw video memory usage" seems to benefit from being maxed out, but that's more a feeling than a fact. You have to select "raw video" and the exposure settings again every time you leave the app's main screen (like switching between apps or entering settings). OIS can be activated but doesn't work in my case, so the image is shaky. Focus control is distributed strangely: 90% of the slider is mapped to the nearest 1 m, and the rest of the focusing range is crammed into a tiny space at the right end. Nice for macro, I guess, not for anything else. AWB should be locked, otherwise it will affect the image.

Raw: I was able to get mostly reliable recording in all conditions at max crop (40% H/V, 2772x2082) at 24/25 fps for durations of up to about 1-1.5 min. I didn't test longer durations because of the huge file sizes and some quirks. I was able to record 4K-ish resolutions (4112x2082) outdoors (it's about 0°C now), but indoors it drops frames starting at 15-20 s. Overheating? There are no framing guides for the crop area, so use your imagination looking at the full-sensor image feed. Raw images are initially written in chunks of 900 frames as zip archives somewhere in the system folders (I haven't found the location yet). You have to manually unzip and transfer them as DNGs in "manage videos" by tapping the "queue" button. You can set the destination folder only once, at the first conversion; I couldn't find this setting anywhere else, and the only way to change the directory was to reinstall the app. And yeah, no sound at all yet.

Processing: transfer times are huge on my phone, mostly because of the USB 2 transfer speed. Files are bulky: I filled 60 GB of free space with just a dozen clips. I'm considering buying a TF card to unzip the DNGs to and then moving it to a card reader (if the project develops well in this direction). AE 2021 and Resolve 17 work well with DNG sequences; Premiere 2021 refused to import them.
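The "bulky files" point is easy to sanity-check with arithmetic. A minimal sketch in Python, assuming RAW10 means packed 10 bits per photosite and RAW16 means 2 bytes per photosite, and ignoring DNG headers, metadata and any compression the app may apply:

```python
def frame_bytes(width, height, bits_per_photosite):
    """Uncompressed size of one Bayer frame in bytes (one sample per photosite)."""
    return width * height * bits_per_photosite / 8

def data_rate(width, height, bits, fps):
    """Return (MB per frame, MB per second, GB per minute) in decimal units."""
    per_frame = frame_bytes(width, height, bits) / 1e6
    per_second = per_frame * fps
    per_minute = per_second * 60 / 1000
    return per_frame, per_second, per_minute

# Max-crop resolution from the post, recorded at 24 fps.
for bits in (10, 16):
    f, s, m = data_rate(2772, 2082, bits, 24)
    print(f"RAW{bits} 2772x2082 @ 24 fps: {f:.1f} MB/frame, {s:.0f} MB/s, {m:.1f} GB/min")
```

That works out to roughly 7.2 MB per frame and 10.4 GB per minute for RAW10, and 11.5 MB and 16.6 GB for RAW16, so a dozen clips of under a minute each can plausibly fill 60 GB.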
I had to manually rename each DNG sequence after its unique folder name, because they were all named with the same base name: frame-#####.dng. There is a glitchy "transitional zone" between two 900-frame chunks where frames are randomly tossed into different chunks. At first I thought it was just dropped frames, but later I was able to reconstruct the frame order manually in post (see image).

Image quality is much better, especially at moderately high ISOs (800-1600). The compressed image degrades in this range: details get mushy, colors muddy. Raw, on the contrary, has a very manageable grain structure and highlight rolloff, and lacks any sharpening. DR is better in the sense that you get more usable DR, but it's far from cinema-camera wide: you should be careful with highlights, and the absence of any exposure-assisting tools doesn't help at all.

Is it worth trying? Yes, I think. It's not the way you'd shoot something for fast turnarounds or for long durations. It's more of an artistic experiment. The project is at a very early stage now, but what remains is polishing the interface and performance, adding useful features and improving general stability; the core idea is functional. I'm really happy to have stumbled upon this project. I haven't felt such joy and excitement since shooting photos with the Nokia 808. Basically, it's an 8mm raw camera you have no excuse not to carry with you all the time.
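The manual renaming step can be scripted. A minimal Python sketch, assuming each clip's DNGs sit in their own folder and are named frame-#####.dng as described; the function names and the "<folder>_frame-#####.dng" target pattern are hypothetical choices. It renames files in place, so it is safer to try it on a copy first.

```python
import re
from pathlib import Path

# Matches the shared base name described in the post, e.g. frame-00042.dng.
FRAME_RE = re.compile(r"^frame-\d+\.dng$", re.IGNORECASE)

def prefix_with_folder(clip_dir):
    """Rename frame-#####.dng to <folder>_frame-#####.dng inside clip_dir.

    Files that don't match the pattern are left alone. Returns the new paths.
    """
    clip_dir = Path(clip_dir)
    renamed = []
    for path in sorted(clip_dir.iterdir()):  # sorted() lists the folder before renaming
        if not FRAME_RE.match(path.name):
            continue
        target = path.with_name(f"{clip_dir.name}_{path.name}")
        if target.exists():
            raise FileExistsError(target)
        path.rename(target)
        renamed.append(target)
    return renamed

def prefix_all(root):
    """Apply prefix_with_folder to every clip folder directly under root."""
    return {d.name: prefix_with_folder(d) for d in sorted(Path(root).iterdir()) if d.is_dir()}
```

With unique names, sequences from different clips can sit in one bin without the NLE treating them as the same clip.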

-

Camera resolutions by cinematographer Steve Yeldin

slonick81 replied to John Matthews's topic in Cameras

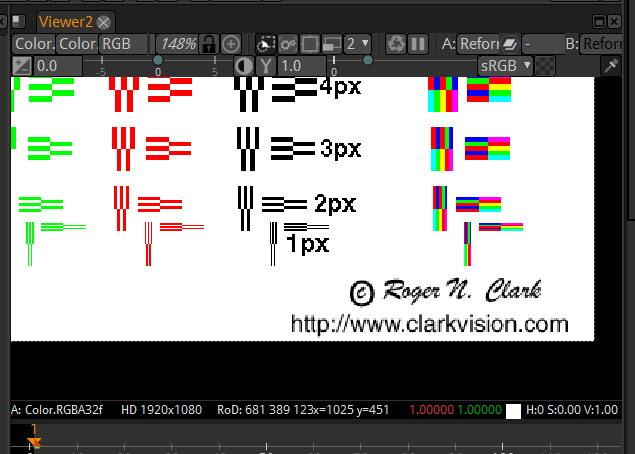

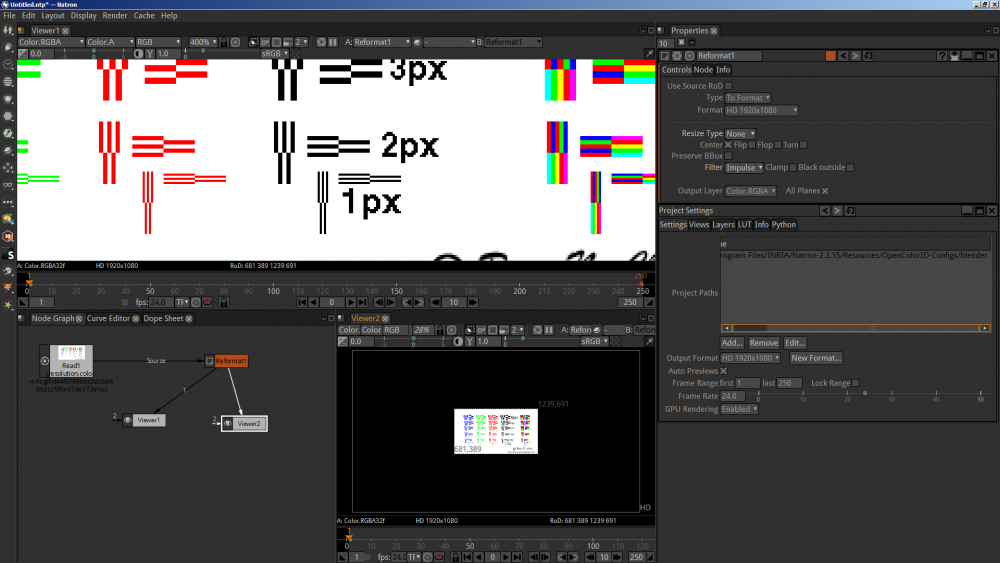

So, on to Natron! As you can see, the latest Windows version with default settings shows no subsampling in the enlarged view. The only thing we should care about is the filter type in the Reformat node. It pads the original small 558x301 image out to FHD with borders around it, but centering introduces a 0.5-pixel vertical shift due to the odd Y dimension of the original image (301 px), so the filter type is set to "Impulse", i.e. nearest-neighbour interpolation. If you uncheck "Center", it will place our chart in the bottom-left corner and remove any influence of the "Filter" setting. The funniest thing is that even a non-integer zoom in the viewer won't introduce any soft subsampling with these settings. You can notice some doubled pixel lines, but no soft transitions. And yes, I converted the chart to .bmp because Natron couldn't read .gif.

It's the only thing you're perceiving. Unless you're a Neuralink test volunteer, maybe. Well, that's how any kind of compositing is done. A CG artist switches back and forth between "fit in view" and whatever magnification the job needs, using 1:1 scale to judge real detail. The working screen resolution can be anything, the more the better, of course, but for the sake of working space for tools, not resolution itself. And this is exactly what Yedlin is doing: sitting in his compositing suite of choice (Nuke), showing his nodes and settings, zooming in and out but mostly staying at 1:1, and grabbing at a resolution he is comfortable with (hence the 1920x1280 file: it's a window screen recording from a larger display).

In general, I mostly posted to show one simple thing: you can evaluate footage in 1:1 mode in a compositor's viewer, and integer-multiple scaling doesn't introduce any false details. I considered it a given truth. But you questioned it, and I decided to check. So, for AE, PS, ffmpeg, Natron and most likely Nuke it's true (with some attention to settings).
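Both points can be checked with a few lines of arithmetic. A minimal Python sketch on plain lists (no image library; the function names are hypothetical): the first function computes the offset for centering an image on an FHD canvas, showing why a 301 px height lands on a half pixel, and the second does an integer-factor nearest-neighbour upscale, which only repeats existing pixels and so cannot create new values. Linear interpolation at a fractional ratio, by contrast, produces in-between values.

```python
def center_offset(src_w, src_h, dst_w=1920, dst_h=1080):
    """Top-left offset that centers a src image on a dst canvas (may be fractional)."""
    return (dst_w - src_w) / 2, (dst_h - src_h) / 2

def upscale_nearest(img, factor):
    """Integer-factor nearest-neighbour upscale: each pixel becomes a factor x factor block."""
    return [
        [px for px in row for _ in range(factor)]
        for row in img
        for _ in range(factor)
    ]

def resample_linear_1d(row, new_len):
    """Linear interpolation of one row to new_len samples (pixel-center aligned)."""
    n = len(row)
    out = []
    for i in range(new_len):
        x = (i + 0.5) * n / new_len - 0.5  # source coordinate of this output pixel center
        x = min(max(x, 0.0), n - 1.0)
        x0 = int(x)
        x1 = min(x0 + 1, n - 1)
        t = x - x0
        out.append(row[x0] * (1 - t) + row[x1] * t)
    return out

print(center_offset(558, 301))  # (681.0, 389.5): x is whole, y lands on a half pixel

grid = [[0, 255], [255, 0]]  # 1 px black/white checker
big = upscale_nearest(grid, 2)
print(sorted({v for row in big for v in row}))  # [0, 255]: only the original values
print(resample_linear_1d([0, 255, 0, 255], 6))  # 1.5x linear: in-between greys appear
```

This is why a whole-pixel offset plus an integer zoom keeps the chart intact, while a half-pixel offset or fractional zoom forces the filter to pick or blend neighbours.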
Concerning Yedlin's research: it was done in a way that feels very natural to me, as if I were evaluating footage myself, and it summarised well the general impression I got from working on video/movie productions: resolution is not a decisive factor nowadays. Over the last 5 years, I can count on the fingers of one hand the projects where the director/DoP/producer was intentionally seeking more resolution. If you see it as wrong or flawed, fine, I don't feel any need to change your mind; it looks like it's more kye's battle to fight.

-

Camera resolutions by cinematographer Steve Yeldin

slonick81 replied to John Matthews's topic in Cameras

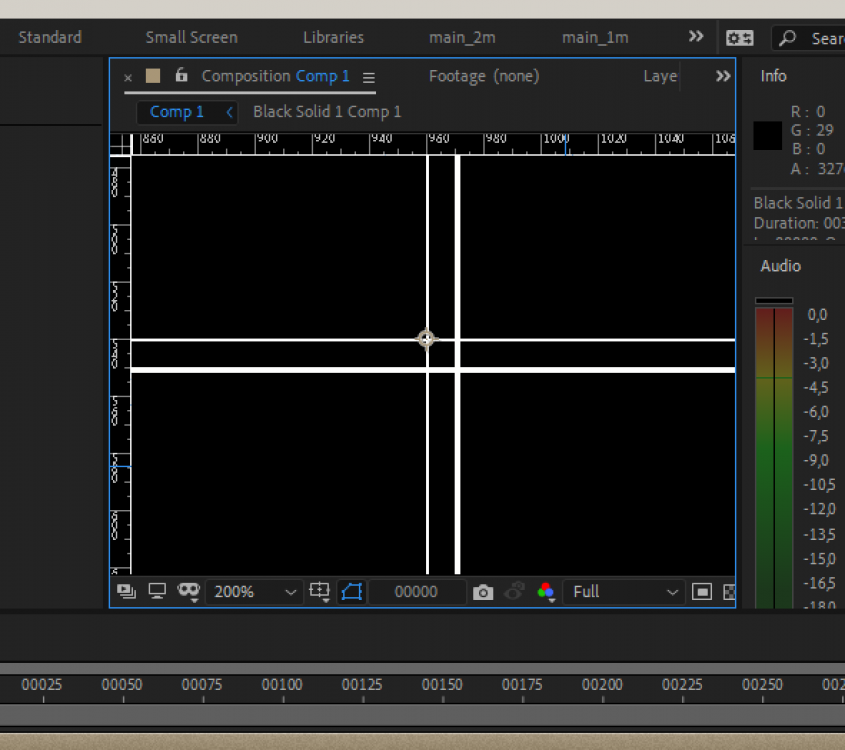

Sure. This particular image has heavy compression artifacts and I was unable to find the original chart, but I got the idea, recreated these pixel-wide colored "E"s and did the same upscale-view-grab routine. And, well, it does preserve sharp pixel edges, no subsampling. I don't have access to Nuke right now, I'm not going to mess with warez in the middle of a work week for the sake of an internet dispute, and I'm not 100% sure about the details, but the last time I was compositing something in Nuke it had no problems with 1:1 view, especially considering I was making titles and credits as well.

And what Yedlin is doing, comparing at 100% (1:1), looks right. Yedlin is not questioning the capability of a given codec/format to store a given number of resolution lines. He is talking about _perceived_ resolution. That means the image should be a) projected and b) well, perceived. So he chooses common ground (4K projection), crops out a 1:1 portion of it and cycles through different cameras. And his idea sounds valid: beyond a certain point, digital resolution is less important than other factors that come before (optical resolving power, DoF/motion blur, AA filter) and after (rolling shutter, processing, sharpening/NR, compression) the resolution is created. He doesn't claim that there is zero difference, and he doesn't touch special technical cases like VFX or intentional heavy reframing in post, where additional resolution may be beneficial. The whole idea of his work: beyond a certain technical resolution, the perceived resolution of real-life images doesn't suffer much from upsampling and doesn't benefit much from downscaling. For example, on the second image I applied a numerically subtle transform to the chart in AE before grabbing the screen: +5% scale, 1° rotation, slight skew. That's essentially what you get with nearly any stabilization plugin, and it's a mess in terms of technical resolution.
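Why such a "subtle" transform is destructive at the pixel level can be shown by mapping output pixel centers back into the source image. A minimal Python sketch, assuming a 5% scale plus 1° rotation about the origin (the skew is left out for brevity; the values mirror the post, the code is only illustrative): almost every output pixel lands between source pixels, so the whole frame has to be interpolated.

```python
import math

def inverse_map(x, y, scale=1.05, angle_deg=1.0):
    """Map an output pixel center back to source coordinates.

    Forward transform: p_out = scale * R(angle) @ p_src,
    so the inverse is p_src = R(-angle) @ p_out / scale.
    """
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    sx = (cos_a * x + sin_a * y) / scale
    sy = (-sin_a * x + cos_a * y) / scale
    return sx, sy

def fraction_off_grid(width, height, tol=1e-6, **kw):
    """Share of output pixels whose source position falls between source pixels."""
    off = 0
    for y in range(height):
        for x in range(width):
            sx, sy = inverse_map(x, y, **kw)
            if abs(sx - round(sx)) > tol or abs(sy - round(sy)) > tol:
                off += 1
    return off / (width * height)

print(fraction_off_grid(64, 64))                            # close to 1.0: nearly every pixel is resampled
print(fraction_off_grid(64, 64, scale=1.0, angle_deg=0.0))  # 0.0: identity keeps every pixel
```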
But we do it here and there without any dramatic degradation to real footage.

-

Camera resolutions by cinematographer Steve Yeldin

slonick81 replied to John Matthews's topic in Cameras

The attached image shows a 1 px black/white grid, generated in AE in an FHD sequence, exported to ProRes, upscaled to UHD with ffmpeg ("-vf scale=3840:2160:flags=neighbor" options), imported back into AE, overlaid on the original in the same composition, magnified to 200% in the viewer, screengrabbed and enlarged another 2x in PS with proper scaling settings. And no subsampling is present, as you can see. So it's totally possible to upscale an image or show it in 1:1 view without modifying the original pixels: just don't use fractional enlargement ratios and complex scaling. Not sure about Natron though, I've never used it. Just don't forget to "open image in new tab" and view it at its original scale.

But that's real life: most productions have footage of different resolutions on input (A/B/drone/action cams), and multiple resolutions on output: UHD/FHD for streaming/TV and DCI-something for DCP at least. So it's all about scaling and matching the look, and that's the subject of Yedlin's research. Moreover, even in rare "resolution-preserving" cases where the filming resolution perfectly matches the projection resolution, there are such things as lens aberration/distortion correction, image stabilization, rolling shutter jello removal and reframing in post. And it usually works well for the reasons covered by Yedlin.

And sometimes resolution, processing and scaling play funny tricks out of nothing. On my last project I was doing some simple clean-ups. Red Helium 8K shots, exported to me as DPX sequences. 80% of the processed shots were rejected by the colourist and DoP as "blurry, not matching the rest of the footage". Long story short, the DPX files had been rendered by a technician with full-res/premium quality debayer, while the colourist and DoP were grading the 8K at half resolution scaled down to 2K big-screen projection, and that gave more punch and microcontrast on the large screen than the higher-quality, higher-resolution DPXes with the same grading and projection scaling.

-

Color saturation is defined not by the range of values of any single (R, G, B) channel across the image, but by the difference between channels in a given pixel. Thus color fidelity is defined by the number of steps in which this difference is digitized. If you have an image with RGB values of (200, 195, 205) at one point, (25, 20, 30) at another and (75, 80, 70) at a third, that doesn't mean the image's color range is 25-200; it's 10, and the image is badly lacking color.
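The three pixels from the example can be checked directly. A minimal Python sketch that takes the per-pixel spread between channels (max minus min, which is how chroma is defined in the HSV/HSL models) and compares it with the range of each single channel across the image:

```python
pixels = [(200, 195, 205), (25, 20, 30), (75, 80, 70)]

def chroma(rgb):
    """Per-pixel spread between channels (max - min), i.e. HSV/HSL chroma in code values."""
    return max(rgb) - min(rgb)

def channel_range(pixels, channel):
    """Range of one channel across all pixels: a brightness spread, not a color one."""
    values = [p[channel] for p in pixels]
    return min(values), max(values)

print([chroma(p) for p in pixels])                   # [10, 10, 10]
print([channel_range(pixels, c) for c in range(3)])  # [(25, 200), (20, 195), (30, 205)]
```

Each channel spans almost 200 code values, yet no pixel has its channels more than 10 code values apart, so only 10 steps of color difference are actually in use.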

-

I tried it when it was in late beta. The core functionality worked well at that time: it played BMCC 2.5K raws on an average quad-core CPU and 760/770-series nVidia cards, debayering quality was good, and after some digging I was able to dial in the look I wanted. The interface was clumsy to a degree: panel management was painful sometimes, and some sliders were off-scale, so it was hard to set proper values. But it wasn't unbearable or irritating. The main showstopper for me was its inability to efficiently save results to ProRes/DNxHD for proxy edits. It was technically possible to stream Processor's image output to ffmpeg, but the encoding speed was slow. So in the end it wasn't faster than Resolve at this task, and Resolve had much better media management and overall functionality. Besides, Resolve is more universal as a transcoder: it can work with Canon, RED and ARRI raw files. I'll try to test the current version if I have time. But I think they targeted a narrow niche and missed their moment: CUDA and Windows only, no fast proxies, limited input format support, Resolve is quite a beast now, and hardware is much more powerful than 3-4 years ago. But if you mostly shoot with BM cameras, it may fit your needs really well, why not?