Everything posted by EthanAlexander

-

I know you're being sarcastic, but you're making an invalid point - it's about perspective. The artistic choice comes from deciding how close or far you are from your subject. Roger Deakins, for example, loves 27mm and 21mm on S35 because they're wider than is traditional for a 1-shot, which lets him get closer to people and makes the audience feel like they're closer. It doesn't have to do with the FOV, which you could match with a longer lens from farther away; it has to do with perspective, which changes when you get closer. People know, even if only subconsciously, how close the camera is to the subject. So whether you're on S35 or FF or whatever, the feeling will be the same if the camera is in the same spot, and then to match the FOV you just use an equivalent focal length (so a 27mm on S35 or a 40.5mm on FF).
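A rough sketch of that FOV matching, assuming nominal sensor widths of 36mm for FF and 24mm for S35 (actual S35 widths vary by camera, so the 1.5x ratio is an assumption) and hypothetical helper names:

```python
import math

# Nominal sensor widths in mm. These are assumptions for illustration;
# real S35 sensors range from roughly 23mm to 25mm wide.
FF_WIDTH_MM = 36.0
S35_WIDTH_MM = 24.0


def horizontal_fov_deg(focal_mm, sensor_width_mm):
    """Horizontal field of view in degrees for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


def equivalent_focal_mm(focal_mm, from_width_mm, to_width_mm):
    """Focal length that gives the same FOV on a different sensor width."""
    return focal_mm * to_width_mm / from_width_mm


if __name__ == "__main__":
    ff_focal = equivalent_focal_mm(27, S35_WIDTH_MM, FF_WIDTH_MM)
    print(ff_focal)  # 40.5
    # Both lines print the same angle: same camera position, same framing.
    print(horizontal_fov_deg(27, S35_WIDTH_MM))
    print(horizontal_fov_deg(ff_focal, FF_WIDTH_MM))
```

Note that nothing here models perspective: that depends only on where the camera sits, which is the whole point.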

-

It wasn't. I meant imperfections in real world tests, not larger lenses. This is why I said "real world availability of manufacturing" - I do believe there is a general "look" that comes from larger sensors, but I think it has more to do with the lenses that are available. The lack of extreme wide angles with super shallow depth of field (such as a 12mm f/0.7) is a big reason FF is hard to match with smaller sensors like MFT. Real-world imperfections also account for the fact that not every sensor is going to deliver the same results for things like dynamic range etc. But in a computer, equivalency is accurate, and we can come close enough in the real world that only minor differences happen. The only difference between a FF lens and an APSC lens of the same focal length is the size of the image circle that comes out the back. The size of the sensor then determines the field of view. This is why if you're shooting on an APSC camera with a FF 24-70mm 2.8 lens or an APSC 17-55mm 2.8 (two common Canon zooms, for instance), you'll get the same image for the entire overlapped range of focal lengths of 24-55mm. I invite you to test this out yourself and see.

-

Thank you. From that other thread: I'm about to get my first MFT camera so I'm excited to do my own tests vs FF, but it really seems to me that mathematically there's no difference between formats; it just comes down to real-world imperfections and real world availability of manufacturing. For instance, one thing that I think stands out with larger formats is shallow depth of field at wider fields of view: 24mm 1.4s are commonly made for FF cameras, whereas a 12mm f/0.7 doesn't exist to my knowledge, so you can't match that look on MFT. It's technically possible, it just doesn't get made because the cost wouldn't be worth it. (.64 speed boosting a Sigma 20mm 1.4 would be pretty close though.)

-

I used to think that there was something distinct about lenses with longer focal lengths, but after reading a lot of smart people's posts (smarter than me) on forums like this and then doing my own tests, I realized it really only has to do with perspective and equivalency. I think this just has to do with the fact that cameras with better IQ generally tend to have larger sensors, and the people with access to these kinds of cameras tend to have higher skills and, more importantly, better camera support, lighting, set design, etc. One thing that is KINDA true about larger sensor cameras though is that the extremes, like super SUPER shallow depth of field at wider angles, are really hard to achieve with smaller sensors. For instance, a 24mm at f/1.4 looks great and has that "mojo" on FF; matching it on MFT would require a 12mm f/0.7, which to my knowledge doesn't exist, and even speed boosting the same 24mm FF lens with a .64x Metabones would only give you an f/1.8 equivalent. lol it's all good, the guy is writing on IndieWire so you'd think it would be accurate.
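For anyone who wants to check the speed booster arithmetic, here is a minimal sketch. It assumes a 2.0x crop factor for MFT and treats the focal reducer as a simple multiplier on both focal length and f-number; the function name is made up for illustration:

```python
def full_frame_equivalent(focal_mm, f_number, crop_factor, reducer=1.0):
    """Return the (focal length, f-number) a FF camera would need to match
    the FOV and depth of field of this lens on a smaller sensor.

    reducer is the focal reducer magnification (e.g. 0.64 for a 0.64x
    speed booster); leave it at 1.0 for a lens mounted directly.
    """
    scale = crop_factor * reducer
    return focal_mm * scale, f_number * scale


if __name__ == "__main__":
    # Hypothetical 12mm f/0.7 native MFT lens: matches a 24mm f/1.4 on FF.
    print(full_frame_equivalent(12, 0.7, crop_factor=2.0))
    # 24mm f/1.4 FF lens on MFT behind a 0.64x reducer:
    # roughly a 30.7mm f/1.8 look, so neither as wide nor as shallow.
    print(full_frame_equivalent(24, 1.4, crop_factor=2.0, reducer=0.64))
```

This ignores real-world losses in the reducer optics, so treat the numbers as the "in a computer" case.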

-

The author of this article does not understand perspective. It's the kind of thing I used to think until I took the time to understand that, for instance, a 50mm has no magic powers to change the way light works compared to a 25mm. "...specifically a shallower depth of field and more compressed rendering of space. In other words, the large format allows you to see wider, without going wider, as you can see in the example below." This is false. It's 100% false. There is no such thing as lens compression, only perspective. If you're standing in the same place, perspective will be the same, and as @kye is pointing out, within reason, you can mimic the look of any size sensor by matching the FOV and using an equivalent aperture (and then compensating the ISO). Having said that, there are certain things that are hard to do, like super shallow depth of field on wider lenses on MFT, or mimicking the look of a 50mm 1.2 FF on MFT, etc. You can just get a wider lens though... for instance, a 12mm on MFT is the same as a 24 on FF in FOV.
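Going the other direction (FF look onto a smaller sensor), the full set of equivalent settings can be sketched like this. The crop factor of 2.0 for MFT and the ISO 800 starting point are assumptions for the example, and the helper name is hypothetical:

```python
def match_on_smaller_sensor(ff_focal_mm, ff_f_number, ff_iso, crop_factor):
    """Settings a smaller-sensor camera needs to mimic a FF shot taken
    from the same position with the same shutter speed.

    Focal length and f-number scale down by the crop factor; ISO scales
    down by the crop factor squared to compensate for the faster aperture.
    """
    focal = ff_focal_mm / crop_factor
    f_number = ff_f_number / crop_factor
    iso = ff_iso / crop_factor ** 2
    return focal, f_number, iso


if __name__ == "__main__":
    # A 50mm f/1.2 at ISO 800 on FF would need about a 25mm f/0.6 at
    # ISO 200 on MFT, which shows why that look is hard to get in practice.
    print(match_on_smaller_sensor(50, 1.2, 800, crop_factor=2.0))
```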

-

Just purchased an SLR Magic hyperprime 25mm T0.95 and 10mm T2.1! Next purchase (maybe today) will be the Z-Cam E2. I have been wanting an internal ProRes cam for a while, and the 10 bit 4K 120 h265 looks great too. With the SLR Magics I should be able to fake a full frame look. Can't wait to test it all out - I'll post results.

-

...and adding a bunch of green tint in post. But seriously, yeah, it's what you see in a lot of promotions, too, where any camera can look good, including an iPhone, if you've got the production design, makeup, location etc. That really teaches a lesson if you think about it - the camera just needs to give you a clean image and after that it's up to you to captivate an audience. I have to publicly apologize and retract my last post, though, because I'm already back to looking at cinema lenses. Thinking of picking up some SLR Magic hyperprimes to go with either a Z-Cam or BMPCC4K. Just a couple lenses I swear...

-

I think I'm in a "screw buying new lenses, I'm spending money on filters and light modifiers" phase. Layering diffusion, colored lighting and practicals, haze, actually caring about incidental bounce enough to use negative fill... aaaand FINALLY got a black pro mist! Should get to try it out for a talking head shoot on Friday, but so far I'm really liking even just boring test shots.

-

Canon 1DX III, FF 4k60p with 422 10bit internal

EthanAlexander replied to ntblowz's topic in Cameras

I hope it was helpful... Mokara just went and downvoted a bunch of my posts across multiple threads as retaliation.

-

Canon 1DX III, FF 4k60p with 422 10bit internal

EthanAlexander replied to ntblowz's topic in Cameras

BRO, @Michi said this way back at the beginning and you've just been arguing for the sake of arguing. He was saying that, going by the math, the 4K crops on the EOS R and the 1DX2 look like 1:1 readouts of sensors with different megapixel counts, hence the different crop sizes. So he said that if this continues, the Mk3 will have more crop than the Mk2 because of the higher megapixel count. Then he says at the end of his first post that this could change with any kind of oversampling. So WHY keep arguing unless you're just trolling? You're literally cluttering the EOSHD boards with your arguments and pro-Canon nonsense that's been proven wrong. Every once in a while you contribute something but it's only 10% of the time... Michi's original post:

-

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

EthanAlexander replied to Andrew Reid's topic in Cameras

Yes - for any production they're actually involved in (even if it's just money) the camera list is in effect, but they will purchase anything after the fact if they judge it good content that people will watch, regardless of the camera. It's pretty smart, really - they're pushing forward 4K HDR to future-proof their own content while also being flexible enough to pick up any kind of good content.

-

Maybe in a few select offerings but 98% of the smartphone market wouldn't be interested - even I wouldn't because there's just no point when you're making so many compromises, especially in low light where you can see image breakdown so fast even using Filmic Extreme bitrates (~130Mbps HEVC) on the latest iPhone. HEVC and HEIF are really quite good for best-of-both-worlds size vs quality on phones, especially considering cloud storage and data speeds - it's why I actually love that Canon is going to offer HEIF on the new 1DX MkIII. I agree with the rest of what you're saying though

-

At least I get to keep my money in my pocket on this one. I'd love the improvements but they're not worth the cost to upgrade by any stretch. No, these are not the same (OLPF is for anti-aliasing, ND is for cutting light). The variable part is a cool concept though that I've never heard of. I never really had any problems with moire/aliasing on the A73 unless I was shooting high frame rate HD, so maybe this means it would have cleaner slow motion by being more aggressive, but turn off when shooting 4K or stills so there's even more detail than before? I'm just hoping...

-

Seriously, where are you @Mokara? Wait, did Canon stop paying you to write excuses now that they're putting in 24p?

-

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

EthanAlexander replied to Andrew Reid's topic in Cameras

I appreciate your desire to dig into this further. In any linear recording, each stop brighter gets twice as many bit values as the previous darker stop, starting with 1 for the darkest and 512 for the brightest in 10 bit. That means the brightest stop is actually the "top half" of all the bit values (so in 10 bit, values 512-1023 would be reserved for just one stop of light). If you want each stop of light to be represented by an equal number of values (for instance, ~100 as you are suggesting), it requires a log curve to map the input values to that. (How many and which values get used for the different stops is what makes the differences between different log curves like SLog2 and 3, V Log, N Log, etc.) They won't be sharing bits before compression whether it's linear or log, but to your point, you're right, and this is a big reason why shooting log on a high compression camera is troublesome - the codec has to throw away information and that means that values that are close together will likely be compressed into one. This is why I said several times that highly compressed vs raw recording is a big factor. But if we're talking raw recording with lossless or no compression, or even ProRes HQ frankly, then a 10 or 12 bit file mapped with a log curve will look practically the same as a linear 14 bit recording. Either way you still have to decide where you want middle grey to land, which means you're deciding how many stops above and below you're going to get.
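To make the doubling concrete, here is a small sketch of how a purely linear 10 bit encoding would split its code values across stops, next to the idealized "equal share per stop" case. The function names are made up, and real log curves (V Log, SLog etc.) do not split values perfectly evenly, so the second function is only an approximation of the idea:

```python
def linear_stop_ranges(bit_depth=10):
    """Code value range (low, high) for each stop in a linear encoding,
    brightest stop first. Value 0 (pure black) is left out."""
    ranges = []
    high = 2 ** bit_depth
    while high > 1:
        low = high // 2
        ranges.append((low, high - 1))
        high = low
    return ranges


def even_values_per_stop(bit_depth, stops):
    """Idealized log-style allocation: every stop gets the same share."""
    return (2 ** bit_depth) / stops


if __name__ == "__main__":
    for low, high in linear_stop_ranges(10):
        print(f"{low:4d}-{high:4d}: {high - low + 1} values")
    # About 102 values per stop if 10 stops shared 10 bits evenly.
    print(even_values_per_stop(10, 10))
```

The first line printed is the brightest stop taking 512 of the 1024 values, and the last is the darkest stop with a single value.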

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

EthanAlexander replied to Andrew Reid's topic in Cameras

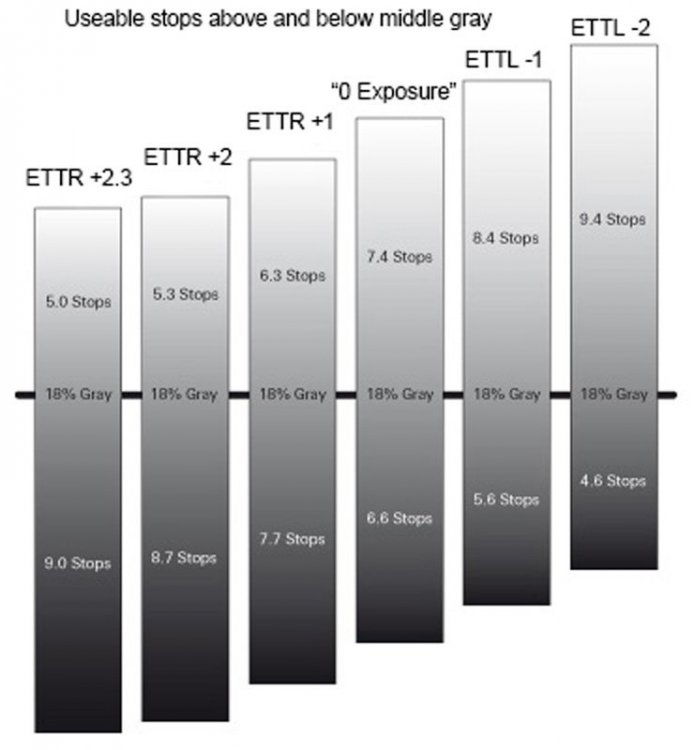

There seems to be some confusion about what's happening with ETTR so hopefully this clears it up. Two things that will never change no matter how you're exposing V Log are the "shape" of the curve and the dynamic range (for this post we will assume 14 stops). V Log will always be V Log will always be V Log. So, technically, the highlight rolloff will always be the same. @Jonathan Bergqvist and @Mmmbeats are right in this regard. BUT - the useable part of the dynamic range (of the scene) is definitely shifting and it is definitely destructive, as @helium is pointing out. Once you lose something to overexposure, you'll never get it back.

If a scene only has, for instance, 7 stops of dynamic range, then you could easily argue that ETTR will offer a better image because of the higher SNR, which leads to lower noise and a cleaner image. We're fitting 7 stops into a 14 stop container so it's easy to make sure everything is captured. You could probably argue that when shooting raw or super low compression, any scene with less than the full dynamic range should be ETTR'd by the number of stops the scene falls short of the maximum allowed by the log curve.

The complication comes in when dealing with scenes with high dynamic range. This is when you have to decide what to put into the 14 stop "container." When using ETTR, you're making a compromise - higher SNR for less useable dynamic range in the highlights. This is definitely a "destructive" choice in the sense that it can't be undone in post. You'll never get back those stops in the highlights that you chose to sacrifice. For many people and scenes, this is an acceptable tradeoff. You're also getting more stops dedicated to your shadows, which can be useful.
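Here's a toy model of that 14 stop "container", just to show the bookkeeping. The 7 stops above middle grey at normal exposure is a made-up baseline, not the real V Log figure, and the function names are hypothetical:

```python
def exposure_split(total_stops=14.0, base_stops_above=7.0, ettr_stops=0.0):
    """Return (stops above middle grey, stops below middle grey) after
    overexposing by ettr_stops. The total never changes."""
    above = base_stops_above - ettr_stops
    if not 0 <= above <= total_stops:
        raise ValueError("exposure shift pushes middle grey outside the range")
    return above, total_stops - above


def highlight_stops_lost(scene_stops_above, available_stops_above):
    """Stops of scene highlights that clip and cannot be recovered in post."""
    return max(0.0, scene_stops_above - available_stops_above)


if __name__ == "__main__":
    above, below = exposure_split(ettr_stops=2.0)
    print(above, below)  # 5.0 9.0
    # A scene with 6 stops above middle grey now clips 1 stop of highlights.
    print(highlight_stops_lost(6.0, above))  # 1.0
    # A flatter scene with only 3 stops above grey loses nothing.
    print(highlight_stops_lost(3.0, above))  # 0.0
```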
If your scene has a lot of stops above middle grey, then ETTR will definitely limit the amount of useable recorded dynamic range, and exposing according to "manufacturer guidelines" (or even lower) will indeed give you the better result if you're trying not to lose anything to overexposure. This image shows how the captured range of 14 stops never changes but the number of stops above and below middle grey definitely does change. I think it is for the Alexa but the concept applies to literally any curve, so just ignore the exact numbers of stops above and below 18% and look at how it always adds up to 14. These changes are 100% baked in no matter how you record (raw or highly compressed). There can be color shifts as well, but that's a totally different topic to dive into...

-

This part is really cool though! I'd love for this to come to video on their other cameras. Imagine how much faster piecing together edits would be!

-

There's nothing to understand in the second and third ones... Personally, I think they're garbage. Love the first one though. I rewatch it every couple years.

-

Yours

-

You just proved his whole point.

-

After watching this video and the one before it, it's easy to see that ALL of RED's claims should be reexamined. Their false use of "made in the USA" and false claims of designing their own sensors go well beyond ethical business practices. I have little doubt that the industry will be better off once this patent is revoked. It's smoke and mirrors, and it's holding back fair competition.

-

I got my start 15 years ago on a VX2100. Skate videos and comedy short films. Those were the days...

-

Canon EOS R first impressions - INSANE split personality camera

EthanAlexander replied to Andrew Reid's topic in Cameras

This is not true. I collaborated with someone and we had an A73 and EOS R - the colors were easy enough to match but the Sony footage was WAY more detailed and even after adding sharpening to the Canon footage it was noticeably softer. We shot 1080p60 mostly, with in camera sharpening turned down all the way.