-

Posts

1,839 -

Joined

-

Last visited

Content Type

Profiles

Forums

Articles

Everything posted by jcs

-

Custom menu top of page 3. You probably also want your shutter set to 1/50 or 1/48 if the GH5 can do it (2x your frame rate). Unless you're looking for a specific effect or don't have an ND handy (exposure tuning).

-

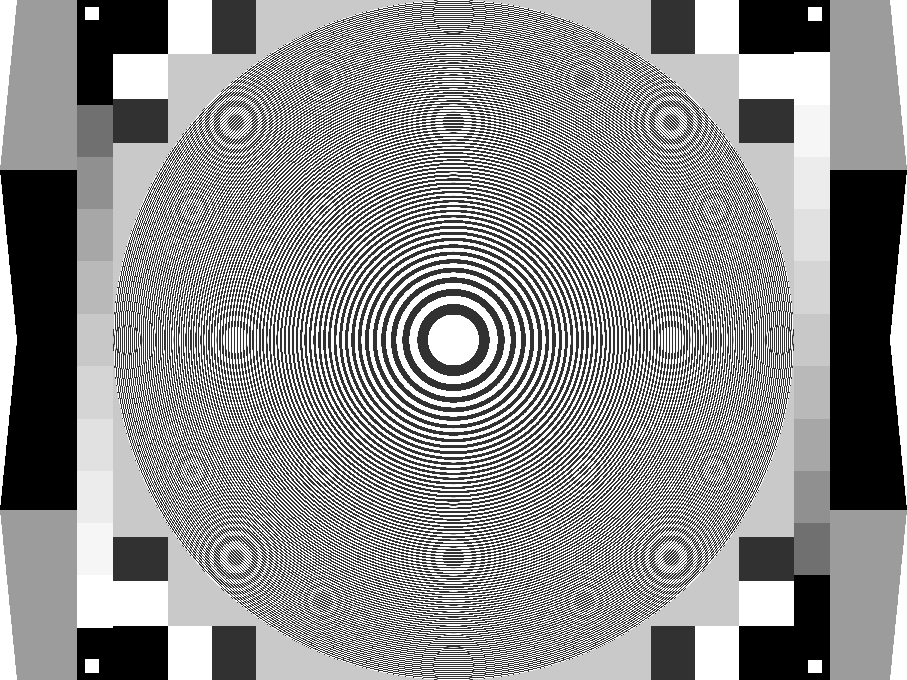

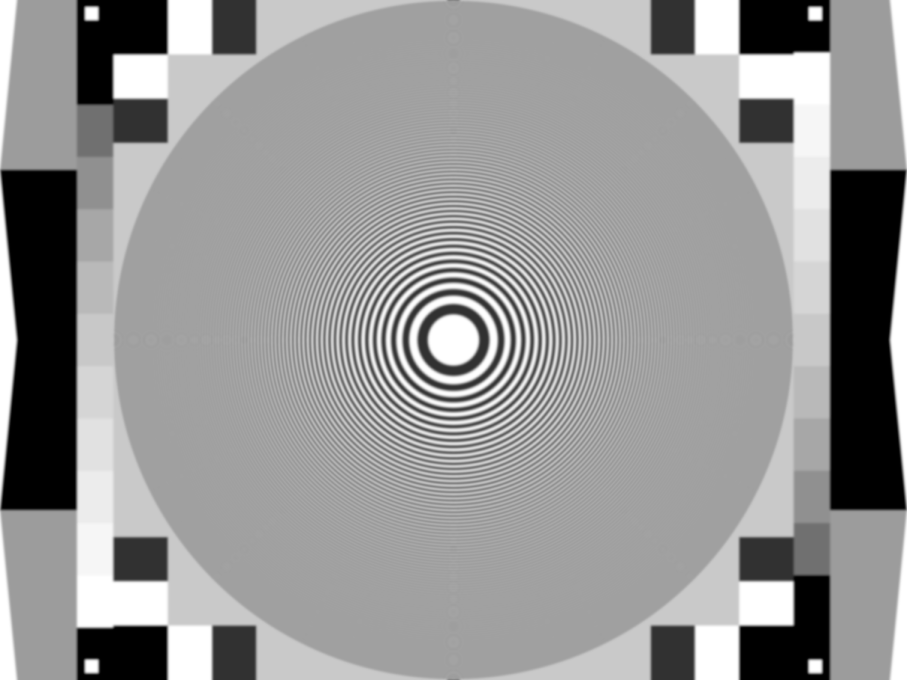

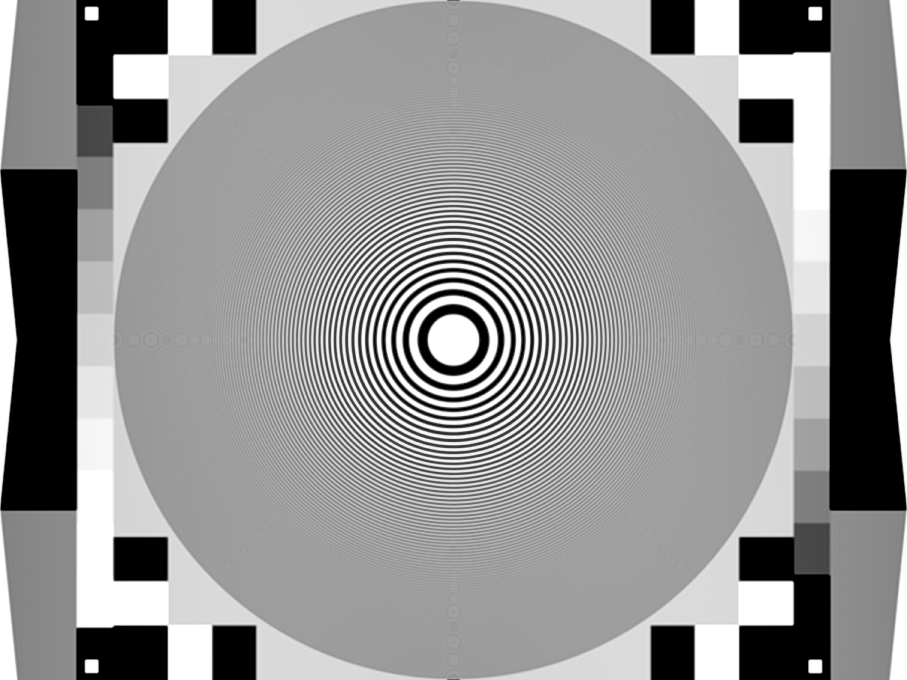

When an OLPF is used there will be no hard or square edges, instead, a gradient, closer to a sinusoid, rounded squares... You can simulate an OLPF and camera sensor (hard quantization) by Gaussian blurring the test image, then resizing the image down to say 12% using Nearest Neighbor. Testing this ZoneHardHigh.png from here: http://www.bealecorner.org/red/test-patterns/ (really brutal alias test!), you'll see that with no blurring before resizing it aliases like crazy, and as blur goes up, aliasing goes down, however the final image gets softer. In order to get rid of most of the aliasing, the blur must be very strong (12% target): Resized Nearest Neighbor (effectively what the sensor does with no OLPF): Gaussian Blur before resize (10 pixels), simulating an OLPF: Then adding post-sharpening (as many cameras do), though some aliasing starts to become more visible: Then adding local contrast enhancement (LCE, a form of unsharp masking, performed before the final sharpen operation): Thus there are definite benefits to doing post sharpening and LCE after a sufficient OLPF gets rid of most of the aliasing (vs. using a weaker OLPF which results in a lot more aliasing). Yeah the F65 is pretty excellent.

-

F65 details: https://www.abelcine.com/store/pdfs/F65_Camera_CameraPDF.pdf. The test chart validates Sony's claims: Still need to see 4K charts for the Alexa 65 and Red 8K. It does appear that so far only the F65 delivers true 4K. Regarding sine wave, square waves, and harmonics, I though you might find it interesting that you can create any possible pixel pattern by adding up all 64 of these squares (basis functions) with the appropriate weights to create any possible pixel pattern for the 8x8 square: "On the left is the final image. In the middle is the weighted function (multiplied by a coefficient) which is added to the final image. On the right is the current function and corresponding coefficient. Images are scaled (using bilinear interpolation) by factor 10×." That's how you do Fourier Synthesis with pixels (equivalent to sine waves with audio).

-

The math & science is sound- we're just getting started with computational cameras; it will get much better (already looking decent from your example).

-

If we line up the test chart just right to the camera sensor, we can get full resolution: http://www.xdcam-user.com/tech-notes/aliasing-and-moire-what-is-it-and-what-can-be-done-about-it/ And if we shift it off center we can get this: If we look at Chapman's images above, we see that while we can resolve lines at the resolution of the sensor in one limited case, in all other cases we'll get aliasing. More info including optics: http://www.vision-doctor.com/en/optic-quality/limiting-resolution-and-mtf.html. The OLPF cuts the infinite frequency problem. With the proper OLPF to limit frequencies beyond Nyquist, there should be no aliasing whatsoever. When not shooting test charts, the OLPF version will look softer vs. a non-OLPF version as false detail from aliasing will be gone. Again, I think the >2x factor and Bayer sensor camera's ability to resolve at-the-limit detail without aliasing is related to 1/4 resolution R-B photosites, which is a different problem. If the OLPF was tuned to prevent color aliasing, the image would look very soft and this is a tradeoff in most cameras. Whatever Sony is doing with the F65, it provides clean, detailed 4K without apparent aliasing from an "8K" sensor (rotated Bayer).

-

Have you edited 5D3 1080p 14-bit RAW or Red RAW vs. 1080p 10-bit DCT compressed log? The only 10-bit log camera I have access to is the C300 II (also does 12-bit RGB log). In my experience, the DCT compressed 10- and 12-bit log files have actually more post editing flexibility due to the extra DR of the C300 II. I've yet to see compression cause visible issues. Have you had similar experience between RAW and 10-bit log DCT (ProRes, XAVC, XF-AVC etc.)? So in the case of generative compression, which is lossy on the one hand (not an exact copy), it would edit the same as 10+ bit log DCT (ProRes, XAVC, XF-AVC etc.). Now here's where it gets interesting. Normally we'd opt for RAW to get every last bit of detail from the camera for VFX, especially for chroma key. Not only will generative compression not suffer from macroblock artifacts, it can be rendered at any desired resolution. It's like scanning a bitmap shape and having it converted to vector art. The vector art can then be transformed and rendered at any scale without artifacts. So even for VFX, generative compression will be much better than RAW. Maybe generative transformation is a better term, since it's effectively an improved representation (even though it's not a bit exact copy).

-

Generative compression isn't a replacement for RAW, since ultimately RAW is a finite resolution capture. So RAW loses both in being fat and inefficient with additional inherent flaws due to the Bayer sampling, and more importantly is severely limited in quality as max resolution is fixed. What generative compression will do is provide both vastly smaller files and superior image quality at the same time, as the video will be rendered to the resolution of the display device, in the same way a video game is rendered to the display. Upgrade from a 1080p monitor to 4K or even 8K, and your old content has just improved along with your new display device, for free. This is state of the art compression, and we're only getting started! https://arxiv.org/pdf/1703.01467.pdf

-

What? That's the ND for a nuke photo.

-

Start with the Panasonic 12-35 2.8: great all-around lens. Also the Voigtlander 25mm .95: fantastic character and bokeh.

-

Haha thanks for the laugh! You should try balloon juice, it has electrolytes! I think I know how Luke Wilson's character felt

-

You're right it's not about 2x or divide by 2. It's about > 2x, exactly 2x will alias. I think what's bugging you is the hard edges and infinite harmonics requirement. Since it sounds like you have a background in sound and music synthesis, let's talk about it from the point of view of sound and specifically resampling sound. In order to resample sound, we must have a signal > 2x the desired target frequency. Let's say we have a 48kHz sample and we need it at 44.1kHz. Can we just drop samples? Sure, and you know what that sounds like- new high frequency harmonics that sound bad. First we have to upsample the 48kHz sample just over 88.2kHz (add zeroes and low pass filter, use a sinc interpolator). Note that the resulting 88.2kHz signal has also been low pass filtered. Now we decimate by dropping every other sample and we have alias-free 44.1kHz. The same > 2x factor applies to camera photosite sampling images as well, and we can deal with that optically, digitally, or both. The way we get around the square wave from black/white edges is via the low-pass filter, or OLPF. So the sensor will never see a sharp discontinuous infinite harmonic edge Here are all the possible harmonics (frequencies from low to high) for an 8x8 group of pixels, from: https://en.wikipedia.org/wiki/Discrete_cosine_transform Aliasing typically occurs in the higher frequencies. The trick with the OLPF is instead of only black and white, we'll get some gray too, which helps with anti-alising. It helps to look at aliasing in general: https://www.howtogeek.com/73704/what-is-anti-aliasing-and-how-does-it-affect-my-photos-and-images/ The right A is anti-aliased by adding gray pixels in the right places on the edges. The right checkerboard is softer and alias-free. You can see that if we blur an image before resizing smaller, we'll get a better looking image. So, theoretically, a monochrome sensor, with a suitable OLPF can capture full resolution without aliasing. I think the problem with Bayer sensors is the 1/4 res color photosites. By using a >2x sensor we then have enough color information to prevent aliasing. Also, for a monochrome sensor, it might be hard to create a perfect OLPF, so have relaxed requirements for the OLPF, capturing at 2x, then blurring before downsampling to x and storing, might look as alias free as is possible. So while I see your point, in the real world it does appear that as we approach 2x, aliasing goes down (F65 vs. all lower res examples). Are there any counterexamples?

-

Have you noticed how @HockeyFan12 has disagreed with me politely in this thread, and we've gone back in forth in a friendly manner as we work through differences in ideas and perceptions, for the benefit of the community? The reason you reverted to ad hominem is because you don't have a background in mathematics, computer graphics, simulations, artificial intelligence, biology, genetics, and machine learning? That's where I'm coming from with these predictions: https://www.linkedin.com/in/jcschultz/. What's your linkedin or do you have a bio page somewhere so I can better understand your point of view? I'm not sure how these concepts can be described concisely from a point of view solely from chemistry, which appears to be where you are coming from? Do you have a link to the results of your research you mentioned? It's OK if you don't have a background in these fields, I'll do my best to explain these concepts in a general way. I used the simplest equation I am familiar with, Z^2 + C, to illustrate how powerful generative mathematics can create incredibly complex, organic looking structures. That's because nature is based on similar principles. There's an even simpler principle, based on recursive ratios: the Golden Ratio: https://en.wikipedia.org/wiki/Golden_ratio. Using this concept we can create beautiful and elegant shapes and patterns, and these patterns are used all over the place, from architecture and aesthetic design to nature in all living systems: I did leave the door open for a valid counter argument, which you didn't utilize, so I'll play this counter argument which might ultimately help bridge skepticism that generative systems will someday (soon) provide massive gains in information compression, including the ability to capture images and video in a way that is essentially resolution-less. Where the output can be rendered at any desired resolution (this already exists in various forms, which I'll show below), and even any desired frame rate. Years ago, there was massive interest in fractal compression. The challenge was and still is, how to efficiently find features and structure which can be coded into the generative system such that the original can be accurately reconstructed. RAW images are a top-down capture of an image: brute force uncompressed RGB pixels in a Bayer array format. It's massively inefficient, and was originally used to offload de-Bayering and other processing from in-camera to desktop computers for stills. That was and still is a good strategy for stills, however for video it's wasteful and expensive because of storage requirements. That's why most ARRI footage is captured in ProRes vs. ARRIRAW. 10- or 12-bit log-encoded DCT compressed footage is visually lossless for almost all uses (the exception being VFX/green-/blue-screen where every little bit helps with compositing). Still photography could use a major boost in efficiency by using H.265 algorithms along with log-encoding (10 or more bits). There is a proposed JPG replacement based on H.265 I-frames. DCT compression is also a top down method which more efficiently captures the original information. An image is broken into macro blocks in the spatial domain, which are further broken down into constituent spectral elements in the frequency domain. The DCT process computes the contribution coefficients for all available frequencies (see the link- how this works is pretty cool). Then the coefficients are quantized (bits are thrown away), and the remaining integer coefficients are further zeroed and discarded below a threshold and the rest further compressed with arithmetic coding. DCT compression is the foundation of almost all modern, commercially used still and video formats. Red uses wavelet compression for RAW, which is suitable for light levels of compression before DCT becomes a better choice at higher levels of compression, and massively more efficient for interframe motion compression (IPB vs. ALL-I). Which leads us to motion compression. All modern interframe motion compression used commercially is based on the DCT and macroblock transforms with motion vectors and various forms of predictions and transforms between keyframes (I-frames), which are compressed in the same way as a JPEG still. See h.264 and h.265. This is where things start to get interesting. We're basically taking textured rectangles and moving them around to massively reduce the data rate. Which leads us to 3D computer graphics. 3D computer graphics is a generative system. We've modeled the scene with geometry: points, triangles, quads, quadratic & cubic curved surfaces, and texture maps, bump maps, specular and light and shadow maps (there are many more). Once accurately modeled, we can generate an image from any point of view, with no additional data requirements, 100% computation alone. Now we can make the system interactive, in real-time with sufficient hardware, e.g. video games. Which leads us to simulations. In 2017 we are very close to photo-realistic rendering of human beings, including skin and hair: http://www.screenage.com.au/real-or-fake/ Given the rapid advances in GPU computing, it won't be long before this quality is possible in real-time. This includes computing the physics for hair, muscle, skin, fluids, air, and all motion and collisions. This is where virtual reality is heading. This is also why physicists and philosophers are now pondering whether our reality is actually a simulation! Quoting Elon Musk: https://www.theguardian.com/technology/2016/oct/11/simulated-world-elon-musk-the-matrix A reality simulator is the ultimate generative system. Whatever our reality is, it is a generative, emergent system. And again, when you study how DNA and DNA replication works to create living beings, you'll see what is possible with highly efficient compression by nature itself. How does all this translate into video compression progress in 2017? Now that we understand what is possible, we need to find ways to convert pixel sequences (video) into features, via feature extraction. Using artificial intelligence, including machine learning, is a valid method to help humans figure out these systems. Current machine learning systems work by searching an N-dimensional state space and finding local minima (solutions). In 3D this would look like a bumpy surface where the answer(s) are deep indentations (like poking a rubber sheet). Systems are 'solved' when the input-output is generalized, meaning good answers are provided with new input the system has never seen before. This is really very basic artificial intelligence, there's much more to be discovered. The general idea, looking back at 3D simulations, is to extract features (resolution-less vectors and curves) and generalized multi-spectral textures (which can be recreated using generative algorithms), so that video can be massively compressed, then played back by rendering the sequence at any desired resolution and any desired frame rate! I can even tell you how this can be implemented using the concepts from this discussion. Once we have photo-realistic virtual reality and more advanced artificial intelligence, a camera of the future can analyze a real world scene, then reconstruct said scene in virtual reality using the VR database. For playback, the scene will look just like the original, can be viewed at any desired resolution, and even cooler, can be viewed in stereoscopic 3D, and since it's a simulation, can be viewed from any angle, and even physically interacted with! It's already possible to generate realistic synthetic images from machine learning using just a text description! https://github.com/paarthneekhara/text-to-image. https://github.com/phillipi/pix2pix http://fastml.com/deep-nets-generating-stuff/ (DMT simulations ) http://nightmare.mit.edu/ AI creating motion from static images, early results: https://www.theverge.com/2016/9/12/12886698/machine-learning-video-image-prediction-mit https://motherboard.vice.com/en_us/article/d7ykzy/researchers-taught-a-machine-how-to-generate-the-next-frames-in-a-video

-

Balloon juice? Do you mean debunking folks hoaxing UFOs with balloons or something political? If the later, do your own research and be prepared to accept what may at first appear to be unacceptable- ask yourself why you are rejecting it when all the facts show otherwise. You will be truly free when you accept the truth, more so when you start thinking about how to help repair the damage that has been done and help heal the world. Regarding generative compression and what will someday be possible: have you ever studied DNA? Would you agree that it's the most efficient mechanism of information storage ever discovered in the history of man? Human DNA can be completely stored in around 1.5 Gigabytes, small enough to fit on a thumb drive (6×10^9 base pairs/diploid genome x 1 byte/4 base pairs = 1.5×10^9 bytes or 1.5 Gbytes). 1.5 Gbytes of information accurately reconstructs through generative decompression, 150 Zettabytes (10^21)! (1.5 Gbytes x 100 trillion cells = 150 trillion Gbytes or 150×10^12 x 10^9 bytes = 150 Zettabytes (10^21)). These are ballpark estimates, however the compression ratio is mind-boggling. DNA isn't just encoding an image, or a movie, it encodes a living, organic being. More info here. Using machine learning which is based on the neural networks of our brains (functioning similar to N-dimensional gradient-descent optimization methods), it will someday be possible to get far greater compression ratios than the state of the art today. Sounds unbelievable? Have you studied fractals? What do you think we could generate from this simple equation: Z(n+1) = Z(n)^2 + C, where Z is a complex number? Or written another way Znext = Znow*Znow + C? How about this: From a simple multiply and add, with one variable and one constant, we can generate the Mandelbrot set. If your mind is not blown from this single image from that simple equation, it gets better: it can be iterated and animated to create video: And of course, 3D (Mandelbulb 3D is free): Today we are just learning to create more useful generative systems using machine learning, for example efficient compression of stills and in the future video. We can see how much information is encoded in Z^2 + C, and in nature with 1.5Gbytes of data for DNA encoding a complete human being (150 ZettaBytes), so somewhere in between we'll have very efficient still image and video compression. Progress is made as our understanding evolves, likely through advanced artificial intelligence, to allow us to apply these forms of compression and reconstruction to specific patterns (stills and moving images) and at the limit, complete understanding of DNA encoding for known lifeforms, and beyond!

-

Imagine a meeting with Netflix executives, marketing, and lawyers, along with reps from ARRI, Red, Sony, and Panasonic, regarding the new 4K subscriptions and 4K content. Red, Sony, and Panny say "we have cameras that actually shoot 4K" and ARRI says "but but...". Netflix exec replies, we're selling 4K, not the obviously superior image quality that ARRI offers, sorry ARRI, when you produce an actual 4K camera like Red, Sony, and Panasonic, let's talk. Netflix marketing and lawyers in the background nod to exec. A while later ARRI releases the Alexa 65 with a 6.6K sensor and it's accepted (actually 3 Alev III sensors rotated 90 degrees and placed together, AKA the A3X sensor). Nyquist is > 2x sampling to capture without aliasing, e.g. sample >4K to get 2K and >8K to get 4K (along with the appropriate OLPF). 4K pixels can have max 2K line pairs- black pixel, white pixel, and so on. ARRI doesn't oversample anywhere near 2x and they alias because of it. That's the math & science. From Geoff Boyle's recent chart tests, the only cameras that showed little or no aliasing for 4K were the "8K" Sony F65 and the 7K Red (only showed 1080p on Vimeo, however small amounts of aliasing might be hidden when rendering 4K to 1080p). We can see from inspection of current cameras shooting those lovely test charts, that as sampling resolution approaches Nyquist, aliasing goes down, and as we go the other way, aliasing goes up. As predicted by the math & science, right? Since the 5D3 had eliminated aliasing, when I purchased the C300 II I was surprised to see aliasing from Canon (especially for the massive relative price difference)! The C300 II has a 4206x2340 sensor which is barely oversampled and uses a fairly strong OLPF producing somewhat soft 4K. Unlike the 1DX II 1080p, which produces fatter aliasing due to the lower resolution, it's still challenging with the C300 II 4K and fine fabrics. Shooting slightly out of focus could work in a pinch (and up post sharpening), however it will be cool when cameras have sufficient sensors to eliminate aliasing. Given how powerful and low-energy mobile GPUs are today, there's no reason in 2017 for cameras to have aliasing, other than a planned, slow upgrade path as cameras gradually approach Nyquist. Once there, what's the resolution upgrade path? Can't have that before there are 8K displays

-

That would be using all I-frames (no compressed motion deltas). To really leapfrog H.265, they've got to apply the generative encoding concept to inter-frame compression. A hybrid concept would be to use generative encoding for I-frames, and use concepts from H.265 for inter-frame encoding.

-

@HockeyFan12 I think you mean low pass filter (vs. high pass)? Anti-aliasing filter, aka OLPF = optical low pass filter. A low pass filter lets low frequencies 'pass' and cuts higher frequencies. I would code a square wave using the equation with the sgn() function here (or something not using a transcendental function if speed is critical): http://mathworld.wolfram.com/SquareWave.html. You can see how summed sinusoids can be used to recreate a square wave in the last equation in that link (Fourier Synthesis). One would not code it this way as it would be very slow! Bayer reconstruction is pretty neat math, however it doesn't 'overcome Nyquist' and actually is especially prone to color aliasing. This is a pretty cool: https://***URL removed***/articles/3560214217/resolution-aliasing-and-light-loss-why-we-love-bryce-bayers-baby-anyway. Simple link summary: more resolution = less aliasing, which means more actual resolution captured (vs. false detail from aliasing). The C300 only oversamples in green, and barely so at that (really need at least four pixels => one pixels vs. two pixels => one pixel). Actually we need more than 2x the pixels per Nyquist, exactly 2x is problematic at the max freqency (thus an OLPF can help here). The Alexa is undersampled for 2K/1080p at 2.8K and even 3.4K. The F65 (and perhaps Red) make clear what it takes to get alias-free, true 4K resolution. How much you can cheat in practice with less sensor resolution and tuned OLPFs can be tested shooting those fun charts Interestingly, Super-Resolution uses the information that is aliasing to construct real detail from multiple frames.

-

I haven't use the A6500, however if the Pocket's HD looks like it has more real resolution vs. the A6500 that means that the Pocket is storing more real detail in the output file. E.g. the A6500 is doing a form of binning etc. that results in loss of detail (information) and thus lower resolution is captured. As you've seen with the C300 I, a 4K sensor with a decent oversample to 1080p results in excellent resolution 1080p. The C300 I is a special case- it averages two sets of greens to get 1080p, and takes R & B as is, thus no deBayering takes place, and the results speak for themselves. So 'Ks' on the sensor side and how those K's are processed into the desired output do indeed make a huge difference. Best results for 1080p on the A7S II and GH4 was to shoot 4K and downsample in post (probably the A6500, too example here*, what artifacts are you seeing- is in-camera sharpening turned off?). Hasn't it been clear for years that some cameras provide much more detailed 1080p (and now 4K) than others? The standard way to test real resolution to validate (or invalidate) camera manufacturer resolution claims is to shoot boring (and controversial, lol) test charts! Like Geoff Boyle does here: https://vimeo.com/geoffboyle * this 4K video looks much better- very detailed! He's using a tripod so that helps with compression artifacts. That's one way to work around low bitrates- keep motion to a minimum. Another way is to record externally at a higher bit rate.