Everything posted by jcs

-

Good news for the Alexa 65: 3840/.58 = 6620, so the Alexa's 6560 photosite width should provide decent anti-aliased 4K.
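Here is a minimal sketch of that arithmetic. It assumes the ~0.58x Bayer rule of thumb from the line-test post, where 0.58 is the average of 1/1.5 and 1/2. The function names are hypothetical, and the factor is an empirical estimate, not a spec:

```python
# Rule of thumb from these posts (an assumption, not a standard): a Bayer
# sensor delivers roughly 0.58x its photosite width as alias-free detail.
# 0.58 is the average of the 3-pixel (1/1.5) and 4-pixel (1/2) line tests.
BAYER_FACTOR = (1 / 1.5 + 1 / 2) / 2  # ~0.583


def photosites_needed(output_width, factor=0.58):
    """Horizontal photosites needed for roughly alias-free output_width pixels."""
    return output_width / factor


def alias_free_width(photosite_width, factor=0.58):
    """Approximate alias-free output width for a given photosite width."""
    return photosite_width * factor


if __name__ == "__main__":
    print(f"factor: {BAYER_FACTOR:.3f}")
    print(f"UHD 3840 needs ~{photosites_needed(3840):.0f} photosites")
    print(f"6560 photosites give ~{alias_free_width(6560):.0f} px")
```

In the reverse direction, 6560 x 0.58 is about 3805 px, a little under 3840.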

-

Set Gain in the first node to 2.0; that will fix the black-subtract issue.

-

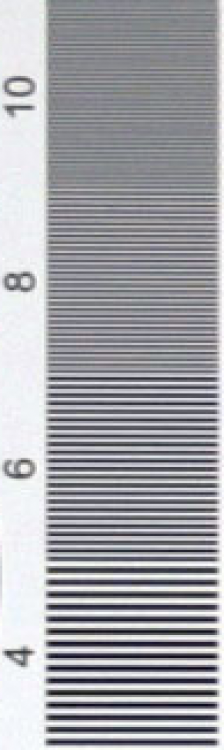

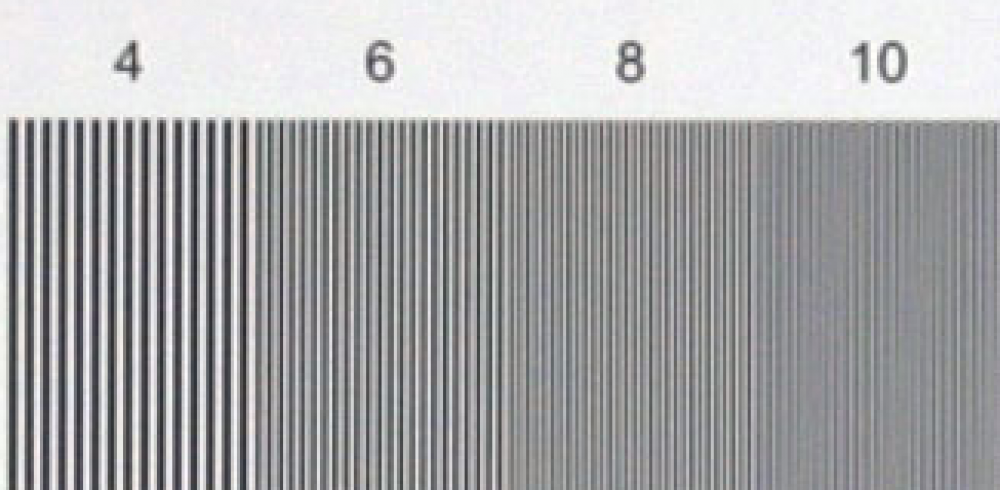

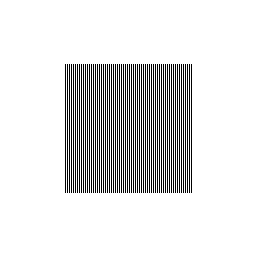

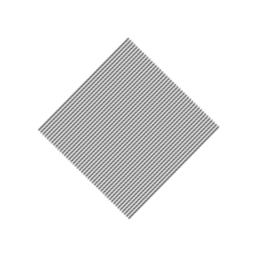

The major issue here is that the industry is a mess of confusing resolution terms. So let's set the external terms aside and keep it simple by discussing the concepts and real-world results.
What Graeme said is true only for the perfectly aligned case, as noted and agreed on previously. As soon as we move slightly out of perfect alignment, we get major aliasing. Nyquist says > 2x, and a line pair is exactly 2x. The next value is 3x. However, that's not even/symmetric, so the next is 4x (computer graphics operations tend to work on even or power-of-two boundaries for performance reasons).
Also note that the FS100 resolution chart shows similar horizontal and vertical resolution. It's a measure of sensor photosites (which become pixels) per unit of physical space (e.g. photosite pitch in micrometers, or pixels per physical mm): https://www.edmundoptics.com/resources/application-notes/imaging/resolution/ So what matters is not the total sensor width or height but the photosite density relative to the chart/object resolution in image space.
The FS100 chart crop you posted is aliasing like crazy, showing high-frequency mush along with lower-frequency folding harmonics (4 visible bands). Where does aliasing start? When can we represent clean black & white lines, and when does it get messy and start to alias? At 400, we see nice, edge-rounded clean lines (antialiased: the OLPF rounds the square wave). By 600 aliasing has started; it actually starts just past 400, perhaps at 540. From 600 to 800 aliasing is severe and we can no longer get clean lines. By the end of 800 it's highly aliased mush, approaching extinction of detail at 1000.
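The following minimal sketch makes the "folding" idea concrete. It is not taken from any camera's processing; it only shows where a sampled sinusoid ends up once it passes the Nyquist limit. Frequencies are in cycles per pixel, so one sample per pixel puts Nyquist at 0.5. The function name is hypothetical:

```python
def aliased_frequency(f, fs=1.0):
    """Apparent frequency of a sinusoid of frequency f after sampling at rate fs.

    Sampling cannot tell f apart from f + k*fs, and anything above the
    Nyquist limit (fs / 2) folds back down into the range [0, fs / 2].
    """
    f = abs(f) % fs
    return min(f, fs - f)


if __name__ == "__main__":
    # Frequencies in cycles per pixel (fs = 1 sample per pixel, Nyquist = 0.5).
    for f in (0.25, 0.4, 0.5, 0.6, 0.75, 1.0):
        print(f"{f:.2f} cycles/px -> appears as {aliased_frequency(f):.2f}")
```

Past 0.5 cycles/pixel the apparent frequency falls again (0.6 shows up as 0.4, 0.75 as 0.25). This is the kind of lower-frequency folding seen in the chart bands. At 1 cycle/pixel every sample lands at the same point in the cycle, and the pattern collapses to a constant.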
Examining horizontal resolution: again, at 400 we've got clean lines (2 black pixels with 1 gray pixel on each side, 1 or 2 white pixels, and so on). After 400 it starts to alias and we can't see clean lines anymore. By the end of 600 it's starting to blend to gray. After 800-1000 it's fat diagonal aliasing mush and very-low-contrast, high-frequency aliased lines as we approach extinction of detail. Thus the horizontal and vertical resolvable detail are about the same: just past 400 lines, perhaps 500 if we're being generous. The total output pixel width and height don't really matter. We could have a 4:3 sensor or a 2.35:1 sensor, etc., and it wouldn't matter for the chart: what matters is the density of the photosites, not the final pixel width & height. So in the real world it's clear we can never resolve single-pixel alternating lines (it's not even possible to draw them in a bitmap, as we'll discuss below). This is a Bayer pattern rather than a monochrome sensor, so that's part of the issue. It's not as much of the issue as you'd think, though, since we are only working with black & white and all pixels contribute to luminance (note that color aliasing starts after 400 as well).
So, we agree that an HD sensor can capture 1920x1080 pixels without aliasing in the perfectly aligned case, right? (Theoretically; it would be nice to see a test chart showing this possibility.) The debate is about what we need for alias-free capture. Nyquist says > 2x. We must tune the OLPF to filter out lines/detail smaller than one pixel (line) width. In practice, though, as with audio capture, we get much better results with more oversampling (analog low-pass filters, including OLPFs, are far from perfect). So the finest real-world feature should be blurred to a diameter > 1 photosite/pixel width, so that it's represented by 2 pixels minimum. In practice this isn't really enough, and ideally 3 or more pixels are needed (as seen above with the FS100 chart). At 4 pixels we're at the 'second divide by 2' (2 x 2 = 4x).
Take the alternating black and white lines you drew and rotate them a couple of degrees. Look what happens to the lines: a random jumble of pixels, with massive aliasing! We can use your favorite topic, sinusoids, to figure out how to create alternating lines that can be rotated and moved around the pixel grid without aliasing (a sensor capturing the real world has the same problem). One-pixel-wide alternating black & white lines (64 black lines): Now let's rotate them 44.4 degrees: We get massive aliasing, with lower-frequency folding harmonics similar to those on the FS100 test chart. Now let's draw lines with one center pixel and an 'antialias/sinusoid-approx' pixel on either side. The lines now use 3 pixels instead of 2 to represent the finest detail (>2x vs. =2x above, per Nyquist). 43 black anti-aliased (sinusoid-approx) lines: And let's rotate them 44.4 degrees: No more aliasing, as expected. 64/43 = 1.49 ~= 1.5, and 64/1.5 ~= 43. So we can divide by 1.5 to get the anti-aliased line resolution for line triplets (vs. line pairs) in this purely computer graphics example. In the real world, dividing by 2 works very well, e.g. the 8K F65 creating antialiased 4K. And again, as resolution drops from 8K down towards 4K, the test charts show aliasing increasing as predicted. In summary, Nyquist states >2x, 3x works well in a computer graphics test (/1.5), and 4x (/2) works well in the real world, as shown in test charts. After measuring many real-world test charts for Bayer sensors, the author of http://lagemaat.blogspot.com/2007/09/actual-resolution-of-bayer-sensors-you.html found about .58x before aliasing. That is pretty much what the 3-pixel line test predicts (where 4 pixels would be even better): 1/1.5 = .67, 1/2 = .5, (.67 + .5)/2 = .58! So .58x is an excellent predictor of the alias-free resolution possible with a Bayer sensor.
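Here is a rough 1-D sketch of the "perfectly aligned case" point. It assumes an ideal sinusoidal grating sampled once per pixel and shifted by a fraction of a pixel (rather than rotated). It measures the RMS of the sampled values, i.e. how much contrast survives. The function name and setup are illustrative assumptions, not the line-drawing test itself:

```python
import math


def sampled_rms(period_px, phase, periods=12):
    """RMS of a unit-amplitude cosine grating sampled once per pixel.

    period_px: grating period in pixels (2 = one dark + one light pixel).
    phase: sub-pixel offset of the grating relative to the pixel grid (radians).
    """
    n = period_px * periods  # sample a whole number of periods
    samples = [math.cos(2 * math.pi * x / period_px + phase) for x in range(n)]
    return math.sqrt(sum(s * s for s in samples) / n)


if __name__ == "__main__":
    phases = [k * math.pi / 8 for k in range(5)]  # 0 to 90 degrees
    for period in (2, 3, 4):
        print(period, [round(sampled_rms(period, p), 3) for p in phases])
```

At a period of 2 pixels (exactly 2x), the surviving contrast depends entirely on alignment, falling from 1.0 to 0 as the offset moves to 90 degrees. At 3 or 4 pixels per period, it stays at about 0.707 whatever the offset.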
Here's another test showing we need at least 3x real-world sampling to eliminate aliasing, as predicted by the computer graphics test: http://www.clarkvision.com/articles/sampling1/

-

@HockeyFan12 hey man, no worries, all good. Remember, there are no square waves hitting a sensor with an OLPF. Graeme's statement is flawed, and this is the root flaw: "If we think of a wavelength as a pair of lines", plus the idea that you only need one sample per pixel. You can't combine two lines/pixels into one wavelength and count that as Nyquist 2x sampling. Each pixel represents a sample, and we need at least two pixels (really 3) to represent a pixel (or line) without aliasing. Ask Graeme to provide test chart results showing a 4K sensor resolving 4K lines without aliasing (or a 2K sensor resolving 2K lines without aliasing). Why do you think his statement isn't replicated anywhere else? Have you found any 2K/HD test charts that go above 1200 lines? If his statement were correct, why would the C300 I resolve only 1K lines? What about the FS100 & F3, which also resolve only around 1K lines? If his statement were correct, they'd be resolving 2K lines, right? Consider the possibility that you are hung up on Graeme's statements regarding sinusoids, and that the real-world test chart results alone show his statements aren't accurate. Also, think about how many end-result pixels are needed to represent sinusoids vs. lines: you need gradient pixels to form the sinusoid, right? How many horizontal pixels do you need to form a sinusoid? Is it more than 1 pixel wide? If so, how is it possible to sample 2K into 2K or 4K into 4K without aliasing? That article reflects what I found when studying camera test charts. For example, the 8K F65 provides only 4K of resolution without aliasing. This is Sony's position as well. Note that Canon states ~1000 TV Lines for a 4K sensor (really 2K resolving 1K without aliasing, as per Nyquist).
Again, here are actual camera resolutions, performing as per Nyquist (not per Graeme's invalid statement), from https://www.provideocoalition.com/nab_2011_-_scce_charts/: "The chart lists line pairs per sensor height; the more traditional video measurement is “TV lines per picture height”, or TVl/ph. A “TV line” is half a line pair, so double the numbers shown to get TV lines." In no uncertain terms, this means horizontal resolution. If you are still not convinced, you can download and print your own charts for free: http://www.bealecorner.org/red/test-patterns/ (print multiple sheets and assemble them if your printer's resolution isn't high enough).
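The conversion quoted above is simple enough to write down. This small sketch uses hypothetical function names and covers only the "double the line pairs" rule from the quote:

```python
def line_pairs_to_tv_lines(line_pairs_per_height):
    """A TV line is half a line pair, so TV lines = 2 x line pairs."""
    return 2 * line_pairs_per_height


def tv_lines_to_line_pairs(tv_lines_per_height):
    """Inverse conversion: line pairs = TV lines / 2."""
    return tv_lines_per_height / 2


if __name__ == "__main__":
    print(line_pairs_to_tv_lines(540))   # -> 1080 (chart value in line pairs per height)
    print(tv_lines_to_line_pairs(1000))  # -> 500.0 (a "1000 TV Lines" spec in line pairs)
```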

-

@EthanAlexander (from PM): you can do an Unsharp Mask in Resolve with 2 nodes. The first node "doubles" the image intensity and saturation, then the next node subtracts a Gaussian-blurred copy from the "doubled" image. Mathematically it's 2*(original RGB pixels) - (blurred original RGB pixels). If you don't blur the 2nd copy, 2*original - original = original, so you can use that to make sure the operation is set up correctly. Then start blurring the 2nd copy to see the results: a small blur gives the traditional Unsharp Mask, and larger amounts perform LCE. I don't use Resolve very often (only to test it every now and then). Here's what I came up with in a couple of minutes of experimenting (maybe Resolve experts have a better way):
1. In Color, add a Serial Node.
2. Set the Gain to 2.0.
3. Add a Layer Node to this Serial Node.
4. Right-click and set the Layer Mixer Composite Mode to Subtract. The output should appear normal.
5. Add a Box Blur to the node that was newly created along with the Layer Node.
6. Set Iterations to 6 and Border Type to Replicate, then turn up Strength and see how it works: you need a lot of blur for LCE.
7. For traditional unsharp-mask sharpening, use a Gaussian blur with less strength instead of the box blur.
That should get you started (fully GPU accelerated too)!
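Here is a minimal 1-D sketch of what those two nodes compute. It assumes grayscale values and uses repeated box blurs (as in the steps above) to approximate a Gaussian. The function names are hypothetical, and this is not Resolve's internal implementation:

```python
def box_blur(row, radius, iterations=1):
    """Repeated box blur of a 1-D list with replicate borders (edges repeated).

    Several box-blur passes approximate a Gaussian blur.
    """
    n = len(row)
    out = [float(v) for v in row]
    for _ in range(iterations):
        src = out
        out = [
            sum(src[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1))
            / (2 * radius + 1)
            for i in range(n)
        ]
    return out


def two_node_unsharp(row, radius, iterations=6):
    """Node 1: gain 2.0; node 2: subtract a blurred copy of the original.

    2*x - blur(x) == x + (x - blur(x)), i.e. an unsharp mask with amount 1.0.
    """
    blurred = box_blur(row, radius, iterations)
    return [2 * x - b for x, b in zip(row, blurred)]


if __name__ == "__main__":
    edge = [0.2] * 16 + [0.8] * 16             # a simple step edge
    print(two_node_unsharp(edge, 0) == edge)   # no blur: output equals input
    print([round(v, 2) for v in two_node_unsharp(edge, 2)[12:20]])  # halo at the edge
```

Note that 2*x - blur(x) is the same as x + (x - blur(x)), a classic unsharp mask at 100% amount. Near edges the values overshoot, producing the sharpening halo.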

-

Here's a test chart of the FS100 1920x1080 camera: it looks like it does right around 1000 TV Lines. Funny that this HD test chart caps out at 1200; it might be hard to find an HD test chart that goes up to 2000. I looked at this issue 4 years ago and pretty much forgot about this post: Bayer sensors produce only 1/2 their stated resolution (based on real-world test data): http://lagemaat.blogspot.com/2007/09/actual-resolution-of-bayer-sensors-you.html

-

Do your own homework, brother. All you need are 2K and/or 4K test charts.

-

Haha, that's some indica brother! Maybe you should wager with Canon?

-

Thanks, I don't need your money. Just post your 2K or 4K chart results (online or your own).

-

I think this is just entertainment now, and maybe you are joking about the whole thing. It's not about being right or confident: you can't even draw 4K lines in a 4K bitmap without aliasing, except in the perfectly aligned condition. So I'm asking you to do something that is impossible: shoot a chart with a 4K sensor and resolve 4K lines without aliasing. If you don't believe me, prove it to yourself, either by searching online for evidence to support your belief or by shooting a 2K or 4K test chart and seeing for yourself.

-

Why isn't anyone else on the internet saying the same thing as Graeme? It's very simple: a 4K test chart showing 4K lines or bust. Or a 2K test chart showing 2K lines or bust. Lol, now I have to send you money so you can prove your point?

-

Haha, what are you smoking, dude? Did you read the NFS article stating that the F3 and C300 have the same basic resolution? Canon states 1000 TV lines (are they wrong too?), and NFS puts the F3 at 540 LPpSH = 1080 TV Lines; isn't that clear? Or are they wrong too? http://nofilmschool.com/2011/11/canon-cinema-eos-c300-4k-sensor-outputs-1080p All you have to do is produce a test chart showing a 4K sensor resolving 4K lines to prove your point.

-

Line Pairs per Sensor Height is confusing and doesn't mean what you think it does. Line Pairs per Sensor Height x 2 = TV Lines = horizontal resolution. Note that in this article, the C300 I is said to have basically the same resolution as the F3: http://nofilmschool.com/2011/11/canon-cinema-eos-c300-4k-sensor-outputs-1080p 1000+ TV Lines ~= 540 LPpSH ~= 1080 TV Lines (horizontal resolution).

-

This is wrong, especially the bold (emphasis mine), from: http://www.reduser.net/forum/showthread.php?157330-Is-this-chroma-aliasing/page2&s=73723d93a5062b3cc1c05cd4e21f54e3 Each pixel is a sample, and we need at least two samples per pixel to represent the finest detail possible without aliasing (3 pixels would be better). If Red's statements were true, you'd have no trouble finding HD sensors resolving 2000+ lines or 4K sensors resolving 4K+ lines. Where are the test charts or manufacturer spec sheets showing this? The practical limit before aliasing becomes significant is around 1000 lines for HD and around 2000 lines for 4K (horizontal resolution).

-

Maybe the weight is closer once you add the battery and memory card for the C200?

-

Do you have a 4K test chart showing 4K lines captured without aliasing (Nyquist says 2K before aliasing)? Until then, every single online technical discussion by camera/microscope companies shows Nyquist /2. That includes Canon stating that ~1000 TV Lines of resolution (horizontal pixels) is possible in 1920x1080 from the 4K Bayer array on the C300 I. Nyquist is 1920/2 = 960, so the 40+ lines beyond that will be starting to alias. Happy to review Red's description; I suspect there's a misunderstanding of terms somewhere.

-

One reason many folks don't see much of an improvement in quality from 2K/1080p to 4K is that most cameras aren't actually capturing true 4K. It's the same reason cameras that capture in 4K and downscale to 1080p can look better than cameras that shoot 1080p: the 1080p cameras (or modes) aren't actually capturing true 1080p. Per Nyquist, we need 4K photosites to capture true 1080p (Bayer sensor), and 8K to capture 4K. The 8K F65 was the first 4K camera to capture close to true 4K, and similarly the F35 used double the number of photosites to capture 1080p (actually triple horizontally). See this post for more details and images: http://www.dvxuser.com/V6/showthread.php?318627-F35-Beginners-What-is-the-sensor Note that with a 4K sensor, the Canon C300 I was rated by Canon at 1000 TV Lines, just over the theoretical limit of 960 (1920/2 = 960, where /2 is Nyquist): https://www.canon-me.com/for_home/product_finder/digital_cinema/cinema_eos_cameras/eos_c300/specification.aspx. So "1000 or more TV Lines" includes aliasing, and indeed the C300 I aliases on fine detail. The Sony F3 also has excellent 1080p, getting 540 line pairs or 1080 TV Lines. This is horizontal pixel resolution; 1080 might be confusing since it's the same as the native vertical resolution, but that's just a coincidence unless they used an OLPF to get that specific value: Thus it may be the case that until more 4K cameras have 8K sensors, the jump in quality from 2K/1080p to 4K won't be as dramatic for most people. It's the same pattern as before, when people didn't realize their 1080p wasn't true 1080p until they shot in 4K and downsampled to 1080p in post.

-

@tomekk @tupp frequency separation is very similar to what MSDE does. Frequency separation uses a high-pass filter and Gaussian blur in the spatial domain: https://fstoppers.com/post-production/ultimate-guide-frequency-separation-technique-8699 Wavelets operate in the frequency domain, as do Fourier transforms (including the DFT/DCT), with different pros/cons; wavelets also keep track of spatial position. For wavelet frequency filtering, an image is converted into wavelet coefficients, the desired frequencies are filtered, and then the coefficients are converted back into an image. Note that no compression or decompression takes place (the same is true of a DCT and inverse DCT, which can also be used for frequency filtering). The GIMP plugin performs a wavelet transform, which allows frequencies to be decomposed (vs. decompressed) and filtered before converting back into an image. Yeah, it's puzzling that Photoshop doesn't provide frequency-based filtering options, and that no one has made such plugins for Photoshop/Premiere/AE/FCPX/Resolve etc. For retouching stills, I haven't used frequency separation since I got Portrait Pro: http://www.portraitprofessional.com/.
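The minimal 1-D sketch below illustrates the difference between the two approaches. It shows a spatial-domain frequency separation and a single level of the Haar wavelet, the simplest wavelet transform. As a simplifying assumption, a box blur stands in for the Gaussian. This is not the GIMP plugin's algorithm, and the function names are hypothetical:

```python
import math


def box_blur(row, radius):
    """Box blur with replicate borders; stands in for the Gaussian blur."""
    n = len(row)
    return [
        sum(row[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1))
        / (2 * radius + 1)
        for i in range(n)
    ]


def frequency_separation(row, radius):
    """Spatial-domain split: low = blurred copy, high = original - low."""
    low = box_blur(row, radius)
    high = [x - l for x, l in zip(row, low)]
    return low, high


def haar_forward(row):
    """One level of the orthonormal Haar wavelet transform (len(row) must be even)."""
    r2 = math.sqrt(2)
    approx = [(row[i] + row[i + 1]) / r2 for i in range(0, len(row), 2)]
    detail = [(row[i] - row[i + 1]) / r2 for i in range(0, len(row), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Exact inverse of haar_forward: no information is discarded."""
    r2 = math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / r2, (a - d) / r2])
    return out


if __name__ == "__main__":
    row = [0.1, 0.4, 0.35, 0.9, 0.8, 0.2, 0.25, 0.6]
    low, high = frequency_separation(row, 1)
    print(max(abs(l + h - x) for l, h, x in zip(low, high, row)))  # ~0: layers recombine
    approx, detail = haar_forward(row)
    filtered = haar_inverse(approx, [0.5 * d for d in detail])  # soften finest detail
    print([round(v, 3) for v in filtered])
```

Both splits are exactly reversible: low + high reproduces the original, and haar_inverse undoes haar_forward. This is the "decomposed, not decompressed" point. Filtering happens by changing a layer or a set of coefficients before recombining.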

-

You're right, if you want 28,321,819+ views you gotta shoot SD! (shot on the 5D Mark 3 & green screen)

-

Overall ARRI is my favorite cinema camera brand. However, I haven't seen anything from ARRI that blew me away the way Lucy and Oblivion did with the F65. The F65 beats ARRI on test charts, and I would love to see a people/skin-tone head-to-head test. Imagine 8K => 4K processing in a small camera with IBIS, 10-bit 422, and DPAF: that's all possible today, without a fan! It's held back for business reasons. The only way to demand anything is to not give them money for crappy products.

-

I wouldn't be surprised: upscaling, adding noise, and sharpening alone work pretty well. I'm pretty sure PP CC (and AE?) use bicubic scaling. Yedlin's tools (Nuke?) may also provide more advanced (and expensive) scalers such as lanczos-3. Along with sharpening, lanczos-3 performed best in this 2014 state-of-the-art study: https://hal.inria.fr/hal-01073920/document. Surprisingly, it performed better than super-resolution (which creates real extra detail from aliasing information). I think Yedlin could have made a better point by shortening the videos dramatically. They're way too long and rambly, especially if the goal was to appeal to studio execs and producers with short attention spans. I jumped around his videos and didn't see Local Contrast Enhancement applied or acutance mentioned (perhaps I missed it?), which should have been paramount in such a test. It really felt like an advert for, and a defense of, ARRI's low-res sensors, including the Alexa 65, which still isn't capable of True 4K (only ~6.6K Bayer photosites, where 8K is needed). I love ARRI's color, but the F65 wins for ultimate color and real 4K detail (see Lucy, Oblivion). From https://hal.inria.fr/hal-01073920/document: This is my experience as well, so I fundamentally disagree with Yedlin. True 4K capture, with real detail, will always be better than upscaling and fancy tricks like MSDE. Will most people notice or care? That's a different argument.
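For reference, a Lanczos-3 kernel is sinc(x) * sinc(x/3) for |x| < 3 and zero elsewhere. The minimal 1-D upscaling sketch below assumes replicate edges and weight normalization. It is not Nuke's or Premiere's scaler, and it only handles upscaling (downscaling needs a stretched kernel). The names are hypothetical:

```python
import math


def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def lanczos(x, a=3):
    """Lanczos-a kernel (a = 3 gives 'lanczos-3'): sinc(x) * sinc(x / a) for |x| < a."""
    return sinc(x) * sinc(x / a) if abs(x) < a else 0.0


def lanczos_upscale(row, new_len, a=3):
    """Resample a 1-D row to new_len >= len(row) samples with a Lanczos-a kernel."""
    n = len(row)
    scale = n / new_len
    out = []
    for i in range(new_len):
        center = (i + 0.5) * scale - 0.5  # output pixel center in input coordinates
        first = math.floor(center) - a + 1
        acc = weight_sum = 0.0
        for j in range(first, first + 2 * a):
            w = lanczos(center - j, a)
            acc += w * row[min(max(j, 0), n - 1)]  # replicate edges
            weight_sum += w
        out.append(acc / weight_sum)  # normalize so flat areas stay flat
    return out


if __name__ == "__main__":
    row = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
    print([round(v, 3) for v in lanczos_upscale(row, 12)])
```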

-

Regarding Sony & Panasonic vs. Canon for post-sharpening: Canon DSLRs are simply softer, and the finest detail is gone, either from the OLPF or from sensor binning/processing. You can see this when studying resolution charts. Canon C-series cameras (except the 1DC) are all very sharp. However, they also alias like crazy on high-frequency images (fabric, brick, etc.). The 5D3 has practically no aliasing, but it's very soft. Part of the 5D3 H.264 softness comes from low-quality in-camera processing: we get much more detailed results when using ML RAW with de-Bayering and sharpening in post. Part of the Canon DSLR video softness may also be related to business reasons: protecting the C-line. As was noted in the Netflix 4K thread, only the 8K F65 produces True(-ish) 4K; everyone else is cheating (except perhaps Red 8K). This is easily observed by examining the test charts and aliasing. Cameras that are 'cheating' can appear sharp, but that's partially from aliasing (note the F65 is razor sharp & detailed with no visible aliasing!). Trying to sharpen soft, aliased footage in only the high frequencies can look ugly, as you've noticed. So what I did with MSDE (not a standard term; I made it up) was sharpen the high frequencies only so much (otherwise it gets ugly). Then I went to a lower frequency and sharpened more, and finally to an even lower frequency for the final sharpen. Here 'sharpen' means contrast enhancement: amplifying differences in pixels and groups of pixels. Sharpening in the normal sense of the word is contrast enhancement at the highest frequencies only. Adding pixel-level noise first creates the highest possible frequency information for texture. I tried adding noise later and it didn't work as well: even more noise was required to see the effect. When we add noise we are increasing acutance (not real resolution), but we are also reducing the signal-to-noise ratio, so we want to use as little as possible.
To my eye, the results of this test look a lot like film, vs. the typical Sony & Panasonic video look, don't you agree? I think one of the reasons film looks the way it does is the acutance resulting from the chemical process, plus zero aliasing. Grain provides texture, so film has that somewhat soft and detailed look at the same time. MSDE is based on spatial-domain operations, but it's also possible to perform detail enhancement in the frequency domain using the discrete cosine and wavelet transforms (DCT & DWT): https://link.springer.com/chapter/10.1007%2F978-3-642-01209-9_13. It's not clear why this isn't used more; it could be patent related. New technologies based on feature extraction (generative processing) will be able to figure out generative structures and re-render them at any resolution. Genuine Fractals made progress in this area a few years ago: https://blog.codinghorror.com/better-image-resizing/. While its results were 'sharper', they weren't good enough vs. bicubic, Lanczos, etc. The MSDE technique only needs noise, convolution sharpen (or perhaps Unsharp Mask with a width around .5-1.0), and Unsharp Mask. It will work in any NLE where these effects are available (natively or via plugin), and it's free.
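As a rough illustration of frequency-domain detail enhancement, the 1-D sketch below boosts the higher DCT coefficients and transforms back. This is a generic coefficient-gain approach, not the method from the linked paper. The cutoff and gain parameters and the function names are hypothetical:

```python
import math


def dct(x):
    """Orthonormal DCT-II, computed directly (O(N^2); fine for a demo)."""
    n = len(x)
    return [
        math.sqrt((1 if k == 0 else 2) / n)
        * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]


def idct(c):
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(c)
    return [
        sum(
            math.sqrt((1 if k == 0 else 2) / n) * c[k] * math.cos(math.pi * (i + 0.5) * k / n)
            for k in range(n)
        )
        for i in range(n)
    ]


def dct_detail_boost(x, cutoff, gain):
    """Multiply DCT coefficients at index >= cutoff by gain, then transform back."""
    coeffs = dct(x)
    boosted = [c * gain if k >= cutoff else c for k, c in enumerate(coeffs)]
    return idct(boosted)


if __name__ == "__main__":
    row = [0.2, 0.25, 0.3, 0.5, 0.7, 0.75, 0.8, 0.8]
    print([round(v, 3) for v in dct_detail_boost(row, cutoff=4, gain=1.5)])
```

Only coefficients at or above the cutoff are scaled, so the average level (the DC term) is unchanged. Practical implementations usually work on 2-D blocks rather than a single row.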

-

@EthanAlexander thanks, yeah, I've been mentioning LCE for a while and thought I'd expand on the concept and show how it's done. I'd use the same settings for 4K and make minor tweaks as desired. Sharpen is typically implemented as a convolution sharpen, where surrounding pixels are used to increase high-frequency detail (by enhancing differences). Unsharp Masking works by subtracting a blurred copy (reducing low frequencies: a high-pass filter), and it has a variety of uses depending on the radius. FCPX seems to have a hybrid sharpen, so a plugin that provides PP/AE-style Sharpen and Unsharp Mask is probably needed for FCPX. AE CC test: the same soft 1DX II 1080p file (no stabilization, so a wider shot), 4K comp. It was resized to 2K before upload: I tried uploading 4K, but the website resized it to 2K, so I uploaded a Photoshop 4K-to-2K resize instead:
AE Settings:
Noise: 2%, color, no clipping
Sharpen: 50
Unsharp Mask: Amount 41, Radius 5
Unsharp Mask: Amount 50, Radius 300
AE's Sharpen appears to have a bug: edges are sharpened without repeat, leaving a thin line around the border. Use Transform / Scale to fix it (in this case 200% became 201% (2K to 4K scale)).
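The minimal 1-D sketch below shows the difference between the two effects. It assumes a common textbook 3-tap sharpen kernel [-s, 1+2s, -s] and a box-blur unsharp mask. AE's actual Sharpen kernel isn't documented here, and mapping AE's "Amount 41" to 0.41 is an assumption. The names are hypothetical:

```python
def convolve_replicate(row, kernel):
    """1-D convolution with an odd-length symmetric kernel and replicate borders."""
    n, r = len(row), len(kernel) // 2
    return [
        sum(kernel[k] * row[min(max(i + k - r, 0), n - 1)] for k in range(len(kernel)))
        for i in range(n)
    ]


def convolution_sharpen(row, strength):
    """3-tap sharpen: x + strength * ((x - left) + (x - right))."""
    return convolve_replicate(row, [-strength, 1 + 2 * strength, -strength])


def unsharp_mask(row, amount, radius):
    """x + amount * (x - blur(x)) using a box blur of the given radius."""
    width = 2 * radius + 1
    blurred = convolve_replicate(row, [1 / width] * width)
    return [x + amount * (x - b) for x, b in zip(row, blurred)]


if __name__ == "__main__":
    edge = [0.3] * 12 + [0.7] * 12
    print([round(v, 3) for v in convolution_sharpen(edge, 0.5)[9:15]])
    print([round(v, 3) for v in unsharp_mask(edge, 0.41, 5)[6:18]])
```

The 3-tap kernel only looks at immediate neighbors, so it acts on the finest detail. The unsharp mask's reach grows with its radius, which is what lets a radius-300 pass behave as LCE.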

-

Slow-mo shootout - which camera gives the most detail at 120fps?

jcs replied to Andrew - EOSHD's topic in Cameras

This will work with all cameras, especially the soft-ish Canon 1080p. To fix aliasing we need Super Resolution, which uses aliasing information to create new, real detail (not trivial, but perhaps it will be available someday).

-

Sometimes we need to enhance the detail of a shot: a very soft lens, slightly out of focus, slow motion, post cropping (for story/emotion or after stabilization), and so on. Most are familiar with the sharpen effect and the unsharp masking effect. We can combine both effects, and also use unsharp masking to create a local contrast enhancement effect. Canon 1DX II and Canon 50mm 1.4 at 1.4, 1080p (Filmic Skin picture style):
Multi-spectral Detail Enhancement (let's call it MSDE, based on the physics of Acutance):
1. Fine noise grain: adds texture and increases the perception of detail (Noise effect: 2%, color, not clipped).
2. High-frequency sharpening: in PP CC this is called Sharpen (as a standalone effect) or done via Lumetri/Creative/Sharpen (as used here: 93.4).
3. Mid-frequency sharpening: Unsharp Mask effect with amount 41 and a radius of 5.
4. Low-frequency sharpening (Local Contrast Enhancement or LCE): Unsharp Mask effect with amount 50 and a radius of 300.
While this may be a bit too sharp/detailed for some, it illustrates MSDE, and one can add detail to taste using this technique. Note that we didn't use a contrast effect or curves to achieve this look. MSDE can also be used to improve HD-to-4K upscales: apply it after upscaling. It's also a great way to use Canon's soft-ish 1080p along with DPAF (since DPAF isn't currently available in any other cameras on the market). The GH5 is the new kid on the block with excellent detail. However, Canon still looks more filmic to me and has excellent AF. Someday Adobe will GPU-accelerate their Unsharp Mask effect (it's a trivially easy effect to code, too!), so this can easily run in real time while editing.
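The sketch below puts the four steps together as a hypothetical 1-D version of the MSDE chain. It is not Premiere's processing, and several mappings are assumptions:
- The noise is uniform +/-0.02.
- A radius-1 unsharp mask stands in for the high-frequency Sharpen (the Lumetri value of 93.4 is not mapped).
- Amounts are taken as percent/100.
- Box blurs replace Gaussian ones.

```python
import random


def box_blur(row, radius):
    """Box blur with replicate borders, using a running (prefix) sum."""
    padded = [row[0]] * radius + list(row) + [row[-1]] * radius
    prefix = [0.0]
    for v in padded:
        prefix.append(prefix[-1] + v)
    width = 2 * radius + 1
    return [(prefix[i + width] - prefix[i]) / width for i in range(len(row))]


def unsharp_mask(row, amount, radius):
    """Contrast enhancement at the scale set by radius: x + amount * (x - blur)."""
    blurred = box_blur(row, radius)
    return [x + amount * (x - b) for x, b in zip(row, blurred)]


def msde(row, noise=0.02, sharpen=0.5, mid=(0.41, 5), low=(0.50, 300), seed=0):
    """Hypothetical 1-D sketch of the MSDE chain: noise, then high/mid/low sharpening."""
    rng = random.Random(seed)  # seeded so results are repeatable
    out = [x + rng.uniform(-noise, noise) for x in row]  # 1. fine grain first
    out = unsharp_mask(out, sharpen, 1)                  # 2. high frequencies
    out = unsharp_mask(out, *mid)                        # 3. mid frequencies
    out = unsharp_mask(out, *low)                        # 4. low frequencies (LCE)
    return out


if __name__ == "__main__":
    edge = [0.3] * 1000 + [0.7] * 1000
    result = msde(edge, noise=0.0)
    print([round(v, 3) for v in result[995:1005]])
```

Running it on a step edge with noise=0 shows the edge contrast growing at each scale. Values can overshoot past 0 and 1, so a real pipeline would clip them for display.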