KnightsFan

Members

Posts: 1,382


Everything posted by KnightsFan

-

ProRes is built for editing speed, whereas H.265 was designed to maximize quality for low-bitrate streaming, especially at 4K and 8K. I knew that at some lower bitrate H.265 would retain more data than ProRes, but I was surprised that it does so well with just 1/5 the data. Of course, it took 6x longer to encode! I would love to see your results if you publish them. Keep in mind that not all encoders are equal, and H.265 has a LOT of options. ProRes is easier in that regard, as you just pick from Proxy, LT, Normal, HQ, and XQ, with predictable size and quality. Edit: the difference in detail between ProRes HQ (180 Mb/s) and the H.265 file is negligible. Also, I didn't see any real difference between ProRes and DNxHD at equivalent bitrates.
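To illustrate how small the ProRes choice space is, here is a minimal Python sketch that maps each ProRes flavour to the `-profile:v` value of FFmpeg's `prores_ks` encoder (0 = Proxy through 5 = 4444 XQ). The helper name `prores_cmd`, the file names, and the pixel format chosen per profile are my own assumptions, not settings from the test.

```python
# Map ProRes flavours to FFmpeg prores_ks "-profile:v" values.
# Assumption: 422 flavours get 10-bit 4:2:2, 4444 flavours get 10-bit 4:4:4.
PRORES_PROFILES = {
    "proxy": (0, "yuv422p10le"),
    "lt": (1, "yuv422p10le"),
    "standard": (2, "yuv422p10le"),  # a.k.a. "Normal" / SQ
    "hq": (3, "yuv422p10le"),
    "4444": (4, "yuv444p10le"),
    "4444xq": (5, "yuv444p10le"),
}


def prores_cmd(src: str, dst: str, flavour: str) -> list[str]:
    """Build an ffmpeg argument list for one ProRes flavour (hypothetical helper)."""
    profile, pix_fmt = PRORES_PROFILES[flavour]
    return [
        "ffmpeg", "-i", src,
        "-c:v", "prores_ks",
        "-profile:v", str(profile),
        "-pix_fmt", pix_fmt,
        dst,
    ]


if __name__ == "__main__":
    # Print one command per flavour; nothing is executed.
    for name in PRORES_PROFILES:
        print(" ".join(prores_cmd("Reference.mov", f"ProRes_{name}.mov", name)))
```

With libx265, by contrast, CRF, preset, GOP structure and the many `-x265-params` settings all move size and quality independently.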

-

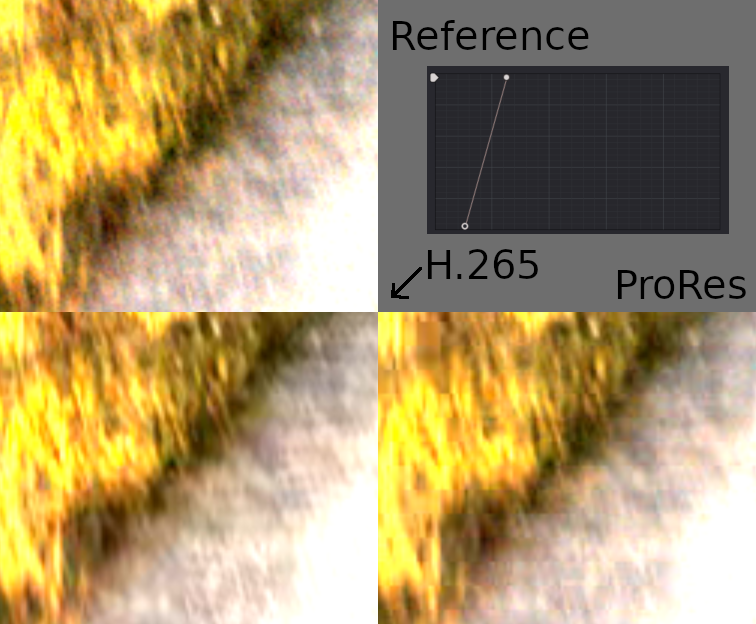

Spoilers for anyone who wants to do a blind test! It turns out that A is ProRes and B is H.265.

As @Deadcode points out, B (H.265) has noticeably more detail. However, the ProRes file retains a tiny bit more of the chroma noise from the extreme shadows of the original video. As you can see in the 300% crop, all that sparkly noise is simply gone in the H.265 file, and while the ProRes has some obvious blockiness, you can still faintly see the noise. This is of course a VERY extreme grade (see the curve from Resolve). With extreme grades in the other direction, the ProRes looks better right up until the VERY extreme, when its blockiness shows.

As I said before, encoders have many options, so this doesn't mean much for cameras. However, if you are uploading to a site with file size limits, H.265 is a good option. Also, as @OliKMIA pointed out, this is not a comprehensive test: it's 3 seconds of HD, and it only looks at one scenario. This shot is quite stable, so the interframe flavor of H.265 that I used has an easier time; shakycam will reduce the quality of H.265.

Furthermore, the encode time for ProRes was significantly shorter: 11 s vs. 68 s. I could have used a faster preset for H.265, which would speed up encoding at the expense of quality (my guess is that cameras, tasked with real-time encoding, are not as good as the preset I used). On the other hand, I have a 2013 CPU. Perhaps newer CPUs with hardware encoding could narrow the gap? I'm not sure.

If you want to look at the original files (before I turned them into 380 MB monsters!), here they are. One final note: I accidentally encoded both with AAC audio, so ~6 KB of each file's size is an empty audio track.

ProRes: https://drive.google.com/open?id=102Ivc9Xa1Z7mPzCK8TgTqwh2GExMPOkZ
H.265: https://drive.google.com/open?id=1NxMofYvrHcP6DHx5VECRwMNw8qA4KXOD
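The speed/quality trade-off between x265 presets can be measured rather than guessed. Below is a minimal Python sketch, assuming `ffmpeg` with libx265 is on your PATH; the function names and output file names are hypothetical, the CRF 20 and 10-bit 4:2:2 settings mirror the test, and `-an` drops audio so no empty AAC track sneaks in.

```python
import subprocess
import time

# A subset of real x265 preset names, fastest to slowest.
PRESETS = ["fast", "medium", "slow", "slower"]


def x265_cmd(src: str, dst: str, preset: str, crf: int = 20) -> list[str]:
    """ffmpeg/libx265 command mirroring the test settings (hypothetical helper)."""
    return [
        "ffmpeg", "-y", "-i", src,
        "-c:v", "libx265", "-crf", str(crf), "-preset", preset,
        "-pix_fmt", "yuv422p10le",
        "-an",  # no audio stream at all
        dst,
    ]


def time_command(cmd: list[str]) -> float:
    """Run a command to completion and return wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


def sweep_presets(src: str) -> dict[str, float]:
    """Encode src once per preset and return encode time per preset."""
    return {p: time_command(x265_cmd(src, f"H265_{p}.mov", p)) for p in PRESETS}
```

Calling `sweep_presets("Reference.mov")` and then comparing the resulting file sizes (and PSNR against the reference) would show how much quality a faster preset gives up on a given machine.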

-

@thebrothersthre3 I find them to be equal for all practical purposes, even with extreme grading (by extreme I mean waaay out of the realm of usefulness). There are differences, but neither seems more accurate. I haven't tried green screening, and that would be an interesting test, but I don't have a RAW camera, so I'm limited in the scenarios I can test. And naturally it defeats the purpose of the test if I start with anything less than a RAW file.

Interestingly, despite my having a hard time making any substantive distinction between the two, ProRes has a higher PSNR (peak signal-to-noise ratio) than H.265 at this compression level. H.265 needs to get up to around 50% of the ProRes bitrate before the average PSNR is the same, and even then, its PSNR on I-frames is higher than ProRes's, but its PSNR on P- and B-frames is lower. That's to be expected. I could also push the preset even slower, or use two-pass encoding, for better results on H.265.

No, I did:

1. RAW -> uncompressed RGB 444
2. Uncompressed -> H.265 -> uncompressed
3. Uncompressed -> ProRes -> uncompressed

I did it this way so that I would not be limited by Resolve's H.265 encoder, and so that I could run PSNR tests against the uncompressed file without having to futz with debayering messing up the PSNR comparison. I did a short clip to keep the file size manageable at only 380 MB each. I originally did 4K, but I figured no one wanted to download that! If there is interest, I am happy to do more extensive examples.
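For anyone repeating the PSNR comparison, here is a minimal NumPy sketch of the standard definition, PSNR = 10·log10(MAX² / MSE), with MAX = 1023 assumed for 10-bit samples. It also averages per-frame PSNR by frame type; how you obtain the frame types is up to you (ffprobe's `-show_frames` reports a `pict_type` per frame, and FFmpeg's `psnr` filter is an alternative to rolling your own). Function names are hypothetical.

```python
import math
from collections import defaultdict

import numpy as np


def psnr(reference, distorted, max_value: float = 1023) -> float:
    """PSNR in dB between two same-shaped frames (10-bit max value by default)."""
    ref = np.asarray(reference, dtype=np.float64)
    dist = np.asarray(distorted, dtype=np.float64)
    mse = np.mean((ref - dist) ** 2)
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_psnr_by_frame_type(frames) -> dict:
    """frames: iterable of (frame_type, psnr_db) pairs, e.g. ("I", value)."""
    buckets = defaultdict(list)
    for frame_type, value in frames:
        buckets[frame_type].append(value)
    return {ft: sum(vals) / len(vals) for ft, vals in buckets.items()}
```

Splitting the average by I/P/B type is what exposes the pattern described above, where an overall average can hide frame-type differences.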

-

I did a quick comparison between ProRes and H.265 encoding and thought I'd share the results with everyone. I grabbed some of Blackmagic's 4.6K RAW samples and picked a 3-second clip. In Resolve, I applied a LUT and then exported an uncompressed 1080p 10-bit RGB 444 file. This is my reference video. From this reference file, I encoded two clips, one H.265 and one ProRes SQ, which I compared to each other and to the reference video. The reference video (which I did not upload) was 570 MB.

One of these files was created from the reference file using `ffmpeg -i Reference.mov -c:v libx265 -crf 20 -preset slower -pix_fmt yuv422p10le H265.mov`. Its file size is 7.81 MB (1.4% of the reference).

The other file was created using `ffmpeg -i Reference.mov -c:v prores_ks -profile:v 2 ProRes.mov`. Its file size is 44 MB (7.7% of the reference, or roughly 5.6x the size of the H.265 file).

In order to keep the comparison blind, I then converted both the ProRes and H.265 files to uncompressed 10-bit 422, so you shouldn't be able to tell which is which from the metadata, file size, playback speed, etc. You can download these files (380 MB each), do extreme color grades or whatever stress tests you wish, and compare the quality difference yourself.

https://drive.google.com/open?id=1Z5iuNkVUCM9BgygGkYRXizzr6XnSXDK7
https://drive.google.com/open?id=1JHkmDKZU4qdS7qO_C0NwYH2xkey0uz7C

I'd love to hear your thoughts, or whether anyone would be interested in further tests. Keep in mind that there are many different settings for H.265, so this test doesn't really have implications for camera quality, since we don't know what the cameras' encoder settings are. However, it could have implications for intermediate files or deliverables, especially for sites with file size limits.
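The size figures above can be turned into bitrates with a few lines of arithmetic. This Python sketch assumes MB means decimal megabytes (10^6 bytes), treats the clip as exactly 3 seconds, and assumes 24 fps for the uncompressed estimates (the frame rate is not stated in the post); it ignores container and audio overhead.

```python
# Figures quoted in the post; MB assumed to be decimal (10**6 bytes).
REFERENCE_MB = 570.0
DURATION_S = 3.0  # "a 3 second clip" (approximate)
ENCODED_MB = {"H.265": 7.81, "ProRes SQ": 44.0}


def bitrate_mbps(size_mb: float, duration_s: float) -> float:
    """Average bitrate in megabits per second (8 bits per byte)."""
    return size_mb * 8 / duration_s


def uncompressed_mb(width: int, height: int, bits_per_pixel: int,
                    fps: float, duration_s: float) -> float:
    """Raw video payload in MB, ignoring container and audio overhead."""
    return width * height * bits_per_pixel * fps * duration_s / 8 / 1e6


for name, size in ENCODED_MB.items():
    print(f"{name}: {bitrate_mbps(size, DURATION_S):.1f} Mb/s, "
          f"{100 * size / REFERENCE_MB:.1f}% of reference")
print(f"ProRes/H.265 size ratio: {ENCODED_MB['ProRes SQ'] / ENCODED_MB['H.265']:.2f}")

# 10-bit RGB 4:4:4 = 30 bits/pixel; 10-bit 4:2:2 = 20 bits/pixel; 24 fps assumed.
print(f"RGB 444 estimate: {uncompressed_mb(1920, 1080, 30, 24, DURATION_S):.0f} MB")
print(f"422 estimate: {uncompressed_mb(1920, 1080, 20, 24, DURATION_S):.0f} MB")
```

Under those assumptions this gives about 20.8 Mb/s for H.265 and 117.3 Mb/s for ProRes SQ (a size ratio of about 5.63), and uncompressed estimates of roughly 560 MB and 373 MB, in the same ballpark as the 570 MB reference and the 380 MB blind-test files.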

-

C5D puts the Alexa at 14 stops using their SNR = 2 measurement. I agree. But you can't blame Z Cam when everyone from Sony to Blackmagic exaggerates their DR. Only Arri has the godlike status that allows them to be honest and still sell products.
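Threshold-based dynamic range can be sketched as the number of stops between sensor saturation and the signal at which SNR falls to a chosen threshold (2 here, matching the post). This is a simplified Python model, not CineD's actual procedure: it assumes photon shot noise plus Gaussian read noise, in electrons, and the function names and any full-well or read-noise values you feed it are hypothetical.

```python
import math


def snr(signal_e: float, read_noise_e: float) -> float:
    """SNR of a flat patch: signal over shot noise plus read noise (electrons)."""
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2)


def min_signal_for_snr(read_noise_e: float, threshold: float = 2.0) -> float:
    """Smallest signal whose SNR equals `threshold` under the model above.

    Solving S / sqrt(S + r^2) = t gives S^2 - t^2 S - t^2 r^2 = 0, whose
    positive root is S = (t^2 + sqrt(t^4 + 4 t^2 r^2)) / 2.
    """
    t2 = threshold ** 2
    return (t2 + math.sqrt(t2 * t2 + 4 * t2 * read_noise_e ** 2)) / 2


def dynamic_range_stops(full_well_e: float, read_noise_e: float,
                        threshold: float = 2.0) -> float:
    """Stops between saturation (full well) and the SNR-threshold signal."""
    return math.log2(full_well_e / min_signal_for_snr(read_noise_e, threshold))
```

A lower threshold (SNR = 1) yields a bigger number for the same sensor, which is one reason DR figures from different sources are hard to compare. A 2-stop gap is a factor of 2² = 4 in linear signal.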

-

Z Cam E2 will have ONE HUNDRED AND TWENTY FPS in 4K??

KnightsFan replied to IronFilm's topic in Cameras

It's actually been in and out of stock a few times at B&H.

-

If the signal is lower, the same noise will be more apparent. Or perhaps Nikon is doing some automatic corrections as suggested above.

-

Z Cam E2 will have ONE HUNDRED AND TWENTY FPS in 4K??

KnightsFan replied to IronFilm's topic in Cameras

I don't know about holding back, but I think it's all about priorities. Z Cam is a bleeding-edge company. They make 360 cameras and are actively working on integrating AI into their cameras. Z Cam has a smaller community, and many owners at this point expect bugs and workarounds; it's the price of using bleeding-edge gear.

Blackmagic is focused on bringing cinematic imagery at a low cost: they care more about color science and integration into pro workflows than about high frame rates and next-gen tech. Their target audience is more likely to have learned on film than Panasonic's or Z Cam's. The P4K is also significantly cheaper than the other cameras; the fact that it even competes spec-wise is impressive.

Panasonic is orders of magnitude larger than either company and needs to compete with the other giants (Canon, Sony, Nikon), both today and tomorrow. Their products need to have near-100% reliability and be easy for consumers to use. A single bug could kneecap initial reactions to a product, permanently damaging their reputation. Why spend the R&D money on 4K 120 if Sony isn't, when that money could go towards QA?

Of those three companies, it seems to me that Z Cam has the most incentive to innovate with technology. It wouldn't surprise me one bit if Z Cam ends up with the best specs.

-


I haven't seen any third-party tests, but my guess is that they are exaggerating by 2 stops, like most manufacturers. Or, to look at it another way, Arri understates by 2 stops. It's kind of like Canon claiming 15 stops for the C300, although Canon's 15 is measurably less than Arri's 14. Also, a lot of the footage I've seen of the WDR mode has bad motion artifacts, so it's probably unusable except for really static shots.

-


Not officially. They are still waiting for licensing, but a few users have found a way to turn on ProRes via a sort of hack. Those independent sources do confirm the camera's abilities, though, and it seems pretty unlikely to me that they are using another camera for their test footage. They are planning on implementing Raw in the E2 alongside ProRes and H.265. Granted, the P4K will have BRaw, which I predict will be better than Z Cam's Raw format.

-

We Are There... The Current Top Hybrids Are Good Enough

KnightsFan replied to DBounce's topic in Cameras

I shot with an X-T3 and an NX1 on a recent project, and while the X-T3's footage was visibly better in 4K 24p when I pixel peeped, I can't say it really made a difference for my project. Of course, having that quality at higher frame rates and a faster readout are real benefits, but even the NX1 was already at the point of diminishing returns in terms of color, compression, and dynamic range. At this point, the biggest upgrades I want are for workflow: timecode, ergonomics, false color, and such.

-

No problem! Good luck!

-

I haven't used Adobe since CC 2015, so I have no idea how Resolve's encoder stacks up against theirs. I suspect that Resolve's H.264 encoder just isn't great, and that Blackmagic doesn't really focus on it because it isn't a "pro" codec like ProRes or DNxHR. To be honest, I don't know much about ProRes in general, but my impression is that it has fewer options, whereas H.264 is a massive standard with many parts that may or may not be implemented fully. You'd have to do your own tests, but I doubt that Resolve's ProRes encoder is as bad as its H.265 one. Actually, to be fair, their H.265 encoder isn't even their product: you have to use the native encoder in your GPU if you have one, and if you don't, I think you can't export H.265 at all.

-

No converter ever seems to have the options I'm looking for. I first started using ffmpeg to create proxies. After shooting, I run a little Python script that scans input folders and creates tiny 500 kb/s H.264 proxies with metadata burn-in. I tried other converters, but I had so many issues with not being able to preserve folder structure, not being able to control frame rate, not being able to burn in the metadata I want, etc.

I've also had issues with reading metadata: sometimes VLC's media information seems off, but with ffprobe I can get a lot of details about a media file.

I also use ffmpeg now to create H.265 files, since Resolve's encoders are not very good. I get SIGNIFICANTLY fewer artifacts if I export to DNxHR and then use ffmpeg to convert to H.265 than if I export from Resolve as H.265. Recently I did a job that asked for a specific combination of video and audio codecs that wasn't available to export straight out, so I exported the video once, then the audio, then used ffmpeg to losslessly mux the two streams. And another little project required me to convert video to GIF. It's become a real Swiss Army knife for me.

Yes, Resolve has a batch converter. Put all your files on a timeline, and then go to the Deliver page. You'll want to select "Individual Clips" instead of "Single Clip." Then in the File tab you can choose to use the source filename.
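A proxy script along those lines might look like the following. This is a hypothetical Python sketch, not the actual script described above: the extension list, output naming, 540p scale, and audio settings are assumptions. It mirrors the input folder structure, forces the output frame rate with `-r`, targets 500 kb/s H.264, and burns in the clip name with ffmpeg's `drawtext` filter (which may need an explicit `fontfile=` if your ffmpeg build lacks fontconfig).

```python
import re
import subprocess
from pathlib import Path

# Hypothetical extension list; adjust for your cameras.
VIDEO_EXTS = {".mov", ".mp4", ".mxf", ".mts"}


def proxy_path(src: Path, in_root: Path, out_root: Path) -> Path:
    """Mirror src's folders under out_root: in/day1/A.mov -> out/day1/A_proxy.mp4."""
    rel = src.relative_to(in_root)
    return out_root / rel.parent / f"{rel.stem}_proxy.mp4"


def proxy_cmd(src: Path, dst: Path, fps: str = "24") -> list[str]:
    """ffmpeg command for a ~500 kb/s H.264 proxy with the clip name burned in."""
    # Keep only characters that need no drawtext escaping.
    label = re.sub(r"[^A-Za-z0-9_. -]", "_", src.stem)
    vf = (
        "scale=-2:540,"
        f"drawtext=text='{label}':x=10:y=10:fontsize=24:fontcolor=white"
    )
    return [
        "ffmpeg", "-n", "-i", str(src),  # -n: never overwrite existing proxies
        "-vf", vf,
        "-r", fps,  # force the output frame rate
        "-c:v", "libx264", "-b:v", "500k",
        "-c:a", "aac", "-b:a", "96k",
        str(dst),
    ]


def make_proxies(in_root: Path, out_root: Path, dry_run: bool = True) -> list[list[str]]:
    """Scan in_root recursively and build (or, if not dry_run, run) one command per clip."""
    cmds = []
    for src in sorted(in_root.rglob("*")):
        if not src.is_file() or src.suffix.lower() not in VIDEO_EXTS:
            continue
        dst = proxy_path(src, in_root, out_root)
        cmd = proxy_cmd(src, dst)
        cmds.append(cmd)
        if not dry_run:
            dst.parent.mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)
    return cmds
```

Running `make_proxies(Path("footage"), Path("proxies"))` first in dry-run mode lets you inspect the commands before letting it write anything.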

-

So the current situation is:

1. You have H.264 footage, but it is not linking properly in Premiere.
2. You can convert H.264 to ProRes with Adobe Media Encoder, but it bakes in a black bar at the bottom.
3. You can convert H.264 to ProRes with Compressor, but there is a color shift.
4. If you could convert to a properly scaled ProRes file, you could get it to work properly in Premiere.

Are all of those correct, and am I missing anything major? If not, one option is to use ffmpeg for the conversion. It's my go-to program for any encoding or muxing task, and I've never had any issues with it encoding poorly or shifting colors. Is this an option?
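If ffmpeg is an option, the conversion could be scripted roughly like this. A minimal Python sketch, assuming the camera footage is Rec.709 (the color tags only label the output, they do not convert anything) and ProRes HQ (`prores_ks` profile 3) with uncompressed PCM audio; the helper name and script usage are hypothetical.

```python
import subprocess
import sys


def h264_to_prores_cmd(src: str, dst: str, profile: int = 3) -> list[str]:
    """Build an ffmpeg command converting H.264 to ProRes 422 (profile 3 = HQ)."""
    return [
        "ffmpeg", "-i", src,
        "-c:v", "prores_ks", "-profile:v", str(profile),
        "-pix_fmt", "yuv422p10le",
        # Tag the output as Rec.709 (assumption about the source footage).
        # These flags label the stream; they do not transform colors.
        "-color_primaries", "bt709", "-color_trc", "bt709", "-colorspace", "bt709",
        "-c:a", "pcm_s16le",  # uncompressed 16-bit audio in the .mov
        dst,
    ]


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python to_prores.py input.mp4 output.mov
    subprocess.run(h264_to_prores_cmd(sys.argv[1], sys.argv[2]), check=True)
```

Comparing the output against the source in a player or scope that honors color tags is a quick way to check whether the color shift is gone.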

-

I don't think that's a fair assessment of Blackmagic. ProRes is, for whatever reason, an industry standard. Blackmagic includes it because that's what standard workflows require. If Blackmagic were responsible for making ProRes a standard, then you could say they were just trying to market an inferior product. Moreover, as much as I believe that more efficient encoding is better, it is significantly easier on a processor to edit lightly compressed material. Editing 4K H.265 smoothly requires hardware that many people simply don't have yet, such as the computer lab at a university I recently used. ProRes was simply easier for me to work with.

But for the most part, you are right. Processors seem to be a limiting factor for cameras at this point. Even "bad" codecs like low-bitrate H.264 can look good if encoded with settings that are simply unattainable for real-time encoding with current cameras. It's great to see Sony making bigger and better sensors, but with better processors and encoders, last-generation sensors could have better output.

-

Have you looked at the file in other programs? Can you confirm whether it's exclusively a Premiere problem, or is the problem with the files themselves?

-

Because downsampling decreases noise, giving more DR in the shadows (at the expense of resolution, of course).
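A quick NumPy illustration of why: averaging each 2x2 block of uncorrelated noise halves its standard deviation (√4 = 2), which is one stop. This sketch uses plain block averaging on synthetic Gaussian noise; real in-camera scaling uses other filters, and real sensor noise is not perfectly uncorrelated, so the gain in practice may differ.

```python
import numpy as np


def bin2x2(img: np.ndarray) -> np.ndarray:
    """Average non-overlapping 2x2 blocks (crops an odd last row/column)."""
    h, w = img.shape
    h2, w2 = h // 2 * 2, w // 2 * 2
    return img[:h2, :w2].reshape(h2 // 2, 2, w2 // 2, 2).mean(axis=(1, 3))


rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1.0, size=(1024, 1024))  # synthetic, uncorrelated noise

ratio = bin2x2(noise).std() / noise.std()  # expected to be close to 0.5
gain_stops = -np.log2(ratio)                # close to 1 stop
print(f"noise ratio {ratio:.3f}, about {gain_stops:.2f} stop(s) lower noise floor")
```

Going from 4K to 1080p is exactly this 2x2 reduction, which is why downscaled footage shows cleaner shadows.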

-

will we ever see the rise of the global shutter cameras?

KnightsFan replied to Dan Wake's topic in Cameras

My point was that eventually DR and sensitivity will be good enough, and the convenience of a global shutter vs. a mechanical one will take over. 14 stops vs. 17, 14 vs. 140: it's the same thing if we don't have screens that can reproduce it anyway. Global shutters are already sought after for industrial uses, which means there will always be some innovation even if consumers aren't interested. When GS sensors get good enough to put in consumer devices and take satisfactorily good photos at a low cost, we'll see them proliferate, and we video people will benefit as well. I don't think the DSLR video market is large enough to push towards global shutters on its own; I think global shutters are more likely to be pushed by the photography world. Just my prediction.

-


If global shutter technology gets reasonably good, I imagine it will find its way into photo cameras in order to do away with mechanical shutters. If 10 years from now we have a choice between 20 stops of DR with a 6 ms rolling shutter vs. 14 stops with a global shutter, I'm sure a lot of people would choose the latter.
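To put a 6 ms readout in perspective, here is a simplified Python sketch of rolling-shutter skew: the sideways offset between the first and last rows equals subject speed times readout time. It assumes a linear top-to-bottom readout and constant horizontal motion; the function name and the speeds you plug in are hypothetical. A global shutter corresponds to a readout time of zero.

```python
def rolling_shutter_skew_px(speed_px_per_s: float, readout_ms: float) -> float:
    """Horizontal offset (pixels) between top and bottom rows of a moving subject.

    Simplified model: rows are read linearly over readout_ms while the subject
    moves sideways at a constant speed. readout_ms = 0 models a global shutter.
    """
    return speed_px_per_s * readout_ms / 1000.0


# A subject crossing 2000 px in one second, with a 6 ms readout.
print(rolling_shutter_skew_px(2000, 6))
```

Even at 6 ms, fast pans or sports can lean verticals by several pixels, which is the artifact a global shutter removes entirely.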