-

Posts

8,047 -


Everything posted by kye

-

I can understand that it's an emotional thing, but I feel I have moved past attachment to any particular format or technology, and have become focused on the experience and the outputs. MFT is great, I have 5 MFT cameras and some really nice lenses, but it's a means to an end. I recently discovered I prefer the size of the GX85 to the GH5, and happily took the loss in image quality for the benefits in the shooting experience. If someone created a camera that was slightly smaller than the GX85 and had equal or better image quality and features, I'd consider switching, not giving the slightest consideration if the sensor was 1/2.5" or Medium Format. The S5 is a great camera, take lots of great images and enjoy it! Enjoy the images you captured with the MFT gear too. Cameras matter, but the better you become at using them, the less they restrict the quality of the work.

-

In terms of assessing the maximum size for printing, that's another whole debate, but a way to test it is to pull the image up on a 4K display, zoom in until you start seeing the video compression artefacts, back off a bit, and then measure how large the whole image would be if you printed it at that magnification. Remember also that the larger you print an image, the further back you will tend to view it from. A friend of mine won a certificate and got one of those canvas prints about 2' x 2' across. She sent in an early iPhone photo that was a close-up of one of her kids' faces (it was a lovely photo) and the printing place said it wasn't "high enough quality" (read: not enough megapixels) and she had to insist they print it. She hung it high up on their photo wall and due to how the furniture was arranged you could see it throughout their open-plan living/dining/kitchen area, but you wouldn't have looked at it from closer than about 5' away, and even then you'd be looking up at it significantly. It looked great. Had it been taken with a high megapixel camera would it have been sharper? Sure. Would she have ever been able to afford a high megapixel camera? No. Would you ever take that kind of photo with a "real" camera? Unlikely.
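The zoom test above boils down to a small bit of arithmetic, sketched here in Python. This is only a rough illustration: it assumes the image fills the display width at zoom 1.0, and the function names and the 60 cm / 1.5x numbers are made up for the example, not measurements from any real test.

```python
CM_PER_INCH = 2.54

def max_print_width_cm(display_width_cm: float, zoom: float) -> float:
    """Width the whole image would have at the chosen zoom level.

    Assumes the image exactly fills the display width at zoom 1.0.
    """
    return display_width_cm * zoom

def print_ppi(image_width_px: int, print_width_cm: float) -> float:
    """Pixels per inch of the image when printed at the given width."""
    return image_width_px / (print_width_cm / CM_PER_INCH)

# Hypothetical example: a 60 cm wide 4K monitor, artefacts still hidden at 1.5x zoom.
width = max_print_width_cm(display_width_cm=60.0, zoom=1.5)
print(f"Print up to about {width:.0f} cm wide "
      f"({print_ppi(3840, width):.0f} pixels per inch)")
```

This ignores viewing distance entirely, so for a print that hangs high on a wall it will tend to be a conservative estimate.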

-

It should be pointed out that it is not mandatory to have a short shutter speed for photos. Let's review two examples. The first is this guy, who is obviously playing at an extreme level here, with huge energy and drive: It is an incredible photo, there's no doubt. But does it convey the sense that he's pushing himself to the limit? The more I look at it the more it looks like it could be a still life, maybe he was on wires and it's a setup. In a sense, it implies motion but doesn't actually express any. Contrast that feeling to this image: There is no denying this. Not only is it a great photo, and not only does it show that world-class people are pushing themselves to the limit (the three guys on the right definitely are!) but it shows the results of that effort. Now scroll back up to the first image - what is the energy level of the first image now? I would suggest that the obsession with short shutter speeds is part of the same obsession with getting "sharp" images, which is driving lenses to be clinical, sensors to be enormous, megapixels to be endless, computers to be behemoths, and images to be soul-less. To be a bit practical, there are likely limits to how much blur you want in a scene, and it's relative to the amount of motion involved, which often varies quite significantly, even from moment to moment in a lot of sports. Some more examples of varying amounts of blur.. This one is a great image, but if it was important who the defender was then it's too blurry. If not, then maybe not.. Sometimes it's not important to get anything completely sharp.. images without feeling are of limited value, and I find that motion is full of feeling. Here's one that is full of emotion (especially if you knew the subjects): Also worth mentioning is that you don't always have to have the subject still and the background blurred, it can be the other way around, but it changes the subject of the image somewhat. 
and with video you're taking lots and lots of photos so the creativity is endless... Remember - don't let the technical aspects blind you to the point of capturing things in the first place.

-

I did a "Send Feedback", which thanks to the ability to attach a screenshot was pretty straightforward in showing the issue. Interestingly, there's no ticket number or anything - you pitch your feedback into the void and that's it, apparently. Normally being a paying customer buys you some kind of human support, but I guess not with YT? Or maybe not for me, as I'm in the family account but I'm not the account that paid for it.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

Yeah, that guy isn't the most thorough reviewer, but of course he's probably a better barometer of what the YT Camera Bros think of it than someone like Gerald.

-

It seems that it's only being tested on web, and that it still needs more work... I click on it, it says I need Premium membership (which is odd considering I'm logged in and the YT logo says "Premium"), then when I click on the "Get Premium" button it takes me to a page that says the offer isn't available and I need to go into settings to manage my subscriptions and purchases. I'm part of a YT Premium Family Sharing account, so maybe that's the issue.

-

Just saw a new option appear in YT.... Some quick googling revealed that it's only for YouTube Premium (paid) members, which I am. Anyone else seen this? Do we know what is going on under the hood?

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

The few YT camera hipsters I've seen review it had positive things to say... TL;DR:

* Stabilisation - "gone are the days of needing a gimbal"
* Colours and DR - "Super easy to handle" "easy to colour grade and get a nice aesthetic" (buy my LUT pack)
* AF - "GH5 was terrible" "S5 is so reliable" "it sticks onto him the whole time" "the AF is unbelievable"
* SSD recording - "prores is easy to work with" "speed up your workflow"

"This is one of the sexiest looking cameras out there" "I would highly recommend checking it out".

-

Help me on an eBay hunt for 4K under $200 - Is it possible?

kye replied to Andrew - EOSHD's topic in Cameras

Are you perhaps thinking of the thread where we posted our submissions to the EOSHD $200 camera challenge? Or the thread with the results? Submission thread: Voting / results / discussion thread: I love that my butchered GoPro is the thumbnail of that thread! Not sure if everyone else would see the same shot, but I'm pretty sure that the camera gods would be happy knowing it died trying to be converted to a D-mount camera.

-

I agree, zooming definitely increases the visibility of the noise in the image. One thing I think worth remembering is that cinema cameras have traditionally been quite noisy and noise reduction used to be a standard first processing step in all colour grading workflows. It is only with the huge advancements in low-noise sensors, which allowed the incredible low-light performance that high-end hybrid cameras have today, that this noise has been reduced and people have gotten used to seeing clean images come straight from the camera. I just watched this video recently, and it is a rare example of high-quality ungraded footage zoomed right into. He doesn't mention what camera the footage is from, but Cullen is a real colourist (not just someone who pretends to be one on YT), so the footage is likely from a reputable source. It's also worth mentioning that the streaming compression that YT and others utilise has so little bitrate that it effectively works as a heavy NR filter. This really has to be seen to be believed, and I've done tests myself where I add grain to finished footage in different amounts, uploaded the footage to YT, then compared the YT output to the original files - the results are almost extreme. So this also plays a role in determining what noise levels are acceptable.

-

Interesting comparison. For anyone not aware, it's worth noting that since the iPhone 12, they quietly upgraded to doing auto-HDR, and the h265 files are also 10-bit, as are the prores files of course. It is interesting how little they promoted this - I have an iPhone 12 mini and didn't even know about it for the first year of owning it! This is what really unlocked the iPhone for me - I have used iPhones since the 8 for video but prior to the HDR / 10-bit update it really wasn't a competitor to anything other than cheap camcorders, as it lacked the DR to record without over-contrast or clipped highlights and the bit-depth to really be able to manipulate it sufficiently in post to remove the Apple colour science and curve.

-

I was quite excited when Apple introduced prores support, thinking that it would mean that images would be more organic and less digital-looking, but wow was I wrong - they were more processed than ever. I think the truth is hiding partly behind that processing, where the RAW image from them would struggle in comparison. I shot clips at night on my iPhone as well as other cameras, and I noticed that the wide angle camera on my iPhone was seriously bad after sunset - even for my own home video standards. I did a low-light test and worked out that the iPhone 12 mini normal camera had about the same amount of noise as the GX85 at F2.8, but the iPhone wide camera was equivalent to the GX85 at F8! and that was AFTER the iPhone processing, which would be way heavier than the GX85 which had NR set to -5. Apple says that the wide camera is F2.4, so basically the GX85 has about a 3-stop noise advantage. There are lots of situations where F8 isn't enough exposure. I'm not sure where we're at in terms of low light performance, but this article says that this Canon camera at ISO 4 million can detect single photons, so there is a definite limit to how large each photosite can be. Of course, more MP = less light in each one. In terms of my travels, I still prefer the GX85 to the iPhone when I can - being a dedicated camera it has lots of advantages over a smartphone.
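For anyone wanting to check the stop arithmetic in the post above, here is a minimal Python sketch. It relies only on the standard relationship that light gathered scales with 1/N² for f-number N, so each stop is a factor of √2 in f-number. The function name is just for illustration.

```python
import math

def stops_between(f_slow: float, f_fast: float) -> float:
    """Exposure difference in stops between two f-numbers.

    Light scales with 1 / N**2, so the difference is 2 * log2(N_slow / N_fast).
    """
    return 2 * math.log2(f_slow / f_fast)

# GX85 at F8 versus GX85 at F2.8 (the two noise-matched settings from the test)
print(round(stops_between(8.0, 2.8), 2))  # roughly 3 stops

# GX85 at F8 versus the iPhone wide camera's quoted F2.4
print(round(stops_between(8.0, 2.4), 2))  # roughly 3.5 stops
```

So "about 3 stops" holds whichever pair you compare, with the F2.4 figure pushing it closer to three and a half.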

-

One of the (potentially numerous) differences when swapping from 4K to 6K (on a 4K timeline) is that the downsampling goes from being before the compression to afterwards. Compression is one of the significant contributors to the digital look IMHO (just compare RAW vs compressed images side by side), and if you can downsample from 6K to 4K in post then you're downsampling and also interpolating (blurring) the compression artefacts that happen on edges. I would favour a workflow where the image is debayered, downscaled, then recorded to SD with very low or even no compression. It would effectively have the benefits of downsampling and the benefits of RAW, but without the huge file sizes of RAW at the native resolution of the sensor. Of course, no-one else thinks this is a good idea, so....

-

I find IBIS to be critical to my workflow personally, as I shoot exclusively handheld and do so while walking etc, but I definitely understand that I'm in the minority. But yeah, having that eND would be spectacular - set to auto-ISO / auto-eND / 180 shutter / desired aperture for background defocus and you're good to go!
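As a quick reference for the "180 shutter" setting mentioned above, this Python sketch converts a shutter angle and frame rate into an exposure time, using the usual definition that exposure time is (angle / 360) of the frame interval. The function name is an assumption for the example.

```python
def shutter_time(fps: float, angle: float = 180.0) -> float:
    """Exposure time in seconds for a given frame rate and shutter angle."""
    return (angle / 360.0) / fps

for fps in (24, 25, 30, 60):
    t = shutter_time(fps)
    print(f"{fps} fps at 180 degrees -> 1/{round(1 / t)} s")
```

With the shutter pinned like this and the aperture chosen for depth of field, ISO and ND are the only exposure controls left, which is why an auto-eND would make this setup so convenient.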

-

Lots of them are, but as you brought it up, here are my impressions on the above:

* It's still far too sharp to be convincing - I noticed this in the first few seconds of the video - TBH my first impression was "is this the before or after footage?".. of course, once you see the before footage then it's obvious, but it didn't immediately look like film either
* The motion is still choppy with very short shutter speeds - this would require a VND, which isn't so easy to attach to your phone

I think that people have some sort of hangup about resolution these days and as such don't blur things enough. For example, here are three closeups from the above video. The original footage from the iPhone: Their processed version: A couple of examples from Catch Me If You Can, which was their reference film: As you can see, their processed version is far better, but they didn't go far enough. I've developed my own power grade to "de-awesome" the footage from my iPhone 12 Mini and detailed that process here, but here's a few example frames: I'm not trying to emulate film, I'm just trying to make it match my GX85. Here are a couple of GX85 shots SOOC/ungraded for comparison: I haven't got the footage handy for the above shots, but here are a few before/afters on the iPhone from my latest project from South Korea. iPhone SOOC: iPhone Graded: iPhone SOOC: iPhone Graded: iPhone SOOC: iPhone Graded: I'm happy with the results - they're somewhere in between the native iPhone look (which I've named "MAXIMUM AWESOME BRO BANGER FOR THE 'GRAM") and a vintage cinema look. My goal was to make the camera neutral and disappear so that you don't think about it - neither great nor terrible. Going back to Dehancer / Filmbox / Filmconvert / etc.. these are great plugins actually and I would recommend them to people if they want the look. I didn't go with them because I wanted to build the skills myself, so essentially I'm doing it the hard way lol.
The only thing I'd really recommend is for people to actually look at real film in detail, rather than just playing with the knobs until it looks kind of what they think that film might have looked like the last time they looked which wasn't recently... I keep banging on about it because it's obvious people have forgotten what it really looks like, or never knew in the first place. And while they're actually looking at real examples of film, they should look at real examples of digital from Hollywood too - even those are far less sharp than people think. The "cinematic" videos on YT are all so much sharper than anything being screened in cinemas that it's practically a joke, except the YT people aren't in on it.

-

I have a vague recollection that some cameras were able to tag or put comments onto specific images as you were taking them (or just afterwards) and that the info would be embedded in the image's metadata or some such. It might even have been you that mentioned it. Anyway, if you were taking full-res stills and they were being sent instantly to the photo/video village for quick editing and upload to the networks and social media channels, then you could simply tag which images you thought should be cropped? If you are shooting full-res images then cropping in post is the same as cropping in-camera, I would imagine?

-

Great post - I just wanted to add to this from a colour grading perspective. I did a colour grading masterclass with Walter Volpatto, a hugely respected senior colourist at Company 3, and it changed my entire concept of how colour grading should be approached. His basic concept was this:

1. You transform the camera's footage into the right colour space so it can be viewed, and apply any colour treatments that the Director has indicated (like replicating view LUTs or PFEs etc) - this is for the whole timeline
2. You make a QC pass of the footage to make sure it's all good and perhaps even out any small irregularities (e.g. if they picked up some outdoor shots and the light changed)
3. At this stage you might develop a few look ideas in preparation for the first review with the Director
4. Then you work with the Director to implement their vision

The idea was that they would have likely shot everything with the lighting ratios and levels that they wanted, so all you have to do is transform it and then you can fine-tune from there. Contrary to the BS process of grading each shot in sequence that YouTube colourists seem to follow, this process gives you a watchable film in a day or so. Then you work on the overall look, then perhaps apply different variations on a location/scene basis, and then fine-tune particular shots if you have time. He was absolutely clear that the job of the colourist was to simply help the Director get one step closer to realising their vision; the last thing you want to do as a colourist is to try and get noticed.
It really introduced me to the concept that they chose the camera package and lenses based on the look they wanted, then lit each scene according to the creative intent from the Director, and so the job of a colourist is to take the creativity that is encapsulated into the files and transform them in such a way that the overall rendering is faithful to what the Director and Cinematographer were thinking would happen to their footage after it had been shot. Walter mentioned that you can literally colour grade a whole feature in under a week if that's how the Director likes to operate. I have taken to this process in my own work now too. I build a node tree that transforms the footage, and applies whatever specific look elements I want from each camera I shoot with, and then it's simply a matter of performing some overall adjustments to the look, and then fine-tuning each shot to make them blend together nicely. In this way, I think that the process of getting things right up front probably hasn't changed much for a large percentage of productions.

-

I don't have any technical information or data to confirm this, but my theory is that Sony sensors are technically inferior in some regard, and this is what prevents the more organic look from the majority of modern cameras. My understanding is that these are the only non-Sony-sensor video-capable cameras:

* ARRI (partners with ON Semiconductor to make sensors to their own demanding specifications)
* Canon
* BM OG cameras (OG BMPCC and BMMCC)
* Digital Bolex (Kodak CCD sensor)
* Nikon (a minority of models - link)
* RED (there's lots of talk about who makes their sensors, but it's hard to know what is true, so I mention them for completeness but won't discuss them further)

What is interesting about this list is that, to my understanding at least, all these cameras are borderline legendary for how organic and non-digital their images look. Canon deserves a special mention as its earlier sensors are really only evaluated when the footage is viewed RAW (either taking RAW stills or using the Magic Lantern hack). There are questions about whether it could be related to CCD vs CMOS sensor tech. This thread about CCD sensors from Pentax contains dozens/hundreds of images that make a compelling case for that theory. I have both the OG BMPCC and BMMCC as well as the GX85, GH5, Canon XC10, Canon 700D, and other lesser cameras and have tried on many occasions to match those to the images from the BM cameras, and although I managed to get the images to look almost indistinguishable (in specific situations) they still lacked the organic nature that seems to be shared across the range of cameras mentioned above. I regularly see images from my own work with the Panasonic cameras and my iPhone, as well as OG BM camera images and RAW images from the 5Dmk3 and Magic Lantern (courtesy of @mercer) and once you get dialled into the look of these non-Sony sensors, it's hard not to notice that the rest all look digital in some way that isn't desirable.

-

I also wish they'd continue pursuing the look. It wouldn't be too difficult either, as you can design a camera that has both looks - modern and classic. It's been shown that the Alexa is simply a high-quality Linear photometry device, and that the colour science is in the processing rather than in the camera. This leads me to think that you should be able to design a sensor to be highly-linear and then just have processing presets that let you choose a more modern image vs a more organic one. Depending on the sensor tech, you could even design a sensor to have a 1:1 readout for the modern look, and some kind of alternative read-out where the resolution is reduced on-sensor to match the look of film, which might even be a faster read-out mode and give the benefit of reduced RS. Obviously I'm not a sensor designer, so this might be a technical fantasy, but it would be worth exploring. Otherwise, cameras like the GH5 have shown that you can re-sample (either down or up) in-camera, so that sort of processing could be used to tune the image for a more organic look.

-

I think that we're a very long way away from people only having a VR display and not having a small physical display like a phone. Think about the progression of tech and how people have used it.. Over the last few decades people got access to cheaper and smaller video equipment with more and more resolution, and also to cheaper TVs that also got larger and higher resolution (and sound systems got better too), but despite this people still watch a considerable amount of low-resolution content on a 4-6" screen. This shows that people value content and convenience rather than immersion. The other factor that I think is critical is that photography / videography is highly selective. The vast majority of shooting takes place in an environment designed for some parts to be visible in the footage (and are made to look nice) and other parts are not (and often look ugly as hell). The BTS of a commercial production shows this easily and the BTS of most bedroom vloggers would likely show that all their mess was just piled up out of frame! Much/most/all(?) of the art of cinematography is choosing which things appear in the frame and which things don't - if every shot can see in every direction then it's a whole new ballgame. Casey Neistat played with this in a VR video he did some years ago, and I recall him talking about how they designed it so that the viewer would be guided to look in the right directions at the right times, rather than looking away from the action and not getting the intended effect. The idea that "where to look" would pass from the people making the content to the people consuming the content is something we haven't ever had in the entirety of the visual arts (starting from cave paintings, through oil paintings, to photography, then moving images). 
Furthermore, even if the first part happens and we all have a VR phone rather than a display that we hold in our hand, the VR goggles can display a virtual screen hovering in place just as easily as VR content, so VR goggles will enable VR content but won't mandate it. For these reasons, I don't think the industry will be moving to VR-only any time soon.

-

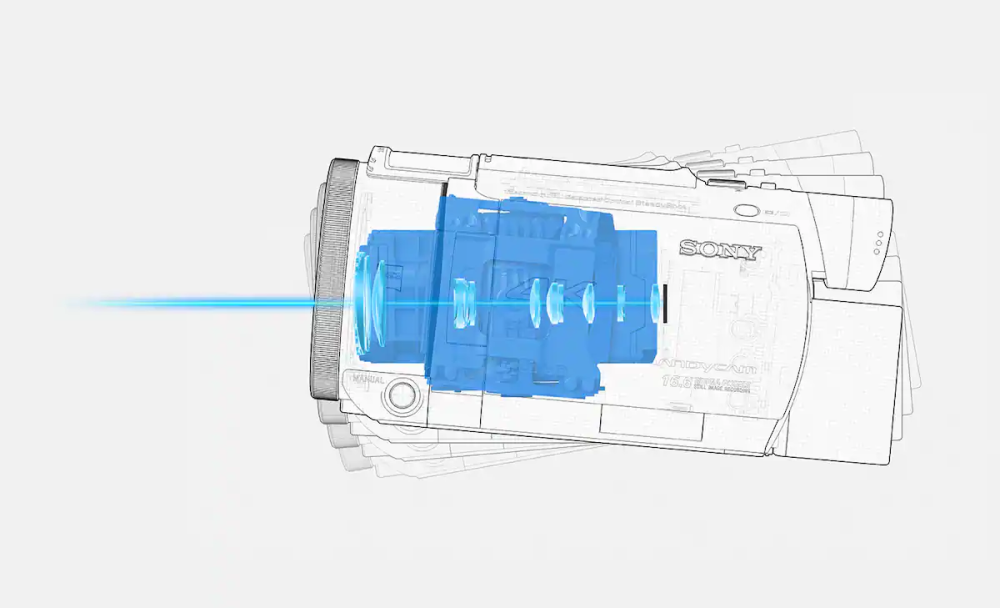

I'm definitely over arguing, but I thought I'd close off this discussion of the stabilisation in the X3000 because I wasn't aware of how it worked and I think it's different to IBIS and to OIS. It seems to rotate an assembly of the sensor and lens together, sort of like an internal gimbal. I suspect this is functionally different to IBIS and OIS because each of those shifts only one part of the optical path (the sensor in IBIS, a lens element in OIS) while the rest remains fixed, potentially leading to distortions in the optical path. I suspect this is what causes the extreme corner wobble with extreme wide-angle lenses paired with IBIS. Also different to IBIS is that it is only a 2-axis stabilisation system, yaw and pitch: This lacks rotation, which has caused issues for me in the past - one example was when I was holding it out of the door of a helicopter and it was being buffeted by the winds and rotating the camera quite violently. I never managed to find a stabilisation solution in post that compensated for the lens distortion, but I suspect that if I did I would have discovered that there were also rolling shutter issues in there as well because of the fast motion. Anyway, it's mostly been replaced by my phone now, although I should compare the two and see what the relative quality of each is. The wide-angle on my phone wasn't good enough for low-light recording unfortunately. I just wish people would talk about film-making.

-

I'm also kind of over the GH5, despite it being my 'best' camera! On my last trip, which was to Korea, I ended up shooting much more with the GX85 and 14mm F2.5 pancake prime, just because it was smaller, faster, and less invasive, which led to the environment I was shooting in being nicer. No-one talks about how the camera choice can make the subject look better! I also love camera tech, but I wish it was going towards the images that people want, rather than just treating specifications like memes, but somehow people don't understand that the specs they want don't lead to the image they want. It's easy to think that we're just hanging out and talking, but if you look around online this is one of the most in-depth places where cameras are discussed - most other places are one sentence or less - and so I would be surprised if we weren't influencing the market. I mean, can you imagine if instead of talking about megapixels, we were creating and posting stunning images, and every thread was full of enthusiastic discussions and great results? It would have a ripple effect across much of the camera internet. I'd go one step further than you - it's easier to talk about technical aspects than it is about creativity. I've partly swapped to the GX85 because I realised that it creates an image superior to probably half or two thirds of commercially produced images being streamed right now. The difference between me shooting with a GX85 and all content shot before 2020 that is still being watched is everything other than the camera. There are shows on Netflix that aren't even in widescreen, they're that old, and yet the content is still of interest. I had an ah-ha moment when I analysed the Tokyo episode of Parts Unknown (which won an award for editing) and saw the incredible array of techniques and then did the same analysis on some incredible travel YT videos and realised it was a comparison between a university graduate and a pre-schooler.
Compared to the pros, we all know very little, and it's hard to talk about that, so we just talk about cameras. It's almost universal - it's almost impossible to get anyone online to talk about creativity regardless of what discipline is involved.