-

Posts

8,047 -

Everything posted by kye

-

Not really, and it's sort-of complicated.

The short version is I choose the mode with the bit-rate and codec I want, then look at what resolution options the camera gives for those settings.

The long version is that it's more complicated, and you should really do tests to see what works best for you as it might vary between situations, for example if it involves more or less movement in the frame, more or less noise in the image, etc.

At a high-level, there are a few advantages to shooting at the delivery resolution:

- The downsampling happens in-camera, rather than in your NLE, so it should be faster in post
- Rolling shutter might be less
- Bit depth on the sensor read-out might be higher

But, there are also some advantages to shooting a higher resolution and downsampling in post:

- Downsampling in post is likely to be higher quality than in-camera, because in-camera it has to be done real-time and things like battery life and heat dissipation are considerations
- Any non-native resolutions might involve line-skipping, pixel binning and other non-optimal downsampling, which this may avoid
- Compression artefacts tend to scale with the resolution, so downsampling to timeline resolution in post lessens how visible these are
- You can punch-in in post more if the need arises, which also occurs when stabilising
- For a given bitrate the image quality is normally higher at a higher resolution, so you're starting from a better position

For my setup, I shoot the GH5 in the 200Mbps 1080p ALL-I mode because it's ALL-I and therefore is nicer in post. I decided this over the 150Mbps 4K mode because that is IPB, making it much more intensive to edit with, and also over the 400Mbps 4K ALL-I mode because that would require me to buy a UHS-II card which was hugely expensive.

However, I shoot the GX85 in the 4K 100Mbps IPB mode rather than the 1080p 20Mbps IPB mode. The 4K mode is IPB, but having 100Mbps is more important than the editing performance. I'd rather have a 100Mbps ALL-I mode, but if we're wishing for things then we'd be changing topics, so I choose the best from what I have.

In terms of the GH6 and ProRes, they're all 10-bit ALL-I modes (as they should be!) so the performance in post is mostly a moot point compared to IPB codecs, so it's probably more a case of making the trade-off between disk space and whether you need the extra resolution for anything in particular.

I think for projects that are more straightforward, shooting at 200Mbps 1080p is perfectly sufficient. Remember that many low-budget feature films were shot with 1080p ProRes HQ around 170-180Mbps and they were screened in theatres on screens larger than the walls of most private home-theatres, so the image quality should be sufficient for anything we could be doing.

If you're shooting in uncontrolled situations where post is difficult, or if you're unsure what the footage will be used for in future, or if you're doing VFX, essentially if there are any special circumstances around the project, then having more resolution and MUCH higher bitrates might be worthwhile.

There's an argument to be made for shooting slightly higher than the delivery resolution, at maybe 2.5K for a 1080p master, so that's something to think about as a sort-of middle ground, if that is available in the GH6 - I'm not that aware of what modes it offers and I know the GH5 has some intermediate sizes, like 3.3K 4:3 in the anamorphic modes.
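The disk-space side of that trade-off is simple arithmetic, so here is a rough sketch of it. It assumes a constant video bitrate, ignores audio and container overhead, and uses decimal gigabytes; the function name is made up for illustration and the three bitrates are just the GH5 modes mentioned above.

```python
def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Approximate storage for one hour of footage, in decimal GB.

    Assumes a constant video bitrate and ignores audio/container overhead.
    """
    bits_per_hour = bitrate_mbps * 1_000_000 * 3600
    return bits_per_hour / 8 / 1_000_000_000


# The three GH5 modes discussed above (bitrates in Mbps).
for label, mbps in [("1080p ALL-I", 200), ("4K IPB", 150), ("4K ALL-I", 400)]:
    print(f"{label}: {gigabytes_per_hour(mbps):.1f} GB per hour")
```

Real files will come out a little different, but it gives a feel for how quickly the higher-bitrate modes eat through cards and drives.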

-

Hopefully that will get better over time. Overall I view it as a good thing - if you're willing to go to fully-manual lenses then the budget Chinese manufacturers have drastically reduced the price of lenses over even the last 5 years, and they're now doing the same to anamorphics.

-

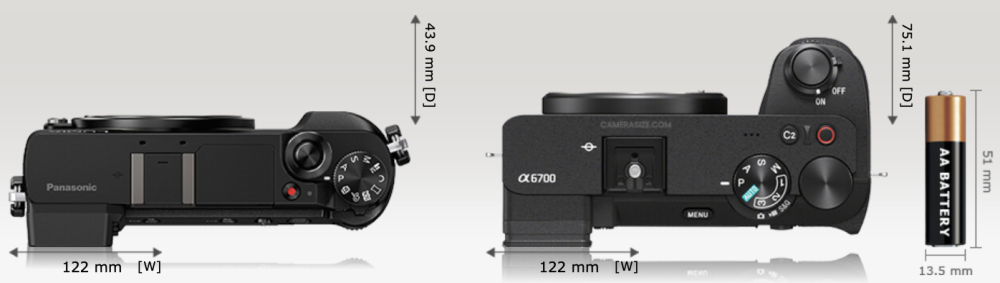

Yes, according to Camerasize, it is a similar size to the GX85:

Obviously it's thicker and has a larger grip, but this will be dwarfed by the size of the lenses. Here are both with their kit zooms, which are probably amongst the smallest likely to be used if size was a key consideration:

While I'm no fan of Sony cameras, it is good to see that there is still an interest in making small cameras - camera size matters and the general approach from the industry is that only people who can have huge cameras deserve image quality, which is simply not true.

-

This page is my reference for bitrates and other technical details: https://blog.frame.io/2017/02/13/compare-50-intermediate-codecs/

It doesn't show all the resolutions, but ProRes has a roughly constant bitrate per pixel at a given frame rate (so UHD is 4x 1080p and DCI4K is 4x 2K), so you can always figure it out for custom resolutions.

That said, for UHD, the bitrates are: HQ is 707Mbps, 422 is 471Mbps and LT is 328Mbps. If their 5.7K is the same aspect ratio as UHD then it would be 2.2x the number of pixels and bitrate. I guess 400Mbps isn't that far off if that's for 5.7K.

I know that 1080p HQ is about 175Mbps, and the attraction for me was that UHD ProRes Proxy was 145Mbps, so you get the benefit of the higher res without the ridiculous data rates. Still, 400Mbps isn't that much more than 175Mbps.
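The "figure it out for custom resolutions" step can be sketched like this. It is only an estimate: it takes the UHD figures quoted above as the reference, assumes the bitrate scales linearly with pixel count at the same frame rate, and the 5760x3240 frame is a hypothetical 16:9 "5.7K" size rather than any particular camera's actual mode.

```python
# UHD (3840x2160) bitrates in Mbps, as quoted in the post above.
UHD_PIXELS = 3840 * 2160
UHD_MBPS = {"HQ": 707, "422": 471, "LT": 328, "Proxy": 145}


def scaled_bitrate(flavour: str, width: int, height: int) -> float:
    """Estimate a bitrate by scaling the UHD figure by pixel count.

    Assumes the same frame rate as the UHD reference figures.
    """
    return UHD_MBPS[flavour] * (width * height) / UHD_PIXELS


print(scaled_bitrate("HQ", 1920, 1080))  # 1080p, a quarter of the UHD pixels
print(scaled_bitrate("HQ", 5760, 3240))  # hypothetical 16:9 "5.7K" frame
```

The 1080p HQ result comes out at 176.75Mbps, which lines up with the "about 175Mbps" figure, so the per-pixel scaling seems to hold well enough for rough planning.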

-

I've found a couple more videos where it's enabled, but I can't see much difference. I wonder if it would be more visible if the material had grain or lots of fast movement.

-

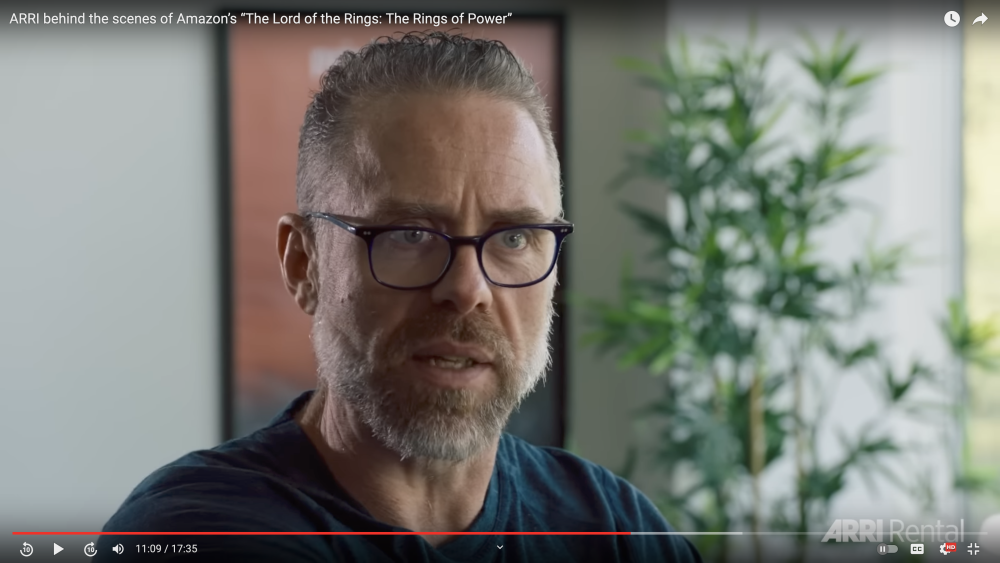

It's back!

I skipped through ARRI's YT channel to look for 1080p videos that had it, and I found a couple of interesting things:

- ARRI upload videos in all sorts of resolutions, 4K, 1080p, 1440p, even 720p, even recently
- Their 1080p uploads have only had the Enhanced Bitrate option very recently - the 1080p ones uploaded more than a couple of months ago didn't have the option

This video has it:

Here's a comparison. This is the 1080p video displayed full-screen on my UHD display and then a screen-grab taken.

Standard 1080p:

Enhanced Bitrate 1080p:

Standard 1080p:

Enhanced Bitrate 1080p:

Oddly, it's not available on this 1080p upload, which is more recent than the above.

@kaylee Do you have it enabled yet?

-

I think you might be low-balling your estimate...

-

I see metalworkers releasing things like Loctite by just applying heat. They typically do it with a blowtorch, which obviously I wouldn't recommend, but it might be a simple case of applying a soldering iron for a bit perhaps? It depends on how much superglue you used. "A few dabs" isn't a very scientific measurement!!

-

Just a hint? I'd suggest that there's more than just a hint of je ne sais quoi from the Komodo!! Good practical questions. If the battery life wouldn't last then a V-mount could be added, potentially via a cable with the battery kept elsewhere, like in a pocket, but it would potentially add to the weight of the rig, so should be taken into consideration. One trick that I got from a fellow forum member for stability and holding a camera for long periods was to buy a belt and put a tape-measure pocket on it, and then put the camera on a monopod and put the foot of the monopod into the tape-measure pocket. This puts most of the weight of the rig onto the belt, and provides a third point of contact, although your hips aren't completely stable if you're walking around. It's quite a minimal setup, can easily be held with only one hand (potentially even quickly changing batteries like this), and the rig can easily be quickly taken out of the belt pocket and used as a normal monopod or packed away etc. If @backtoit put the camera on a gimbal and then extended the handle of the gimbal with a monopod into the belt then that might help stabilise things sufficiently. They might still get the vertical bobbing up and down motion when walking, but it might not be that visible.

-

Artificial voices generated from text. The future of video narration?

kye replied to Happy Daze's topic in Cameras

Also, paranoia seems to be setting in..... https://petapixel.com/2023/07/11/real-photo-disqualified-from-photography-contest-for-being-ai/ The judges looked at the metadata (it was taken with an iPhone) but couldn't work out if it was real or not, so disqualified the image because they weren't sure.

-

Artificial voices generated from text. The future of video narration?

kye replied to Happy Daze's topic in Cameras

This one is incredible. I'm not sure how available it is for users though - I saw this video posted by the developer who is sharing their work on the liftgammagain forums just this morning.

-

Not a user, but having RAW 5.7K60 and C4K120 and 4.4K anamorphic seems a lot like it's now in cinema camera territory. The ability of RED cameras to have RAW at high frame rates was one of the things that I thought separated them from the usual prosumer cameras that mere mortals like me could afford. Maybe I'm just behind the times, but if you were shooting something serious like high-end music videos / high-end docs / low-budget features and had the ability for 5.7K up to 60p (for those emotional/surreal moments) and also C4K 120p for any special effects shots (like if shooting an emotional sports doco) then it makes it a serious camera for those tasks.

-

Wow - that looks really good! I shoot with my phone regularly so I see a reasonable amount of iPhone footage and I agree that the iPhone doesn't look that good.

To my eyes it's clearly RAW. Despite the low bitrates that YT uses to compress things, if you give it something shot on RAW then the quality of the YT stream goes up significantly - things like the falling snow and fine detail give this away.

Here's an example of a very high-quality RAW capture, but this is from 2015 and only uploaded in 1080p:

It has the same look to me.

Definitely agree with this. I would go further and suggest that the lesser phones (any phone shooting a compressed codec) are modern Super 8 emulations. I say this because:

- they get used for home videos like 8mm used to be and are the perfect tool for shooting for fun and not thinking about the quality of the work
- by the time you process the footage so the compression isn't visible, they're not as sharp as RAW 4K
- they're likely using SS (shutter speed) to expose
- there's enough hand-held motion shown in the frames that it's a different look to a S16 film camera

I have to resist the temptation to turn random iPhone shots in my projects into 8mm film emulation 🙂

-

If you take time-lapses using RAW stills then they look pretty good. No-one is expecting it to look like an Alexa, but it looks way better than the ProRes files that the latest iPhone creates.

-

Absolutely! I must admit that my excitement at hearing that Apple had introduced ProRes was topped only by my disappointment when I saw that they were the first people in history to implement ProRes to record an image that had been dragged through all 7 levels of hell first, rather than it just being a neutral high-quality compressed version of the RAW image.

-

No worries! A little bit of excess enthusiasm is the least of our issues!! What are you planning on using the RAW for? Or is it just a recent discovery?

-

Nice! They definitely look like movie stills and not photographs. What was the show LUT? Interesting range of tones/hues there.

-

My understanding of it was that a colour timer would take the negative and make a positive print using a special machine where each frame of the film was exposed via a separate light for Red, Green and Blue, and the machine allowed the exposure time for each to be adjusted. Thus the phrase "colour timing". Adjusting all of them would raise/lower the overall exposure and adjusting them in relation to each other would adjust the WB. The controls from that operation live on as the "printer lights" controls in Resolve and other software, as they literally adjusted the lights of the printer. https://en.wikipedia.org/wiki/Color_grading#Color_timing
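As a toy illustration of that idea (all three together shifts exposure, one against another shifts the balance), here is a minimal sketch. It treats each printer light as an additive offset to that channel's log exposure, which is a simplification and not how any particular printer or grading tool is implemented. The 0.025 log-exposure step per point is a commonly quoted figure but should be treated as an assumption here, and the sign convention is arbitrary.

```python
# Assumed step size: log10 exposure change per printer point (hypothetical here).
LOG_E_PER_POINT = 0.025


def apply_printer_lights(log_rgb, red=0, green=0, blue=0):
    """Offset each channel's log exposure by its printer-light setting."""
    r, g, b = log_rgb
    return (
        r + red * LOG_E_PER_POINT,
        g + green * LOG_E_PER_POINT,
        b + blue * LOG_E_PER_POINT,
    )


neutral = (0.50, 0.50, 0.50)

# Moving all three lights together shifts overall exposure, channels stay equal.
exposure_shift = apply_printer_lights(neutral, red=4, green=4, blue=4)

# Moving lights relative to each other shifts the colour balance instead.
balance_shift = apply_printer_lights(neutral, red=2, blue=-2)

print(exposure_shift)
print(balance_shift)
```

In the first case the channels stay equal to each other, so only the overall level moves; in the second the green channel is untouched while red and blue move apart, which is the WB-style adjustment.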

-

It's definitely very different shooting conditions... I laughed so hard years ago when I saw the review of the P4K by CVP (IIRC) and the guy took it out and took some random shots in public including some shots of his companion while riding on the train. His comment was that he had to work "incredibly fast"... HAHAHAHAHA. It was a person sitting motionless for several minutes!! I can go from zero to rolling in something like 5 seconds, and there are situations where I'll still miss a quarter of the shots I see.

-

@Brian Williams On the contrary.. Lots of interest, as expressed in this thread! Smartphone sensors have enormous potential if we could just get all the ridiculous over-processing out of the way to get to the image. I've developed a powergrade that matches my iPhone to my GX85 to suit the work I do, but RAW would be the far easier option, that's for sure!

-

Vintage lenses tend to be 10x the price for the "best" version compared to 2nd place, which is great if you don't happen to need the fastest/sharpest one. Often, if you can find a lens test that does compare the "best" one with the "losers" then you'll find there's not much of a difference between the best one and the next best one (and maybe the one after that..).

A year or two ago I thought that Minolta glass was probably the next one that would become super popular, but I haven't kept up with it so I don't know if those have rocketed up yet or not. They're spectacular lenses though.

Realistically, if you watch enough of those "OMG I found this incredible $30 vintage lens" videos then you realise that somewhere between half and three-quarters of all vintage lenses are actually great performers. Even more than that if you're interested in lenses that have a more degraded set of optics, or are mechanically only so-so.

-

Definitely not mid-season! I suggest:

- Learn the interface and how to get around
- Learn where the basic tools are and what they do
- Learn Colour Management and how to set everything up correctly

At that point you'll have the knowledge to start getting into all the fun stuff like playing with other colour spaces and splitting channels and using blending modes etc.

The journey in Resolve has three phases:

1. You can't yet swim, so you wade out a bit, but can't go far
2. You learn to swim and have fun swimming around a bit further from shore and grow your skills and confidence
3. You start to explore what's happening underneath the surface and you dive down and suddenly realise that you've only been exploring the surface and that there is no bottom.....

I've read posts on the colourist forum where someone makes a one sentence post and it took me 8+ hours over multiple days to work out what they said, how to do it myself, what it meant, and how I might use it.

-

Good points and they strike me as mirroring the workflows of Cullen Kelly and Walter Volpatto, the only two professional colourists who I've heard break down their workflow. The broad process for both of them seems to be:

1. Import footage and get things set up (or, in our cases, edit the footage!)
2. Set up the colour management correctly so everything is well behaved
3. Set up the global 'look'
4. If required, set up any specific looks for groups of shots
5. Then start reviewing the shots individually (in passes) to even things out, troubleshoot, and then to really polish things up

Of course, I tend to bounce between 1 and 2, because for me the colour and visual appeal of the shots matters in terms of which ones I choose.

This is in complete contrast to how all the people that play 'colourist' on YT do it - they spend 10 minutes on one shot, whereas pros often only get 1 minute per shot, or less, so would be screwed if they didn't start broad and narrow down.

I also particularly like Cullen's approach, or at least the approach he's taken to his more professional LUT pack, which is that it's modular, so there are separate LUTs for contrast, saturation, hue rotations, split-toning, and other look adjustments, with several options in each of these categories. He recommends mixing and matching them and adjusting the opacity of each to taste.

For me, considering I tend to shoot and grade the same sort of material, I'm developing my own default node tree with everything all set up and ready to adjust as required. On many projects it's just a matter of applying the overall look and then just going into the Lightbox mode which shows all your shots at once and then just adjusting any that stick out and then exporting it and doing a final watch-through.

The BM grading panels are a bit of a clue as well, having Next Node and Previous Node buttons, but not buttons to create new nodes, on the smallest one at least, which implies to me a default node structure already applied to each shot.

I've been meaning to go back and re-watch the Walter Volpatto masterclass now that I've actually gotten my head around colour management etc.

Colour Management was the biggest breakthrough for me. Shooting on cameras that didn't have profiles in ACES/RCM meant that I had a really hard time adjusting levels or WB without the mids and highlights/shadows moving in different ways, but convert to DWG, grade there, and then convert to 709/2.4 at the end, and all the controls magically do what you'd want them to do.

-

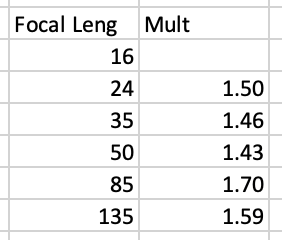

I thought that the standard focal lengths were normally designed to be spaced out relatively evenly in increments where you could move the camera closer/further to fine-tune. Obviously there are variations within that progression, and also variations in lens line-ups (like 28mm, 56/58mm, 90/100mm lenses etc) but that when you see matched sets of cine lenses, those were the main ones. There were quite a lot of other bundles with other spacings, which was interesting, although I did sort from highest price so started with the lens sets with every lens they made!!

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

Indeed I do! But seeing as I live in Australia, I tend to keep local hours 😉