Everything posted by kye

-

Yes, the video from the iPhone is no match for the RAW Android video. I suspect the BM Camera app will be subject to all the same image processing as the default camera app, just with adjustable compression ratios. I'd love to be proven wrong, but I haven't seen any examples of third-party apps having less processing than the default app.

-

Good point, and I think it's one I've gradually become aware of. It's a funny thing: when I was using the GH5 I got used to using the EVF, but since swapping back to the GX85 I've gone back to using the screen. Looking back on it, I think I used the GH5 EVF partly because it provides a third point of contact for stabilising things, and partly because the flippy screen is a bit of a pain to tilt: you have to pull it out to the side first, and then it sort-of gets in the way if your left hand is cradling the camera and operating the lens. I have vague memories of thinking it felt odd to swap to the EVF when I did that, and then that it was odd to go back to using the screen when I changed back, but once you get used to something it becomes second nature. I definitely notice that people immediately look at you when you raise a camera to your eye...

-

Do people say "P6K" when they mean the Pro version? I haven't seen it, but maybe it's something I've missed. But yes, confusion!

Yes, that would be quite a good implementation. A bit messier in the rig, but still not ridiculous. I saw a discussion video online saying that their app was iPhone-only and didn't control their other cameras, but it does raise the potential for the app to be expanded to connect to, remotely monitor and control other cameras, which would be pretty good. I'd go so far as to say that the app might even have started as a remote control app, and then they decided to let it use the iPhone camera, which got rolled out in the first version. I don't have a deep understanding of their live switching options, but what I do know about them is very impressive, and if I was a live streamer / podcaster it would be my first call when putting together a setup. The BM cameras aren't a good fit for what I do because they're not really designed for people like me, but they do seem to really nail it for their target customers.

Absolutely! I had Filmic Pro installed on my phone from ages ago, and when I recently updated it and opened it up, it wanted to charge me just to use it, even though I had previously bought it outright. Let's just say it got removed from my phone pretty darn quickly after that discovery! "Don't let the door smack you in the ass on your way out!"

Oh wow, that's a pretty aggressive move in the ecosystem wars! BM is also notable for not supporting ProRes RAW in Resolve, essentially forcing Resolve folks to either convert their files or switch to BRAW.

I'd be curious to see what a BRAW file at an equivalent bitrate was like compared to ProRes; I suspect it might be similar quality. If I ended up with a more modern camera I'd be trying this as a first step. BRAW provides a "custom non‑linear 12‑bit space designed to provide the maximum amount of color data and dynamic range", which is something only ProRes 4444 etc. can provide, so shooting BRAW gives the extra bit depth that ProRes typically doesn't on these cameras. ProRes 422 HQ in UHD is ~700Mbps, and BRAW UHD 5:1 is about 670Mbps. So BRAW goes to a higher bitrate than most ProRes implementations (in the BRAW 3:1 mode), and also goes lower (ProRes Proxy UHD is ~150Mbps, ProRes LT is 328Mbps, and BRAW 12:1 is about 280Mbps, so in the middle of those two).
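To put those bitrates in storage terms, here is a small sketch that converts Mbps into gigabytes per minute of footage. It only uses the approximate UHD figures quoted above, assumes decimal units (1 GB = 1000 MB) and ignores audio and container overhead, so treat the results as ballpark numbers rather than anything from Blackmagic or Apple.

```python
# Approximate UHD bitrates quoted in the post (Mbps); ballpark values only.
BITRATES_MBPS = {
    "ProRes Proxy": 150,
    "BRAW 12:1": 280,
    "ProRes LT": 328,
    "BRAW 5:1": 670,
    "ProRes HQ": 700,
}


def gb_per_minute(mbps: float) -> float:
    """Convert a video bitrate in megabits/s to decimal gigabytes per minute."""
    megabits = mbps * 60        # megabits recorded in one minute
    megabytes = megabits / 8    # 8 bits per byte
    return megabytes / 1000     # decimal GB, ignoring audio/container overhead


if __name__ == "__main__":
    # List the formats from lowest to highest bitrate.
    for name, rate in sorted(BITRATES_MBPS.items(), key=lambda kv: kv[1]):
        print(f"{name:>12}: {rate:4d} Mbps ~ {gb_per_minute(rate):.2f} GB/min")
```

For example, ~700Mbps works out to about 5.25 GB per minute, which is the kind of number that decides how many cards or SSDs you need for a shoot.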

-

Interesting! I did scour the product page and specs hoping for a Wi-Fi interface and an associated control app, but alas. It also uses the USB-C port for Ethernet control, so if you're recording over USB-C you can't use it for control as well. The alternative would be to use an SDI recorder/monitor and keep the USB-C port for control, but that's a significant step up in cost for the recorder. Of course, if you paired it with a BM Video Assist recorder, that would be a sweet package for those who can deal with rigged-up camera packages.

-

I just downloaded it... it has shutter angle!
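For anyone new to shutter angle: it expresses exposure time as a fraction of the frame interval, so the shutter speed follows from the frame rate as exposure = (angle / 360) / fps. A minimal sketch of that conversion (the function name is just for illustration, not anything from the BM app):

```python
from fractions import Fraction


def shutter_time(angle_deg: float, fps: float) -> Fraction:
    """Exposure time in seconds for a given shutter angle and frame rate.

    A 360-degree shutter exposes for the whole frame interval (1/fps);
    smaller angles expose for a proportional slice of it.
    """
    if not 0 < angle_deg <= 360:
        raise ValueError("shutter angle must be in (0, 360]")
    # Going via str() keeps decimal inputs like 172.8 or 23.976 exact.
    angle = Fraction(str(angle_deg))
    rate = Fraction(str(fps))
    return angle / 360 / rate
```

So `shutter_time(180, 24)` gives 1/48 s (the classic 180-degree rule), and the nice thing about working in angles is that it stays at "half the frame interval" when you switch to 25, 50 or 60fps.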

-

Can you use the new Micro Studio Camera as a 4K60 stand-alone camera? https://www.blackmagicdesign.com/uk/products/blackmagicmicrostudiocamera It has a battery backup, so can you just run it from the battery permanently? It can record BRAW to USB, it has HDMI out for monitoring (like the previous model).

-

Just looking at the iPhone app, and there are some interesting things in here: https://www.blackmagicdesign.com/uk/products/blackmagiccamera/techspecs/W-APP-01

Apple ProRes 4444
Automatically generates 1920 x 1080 HD HEVC proxy files for each recording
24fps, 25fps, 30fps, 48fps, 50fps, 60fps
False Color
Guides
Display LUT
Timecode Recording - Record Run or Time of Day (TOD) timecode

Some of these are likely dependent on specific iPhone models, but it looks like they've been digging into the iPhone camera spec and simply unlocking everything they think would be useful. Then I'd just need to figure out how to launch the app without it taking 17 steps; the default one is on the Lock Screen FFS.
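Record Run and Time of Day timecode are both just frame counts rendered as HH:MM:SS:FF; the difference is that Record Run only advances while you're recording, whereas Time of Day follows the wall clock. Here's a rough sketch of that rendering for the integer frame rates listed above, assuming non-drop-frame timecode (29.97/59.94 drop-frame counting is deliberately left out) and using hypothetical function names:

```python
def frames_to_timecode(frame_count: int, fps: int) -> str:
    """Render a frame count as non-drop-frame HH:MM:SS:FF timecode."""
    if fps <= 0 or frame_count < 0:
        raise ValueError("fps must be positive and frame_count non-negative")
    frames = frame_count % fps
    total_seconds = frame_count // fps
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    # Timecode wraps around after 24 hours.
    return f"{hours % 24:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def time_of_day_timecode(hours: int, minutes: int, seconds: float, fps: int) -> str:
    """Time of Day: derive the frame count from the wall-clock time."""
    frame_count = int(((hours * 60 + minutes) * 60 + seconds) * fps)
    return frames_to_timecode(frame_count, fps)
```

With Record Run you'd keep a running `frame_count` that only increments while recording; with Time of Day, clips from several devices set to the same clock line up in the edit.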

-

😆😆😆 Love your work, as always!! Looks like they've left room for the Pro version to be released in next year's new camera announcement... unless you can't fit a FF sensor and ND mechanism into that chassis? Of course, the real issue is the acronyms! P6K could be referring to the old S35 6K model, the new FF model, or the new S35 G2 model where the person dropped the G2 part for brevity! Oh the confusion!!

-

I really hope that you're wrong, but your points make sense and it might simply be how the economics play out. I'd hope that even if they released an updated GX camera, they could retain the same body/battery/EVF/etc, but offer improved bitrates / frame-rates / profiles. A GX95 that took advantage of most/all of the modes on the new sensors would be wonderful. In terms of the idea that smartphones killed cameras of this size, it's a fundamental misunderstanding of how things work, but one that the industry seems to make time and again.... the idea that serious people want a large camera and people who want a small camera don't want quality. I shoot with the GX85 and iPhone 12 Mini, and I can assure you, the difference in image quality is night and day. What do I mean by night and day? I mean, the image quality of the GX85 shooting at night is better than the iPhone shooting during the day!

-

This is my impression as well, and it's the main difference I see between the G and GX style bodies. Even the width isn't so bad, considering that the minimum width of your camera when viewed from the front is the width of the lens + the width of your hand on the grip side. A seriously low-profile shotgun mic would be spectacular as well: the commercial offerings are all dramatically larger than they need to be, except perhaps that Sony hot-shoe one, which seems to be more compact.

-

I did note this from a few weeks ago: https://ymcinema.com/2023/08/24/panasonic-develops-a-variable-built-in-electronic-nd-filter/ Who knows what camera that's destined for, but at least they're thinking about it. The more I think about it, the more I think the G9 looks like an "Oh shit, the GH6 is tanking... what can we super quickly get out there to keep MFT folks in the system?"

-

Best of luck in your return to the industry! 🙂 In terms of creativity and movies that are duds, I think there are lots of reasons why someone might go from being great to having a few 'misses'. Obviously losing touch with our shared humanity is one reason, but it might just be that they are tackling a difficult subject and didn't get it right, or they managed to execute their vision but others just didn't gel with it, or it got watered down by the studios or through creative differences within the team that couldn't be resolved. We're all very different in how we understand the world and how the various concepts in it are related to each other. Making a film that reaches a broad audience in a meaningful way but isn't overly simplistic is a very tough call. There's a whole thing about how the mid-budget movie segment is in rapid decline and studios are now trying to create characters so that instead of an actor being the thing that's famous it'll be Batman / James Bond / etc that's famous and they can just rotate actors through the roles ditching them when they ask for too much money. I've posted this video before, but it's a good critique of how things have become:

-

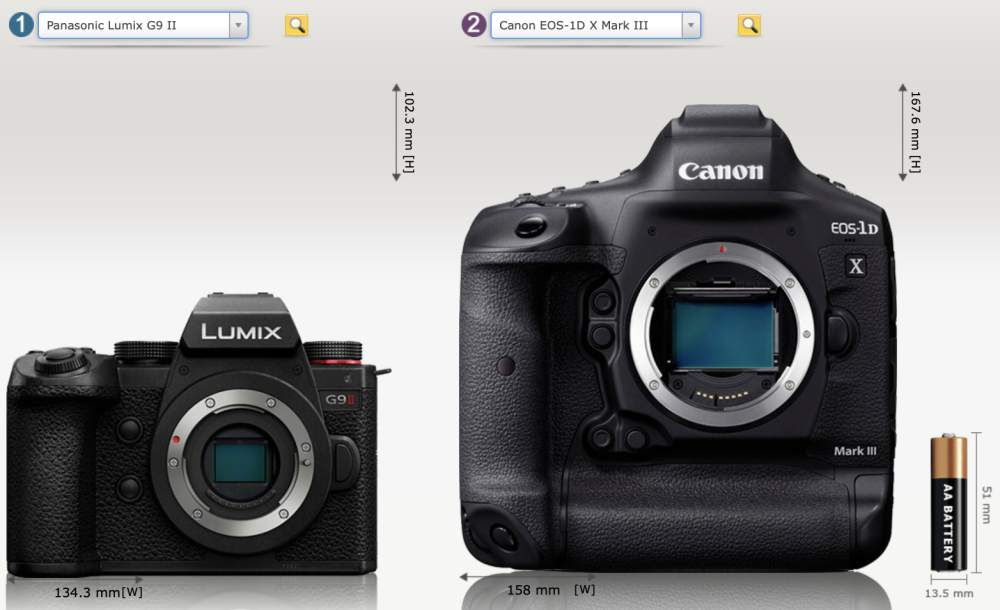

What's the point of having a FF sensor inside a full frame body? What's the point of having ANY camera the size of a full frame body? I can think of a great many reasons why having a slightly larger bodied camera would be advantageous beyond being able to stick a bigger sensor in it. Almost all of those advantages also apply if the sensor was an MFT one. The size differences between camera bodies do make you question what the things are that take up space. I was looking only this morning at the size differences between the GX85 and the GM1, and was stunned at how much smaller it is again.... Of course, no camera is the Tardis and things like cooling for processing and compression, IBIS, batteries, EVFs, etc do all take up some space. One thing that makes me seriously question the whole thing is smartphone cameras. Think about it, they're smaller than an SD card, and obviously the sensor in them is very small, but all the image processing and compression etc is done in there too, and once the data has been read off the sensor then data is data. If I placed the camera module from a smartphone on my desk, then put next to it a "proper" sized sensor, battery, lens, SD card, and screen, I'm still absolutely nowhere near the size of the GX85 even, let alone a 1DX! I can't fault Panasonic for putting an MFT sensor in a larger body, the thing that will be sad is if they ONLY put it in a larger body, and don't refresh the smaller form-factors. I love my GX85 but there's no doubt that the ergonomics and array of buttons on the GH5 or XC10 are a superior user experience. If Panasonic released an updated GX line camera, and also released an updated GM line camera that had similar performance as the GX85, I'd be very tempted by the smaller GM size, but it's almost too small to be able to hold steady and by the time you put a lens on it other than the 12-32mm or 14mm or 15mm pancake lenses then the difference from a GX sized body would almost be irrelevant.

-

Damn! What on earth is that thing?

-

I was thinking of making a Peaky Blinders joke as I read through the thread, but you beat me to it. I laughed so hard at this I started to cry a little... nice work as always!!

-

I continue to participate in those discussions because I figure that there are enough people lurking who have the same question that it's worth answering it anyway. In terms of them being wankers, I think one thing that we often forget is how completely overwhelming video can be. I came to video from stills, and I'm a technical person who reads a lot, but even I completely underestimated the sheer number of topics involved and the depth of each topic. Also, cameras are so compromised when it comes to video that simple questions like "what camera should I buy" involve considering literally dozens of trade-offs per camera, and that's before you talk about the wider ecosystem like lenses and accessories etc. I can't think of any other product that is as complicated to buy as a video camera, where the tiny details make so much of an impact on real-world use. I think a lot of those people asked a simple question and are simply overwhelmed by the replies they get.

-

I'm sorry to hear about what you experienced. I take a pretty pessimistic view (I think of it as realistic, but others might not) that power corrupts, and because those in power are able to avoid consequences, they tend to behave however they want. Some estimates are that 4% of people are sociopaths and 1% are psychopaths, which means a lot of people who gain power will use it to take advantage of others and to maintain that power. Priority #1 of people who do things that society rejects is to hide that from the world, and priority #1 of powerful people is to keep that power. We are animals after all, and further to that, we are the descendants of those that "won" at the reproducing game, which doesn't only incentivise being nice and considerate. In terms of whether fame kills creativity, I think it would if you lose touch with the parts of the human experience we all share. It's well known that rich / powerful people still suffer from mental illness and commit suicide, so those things don't separate you from the human experience, but if you get caught up in ego and trying to act like you're better than everyone else, or that your life is somehow better, then your work will no longer include the things that we all share... it takes courage to reach into yourself and connect with the uncomfortable feelings that we would prefer weren't there. I think it also includes staying connected to why you do something in the first place. I see lots of good YT people get into YT to be creative and then get caught up in the algorithm or in product reviews and the commercial aspects of it, and because they're not motivated by those things they get burnt out. I used to think that in life you got energy from sleeping and then doing things during the day would make you tired.
Now I realise that this is true physically, but mental energy comes from doing what you are inspired to do, so if you're mentally tired it's because you're not doing things in accordance with your own values and preferences. Of course, if you're a creative person running their own business then practical considerations mean that you might have to talk to clients, do the finances, etc, but if you're inspired by the business enough creatively then that motivation should power you through those "required" tasks. As soon as those things are seriously getting you down, it's because you're not getting mentally inspired elsewhere enough to carry you through those things.

-

😂😂😂 Fair enough. The issue, I think, is that while you have perspective and are ignoring these sorts of over-reactions, I don't think the rest of the world, or the manufacturers' marketing departments, are, so by repeating this BS over and over I think it makes it come true. It certainly doesn't push things in the right direction. I suppose it really depends on what happens in the next year or so.

Scenario 1: Panasonic releases an updated GX camera, which satisfies those who want a smaller body, and an updated GH camera, which has the things that this G9 doesn't have. If this is the case then the G9ii will be part of a sensible line-up of cameras, will offer good value for money, and will allow users to not have to span multiple mounts. This release might have been pushed up because of the failure of the GH6.

Scenario 2: There aren't updates to the GX or GH line for a while, and this G9 will represent Panasonic putting their sensor in a body that is unnecessarily large, but is shared with another model, and it will have been a cost-cutting measure.

Indeed, a museum camera is my main priority. However, I'd prefer to have a museum camera and a larger, more powerful camera with the same lens mount, rather than having the museum camera be MFT and there being no powerful MFT camera. The FP is a great example of what is possible, of course. I wonder how large it would be if we took that form factor and put an IBIS mechanism in it, and a chip that encoded a large range of compressed codecs. I'd also be happy if it had a slight bump forwards as a grip if they used that space to make the battery larger.

Perhaps the point of disagreement is over these two statements:

Small MFT cameras should exist because this is an advantage of the sensor size
OR
ONLY small MFT cameras should exist because this is an advantage of the sensor size

I believe it's the first one.
The advantages I've outlined previously (and others I didn't comment on) apply to MFT regardless of what size the body is.

Ah, I thought it was the 16mm, my bad. But, going back to the point about lens size, I still maintain that the pursuit of optical perfection is what made the lenses larger, and that by constantly wanting sharper lenses and higher resolution systems, everyone drove the lenses to get larger.

Nice! You should be less shy about talking about the work this company has done to advance the technology... I tell people all the time that if the problem is people who don't know anything constantly doing self-promotion, the answer isn't for people who do know things to sit quietly and say nothing.

LOL about your testing setup. I do think that manufacturers are missing a trick with IBIS. The GoPro does EIS, which involves moving the sensor crop around on the captured image, which results in the same wide-angle wobble that IBIS exhibits, but they compensate for the lens and so the results are buttery smooth. This is the same compensation mechanism that is used in 360 cameras too. All Panasonic or others would have to do is stretch the image in accordance with the current position of the IBIS mechanism and the FOV of the lens, and the wobble would be completely eliminated. This is up there with rolling shutter (RS) compensation too, where the camera would be able to compensate for the motion of the camera and the IBIS mechanism that occurred while the shutter was moving, at least for short shutter speeds where RS is visible. I looked for software that would do stabilisation that accounted for lens FOV and the only option I found cost several thousand dollars, when it could just be built into the camera, like it is with GoPro and smartphones already.

Yeah, the GH6 was a bit of a lemon. I suspect that them releasing the G9ii is to try and recover from it by releasing a powerful full-size bodied camera.
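To illustrate why the IBIS/EIS wobble described in this post gets worse on wider lenses, here is a simplified 1-D pinhole-model sketch. It assumes a pure yaw rotation, no lens distortion, and uses a hypothetical function name. A rotation of θ moves a point at field angle α from f·tan(α) to f·tan(α+θ), but a translation-only correction (a sensor shift or a plain crop shift) moves every point by the centre's displacement f·tan(θ). What's left over at the frame edge is the wobble, and because it depends on focal length, a correction that knows the lens FOV could remove it by remapping the image rather than just shifting it.

```python
import math


def edge_residual_px(focal_mm: float, half_width_mm: float,
                     rotation_deg: float, px_per_mm: float) -> float:
    """Residual image shift (pixels) at the frame edge after a translation-only
    correction, in a 1-D pinhole model with a pure yaw rotation."""
    theta = math.radians(rotation_deg)
    # Field angle of a point imaged at the edge of the frame.
    alpha = math.atan(half_width_mm / focal_mm)
    # Where that point lands after the camera rotates by theta.
    moved_mm = focal_mm * math.tan(alpha + theta)
    # A pure shift only compensates the centre of the frame.
    centre_shift_mm = focal_mm * math.tan(theta)
    residual_mm = moved_mm - centre_shift_mm - half_width_mm
    return abs(residual_mm) * px_per_mm


if __name__ == "__main__":
    # Assumed MFT-ish numbers: 17.3 mm wide sensor, 3840 px across, 0.5 degree shake.
    half_width, px_per_mm = 17.3 / 2, 3840 / 17.3
    for focal in (12, 25, 50):
        wobble = edge_residual_px(focal, half_width, 0.5, px_per_mm)
        print(f"{focal} mm lens: ~{wobble:.1f} px edge wobble")
```

Under these assumptions the 12mm case comes out several times larger than the 50mm case, which matches the experience that IBIS looks fine on normal lenses and wobbly on wides.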
The thing I don't understand is why when Panasonic releases an MFT camera people don't like (or releases a FF camera) everyone jumps up and down declaring that MFT has been abandoned or ruined, but when Sony released the FX30 no-one said that they were abandoning or ruining FF. It's very strange to me. @BTM_Pix remember I said that people get dramatic?

-

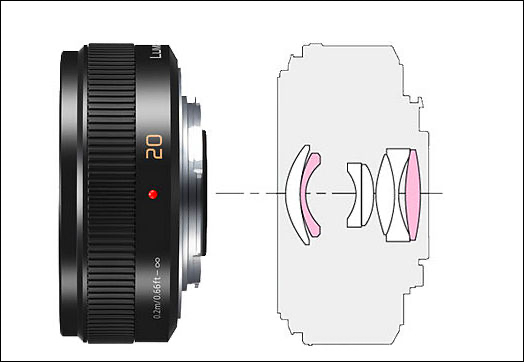

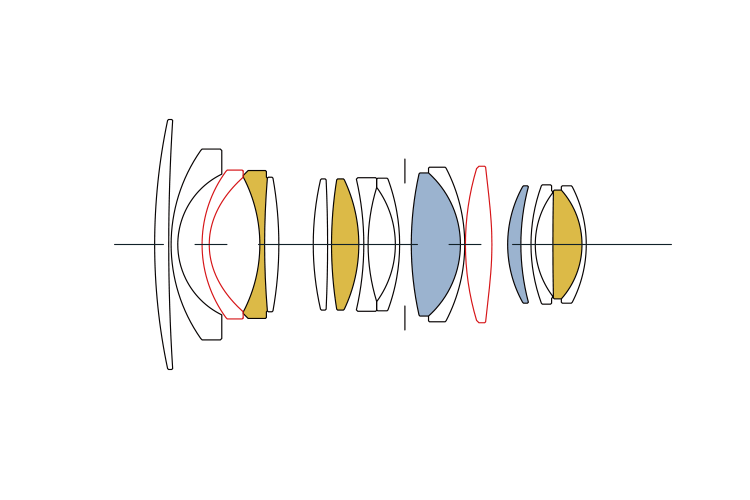

Note: none of my commentary is personally directed at you, I'm merely replying to you as a way to share my thoughts 🙂 It's useful to talk about the various merits of different systems in more depth than the typical "meme-deep" level of analysis in discussions.

It wasn't you that was doing it, but it was definitely that dramatic. If I had the time and inclination I could go back and find a torrent of "MFT is dead" "if the GH6 isn't PDAF then Panasonic will go out of business" "4K isn't enough" "6K isn't enough" "36MP stills might be enough, but if I could get 48MP...." "you need at least 13 stops of DR" "I'm selling everything because I can't afford to stay invested in a system that is dying" etc. from other forum users. If you don't remember those nuggets of ridiculousness over the last 5 years of forum posts then you're a much more happy-go-lucky and forgive-and-forget type of person than I am! I had to stop rolling my eyes when I read those lest I develop some sort of permanent vertigo-like medical condition.

Everyone seems to have decided that the point of MFT was smaller cameras, but where was that declared? And who has the power to decide that? If we start dictating the size of the camera by the size of the sensor then Apple has been making iPhones far too large for their tiny sensors, and so the next iPhone should have a 2" display maximum! Maybe the G9 should have been a lot smaller and a lot more difficult to use for people with large hands! Personally, I want cameras to get better and smaller but not in that order, and I'm definitely on record with those preferences, but to say that size was the point of MFT is, I think, missing something. When you flex the size of the sensor you change more than just the size of the camera body you're wrapping around it: you start trading off things like exposure vs low-light vs DoF vs heat vs mass (important for IBIS) and probably a bunch of other things too.
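The DoF side of that trade-off can be made concrete with the usual crop-factor equivalence: for the same framing and subject distance, depth of field roughly matches when the f-number is scaled by the ratio of sensor diagonals. A rough sketch, assuming nominal sensor dimensions (real cameras vary by model and recording mode), comparing diagonals even though aspect ratios differ, and ignoring differences in lens design:

```python
import math

# Nominal sensor dimensions in mm (assumed; actual active areas vary by camera/mode).
SENSORS_MM = {
    "FF": (36.0, 24.0),
    "MFT": (17.3, 13.0),
    "S16": (12.52, 7.41),
}


def diagonal(name: str) -> float:
    """Sensor diagonal in mm."""
    width, height = SENSORS_MM[name]
    return math.hypot(width, height)


def equivalent_f_number(f_number: float, source: str, target: str) -> float:
    """f-number on `target` giving roughly the same DoF as `f_number` on `source`
    for the same field of view and subject distance."""
    return f_number * diagonal(target) / diagonal(source)


if __name__ == "__main__":
    for n in (2.0, 2.8):
        mft = equivalent_f_number(n, "FF", "MFT")
        s16 = equivalent_f_number(n, "FF", "S16")
        print(f"FF F{n} ~ MFT F{mft:.1f} ~ S16 F{s16:.1f}")
```

By this measure, FF wide open at F2.8 behaves roughly like MFT at F1.4, which is one way of seeing why fast FF glass can look so shallow.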
We have sensors that range from S16 to 65mm (and further), and you could say that there is no reference so everything is relative, but it's not relative because there is a reference: human vision and perception. We tend to see across a range of brightness levels, have various depths of field as our iris opens and closes and we focus closer and further away, and have noise and loss of colour perception in very low-light conditions. I would say that the best sensor size would be one that is able to fully reproduce that behaviour, with a bit of extra leeway for creative purposes and practicality of use, of course. If you look at the current market and the low-light and DoF vs distance performance, and take into account lens availability, then I think you end up somewhere around MFT/S35. Modern FF camera / lens combinations wide open at F2 or F2.8 seem too shallow in DoF to me, to the point of looking unnatural, and are definitely way shallower than what is used in almost all tasteful story-driven cinema. That combination is also far more capable in low-light than is needed to recreate anything remotely natural-looking. On the other end of the spectrum, S16 seems to be difficult to work with in low-light, getting shallow-enough DoF is too expensive, and the lens selection is too narrow to match human vision. ....and we all know that smartphones have no chance with either of these things, which is why they're going computational to try and fight the laws of physics.

The 14mm is a much simpler lens: 7 elements in 5 groups. The Sigma is much more complex: 16 elements in 13 groups. The difference I see between them is the pursuit of optical perfection, which makes lenses significantly larger, especially when manufacturers switch to more complex optical recipes to make them better.
Ironically, the more that people talk about sharp lenses and high resolutions and shallow DoF, the bigger manufacturers make the lenses. Not to say I told you so, but I did, and everyone screwed it up for me as well as themselves.

Of course! I'd forgotten you mapped this territory early on! That's a seriously cool feature - I must have missed that when you were talking about it. Do you have links to the relevant info? Please post 🙂

Yes, IBIS: another area where MFT has a huge advantage over FF, but one that the manufacturers deliberately hide and forum folks steadfastly refuse to learn about. Manufacturers rate IBIS in STOPS, which is the amount any vibration is reduced by while the mechanism is working within its mechanical limits. As soon as you move the camera too far and the mechanism runs out of travel, the movement can't be reduced any more. A FF camera needs to move its sensor twice as far as an MFT camera to achieve the same motion reduction, because for the same field of view the FF lens has twice the focal length, so the image moves twice as far across the sensor for the same camera shake. If the sensors are the same thickness and density, a FF camera also needs to move around four times as much weight, since the sensor has roughly four times the area. This is also likely to reduce how fast the mechanism can move the sensor, and a slower mechanism lets through more high-frequency motion, which is the most aesthetically offensive motion. This all takes battery power too. As someone who shoots exclusively hand-held with IBIS cameras, I can tell you that the stabilisation fails because the movements are too large for the IBIS mechanism, not because there aren't enough stops of reduction. I have never used a FF camera with IBIS, but I find it unlikely that they've scaled up the IBIS mechanism to twice the size of the MFT one and juiced it up to move the sensor as quickly, so it will likely perform far more poorly in real life than an MFT equivalent.
But, this is yet another thing that isn't talked about, isn't understood, and no-one tests in anything remotely approaching a scientific way.
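The IBIS scaling argument in this post can be sketched numerically. This assumes matched fields of view (a 25mm lens on MFT vs a 50mm lens on FF), a simple pinhole model where the image shift is f·tan(θ), and sensors of equal thickness and density so that mass scales with area. The stops conversion just uses the idea that each rated stop is a 2x reduction in residual motion. All names and example values are illustrative.

```python
import math


def required_travel_mm(focal_mm: float, shake_deg: float) -> float:
    """Sensor travel needed to cancel an angular shake (pinhole model)."""
    return focal_mm * math.tan(math.radians(shake_deg))


def area_ratio(a_mm: tuple[float, float], b_mm: tuple[float, float]) -> float:
    """Ratio of sensor areas; a proxy for mass at equal thickness and density."""
    return (a_mm[0] * a_mm[1]) / (b_mm[0] * b_mm[1])


def reduction_factor(stops: float) -> float:
    """Each rated stop corresponds to a 2x reduction in residual motion."""
    return 2.0 ** stops


if __name__ == "__main__":
    shake = 1.0  # degrees of hand shake (assumed)
    mft_travel = required_travel_mm(25, shake)
    ff_travel = required_travel_mm(50, shake)
    print(f"MFT 25mm: {mft_travel:.2f} mm, FF 50mm: {ff_travel:.2f} mm "
          f"({ff_travel / mft_travel:.1f}x)")
    print(f"FF/MFT sensor area: {area_ratio((36, 24), (17.3, 13)):.2f}x")
    print(f"5 stops = {reduction_factor(5):.0f}x reduction, but only within the travel limit")
```

Note that the stops figure says nothing about travel: a mechanism can be rated at many stops and still hit its end-stops on a big hand-held movement, which is the failure mode described above.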

-

If the lenses were already good then maybe there was no reason to update them? It depends on whether you do stills or video, but in higher-end moving images the best lenses aren't the razor-sharp ones, they're ones with a bit of diffusion and a bit of character. You might be chasing the latest sharpest fastest lenses, but if you're interested in a higher-end look, then that's not the way to go. Often the mid-range MFT lenses have a good amount of the right optical aberrations. I spoke about that here: https://www.eoshd.com/comments/topic/58706-a-manifesto-for-the-humble-zoom-lens/ For example, look at the following 4 shots. Two look sharp and detailed with lots of contrast and saturation, and two look more vintage. The two that look sharp/modern are the Panasonic 12-35mm F2.8 zoom and the Voigtlander 42.5mm F0.95 prime, both expensive lenses. The two that look more vintage are the mythical Helios 44-2 58mm F2 and the 14-42 kit lens, the latter sitting somewhere in between the modern and vintage looks. The kit lens is easily accessible, it's a zoom lens, it has OIS, it has AF, and it's dirt cheap. You can also combine it with diffusion filters to customise the amount of contrast etc. In many ways, it's better than the 12-35mm F2.8 and better than the Helios.

-

Yeah, it was a shame, and I'm not optimistic about the 15, but it might be better than the 11. My suggestion is to record at the highest bitrate and then put it onto a lower-resolution timeline to downscale all the digital nastiness. With enough downscaling all cameras look alike. Judging by this criterion, the latest iPhones are almost at 720p.
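As a rough illustration of why downscaling hides so much, here is a stdlib-only sketch that box-averages a synthetic noisy frame. It assumes independent per-pixel noise and a simple k×k box filter; real NLEs use other scaling filters, and compression artefacts aren't independent noise, so the real-world gain will be smaller. Averaging k×k pixels cuts independent noise by roughly a factor of k, so a 2x downscale (e.g. UHD to HD) roughly halves it.

```python
import random
import statistics


def box_downscale(frame: list[list[float]], k: int) -> list[list[float]]:
    """Downscale a 2-D frame by averaging each k x k block of pixels."""
    height, width = len(frame), len(frame[0])
    out = []
    # Any leftover rows/columns that don't fill a whole block are dropped.
    for y in range(0, height - height % k, k):
        row = []
        for x in range(0, width - width % k, k):
            block = [frame[y + dy][x + dx] for dy in range(k) for dx in range(k)]
            row.append(sum(block) / (k * k))
        out.append(row)
    return out


def noise_std(frame: list[list[float]]) -> float:
    """Population standard deviation of all pixel values."""
    return statistics.pstdev([v for row in frame for v in row])


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic flat grey frame with Gaussian noise (sigma = 0.05).
    frame = [[0.5 + rng.gauss(0, 0.05) for _ in range(200)] for _ in range(200)]
    half = box_downscale(frame, 2)
    print(f"noise before: {noise_std(frame):.4f}, after 2x downscale: {noise_std(half):.4f}")
```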

-

I suspect that it is to do with their own mental and emotional place. If someone is able to connect to their own emotions deeply then they can express themselves in a way that is authentic, which people respond to because we instinctually recognise it. It's hard for someone to stay connected with themselves though, especially if they get commercial success during that time, so that's why they often stop making content of this quality. It also explains why people who are reclusive and take time and release projects far apart can often extend these periods of creative authenticity over long periods. That's my take on it anyway.

-

Yeah, that one is surprising! Actually, considering how well Sony integrate with their lenses (e.g. focus breathing compensation), their IBIS might be really tuned to their lenses individually. That would be a huge advantage in tuning the resulting images.

-

The last one recorded Prores but it looked like crap. I thought if they were going to implement a professional codec they'd provide a professional image, but it was atrocious... turns out the best thing about Prores on other cameras was that it guided the manufacturer to be tasteful and professional when processing the image, it wasn't the codec itself.

-

Panasonic has partnered with DJI to integrate their LIDAR technology, which when coupled with a focus motor, can do AF on any manual focus lens. The technology is coming. Ironically, if Panasonic releases this soon, they'll be the only manufacturer who provides easy AF on MF lenses, and then we'll see if all the Sony/Canon PDAF folks obsessed with plant-eye-detect-AF switch over because Panasonic would have the best AF in town....
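The general idea behind distance-sensor-driven focus on manual lenses is to measure the subject distance and then map it to a focus-motor position using a per-lens calibration. Here is a hypothetical sketch of that mapping step. The calibration values are invented for illustration, not measured from any lens or taken from DJI's or Panasonic's systems. It interpolates in diopters (1/distance) because, for a simple thin lens focused well beyond its focal length, the focusing extension is roughly proportional to 1/distance.

```python
import math

# Hypothetical per-lens calibration: (subject distance in metres, motor position 0..1).
CALIBRATION = [
    (0.3, 1.0), (0.5, 0.72), (1.0, 0.45), (2.0, 0.25), (5.0, 0.1), (math.inf, 0.0),
]


def to_diopters(distance_m: float) -> float:
    """Convert a distance to diopters (1/m); infinity maps to 0."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def motor_position(distance_m: float, table=CALIBRATION) -> float:
    """Interpolate a focus-motor position from a measured distance.

    Interpolation is linear in diopters and clamped to the calibrated range.
    """
    points = sorted((to_diopters(d), pos) for d, pos in table)
    x = to_diopters(distance_m)
    if x <= points[0][0]:
        return points[0][1]
    if x >= points[-1][0]:
        return points[-1][1]
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        if x0 <= x <= x1:
            return p0 + (x - x0) / (x1 - x0) * (p1 - p0)
    raise AssertionError("unreachable: x lies within the calibrated range")
```

In a real system the calibration would come from the user racking the lens through its range, which is exactly why this approach can work with any manual focus lens.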