
Everything posted by kye

-

I might be wrong about it being digital; you'd have to watch the video in detail to confirm. As for doing things in-camera vs in post: if the output file has a limited bitrate, there's a non-trivial difference between two approaches. In one, the camera scales the RAW data and then compresses it with the full bitrate of the codec. In the other, you take the already-compressed file out of the drone into post, crop into that image, and effectively discard some of that limited bitrate. The disadvantage of doing things in-camera is that you're stuck with them in post, whereas doing it in post lets you exactly match the zoom with the crop. Ideally you'd shoot RAW and then it wouldn't matter, but that's not the price point we're talking about with these drones!
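To put a rough number on the cropping point, here is a minimal Python sketch. It assumes the encoder spreads its bits evenly across the frame. That is a simplification, because real codecs allocate bits according to scene complexity. The function name and the 100 Mbps figure are hypothetical and for illustration only.

```python
def retained_bitrate(total_mbps: float, zoom: float) -> float:
    """Rough bitrate left covering a centre crop made in post.

    Hypothetical simplification: bits are assumed to be spread evenly over
    the frame. A zoom of 2 keeps 1/2 the width and 1/2 the height, so only
    1 / zoom**2 of the frame area (and, under this assumption, of the bits)
    survives the crop.
    """
    if zoom < 1:
        raise ValueError("zoom must be >= 1 (a crop can't add pixels)")
    return total_mbps / zoom ** 2


# A hypothetical 100 Mbps file cropped 2x in post:
print(retained_bitrate(100, 2))  # 25.0
```

By contrast, if the camera does the 2x zoom from RAW before encoding, the zoomed framing gets the whole 100 Mbps under the same assumption.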

-

Sorry, but that video is awful - I had to stop watching before the end. I believe that he likes HLG better than SLOG, and he may well be right about it, but it's very strange that he uses film-emulation LUTs and then constantly jumps between controls that weren't at their default settings. Plus his comment that "SLOG gives you purple shadows and you can't do anything about that" suggests one of two things. Either he doesn't understand the basics of using the Lift wheel or the Curves panel, or he doesn't realise that the tint is likely coming from the film-emulation LUTs. Either way, no-one should be taking grading and colour advice from someone who can't add green to the shadows!! I am intrigued to learn more about the HLG profile though. It seems like it might be a way to get high DR while avoiding the low colour bit depth that is the downside of 8-bit log profiles.

-

Tony Northrup mentioned the dolly-zoom effect on the new Parrot drone, which zooms (digitally, I think?).

-

Totally agree. The current (small) batteries give flexibility to those who want a smaller setup. If you're carrying the camera around, having the batteries in a pocket or bag is much nicer than having the weight on the camera. If you want the extra battery capacity and don't care about size, then external power options are available. There's plenty of talk about it not being a 'pocket camera', which essentially comes down to how large your pockets are(!). But no-one would argue that size differences don't matter, otherwise we'd all be making travel films with old second-hand cinema cameras!!

Yes. I looked for such a thing and found the WD ones that back up SD cards, as well as some with USB ports. I could never get a straight answer on whether a CFast card reader would work, though, so I never bought one. Plus it annoyed me that it came integrated with an HDD - modular is better. Even if you have a laptop, if you're out in the dust you'd probably leave the laptop in the hotel safe and take this kind of device out into the field for use throughout the day. I've looked for this for my family and travel videos. My dad (who used to be in IT and is now retired) also asked me about such a device for downloading the footage from the SD card in his dashcam on his 4WD trips. So I think there's a market both inside and outside the film industry.

This sounds excellent - having something with flexible connections would be great. It should be able to connect almost any type of storage to any type of HDD and be powered by anything. USB is my preference, but I imagine Sony NP-F batteries or the DC power sockets on those huge external batteries might also be useful for some. If it can do all that, it would really hit the nail on the head. Make it work in rain, dust, heat, cold and other tough conditions, and give it a screen/LEDs that are easily visible in bright light, and you'd have a winner.
"It's the device that copies your data from the cards you have to the storage you have with the power you have in the conditions you're in" would be all the sales pitch I'd need to buy one.

Usually an option to verify the data after the copy should suffice (from a technical standpoint at least). It would use more battery for sure, but it would be a level of protection good enough for most. I'm assuming that if you're detecting read errors and write errors and verifying the data, then it would be trustworthy.

This would be a pretty good user experience for me:

1. You connect it to power and the POWER LED lights up.
2. You connect a card and the CARD light turns green (or red if there's an error).
3. You connect a drive and the DRIVE light turns green (or red if there's an error).
4. You press the copy button and the COPYING light turns on and starts blinking. Maybe the COPYING light could change colour if it detects an error, e.g. blinking orange.
5. It finishes the copy and unmounts the drives. Then the COPY COMPLETE light shows one of three states: green for "no errors" or "copy verified OK", amber for "errors detected but copy completed", or red for "copy failed".

If it had this level of communication I'd be fine with it. You'd probably need to build in some kind of recovery mode for when a copy is interrupted and you reset it and try again. This is absolutely a device I would buy if it does what you have described and doesn't have any silly design flaws.
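The copy-then-verify flow and the three COPY COMPLETE states described above could be sketched roughly as follows. This is a minimal Python sketch and not any real device's firmware. The function names, the use of SHA-256, and the mapping of results to "green"/"amber"/"red" are all assumptions made for illustration.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large video files don't need to fit in RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_and_verify(card: Path, drive: Path) -> str:
    """Copy every file from card to drive, then re-read both sides to verify.

    Hypothetical mapping onto the COPY COMPLETE light:
    "green" = every file copied and verified (an empty card also counts),
    "amber" = some files failed but others verified,
    "red"   = no file was copied and verified successfully.
    """
    files = [p for p in card.rglob("*") if p.is_file()]
    ok, failed = 0, 0
    for src in files:
        dst = drive / src.relative_to(card)
        try:
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copies data plus timestamps
            if sha256_of(src) == sha256_of(dst):
                ok += 1
            else:
                failed += 1  # write completed but the data doesn't match
        except OSError:
            failed += 1  # read or write error on this file
    if failed == 0:
        return "green"
    return "amber" if ok > 0 else "red"
```

One caveat: reading the destination straight after writing it may be served from the operating system's cache rather than from the drive itself. A real device would want to flush and re-read from the media (or remount the drive) before trusting the checksum.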

-

Totally agree. 5 years is also long enough for most of the late-adopters to see that others have gone before them and been fine, and that when they make the switch there are people they know who can help with questions etc.

-

I don't for a minute think that ARRI feels threatened by Sony, but if they did, and if they wanted to talk about how their cameras might be better, this would certainly do the trick!!

-

I did notice one thing in the video that got me thinking. In the final shot, where he overexposes the most and clips some sky detail, the histogram only reaches perhaps 70% of full scale. That seems strange to me - why wouldn't you map 100% exposure on the sensor to 100% brightness in the output file? It would give you more bit depth to play with, which I thought was the whole point of ETTR. Unless there's some aspect of S-LOG that needs to be calibrated between cameras, perhaps because other cameras have greater dynamic range? It just struck me as odd.
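To illustrate the bit-depth point, here is a small Python sketch. It assumes a full-range 8-bit file (codes 0-255) and ignores the legal-range encoding that many video profiles actually use. Under that assumption, if the brightest recorded value only reaches about 70% of full scale, roughly 179 of the 256 available code values are ever used. The function name is hypothetical, and the sketch doesn't explain *why* S-LOG is mapped this way.

```python
import math


def code_values_used(peak_fraction: float, bit_depth: int = 8) -> int:
    """Count the code values from 0 up to the brightest level actually recorded.

    Assumes a full-range encoding (0 .. 2**bit_depth - 1); legal-range video
    would shrink every figure further.
    """
    max_code = 2 ** bit_depth - 1
    return math.floor(peak_fraction * max_code) + 1


print(code_values_used(1.0))  # 256
print(code_values_used(0.7))  # 179
```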

-

I think you missed my point - my internet speed is totally acceptable, but my upload speed to the cloud servers is only a fraction of it. So the problem is the capacity of the cloud storage companies' servers. If you have fast internet and fast cloud upload speeds then that's great and good on you, but it doesn't mean that everyone in the world will experience the same thing.

-

Setting aside my word choice (maybe 'reluctant' isn't the best word), this agrees with what I've been saying in a number of threads recently: business decisions are very different from technology decisions. I even included a video on the last page of this thread showing that the pros at the Olympics need reliability and speed through the entire chain from capture to publishing. The link I posted started at the point where the photographer said that it took less than a minute for the photo of the winner to be taken, uploaded, processed, and published. If you review my previous posts you will see that I am far from taking a 'spec only' perspective. In fact, I often take a "photography is a business" perspective on here and get chewed out because I didn't say that everyone in the world should buy the latest shiny thing! If you're having trouble finding where I have said such things, just ask. I'll happily link to a few.

-

And they need to publish them pretty darn quickly. This was an interesting video, and I've linked to the point where they mention publishing in under 1 minute from the photo:

-

I definitely agree with you about the pro market's reluctance to adopt new technology. One thing I've seen MILCs offer that the pros would really value is a faster burst rate. IIRC Tony Northrup said they did a head-to-head at some kind of sports event with the A9 vs the top-end Canon and Nikon. The A9 got 20fps, while the others were both in the 10-12fps region. As I remember it, that was with full continuous autofocus. I think if you don't have C-AF enabled then CaNikon are much closer to the A9. I'm not sure what that kind of burst rate improvement is worth professionally, but it's definitely worth something.

-

Yes, this again.

-

Speaking of lost content because of equipment... there's an entirely lost scene from today's video due to sound issues:

-

It looked useful to me. Maybe it's not complete, but incomplete doesn't mean not useful. The one thing I think he should have focused on more is the equivalence in DoF, which is important for those who are interested in the FF look. Saying a lens is 17mm F2 (34mm equivalent) is very different to saying it's 17mm F2 (34mm F4 equivalent)!
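The equivalence arithmetic is simple enough to show as a sketch. The 17mm to 34mm example implies a crop factor of 2 (e.g. Micro Four Thirds), but that crop factor is inferred from the numbers and not stated in the post. The function and class names are hypothetical. Multiplying the focal length by the crop factor gives the equivalent field of view. Multiplying the f-number by the same factor gives the equivalent depth of field, which is the part that matters for the FF look. Exposure (brightness) at F2 is still F2.

```python
from dataclasses import dataclass


@dataclass
class Equivalent:
    focal_mm: float  # full-frame focal length with the same field of view
    f_number: float  # full-frame f-number with the same depth of field


def ff_equivalent(focal_mm: float, f_number: float, crop_factor: float) -> Equivalent:
    """Full-frame equivalent of a lens on a smaller sensor.

    Both values scale by the crop factor; this describes field of view and
    depth of field at the same framing, not exposure.
    """
    return Equivalent(focal_mm * crop_factor, f_number * crop_factor)


# 17mm F2 on an assumed 2x crop sensor:
print(ff_equivalent(17, 2, 2.0))  # Equivalent(focal_mm=34.0, f_number=4.0)
```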

-

Even if it isn't true, the fact you keep hearing about it means there's a perception out there about it (and it definitely used to be the case) and perception drives behaviours.

-

Maybe you're right; I guess it depends on the return on investment for the different options. Suppose they release two FF MILC cameras (as they have announced), and assume they're solid but not spectacular. Then for their next camera they can release either a MILC or a DSLR.

If Nikon decide to release a MILC, then it would make sense to make the best one they possibly can. It would compete with the A9 and equivalent cameras, and would prove that Nikon are still a competitor and that the pros who use Nikon are in safe hands, etc etc. But it would also be a statement that Nikon has moved away from DSLRs. The Nikon users who are late adopters may get their feathers ruffled, worrying that Nikon has abandoned the only tech they are familiar with. As technical as the photography industry is, photography is an art form, and many photographers are emotional/creative and operate almost exclusively on intangibles. These people may be very sensitive to feeling abandoned, especially when having to learn a whole new system starts to look a lot like changing manufacturer.

If Nikon decide to release a DSLR, then it would be a big gesture to the loyal middle and late adopters who are already Nikon customers. By then they will have designed and released two new MILC cameras, a new lens mount, and a number of lenses, and may have developed some technology that can be applied to a DSLR. Some customers still use DSLRs and need a new camera, but aren't ready to move to MILC and a new mount. For them it might be the stop-gap they need, and for some it could be good enough to stop them even thinking about changing brands. It would disappoint those who switched to Nikon MILC wanting an A9 competitor, but it's debatable how many of them would want to upgrade immediately.
The correct decision would be whichever of the above generates the most profit over the long term. They have to weigh poaching customers from other brands against cannibalising their own DSLR lines and losing existing customers to other brands. It's about long-term product and customer strategy, not just features or sales.

-

Being a professional YouTuber is about business, not IQ. Shooting in 4K costs more to back up and more time to edit. It also costs you out-of-focus shots: the time to re-shoot, the creative cost of the frustration, and sometimes a shot you can't replicate. Meanwhile most people don't have a 4K display, and those who do probably sit too far away from it to see the difference. So apart from the 'hype' bump of having a 4K logo, it is all negatives. All those costs translate directly into entire videos you could have made instead. If 4K adds 10% to your workload then you could potentially have made 10% more videos, and the real percentage is probably higher. For people like Casey / Peter / Matti that's quite a lot of views, as well as potentially viral videos. IQ matters for films where the aesthetic is important, but vlog-style YT videos are basically disposable and are only valuable for their time-sensitive content. As they say, coming second just makes you the first loser, so workflow speed is everything, especially in the tech space.

I think it's both - he cares about content and storytelling. The A7III would be a good technical choice, but I remember Casey saying that he's basically a luddite who only uses tech when he's forced to. Even his 'Tech Review Tuesday' videos are almost completely devoid of technical content - they're all about user experience. As above, learning something new has an opportunity cost of a number of videos.

-

Thanks Zak - that's a great link. Not as good as the OFX plugin, but as you say, a good alternative.

-

Not FF mirrorless! I think it comes down to timeframes and lenses. In terms of timeframes, it took what, 10-15 years for digital to "take over" from film? It's hard to estimate because people still shoot film today, and the first digital cameras were completely rubbish, but I think 10-15 years is a reasonable estimate. I'm not sure what the lens compatibility complications were between DSLRs and SLRs during that time, but I'm assuming there was some overlap. In that sense, the idea that MILCs might take 10-15 years to "take over" from DSLRs doesn't seem too far-fetched. I think we tend to get a bit carried away in these conversations - it's not like Nikon will release two FF MILCs and then never release a DSLR again! In a sense, the lens mount change is a separate issue. It just happens to be occurring at the same time as the move to mirrorless, and I think all the same logic applies. Even if they started a second line of DSLRs with a different lens mount, all the conversations about adapters, compatibility and flange distance would still be relevant. Needless to say, the quality of the adapter for this camera will play a huge part in how well it does commercially.

-

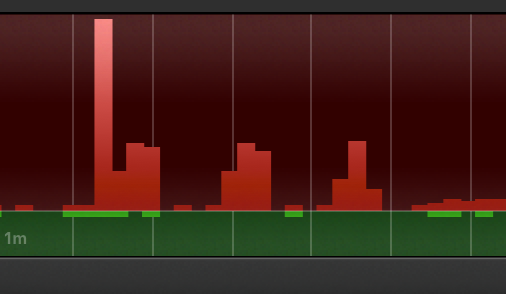

We have great internet (just tested it then and got 29Mbps / 1.45Mbps), but those companies don't have great upload servers here in Australia. I just uploaded a file to test it and got this transfer graph (red is upload): It clearly uploaded it in three bursts. It was amazing how fast it was actually - when I'm uploading something large the bursts are often 20 or 30s apart, and this is SugarSync, which I am a paying customer of. The rest are no different - tiny little spikes a very long time apart.
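Even before the server-side bottleneck, a 1.45Mbps upload link limits what 'unlimited' storage means in practice. Here is a rough Python sketch. The 100 GB library size is a hypothetical figure, and the function name is illustrative. The calculation also assumes the full line rate is sustained the whole time, which the bursty transfer graph above shows it isn't.

```python
def upload_hours(size_gb: float, upload_mbps: float) -> float:
    """Hours to upload size_gb gigabytes at a sustained upload_mbps megabits/s.

    Uses decimal units (1 GB = 8,000 megabits) and ignores protocol overhead,
    so real transfers will be slower than this best case.
    """
    megabits = size_gb * 8_000
    return megabits / upload_mbps / 3600


# A hypothetical 100 GB photo library at the measured 1.45 Mbps upload:
print(f"{upload_hours(100, 1.45):.1f} hours")  # 153.3 hours
```

That is more than six days of continuous uploading at best, with the connection saturated the whole time.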

-

One of the main issues with cloud storage is that it takes forever to upload anything. It says 'unlimited', but if you can't upload very fast then there's a very real limit. My wife has been backing up her photo collection overnight to OneDrive for maybe a year now and I don't think she's done yet. She doesn't upload every night, though, because whenever she starts uploading it kills the internet and everyone complains, so she turns it off again. The app doesn't have a schedule option either, so it's just a bad experience overall. I have Dropbox and SugarSync and both take a long time to upload things too. Cloud storage seems like a good idea, but in reality it has many other issues. Also, whenever you hear "cloud storage" you should substitute the words "someone else's computer" so that you're interpreting things correctly - it has very different connotations.

-

Not yet released, but here's some test footage vs iPhone X. Verdict - it's a great time to be alive and into video!!

-

TLDR: MILCs will gradually replace DSLRs, just as DSLRs replaced SLRs, and smartphones will replace cameras for the enthusiasts who had compact cameras.

* Mirrorless advantages over DSLRs
* MILC lenses vs DSLR lenses, and focus modes
* Enthusiast vs Pro Stills vs Pro Video customer segments vs mirrorless manufacturers: current state and predictions
* Industry sales are decreasing, and there is more competition
* Predictions of what each brand will do and what will happen commercially
* Sony are leading; CaNikon only need good-enough mirrorless to keep their current customers, then they can go after Sony in the future
* Consumers are the winners...

-

I'd view those things more as hygiene factors (absolute minimum requirements) than as weaknesses, but you're right that some people might view the trade-off as acceptable.

-

This is an excellent example of how people speak about the Sony cameras: a list of features, presented rationally and factually. I'm not criticising at all; this is how I view the camera - an input to the work I do in Resolve. By contrast, people talk about the colour of Canon and more recently Nikon. They talk about how vintage lenses render out-of-focus areas, about things like the Black Pro-Mist filter, and about the quality of the highlight rolloff. They talk subjectively about 'the look', and about how things are 'cinematic' or 'analog' or 'filmic', etc etc. They tend to say things like "I know camera X only shoots soft 1080, weighs 25kg, has 12 minutes of battery life, unusable sound, and menus you need an engineering degree to operate, BUT <insert subjective comments about the feel of the footage here>, and that's why I choose it". Different tools for different folks. Film-making is art, after all.

I guess my point is that Sony isn't a stand-out in aesthetic terms, but it does stand out at wringing every bit of technical capability out of a camera's components. That appeals to those who take a more objective approach to choosing a camera, and it's why people put up with the poor menus and other weaknesses.