
Posts posted by tupp

-

-

7 hours ago, newfoundmass said:

My fresnel attachment that I use on my Godox COB lights doesn't really do a good job in "full flood", though it works great when in spotlight (which is my primary use for it.) I imagine you'd get better results from a nicer one then?

Not sure what is meant by "doesn't really do a good job in 'full flood.'" A Fresnel attachment on an LED fixture might be disappointing to one having experience with tungsten and HMI Fresnels.

Regardless, the range of beam angles from a focusable fixture/attachment depends on a few variables.

With Fresnel fixtures, the source is always closest to the lens in the full flood setting. So, if the Fresnel attachment doesn't allow the LED to get close to the lens, then the beam angle will not reach its widest potential. Of course, there are safety reasons why the light source should not get too close to the lens.
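To put rough numbers on that, here is a minimal sketch using an idealized thin-lens model with a point source on the lens axis. The 120 mm focal length and 60 mm lens radius are made-up values, not measurements of any actual attachment, and a real Fresnel with a large COB source will not follow this paraxial formula exactly.

```python
import math

def beam_half_angle_deg(source_to_lens_mm, focal_length_mm, lens_radius_mm):
    """Half-angle (degrees) of the edge ray leaving an ideal thin lens.

    Only valid for an on-axis point source at or inside the focal length.
    """
    if not 0 < source_to_lens_mm <= focal_length_mm:
        raise ValueError("source must sit between the lens and its focal point")
    # Paraxial thin-lens model: slope of edge ray after the lens = R/u - R/f
    slope = lens_radius_mm / source_to_lens_mm - lens_radius_mm / focal_length_mm
    return math.degrees(math.atan(slope))

# Hypothetical attachment: 120 mm focal length, 60 mm lens radius.
for distance_mm in (120, 90, 60, 40):
    angle = beam_half_angle_deg(distance_mm, 120, 60)
    print(f"source {distance_mm:>3} mm from lens -> half-angle {angle:5.1f} deg")
```

In this model the beam is narrowest with the source at the focal point and widens as the source moves toward the lens, so a housing that stops the LED short of the lens also caps the flood angle.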

On the other hand, if one can just remove the lens/attachment, then it's best to use the fixture without the lens when one wants to go really wide and use all of the output from the source.

By the way, it is dangerous to run a tungsten or HMI Fresnel without its lens.

8 hours ago, newfoundmass said:

I'm not on major sets, just small commercial ones, and I've never seen a fresnel attachment being used with diffusion, so I find that interesting. I still feel like the op would be better off using more lights instead of a fresnel attachment. Not to say he shouldn't get one, as they're very fun to play around with and good to have in your kit, I'm just not sure that it's the solution he'd want to go with for diffusion?

I don't advocate using Fresnels to illuminate diffusion -- it doesn't make a lot of sense to do so. However, I see it on set often.

Fresnels and other focusable fixtures are more than "fun to play around with." If one knows how to use them, they are valuable tools that "play" often on set.

-

13 minutes ago, Mark Romero 2 said:

I think that when shooting through a scrim,

You call a diffuser a "scrim" -- do you have a background in still photography?

9 minutes ago, Mark Romero 2 said:

I think that when shooting through a scrim, you are correct. Moving the light far back enough to get an even spread is going to make the lights more or less equal, regardless if it is a fresnel or a cob with a dish.

Generally, a Fresnel will be significantly less efficient than an open-face fixture. A lot of the light is lost when it strikes the inside of the housing of the Fresnel fixture/attachment.

14 minutes ago, Mark Romero 2 said:

When bouncing off a HIGH ceiling, it might be easier to use a fresnel because you wouldn't have to raise the light as high to achieve the same light spread.

An open-faced focusable source would be better and more efficient in this situation.

-

On 11/11/2021 at 5:34 AM, barefoot_dp said:

From what I've read (ie what is advertised) they actually increase the light output over the bare bulb by focusing it all on one place

Fresnels don't focus all of the output in one place -- such fixtures can only focus the light that hits the lens. The light that hits the inside of the housing is wasted.

In this sense, many open-faced fixtures are more efficient than Fresnel fixtures as almost all of the light from an open-faced fixture comes out the front of the unit.
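As a rough illustration of how much light never reaches the lens, here is a small sketch. It assumes a small, flat Lambertian emitter (a reasonable first guess for a COB LED) centered behind a round lens, and it ignores lens transmission losses and any light recovered by the housing. The distances and lens radius are hypothetical.

```python
import math

def fraction_hitting_lens(source_to_lens_mm, lens_radius_mm):
    """Fraction of a small Lambertian emitter's output that lands on the lens."""
    half_angle = math.atan(lens_radius_mm / source_to_lens_mm)
    # For a Lambertian source, the flux inside a cone of half-angle t is sin^2(t).
    return math.sin(half_angle) ** 2

# Hypothetical 60 mm radius lens, emitter at a "flood" and a "spot" position.
for distance_mm in (40, 120):
    share = fraction_hitting_lens(distance_mm, 60)
    print(f"emitter {distance_mm:>3} mm back: {share:.0%} of the output reaches the lens")
```

Under these assumptions the share that reaches the lens drops quickly as the emitter is pulled back toward the spot position; the rest lands on the inside of the housing.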

On 11/11/2021 at 5:34 AM, barefoot_dp said:

- does this mean a COB LED would give more output with a fresnel pointed towards say a 4x4 silk, than it would just mounting a softbox directly on to the light? Or am I missing something?

No. The Fresnel will be dimmer than using the exposed LED with a reflector.

By the way, softboxes are generally a lot more efficient and controllable than naked diffusers. Naked diffusers also generate a lot of spill light.

On 11/11/2021 at 6:42 AM, newfoundmass said:

I don't think you'd get the spread you'd want / need since the light would be concentrated in one spot on the silk,

On 11/11/2021 at 2:43 PM, scotchtape said:

In your example, at the same distance if you used the fresnel vs softbox, the fresnel would produce a small hotspot which is totally useless for soft lighting.

Folks, Fresnel lights on set generally have a continuous "focus" range of beam angles from "spot" to "flood." The range of those beam angles varies with each fixture.

I can't recall all the times I've seen someone illuminate a diffuser with a Fresnel light, but almost always they focused the light to "full flood" to completely illuminate the diffuser.

17 hours ago, Grimor said:

Don´t forget that years ago most cinema lights were fresnel

Fresnels are used all the time on film sets.

9 hours ago, Mark Romero 2 said:

I think a fresnel could come in handy when:

1) Bouncing light

2) Illuminating through a window or cookie for a hard light / hard shadows.

3) When you need to use more than one light for a diffused key, and you can shoot two (or more) fresnel lights through a scrim (since we can't really fit more than one light in to a softbox, can we???)

4) When you want a spotlight effect

I use Fresnels and open-faced focusable fixtures directly on people and sets.

Keep in mind that the "spotlight" effect from a naked Fresnel will usually give a soft edge to the spot. If one wants a harder edge on a spot, use a snoot and focus the light to "full flood" (or use a good ellipsoidal/followspot or projection fixture that has minimal fringing).

3 hours ago, barefoot_dp said:

He literally says in that video that the light is too harsh on its own.

A lot depends on what you are trying to do. I could use that panel fixture naked in a lot of shoots.

3 hours ago, barefoot_dp said:

I own and use a couple of 1x1s and they are simply not large enough for a soft key. They're great for backlights, quick 'n' dirty portable/field/battery setups, or even as an eye light outdoors, but if you want soft light they come nowhere near competing with a COB light pushed through a softbox or scrim (at equivalent prices).

Softness in lighting is a matter of degree between a point source and completely surrounding your subject with a smooth light source. There is no definitive "soft light" and "hard light."
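One way to put a number on that "matter of degree" is the angle the source takes up as seen from the subject. This is only a sketch: treating apparent angular size as a stand-in for softness is a simplification, and the sizes and distance below are hypothetical.

```python
import math

def angular_size_deg(source_width_m, distance_m):
    """Angle (degrees) that a flat source of the given width subtends at the subject."""
    return math.degrees(2 * math.atan(source_width_m / (2 * distance_m)))

# Hypothetical setups, both 1.5 m from the subject.
print(f"1x1 panel (0.3 m wide): {angular_size_deg(0.3, 1.5):.1f} deg")
print(f"4x4 frame (1.2 m wide): {angular_size_deg(1.2, 1.5):.1f} deg")
```

Neither number is "soft" or "hard" on its own; moving either source closer or farther slides it along the same continuous scale.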

By the way, you can use a panel light in a soft box.

3 hours ago, barefoot_dp said:

Thanks Mark. In regards to points 1 & 3, and taking in to account Scotchtape's comments above, how would a fresnel compare to a bare COB (with a dish)? Would you not run in to the same issue Scotchtape mentions, that you need to back the Fresnel further from the wall or scrim in order to get an acceptable sized beam - resulting in more light loss than if you'd just put the bare COB closer to the bounce/scrim?

A lot depends on the optics in front of the LED, but, again, open-faced fixtures are almost always more efficient than fixtures using Fresnel/plano-convex lenses.

-

The stills look great!

-

14 hours ago, KnightsFan said:

I'll be "that guy" and point out CD's DR comparison actually shows the 12k slightly ahead of the 4.6k. In Braw, they measured 12.1 stops on the 4.6k G2 scaled to UHD. Downscaling the 12k to 4k gave a SNR to 12.4 stops, with a note that there is more data under the noise floor than the 4.6k has. I'd argue that the 4k-normalized number is better for comparison, because if you're looking to maximize SNR in 12k than obviously the 4.6k won't even compare.

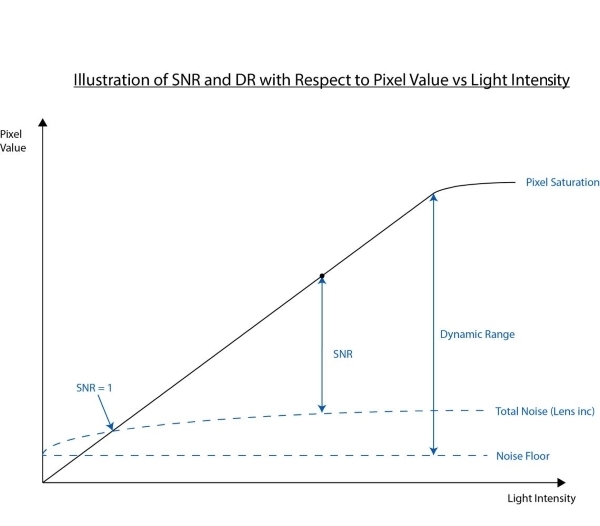

Keep in mind that although signal-to-noise ratio and dynamic range are similar properties, SNR will always be smaller than DR. Both the upper limits and noise thresholds differ in the way that they are obtained, especially in regards to capturing photons with image sensors.

This article breaks down the differences.
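Here is a minimal sketch of one common sensor-engineering way to define the two figures. It is not necessarily how the numbers quoted above were measured, and the full-well and read-noise values are hypothetical.

```python
import math

def dynamic_range_stops(full_well_e, read_noise_e):
    """DR: largest recordable signal over the noise floor in darkness."""
    return math.log2(full_well_e / read_noise_e)

def peak_snr_stops(full_well_e, read_noise_e):
    """Peak SNR: largest signal over the noise present at that same signal."""
    # Photon shot noise (sqrt of the signal) and read noise add in quadrature.
    noise_at_saturation = math.sqrt(full_well_e + read_noise_e ** 2)
    return math.log2(full_well_e / noise_at_saturation)

# Hypothetical photosite: 30,000 e- full well, 3 e- read noise.
print(f"DR:       {dynamic_range_stops(30000, 3):.2f} stops")
print(f"peak SNR: {peak_snr_stops(30000, 3):.2f} stops")
```

With these definitions the peak SNR comes out lower than the DR, because the noise at saturation includes photon shot noise on top of the read noise that sets the DR floor.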

15 hours ago, KnightsFan said:

Worth noting though, they showed that the 12k only does that well shooting in 12k and downscaling in post. Shooting 4k in camera produced only 11.3 stops.

A downscale with binning usually reduces noise, which increases the effective DR/SNR.

Perhaps the in-camera downscale is not binning properly, or perhaps it might be too difficult to do in the camera because of the sensor's complex filter array.
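For a sense of scale, here is a quick simulation of an ideal downscale. It assumes uncorrelated Gaussian noise and plain averaging of 3x3 blocks (12K to 4K is a 3x linear reduction), and it ignores the filter array and demosaicing entirely, so it is a best case rather than a model of any particular camera.

```python
import math
import random
import statistics

def binned_noise_ratio(factor, blocks=20000, seed=1):
    """Ratio of single-pixel noise to the noise left after averaging factor x factor pixels."""
    rng = random.Random(seed)
    count = factor * factor
    single = [rng.gauss(0.0, 1.0) for _ in range(blocks)]
    binned = [
        sum(rng.gauss(0.0, 1.0) for _ in range(count)) / count
        for _ in range(blocks)
    ]
    return statistics.pstdev(single) / statistics.pstdev(binned)

ratio = binned_noise_ratio(3)
print(f"noise reduced about {ratio:.2f}x, i.e. about {math.log2(ratio):.2f} stops")
```

In this ideal case the noise drops by roughly the linear scale factor. A downscale that falls well short of that would be consistent with the pixels not being combined as cleanly as plain averaging.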

-

1 hour ago, barefoot_dp said:

Well I've done a tonne of research and I think I've actually come back full circle to the projection lens. I was previously leaning towards getting some barn-doors and using cookies/flags, but that still seemed like a lot of extra gear (and space) dedicated to reducing spill.

I have a fair bit of experience creating patterns and slashes on backgrounds, and unless the desired pattern is complex, you might be better off just cutting what you need out of foamcor and just casting a shadow. Slashes are easy to make with zero spill using barndoors and/or blackwrap. With foamcor patterns the spill is not that big of a problem, if you are careful with your doors/snoot and if you leave a big border around the pattern.

If you really need to go with a projection fixture, consider a used Source 4 ellipsoidal. They have a nice punch and go for as low as US$175 on eBay (sometimes with the pattern holder included). In addition, they take standard theatrical patterns -- there are zillions of them. If you anticipate working mostly in close quarters, get the 36-degree lens (or the 50-degree lens).

-

1 hour ago, fuzzynormal said:

I don't do a lot of high end stuff, [snip] Basic basic basic, but got the product delivered to the client as they requested.

Your video is easily better than 95% of the work that is out there -- great eye and a nicely coordinated edit.

The narrator did a great job, as well. Did someone give her line readings?

2 hours ago, fuzzynormal said:

I'm not sure if I even used a high end camera for a gig like this it would've turned out much different.

I noticed that the interior shot had slight noise, but I was pixel peeping. I would guess that you had to stop down due to the high scene contrast. It certainly was not enough noise to warrant using an unwieldy cinema camera, although it might have been interesting to try a minor adjustment to the E-M10's "Highlights & Shadows" setting.

Your video is a superb and inspiring piece that brings life to a mundane subject.

-

-

On 6/30/2021 at 7:31 PM, Chrad said:

Silly. Film is a lot grainier and we accept that as part of the aesthetic, so I don't know why we need to treat video differently and expect total cleanness to the point of sterility.

Film grain is "organized" to actually form/create the image, while noise is random (except for FPN) and obscures the image.

On 7/1/2021 at 6:00 AM, Chrad said:

I don't really think it's true that noise destroys resolution,...

Noise doesn't "destroy" resolution -- it just obscures pixels. On the other hand, with too much noise the image-forming pixels are not visible, so there's no discernible image and, thus, no resolution.

On 7/1/2021 at 6:00 AM, Chrad said:

...and grain only adds flavour.

Grain actually forms the image on film. Noise obscures the image.

On 7/1/2021 at 6:00 AM, Chrad said:

Large grained filmstock is considered to hold less resolution relative to finer grained stock.

Yep.

On 7/1/2021 at 6:00 AM, Chrad said:

I also don't think the pattern vs random aspect makes much difference to the viewer.

That notion is subjective, but not uncommon.

On 7/1/2021 at 6:00 AM, Chrad said:

In practice, digital noise is perceived as random.

Actual electronic noise is random, whether digital or analog. It could be argued that FPN and extraneous signal interference are not "noise."

On 7/1/2021 at 6:00 AM, Chrad said:

The appearance of noise varies from camera to camera, but I think it's the kind of imperfection that can prevent images from looking sterile and inhuman.

This is another subjective but common notion.

On 7/1/2021 at 6:00 AM, Chrad said:

I look at it as the surface of the medium becoming visible.

Again, with the medium of film, grain is organized to actually form the image -- noise is random and noise obscures the image.

On 7/2/2021 at 12:13 AM, Zeng said:

Usually you denoise and then add grain :).

To me, it doesn't make much sense to remove random noise and then add an overlay of random grain -- grain that does absolutely nothing to form the image.

-

15 hours ago, HockeyFan12 said:

Some of the projection kits have chromatic abberation...

Yes. Fringing can sometimes be an issue with some ellipsoidal fixtures. Instruments that use lenses made for slide/film projectors usually exhibit minimal fringing.

7 hours ago, barefoot_dp said:

That's the bit I'm struggling with - the further the light is from the cookie, the more spill there is and the more flags are required.

Use an open-face tungsten or HMI source with a snoot. Add a tube of blackwrap if the snoot is too short. That should eliminate most of your spill, and the open-face filament/arc will give you sharp shadows if the fixture is set to full flood.

15 hours ago, HockeyFan12 said:

The light is probably sharper at full flood than full spot (if you're using a fresnel lens)

Both open-face and Fresnel fixtures always give their sharpest shadows on full flood.


-

I know what it is like to suffer attacks and abuse for merely telling the truth and stating fact.

All I can say is:

-

3 hours ago, barefoot_dp said:

Yes, we're often camping and working out of vehicles - plenty of lights on the 4WDs, as well as flashlights, headlamps, firelight, etc.

[snip]

I do a 4WD Adventure show and we usually rely on the hero vehicle's dual battery/2kw inverter, but sometimes we're away from the main cars for a day or two (eg somewhere that is only accessible by boat/helicopter) and need another solution.

Okay. If you can keep your set-up and shoot time down to an hour total and if you are only using two or three fixtures, then it is probably better to use batteries. Bring spares.

3 hours ago, barefoot_dp said:

Plus needing to light a scene does not always mean it is nighttime.

LED fixtures might need to be fairly close to the subject when shooting in the daytime.

-

46 minutes ago, barefoot_dp said:

We've got plenty of light off camera. No problems there.

[snip]

If I was in a position that I could run a cable then I would, but it's hard to find a power outlet when you're sometimes 1000km+ from the nearest town!

You have plenty of work light? Are you shooting nighttime exteriors 1000km from the nearest town?

49 minutes ago, barefoot_dp said:

This is just specifically for the lighting for remote IV/PTC setups.

What is "IV/PTC?"

-

If your LED lights actually draw a total of 600W, you might suffer continual battery management/charge anxiety. Also, when you kill your battery-powered set lights in between takes and in between set-ups, will you also have a separate battery-powered work-light running?

On the other hand, a genny with a 600W constant capacity is not that big (but it's good practice to use a genny that is rated at twice the anticipated power draw). You can keep it and a gas can in a large plastic bin(s) to protect your car's interior from gasoline/oil.

By the way, gennys last longer than batteries because their fuel tanks hold much more energy than typical batteries -- not because they can be "topped-up." A battery system can be "topped-up" with a parallel, rectified circuit and/or switches.
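Some back-of-the-envelope arithmetic for the two options, as a sketch only. The 1000 Wh battery and the 85% usable fraction (inverter losses and reserve) are hypothetical placeholders, so substitute the ratings of your own gear.

```python
def battery_runtime_hours(capacity_wh, load_w, usable_fraction=0.85):
    """Estimated runtime; usable_fraction is an assumed allowance for losses."""
    return capacity_wh * usable_fraction / load_w

def recommended_generator_watts(load_w, headroom=2.0):
    """Genny rating using the twice-the-anticipated-draw rule of thumb."""
    return load_w * headroom

load_w = 600  # total draw of the LED fixtures
print(f"1000 Wh battery: about {battery_runtime_hours(1000, load_w):.1f} hours")
print(f"genny rating:    {recommended_generator_watts(load_w):.0f} W")
```

With those placeholder numbers, a 600W load gives a runtime of not much more than an hour, which is where the battery management anxiety comes from.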

If you are recording sound, make sure to have at least 150 feet of 12-gauge stingers (extension cords) just to run power from the genny to the set, and hide the genny behind a distant building or thick bushes. You can also build a sound shield with stands, heavy vinyl and a furniture pad (or a thick blanket).
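If the long cable run is a worry, the voltage drop is easy to estimate. This sketch assumes copper 12 AWG at roughly 1.588 ohms per 1000 feet and a simple resistive load; the supply voltages are just examples, so use whatever your genny actually puts out.

```python
OHMS_PER_1000_FT_12AWG = 1.588  # approximate figure for copper at 20 C

def voltage_drop(load_w, supply_v, run_ft):
    """Volts lost in the cable for a one-way run of run_ft (out and back counted)."""
    current_a = load_w / supply_v
    loop_ohms = OHMS_PER_1000_FT_12AWG * (2 * run_ft) / 1000
    return current_a * loop_ohms

for supply_v in (120, 240):
    drop = voltage_drop(600, supply_v, 150)
    print(f"{supply_v} V supply: {drop:.2f} V lost ({drop / supply_v:.1%})")
```

Under these assumptions a 600W load loses only a couple of volts over 150 feet, so the distance costs very little.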

If you can arrange in advance to run power from a nearby building, that might be an even better solution.

-

14 hours ago, PannySVHS said:

On another forum someone was dreaming of a speedbooster for this to push it up to super 16 sensor size. Maybe time to rethink the classy classic EOS-M rather then waiting for a speedbooster for this little kitten.:)

There might be a way to attach a speedbooster.

This is a really interesting thread!

-

14 hours ago, PannySVHS said:

Darn this thread it tasty as the other other EOS M RAW thread. Both threads are some of the greatest browsing pleasures for nerds like us. 🙂 Anyone shooting narrative shorts with this beauty? How does it hold up against a BMPCC? Anyway our friend @ZEEK has provided us with beautiful pieces like this one.

Yes! The EOSM with ML raw is amazing, and @ZEEK has a great eye! EOSM ML raw videos are constantly appearing on YouTube.

This guy also does nice work with the EOSM.

- PannySVHS and ChristianH

-

2

2

-

31 minutes ago, HockeyFan12 said:

Even though you'd expect it to be, it's not interlaced, it's 24p footage someone put into a 1080/30p timeline from what I can tell.

Perhaps it was interlaced (60i), and then deinterlaced to 30p.

34 minutes ago, HockeyFan12 said:

Is there any fast way to do this with After Effects or Premiere or Compressor?

There are probably plug-ins/filters in those programs that might work, but I am not familiar with those apps.

-

On 4/30/2021 at 4:26 PM, leslie said:

From the outside looking in. Its been fun watching you guys troll each other, well thats what it looks like from here anyway.

Look again. I am not trolling.

I have presented facts, often with detailed explanations and with supporting images and links. These facts show that Yedlin's test is faulty, and that we cannot draw any sound conclusions from his comparison.

In addition, I have pointed out contradictions and/or falsehoods (or lies) of others, also using facts/links.

If my posting methods look like trolling to you, please explain how it is so.

On 4/30/2021 at 4:26 PM, leslie said:

Subjectively i thought we might have had a three way going with elgabogomez having a go, half a page back, but he seems to of bowed out.

It doesn't sound like you are actually interested in the topic of this thread.

On 4/30/2021 at 4:26 PM, leslie said:

Sorry to appear so shallow and frivolous,

No need to apologize for your shallowness and frivolity.

On 4/30/2021 at 4:26 PM, leslie said:

but i'm now out of popcorn.

So Before one or both of you have a brain aneurism.

Those are two real funny (and original) jokes.

On 4/30/2021 at 4:26 PM, leslie said:

Can you agree to disagree ? else its going to degenerate into world war 3. Which in turn will put a dent in the coming zombie apocalypse, which is what im actually hanging around for

Another real funny joke.

On 4/30/2021 at 4:26 PM, leslie said:

It may not be readily apparent but i do have a fair amount of respect, for you two dudes and i think both of you have some valid points in your arguments ,

I am not sure that I believe you.

On 4/30/2021 at 4:26 PM, leslie said:

although most of it goes over my head anyway,

So, you don't really have anything to add to the topic of the discussion with this solitary post late in the thread.

You have made similar posts near the end of another extended thread, posts which likewise had no relation to that discussion.

What is the purpose of these late, irrelevant posts?

On 4/30/2021 at 4:26 PM, leslie said:

and i really do have to wonder why theres all this friction,

I don't wonder about that.

On 4/30/2021 at 4:26 PM, leslie said:

you blokes should be better than this.

Please...

If you have something worthwhile to offer to the discussion, I'd like to hear it, but don't talk down to someone who is actually contributing facts, explanations and supporting evidence that relate directly to the topic of this thread.

-

FFmpeg has a "pullup" filter, but removing pulldowns can be done in FFmpeg without that special filter. MEncoder and AviSynth can also remove pulldowns. Several NLEs and post programs have plugins that do the same.

However, the pulled-down 30fps footage is usually interlaced. Can you post a few seconds of the 30fps footage?
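For the simpler progressive case (24p material padded out to 30p by repeating frames), the idea behind pulldown removal can be sketched in a few lines. This is a toy model with frame labels instead of images, and it assumes the padding repeats every fourth frame. Real footage is compressed, so repeated frames are rarely bit-identical and a real tool compares frames by similarity. Interlaced 3:2 pulldown works on fields and needs field matching first.

```python
def add_pulldown(frames):
    """Pad 24p to 30p by repeating every fourth frame (progressive case)."""
    padded = []
    for index, frame in enumerate(frames):
        padded.append(frame)
        if index % 4 == 3:
            padded.append(frame)  # the repeat that turns 4 frames into 5
    return padded

def remove_pulldown(frames):
    """Drop any frame that is identical to the frame before it."""
    return [f for i, f in enumerate(frames) if i == 0 or f != frames[i - 1]]

one_second = list(range(24))        # stand-in labels for 24 unique frames
padded = add_pulldown(one_second)   # 30 entries, 6 of them repeats
restored = remove_pulldown(padded)  # the 24 originals again
print(len(padded), len(restored))
```

A few seconds of the actual footage would show which case applies: repeated whole frames as modelled here, or combed fields from an interlaced pulldown.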

-

On 4/29/2021 at 2:55 PM, kye said:

What are you hoping to achieve with these personal comments?

What were you hoping to achieve with your personal insults of me below:

On 3/26/2021 at 6:42 PM, kye said:

Then Tupp said that he didn't watch it, criticised it for doing things that it didn't actually do, then suggests that the testing methodology is false. What an idiot.

Is there a block button? I think I might go look for it. I think my faith in humanity is being impacted by his uninformed drivel. I guess not everyone in the industry cares about how they appear online - I typically found the pros I've spoken to to be considered and only spoke from genuine knowledge, but that's definitely not the case here.

On 3/27/2021 at 8:18 PM, kye said:

This whole thread is about a couple of videos that John has posted, and yet you're in here arguing with people about what is in them when you haven't watched them, let alone understood them. I find it baffling, but sadly, not out of character.

On 4/24/2021 at 2:43 AM, kye said:

I wish I lived in your world of no colour subsampling and uncompressed image pipelines, I really do. But I don't. Neither does almost anyone else.

Yedlins test is for the world we live in, not the one that you hallucinate.

On 4/28/2021 at 3:09 PM, kye said:

I fear that COVID has driven you to desperation and you are abandoning your previous 'zero-tolerance, even for things that don't matter or don't exist' criteria!

I didn't mind any of these blatant insults nor the numerous sarcastic insinuations that you have made about me (I have made one or two sarcastic innuendos about you -- but not as many as you have about me). I don't mind it that you constantly contradict yourself and project those contradictions on me, nor do I mind when you inadvertently disprove your own points, nor do I care when you just make stuff up to suit your position.

However, when you lied about me making a fictitious claim comparing myself to Yedlin, you went too far.

Here is your lie:

On 4/28/2021 at 3:09 PM, kye said:

... considering how elevated you claim your intellect to be in comparison to Yedlin...

I never made any such claim. You need to take back that lie.

On 4/29/2021 at 2:55 PM, kye said:

How is this helping anyone?

Classic projection... You should ask yourself the same question -- how are your personal insults (listed above), contradictions and falsehoods helping anyone?

On 4/29/2021 at 2:55 PM, kye said:

This whole thread is about the perception of resolution under various real-world conditions, to which you've added nothing except to endlessly criticise the tests in the first post. This thread has had 5.5K views, and I doubt that the people clicked on the title to read about how one person wouldn't watch the test, then didn't understand the test and then endlessly uses technical concepts out of context to try and invalidate the test, then in the end gets personal because their arguments weren't convincing.

I have given many reasons in great detail on why Yedlin's test is not valid, and I even linked two straightforward resolution demos that disagree with the results of Yedlin's more convoluted test.

Additionally, you unwittingly presented results from a thorough empirical study that directly contradict the results of Yedlin's test. Those results showed a "pretty obvious" (your own words) distinguishability between two resolutions that differ by 2x, while Yedlin's results show no distinguishability between two resolutions with a more disparate resolution difference of 3x -- and the study that you presented was also made with an Alexa!

I cannot conceive of any additional argument against the validity of Yedlin's comparison that is more convincing than those obviously damning results of the empirical study that you yourself inadvertently presented in this thread.

On 4/29/2021 at 2:55 PM, kye said:

This is a thread about the perception of resolution in the real world - how about focusing on that?

Indeed, it's a question you should ask of yourself.

-

I thought that you were just being trollish, but now it seems that you are truly delusional.

Somehow in your mind you get the notion that I am "criticizing Yedlin for using a 6K camera on a 4K timeline" from this passage:

On 4/12/2021 at 7:27 AM, tupp said:

There is a way to actually test resolution which I have mentioned more than once before in this thread. -- test an 8K image on an 8K display, test a 6K image on a 6K display, test a 4K image on a 4K display, etc.

That scenario is as exact as we can get. That setup is actually testing true resolution.

Nowhere in that passage do I mention Yedlin, nor do I mention a camera, nor do I ever refer to anyone "using a 6K image on a 4K timeline."

Most importantly, I was not criticizing anyone in that passage.

Anybody can go to that post and see for themselves that I was simply making a direct response to your quoted statement:

On 4/12/2021 at 1:08 AM, kye said:

Based on that, there is no exact way to test resolutions that will apply to any situation beyond the specific combination being tested.

Even YOU did not refer to Yedlin, nor to a camera nor to using 6K on a 4K timeline.

Making up things in your mind is harmless, but posting lies about someone is too much. You need to take back your lie that I claimed that my intellect was elevated in comparison to Yedlin's.

Also, if you are on meds, keep taking them regularly.

-

8 hours ago, kye said:

You criticised Yedlin for using a 6K camera on a 4K timeline

No I didn't.

What is the matter with you -- why do you always make up false realities? Also, I already corrected you when you stated this very same falsehood before.

I never criticized Yedlin for using ANY particular camera -- YOU are the one who is particular about cameras.

The fact is that I have repeatedly stated that the particular camera used in a resolution test doesn't really matter:

On 4/27/2021 at 6:03 PM, tupp said:

Resolution tests should be -- and always are -- camera agnostic, as long as the camera has enough resolution and sharpness for the resolution test.

Once again, here are the two primary points on which I criticized Yedlin's test (please read these two points carefully and try to retain them so that I don't have to repeat them again):

On 4/12/2021 at 7:27 AM, tupp said:

- Yedlin's downscaling/upscaling method doesn't really test resolution which invalidates the method as a "resolution test;"

- Yedlin's failure to meet his own required 1-to-1 pixel match criteria invalidates the analysis.

The particular camera used for a resolution test doesn't matter!

8 hours ago, kye said:

and then linked to a GH5s test (a ~5K camera) as an alternative...

Actually, I linked two tests. I am not familiar with the camera in the other test.

8 hours ago, kye said:

what about the evil interpolation that you hold to be most foul? Have you had a change of heart about your own criteria? Have you seen the light?

It is truly saddening to witness your continued desperate attempts to twist my statements in an attempt to create a contradiction in my points.

I have repeatedly stated that the camera doesn't matter as long as its effective resolution is high enough and its image is sharp enough. So, camera interpolation is irrelevant, as I have also already specifically explained.

The "interpolation" that Yedlin's test suffered was pixel blending that happened accidentally in post (as I have stated repeatedly) -- it had nothing to do with sensor interpolation.
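To show what pixel blending does in general, here is a toy sketch. It is not a reconstruction of what happened in Yedlin's pipeline; it only illustrates how linear resampling that is off by half a pixel flattens single-pixel detail when there is no 1-to-1 pixel match.

```python
def resample_linear(row, offset):
    """Sample a row of pixel values at x + offset using linear interpolation."""
    out = []
    for x in range(len(row) - 1):
        left, right = row[x], row[x + 1]
        out.append(left * (1 - offset) + right * offset)
    return out

pattern = [0, 255] * 4                   # finest possible detail: alternating pixels
aligned = resample_linear(pattern, 0.0)  # 1-to-1 match: detail survives
shifted = resample_linear(pattern, 0.5)  # half-pixel offset: every value blends

print(aligned)
print(shifted)
```

With a zero offset the alternating pattern comes through intact, while the half-pixel offset turns it into uniform gray, which is the kind of loss that makes a higher resolution look like a lower one.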

8 hours ago, kye said:

You even acknowledge that the test "is not perfect"

Yes. *Both* tests that I linked are not perfect. The first tester has a confirmation bias, and he doesn't give a lot of details on his settings, and the second comparison just doesn't give a lot of settings details, and the chosen subject is not that great.

Nevertheless, both comparisons are implemented in a much cleaner and more straightforward manner than Yedlin's video, and both tests clearly show a discernible distinction between resolutions having only a 2x difference.

Unless the testers somehow skewed the lower resolution images to look softer than normal, that clear resolution difference cannot be reconciled with the lack of discernability between 6K and 2K in Yedlin's comparison.

8 hours ago, kye said:

I fear that COVID has driven you to desperation and you are abandoning your previous 'zero-tolerance, even for things that don't matter or don't exist' criteria!

Again, it's sad that you have to grasp at straws by using insults, instead of reasonably arguing the points.

8 hours ago, kye said:

Yedlins test remains the most thorough available on the subject, so until I see your test then I will refer to Yedlins as the analysis of reference.

Your declaration regarding Yedlin's demo doesn't change the fact that it is not valid.

So far, the most thorough comparison presented in this thread is the Alexa test you linked that shows a "pretty obvious" (your words) distinguishability between resolutions having a mere 2x difference. The test that you linked is the most empirical, because it:

On 4/27/2021 at 6:03 PM, tupp said:

- is conceived and performed with no confirmation bias;

- is made in accordance with empirical guidelines set by TECH 3335 and EBU R 118;

- directly shows us the actual results, with no screenshots of results displayed within a compositor viewer;

- doesn't upscale/downscale test images to other resolutions;

- uses a precision resolution test chart that clearly shows what is occurring.

8 hours ago, kye said:

Performing your own test should be an absolute breeze to whip up

This:

11 hours ago, tupp said:

there is no sense/logic to your notion that I should perform such a test myself, just because I have shown that Yedlin's comparison is invalid. I have demonstrated that we can draw no conclusions regarding the discernability of differing resolutions from Yedlin's flawed demo, and that fact is all that matters to this discussion.

8 hours ago, kye said:

considering how elevated you claim your intellect to be in comparison to Yedlin,

I never made such a claim, and you've crossed the line with your falsehoods here.

Unless you can find and link any post of mine in which I claimed that my intellect was elevated in comparison to Yedlin's, you are a liar.

-

On 4/21/2021 at 10:02 PM, kye said:

If Yedlin has made such basic failures, and you claim to be sufficiently knowledgeable to be able to easily see through them when others do not, why don't you go ahead and do a test that meets the criteria you say he hasn't met?

4 hours ago, kye said:

Well?

As I answered that question before, I have already demonstrated that it is easy to achieve a 1-to-1 pixel match.

However, I will add that there is no sense/logic to your notion that I should perform such a test myself, just because I have shown that Yedlin's comparison is invalid. I have demonstrated that we can draw no conclusions regarding the discernability of differing resolutions from Yedlin's flawed demo, and that fact is all that matters to this discussion.

In addition, there are several comparisons which already exist that don't suffer the same blunders inherent in Yedlin's test. So, why would I need to bother making yet another one? The execution in these demos is not perfect, but they are implemented in a much cleaner and more straightforward manner than Yedlin's video.

Here is one resolution comparison shot with a GH5s at 10bit and evidently captured in both HD and UHD. Does a GH5s use the correct type of sensor and is it also common/uncommon enough and does it additionally have enough quality -- to meet your approval for a resolution test?

The tester emphasizes the 200% zoom, but at 100% zoom I can see the resolution difference between UHD and HD on my HD monitor. However, this test should really be viewed on a 4K monitor at minimum viewing distance, giving priority to the 100% zoom.

This comparison is more straightforward than Yedlin's test. There is no upscaling/downscaling, and the images are not screen-captures of a software viewer, and there is no evidence of accidental pixel blending. However, it should be noted that the tester performs the comparison with a confirmation bias.

Here is a similar resolution comparison showing faster moving subjects. Likewise, I can see a difference in resolution on my HD monitor with 100% zoom, but the video should really be viewed on a 4K monitor at minimum viewing distance.

Now, I do not claim that higher resolutions are better than lower resolutions. I simply state the fact that I can discern a difference in resolution between HD and 4K/UHD at a 100% zoom, when viewing these two comparisons on my HD monitor.

One more thing... if the Nuke viewer behaves the same as the Natron viewer, I suspect that peculiarities in the way the viewer renders pixels contributed significantly to Yedlin's "6K = 2K" results. Combining this potential discrepancy generated by the Nuke viewer with Yedlin's upscaling/downscaling and with the accidental pixel blending, it is easy to imagine how a "6K" image would look almost exactly like a "2K" image, when viewed on a "4K" timeline.
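As a rough illustration of how a downscale followed by an upscale can flatten fine detail, here is a minimal one-dimensional sketch. The box-filter downscale, the nearest-neighbor upscale and the sample pixel row are all assumptions chosen for simplicity. They are not the filters used by Yedlin, Nuke or Natron.

```python
def downscale_box(row, factor):
    """Average each group of `factor` neighboring samples (simple box filter)."""
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]


def upscale_nearest(row, factor):
    """Repeat each sample `factor` times (nearest-neighbor upscale)."""
    return [value for value in row for _ in range(factor)]


# Hypothetical row of pixels with single-pixel detail: alternating black and white.
original = [0, 255] * 6  # 12 samples

# Reduce by 3x (as in 6K -> 2K), then bring it back to the original sample count.
round_trip = upscale_nearest(downscale_box(original, 3), 3)

# Contrast between the brightest and darkest samples before and after.
contrast_before = max(original) - min(original)
contrast_after = max(round_trip) - min(round_trip)
```

In this sketch the sample count is unchanged after the round trip, but the single-pixel detail is gone and the contrast drops, which is the kind of loss that makes a later comparison unreliable.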

-

On 4/24/2021 at 11:44 AM, elgabogomez said:

Yedlin’s test is for the world he is living in which is of world class feature film budgets, not “everyone’s regular 4:2:0 cameras “ or “monochrome, foveon or ccd sensor cameras”.

Yedlin's test isn't really applicable to any "world," because his method is flawed, and because he botched the required 1-to-1 pixel match.

Again, the type of camera/sensor doesn't really matter to a resolution test, as long as the camera has enough resolution for the test. Resolution tests should be (and always are) camera agnostic -- there is no reason for them not to be so.

On 4/24/2021 at 11:44 AM, elgabogomez said:Moving pictures have a way of pulling you into a story, engaging the viewer with more than pretty detailed pictures.

What does that notion have to do with testing resolution?

On 4/24/2021 at 11:44 AM, elgabogomez said:Once you consider that filmmakers (world class directors, producers, cinematographers) are the target audience for his tests,

There's not much to a resolution test other than using a camera with a high enough resolution and properly controlling the variables. There is no "special" resolution testing criteria for "world class" filmmakers that would not also apply to those shooting home movies -- nor vice versa.

Furthermore, I don't recall Yedlin declaring any such special criteria in his video, but I do recall him talking about standard viewing angles/distances in home living rooms.

By the way, if you think that there are special criteria in resolution testing for "world class" filmmakers, please list them.

On 4/24/2021 at 11:44 AM, elgabogomez said:it gives both of you and your valid points a perspective

The fact is that Yedlin's resolution test is flawed.

On 4/24/2021 at 11:44 AM, elgabogomez said:and a way to back off to more productive discussions.

Not sure how to take this. By "more productive discussions," I hope that you mean "additional productive discussions" and not "discussions that are more productive."

On 4/24/2021 at 8:14 AM, tupp said:Of course, chroma subsampling essentially is a reduction in color resolution (and, hence, color depth), but it doesn't really affect a resolution test, as long as the resulting images have enough resolution for required for the given resolution comparison.

On 4/24/2021 at 9:26 PM, kye said:This is from a resolution test of the ARRI Alexa:

Source is here: https://tech.ebu.ch/docs/tech/tech3335_s11.pdf (top of page 10)

Your example involves color channel resolution resulting from a crude Bayer sensor interpolation, which has nothing to do with chroma subsampling. Chroma subsampling occurs after sensor interpolation (if a sensor needs interpolation).

In addition, chroma subsampling also applies to imaging generated by other means -- not just camera images.
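To show what chroma subsampling does on its own, independent of any sensor, here is a minimal sketch of a 4:2:0-style reduction. The `subsample_420` helper, the simple 2x2 averaging and the tiny sample planes are assumptions for illustration only. Real encoders may use different filters and chroma siting.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (a list of equal-length rows)."""
    height, width = len(plane), len(plane[0])
    assert height % 2 == 0 and width % 2 == 0, "sketch assumes even dimensions"
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


# Hypothetical 4x4 frame that is already in luma/chroma form (no sensor involved).
luma = [[16, 235, 16, 235] for _ in range(4)]   # Y plane: left at full resolution
cb = [[100, 140, 100, 140] for _ in range(4)]   # Cb plane: gets subsampled

cb_420 = subsample_420(cb)  # half the width and half the height of the luma plane
```

The luma plane keeps all 4x4 samples, while the chroma plane ends up as 2x2. The input could just as well come from a render or a graphic as from a camera.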

On 4/24/2021 at 9:26 PM, kye said:Pretty obvious that the red has significantly less resolution than the green. This is from the number of green vs red photosites on the sensor.

But you're totally right - this has no impact on a test about resolution at all!

By posting those resolution charts, along with stating, "Pretty obvious that the red has significantly less resolution than the green," you have unwittingly demonstrated that there is a discernible distinction between different resolutions and/or that there is a large problem with Yedlin's test.

Of course, we all know that the green photosites in a Bayer matrix have twice (2x) the resolution of the red photosites. So, you are actually admitting that there is obvious discernibility between two resolutions that differ by 2x -- in a test shot with an Alexa camera.

Furthermore, Yedlin's Alexa65 test shows no discernible difference between two even more disparate resolutions -- 6K:2K is a 3x difference.
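The two ratios above can be checked with simple arithmetic. This is only a sketch: the RGGB tile layout is the standard Bayer arrangement, but the frame widths are nominal round figures that I am assuming for illustration, not the exact dimensions of Yedlin's files. Note that the green-to-red figure is a photosite count ratio, while the 6K:2K figure is a ratio of frame widths.

```python
# Photosites in one 2x2 tile of an RGGB Bayer pattern.
bayer_tile = ["R", "G", "G", "B"]
green_to_red = bayer_tile.count("G") / bayer_tile.count("R")

# Nominal frame widths (assumed round figures, not measured from any test footage).
width_6k, width_2k = 6144, 2048
linear_ratio = width_6k / width_2k

# Ratio of total pixel counts, assuming both frames share the same aspect ratio.
pixel_count_ratio = linear_ratio ** 2
```

Under these assumptions, green has 2x the photosites of red, while the "6K" frame is 3x wider than the "2K" frame and holds 9x as many pixels.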

So, how is it that we see an *obvious* distinction between resolutions that differ by only 2x in a well controlled, empirical study, while we see no distinction between resolutions that differ by 3x in Yedlin's uncontrolled test?

It certainly appears that something is wrong with Yedlin's comparison. As I have suggested, the problem lies with his convoluted, nonsensical methods and in his failure to achieve a 1-to-1 pixel match.

Again, contrary to your relatively recent stance, it doesn't matter to a resolution test whether or not the test camera uses chroma subsampling or uses a Bayer sensor or is common or uncommon with a certain level of quality. All of your constantly changing conditions on what particular camera can work in resolution tests are irrelevant.

Resolution tests should be -- and always are -- camera agnostic, as long as the camera has enough resolution and sharpness for the resolution test.

Earlier in the thread, you said yourself:

On 4/13/2021 at 4:00 PM, kye said:In Yedlins demo he zooms into the edge of the blind and shows the 6K straight from the Alexa with no scaling and the "edge" is actually a gradient that takes maybe 4-6 pixels to go from dark to light. I don't know if this is do to with lens limitations, to do with sensor diffraction, OLPFs, or debayering algorithms, but it seems to match everything I've ever shot.

It's not a difficult test to do.. take any camera that can shoot RAW and put it on a tripod, set it to base ISO and aperture priority, take it outside, open the aperture right up, focus it on a hard edge that has some contrast, stop down by 4 stops, take the shot, then look at it in an image editor and zoom way in to see what the edge looks like.

So, you are saying that ANY raw camera can be used to duplicate the zoomed-in moments in Yedlin's test.

In the very same post, you also stated:

On 4/13/2021 at 4:00 PM, kye said:Of course, it's also easy to run Yedlins test yourself at home as well. Simply take a 4K video clip and export it at native resolution and at 2K, you can export it lossless if you like. Then bring both versions and put them onto a 4K timeline, and then just watch it on a 4K display, you can even cut them up and put them side-by-side or do whatever you want. If you don't have a camera that can shoot RAW then take a timelapse with RAW still images and use that as the source video...

So, you additionally say that we can use ANY 4K camera that can shoot raw video (or even a still camera) to duplicate Yedlin's entire test.

The question is: which stance of yours is true? For resolution tests, can we use ANY raw 4K camera or are we now required to use the particular common/uncommon camera with the particular sensor or quality that happens to suit your inclination at the moment?

Of course, even if there is a prominent difference in the resolution between green and red/blue photosites, it doesn't matter -- as long as the resolution of the red/blue photosites is high enough for the resolution test.

Furthermore, the study that you linked showed the results of a better debayering algorithm, for which the chart clearly shows the red channel having the same resolution as the green channel. So, the difference in resolution between green and red/blue photosites doesn't really matter to a resolution test -- as long as the camera's effective resolution (after debayering) is high enough and as long as the image is sharp enough.

Additionally, there is a huge difference in execution between your linked empirical camera study and Yedlin's sloppy comparison. Unlike Yedlin's demo, the empirical study:

- is conceived and performed with no confirmation bias;

- is made in accordance with empirical guidelines set by TECH 3335 and EBU R 118;

- directly shows us the actual results, with no screenshots of results displayed within a compositor viewer;

- doesn't upscale/downscale test images to other resolutions;

- uses a precision resolution test chart that clearly shows what is occurring.

Can you imagine how muddled those fine resolution charts would look if the tester had accidentally blurred the spatial resolution as Yedlin did?

Benefits of Fresnel Lens LED

In: Cameras

Posted

Some open-faced focusable sources can produce a double cast shadow in the outer parts of the beam, when focused to "spot." So, cutting into the beam with barndoors or a flag sometimes does not give as clean an edge as with a Fresnel.

I tend to use open-faced fixtures, as that double cast shadow usually is not apparent, and because they are more compact and lightweight than Fresnels.

The Lowel Omni light. A great, lightweight, compact, powerful and exceedingly versatile fixture. Its focusing range is greater than many Fresnels. Always use a protective screen on the front of the fixture. Use FTK bulbs with a filament support, and avoid off-brand bulbs. The focusing mechanism is very fast and can break the bulb's filament if one is not careful.