Otago

Members

Posts: 60

Everything posted by Otago

-

Before anyone gets too misty-eyed, remember this is the same person who bullied employees for whistleblowing against Lance Armstrong and threatened news sites with lawsuits for being critical. He is also the master of hype and could just as easily have stubbed his toe as be facing real medical problems.

-

Does anyone have some Canon C300 Mark I footage they would be willing to share as the original XF file ? I want to see how my computer handles editing it ( I'm assuming it won't be any more taxing than GH3 footage ? I just want to make sure in case I need to budget for a new computer too! ) I have had a google but all the files I have found are now long gone, I think.

-

It might do full frame 4k 10-bit 422 and 6k RAW ?

-

It's also quite like MXF for video in that it can hold metadata; if it is implemented well then all the edits can stay in the file as metadata. Unfortunately it is heavily patented so it might never take off as a universal file format.

-

Can you use hardware HEVC decoding to decode the 10-bit HEVC ? If so then doing that on the latest AMD or Intel hardware would probably be worth the price difference alone. Are these 1U/2U servers or workgroup servers in tower cases ? The 1U servers I have been around were not something I would want outside a data centre because they are LOUD, like really really loud and with a range of harmonics to set your teeth on edge. The other things like M.2 NVMe drives and space for PCIe cards are probably doable with a few riser and adapter cards though.

-

Internal RAW and really good autofocus, which is good because historically this is the spec for Canon cameras for the next 10 years ? I'm more excited that HEIC images are being adopted outside the Apple ecosystem. It can be half the size of JPEG for the same quality ( or lossless ) and can have alternate display versions so an sRGB file for general viewing and then a 10-bit flat file for editing and that edit data can be kept in the file rather than as a sidecar or in a database.

-

Interesting replies! I note that there are compromises for some people and some people are content with what they are currently shooting with. If you are satisfied then how did you come to that conclusion ? I am curious about how you know that you don't need any more, because I know what I am happy with but I am finding it difficult to express how I came to that conclusion.

-

I've been looking at footage from various cameras, straight out of the camera if I can find it, and was curious about how other people evaluate cameras. I can see differences between them: resolution, dynamic range and colours. I can rank them in order of which image looks better to me ( in a subjective way ) and I can rank ergonomic and technical features that I want or need. I can push the footage to see where it breaks and how it looks after compressing for delivery. What I'm curious about is where the point is at which it is good enough and any more isn't necessary for you ? I think I know where my point is: it's somewhere around a C100, C300 or F3 for features and image quality. I want the built-in NDs and XLRs and I need their robustness. The image quality holds up to the little grading that I do, and once it's compressed down, higher resolutions or bitrates don't jump out at me as being better, but I'm not pushing anything hard and my delivery is heavily compressed. Where is your point of adequacy and why is it there ? Is there ever a point where you will say that you don't need any more ?

-

I'm envious of the concentration it takes for ENG and documentary shooters to think and edit in those conditions! Might be of interest to some people :

https://www.live-production.tv/news/sports/gearhouse-broadcast-helps-take-sky-sports-f1-coverage-road.html

https://www.svgeurope.org/blog/headlines/behind-the-scenes-sky-sports-coverage-of-the-british-grand-prix-at-silverstone/

https://www.wired.co.uk/article/formula-one-liberty-media-chase-carey-bernie-ecclestone

It's mostly from the point of view of branding but it was still quite interesting to someone who has never thought about how outside broadcast works.

-

Nikon Z6 features 4K N-LOG, 10bit HDMI output and 120fps 1080p

Otago replied to Andrew Reid's topic in Cameras

Perhaps it will become a huge success and a cult film making lens, but I think it's more likely to be the next Leica 50/1 Noctilux, where a few people use it to its full extent and many lust over it, then sell it when the realities of using that lens hit and it ends up stopped down to 1.4 or 2. -

I agree that it could be a 2/3 camera ( and according to android lad it is! ) I haven't watched live tv recently and I noticed that it looked very different to what I was used to - why the change now if they were always capable of it ?

-

I was watching the interview highlights on YouTube and was surprised to see high dynamic range and shallow depth of field in the drivers' interviews after the race. Admittedly the last time I watched F1 was 10 years ago ( they moved from free to view to Sky Sports and I didn't want a satellite dish just for F1! ) but it was definitely all clippy with deep depth of field. I can't find anything about the cameras and other broadcast stuff they use, anyone know ? The irony is that there's a cameraman in the background of this video but I can't see the camera because of the shallow depth of field :

-

Nikon Z6 features 4K N-LOG, 10bit HDMI output and 120fps 1080p

Otago replied to Andrew Reid's topic in Cameras

I think that lens is designed for a very particular type of Japanese camera collector / user. The same ones who buy those mint cameras on eBay and the Leica collectors' editions, and that I follow on Instagram. If you don't have much space, but a high disposable income and the urge to collect then £8k camera lenses might be just the thing for you! I wouldn't be surprised to see a beautiful leather bag for that lens and the Z7 that comes with its own cover bag to stop the bag getting damaged. They're not expecting professional users to buy it, are they ? -

Perhaps 4K 10-bit 422 or 420 30p, with a codec too low to meet broadcast specs and the timecode removed ? I would hope it's not 8-bit in 4K, but 8-bit with great colours is better for me than 10-bit that I have to work on. Great autofocus, variable ND, XLR inputs and good out of the box colours would still make it a great camera for all the web delivered content being shot by single shooters ( me for instance! ). An upgraded FS5 II with the Venice colours available in more than one profile, full frame and great autofocus, I'm guessing about £6k ? What else could they cut down to make the A7S3 though ? Just the variable ND and XLR inputs ? Perhaps the A7S3 will be 8-bit 4K and the FX6 will be 10-bit ?

-

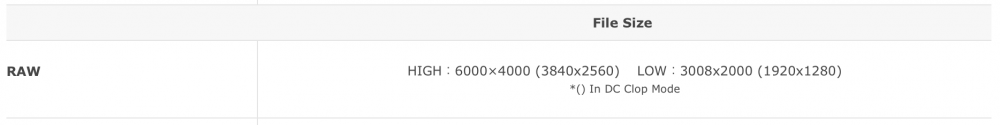

There does seem to be a low RAW for stills, so perhaps the 1080p RAW is based on that? Nikon do it by downsampling according to this https://www.rawdigger.com/howtouse/nikon-small-raw-internals but it could be pixel binning too. I think this makes sense for stills: JPEG is only 8-bit so anything better is useful. For video, though, 10-bit and 12-bit are already available, so this style of partial RAW might only be useful for getting around patents and keeping the data rates lower vs uncompressed video, if it is similar to the baked in sNEF.

-

Do You Think They'll Ever Make a 12k Resolution Camera?

Otago replied to Zach Goodwin2's topic in Cameras

This is an interesting series from Steve Yedlin that goes into this and uses IMAX and 35mm film and all sorts of digital cameras. http://yedlin.net/ResDemo/index.html Is debayer processing getting better or will there still need to be 12k of Bayer photosites to get 8k RGB ? After watching the resolution demo, and understanding some of it, I don't think it matters though. -

I'm an engineer so this is not directly relevant, but it might be, based on what BasilikFilm has said above. I was never asked what school qualifications I had after I got into University, I was never asked what degree I had after I had a few years' work under my belt, and I haven't been asked what my first few years of employment were like now I have 15 years under my belt. Each time those credentials or qualifications made the step to the next level easier. It would've been possible without them, but I have seen others coming from a less traditional background take longer to get their foot on the first rung. A traditional path with good grades gives everyone in the hiring process a bit of comfort and can be the differentiator between 2 candidates, but this is only true at the early stages of a career; after 10 years of experience I care far more about personality.

I work at a University now and there would be 2 things I would check if I was going to do a master's.

1. See who the tutors are. If they are academics that have worked their way up via bachelor's, master's then a PhD I would be a little cautious, because there are some great people who have done that and some who have learnt and excelled at academia itself and not just their specialist subject.

2. Who are the other students ? If part of what you want is to build a network, then students who don't stay in your location ( either because they don't want to or aren't allowed to ) or aren't fluent in English may not be as useful to you. In our institution ~70% of master's students are Chinese and American and they are there for the credentials and don't stick around afterwards.

-

That looks like a pretty great camera for the price, still no confirmation that the UHD Clop ( nice typo in their specs list ) isn't what they are using for RAW so it looks like a s35 UHD CineDNG. Then again the Pocket 6k is massive compared to this so I'm not entirely surprised!

-

They also seem to have wanted the exposure to be the same, perhaps they also saw it as a B camera for Alexa shooters as they were also comparing the F65 to the Alexa.

-

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

Otago replied to Andrew Reid's topic in Cameras

Ah, I have misunderstood the terms then! I assumed that "linear" meant the total values had been remapped to make better use of the graduations rather than the absolute light levels, but that is what log is. I assumed that this was done on all sensor ADCs as a matter of course ( the data coming off was log ) and then a further log conversion was done to fit that in a 10-bit container while prioritising mid tones, but I suppose it's not necessary if the sensor bit depth is close to the final bit depth. I forgot this wasn't a 24-bit instrumentation ADC where you have loads of data to throw away. Thanks! -

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

Otago replied to Andrew Reid's topic in Cameras

Just realised some of this is incorrect. There are only 1024 values for the whole dynamic range, rather than for each stop, so the numbers above should be 50 values representing each stop rather than 100 - the concept is the same but the values are / were wrong. -

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

Otago replied to Andrew Reid's topic in Cameras

I think this is true if it is a linear 14-bit file but not if it is log 10-bit ( assuming each bit corresponds to an extra stop of dynamic range ). If you ETTR and put lots of information in the curve of the log then in the brightest values they will be sharing bits. Depending on what curve your camera uses you could end up with, say, your 2 brightest stops being compressed into one bit in the codec and so only have 512 values representing each stop rather than 1024 - whether that is noticeable is another question! If you expose "correctly" then most of the values will fall in the linear part of the log curve and you'll get all 1024 values for each stop. I think log curves are used because it is assumed that the very brightest and darkest information will be lower in information and importance, and because they work similarly to film so it was easier to switch over. -
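For anyone who wants to play with the numbers, here is a rough Python sketch of how code values get shared out per stop. It assumes an idealised encoding: a pure linear file where each stop down halves the available values, and a pure log curve that spreads a 10-bit container evenly over the whole range. The function names and the 14-stop figure are my own assumptions for illustration, and real camera log curves ( with a linear toe and unused code range ) will not match this exactly.

```python
def linear_codes_per_stop(bit_depth: int, stops: int) -> list[int]:
    """Code values in each stop of a linear encoding, brightest stop first.

    In a linear file the top stop covers the upper half of all code values,
    the next stop half of what remains, and so on.
    """
    upper = 2 ** bit_depth
    counts = []
    for _ in range(stops):
        lower = upper // 2
        counts.append(upper - lower)
        upper = lower
    return counts


def log_codes_per_stop(bit_depth: int, stops: int) -> float:
    """Code values per stop for an idealised pure-log curve.

    Assumes the whole container is spread evenly across `stops`,
    which is a simplification of any real camera curve.
    """
    return 2 ** bit_depth / stops


if __name__ == "__main__":
    # Hypothetical 14-stop scene, chosen only to illustrate the idea.
    for stop, count in enumerate(linear_codes_per_stop(14, 14), start=1):
        print(f"linear 14-bit, stop {stop} from the top: {count} values")
    print(f"log 10-bit: about {log_codes_per_stop(10, 14):.0f} values per stop")
```

The point of the comparison is that a linear file spends most of its values on the brightest stops and leaves very few for the shadows, while a log file gives every stop a roughly equal share of a much smaller total.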

I thought this might interest a few people, I find these things really interesting because it shows thinking and not just the final output. I think it's probably from the Sony Pictures hack a few years ago.

EDIT: This was actually the link I meant to post but the other one is interesting too

https://wikileaks.org/sony/docs/05/docs/Camera/FeedbackV1 1_Next Generation Camera v6.pdf

https://wikileaks.org/sony/docs/05/docs/Atsugi/To_Nakayama_san.pdf

Apologies if this has been posted before, I couldn't find anything.

-

It may also be to appease the algorithm in another way; if they have different types of content then they can be penalised if everyone isn't interested in everything. If you never watch a channel's vlogging content but always watch their proper content then the algorithm just sees that as you not being as interested as you were before, and in a puppy-like attempt to please you it shows you content that you always watch all the way through, and may not show you their content again for a while ( or ever again ). How it is working is conjecture on my part, based on reports from a few people who have talked about it - Linus Sebastian is pretty open about how it all works on the WAN show - but also on knowing how "algorithm" and "machine learning" are used as pixie dust in the tech world to make pretty old concepts seem magical and worthy of investment. It could be solved if there weren't so many people trying to game the system; it's probably hardest to game a system based on your watch metrics rather than what the content is purporting to be. Until an AI is smart enough to understand and categorise the content itself, it'll just continue to be a cat and mouse game.

-

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

Otago replied to Andrew Reid's topic in Cameras

I've been looking at C300 footage recently and there is a big difference between the best and worst, some people can make it shine and others could make an Alexa look like an iPhone video. It might be wise to wait for a few weeks till there's a bit more footage rather than just the promos and test shots out there - the S1 vlog stuff I have been seeing has definitely gone up in quality as the camera has been used more and there's a larger sample of users publishing stuff.