
Everything posted by kye

-

I would suggest that it has enough DR, as would most current high-end cameras. Have you done any tests to work out how much DR you're actually dealing with? I'd suggest it's less than you'd think. It's trendy to complain about how cameras don't have enough DR and to continually call for more, and I have done this in the past as well, however I did it because I actually shoot scenes on a regular basis (backlit sunsets) and I was able to work out how much DR this required in real life (the BMPCC / BMMCC could just do it and the GH5 fell short) so I knew I needed around 11 stops. I would suggest that interview situations, even naturally lit, wouldn't require as much as literally having the sun in the frame, but it's very easy to run a test and find out. I did a latitude test on the GH7 in V-Log Prores and whatever wasn't clipped kept full quality, so with proper colour management was basically perfect.

-

No, I just shoot travel stuff, so not really the same thing, but having said that it would crush it if you gave it controlled lighting and a fast sharp prime. Shooting in these high DR scenes with available lighting is a torture test compared to an interview where you're rolling out the red carpet and making everything the easiest it could possibly be for the camera. You feeling tempted?

-

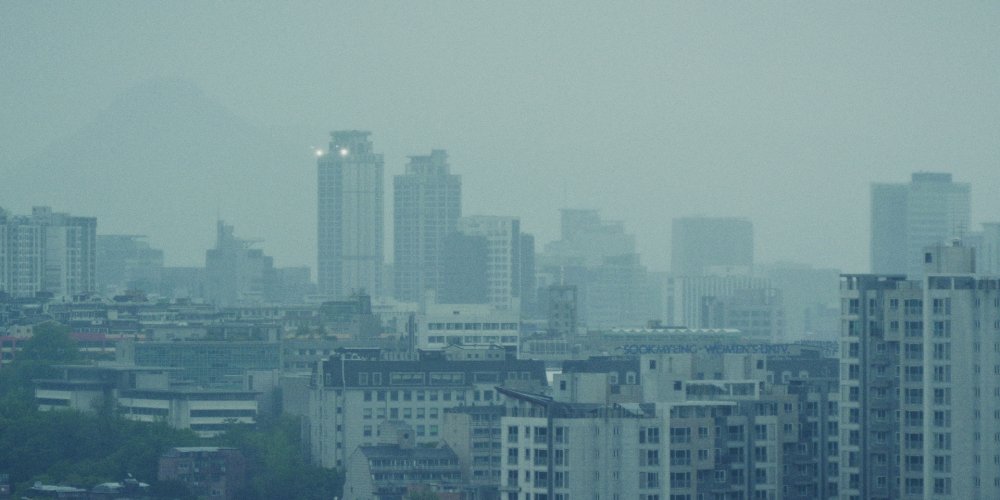

Raining in Seoul today, so pushed the GH7 + 14-140mm combo to its limits, including the in-camera digital zoom to get to 179mm, equivalent to 358mm on FF!! Don't judge the lens by these images, apart from having a film emulation applied, I was also shooting through two layers of hotel glass on a range of angles, and the rain and dirt on the outside of the glass wouldn't have helped either. My normal thoughts when shooting through dirty glass is to have the fastest lens possible to blur it all into oblivion, but the DOF calculator says that a 50mm F1.2 lens focused at 100m will have 41.6m in focus in front of the focal plane, but the 140mm F5.6 lens also focused at 100m will only have 30.2m in focus in front of the focal plane, so the 140mm will blur the raindrops on the glass more, presumably because of the longer focal length. Win! This is the situation I had in mind when buying the 14-140mm lens, although obviously I knew I would use it for other things too. I must admit that I've been using the long end while out and about much more than I thought I would, so not regretting the purchase at all.
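For anyone who wants to sanity-check those DOF numbers, here is a rough sketch using the standard thin-lens hyperfocal formulas. The 0.015mm circle of confusion (a commonly used figure for Micro Four Thirds) and the 0.3m distance to the glass are assumptions, not values taken from the calculator, so the results land close to the quoted figures rather than exactly on them.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm=0.015):
    """Hyperfocal distance in mm (coc_mm is an assumed circle of confusion)."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def front_depth_m(focal_mm, f_number, focus_m, coc_mm=0.015):
    """Metres of acceptable focus in front of the focal plane."""
    s = focus_m * 1000.0
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = s * (h - focal_mm) / (h + s - 2 * focal_mm)
    return (s - near) / 1000.0


def blur_disc_mm(focal_mm, f_number, focus_m, object_m):
    """Blur disc diameter on the sensor for an out-of-focus object."""
    s = focus_m * 1000.0
    d = object_m * 1000.0
    aperture_mm = focal_mm / f_number          # entrance pupil diameter
    magnification = focal_mm / (s - focal_mm)  # at the focus distance
    return aperture_mm * magnification * abs(s - d) / d


if __name__ == "__main__":
    GLASS_M = 0.3  # assumed distance from lens to the window glass
    for focal, stop in ((50, 1.2), (140, 5.6)):
        front = front_depth_m(focal, stop, 100)
        blur = blur_disc_mm(focal, stop, 100, GLASS_M)
        print(f"{focal}mm F{stop}: {front:.1f}m in front, "
              f"raindrop blur about {blur:.1f}mm on the sensor")
```

With these assumptions the 140mm F5.6 comes out with both the shallower front depth and the larger blur disc for something sitting on the glass, which is consistent with the conclusion above.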

-

Maybe gothic weddings is the way to go? OMG, of course it is. This travelling stuff will rot your brain I tell ya! But better to refer to Perfect Days than to Train To Busan - I'm not interested in a holiday THAT exciting. But talking about contrast ratios, yes, the frame grabs were for reference of how dark they print finished films and how much contrast they tend to have in the finished print. The files from the GH7 are incredible, and certainly the best I've ever owned, so dealing with these challenges is new to me. Previously I would have files clipped in-camera and the shots would just be binned, or they'd be super contrasty and without proper colour management I'd be struggling to make them look natural and without radical colour casts etc, so this is a very welcome development! I wouldn't mind holidaying in overcast conditions actually - where do I apply to get one of those remotes that changes the weather?

-

Visited Jikjisa Temple yesterday, which was founded in the year 418, and looks straight out of Crouching Tiger Hidden Dragon. Everywhere you turn there are compositions that make you gasp. The main challenge with full sun like this is the high contrast scenes, and then grading them so they don't look faded and also don't have half the image almost white or half of it pitch black. This one has slightly more contrast than I'm happy with, but it gives you a sense of the look things start to drift into. When I'm grading them for real, I think I'll likely use large power-windows and pull things down or up using those. As a reference, here's the kind of shot I'm talking about where the contrast ratios are just overwhelming. The information is all in the files, so I'm capturing it all, I just have to pull it out.

-

Thanks! I've literally used the same saved powergrade for each set, adjusting it a little perhaps but not by much. I think I backed off the halation on that set, but I can't remember making any other change. They do look nicer though, so maybe it's just the exposure levels, or maybe it's just the subject matter? Sunset and blue hour always has a certain magic to it.

-

Just had a look at some stills from it, and yes, much darker than my other references. I looked through all the John Wick franchise, as well as some other action series too, and one way they can make these things all look dark is just have them all set in night-exteriors or interiors without visible daylight. I think there's lots of tricks like this. For example, I read an article on how movies often look "larger than life" which has many factors, but one was that there were almost three times the number of shots that were taken below eye-level as those at or above eye-level, so most of the movie was literally looking up at the characters.

-

Last night's adventures. This time I graded with MBP monitor set to middle brightness and referred to some references. I swapped from the 14-140mm f3.5-5.6 + vND combo to the 12-35mm f2.8 lens without vND once the sun went down, as it's easier to shoot with the constant aperture when zooming. The stalls and restaurant areas were too bright for F2.8 and base ISO 500, so had to stop down on some shots. The massive lanterns in the parade were also a challenge, being so much brighter than the ambient levels, and I didn't want to smash them into the highlight rolloff of the emulation. I tried walking with it at 12mm but it was IBIS-wobbles galore, so next time when walking I'll probably use my iPhone. I compared my iPhone 12 mini cameras to my GH5 and the normal iPhone camera was equivalent ISO noise to GH5 with F2.8 and the iPhone wide was equivalent to GH5 at F8. @MrSMW can tell me if these are still too dark 🙂 I based the FLC grade on the 35mm preset, but I'm thinking that it's a bit too low-end, and the 64mm preset is a bit clean, so I might create my own 50mm preset for something in between.

-

Edited and uploaded the stabilisation test video. Test of how well the GH7 and 14-140mm combination is able to stabilise hand-held footage. GH7 was using the Boost IS mode, which is a more stable version of IBIS (but it doesn't do electronic IS, that's a different mode, so this mode has no crop). My hands were more shaky than normal when filming this, and the first shot was standing up without leaning on anything, and the second shot of the hotel was sitting down. Results aren't perfect, but they're good enough for my purposes.

-

I don't mind at all, I mentioned it partly to raise the topic as I'm not sure about it. What I need to do is to get a bunch of references and study how they distribute the DR of the images into the final grade, so I can get a sense of things. A bit like how cinematographers get an understanding of levels and ratios, using false colour or a light meter. I pulled a few reference stills from the movie Perfect Days which is set in Seoul to compare: and some from Kill Bill vol 2, as it's a bit more contrasty: and The Matrix, because it's got that feeling of the matrix not being a real place, which gets to the idea that Seoul is like a world unto itself: or The Killer has quite a dark grade to things: There's something about the rich dark areas, and having rich dark colours that I'm chasing, but obviously I'm yet to work out what it is and how to get it. Works in progress though!

-

First day out shooting in Seoul. Here are some images. These are all frame-grabs, were ETTR, had a look put over them with Resolve FLC plugin, and I adjusted exposure on each (and contrast on the odd one or two) and that's it. I'm sure I will finesse them once I start editing for real, but this is essentially just looking at my dailies. Setup is incredibly easy to use thanks to the huge DR, AF is super-snappy, the 14-140mm zoom gives so much flexibility and I'm finding I'm using the long end a lot more than I thought I would. I'll post some video footage of it later, but I'm also finding that I can hand-hold at 140mm (280mm FF equivalent) and with the OIS + IBIS working together get almost no movement in the frame at all, and with a slight crop in post I'd get locked-off images. At anything below 80mm or 100mm the frame is locked and won't need any stabilisation in post. Incredible results.

-

Yeah, every time I think about how you might be composing for both horizontal and vertical I just imagine you turn into Wes Anderson and frame everything centred. There are probably other ways to go about it, but I'm glad it's not something I have to work through!

-

In all seriousness though, now that everything is devices and standards don't matter anymore, it's worth taking a few images and cropping them to different aspect ratios and seeing how the images feel. I've become very fond of 2:1 lately. Wider than 16:9 or 17:9, but not so wide that normal-sized screens force the subjects in the frame to be too small, as can happen with 2.35:1.
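If you want to try this on your own frames, a small sketch like the one below works out the largest centred crop for a given aspect ratio. The function name and the example resolutions are just illustrative assumptions, not anything specific to a particular editor.

```python
def crop_to_aspect(width, height, aspect):
    """Largest crop (w, h) of a width x height frame at the given aspect (w / h)."""
    if width / height > aspect:
        # Frame is wider than the target: keep full height, trim the sides.
        return round(height * aspect), height
    # Frame is narrower than (or equal to) the target: keep full width, trim top and bottom.
    return width, round(width / aspect)


if __name__ == "__main__":
    for name, aspect in (("2:1", 2.0), ("2.35:1", 2.35), ("1:1", 1.0)):
        w, h = crop_to_aspect(3840, 2160, aspect)
        print(f"UHD cropped to {name}: {w}x{h}")
```

On a 16:9 UHD frame, 2:1 only loses a thin strip top and bottom, whereas 2.35:1 takes off roughly a quarter of the height, which is why the subjects start to feel small on a normal screen.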

-

The only video one needs on aspect ratios:

-

You'd be mad not to wait for the 17K version. I mean, is 12K enough? Really?

-

The low-contrast look has been fashionable for a long time, since people started shooting in LOG and then editing in it and getting used to how it looks. Colourists talk about this problem like it's been around for many years and simply never went away. This caused a feedback loop where directors fought the colourist to keep things bland, which made films get released with bland grades, and then this became the reference for future directors and also all the amateurs. Also, it's quite hard to add contrast in post because it requires a clarity of thinking that many do not possess. When you look at your image and see it's captured all this information in the shadows and highlights and then apply a healthy level of contrast you immediately miss the details that are now crushed in the rolloffs. This leads to the question of what parts of the scene can be obscured. The only way to be able to answer this question is to understand what the shot is about, and therefore what is relevant. This is a level of maturity not yet attained by many. I didn't really do a systematic comparison with the G9ii, but in general terms, why would I pay several thousand dollars for a new camera that isn't the leading offering, when the flagship is only a few hundred more? Certainly, if internal Prores and cooling fans were absent on the G9ii then either of those would probably have been an instant disqualification. The size comparison is pretty moot as well, for street work I'd consider both to be full-sized bodies.

-

Yes, that's the one. K&F Concept 58mm True Color Variable ND2-32 (1-5 Stops) ND Lens Filter, Adjustable Neutral Density Filter with 28 Multi-Layer Coatings for Camera Lens The key phrases are "True Color" and "ND2-32 (1-5 Stops)". They sell ones that have a wider range, but aren't as high quality and will have greater colour shifts over the operating range. Having hard stops is the key to preventing you pushing it too far and getting into the range where the performance is poor (which I think is inevitable of this design, so the hard stops just stop you before you get there).
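In case the naming is confusing, the "ND2-32" and "1-5 Stops" labels are the same thing expressed two ways: each stop halves the light, so the stops are just log base 2 of the ND factor. A quick sketch (the function names are made up for illustration):

```python
import math


def nd_factor_to_stops(nd_factor):
    """Stops of light reduction for an ND factor (ND2 -> 1 stop, ND32 -> 5 stops)."""
    return math.log2(nd_factor)


def stops_to_transmission(stops):
    """Fraction of light that gets through after the given number of stops."""
    return 2.0 ** -stops


if __name__ == "__main__":
    for factor in (2, 4, 8, 16, 32):
        stops = nd_factor_to_stops(factor)
        print(f"ND{factor}: {stops:.0f} stops, "
              f"{stops_to_transmission(stops):.1%} transmission")
```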

-

Thanks.. To be honest I just find it baffling that everyone is throwing the word "cinematic" around and yet all the colour grades look like they haven't been converted from LOG to 709. It's one of the easiest changes you can make in post to take your footage from video to cinema, and yet hardly anyone does it. Pushing as much contrast and saturation as you can was the best film-making advice I ever got.

-

HA! I didn't notice that until you pointed it out, as I hadn't hit play on that shot yet. I guess nothing is immune to moire, although this was worse than I would have thought. The GH7 has no crop in its full resolution modes, and none in the 1080p modes, but the 4K modes do have slight crops - with the C4K having slightly less crop which is why I chose it, so I wonder why that is and what tom-foolery might be going on with that. The bitrates for the native resolutions are pretty brutal without Prores LT, so I'll likely stick to the C4K mode unless it starts being an issue.

Thanks! I didn't get the LogC upgrade. I haven't seen any tests that were done properly so am assuming that it's not worth the cost unless proven otherwise. I also figure that even if there is some magic in there, I'm taking a hammer to the image with the FLC anyway, so it's not like I'm precious about it. The only thing I am precious about is getting images that have the right aesthetic to support the subject matter and get out of the way rather than being distracting.

When I saw Goodfellas and a bunch of film trailers projected on 35mm film, the two most stunning things about the images were:

1) how fundamentally flawed they were from a technical perspective
2) how quickly and completely those incredible flaws evaporated and you saw effortlessly through and into the scene

I'm still at the beginning of my FLC journey, but I'm convinced this pipeline is capable of removing the digital distractions from the image that bug me so much.

-

Part 2... how did I get here?

I've written about this previously, but the summary is that I went through a sequence of trial and error, continually bumping up against the limits of the hardware, the software, my skill, and mindset.

Setup: GH5, fast manual-focus primes

I shot a bunch with the GH5 and a set of very fast MF primes. I chose this setup as it had 10-bit LOG, IBIS, and the fast primes gave good low-light and some background blur. I knowingly sacrificed AF, essentially swapping a fast / accurate / robotic AF for a slow / human / aesthetically-appropriate focusing mechanism. The MF worked, but not always (especially for my kids, who wait for no-one), and took time and effort to operate the camera away from the things that literally every other crew member does on set. The 10-bit LOG worked, but wasn't a properly supported LOG profile so didn't colour grade flawlessly in post. The primes provided the low-light and shallow-DoF but the shots I missed because I couldn't change lenses fast enough were more valuable in the edit than having shallow-DoF. The DR was lacking.

Setup: GX85 with 12-35/2.8

I owned the GX85 and 12-35mm zoom, so I did some testing. I loved the speed of AF-S, and the deeper DoF meant that the number of shots unusable due to missed focus dropped to almost zero. I tested it with low-light and for well-lit night-time areas, like outdoor shopping malls, it was sufficient. The Dual IS (IBIS + lens OIS) was absolutely spectacular and a welcome addition, and having a zoom made me realise why doco and ENG shooters have them as standard issue. The DR and 709 profile was a real limitation though, and I really felt it in the grade. It was around this time I figured out proper colour management, and the Film Look Creator was released. These made a huge boost to grading the GX85, despite its 709 profile.

The mindset shift

The fundamental pivot was in mindset. As social media became faster and more showy, I noticed the gulf between it and 'real' film-making more and more. I went minimalist, thinking more and more about the days before digital where the process was to shoot as best you could, cut it, do sound-design, and that was it. The focus was on what was in front of the camera, how the cuts made you feel, and sound design that supported that vision. Even those shooting docs on 16mm film could make magic. Without throwing away the baby with the bathwater, I decided to re-focus. To shoot what I could shoot, to learn to cut with feeling, and to simulate a film-like colour grading process where the look was applied and only very basic adjustments were made.

Setup: OG BMMCC with 12-35/2.8

I owned these already, and on my last trip to South Korea I took these as well as the GX85. My shooting moved from shooting people I knew to shooting more general scenes, giving me more time and taking the time pressure off. The setup was large (comparatively!) and very slow to work with, but it validated my mindset shift. The images were organic and rich, but in a way that drew you into their contents, rather than towards the medium. The DR was finally sufficient, and the colours were delightful. But the monitor wasn't bright enough, the lack of IBIS meant that the OIS stabilised tilt and pan but not roll, so the images all needed to be stabilised in post, but had roll motion blur due to the 180 shutter. The low-light wasn't ideal either, even with fast lenses. The moire from the 1080p sensor was real and ruined shots. I also got a lot more comfortable shooting in public with a more visible camera setup.

New Setup: GH7 with 14-140 and 12-35.....

So what I wanted was the best of all worlds.

Dynamic range. I knew the OG BMMCC and OG BMPCC were the same/similar sensor, and I knew the GH7 had more. What I didn't expect was how much more that would be in real life. Here's a high DR scene from Monday. Here it is without the FLC, which I set to add contrast. Now with the shot raised by 3 stops. Notice the detail in the railings and under the eaves on the right. Now with the shot lowered by 3 stops. Notice that the roof, and even the body of the car are still not clipped, with the only clipping being the sun reflecting off the car window. In grading, there is more DR than you can fit in the DR of the final shot - assuming you haven't developed Stockholm Syndrome for your LOG footage that is.

Low light. I had previously established that the GX85 and 12-35mm F2.8 were good enough for well-lit night locations. I also knew that the BMMCC at ISO800 and the 12-35mm F2.8 set to a 360 shutter was good enough for semi-well-lit night locations. I also knew that the BMMCC with my 50mm F1.2 lens was passable at the darkest scenes I shot in Korea - which were from the hotel window at night. I've compared the GH7 vs the GX85 and BMMCC and the noise profiles are all very different, but I concluded that the GH7 had probably 2-4 stops of advantage over those. This means that I might be able to shoot well-lit night locations with the 14-140mm lens, and might be able to shoot from the hotel window at night with the 12-35mm lens. I'm still contemplating if I should take my F1.2-1.4 primes on my upcoming trip, but if I don't I'll still be able to shoot 99% of what I want to with the zooms.

Camera size. Perhaps the only drawback when compared to my previous setups. I'll be taking the GX85 and 14mm F2.5 pancake lens as the pocketable tiny camera. This combo is no slouch by itself, so although it lacks some of the specs from more serious cameras, it is easily in the capable category, especially when helped by Resolve+FLC and from a shoot-cut-sound-publish mindset.

Absolute speed. When it comes to absolute speed, nothing beats a smartphone, which is always in your pocket, can be pulled out and rolling in seconds. This is also a serious tool when combined with Resolve+FLC and a shoot-cut-sound-publish mindset.
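For anyone following the stop arithmetic in this post (raising or lowering a shot by 3 stops, F1.2 vs F2.8, 180 vs 360 shutter), here is a minimal sketch of how those trade-offs convert to stops. The function names are illustrative and the 25fps frame rate in the example is an assumption, as the frame rate isn't stated above.

```python
import math


def aperture_stops_gained(f_from, f_to):
    """Stops of light gained by opening up from f_from to f_to (e.g. F2.8 -> F1.2)."""
    return 2 * math.log2(f_from / f_to)


def shutter_stops_gained(angle_from, angle_to):
    """Stops of light gained by changing shutter angle (e.g. 180 -> 360 degrees)."""
    return math.log2(angle_to / angle_from)


def shutter_time_s(angle_deg, fps):
    """Exposure time in seconds for a shutter angle at a given frame rate."""
    return angle_deg / (360 * fps)


def linear_gain(stops):
    """Multiplier on linear light for an exposure change in stops (+3 -> x8)."""
    return 2.0 ** stops


if __name__ == "__main__":
    FPS = 25  # assumed frame rate
    print(f"F2.8 -> F1.2: {aperture_stops_gained(2.8, 1.2):.2f} stops")
    print(f"180 -> 360 shutter: {shutter_stops_gained(180, 360):.0f} stop, "
          f"1/{1 / shutter_time_s(360, FPS):.0f}s at {FPS}fps")
    print(f"+3 stops in the grade: x{linear_gain(3):.0f} linear gain")
```

Read this way, a 2-4 stop low-light advantage is in the same ballpark as the roughly 2.4 stops between an F2.8 zoom and an F1.2 prime, which fits the reasoning above about the zooms covering most of what the fast primes used to be needed for.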

-

I have a new setup and pipeline and I'm really happy with it.

- GH7 shooting V-Log in C4K Prores 422 internally, at around 500Mbps
- 14-140mm F3.5-5.6 lens for daytime, 12-35mm F2.8 for night-time
- K&F True Colour 1-5 stop vND

Pipeline in Resolve:

- CST to DWG as working colour space
- Plugin for basic shot adjustments
- Film Look Creator for overall look (and for taking the digititis out of the image)
- ARRI709 LUT to get to 709 output

I went on a walk on Monday to test the full setup, and it was a crazy hot day (37C/99F) and direct midday sun, so seriously challenging conditions. Here are a few grabs (be sure to click-through rather than viewing the preview files embedded in the post).

My notes and impressions - while shooting:

- Setup was GH7, 14-140mm lens, vND, and a wrist-strap and that's it
- I used the integrated screen, showing histogram, zebras, and focus-peaking to monitor
- I used back-button focus to AF before hitting record, so no AF-C going on while shooting (and randomly changing its mind about what to focus on)
- All shots were 14-140mm at F5.6 for constant exposure
- The K&F 1-5 stop vND had enough range, when combined with the DR of the GH7, so I never needed to change settings, despite going in and out of shadow (and even inside, which isn't included in the above images)
- The vND had a much more consistent sky and colour render than my old (crappy) vND, so I'm really happy with it
- I did ETTR and brought images down in post, but my tests indicated that V-LOG is very linear so there are a good few stops of latitude there
- The "tripod mode" of IBIS, which locks the frame completely, was very effective (despite me being hot and not having eaten for hours) and I could hand-hold past 70mm without it needing to drift, and even at 140mm (280mm FF equivalent) the shots will be fine with a bit of stabilisation in post (C4K on 1080p timeline)
- I shot about 18mins of footage in about 1.5 hours, camera was on most of the time with screen at full brightness, and didn't get any notifications about the battery, and didn't look to see how much was left since I have a spare

My notes and impressions - in post:

- I chose a Film Look Creator preset and then just messed with it for maybe 10 mins, while scrolling back and forth through the footage, and I deliberately pushed the contrast to create a really strong sense of the contrast between the beating sun and the deep shadows
- Shots had exposure adjusted (obviously, due to ETTR) and some had contrast lowered and a few had slight WB tweaks, but that's it
- I never felt like I was fighting with the footage, and it didn't feel like work when creating the look.. I've shot with a lot of cheap cameras with tiny sensors and you always feel like you're trying to make gold out of lead, but playing in the FLC was more like choosing between a large range of high-quality options
- I used another copy of the FLC to adjust exposure etc per shot, with it set to not impart any 'look'. The advantage of that is that in Resolve there is a mode (Shift-F) that maximises the preview image and gets rid of the GUI except for the vertical toolbar on the right-hand-side where the DCTL and OFX plugins are, so it's a way of getting almost a full-screen view but keeping the controls visible.. very useful if you don't have a control surface or a second monitor handy.

I'll talk more about my thought process and how I got to this setup in a later post, and also go into some of the technical stuff (DR, high-ISO, etc) but more importantly than that, I finally feel like the tech has come of age. What I mean by that is that I now have a setup where:

- I can shoot with a conveniently sized setup that doesn't need a rig and is ergonomic to use
- It has the right usability features, such as histograms, zebras, focus-peaking etc internally
- The monitor is bright enough
- The GH7 plus lenses (14-140mm F3.5-5.6 and 12-35mm F2.8) are long enough and fast enough to shoot what I see, without being too large, heavy, or prohibitively expensive
- It has enough spec that it can deal with almost all the situations that I actually shoot in, with enough DR for the sun, enough ISO for night-time, and fans so it doesn't overheat before I do, etc
- It shoots internally using a colour space and codec that don't look cheap/amateurish and make me think about upgrading
- It doesn't fight with me in the colour grade
- Resolve and the Film Look Creator are able to easily give me the flexibility in post to match images and correct any weaknesses from shooting (e.g. if there's a bit of movement when shooting hand-held at 140mm)
- Resolve and the Film Look Creator are able to remove the 'digital/video' look and instead give me a range of options that don't look artificial and most importantly, contribute a feeling to the footage without distracting from the content of the images (this is, after all, the entire purpose of what we're doing here.....)

For the first time it feels like I'm getting the results I want because of the equipment I have, rather than in spite of it.
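As a rough guide to what that bitrate means for cards and drives, here is a back-of-envelope sketch. It assumes a constant 500Mbps and decimal gigabytes, ignores audio and container overhead, and the function name is just illustrative.

```python
def footage_size_gb(bitrate_mbps, minutes):
    """Approximate file size in decimal GB for a given video bitrate and duration."""
    bits = bitrate_mbps * 1_000_000 * minutes * 60
    return bits / 8 / 1_000_000_000


if __name__ == "__main__":
    size = footage_size_gb(500, 18)
    print(f"18 minutes at 500Mbps is roughly {size:.1f} GB")
```

So a walk like the one described here (about 18 minutes of footage) comes to somewhere around 65-70 GB, which is worth knowing when deciding how much storage to pack for a trip.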

-

I loved that book! I should re-read it.. I definitely agree that exceptional craft is not seen but is felt. Perhaps my whole film-making journey has been gradually learning to see what makes the great things great, as at first I knew they were great but couldn't tell you why.

When thinking about your video, and visual storytelling in general, I keep thinking of poetry. In poetry you have things like alliteration, rhythms, rhyming, as well as metaphor, etc. For example, having a sequence of walking which ends with a mile-marker sets up the pattern that lots of walking happens to get to a mile-marker, and then you can give a short burst of shots of mile-markers and we know that lots of walking was implied. Having two sequences where the structure was similar but subject matter was different is a way to draw parallels between different subjects. Having a theme throughout the edit, perhaps from the editing style, perhaps from the compositions or other choices during filming, can 'rhyme' through an edit. There are lots of other examples possible.

I watched an analysis of the first Nolan film, Tarantella, and a theme was that the non-linear sequences seem random at first, but as the film progresses, patterns begin to emerge and as links form between new shots/sequences and previous shots/sequences, your brain becomes aware of more and more patterns, making the experience richer. I think this richness of patterns is how I experience craft. Potentially I'm becoming more consciously aware of emerging patterns and themes (which can seem to me like comments or questions or both) and potentially the feeling is building up (which I interpret as there being patterns but I'm not registering them consciously), but most likely both.

There are gems in the doom-scrolling which show examples of vibrant craft, but I think the quadrant I don't see that much is of gentle / relaxed / understated craft, rather than hype craft. Perhaps this is just that attention editing is perceived as being the only path to success on social media (it's not, but it's often perceived that way), or it might be that those capable of such craft are trying to make a career from it and so everything is a showreel of-sorts.

As someone who has more than enough excitement/stress in my life without needing to find more of it online in my spare time, I really appreciate something that is relaxed, but is also intellectually stimulating and emotionally communicative. Filming subjects that contain stillness and space, and communicating that feeling (that emotional reality) within the edit without losing the attention of the viewer is a more significant challenge than retention through frenetic hype edits set to music written for the club at 2am.

-

Accidental perhaps, but very welcome! A very significant part of me deprogramming myself from the bubble of social-media film-making was looking at professional work. After my analyses I concluded that any shot-on-location TV doc piece that was above average would have the equivalent quality of the top 20% of social media content. The pieces winning Vimeo Staff Picks would only translate to good-but-not-great in TV land. So your instincts from client work put you in very good stead in comparison to the very shallow pool of talent in online travel film-making. I think when you have any professional level as your reference point then it's difficult to see how much extra there is going on!

-

Art is what happens when you stop thinking about the equipment, technical specifications, and shallow DOF. Therefore the only options for an art camera are cameras that shoot RAW at 3K or less, and to avoid Bokeh fever the sensor should be smaller rather than larger. OG BMPCC or BMMCC are the obvious choices, but these days the OG Alexas are coming within striking distance of the average new MILC - and are a much better choice because instead of pixel peeping 8K you can take your head out of your ass and go point the camera at something interesting.... which is required for "art" to even become possible. Of course, higher spec cameras can be used to make art, but the art happens in spite of the camera, not because of it, so I'd wager they're not "art cameras" they're tools for artists.