Everything posted by kye

-

I disagree - the budget is the budget - it doesn't matter the split between production and post. If I get my iPhone and walk through a shopping centre and record a 90 minute single take, then go home and spend $50M turning that clip into an action film by replacing everything with VFX, then that's still a blockbuster film, I still filmed it, and the question is relevant. The answer to the question is no, but that answer has nothing to do with the camera. This thread discussed the camera used to shoot the film because this forum only ever talks about cameras. You could start a thread asking if AI will use GMOs to fix poverty in sub-Saharan Africa and we would discuss the camera used to shoot AI videos.

-

The latest test video from Matteo shows quite a difference in noise between the BMCC6K and BMPCC6K in the super-dark areas when he boosts the image up a bunch, so unless he did something wrong (which I doubt), it's noisier than the previous model. However, the noise isn't visible unless he boosts the image to reveal it, so it's likely only an issue if you're not able to expose properly and/or want to see in the dark like night-vision-goggles. Of course, this is the internet, so every tiny detail must be completely removed from reality and then blown up beyond any sense of reasonableness (then everyone can latch onto the least significant issues and argue solely based on those!).

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

Sounds like he needs to learn about colour management. The more I learn, the more I realise "I can get better colour with X" really just means "I don't use colour management and I prefer the LUT I happen to use for X over the LUT I happen to use for Y". In my recent attempt to match GH5 to OG BMPCC, I was surprised at how easy it was, and how difficult I found it in previous attempts.

It's taken me a long time to get here, but now I'm here I look at cameras fundamentally differently - they are just devices that capture an image (with whatever DR / noise they have), process and compress that image (NR, sharpening, compression) and then I pull it into Resolve and (assuming they have a documented colour space) I can do whatever I like, applying whatever colour science I like. The thing that matters most is the usability of the camera to capture things and work reliably etc.

Half the amateur camera / colour grading videos are "how do I grade Sony" "how do I get the best from Fuji", but the colourist videos talk about colour management, and then talk about technique and workflow. There's a reason for that.

-

Just noticed one other thing - when you're converting from ACES to Rec709 you might want to apply some Gamut compression, otherwise the signal might just clip on output. You don't have to do it in the transform, you can do it beforehand if you like, it's just something to keep an eye on while you're grading 🙂

-

That all seems correct. I use DaVinci as my intermediary, but I don't know of any arguments that put one above the other. Setting the Timeline Colour Space is the hidden one that often isn't mentioned, so good to see you're on top of that as well. Just to test it, I suggest pulling in a bunch of footage from a range of cameras onto the same timeline and seeing how that feels.

If you were to do lots of work with multiple cameras, I'd suggest the following:

1) Put all the clips from each camera into a Group in the Colour Page (this unlocks two extra node graphs - the Group Pre-Clip and Group Post-Clip)
2) Put the camera-to-ACES transform in the Group Pre-Clip
3) Put the ACES-to-Rec709 transform in the Timeline

That way the Clip graph can be copied between any clip to any other clip easily for anything you want to apply to the clips, and anything you want to put across all clips in your timeline can go in the Timeline graph. Just a note that if you do this, the Timeline graph will apply to everything in the timeline including titles / VFX / etc, which doesn't work for some folks.
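To make the ordering concrete, here is a rough sketch of that layout as plain function composition. It doesn't talk to Resolve at all, and the group names and stage labels are made up for illustration. It only records which graph touches a clip in which order (Group Pre-Clip, then Clip, then Timeline), which is why the clip grade can be copied between cameras unchanged.

```python
# Illustrative only: this does not call Resolve. It just records the order in
# which the node graphs would touch a clip under the layout described above.
from typing import Callable, Dict, List

Stage = Callable[[List[str]], List[str]]


def stage(label: str) -> Stage:
    """Return a stand-in node graph that appends its label to the history."""
    def apply(history: List[str]) -> List[str]:
        return history + [label]
    return apply


def render(camera: str, clip_grade: str,
           group_pre: Dict[str, Stage], timeline: Stage) -> List[str]:
    """Run one clip through Group Pre-Clip -> Clip -> Timeline."""
    history: List[str] = []
    history = group_pre[camera](history)   # per-camera input transform
    history = stage(clip_grade)(history)   # camera-agnostic clip grade
    history = timeline(history)            # shared output transform
    return history


# Hypothetical groups, one per camera (names are assumptions for this sketch).
GROUP_PRE: Dict[str, Stage] = {
    "GH5": stage("GH5 -> ACES"),
    "BMPCC": stage("BMPCC -> ACES"),
}
TIMELINE: Stage = stage("ACES -> Rec709")

if __name__ == "__main__":
    # The same clip grade is reused unchanged on clips from both cameras.
    print(render("GH5", "contrast + saturation", GROUP_PRE, TIMELINE))
    print(render("BMPCC", "contrast + saturation", GROUP_PRE, TIMELINE))
```

The only per-camera piece is the entry in `GROUP_PRE`; everything downstream of it works in the shared space.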

-

Insta360 just posted a teaser for a new action camera. The only things I can tell are that it has a flip-up selfie screen, that it has a Leica lens, and that they'll cost a billion dollars each because they appear to be manufacturing them in a particle accelerator. I'm also guessing it has AI in it, because of the red letters in the thumbnail, but then again, everything has AI in it these days, so....

-

I think we're getting off track here.

1) The first job is getting the signal converted to digital. I pulled up a few random spec sheets on Sony sensors of different sizes and they all had ADC built into the sensor, and they specifically state they're outputting digital from the sensor. This effectively takes analog interference out of the design process, unless you're designing the sensor, which almost no-one is.

2) I am talking specifically about error correction. To be specific, to send digital data in such a way that even with errors in transmission the errors can be detected and corrected. The ability to send digital data between chips without it getting errors in it is fundamental, and if you can't do it then the product is just as likely to get errors in the most-significant-bits as the least-significant-bits, which would effectively add white noise to the image at full signal strength.

3) I was saying that the deliberate manipulation of the digital signal before writing it to the card as non-de-bayered data (ie, RAW) is the area that I didn't know existed and might be where some of the non-tangible differences are. Certainly it's something to be looked into more.

4) Going back to the idea of spending money on training, I stand by that. Here's the problem - there is good info available for free online, but it's mixed in with ten tonnes of garbage and half-truths. How do you tell the difference? You can't, unless you already know the answer. So, how do you get past this impasse? You pay money to a reputable organisation for training... To be frank, the majority of benefit I got from the various training courses I've purchased has probably been from un-learning the vast quantity of crap that I swallowed from people who looked like they knew what they were doing online but really had just enough knowledge to be dangerous.
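For anyone who hasn't seen error correction in action (point 2 above), here is a minimal sketch of one classic textbook scheme, Hamming(7,4). To be clear, I'm not claiming this is what any camera or sensor link actually uses, it's just the simplest example of "send extra bits so a flipped bit can be found and fixed". The function names are mine.

```python
from typing import List


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits as 7 bits laid out as p1 p2 d1 p4 d2 d3 d4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(code: List[int]) -> List[int]:
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome is 0 for a clean word, otherwise the 1-based position
    # of the single flipped bit.
    syndrome = s1 + 2 * s2 + 4 * s4
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


if __name__ == "__main__":
    word = [1, 0, 1, 1]
    sent = hamming74_encode(word)
    received = list(sent)
    received[4] ^= 1  # simulate one bit flipped in transmission
    print(hamming74_decode(received) == word)
```

The receiver ends up with exactly the bits that were sent, which is the sense in which this kind of correction is invisible in the output.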

-

I wish they'd add the ability to go higher.

-

That's not a brutal business perspective. This is a brutal business perspective - clients will absolutely be able to tell the difference between buying the better camera and buying the cheapest one and spending the extra on training. Training on lighting, composition, movement, etc.

In terms of what is "better"... in that much referenced blind test with the GH4 in it, many of the Hollywood pros preferred the GH4 over high-end cinema cameras. Talking about the difference between two RED sensors is fine, but trying to apply that difference to the real world is preposterous.

Well, it's one of the following:

1) Processing in the analog domain before the ADC occurs
2) Noise reduction or other operations that occur in the digital domain that DO change the digital output
3) Noise reduction or other operations that occur in the digital domain that DO NOT change the digital output

The last one is simple error correction, and will be invisible. If it's the first one, then it makes sense to do noise reduction, but (to be perfectly honest), if you have 23 crossings in between your sensor and the ADC chip then you deserve to be fired from the entire industry, and probably would be because the image would look like ISO 10 billion. This leaves the middle one, which is explicitly what ARRI are doing, so that's what I think we should be talking about.

-

That caught my eye too. The thing that made me really curious was that he said that on the BMPCC "that image signal crosses other things 23 times on that one board, and in those 23 crossings every time it crosses it gets a noise reduction afterwards because otherwise it would be a completely unrecognisable image afterwards".

This makes no sense to me, from my understanding and experience in designing and building digital circuit boards, so according to my understanding the statement is completely wrong. However, I trust that the statement is correct, because the source is infinitely more knowledgeable than I am. There is obviously something there that I don't understand (likely a great many things!) and this is something that isn't talked about anywhere.

It does make me wonder, though, if this might be the reason that some cameras look more analog than others, even when they're recording RAW. The caveat is that the differences we're used to seeing between sensors, for example between the OG BMPCC and the BMPCC 4K, might simply be due to subtle differences in colour science and colour grading. Sadly, the level of knowledge of these things applied to most camera tests is practically zero, and it might be that with sufficient skill any RAW camera can be matched to any other RAW camera and that there is no difference at all, beyond differences that occurred downstream from the camera.

Another data point for RAW not really being RAW is that when ARRI released the Alexa 35, they talked about the processing that happened after the data was read from the sensor and before it was debayered (source: https://www.fdtimes.com/pdfs/free/115FDTimes-June2022-2.04-150.pdf, page 52). Not only is there some stuff that gets done in between these two steps, there's a whole department for it! RAW isn't so RAW after all.....

-

One thing I find a real challenge these days is the pace of real learning. Once you've watched a few dozen 5-15 minute videos that explain random pieces of a subject (likely sprinkled with misunderstandings and half-truths) then going to a source that is genuinely knowledgeable is often very difficult to watch, because not only do they start at the beginning and go slowly, they also repeat a bunch of stuff you've heard before. The pay-off is that they are actually a reliable source of information and that not only will they likely fill in gaps and correct mis-information, but they might actually change the way you think about a whole topic. I had this with a masterclass I did from Walter Volpatto - I completely changed my entire concept of colour grading. It was a revelation. It's actually a bit of a strange thing because I can see that lots of people think the way I used to, but there's no easy way to get them to flick that switch in their head, and when you try and summarise it, the statements sound sort-of vague and obvious and irrelevant.

-

The other thing to mention, beyond what @IronFilm said, is that the thing that matters most for editing is if the codec is ALL-I or IPB. To drastically oversimplify, ALL-I codecs store each frame individually, but IPB codecs define most frames as incremental changes to the previous frame, so if you want to know what a frame looks like in an ALL-I codec you just decode that frame, but in an IPB one you have to decode previous frames and then apply the changes to them. In some cases, the software might have to decode dozens or hundreds of previous frames just to render one frame, so the simple task of cutting to frame X in the next clip is a challenge, and playing footage backwards becomes a nightmare. Prores is always ALL-I, and h264 and h265 codecs CAN be ALL-I too, although it's not very common. This is probably the cause of almost all of the performance differences between them.
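Here's a rough back-of-envelope sketch of that decode cost, using the same drastic oversimplification: a keyframe every N frames, and every other frame depending only on the frame before it. It ignores B-frames (which can also reference later frames) and any caching a real decoder does, and the function names are just mine.

```python
def frames_to_decode(target: int, keyframe_interval: int) -> int:
    """Frames that must be decoded to display frame `target`.

    Assumes a keyframe every `keyframe_interval` frames, with every other
    frame depending only on the frame immediately before it.
    An interval of 1 models an ALL-I codec.
    """
    if target < 0 or keyframe_interval < 1:
        raise ValueError("target must be >= 0 and keyframe_interval >= 1")
    return target % keyframe_interval + 1


def naive_reverse_cost(first: int, last: int, keyframe_interval: int) -> int:
    """Total decodes to play frames last..first backwards with no frame cache."""
    return sum(frames_to_decode(f, keyframe_interval)
               for f in range(last, first - 1, -1))


if __name__ == "__main__":
    for interval in (1, 12, 60):
        print(interval,
              frames_to_decode(59, interval),
              naive_reverse_cost(0, 59, interval))
```

With an interval of 1 every seek costs one decode. With a long interval, the cost of a seek depends on how far the frame is from the last keyframe, and naive reverse playback pays that cost again for every frame.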

-

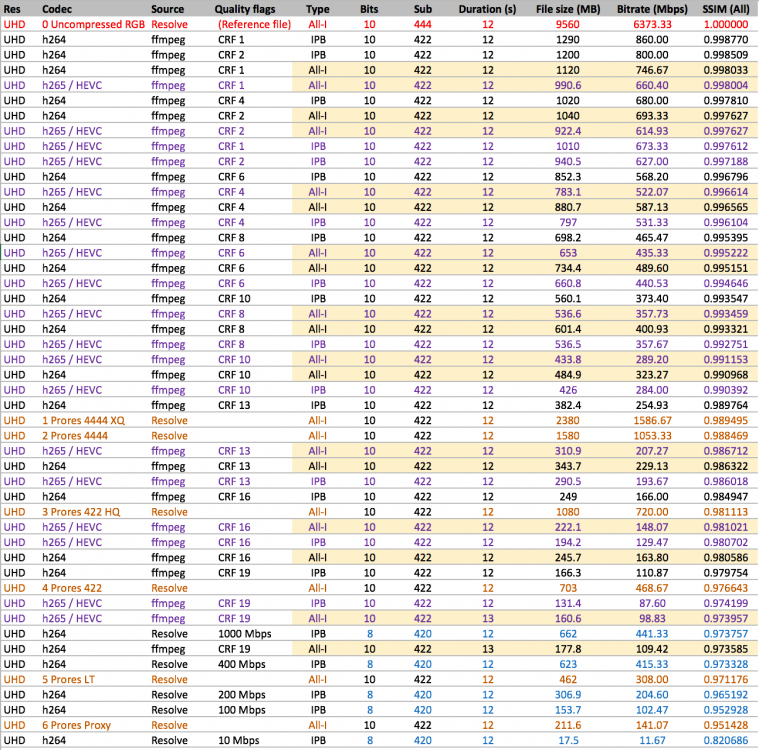

Thinking about this more, one of the things I liked about Prores over h264/5 was the aesthetic of the compression artefacts - the Prores artefacts were more organic and the h26x were more sharp/digital.

I measured the relative quality of Prores vs h264 vs h265 back in 2020 and found that h265 > h264 > prores. Here's the table sorted by SSIM (a mathematical image quality comparison measurement - I compared every image with the uncompressed version to determine that score). These are all based on the outputs from Resolve, which is known for not having a very good h264/5 encoder, so this is likely wrong, but indicative.

The things that kept me as being a fan of Prores at that time were:

1) Prores implementations were always relatively unprocessed compared to h264/5
2) Prores errors were more analog in feel rather than sharp/digital

However, Apple showed with the iPhone 14 that prores could be implemented and still have horrifically over-processed images, so that clearly showed that the over-processed look isn't a codec thing, it's a processing thing. And, I've discovered that un-sharpening footage is easy in post, and that the difference in image frequency response (MTF) between film and RAW/unprocessed digital and over-sharpened digital footage is all fixed by just un-sharpening the footage, with the over-sharpened footage simply requiring more un-sharpening than the normal digital footage.

Given these factors, I see no advantage to Prores over h264/5. In fact, now that manufacturers have insisted that all cameras are now megapixel-steroid-freaks, and given that Prores has a constant bitrate-per-pixel, the compressed RAW formats have lower bitrates than the Prores flavours, when shooting at higher resolutions anyway. This matters, as having decent downsampling in-camera isn't a given, and you're perhaps more likely to get a better compressed RAW implementation than a nice downsampling implementation.
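For anyone wondering what an SSIM score actually is, here is a stripped-down sketch. This is not the tool I used for the table: proper SSIM is computed over small local windows and averaged across the image, whereas this computes a single score over the whole pixel list, and the sample pixel values are invented. It just shows the idea of scoring a compressed image against the uncompressed reference, with 1.0 meaning identical.

```python
from typing import Sequence


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def _cov(x: Sequence[float], y: Sequence[float], mx: float, my: float) -> float:
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)


def global_ssim(x: Sequence[float], y: Sequence[float], peak: float = 255.0) -> float:
    """Single-window SSIM over two equal-length lists of pixel values.

    Simplification: real SSIM averages this quantity over many local windows.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    c1 = (0.01 * peak) ** 2  # stabilising constants from the usual SSIM definition
    c2 = (0.03 * peak) ** 2
    mx, my = _mean(x), _mean(y)
    vx = _cov(x, x, mx, mx)
    vy = _cov(y, y, my, my)
    cxy = _cov(x, y, mx, my)
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / (
        (mx * mx + my * my + c1) * (vx + vy + c2)
    )


if __name__ == "__main__":
    reference = [10, 50, 90, 130, 170, 210, 250, 30]  # made-up pixel values
    degraded = [(p // 32) * 32 for p in reference]    # crude stand-in for compression loss
    print(global_ssim(reference, reference))
    print(global_ssim(reference, degraded))
```

The score only says how close the output is to the reference mathematically. It says nothing about whether the errors look organic or digital, which is a separate question.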

-

I'd assume there will be a bunch of sample footage available in due course.. both nicely shot stuff from the brand ambassadors as well as stuff shot by the mere mortals who don't have a $2000 per-day film set to point the thing at.

The way I view cameras is this:

1) Under ideal conditions, the pros will show you the potential - the most that the camera is capable of
2) Under real-world conditions, the rest of the shooters will show you what is possible with a range of scenarios and levels of skill

The ideal results may be extremely difficult to re-create, or may be very robust and very easy to recreate - seeing what the camera does under ideal conditions does not tell you this. The rest of the shooters will give you a sense of how well the camera operates under real-world conditions and when there is not an entire production team behind the project. The mix of good results vs awful results will give you a sense of how easy it is to work with. Unfortunately, this can be a reflection of how good the camera is, or of the level of skill of the majority of shooters - not every camera will be used by people with the same distribution of skill levels.

My recommendation is to have a few reviewers / shooters who you have followed for a time and where they have similar shooting circumstances and similar skill-levels as you do, and see what they are able to do with it, and how that rates against the other equipment they have used.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

I'm sorry to hear that and glad you've recovered and are doing well. These things definitely give clarity on what is important in life! Sounds like you definitely deserve a new camera - the S5iiX looks like a killer offering. I've been playing "if I won lotto, what would I buy" recently and the S5iiX is high on that list. Enjoy!!

My only piece of advice is to just shoot a bunch of test shots and play with it to really understand how far you can push it etc. Especially shooting highly saturated, high DR scenes, and doing a series of under / over exposures, then trying to pull them all back to a normal exposure again. If you can get everything set up to where you can pull footage down / up to a normal exposure and have it look correct then you're in a good place with normal WB / exposure / saturation / etc adjustments.

-

All those arguments could be made about shooting 1080p. Good luck trying to hold back "technological progress"!! TBH, My preferences are now 10-bit LOG ALL-I h26x files (due to their manageable file sizes and down-sampling in-camera advantages) or compressed RAW (for the complete lack of processing). I think Prores is a weaker alternative to those other two options, with the only advantage being wide-spread support. Sure, some of the negatives of prores are becoming less, but if the advantages of the alternatives overtake it, then it makes sense to switch over at some point. The primary purpose of 4K over 1080p was its ability to sell televisions, but hot damn did Hollywood go to 4K anyway. Compressed RAW has all sorts of benefits over Prores, and they're even relevant to film-making not just limited to selling consumer products....

-

I've seen people saying that the G9ii is the best MFT camera ever made by Panasonic, so the comparison is being made. Of course, this leaves lots of room for improvement, as the GH6 has advantages over the G9ii, so an updated GH-line with full-sized body could exceed both, and a smaller bodied GX-line with some trickle-down features would also be a significant improvement to that line.

-

Maybe they're thinking of it like it's a great B or C camera on Venice / Burano shoots? If so, having a demo video with A9iii shots mixed in would make sense. The "lesser" folks who aren't shooting on Venice / Burano are more than capable of convincing themselves that "if it's good enough for them as a C-cam then it's an excellent choice as my A-cam!!"

-

No worries! A couple of suggestions beyond learning more about colour management (so that you've got it clear in your head)....

Learn to colour manage using Nodes, rather than using the Resolve settings. The reason I suggest this is that there are all sorts of cool little tricks you can do in Resolve by transforming to a different colour space, doing something, and then transforming back. This means that you'll be doing some colour management in the node graph. However, if you're doing that and also using Resolve's colour management menus then your colour management configuration is split between nodes and menus etc and so it can become confusing because you can't see the whole pipeline in one place.

This might be a good start for getting your head around colour management... This is Cullen Kelly, possibly the most experienced colourist on YT, and this talk is a complete introduction (ie, it's not just a fragment) to the topic.

The other thing is to rate the camera like it's an acquisition device, not from the footage. What I mean by this is that each camera you're looking at has greater image quality than you will be able to capture, because they're all high-end cameras, so the limitation will be the footage you are able to capture with it. This comes down to workflow. You need to be able to understand how the camera works and how to get the optimal results from it, how to set the camera up optimally, you'll need to get it prepped, get it to the location, carry it, identify good locations while carrying it, set it up including mounting lenses and filters, turning everything on etc, prep the shot by composing / exposing / focusing, and only then hitting record.

This all sounds obvious, but (to make my point a bit more obvious), if the camera was 100kg/lb then you wouldn't be mobile enough to get it to the best compositions, if you are tired you wouldn't even see the best compositions, if there's a great composition that is time-sensitive (like, something is moving - animals in a field - people walking etc) but it takes a minute to set up the shot then the moment will be gone, etc. You can only grade the footage you actually record...

-

I understand your logic, but think about it this way.. When will a codec from 2007 stop being the industry standard? It has to some time, right? People aren't going to be using it in the year 2150... Not a lot of technologies from 2007 are still around!

Prores is a great codec and we have championed its use for good reasons, but those reasons aren't what they used to be:

1) It was high-quality, unlike the h26x codecs on budget cameras
2) It was 10+ bit and ALL-I, unlike h26x codecs
3) It was high-quality but had much more modest / manageable file sizes compared to RAW

These are all still true, but the h26x codecs are now (mostly) 10+bit and ALL-I (and we have sufficient computing power to edit with IPB in practical resolutions). Compressed RAW makes swift work of the file sizes too.

-

You'd think it would be, but there are still elements of it that I can't work out. 30p and an overly compressed and sharpened image is a pretty solid start though! The thing I don't like about the digital look is that it looks like real life in the sense that it makes the scene look like people in a room saying things to each other, rather than there being a sense of scale.. that "larger than life" thing people talk about.

-

Thanks for sharing your impressions. Your description is pretty much how I was expecting it to perform. I think, for me, the take-aways are:

1) you own one and have real-world experience in uncontrolled conditions
2) it's not as good as a real camera
3) it's good enough to use in professional gigs, even if only in a limited capacity

-

Yeah, next to the support for a BM "box camera" there's a lot of people wanting a P4K Pro with NDs and a tilting screen etc.

-

Well, this is a bit embarrassing! I did a detailed comparison between them when I was grading, but I must have picked an area that was slightly out of focus or something, because looking at the images I posted they're not even close!! Here are some updated images with a couple of blurs added. Just for fun I added a slightly emphasised halation-like one so I've tipped the balance slightly in the opposite direction now.....

-

The other thing people don't consider is that there are a relatively small number of compositions that are required, and almost all shooting situations have various constraints or factors that influence where you position the camera, so for any given scenario these factors will often result in preferences for specific focal lengths over others. Sure, it depends on your preferences, but there is definitely common ground and preferences. Not a lot of folks would look at hand-held footage shot on a rectilinear ultra-wide (16mm FF equivalent or wider) lens from above head-height with tonnes of micro-jitters and say that's just as cinematic as a locked-off 85mm close-up shot from eye-level. If they did, then everything would be equal, and there would be no language of cinema at all.
