-

Posts

8,046 -


Everything posted by kye

-

It really depends on where you're filming. I shoot exclusively in available light, in realtime, without directing anything that happens. What happens happens, I shoot what I shoot, and I get what I get. This often means I shoot in situations with lighting so terrible it would make you cry.

Have you ever shot something on the wrong WB setting and couldn't recover it in post because it was too badly damaged? I have done tests to see how much latitude there is and it's not much. Have you ever graded something in post where the WB controls in your NLE maxed out and you had to apply colour correction in multiple passes to return a shot to neutral? I have.

Here's a final grade I did of a little film I shot with the GF3 while getting some food one evening:

Those last shots are interesting. Here's one SOOC:

That shot was filmed with AWB. The lighting was so off-colour that it maxed out the camera's AWB adjustments. Had I chosen a fixed WB for this shot the SOOC would have been way worse, and probably unusable. Had I stopped to manually set the WB, it wouldn't have helped - the camera didn't have enough latitude. Notice from the scene that there are several sets of lighting in the communal area, and every truck has its own internal lighting, and some have their own external lighting too. These lights will have been chosen to be the cheapest possible - high CRI LED lighting these are not!

Other shots were completely fine, like this one SOOC:

In practice, AWB will take you towards what the camera thinks is the right WB. It won't get it perfect, but it will take you in that direction. Had I shot this with a fixed WB, I would have been trying to push the GF3's (rather meagre) 17Mbps further in post than I had to.

The sequence is this:

1. Camera reads RAW data off the sensor
2. Camera applies WB (fixed or AWB)
3. Camera applies colour profile, compresses stream and writes video to media
4. You adjust WB in post

The bottleneck is the colour profile of the camera followed by the compression. If fixed WB will get the compressed stream closer to perfect then do that, but I find most of the time that AWB helps me get close in difficult situations, and the rest of the time the footage almost never needs to be touched.
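To put a rough number on why the order in that sequence matters, here is a minimal Python sketch. It is a toy model, not how any real camera works: it assumes a 12-bit linear sensor signal, an 8-bit output, a single WB gain on one channel, and it ignores the colour profile and the codec entirely. The function names and the 4x gain are made up for illustration. It just counts how many distinct 8-bit levels survive when the gain is applied before the signal is reduced to 8 bits, versus afterwards in post.

```python
def quantize(value, levels=256):
    """Round a 0.0-1.0 signal to one of `levels` integer codes, clipping out-of-range input."""
    clipped = min(max(value, 0.0), 1.0)
    return round(clipped * (levels - 1))


def levels_with_wb_in_camera(samples, gain):
    """Gain is applied to the high-precision signal, then the result is quantized to 8 bits."""
    return len({quantize(s * gain) for s in samples})


def levels_with_wb_in_post(samples, gain):
    """Signal is quantized to 8 bits with the cast baked in, then gain is applied to those codes."""
    return len({quantize(quantize(s) / 255 * gain) for s in samples})


# Hypothetical channel under badly off-colour light: it only reaches 25% of full scale,
# so neutralising it needs a 4x gain (both numbers are assumptions for illustration).
samples = [i / 4095 * 0.25 for i in range(4096)]  # 12-bit ramp, scaled down by the cast
gain = 4.0

in_camera = levels_with_wb_in_camera(samples, gain)
in_post = levels_with_wb_in_post(samples, gain)
print(f"distinct 8-bit levels, WB in camera: {in_camera}")
print(f"distinct 8-bit levels, WB in post:   {in_post}")
```

In this toy model the in-post correction ends up with only about a quarter of the tonal steps, and that is before a low-bitrate codec has had its turn. Real footage will behave differently in the details, but the direction is the same: the closer the camera gets before encoding, the less you have to stretch afterwards.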

-

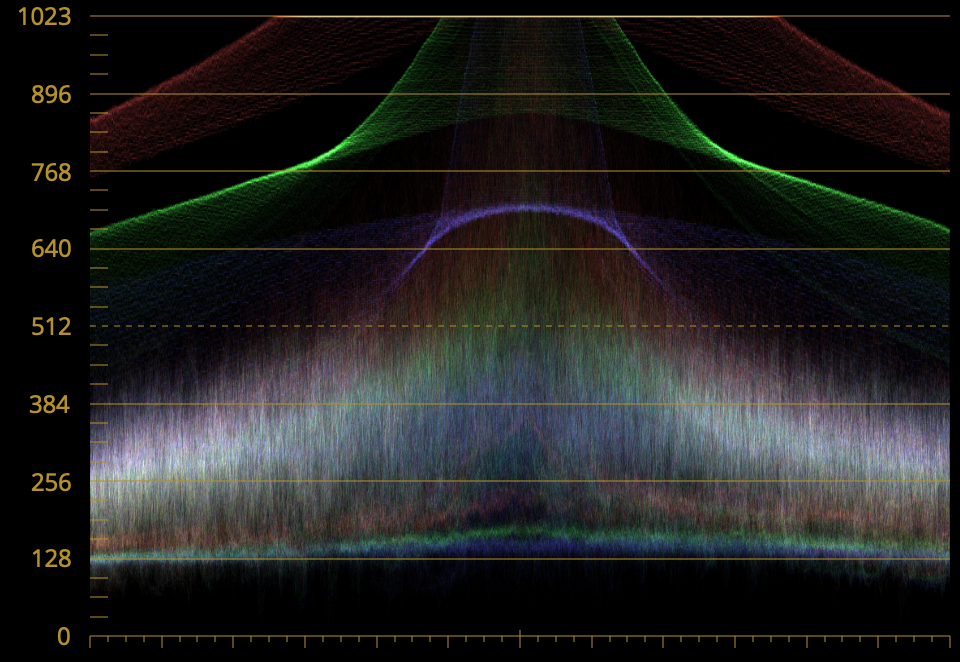

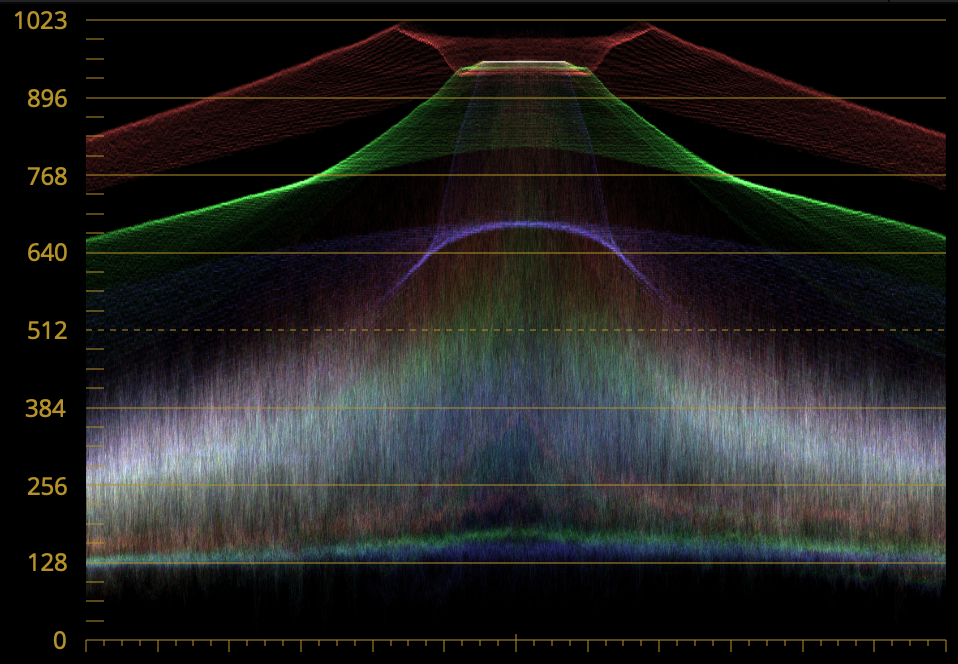

Oh, and to clarify my earlier poor wording, the waveform where everything is clipping (below) is SOOC, and doesn't have the curve applied. The bottom waveform with the red showing is the one with the curve applied.

-

Oh yeah, and I always have AWB on. My rationale is that I'm always wandering between different lighting conditions and cameras now are pretty smart about things, so regardless of if I was doing WB manually or AWB I'd have to adjust it in post anyway, so I may as well just use AWB because it's more likely to get it in the ballpark, which helps when not shooting RAW to get it as close as you can in-camera. Having said that though, I don't recall having to adjust this camera yet, so it seems good.

-

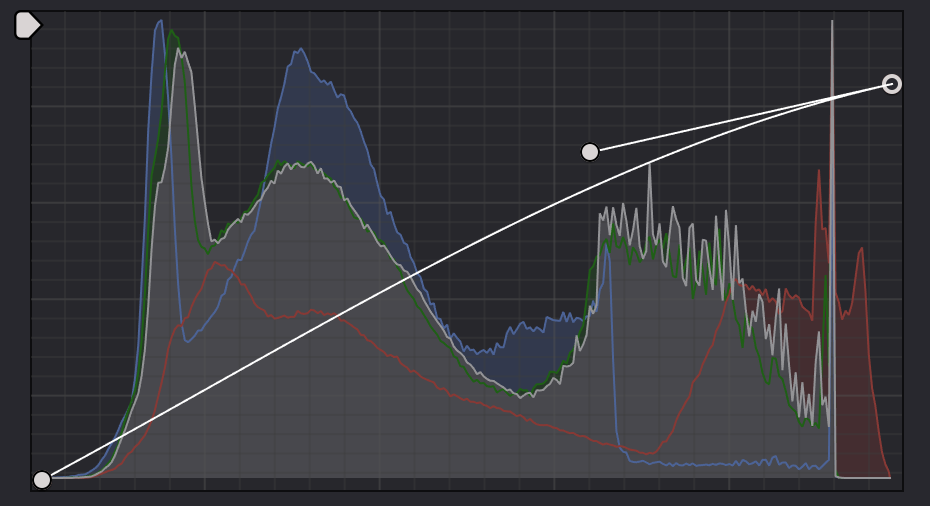

Those shots were Natural profile, unmodified, and with a curve to bring the highlights down and have a little highlight rolloff, and the saturation boosted to about 107%. Here's the curve:

The challenge is that when you have a curve to clipping, your waveform looks like this:

Which means that for that whole area where the red is clipping the colours shift to yellow, like this:

BUT, somehow the red channel has extra info that is recoverable (IIRC this isn't the first time I've seen colour information above 100%) so if you pull it down a little then you get this:

and this waveform:

There are still some rather odd things happening to those red levels in the parts we recovered, but they do give an interesting hue to the areas just before clipping. Not sure if that helps?

I've found it relatively easy to get great sunset shots with almost any camera, so either it's the colour rendition of the cameras I happen to have chosen (possible - Panasonic is no slouch) or it could be the lighting here.

In other news, I have an old Yashica Auto Yashicor 28mm f2.8 m42 lens that I bought super cheaply as it had damaged front coatings, and I've been searching for a vintage walk-around lens for the GX85. I had put it in the discard box due to its terrible IQ wide open, but it turns out that stopping it down a stop (or half a stop) cleans up the image almost completely, so I took it for a test drive and I think I might be sold on it!

GX85 + Yashica 28/2.8 + SB + Natural profile SOOC ungraded.

Once again, filming in golden hour is probably making this lens look way better than it should look. I have plans to start exploring the colour profiles and colour performance of the GX85 soon, so will report back on what I find.

-

They call them the Trinity of zoom lenses for a reason!

One thing worth considering with the Sony cameras is the Clear Image Zoom, which is very high quality. From what I saw (watching through the 4K YouTube compression of course), it was invisible up to about 1.4x magnification. I also recall from the A73 that you could punch in to a 1:1 (?) 4K mode, which also gave a 1.4x magnification. Combined they give a 2x factor, which you may find high quality enough to use to extend the long end of your 24-70.

It's a tough thing, moving from a 16-35 + 24-70 to a 16-35 + 35-150. If it were me, I'd be thinking about:

- How many of your shots are wider than 35mm
- How many times those shots occurred after a shot longer than 35mm
- How many times that transition had to be done in a hurry, or what the time cost would be if you had to change lenses constantly
- How many times you could use the 1.4x crop / Clear Image Zoom on the 16-35mm to get a longer shot (and therefore avoid changing from the 16-35 to the 35-150)
- How many times you could use the 1.4x crop / Clear Image Zoom on the 24-70mm to get a shot longer than 70mm
- What the optical performance of your lenses is when wide open (as a 1.4x or 2x crop into their image circle will reveal softness or other issues)

My travel kit is 7.5/2 + 17.5/0.95 + 42.5/0.95 (FF equivalents of 15/4 + 35/1.9 + 85/1.9). I originally had the 17.5 and was using a 58mm as the longer lens but I found the gap from 17.5 -> 58 to be too large (3.3x) so I bought the 42.5mm (2.4x). The 17.5mm (35mm) stays on the camera most of the time so that's the default lens.

However, I bought that kit while I was filming in the GH5 4K mode, where I could get a 1.4x zoom by punching into the sensor. I have since switched to 1080p to get ALL-I and therefore eliminate the need for proxies in my workflow, and in that mode I can engage the 2x digital zoom, which creates a 1080p image by downsampling a ~2.5K area of the sensor, and is higher quality than the 1:1 mode.

This means that instead of the 35mm cropping to a 49mm equivalent, with a large gap between that and the longer prime (which was 116mm equivalent), the 35mm now crops to a 70mm, which is a lot closer to that 116mm equivalent. So I now have a 35mm (70mm) then an 85, which you could argue isn't so useful because they're so close together. Had I known this before I bought the 85 equivalent, I might have gone in a different direction.

I guess I say all this to suggest that the crop/zoom functions can have a real impact on your lens requirements and should be factored in before you spend real money on glass.
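The arithmetic behind those numbers is simple enough to put in a few lines of Python. This is only a sketch: the function names are made up, and it assumes the usual 2.0x Micro Four Thirds crop factor and treats the punch-in and digital zoom as plain 1.4x and 2x multipliers.

```python
MFT_CROP = 2.0  # assumed Micro Four Thirds to full-frame crop factor


def ff_equivalent(focal_mm, extra_crop=1.0, sensor_crop=MFT_CROP):
    """Full-frame equivalent focal length after the sensor crop and any in-camera crop/zoom."""
    return focal_mm * sensor_crop * extra_crop


def gap(shorter_mm, longer_mm):
    """Ratio between two focal lengths, i.e. how big the jump between them is."""
    return longer_mm / shorter_mm


default_lens = 17.5
for label, crop in [("no crop", 1.0), ("1.4x punch-in", 1.4), ("2x digital zoom", 2.0)]:
    longest = ff_equivalent(default_lens, crop)
    print(f"17.5mm, {label}: {longest:.0f}mm equivalent")
    for prime in (42.5, 58.0):
        prime_eq = ff_equivalent(prime)
        print(f"  jump to the {prime}mm ({prime_eq:.0f}mm eq.): {gap(longest, prime_eq):.2f}x")
```

Running it for your own lenses and crop modes before buying shows quickly whether a new focal length actually fills a gap or just lands on top of one you can already reach with a crop.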

-

Super tiny Sigma 28-70mm F2.8 for mirrorless camera (E / L Mount)

kye replied to Andrew - EOSHD's topic in Cameras

That's a nasty cough you've got there... I'd suggest working out the cause of it and eliminating it from your lifestyle 🙂

-

Oh yeah.. I guess I was so amused by the USD$6,000 R3 that while not rendering me completely speechless, it did befuddle me significantly! Getting smacked in the face with the Canon Cripple Hammer is likely to leave you significantly dazed....

-

How Jordan of DPReview showcases flexibility of RAW video (lazily)

kye replied to The Dancing Babamef's topic in Cameras

Now now, he's a camera reviewer who reviews cameras for making camera reviews, you have to keep a hold of your expectations! These are the same people that keep making endless "how to get cinematic images" videos but for some unfathomable reason keep talking about new cameras and higher resolutions when the people who actually make cinema have basically been using the same cameras for a decade.

-

The more I use the GX85, the more I like it. I've taken it out with the 12-35/2.8 a couple of times now, including a street festival on the weekend where I got a bunch of shots of a friend's kids running around non-stop, and the camera and lens kept up, even at F2.8 the whole time.

Some beach visit sample images from the 12-35mm F2.8 (probably wide open)..

and the festival outing, also 12-35mm f2.8 wide open, but this includes some shots using the 2X zoom mode too..

and a couple from an outing with the Cosmicar 12.5mm F1.9 wide open (and cropping into the image)...

The 12-35mm images are almost SOOC using the Natural profile (I hadn't put Cine-D on yet), just with some slight tweaks in post, but the Cosmicar ones above have been graded under a Kodak 250D / 2393 emulation process, which did surprisingly little to the colour actually.

The more I use the 12-35mm the more I appreciate a zoom lens for just getting shot after shot in fast situations, which is pretty much the key to having lots of options in the edit and keeping an edit fresh with lots of different types and compositions of shots. I'd really like a longer lens as I got quite a few shots at 35mm with the 2X engaged, so I'm contemplating the 12-60mm F2.8-4 which seems to be a good match from the reviews I've seen. Curious to hear others' thoughts on this.

I found the Cosmicar a little challenging to work with as I composed for the screen and was then surprised in post with how much crop I needed to apply, so had a few shots where I was chopping people's heads off etc. I've ordered a cheap 28mm F2.8 m42 lens to go with my m42 speed booster, which will give a 43mm FOV, which is a little tighter than the 12.5mm Cosmicar which gives about a 35mm FOV once you crop into the image circle. I'll have to see how I go with it. I'll need a 22mm lens on the SB to give a 35mm FOV and those aren't super common in m42 mount! The alternative is my 17.5mm Voigtlander which is 38mm on the GX85 but isn't exactly vintage, so I'd have to look more seriously into filters to get similar flare characteristics (which is the part I love about vintage lenses).

The stabilisation is really good, and in combination with the 12-35 is just spectacular, and my regular camera is a GH5 so I'm guessing I'm not easily impressed. It really makes me feel like shooting. Great stuff!
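For anyone wanting to check the speed booster numbers, here is a small Python sketch. Two figures in it are assumptions that the post does not state: a 0.71x focal reducer, and an effective crop of roughly 2.2x for the GX85 in its 4K mode. They are picked only because together they roughly reproduce the 43mm, 38mm and 22mm figures above, so swap in the real values for your own adapter and camera mode.

```python
ASSUMED_REDUCER = 0.71   # assumed speed booster magnification (not stated in the post)
ASSUMED_4K_CROP = 2.2    # assumed effective GX85 crop in 4K relative to full frame


def equivalent_fov_mm(lens_mm, reducer=1.0, crop=ASSUMED_4K_CROP):
    """Full-frame equivalent focal length of a lens, optionally behind a focal reducer."""
    return lens_mm * reducer * crop


def lens_needed_mm(target_fov_mm, reducer=1.0, crop=ASSUMED_4K_CROP):
    """Focal length required to hit a target full-frame equivalent field of view."""
    return target_fov_mm / (reducer * crop)


print(f"28mm m42 on the SB:   {equivalent_fov_mm(28, ASSUMED_REDUCER):.1f}mm equivalent")
print(f"17.5mm native lens:   {equivalent_fov_mm(17.5):.1f}mm equivalent")
print(f"lens for 35mm on SB:  {lens_needed_mm(35, ASSUMED_REDUCER):.1f}mm")
```

The second function is the useful one when shopping: decide the field of view first, then work backwards to the focal length to search for.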

-

Looks great, but looks just like Magic Lantern on the 5D, only with a higher resolution / less cinematic image. You could downscale, but it's a small bump for a large price increase. IIRC my new GX85 gives you zebras, focus peaking and waveform at the same time. For $600, which includes two lenses. And it's not a dedicated video camera either. LOL. Canon.

-

Imagine a telecommunications company that had an internet department and a telephone department. They decided that the internet department should be segmented from the telephone department so they release modems for computers only and refuse to do wifi because then it could be accessed with smartphones, and they refuse to release smartphones because they believe that telephones should be used for voice calling and SMS messages only. How do you think they'd go? World leader in telecommunications? Would this segmentation bring them great fortune? Yeah.

-

Business models that can't keep up with reality inevitably die. The archives contain millions of bankruptcy filings from businesses that would have been successful if the world were different. In today's world, convergence will kill any business that tries to maintain segmentation. This is basic economics. Celebrating such things is little more than writing poetry about decay and death.

-

That would be the best option. Just like allowing people to choose between shutter speed and shutter angle.

-

So DJI really IS leading the cinema camera market! Nice 🙂

-

Yes, they're both valid, although I'd argue that an analytical approach is likely to get a better result, faster. There are hundreds of variations of each focal length, maybe thousands for common ones, so the likelihood that you would stumble onto the best one for your preferences, or even one in the top 5, just by randomly looking at Flickr or whatever is pretty small. If, on the other hand, you worked out that you like a certain quality of bokeh, then came to understand that the bokeh is a function of the optical recipe, then understood the history and usage of that recipe, you're likely to be led relatively quickly to potentially unknown resources where lists of lenses you've never heard of that have this trait are catalogued.

This might seem like a hugely specific thing that no-one actually does, but optical recipe is hugely common and is referred to almost as a matter of course in lens reviews - this is when reviews say a lens has "11 lens elements in 9 groups", and maybe mention how many are aspherical. I'm not sure if you are aware of this, but I know that I wasn't until recently. Since I worked it out, I realise that this is commonly included in lens reviews and specs, and there are many common lens formulas that get talked about regularly too, like Petzval, Tessar, Distagon, etc. There are equivalents for other lens design aspects as well.

It just depends on what your goals are. If your goal is to look at lots of pictures and maybe get the best lens from a random selection of images then go right ahead, but if your goal is to get the best lens for your application and tastes then a more methodical approach is going to be more effective and hugely faster.

-

So there you go. GH5 not succumbing to the lowered standards that Sony only stoops to on their consumer cameras and not on their flagship cinema camera, and which ARRI etc do not consider at all!

-

An addendum to the above. Think about the crap that people dump onto kit lenses and the mythical attributes they give to other lenses like the Helios and the 12-35/2.8 etc, and then compare that with the images I posted at the start of this thread. If all you are doing is "browsing for pre-packaged solutions" then the conventional wisdom is radically misleading. This "browsing" is the path to overpaying for what is trendy, not developing your own style, and likely using the wrong tool for the job and not even knowing that it could be different.

-

Actually, there is such a thing as a mathematically perfect lens, and any deviation from that is theoretically defective, and therefore all character is made from aberrations. This isn't a criticism, and it's not pedantic either - I say this because it's helpful.

Once you realise that all character in a lens is deviation from perfection then it frames all of these into the same category. Everyone agrees that some aberrations are good and others are definitely not, so then it just becomes a matter of taste. This gets us away from the idea that there are two categories called "character" and <insert bad word here> and then arguing over what things fall into which categories.

It also helps in understanding what character is. If you understand that it's made from particular elements that are measurable then you can start to narrow down what they are, understand where those might come from, and understand how one can select for them, potentially while removing the aberrations that are not to your tastes.

I've gone a decent distance down the lens rabbit hole and have a passing understanding of some of the aberrations that I like, but it's ridiculously deep. Here are a couple of videos from a pair who are trying to write a book about the subject, which apparently is still years away and current drafts are over 1000 pages!

This is part of a larger theme around the creative elements of film, and one where Steve Yedlin put it really well when he said:

Source: http://www.yedlin.net/OnColorScience/

He was talking about colour science and not about lenses specifically, but the principle holds. Far too many of us are just choosing from pre-packaged solutions and not understanding the smaller attributes.

Right at the opening of the first lens video Christopher Probst says "yeah I've got to be careful what I talk about because I'm still buying lenses [from ebay] too". So, what does this tell you? The guy who is literally writing the book on lenses knows enough to be buying lenses from eBay that he thinks are undervalued and doesn't want to give the game away about them until he's bought the hidden gems he's found...

The big take-away for me from those two videos was that the optical recipe was a thing that drives a lot of my interest, along with diffusion characteristics of the various coatings. These are particularly useful things to me because:

- Knowing I prefer older and simpler optical recipes means that instead of trying to buy CZ primes (which are very costly) I can buy modern copies from Chinese factories that are made very well but are much more reasonably priced
- Knowing I prefer certain levels of diffusion from the lens coatings (and a certain amount of flaring) means I can investigate filters that emulate these characteristics
- Knowing how to read the optical properties of lenses through MTF charts, and also knowing that perfect isn't actually desirable, I can draw parallels between Zeiss Super Speeds and Cooke Panchros (lenses that no-one here can afford) and the Helios 44 lens that everyone here can afford, and then understand the parallels between those and the modern lenses available from China that I previously mentioned

Knowing these things is actually empowering in a very real and practical way. Taking a one-dimensional view of lenses and just separating them into "Good Character" and "Bad" is neither empowering, nor helpful.

You said you like to have the lens be more like a partner instead of a tool; this is a way to understand what kind of partner you'd like. If I said that character in people was definable, you might balk and say it wasn't possible. If I insisted it was then you might say you didn't want to know, but you actually do. Imagine the character of two potential business partners below, and then tell me you'd prefer not to know about these and would rather choose at random:

- Partner A character traits: deceitful, grandiose, manipulative, emotionally unstable, volatile
- Partner B character traits: indecisive, ambitious, natural salesman, excitable, sentimental

Here's the funny thing, those two people could easily present as initially quite similar. They talk big, their story changes a bit over time, they're dynamic, etc. Knowing the traits and being able to read them gives insight. It doesn't take away the mystery though. Knowing everything isn't the only alternative to knowing nothing.

-

ISO isn't universal anyway - ISO 100 can give quite different levels of exposure on different cameras. IIRC Tony Northrup did a test and the different cameras were different to each other, some by quite a lot. Besides, what matters is the native ISO, not the absolute number. ISO 100 on one camera might look great if it's the native ISO of that camera, but might look awful on another where the native ISO for that camera is 800. And shutter speed is a simple primary school fraction isn't it? And how often do you change it? Couldn't you just calculate it and write it down? I'm not really sure where the problems are here, but maybe that's just me. Carry on.
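For what it's worth, the "primary school fraction" really is a one-liner. Here is a minimal Python sketch of the standard shutter angle relationship (exposure time = angle / 360 / frame rate); the function names are made up, and it sticks to whole-number frame rates to keep the fractions tidy.

```python
from fractions import Fraction


def shutter_speed(fps, angle_deg=180):
    """Exposure time in seconds for a given frame rate and shutter angle."""
    return Fraction(angle_deg, 360) / Fraction(fps)


def shutter_angle(fps, exposure_s):
    """Shutter angle in degrees for a given frame rate and exposure time."""
    return float(Fraction(exposure_s) * fps * 360)


for fps in (24, 25, 30, 60):
    speed = shutter_speed(fps)
    print(f"{fps} fps at 180 degrees -> {speed.numerator}/{speed.denominator} s")

# Going the other way: what angle is a 1/50 s shutter at 24 fps?
print(f"1/50 s at 24 fps -> {shutter_angle(24, Fraction(1, 50)):.1f} degrees")
```

Since most people only ever use a handful of frame rates, the whole table fits on a sticky note on the back of the camera.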

-

I think you've perhaps missed the original point of the thread - how do these things compare in practice. I've delved deep enough into the theory of resolution downsampling in post and the more you look into it the more variables there are and the more that you realise the results cannot be predicted. @Ty Harper is smart enough to not ask for theory-land responses, because (having read their other posts and seen the quality of their work) they have enough experience to understand that theory and practice are two very different things.

-

Are you suggesting that cinematographers won't be able to use the camera because of how a control is labelled? And one that is normally not changed much, at that? You don't seem to have much faith in their intelligence!

-

And get PDAF fixed-pattern interference in the image? I don't think so! It's like you didn't even read the preceding comments?

-

Yet another reason the GH5 is still a leader....

-

While I'm no fan of the Sigma 18-35, it can be (with careful consideration) the basis for a nice look. I'd suggest:

- Diffusion filters (as others have suggested) but be aware there are lots of them and all have different looks so that's worth doing some research into - most brands will provide comparisons of their various products so you can narrow down and then look at real-world reviews
- Some of the characteristics of vintage lenses can be added in post, including:
  - Softening of the edges (use a mask like a vignette but use a blur effect - experiment with different types of blurs here too - radial vs pinch(?) vs "lens" vs directional blurs etc)
  - Chromatic aberrations can be added, either to the whole frame or to the edges using a mask
  - Vintage lenses often vignette
  - Vintage lenses often have barrel distortion - where objects on the edges get curved like a slight fish-eye effect - this can often be introduced with a "lens correction" slider, which is a common control
- You can even put an oval shape over the front of the lens which will make your bokeh oval-shaped (at least when you have the lens wide open) but bear in mind it will lower the light coming through the lens and deepen DoF slightly

The BM S16 cameras tend to be on the softer side due to 1080p sensors and lack of sharpening, so having a tack-sharp lens isn't a bad thing per se, it's just on the bland side.

It used to make me mad too, but now I realise that it's all a scam. They say the biggest lies are the most successful and I think it's true here too. Have a look at this:

and I don't mean "see how large the budget is" - I mean go shot by shot, pause each one, and actually look at the image. If I can take a small liberty for educational purposes, have a look at the frame at 14s, it includes the main star of the whole movie looking like this:

and then imagine what DXOMark would say if they tested this lens:

This single frame reveals the truth about the cinematic look:

- The video is uploaded in 2K - because the film is projected in 2K in cinemas
- The frame has edges that are blurry, even in 2K (see above snippets)
- The frame has significant pincushion distortion (look at the "straight" lines on the edges of frame)
- It has quite deep DoF
- It has spectacular colour

Checkmate YouTubers! Of course, I say that sarcastically because the reality is that they checkmated all of us years ago when they started talking about sharp lenses and 4K and we didn't just laugh, skip the video and watch something remotely sensible instead. I mean, that single frame is so optically poor from a technical perspective that most forum nerds would recommend throwing that lens away, and yet it was used in a key scene in a $250M movie that has grossed $700M+ worldwide. Turns out we didn't need MORE pixels, we needed BETTER pixels. The proof was in the movie theatre the whole time and we never called their bluff. Or even went to a movie theatre in the last 30 years.....

Why? The DoF is a function of focal length, so if I take my 12-35/2.8 lens, set it to 12mm f2.8 and focus on an object it will have a different DoF than if I set it to 35mm f2.8 and focus on the same object from the same camera position. DoF is variable, even with constant aperture zoom lenses. In fact, it's probably less variable on a variable aperture zoom.

You said you don't really zoom while filming, so constant exposure isn't really an issue from that point of view. If you've zoomed and want to match exposure then just adjust your ND - you've adjusted your composition already so a twist of an ND isn't a big deal. If you're shooting with variable apertures and matching exposure with ISO and wanting to match the noise profile then just get familiar with NR - most cinema cameras have noise (even at their native ISOs) that would make any videographer CRY. Professional colourists know about NR and how to use it, and it will be used on almost every professional movie or TV show you have ever seen. Videographers complain about noise in their images and it just shows they literally don't know the first thing about high-end productions (and I mean first thing, because NR is usually the first node of a professional colourist's workflow).

Not sure what specific post you're referring to, but most kit lenses are ~24-70, most secondary zooms are 70-200, and most systems have a 16-35 equivalent. The numbers all look different between brands and mounts etc, but they mostly boil down to those ranges.

I'd say from just that statement alone you're doing far better than most forum peeps, because:

- You seem to know what kind of shooter you are
- You seem to actually shoot (armchair critics aren't a fan of the 12-32mm lens)
- You seem to understand how the equipment you have relates to what your requirements are ("nice and portable" isn't a thing armchair critics who don't shoot say)

Sadly, this makes you far ahead of the curve. Most camera discussions on the internet are about specs, with people recommending you change what and how you shoot in order to match the latest equipment rather than the other way around, and TBH mostly it's just the blind leading the stupid, or these days the shills leading the gullible to part with their money!
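On the DoF point above (12mm f2.8 vs 35mm f2.8 from the same camera position), here is a rough Python sketch using the standard thin-lens hyperfocal approximation. The 0.015mm circle of confusion is an assumed value often quoted for Micro Four Thirds, the 2m subject distance is arbitrary, and the function name is made up, so treat the output as ballpark only.

```python
import math


def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.015):
    """Return (near, far) limits of acceptable focus in mm, via the hyperfocal approximation."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return near, math.inf  # everything out to infinity is acceptably sharp
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


subject_mm = 2000  # same subject, same camera position, 2m away (arbitrary choice)
for focal in (12, 35):
    near, far = depth_of_field(focal, 2.8, subject_mm)
    print(f"{focal}mm f/2.8: sharp from {near / 1000:.2f}m to {far / 1000:.2f}m "
          f"(total {(far - near) / 1000:.2f}m)")
```

With these assumptions the wide end holds several metres in focus while the long end holds only a fraction of a metre, at the same f-number, which is the point being made: a constant aperture gives you constant exposure, not constant DoF.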