All Activity

- Today

-

Is anyone using Parsec with BetterDisplay for edits?

John Matthews replied to John Matthews's topic in Cameras

With Parsec, the key is efficient H.265 (HEVC) encoding and decoding. On my 2020 Intel MacBook Air, I’m seeing decode times around 9ms at its native resolution. On the other end, the M2 Mac Mini encodes the HEVC stream in about 8.5ms. Over Wi-Fi (on the Air), network latency hovers around 4ms. Altogether, that gives me a total latency just under 25ms, well below the 42ms frame time of 24fps video. In practice, it’s totally usable and, to my eyes, indistinguishable from working directly on an M2 MacBook Air.

Most Intel Macs from 2017 onward support QuickSync, which provides solid hardware-accelerated decoding. The real game-changer, though, is the vastly improved encoding performance on Apple Silicon, which makes setups like this feel snappy and responsive. When using Ethernet, even at modest speeds, latency drops further (often below 1ms). Parsec can stream H.265 video with variable bitrates up to 50 Mbps, which is trivial for gigabit Ethernet. It also supports 10-bit 4:2:2 streams, though honestly, I can’t see a difference with my aging eyes, especially when using high-DPI iMac displays.

For me, Parsec solves three major problems:

- Reviving modest hardware, especially for tasks like video editing.
- Enabling near-instant resource sharing, with Thunderbolt providing a pseudo-network connection up to 40Gbps between Mac Minis.
- Letting me work remotely, from the kitchen table, for instance, while the main setup runs in the basement.

When you pair Parsec with BetterDisplay, the experience is nearly identical to working on the host machine, if not better. In my case, the iMac’s high-DPI Retina display actually gives me a superior viewing experience compared to using the M2 Mac Mini with a standard external monitor. Unless you're using an Apple Studio or Cinema Display, it’s hard to beat the visual quality of 4K, 4.5K, or 5K iMacs.

Interestingly, the 2017 iMac Pro isn’t the best choice as a Parsec thin client. Its Xeon processor lacks QuickSync, which hurts performance. If you're going this route, a standard 5K iMac (2017 or later) is a much better option.
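For anyone who wants to sanity-check their own setup against the numbers in this post, here is a minimal sketch of the latency budget. The three stage timings and the 50 Mbps / 1 Gbps figures are the ones quoted above; the function names are made up for illustration, and the sum deliberately ignores capture and display time, which presumably accounts for the gap between the 21.5ms computed here and the "just under 25ms" estimate.

```python
# Stage timings quoted in the post above, in milliseconds.
ENCODE_MS = 8.5    # M2 Mac Mini, HEVC encode
NETWORK_MS = 4.0   # Wi-Fi hop on the MacBook Air
DECODE_MS = 9.0    # 2020 Intel MacBook Air, HEVC decode


def frame_time_ms(fps: float) -> float:
    """Duration of a single frame at the given frame rate, in milliseconds."""
    return 1000.0 / fps


def total_latency_ms(encode_ms: float, network_ms: float, decode_ms: float) -> float:
    """Sum of the three measured stages (capture and display time not included)."""
    return encode_ms + network_ms + decode_ms


def link_utilisation(stream_mbps: float, link_mbps: float) -> float:
    """Fraction of the network link consumed by the video stream."""
    return stream_mbps / link_mbps


if __name__ == "__main__":
    total = total_latency_ms(ENCODE_MS, NETWORK_MS, DECODE_MS)
    budget = frame_time_ms(24)
    print(f"measured stages: {total:.1f} ms")
    print(f"24fps frame time: {budget:.1f} ms")
    print(f"headroom: {budget - total:.1f} ms")
    print(f"50 Mbps on gigabit: {link_utilisation(50, 1000):.0%} of the link")
```

Swapping in your own encode, network, and decode readings from the Parsec overlay gives a quick idea of whether the total stays inside one frame at your timeline's frame rate.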

John Matthews reacted to a post in a topic:

Is anyone using Parsec with BetterDisplay for edits?

-

Is anyone using Parsec with BetterDisplay for edits?

eatstoomuchjam replied to John Matthews's topic in Cameras

You might be talking me into reinstalling Parsec and trying this out, but for different reasons. Usually I edit on my MacBook (the lower-end M2 Max) and it's totally fine, but when I'm at home, I prefer to edit off my basement NAS instead of from external drives, and I use a Postgres database on a server next to it. But if I don't plug into the network (which means sitting at my desk, where the dock with 10GbE is), editing video is not great (and editing photos in Lightroom ain't great either). But I have a Windows gaming PC also set up near the desk, which has a pretty decent setup with a nice GPU. I suppose I could just Parsec to that while on the couch or in bed and do my editing, with the main catch being that I need to use Windows shortcut keys. That, or I wait until there's a decent deal on an M4 Mini with 10GbE (there have been some solid deals, but they're always for the base model with only 1GbE).

eatstoomuchjam reacted to a post in a topic:

Is anyone using Parsec with BetterDisplay for edits?

-

eatstoomuchjam reacted to a post in a topic:

Is anyone using Parsec with BetterDisplay for edits?

-

John Matthews reacted to a post in a topic:

Is anyone using Parsec with BetterDisplay for edits?

-

Is anyone using Parsec with BetterDisplay for edits?

John Matthews replied to John Matthews's topic in Cameras

I've been using the free version only. However, I did pay for BetterDisplay ($20). For me, it's worth it, and they did a hell of a job that needs to be rewarded. I'll definitely have a look at this too. Thanks for that.

Is anyone using Parsec with BetterDisplay for edits?

Anaconda_ replied to John Matthews's topic in Cameras

I often use Splashtop Remote Desktop when editing remotely, and it's always been very reliable. My main use case is making an edit on a Windows machine hooked up to a bunch of media servers in one city while I'm in another city on a MacBook. It handles the keyboard mapping and display scaling extremely well. It also has support for multiple monitors, so I can plug the MacBook into 2 external displays and have all 3 screens mirror the 3 Windows screens, or assign them to Spaces if I'm using the MacBook without the externals. Another advantage, in my case, is that it has the option to blank the screens on the remote computer, and it runs through a secure network, so it feels very safe if you need that. I'm curious about Parsec, but it seems to be a similar price for a similar feature set. Since Splashtop is doing the job just fine, I'm debating if it's worth the time to research and switch over. For colour depth, both say they're 4:4:4, so I don't think that's much of an issue.

Is anyone using Parsec with BetterDisplay for edits?

John Matthews replied to John Matthews's topic in Cameras

If macOS ever supported multiple simultaneous users, I’d happily run just one Mac Mini and use 3–4 iMacs around the house as thin clients for the whole family. I’m confident the performance would be more than sufficient, as long as no one is editing video at the same time! Unfortunately, that’s not the case. But there’s nothing stopping me from setting up several iMacs as thin clients, each connected to its own Mac Mini. By placing the Mac Minis next to each other and linking them via Thunderbolt, I could enable ultra-fast access to shared resources, effectively building a small, high-speed local computing cluster with shared storage and plenty of power.

Is anyone using Parsec with BetterDisplay for edits?

John Matthews replied to John Matthews's topic in Cameras

I’m not 100% focused on color accuracy here (I rely on scopes for that), since calibrating two machines in different environments is never going to yield perfect results. My main reason for using BetterDisplay is to match the exact 4096x2304 resolution of my 2019 4K iMac. Retina displays don’t play nicely with standard external monitors, like the one I currently have connected to the M2 Mac Mini. I’ve heard some users connect “dummy” displays to the encoding machine to force the proper resolution, but this setup with Parsec achieves the same effect. If I send a standard 4K signal to the iMac, it doesn’t look as clean: the image is upscaled and noticeably less sharp.

With this setup, I just launch Parsec and connect to the M2 Mac Mini. What I see on the iMac looks almost indistinguishable from running natively on the 2019 i3 iMac with 8GB of RAM, except, of course, I get the performance of the M2 Mac Mini. In fact, I often forget I’m even using Parsec. I’ve accidentally shut down the Mac Mini thinking I was turning off the iMac!

And the biggest surprise? I’m getting Thunderbolt 4-level performance on my media drives, despite using only a 1Gbps Ethernet connection. As long as latency stays below a certain threshold, the experience is virtually seamless. The iMac just becomes a glorified thin client, which is exactly what I want.

John Matthews reacted to a post in a topic:

Is anyone using Parsec with BetterDisplay for edits?

-

Is anyone using Parsec with BetterDisplay for edits?

eatstoomuchjam replied to John Matthews's topic in Cameras

I never tried editing with Parsec, but it's a good idea! How does it handle color management? Or is that done by BetterDisplay?

eatstoomuchjam reacted to a post in a topic:

Is anyone using Parsec with BetterDisplay for edits?

-

I'm putting this question out there to see if anyone else is doing something similar. I've been running Parsec over a modest 1Gbps home network, paired with BetterDisplay to ensure proper 4K+ 60fps signals are sent to my iMacs, while editing 6K video on very modest hardware.

For those unfamiliar, Parsec is low-latency remote desktop software. With BetterDisplay, I can match the resolution to the native Retina display of my iMac, allowing me to work from two different locations in my home without needing multiple high-end machines or a 10Gbps network.

The end result? It feels like I'm using an Apple M2 Mac Mini directly on a 2019 4K iMac. Honestly, I can barely tell the difference. I’d estimate the latency is under 25ms with this setup.

Now I’m curious: has anyone tried this off-site? I imagine cross-continent setups would suffer from latency, but within the same continent, it seems like remote editing could be entirely feasible. It sure beats lugging around terabytes of footage.

For me, this setup has breathed new life into an older machine. I’m even considering picking up another iMac, maybe a 5K model from 2017 or later. With H.265 decoding not being too demanding and the M2 or M4 Mac Mini handling encoding with ease, it seems like a highly effective and budget-friendly workflow.

-

EduPortas reacted to a post in a topic:

The Aesthetic (part 2)

-

Well, that's something. It's clear that key and fill lights are the main differences between all the high-quality movies you mentioned and your samples. Hours upon hours go into the cinema aesthetic, so it will be very hard to replicate via YT without the proper means. Add some very good actors and the bar stands incredibly high. Ever shot with older tape-based digital cams? Maybe you'll get some better results with a different medium altogether. Newer sensors are just too clean.

-

I've had an initial look at the test I shot that compared shutter speeds, including longer exposure times than 180-degrees as you suggest, but didn't really notice any improvement there. Maybe there is and I'm just not sensitive to it, but it's not what I'm sensing. I absolutely agree that human movement is better, but I don't have any volunteers handy that don't mind being published on the open internet, so unfortunately that isn't something I can easily do. I edited up my other test of the four-camera setup and that didn't yield any joy either. That test included lots of camera movement as well as subject movement and so I probably would have noticed if there were significant differences, so not noticing any is probably a good thing because it means that the camera isn't the answer. I was beginning to wonder if I wasn't viewing things objectively, but then I randomly fired up The Bourne Supremacy and it auto-resumed to some random timestamp and within seconds delivered these two frames, which have it in spades. I'm now convinced I'm not chasing a ghost, so that's positive. I think I've been struggling because I've been conflating the look I've been chasing with the fact that I have mostly witnessed it on movies shot on film, so I've perhaps paid too much attention to sharpness and grain and not enough on other aspects of the image. The fact that I haven't seen anything above about 2K projected until very very recently probably also plays into it. Recently I've sourced some higher quality reference materials and have found examples that contain the look but are also higher resolution. These are all 4K so be sure to open the full resolution file, not just viewing the highly-compressed preview image the forum shows. I will be studying these as my next area of focus. At the very least, I'm eliminating things that it isn't, and that's progress of a sort. My anamorphic adapter has finally left China so I think that'll be the next round of tests. 
Now that I've eliminated the camera body as the source of the look, I can venture out and hopefully capture some footage with people in it, but it's very wet here right now, so we'll have to see.

-

Thanks for the detailed answer. You're right, only the more advanced video cameras will allow you to record at different fps, either higher or lower than 24fps (8, 12, 18, for example). Not sure about your GH7, though. But as you mentioned, you can play around with a lower shutter speed to break the 180º rule. Try 1/40 or 1/30 instead of the usual 1/48 or 1/50 and see what happens. Can't lose anything by trying, right? I don't mean to be rude, but it's hard to make inanimate objects feel "cinematic". We'd get a much better feel for the cinematic vs. video aesthetic you mention by recording human movement.
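For reference, the shutter speeds suggested above map onto shutter angles with the standard relation angle = 360 × fps × exposure time. A minimal sketch follows (the function names are just illustrative):

```python
def shutter_angle(fps: float, shutter_denominator: float) -> float:
    """Shutter angle in degrees for an exposure of 1/shutter_denominator seconds."""
    return 360.0 * fps / shutter_denominator


def shutter_denominator(fps: float, angle_degrees: float) -> float:
    """Inverse: the N in a 1/N second exposure for a given shutter angle."""
    return 360.0 * fps / angle_degrees


if __name__ == "__main__":
    # The usual 180-degree choices at 24fps, then the slower speeds suggested above.
    for denom in (48, 50, 40, 30):
        print(f"1/{denom}s at 24fps = {shutter_angle(24, denom):.1f} degrees")
```

At 24fps, 1/40 and 1/30 work out to 216 and 288 degrees, so both sit beyond the 180º rule without going all the way to a fully open 360-degree shutter.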

- Yesterday

-

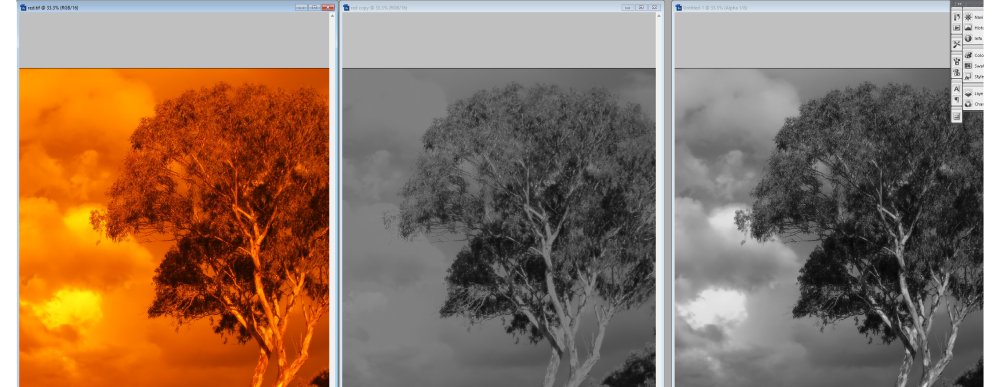

Put the 17mm takumar smc back on the e-m1, as it has inbuilt filters. Thought i'd try those filters and apply some black and white effects. First is the clear filter / uv or your normal look. yellow filter changes things by about a stop of light and adds abit of yellow. last but not least is the red filter, loses three stops i think and delivers a full on Armageddon effect. These are photos from the em1. Grabbing stills from within resolve and doing b+w conversions on the image, they fell apart so quick. It was really quite disappointing. Next image is the original then a desaturated image which is kinda bland. Third is done through ps/ image / calculations and messing around with some of the options Finally is the finished photo which i think is visually a more interesting image compared to a desaturated image or plain greyscale. I think some of this comes from being inspired by mercer's lovely images and a little is lifted from the the lighthouse youtube break downs and some b+w youtubes i have watched. I was aiming for a darker moody look, i think its ok. Next is to replicate this process over in resolve with some moving images. Also my shuttle xpress has arrived and has been installed. You do have to go off to their website and download the drivers for it, however its not a large file. Once you restart your pc, you run a setup program thats also been installed and you can select from a menu which program/ programs you want to use it with. Resolve is listed, just have to scroll down abit. Not much weight difference between a mouse and the xpress, similar sizes as well. It seems like a tight little unit. No looseness in use. First button isn't programmed to do anything, 2nd button is reverse play, third is stop, fourth is forward or play, fifth is .., i forget it does something 🙄. The inner dial/ circle has a detent for your finger and makes rolling forward and backwards through footage, a rather lovely experience. 
It also makes stopping on the exact frame you want a breeze. The outer ring does jog through footage at increasing rates depending on how hard you twist it. I have to confess, i giggled like a five year old when i first fired up resolve and ran some footage backwards and forwards. I suspect editing isn't supposed to be fun and the effect will probably wear off pretty quick. I also presume after using the contour express that all other controllers available from various sources add a measure of efficiency. Whether you can justify the cost vs the efficiency, that's the thing. Btm_pix recommended this down in one of the threads and i have to agree with him, it's a good improvement over a mouse and keyboard arrangement for editing. I have yet to try it with any other programs to see what other improvements can be realized. I got it on an Amazon Prime Day, delivered for $125 Australian. Not cheap like a mouse, but then probably cheaper than some of the name brand items associated with video editing. Also i'd like to point out no affiliate links, nor are there ever likely to be... Now i'm off to bed, been an exciting day 🙂

- Last week

-

j_one reacted to a post in a topic:

Panasonic Lumix S1R Mark II coming soon

-

Thpriest reacted to a post in a topic:

Panasonic Lumix S1R Mark II coming soon

-

ntblowz reacted to a post in a topic:

Panasonic Lumix S1R Mark II coming soon

-

Torn now... After 2 full jobs fully Lumix, I'm thinking I might just flip the S9 for another S1Rii: 2x same bodies, weather sealing, twin card writing, option of mechanical shutter, same colour, 7.2k 30p open gate, potentially Arri log... The Sigma 28-45mm f1.8 is on its way and would be bolted on to one unit, and on the other I'd flip between the Lumix 18mm and 85mm f1.8's, making the 50mm f1.8 redundant. I think I'd do it today but for the cost, so I will have to think about it some more, but it's very likely. I've still to use the video side of the S1Rii, but based on my experience with the S9 and S5ii's, I already pretty much know what the benchmark is and that the S1Rii will exceed that. Makes the loss of the A7RV and Zf slightly more palatable!

-

I thought i was the only one who had to deal with windy days... I suspect that the gustiness of the wind on the day might be causing some issues with the cadence? Things seem to jump around a bit. Not that the g7 should have any cadence issues, but rather the gustiness is making it look like that. I also suspect that the edit points don't help the issue either. They just seem to make it more apparent. Obviously it's a test and you're not trying to tell a story, however the jumpiness is a bit distracting. For me anyway. As to softening, I think it looks ok from here, from what little i know... personally i'm still working out some of the ground rules for a look i like. I think you're much further along the road than i am. In fact the last project i did went the other way and i was sharpening ever so slightly. So there you go. Hopefully some of the more experienced people can be more insightful.

-

I'd also argue that the conventional wisdom over high resolution sensors needing better glass is false unless you are heavily cropping the image. I've had soft 50 euro lenses look better and better, the larger and higher the resolution of the sensor. Same soft lens on a Micro Four Thirds camera or X-T5 looks terrible. Put it on a GFX 100 and it completely transforms and looks so much sharper when you're viewing the whole shot as intended, as long as you don't start pixel peeping it of course.

-

Let alone the size of the package! ; ) It's not a REDvolution. I don't know about you, but to me it's a D-Log M revolution! : P Something that puts this company ahead of everyone else. Who in this industry doesn't have at least one DJI drone? Or an Osmo Pocket? Well, I mean even the Osmo Pocket 3. The same will apply to this one coming now, I guess... : X

-

Never thought i had particularly large hands, however one gripe i have found (its a personal one) is when i grip the e-m1 my little finger tends to wrap underneath as the camera isn't tall enough. Not that i want to call the e-m1 vertically challenged. Its more of a me thing than a camera design issue. One of the reasons to get it was the mft form factor. Its just a little uncomfortable to hold after a little while. At the moment it lives with the ziyhun space plate on the manfrotto base plate and that seems to work ok. I don't seem to have any issues with the plates on the bottom, i don't seem to accidently hit at all and i can put pretty much any lens on it without fear of it falling over. I suspect one of those optional HLD-9 Power Battery Grips is in the near future for this camera and the gimbal should accommodate the extra weight. At the moment its about 50 % tripod time and then 50% hand hold. Its nice the space plate and base plate are out of the way of the battery door and its easy to change the battery without having to take something off. I did get a few photos with the super takumar 35mm f2 today and i'm quietly confident that i met or exceeded the mission brief of finding a yellow lens. i should also point out it was nearly 4pm and winter here, so the sun is low in the sky. From the left, straight out of the camera. For the middle shot, and to get back to a less artistic image, i took a grey point off the lavender as thats kinda grey, perhaps a little too blue ? I do like the colour of the dirt in the middle photo but i suspect the rest of the photo is a bit too cool ? which might come back on using the lavender as a grey point also the gerbera isn't as orange as in real life. I can of course massage things in post but that kind of defeats the purpose of the exercise. Plus its all subjective anyway lol. Third is from the iphone 13 for a bit of a comparison, the flower certainly is more orange, maybe it pops a little too much. 
I guess the iphone is doing some " magic " although i am sure the profile is set to normal. I think its kinda interesting how cameras / lenses interpret an image and then how humans interpret that result as pleasing or not. I'll also add i don't like to overthink it, more of a conceptual thought rather than a whole process. Next shot is taken at the same time and i threw it in as i thought there was abit of fine detail in it with the spider webs. After that the battery died. I was hoping to get it charged for sunset however i was a bit late and it was abit of a bland sunset as well. So far i am liking this lens, from the very limited time i have played with it, it behaves like i thought it would. I have a bunch more things to try with this lens yet. I doubt anyone else will buy this lens for the same reason i bought it. Most would buy it and stick out under the sun or uv light to clear away the colour cast. To me thats what makes it unique. I do have other takumars super taks and smc's and the 17mm smc on the desktop beside me is clear or almost clear of a colour cast. I'll have to check the others now ... The 17mm smc also has a filter wheel built in with a red, yellow, and clear, i remembered that only a couple of days ago... I should get some comparisons to satisfy my curiosity. Also its very much a mint looking lens and the focus works smoothly and aperture work nicely, i also bought one that had front and rear lens caps. After my first couple of takumars it began to annoy me that people will sell these lenses and ship them round the world without a front or back lens cap. I am confident i have always paid good prices for the lenses i have bought and honesty i think its just lame, that people cheapen out and won't put a cheap cap on it. I have a birthday next week, kind of ironic and depressing to think that i am as old or older than these lenses. These lenses keep working just fine, however my shoulder is starting to pack it in... 🙄

-

Speaking of serious image degradation, here are a couple of test shots of the 58mm wide angle adapter I ordered. It seems to vignette quite a bit on the wide end of the GX85 + 12-35mm combo, so you don't get a wider FOV by the time you zoom in to eliminate the vignette: The fact it's so much more degraded at 35mm than 16mm makes me think that the adapter is interacting with the optics inside the zoom. If there wasn't an interaction then when it's at 35mm it should be less degraded because it would essentially just be taking a smaller centre-crop and the edges of the frame should be cleaner. Anyway, this level of distortion isn't what I'm chasing, but it might be very different on other lenses and it's still quite fun to play around with.

-

Wow, "too clean" wasn't a reaction I anticipated!! Yes, it was 24p (well, 23.976p anyway). I don't think I've heard of shooting a little slower to give a more filmic cadence - interesting idea and one I will absolutely try. I'm not sure how I would actually shoot at that speed, as I don't know which of my cameras would offer that option, but as a test I could just slow some of the above 24p plant footage down as plants moving slightly slower in the wind is a thing that happens so shouldn't be too surreal. I've slowed 30p cameras down to 24p, which is a 20% speed reduction and noticeable, which would be about the same for slowing 24p down to 20p. I am yet to really study that test I posted, but my initial impressions were that while it looked like film, it didn't have that certain something I'm looking for. What I'm looking for I can't describe, but it's sort-of the opposite of that "video look" of shooting 60p with sharp lenses and with accurate colour science and proper WB. I watched Old Guard 2 a few days ago and was impressed with how it seemed to have a cinema look but was also quite sharp (which I think have a strong negative relationship) but when I went back and took screenshots I found it actually wasn't that sharp. I then went looking at film trailers trying to find examples with this real cinema look, but most of them were sort-of "neutral" in the sense that they looked somewhere between cinema and video, with some being closer to cinema than others but none being fully at that end of the spectrum. They were mostly uploaded in 1080p from the studios, and the ones in 4K were from other movie review sites and looked a lot more detailed but I can't be sure if these are AI upscaled or what the image pipeline was, so I didn't look at them. 
When I think about what looked really cinematic to me, it's mostly old films that were actually shot on film and are surprisingly soft and grainy, so I really need to go looking for some high-quality footage (that I can trust) from more recent films. Anyway, this all sort of made me question if I was now just seeing things, or if all the trailers looked too sharp to me (including the trailer for the first Knives Out movie, which seemed to be a very high quality upload), so I am going to do some more testing and try and reality check myself with more research and more testing. Also, here in Australia some services stream in SD (for a variety of reasons), so there's a non-zero chance I've just gotten used to that, but having said that, when I go to the cinema to watch the really big films (like Dune 2 or Bond movies etc) they don't look sharper than I was anticipating, so I don't think it's that.

In an attempt to give myself some perspective, yesterday I shot a motion test where I shot the same shot of the plants and then I walked through the backyard, with the following settings:

- iPhone 60p using auto-SS (short shutter)
- iPhone 30p using auto-SS (short shutter)
- iPhone 24p using auto-SS (short shutter)
- GX85 24p using auto-SS (short shutter)
- GX85 24p using 180-degree shutter
- GH7 24p using 180-degree shutter
- GH7 24p using 216-degree shutter
- GH7 24p using 288-degree shutter
- GH7 24p using 360-degree shutter
- GH7 24p using 108-degree shutter
- GH7 24p using 70-degree shutter

I haven't looked at that one in detail yet either, but it was sort of a combined test of subtle variations in shutter speeds (the GH7 shots) and also a reality check to judge the GH7 shots against actual video (iPhone and GX85 auto-SS shots).

Today, I've just finished assembling this monstrosity: This is the P2K and GX85 on top, with GH5 and GH7 on the bottom.
I finally own enough vNDs to do this, although the 82mm vND I bought for the Sirui anamorphic adapter does look rather ridiculous on the P2K and 12-35mm! I'll shoot a side-by-side with all of them rolling and will walk around the yard to get a number of compositions and lots of movement. I forgot to include the P2K in yesterday's test, but I also want to have a reference where the motion is essentially the same, which is why I have rigged them together. I can replicate a 35mm F11.5 FOV on all these, so I should have mostly the same image. There will be resolution/sharpness differences, but I can level the playing field in post by applying various FLC profiles, which will be a good test to see if any perceived differences disappear or not. I'm still waiting for my Sirui 1.25x anamorphic adapter to arrive, but once it does, some future tests will include various combinations of the wide-angle adapters, the anamorphic adapter, modern AF lenses, modern MF lenses, vintage lenses, and probably some filters too, as I've got a small collection of softening filters and a few vNDs of vastly varying quality which should add a look to the footage too. I might also shoot some tests comparing various amounts of rolling shutter, as I think the GH7 has strong enough codecs and enough modes to make meaningful comparisons between these too.
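As a quick check on the retiming figures earlier in this post (30p conformed to 24p versus 24p conformed to 20p), the slowdown is simply one minus the ratio of the two frame rates. A small sketch, with a made-up function name:

```python
def slowdown_percent(source_fps: float, playback_fps: float) -> float:
    """Percentage speed reduction when footage shot at source_fps
    is played back frame-for-frame at playback_fps."""
    return (1.0 - playback_fps / source_fps) * 100.0


if __name__ == "__main__":
    print(f"30p played at 24p: {slowdown_percent(30, 24):.1f}% slower")
    print(f"24p played at 20p: {slowdown_percent(24, 20):.1f}% slower")
    print(f"24p played at 21p: {slowdown_percent(24, 21):.1f}% slower")
```

So conforming 24p to 20p is a slightly gentler change (about 16.7%) than the 20% of a 30p-to-24p conform, and 21p is gentler still at 12.5%.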

-

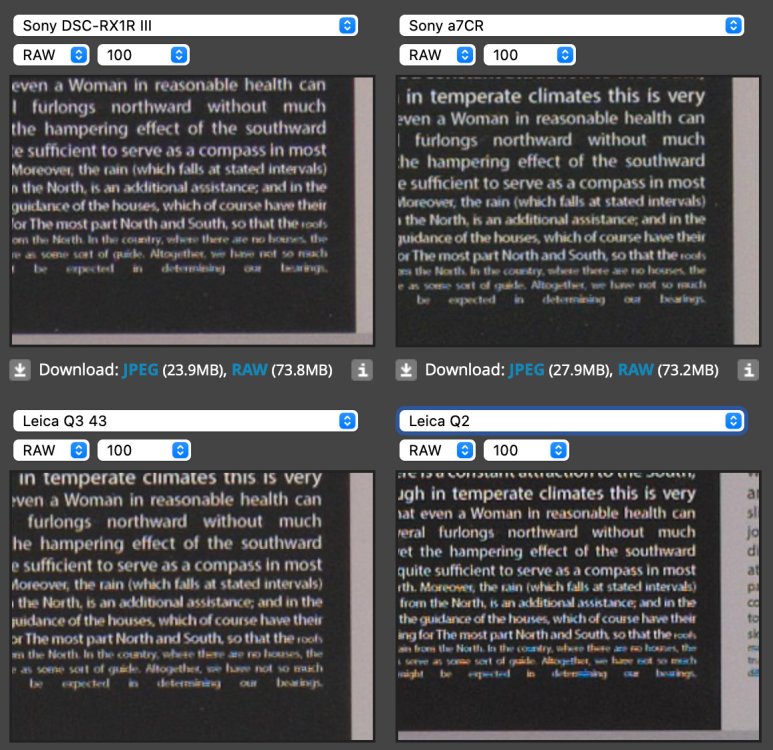

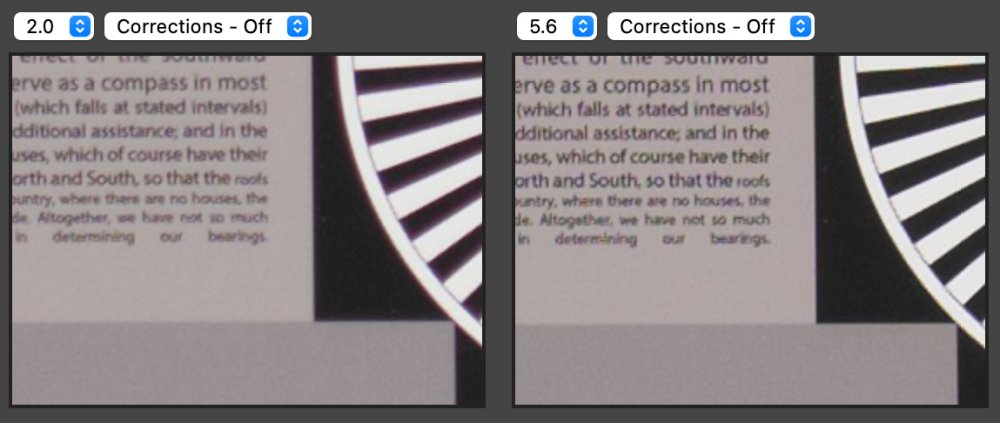

I'd also say that lens sharpness is relevant only in some contexts. A 35mm f/2 lens on a high-end point and shoot really needs to be "sharp enough" and has to find the balance between sharpness and being too clinical. Unless you're taking pictures of a brick wall 30 meters away, chances are that the 35 has all the detail you'll ever need. Keep in mind that the previous camera using that lens had 45 megapixels, so jumping to 61 is not huge. If I were only interested in still photos, the RX1R II would almost hands down be the choice, especially if I found a good deal. Flippy screen and cute lil' pop-up EVF like the RX100 series? Sign me up. Some of the other ergonomics look better on the new camera and it can record in 4K, barely. Is that worth thousands of dollars more? Not for me. Could it be an interesting camera in 5 years when the used price (hopefully) drops by more than 60%? Yes, it could be. Would I take it over a GFX 100RF that costs less? Absolutely not. My most frequently-used lens on the GFX 100 is the 32-64 and that's also an f/4 lens. Very sharp too. You can really count the molecules in those bricks in the wall 30 meters away.

-

You can't compare a fast 35mm to a 50mm F8 macro lens or whatever it is they usually use on the DPR test scene. It's a fantastic lens, always was. Even wide open at F2.0 it's close in sharpness to F5.6 stopped down. Of course, only in the centre, but the DPR test scene is a sensor test scene; it isn't designed for wide angle lenses. The real-world performance of the lens is what matters. It's not as good as a Leica M APO 35mm F2 for 4 grand or the 35mm F2.0 lens on the Zeiss ZX1, but it's still very good. I have always treasured the shots from my RX1R and RX1R II. That's what counts, not the pixel peeping at 2000% magnification. I think it does just fine... By far the most important thing with a lens is to go out and take real shots with it... The Panasonic 28-200mm on paper is a piece of garbage. It's not the sharpest, not the fastest, F7.1 at the telephoto end, and yet it shoots shots like this... Which look like they're shot with a high-end 135mm F2.0. The rendering is just superb at 200mm F7.1. Does it look like F7? Nah.

-

Sony finally notices that people like small cameras, releases RX1R III

EduPortas replied to eatstoomuchjam's topic in Cameras

DPReview's image tests on the new RX1R III just came out. The lens is incredibly soft. Too many megapixels on that sensor for a 10-year-old lens.

Still too clean for me. Did you record at 24fps? Maybe try lower, at 20-21fps, to give a more filmic cadence?