Everything posted by kye (8,047 posts)

-

My point was that there's creativity in all art, but maybe it's not obvious. Here's what a video process might look like if it had no creative decision making involved:

- Generate random GPS coordinates (applying some sort of criteria to ensure they're practical / accessible etc)
- Go to the GPS coordinates and set up the camera on a tripod
- Select a lens at random from the selection; if it's a zoom, select the focal length randomly
- Set the focus distance and aperture to random values
- Generate three random numbers between 0 and 360, and orient the camera using these values as pan / tilt / roll
- Record for a random amount of time
- Repeat the above sequence a random number of times, then proceed to editing
- Put all the footage on the timeline in random order
- Generate a random percentage, then remove that percentage of clips from the edit at random
- For each remaining clip, generate random start points and durations and make the edits
- For each cut, apply a random transition effect
- Select a random number of pieces of music from all music ever created
- Cut random-sized sections from these and add them to the timeline in random locations
- In the colour grade, randomise all the controls
- etc etc

Obviously this would not be a pleasant approach, and regardless of your views about "art", "creativity" etc, you wouldn't think this is a good way to make a film (with the exception of the experimentalists, but that's another discussion). ANY choice you make during the process that isn't random and isn't directed by some other factor (like "the cheapest one available") is a creative choice and will be based on some sort of aesthetic preference. It's easy for people to become blind to the bigger picture and narrow in on tiny details, which is where most disagreements stem from.

-

There is one point I forgot to mention in the first post, which is the WHY of this whole situation. The point of looking at exposure and WB latitude isn't to correct these things in post. It is to understand how the camera reacts to colour grading.

When we colour grade, we are taking the image and pushing things around. In a literal sense, we are just raising or lowering the values of the R/G/B channels in each pixel based on a variety of factors, but that's all it is: raising or lowering the value of each channel. So, if a camera falls apart when I try to make a tiny adjustment, then I'll know that I can't colour grade it much. Assuming you get the exposure and WB relatively close in-camera, the changes should be relatively minor, but some changes are larger than others.

As some examples, here are the operations that I would do across most shots in one of my grades:

- Adjusting WB: raising or lowering the values of the RGB channels relative to each other
- Adjusting exposure: raising/lowering the RGB channels together
- Adjusting contrast: raising/lowering the RGB channels together, but moving the shadows and highlights in opposite directions
- Adjusting saturation: raising/lowering the RGB channels in a way that expands the differences between channel values
- Applying a subtractive saturation effect: lowering the RGB channels together based on the differences between channel values
- Brightening faces: raising the RGB channels in an area on and around the selected faces
- Massaging skintones: raising/lowering the RGB channels to subtly change the hue and saturation
- Cooling the shadows: lowering the R and G channels in the shadows
- etc etc.

As you can see, all these things are just subtle changes to the RGB channels, which rely on the latitude of the files. Most of these changes will adjust values by 1 stop or less, and sometimes a LOT less (like skintones), but some might be more (cooler shadows).
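To make the operations listed above concrete, here is a rough Python sketch of most of them as simple rules on R, G and B. It assumes scene-linear pixel values with 0.18 as mid grey and Rec.709 luma weights; the formulas themselves (the contrast pivot, the shadow threshold, the subtractive-saturation rule) are hypothetical illustrations, not how Resolve or any other grading app implements its controls. Face brightening and skintone work are left out because they need masks or qualifiers.

```python
# Illustrative sketch only: grading operations as rules for raising or lowering
# R, G and B. Values are assumed to be scene-linear floats with 0.18 = mid grey.
# These are NOT the formulas any particular grading application uses.

RGB = tuple[float, float, float]

# Rec.709 luma weights, used here to judge how bright a pixel is.
REC709_LUMA = (0.2126, 0.7152, 0.0722)


def luma(rgb: RGB) -> float:
    return sum(c * w for c, w in zip(rgb, REC709_LUMA))


def exposure(rgb: RGB, stops: float) -> RGB:
    """Raise/lower all channels together; +1 stop doubles linear values."""
    k = 2.0 ** stops
    return (rgb[0] * k, rgb[1] * k, rgb[2] * k)


def white_balance(rgb: RGB, red_gain: float, blue_gain: float) -> RGB:
    """Raise/lower channels against each other, using green as the reference."""
    r, g, b = rgb
    return (r * red_gain, g, b * blue_gain)


def contrast(rgb: RGB, amount: float, pivot: float = 0.18) -> RGB:
    """Scale each channel's distance from the pivot in stops. With amount > 1,
    values above the pivot go up and values below it go down (values must be > 0)."""
    return tuple(pivot * (c / pivot) ** amount for c in rgb)


def saturation(rgb: RGB, amount: float) -> RGB:
    """Scale each channel's distance from luma; amount > 1 expands channel differences."""
    y = luma(rgb)
    return tuple(y + (c - y) * amount for c in rgb)


def subtractive_saturation(rgb: RGB, amount: float) -> RGB:
    """Darken all channels together in proportion to how far apart they are."""
    spread = max(rgb) - min(rgb)
    k = max(0.0, 1.0 - amount * spread)
    return tuple(c * k for c in rgb)


def cool_shadows(rgb: RGB, amount: float, shadow_limit: float = 0.05) -> RGB:
    """Lower R and G only in the shadows, fading out as luma approaches shadow_limit."""
    weight = max(0.0, 1.0 - luma(rgb) / shadow_limit)  # 1 at black, 0 at/above the limit
    k = 1.0 - amount * weight
    r, g, b = rgb
    return (r * k, g * k, b)


# Example: a small stack of adjustments applied to one pixel.
pixel = (0.20, 0.18, 0.15)
graded = cool_shadows(saturation(contrast(exposure(pixel, 0.3), 1.1), 1.2), 0.2)
```

Every function only multiplies or offsets channel values, which is the point of the list: each step leans on how much the file can be pushed before the gaps between code values start to show.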
I did an experiment on bit-depth and wrote a plugin that lowered the bit-depth, and discovered that reducing a final image to 6 bits made no visible difference (and some images were fine at 5 bits!), but if you tried to colour grade those 5-bit images then you'd discover pretty quickly that even very minor adjustments would annihilate the image entirely. The other thing that occurs to me is that there are things you can do to help keep the image from falling apart, like adding noise etc. I should explore that in future tests.
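The plugin itself isn't shown, but the effect it revealed can be sketched: quantise a smooth ramp to N bits, apply a grade, and measure the biggest jump between neighbouring output values. This assumes a simple uniform quantiser on normalised 0..1 values and models the grade as a hypothetical plain 2× gain clipped at white; it is an illustration, not the plugin's actual code.

```python
def quantise(value: float, bits: int) -> float:
    """Round a normalised 0..1 value to the nearest of 2**bits evenly spaced levels."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


def grade(value: float, gain: float) -> float:
    """Hypothetical grade: a simple gain, clipped at white."""
    return min(value * gain, 1.0)


def largest_step(bits: int, gain: float = 2.0, samples: int = 4096) -> float:
    """Biggest jump between neighbouring output values along a smooth 0..1 ramp.
    Big jumps are what show up as banding once the image is pushed in the grade."""
    ramp = [i / (samples - 1) for i in range(samples)]
    out = [grade(quantise(v, bits), gain) for v in ramp]
    return max(b - a for a, b in zip(out, out[1:]))


for bits in (8, 6, 5):
    ungraded = largest_step(bits, gain=1.0)
    graded = largest_step(bits, gain=2.0)
    print(f"{bits}-bit: step {ungraded:.4f} ungraded, {graded:.4f} after the grade")
```

The grade multiplies the gap between levels, so a 5-bit file that looks fine as a final image ends up with steps roughly eight times larger than an 8-bit file given the same adjustment.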

-

Absolutely, if you can shoot 10-bit V-Log and are happy to grade it in post then there's no reason not to. The main motivation for me is really in dealing with the older or smaller cameras that don't have a log profile built in, and being able to realise as much of their potential as possible. The GX85 is my main camera now, simply because of its size and form-factor, but as we have been reminded by @John Matthews in the "World's smallest DSLM that shoots 4k?" thread, there is still a lot of love for these small cameras.

-

I have contemplated setups that included multiple cameras before, and my brain kept wondering if there was a way I could make some sort of Franken-rig that included both cameras, like how street photographers often make BTS videos by mounting a GoPro in the hot-shoe of their camera. I could never get a rig that seemed to work, but the Pocket 3 is so small and so flexible (if you configure it to always keep level / never tilt) that you might be able to add it to another setup, potentially even using both at once? Maybe it's an ill-conceived fever-dream, but I feel like it might just be a killer idea that changes the game in some situations, if only I could find that situation! My Intel MBP is slowly approaching retirement and I'm definitely looking forward to Apple silicon. When I last looked at the benchmarks, the different 'levels' of their chips sure made a difference too. It won't be before time either: yesterday my wife and I shot the first couple of talking-head promo videos for her new business and the export was sitting at 2fps in places!

-

Cool videos! I especially liked the last one with the pink skies; very nicely done! It's great to hear you're getting deep into the Digital Bolex. It's one of the oldest cameras that is still very relevant, and it creates images as good as, or better than, the megapixel behemoths of today. Your description of having a small setup that is ready to go reminded me of how documentary film-makers organise themselves when making a doco: at any minute they might get a call from the main cast members that something is happening and have to rush there to get coverage, so they have to own everything (no rental equipment) and it has to be ready to go at all times. Also wonderful that you're making real work and showing it. I think that anyone who uploads a finished project deserves huge respect, but to get involved in a community and then have your work shown is next level. Congrats!

-

I mostly prefer locked-down shots too; I just get them handheld using IBIS 🙂 With the Dual IS and a bit of stabilisation in post, you can easily get a shot where the framing is locked off. Rolling shutter (RS) affects movement of the frame (e.g. from being handheld) but also movement within the frame (e.g. moving vehicles, or objects filmed from a moving vehicle). Style is, of course, personal preference though, so however you want to tackle it is the right way 🙂
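A rough way to see why rolling shutter affects both kinds of movement: the sensor reads rows one after another, so the horizontal offset between the first and last row is simply readout time times horizontal speed, whether the motion comes from the camera or from the subject. The readout time and speed below are hypothetical numbers, not measurements of any particular camera.

```python
import math


def skew_pixels(readout_time_s: float, speed_px_per_s: float) -> float:
    """Horizontal offset between the first and last row read out. Anything moving
    sideways across the frame (or the whole frame, if the camera moves) has shifted
    this far by the time the last row is captured."""
    return readout_time_s * speed_px_per_s


def lean_degrees(readout_time_s: float, speed_px_per_s: float, frame_height_px: int) -> float:
    """Angle by which a vertical edge appears to lean because of that offset."""
    offset = skew_pixels(readout_time_s, speed_px_per_s)
    return math.degrees(math.atan2(offset, frame_height_px))


# Hypothetical: 1/30 s readout, something crossing a 1920 px wide frame in 2 s.
speed = 1920 / 2
print(skew_pixels(1 / 30, speed), lean_degrees(1 / 30, speed, 1080))
```

Stabilisation can remove the camera's contribution to the speed term, but not the subject's, which is why a locked-off frame can still show RS on moving vehicles.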

-

Link to files. The reference image is repeated so you can just go back and forth to compare. Enjoy! https://www.dropbox.com/scl/fo/vj7wthlzjc9ocqtphvclt/h?rlkey=ivcvcj3hmdrow1q0zsof0wc1p&dl=0

-

Skin-tones are always the trickiest because we are much more sensitive to this area of the colour cube than any other. If I make a cameo then you'll get to enjoy the full range of tones, from pasty to gaunt and all the way to magenta and red. Lucky you!

In terms of working with skin-tones "given 8-bit limitations", I don't think there are limitations, at least if we're working with 8-bit rec709 images. In broad terms, the danger of "breaking" the image comes from areas where the bits are too far apart and the associated artefacts become visible. The bits are broadly as far apart in 8-bit 709 as they are in 10-bit LOG and in 12-bit RAW. The difference that most people experience between those is likely to be the compression, considering that 8-bit 709 will mostly be 10-100Mbps, 10-bit log will mostly be 50-400Mbps, and 12-bit RAW will often be uncompressed. Perhaps the worst combination is 8-bit log, which, TBH, is so bad it should be banned under the Geneva Conventions. It's the worst combination because the bits in the final image are spread apart the most.

This is why I shoot rec709 with normal amounts of saturation: it gives more information for colours than if you reduce the saturation in-camera and then boost it in post. It's also why the iPhone HDR implementation is so good: it is 10-bit, but the saturation levels in the files are close to 709 levels, so a really large amount of colour fidelity is still retained in the files.
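The saturation argument can be illustrated with a toy model: scale chroma down in-camera, quantise it to 8 bits, scale it back up in post, and count how many distinct chroma values survive. This assumes chroma is stored as a simple scaled value over the full 8-bit range, which is a simplification (real cameras store Y'CbCr with their own scaling and compression), and the 0..0.5 chroma range is an arbitrary choice.

```python
def distinct_chroma_levels(in_camera_saturation: float, bits: int = 8, samples: int = 10_000) -> int:
    """Count the distinct chroma values left after:
    1. the camera scales chroma by in_camera_saturation,
    2. the result is quantised to `bits`,
    3. the grade scales chroma back up by 1 / in_camera_saturation."""
    levels = (1 << bits) - 1
    survivors = set()
    for i in range(samples):
        chroma = 0.5 * i / (samples - 1)  # hypothetical chroma range: 0..0.5 of full scale
        stored = round(chroma * in_camera_saturation * levels) / levels
        restored = stored / in_camera_saturation
        survivors.add(round(restored, 9))  # drop float noise before counting
    return len(survivors)


for sat in (1.0, 0.5, 0.25):
    print(f"in-camera saturation {sat}: {distinct_chroma_levels(sat)} distinct levels")
```

In this model, halving the in-camera saturation roughly halves the number of distinct chroma levels available once saturation is restored, so the gaps between them roughly double.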

-

Also, if anyone can think of any other tests I should do then I'm all ears. One that might be useful is including skintones, so maybe I'll have to make a cameo in the test images. Realistically, the ability to recover skintones is probably the most important thing, and perhaps the most fragile.

-

Actually, for these I didn't use Colour Management (I know this will shock people!), and it was a bit of a surprise to me too, but the Lift/Gamma/Gain controls just worked better. The fact they're designed for rec709 might have something to do with that, perhaps, maybe... ? 😆😆😆 I normally make my adjustments in real projects using Colour Management, and they're fine because they're not a torture test like this with huge adjustments, but I'll keep this in mind, and if I get difficult shots then maybe I'll switch to rec709 for one node and do the adjustments in that.

Cool, I'll work out the best way to post them.

TBH I don't know what "more latitude" really means. For example, it might be the amount you can shift something before clipping occurs, or before some level of visible, undesirable aesthetic effect occurs, but this would be situation dependent and also user dependent. If you can think of a way to test this then I'd be happy to have a look.

From memory, I think I set the blue image to a colour temp in the 6000s and the warm one to 3200, and neutral was somewhere in the middle. For the magenta and green images, I set the in-camera tint adjustment to the maximum it would go in each direction. So these are very large WB errors to recover from, and (hopefully!!) much larger than you'd ever encounter in real life.
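One common way to put a number on how large a WB error is: compare colour temperatures in mireds (1,000,000 divided by the Kelvin value), because equal steps in Kelvin are not equal shifts in colour. A sketch, using 6500 K as a hypothetical stand-in for "a colour temp in the 6000s":

```python
def mired(kelvin: float) -> float:
    """Micro reciprocal degrees: 1,000,000 / colour temperature in Kelvin."""
    return 1_000_000 / kelvin


def mired_shift(from_kelvin: float, to_kelvin: float) -> float:
    """Size of a colour temperature change in mireds (negative = towards higher Kelvin)."""
    return mired(to_kelvin) - mired(from_kelvin)


# 6500 K is a hypothetical stand-in for "a colour temp in the 6000s".
print(round(mired_shift(3200, 6500), 1))  # spread between the two test settings
print(round(mired_shift(3200, 3700), 1))  # a 500 K step near tungsten
print(round(mired_shift(5600, 6100), 1))  # the same 500 K step near daylight
```

The same 500 K step is a much bigger shift near 3200 K than near daylight, which is why a spread of roughly 159 mireds between the two test settings is a very large error to pull back.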

-

+1 for the suggestion of getting an older camera for stills, for the following reasons:

- Older cameras are much less out-of-date for stills, assuming they can shoot RAW stills (which is almost universal now)
- This will give you the ability to have one camera set up for stills and the other for video, with no fiddling around to change modes and adjust any settings that can't be recalled as part of a profile etc
- The concept of having a backup is a great one, and one that I've implemented for my setup
- If you decide to shoot a second angle or BTS video or anything like that then you're set; even if you set up both on the same tripod and one shoots a wide and the other a close-up, there are so many uses
- You can shoot a timelapse of a whole setup while also recording video of parts of the activity
- You can even do things like record with the FX30 from a distance and use the older one as a field audio recorder, close by with a lav running into it

-

Just created a new thread showing that the rec709 profiles in these cameras are more flexible in post than most people think:

I'm posting this here for easy reference, and because these small cameras likely only have 8-bit rec709, but I don't think this is nearly the barrier to getting good images that most people believe it is. It's also why I shoot in auto-exposure and auto-WB: making small changes to every shot is a very simple operation and a much better outcome than me setting this stuff manually, then forgetting to adjust it later and getting some shots horribly wrong! For those who think that adjusting exposure and WB in post is a sign of bad film-making, every colourist I've seen allows for making small exposure and WB changes as a first step in their grade, and I've heard several say that it's something they apply routinely, even on professionally shot productions. Same with NR, which is routinely applied, completely contrary to YT wisdom, which suggests that visible noise means the camera is defective. I hope this test will encourage people to look beyond the forum/YT "wisdom" and be free to get the most from their equipment.

-

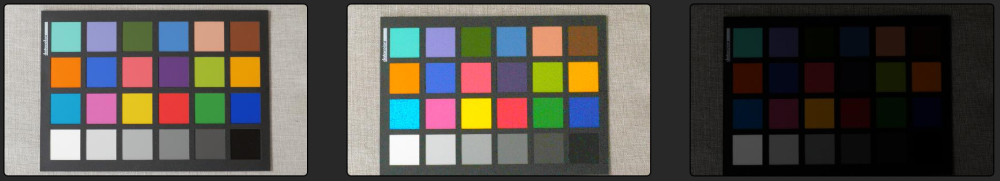

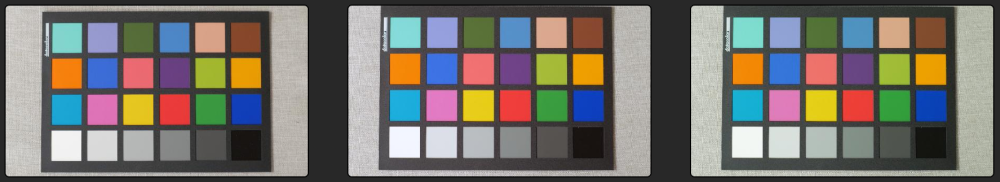

8-bit rec709 profiles are much more flexible in post than you might think. I'm not saying they're as good as an Alexa or whatever, but they're a million miles better than people give them credit for.

Here's a latitude and WB torture test of the GX85, in the standard profile (8-bit rec709) customised to have reduced contrast but normal saturation. For each comparison, the left image is the properly exposed reference image, the middle one is the graded image, and the one on the right is the image SOOC. The comparisons were:

- Exposure latitude test: +3 stops
- Exposure latitude test: +2 stops
- Exposure latitude test: +1 stop
- Exposure latitude test: -1 stop
- Exposure latitude test: -2 stops
- Exposure latitude test: -3 stops
- Exposure latitude test: -4 stops
- WB latitude test: warm
- WB latitude test: cool
- WB latitude test: magenta
- WB latitude test: green (as far as it would go!)

Notes on the testing method:

- GX85 shot in manual mode on a cloudy day
- Exposure varied by changing the lens aperture
- WB varied by changing colour temp and tint
- Tools used in Resolve were mostly Lift/Gamma/Gain and saturation, and some shots had a bit of Shadows/Mids/Highlights

Notes on the results:

- If it's clipped then it's clipped; there's no getting around that
- If you've shot a whole sequence with the wrong WB, then shoot a test chart replicating the error, spend some time on the correction, then apply it to all the shots... a bit of work, but worth it to rescue a day's shooting
- If you've shot on auto-exposure / auto-WB and want to correct the small errors, then this is easily possible; it won't have got it nearly as wrong as what I have shown above
- Most cameras shooting in rec709 will do funky stuff to colours depending on their luma value as part of the look of the profile, so when you over/under expose and then pull things back, the hues and saturation levels will have shifted around in odd ways compared to a normal exposure. But on a real shoot you most likely won't have many dominant hues in the image, and you only have to correct the ones that are in the frame and distracting, so the above is far more work than normal shooting would be

The days of needing RAW or even LOG to change exposure or WB in post are gone, and although the rec709 profiles often have lower DR than log profiles, they're much better than you think.

If anyone wants me to post full-sized versions with titles so you can flick back and forth then just let me know. Happy shooting!
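The test-chart suggestion can be sketched like this: read a grey patch from a chart shot with the same WB and exposure error, derive per-channel gains and an exposure offset from it once, then apply that same correction to every shot. It assumes values have already been converted to linear light (e.g. via Colour Management), and the patch readings and shot values are made up. As the notes on the results point out, a single gain won't undo the profile's luma-dependent hue shifts, so expect some touch-ups afterwards.

```python
import math

RGB = tuple[float, float, float]


def neutralising_gains(grey_patch: RGB) -> RGB:
    """Per-channel gains that make a grey card read neutral, keeping green fixed."""
    r, g, b = grey_patch
    return (g / r, 1.0, g / b)


def exposure_offset_stops(grey_patch: RGB, target_grey: float = 0.18) -> float:
    """Stops to push (positive) or pull (negative) so the card lands on target_grey."""
    return math.log2(target_grey / grey_patch[1])


def correct(pixel: RGB, gains: RGB, stops: float) -> RGB:
    """Apply the WB gains and the exposure offset to one linear pixel."""
    k = 2.0 ** stops
    return tuple(c * gain * k for c, gain in zip(pixel, gains))


# Hypothetical linear readings of a grey card shot with the same WB and exposure error.
chart_patch = (0.030, 0.045, 0.070)
gains = neutralising_gains(chart_patch)
stops = exposure_offset_stops(chart_patch)

# Work out the correction once from the chart, then apply it to every shot.
shots = [[(0.02, 0.03, 0.05), (0.10, 0.12, 0.20)], [(0.04, 0.05, 0.06)]]
corrected = [[correct(px, gains, stops) for px in shot] for shot in shots]
```

Deriving the correction from a chart rather than eyeballing each shot keeps every clip in the sequence consistent, which is most of the battle when a whole day was shot with the same error.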

-

Agreed, but I'd go one step further. To be perfectly honest, I read/watch half the online stuff about film-making and just shake my head; it's like vast sections of the people discussing this online have forgotten what cinema actually looks like..... if they'd ever even been! Almost any YT search for a camera or lens-related phrase will instantly deliver an endless stream of videos that were shot with kit lists that rival the price of a new car, and yet somehow look more like home videos than my actual home videos. Of course, the biggest issue is that almost no-one in the whole online film-making community actually makes films; they're mostly professional or amateur videographers and have clients that want a high-end video look. As a dad that makes home videos, if I think your stuff looks awful then you're in real trouble, and that's how I think most stuff looks these days, until I see something from actual film-makers!

-

I've been thinking about my own strengths and weaknesses too, and have started a project designed to let me focus on something that I'm quite weak at with the goal of improving in that aspect. I think that focusing on something in particular and just practicing that might be a good way to build that specific skill. I'm reminded that in sports the coach will have players run drills of the same thing again and again, presumably so they can focus on that one thing without being distracted by the chaos of a normal situation. I imagine the situation is the same with film-making - trying to work on one aspect while also doing all the rest probably doesn't help much in comparison to just focusing on that one thing.

-

I assume you're talking about delivery via a streaming service? If so, yes, it's a real weakness. However, there is a huge difference between the quality of a 50Mbps file coming from a camera and the files coming from the streaming platforms. I've done a lot of testing of codecs and streaming compression, and one thing I noticed is that the quality of the image you create in the edit really carries through the entire streaming pipeline. Just take a look at the camera demo reels from ARRI and RED on their YT channels from 10+ years ago. The image quality speaks for itself.

-

We all have more camera than talent! Anyone who thinks otherwise needs to go back and watch the best examples of their particular niche, then look in the mirror and ask themselves if they really think they are as good at shooting as Deakins, at editing as Murch, etc. I'd rather watch the work of the masters shot on an iPhone 4 than an influencer vlog shot on an Alexa 65 any day of the week. In fact, I highly recommend finding and studying the best examples of your genre. Pull them into an editor and cut them up manually, shot-by-shot, so you are forced to see what they are doing. I did this, and it completely revolutionised how I view the whole process.

-

I enjoy shooting far more than editing, but that's because I don't feel like I know what I'm doing in post yet. The more I learn, the more I enjoy the process though, which is encouraging. One thing that people often forget is that film-making is a creative pursuit and that humans are emotional creatures. One of the critical ways this manifests in film-making is that if your equipment is frustrating to use, then you will be frustrated and will make worse creative decisions (e.g. compositions) than you would if you were in a better mood. If you are around people, and especially if you are interacting with the people you are filming, then your mood will alter their behaviour, directly influencing the people in the frame. Personally, I have used cameras in the past that made me feel like I was fighting them the whole time, and I am sure the shots suffered because of it. When I shoot now, not only do I use cameras that feel like they're helping me, but I also know that the images will be aesthetically pleasing and straightforward to work with in post. These things make me enjoy shooting much more, which makes the experience nicer (important, considering I do this for fun) but also means that my influence on the creative aspects will be better as well.

-

Good idea to reflect on the year! My highlights of the year:

- Bought a Thunderbolt hub and radically changed my home setup: I use my MBP laptop for everything, and now have the following setup. When I dock it at home I plug in a USB-C hub, which connects it to power, my speaker system, the Resolve dongle, and a few peripherals. When I want to use it "normally" I plug in a Thunderbolt-to-mini-DisplayPort cable that goes to my UHD panel, and the MBP switches to using the UHD panel as the main UI. I can run Resolve in this mode, but it's not the best setup. If I want to run Resolve properly, I remove the display cable and plug in my new Thunderbolt hub. The TB hub then connects a second 1080p panel I have on my right as the UI, and connects the BM UltraStudio Monitor 3G, which is connected to the HDMI input of my UHD panel. When I run Resolve in this setup the UI is to my left, and the UHD panel will be a pure monitor feed set to the correct resolution and frame rate (up to 1080p, which is my timeline resolution).
- My big purchase was a course on using alternative colour spaces in Resolve with Hector Berrebi: It was pretty niche, but really solidified a bunch of concepts that I'd seen in passing or had some familiarity with. There's another course from him coming up in Feb on skintones, beauty grading and retouching that I'm really curious about.
- I bought zero cameras and zero lenses
- I did two post-pandemic trips, one to Melbourne and the other to South Korea: The best investment in my film-making is putting interesting things in front of the camera, and this was definitely that.
- Discovered how to grade iPhone and GX85 footage to match the GH5: In preparation for the Melbourne trip I did a comparison between the iPhone, GX85 and GH5. It was mostly to rule out the iPhone as a serious camera option, but I proved myself wrong and discovered the iPhone is actually incredibly good and useful in post. I worked out a power grade to convert it to DaVinci Intermediate and was able to colour grade it just as easily as LOG or RAW. I also discovered that the GX85 is practically as malleable as the GH5 when it comes to small adjustments.
- Really nailed down my understanding of Colour Management in Resolve: This is what enabled me to grade the iPhone and GX85 so well. If you're not using Colour Management then you're basically fumbling around in the dark with boxing gloves on; you've got no hope of doing anything except accidentally discovering a few tricks that seem to work.
- Discovered I prefer the GX85 over the GH5: The GX85 suits my shooting more than the GH5 because it's smaller, the tilt screen is so much faster/easier to use in busy public places than the flippy screen, and now that I can grade the files just as easily, the image is just as good unless I hit its limits (e.g. DR).
- Switched from MF primes to an AF zoom: This was a revolution. I used to shoot with fast manual primes, but in Korea I discovered that the 14mm F2.5 was fast enough to give a natural amount of DoF and good low-light performance, and the speed of AF-S was a real upgrade to my shooting. I have since switched to the 12-35/2.8 and will see how that goes on future trips.
- Learned a bunch of things about editing: I have a huge backlog of previous trips that I haven't edited yet. In fact, even the small percentage that have a "final" video edit I am still quite unhappy with. Overall I have felt like I didn't know what I was doing in the edit or the colour grade. This feeling isn't completely gone, but I have had a number of realisations about various editing challenges and I feel like I'm almost knowledgeable enough to edit something in a passable way.

If I had to sum up, I feel like I'm almost good enough at editing and colour grading to understand what I'm doing in post, and I almost understand enough about post to know what equipment I need in prod.
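The power grade itself isn't shown, but for background, the target of that kind of conversion is the DaVinci Intermediate log curve, which Blackmagic publishes. Below is a sketch of its transfer function; the constants follow Blackmagic's DaVinci Wide Gamut Intermediate documentation as best understood here, so verify them against the official document before relying on them. It covers only the log curve (not the DaVinci Wide Gamut primaries) and is not the author's power grade.

```python
import math

# DaVinci Intermediate constants (check against Blackmagic's
# DaVinci Wide Gamut Intermediate documentation).
DI_A = 0.0075
DI_B = 7.0
DI_C = 0.07329248
DI_M = 10.44426855
DI_LIN_CUT = 0.00262409  # below this linear value the curve is a straight line
DI_LOG_CUT = 0.02740668  # the same break point, expressed as an encoded value


def di_encode(linear: float) -> float:
    """Scene-linear value -> DaVinci Intermediate encoded value."""
    if linear <= DI_LIN_CUT:
        return linear * DI_M
    return (math.log2(linear + DI_A) + DI_B) * DI_C


def di_decode(encoded: float) -> float:
    """DaVinci Intermediate encoded value -> scene-linear value (inverse of di_encode)."""
    if encoded <= DI_LOG_CUT:
        return encoded / DI_M
    return 2.0 ** (encoded / DI_C - DI_B) - DI_A


# Stops around mid grey come out roughly evenly spaced in the encoded values,
# except near black, where the DI_A offset squeezes them together.
for stops in range(-4, 5):
    linear = 0.18 * 2.0 ** stops
    print(f"{stops:+d} stops: linear {linear:.4f} -> DI {di_encode(linear):.4f}")
```

Footage from a camera without a log profile would first need its own conversion to linear before a curve like this applies; that camera-specific step is the part the power grade has to work out.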

-

Just thinking back on 2023, and I think it was a pretty low-cost year for me, with one of the main expenses being a colour grading training course. Skills > equipment....

-

The G9ii looks like a good upgrade to the GH5. If I used my GH5 as my main camera and wanted an upgrade, I'd be tempted. The list of modes in these cameras is definitely getting huge; I noticed that Panasonic implemented the ability to favourite some modes so you can shorten the list, so they're aware that it's getting cumbersome too. It would be great for them to implement a BM-style solution where you choose things individually, but of course if there is a complex set of combinations that can't be done, then this style of menu would make that really obvious to people, so it might not be in their best interests. Sadly, perception matters a lot to people and this stuff can make or break products and even companies, so while it's frustrating, it is a relevant consideration. I think this is just how capitalism works. Products across all industries follow this pattern of making the main item as cheap as possible and then making as much profit on the accessories as possible. This is because people price-compare on the main item, but don't even look at the prices of the accessories until they're in the shop or have already ordered the main item. What sort of things are you planning to use it on? I'm an introvert and going out and filming is not my natural compulsion, but whenever I do I am always glad that I did.

-

Do you have a cat? They walk on the keyboard and can accidentally buy things. My wife tells me that is quite common.

-

I've tried film-maker math, but I discovered my bank wasn't film-maker compatible and the translation was corrupted, with none of the savings arriving in my bank account.

-

MCN? My impression about them getting deleted is that it's some sort of unrelated thing, like business decisions or people-related stuff. I got that impression from when Kai spoke to the bloody producer and they seemed to talk about why it happened, but then Kai didn't say publicly, so that says to me it's a reason that he was able to understand (either because he was told and understood it, or wasn't told but was given an explanation that made sense, like it being a personal thing etc).

-

I'd suggest for the presentation that you mention the price of the Sony first. In each conversation you only get to set the "anchor" once, and it's remarkably persistent: https://en.wikipedia.org/wiki/Anchoring_effect In the recent trend of cinematographers asking their partners how much they think each bit of their kit costs, the pattern I saw was that the partners tended to overestimate the value of smaller items because they were so used to hearing about the huge prices of cinema camera bodies and cine lenses. Yes, it's all relative. Every now and then, when I think about how much money I have spent on cameras and lenses, I remember that it's common for people to buy a V8 car or a motorcycle, or spend $100 a week on alcohol or cigarettes, so the fact that I spend less than that on a hobby that is family friendly and doesn't risk my health puts things into perspective. My impression was that it's more about who you know than how much money you have. With all the crap that's being pumped out of Hollywood, it's not like there's no funding available, and even if you can self-fund a film, your money won't get you a distribution deal etc. Maybe these days, if you can make something, put it on streaming and promote it, you can get people to watch it, but you're less likely to make money or get lots of views than if you knew people and someone else paid for promotion. And if you just wanted lots of eyeballs, surely you'd be better off making lots of content and gradually building an audience?