ac6000cw

-

Posts: 689

Posts posted by ac6000cw

-

54 minutes ago, FHDcrew said:

Decided to get the best of both worlds and splurge for a used Panasonic G9II. A cheap gh7. Got the gold build quality and ergonomics and decent high ISO performance and great IBIS etc etc etc. Just makes so much sense for me as an a cam.

That sounds like a good decision to me 🙂

I own the original G9 and if the ergonomics worked for me I'd almost certainly own a G9 ii - but they don't... (same with the S5/S5 ii and a few other cameras I've considered). The modern trend for relatively thick, squared-off bodies means they don't fit nicely in my right hand, so I can't easily reach the front and top buttons without having to change my grip while recording hand-held.

-

13 hours ago, John Matthews said:

This logic rings true for so many cases. "With just a little bit more you get" will make you end up at 2k. In this case (S5 vs. S9), I think some people would pay more for the S5 over the S9 (and its PDAF) to not have the limitations of continuous lighting only and no indoor shots at high SS of 1/100 (no banding in PAL regions)... never mind the weather-sealing, mic port, better IQ (in 4k, especially with RAW) and EVF. Doing it all again on a lower budget, I'd probably get the S5D + 18-40 for 799 euros (new with 5-year warranty). After, I'd get some awesome Konica lenses.

Minor point - the S9 *does* have a 3.5mm mic input jack (but no headphone jack).

-

3 hours ago, FHDcrew said:

Question for you guys. OM1 with 12-40 IBIS performance vs Panasonic S9 with e stabilization on high. What is better?

It's hard to say which one is better - they are both excellent but behave differently. I think the OM1 is better at working out what you are doing, e.g. whether you are panning (when it lets the stabilization 'flow') or stationary (when it tries to hold it static). The amount of crop the OM1 adds in e-stabilization is less than the S9 adds in 'high' e-stabilization mode, but the S9 probably degrades the image less in that situation.

Really, the big difference between the two cameras (ignoring the lack of EVF and mechanical shutter plus the limited physical controls on the S9) is that the OM1 is maybe 80% stills and 20% video orientated, whereas the S9 is more like 60% video and 40% stills. The OM1 is very well built, feels great in the hand, is fully weather-sealed etc., but you'll curse its video limitations at some point. For example, to get the sharpest video you need to use 10-bit mode, but that is *only* available as 4:2:0 HEVC in either HLG or OMLog400, and you can't adjust anything in those modes. The 8-bit modes are basically the same as the E-M1 iii, but the output looks a bit cleaner. There is no way to save custom sets of video settings - the custom sets only work for stills, but at least most settings are kept separate between stills and video modes, including button and control wheel customizations.

-

On 12/24/2025 at 12:27 AM, FHDcrew said:

Has anyone had success with the Viltrox EFM2 and an Olympus PDAF body in video?

I may still order the EM1X. I want to get the sigma 18-35 and speedbooster. But if AF doesn’t work I’ll need to get the Olympus 12-40mm 2.8 instead.

I currently own an E-M1 iii and OM-1 (mk1). I'd just get a used OM-1 and the 12-40mm F2.8. It's just a great combo for handheld video. If you want even better stabilization, use the 12-100mm F4 instead as that supports Sync-IS (Oly/OM equivalent of Dual-IS), but it has the downside of extra size and weight.

Having recently acquired a Pana S9 I still think Oly/OM has the best stabilization - it's almost uncannily good sometimes - but the S9 runs it pretty close most of the time. Main difference for me is that the S9 needs more decisions about which stabilization modes to use in a particular situation, whereas with the OM-1 + 12-40mm I usually enable sensor + e-stabilization (M-IS 1) to minimise corner-warping and set the stabilization level to +1 and let it work out the rest for itself. With the 12-100mm the Sync-IS means sensor-shift only stabilization (M-IS 2) is much more usable at the wide end and stabilization level 0 is usually enough (which is more flowing/less sticky).

-

3 hours ago, ND64 said:

Have they explained somewhere how they get this ranking? If one entity rents the same 24-70mm twice in a month, and it counts as two, the whole thing doesn't show any meaningful information.

Follow the link at the top of the first post.

-

The Optyczne.pl 'movie mode test' review is here - https://www.optyczne.pl/110.1-Inne_testy-Sony_A7_V_-_test_trybu_filmowego_Wstęp.html

-

2 hours ago, Andrew Reid said:

Nothing to stop them doing an FX2 as well.

They had an FX2-like body design (including a flip-up, multi-angle EVF) 9 years ago in the form of the GX8 - https://www.dpreview.com/reviews/panasonic-lumix-dmc-gx8-review/3

-

6 hours ago, maxJ4380 said:

I'll get a second battery, probably have a look at a usb charger, if I can find such a beast

'Slow' USB chargers definitely exist (I use them myself) e.g. https://www.amazon.co.uk/JJC-Battery-Charger-Olympus-Cameras/dp/B08DR6BY2Z and https://www.amazon.co.uk/Ayex-Charger-Olympus-BLH-1-Batteries/dp/B07BSWH8QN

-

8 hours ago, maxJ4380 said:

One thing I will have to do is buy an extra battery as it's fairly easy to flatten a battery in a couple of hours or less.

My experience with E-M1 iii is that the battery life is pretty good (certainly compared to some Panasonic M43 cameras I've owned), but I would always take a spare battery with me if I was planning to shoot a reasonable amount of video.

(That's a nicely kept red Citroën Ami on the left of the 2nd photo - brings back memories of the later Citroën Visa that my wife owned years ago, powered by a 650cc air-cooled 2-cylinder engine. At only 35bhp it was rather underpowered, but that made it great fun to drive - just to make reasonable progress you had to thrash it hard, and overtaking needed serious forward planning 😀)

-

12 hours ago, Tulpa said:

Does anyone have anything to say about the Fuji XT cameras?

I've never owned a Fuji camera myself, but one major reason I haven't is that many of their cameras don't support 'plug-in power' for external mics, which some camera-mount mics need (or can detect to perform auto mic on/off). You need to check the user manuals carefully if that's important to you. The X-M5 does appear to support it (but e.g. the X-T50 doesn't), so maybe future cameras will as well.

-

5 hours ago, FHDcrew said:

So was using the APSC crop mode; honestly this is a great faux-APSC camera, image quality still phenomenal and noise performance isn't bad.

5 hours ago, FHDcrew said:

It's nice too that I can do 48p or 60p without a FOV increase.

I agree completely.

5 hours ago, FHDcrew said:

The cage and hand-grip definitely helps, and I don't find the camera to be uncomfortable; the Sigma 18-35 is definitely front heavy, but the setup is still a lot more comfortable than my old Z6 with the Atomos Ninja V bolted on. I will say over time the smallrig handle seems to come slightly loose so i have to tighten it every now and then, but when not shooting I also tend to hold the camera in one hand by the grip.

With smaller/lighter lenses like the Sigma 18-50 f2.8 APS-C I find the S9 well balanced and easy to hold, with left hand under the lens and right hand around the body. I have (but don't use very often) the Sirui grip, which is deeper than the Smallrig one and has a high-grip surface on the 'bump' - this is someone else's comparison photo of (top to bottom) the Sirui, JJC and Smallrig grips

Even with a (small/light/decent sounding) Sennheiser MKE200 mic mounted on the cold shoe the combo still feels relatively small and light, which is exactly what I wanted when I bought the S9.

-

2 hours ago, MrSMW said:

Have you considered the Sigma 18-50 f2.8 even though it is a crop lens, or do you need the full frame?

it’s an exceptional tiny zoom with a 27-75 FF equivalent range and I’m still considering whether when I pick up my second S1Rii if I don’t sell the S9, would this work for me in any viable way, or would I just be trying to keep hold of it?!

Having bought the Sigma for my S9 (largely because of your enthusiasm for it 🙂) I have to 100% agree - it's a perfect companion to the S9 in size and weight, with nice smooth zoom and focus rings.

As I normally shoot video in 4k50p which forces an APS-C crop anyway, it being an APS-C lens is actually a plus for me as it forces APS-C for everything so I don't have to deal with changes in viewing angle between stills and video. The 12MP APS-C stills are OK for my purposes, and I have the compact FF 18-40 f4.5-6.3 if I really feel the need for 24MP stills. So I tend to regard my S9 as an APS-C camera with a bonus FF stills mode 🙂.
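For anyone wondering where the '27-75 FF equivalent' figure for the Sigma 18-50 comes from, it's just the focal length multiplied by the crop factor (roughly 1.5 for APS-C, 2 for M43), and it only describes angle of view. A minimal Python sketch, assuming those rounded crop factors (real sensors vary slightly) and a helper name of my own:

```python
def ff_equivalent(focal_mm, crop_factor):
    """Full-frame-equivalent focal length (angle of view only)."""
    return focal_mm * crop_factor

# Rounded crop factors (assumption; exact values vary a little by sensor)
APSC = 1.5
M43 = 2.0

examples = [
    ("Sigma 18-50 on APS-C", 18, 50, APSC),
    ("Oly 12-100 on M43", 12, 100, M43),
]
for name, wide, tele, crop in examples:
    # :g drops the trailing ".0" from whole-number results
    print(f"{name}: {ff_equivalent(wide, crop):g}-"
          f"{ff_equivalent(tele, crop):g}mm FF equivalent")
```

The same sum explains the 'extra reach' of M43 for wildlife: a 100mm lens frames like a 200mm lens on full frame.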

13 hours ago, FHDcrew said:

My weird plan was to rock the sigma 18-35…i already own it and it covers 24-35mm roughly on full frame. But the darn viltrox EF to l mount adapter, while great, forces the camera into an apsc crop no matter what I do.

The adaptor is only doing what it's supposed to do with an APS-C lens on the front, same as using a native L-mount APS-C lens (e.g. the Sigma 18-50 f2.8).

-

On 7/9/2025 at 11:08 AM, ita149 said:

I read somewhere something interesting about Real Time Lut, using a burned in lut with a good amount of contrast helps to recover fine details. When recording V-log without Real Time Lut and grading in post, some fine details can't be recovered. I can confirm it's true. So using Real Time Lut V-log on the S1RII in 8,1K Open Gate is the best for details rendering.

I suspect that's because the in-camera LUT is processing the video before it's compressed, whereas in post you are working on video after compression (which is likely to have reduced or smoothed over some detail, depending on picture content and bitrates).

-

On 6/26/2025 at 11:26 AM, Fatalfury said:

Simply fantastic images and I like the dark look. Though I'd change the 14-140 to M.Zuiko 12-100 f4 for that constant aperture, while losing tele but gaining on a wide end. Feels like 100mm on a M43 body should be enough though.

I own and use both. The Oly 12-100mm F4 is a great lens, but it's much bigger and heavier than the Pana 14-140mm (560g versus 265g) - both on an OM-1:

On an E-M1 ii/iii or OM-1 the 12-100mm supports Sync-IS which gives sublime video IS performance, but even with the relatively light (for that kind of camera) OM-1, the combo is 1.2 kg and somewhat front-heavy if you're using it handheld. A GH7 + 12-100mm would be nearly 1.4 kg.

As a 'travel' lens, IMHO the combination of low weight and focal length range makes the 14-140mm almost perfect (other than in really low light, of course).

-

8 hours ago, eatstoomuchjam said:

That or the G9 II - it seems to be not much bigger (a little taller and the hand grip + EVF protrude just a little more) and it barely costs more used.

The G9 ii is much larger and over 50% heavier than an OM-5:

The obvious M43 alternative (larger and heavier, but not as bad as the G9 ii) is a used OM-1 - almost a steal at its current sub-£1000 used prices in the UK for what you get in a rugged, weather-sealed, reasonably compact body.

And of course, as MrSMW said, there's the S9 at sub-£1000 new, but that isn't weather-sealed and doesn't have an EVF.

It's the lack of a compact M43 body with PDAF, 4k50/4k60 and excellent video IBIS that drove me to buy an S9 - my first ever non-M43 MILC (after 13 years of buying M43 cameras and owning a sizeable M43 lens collection).

That said, the E-M5iii/OM-5/OM-5ii series aren't really aimed at the video-user market - they are primarily lightweight, small, weather-sealed stills-orientated cameras with a bit of retro style (which is an OM-System self-confessed niche, really).

-

18 hours ago, Sebastien said:

But how do you achieve that, provided it is a 4/3 lens without an aperture ring (like my olympus 17 & 45 mm 1.8 and the 12-32 kit lens)?

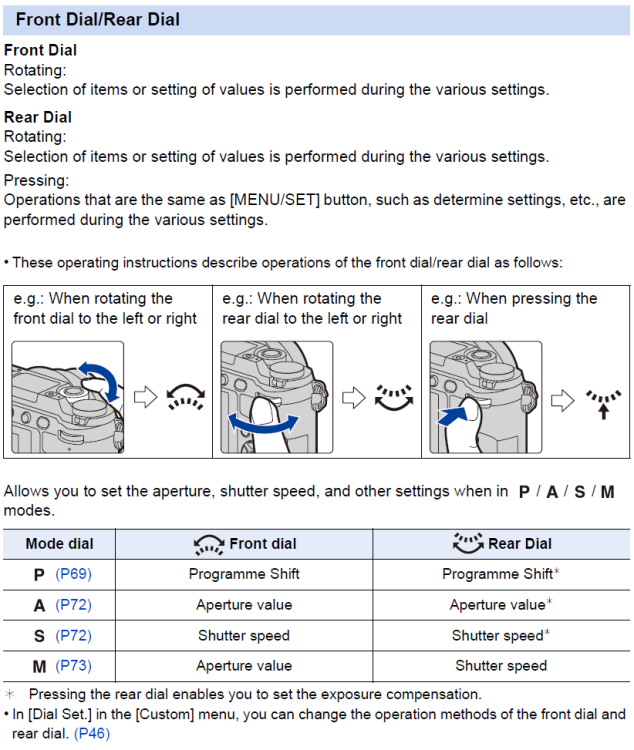

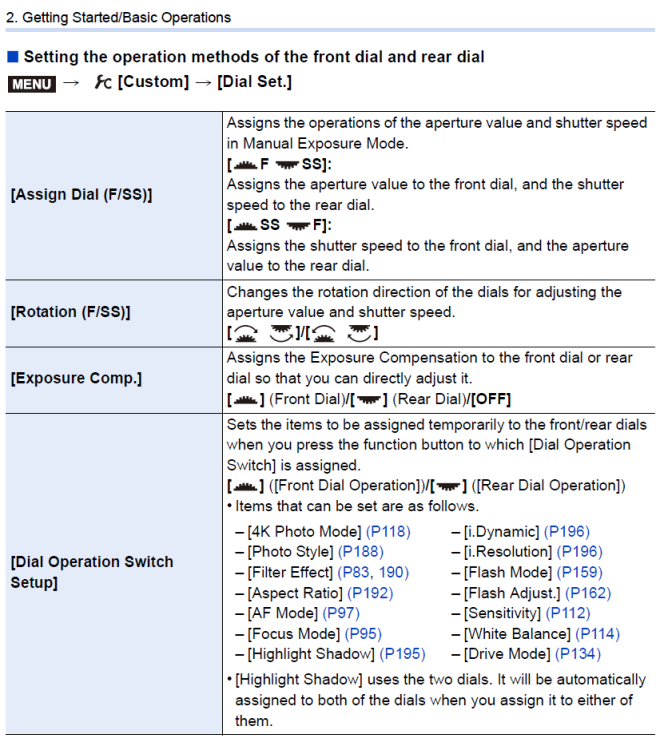

As 'newfoundmass' suggested, use the touch screen, or (probably, for movie mode, as the manual doesn't make it clear if it applies to movies as well as stills) you can use/customise the functions of the front and rear dials:

-

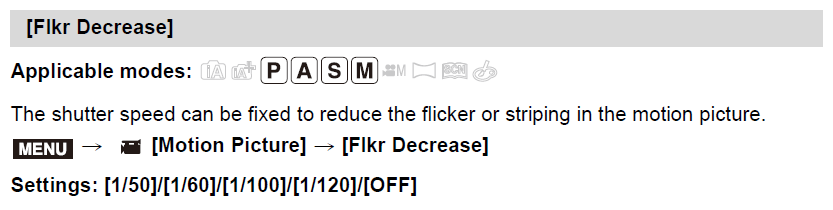

Something that's worth noting/remembering is that the 'Flkr Decrease' setting can be used to fix the shutter speed to 1/50, 1/60, 1/100 or 1/120 (180-degree shutter for 24/25, 30, 50 or 60 fps video) when you press the video record button in stills/photo mode (which forces the camera into 'P' mode, irrespective of what the stills/photo setting is). This workaround gives you shutter-priority video with auto aperture and, if you want, auto-ISO - it's 'photo' P mode with fixed shutter speed, basically.
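The shutter speeds in that list follow the usual 180-degree rule: exposure time = (shutter angle / 360) / frame rate. The flicker connection is that lights on 50 Hz mains flicker at 100 Hz (120 Hz on 60 Hz mains), so an exposure lasting a whole number of flicker cycles avoids banding. A rough Python sketch of both, using helper names of my own (nothing here comes from the camera manual):

```python
from fractions import Fraction

def shutter_time(fps, angle_deg=180):
    """Exposure time (seconds) for a frame rate and shutter angle."""
    # A 360-degree shutter would expose for the whole frame interval (1/fps)
    return Fraction(angle_deg, 360) / fps

def shutter_angle(exposure, fps):
    """Shutter angle (degrees) implied by an exposure time at a frame rate."""
    return exposure * fps * 360

def is_flicker_safe(exposure, mains_hz):
    """True if the exposure covers a whole number of light-flicker cycles.

    Lights on AC mains flicker at twice the mains frequency
    (100 Hz on 50 Hz mains, 120 Hz on 60 Hz mains).
    """
    cycles = exposure * 2 * mains_hz
    return cycles.denominator == 1

for fps in (25, 30, 50, 60):
    t = shutter_time(fps)
    inverse = 1 / t  # e.g. Fraction(50, 1) for 1/50 s
    print(f"{fps} fps: 1/{inverse!s} s, "
          f"safe on 50 Hz: {is_flicker_safe(t, 50)}, "
          f"safe on 60 Hz: {is_flicker_safe(t, 60)}")
```

At 24 fps the nearest option, 1/50 s, works out at 172.8 degrees (`shutter_angle(Fraction(1, 50), 24)`), which is close to, but not exactly, 180.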

I find this really useful for 'instant hybrid' shooting - press the shutter button to take stills (in whatever mode you have set) or press the video record button to shoot shutter-priority video - no need to move the mode dial.

From the manual:

-

2 hours ago, MrSMW said:

What is this camera?!

Never seen it before…

Have a read of https://www.dpreview.com/articles/0518873678/hasselblad-lunar-an-act-of-lunacy

It looks like it's a (rare, only 100 made) Hasselblad Lusso, based on the Sony A7R.

-

35 minutes ago, MrSMW said:

The Euro deal is significantly better!

€1299 is 'only' about £1100.

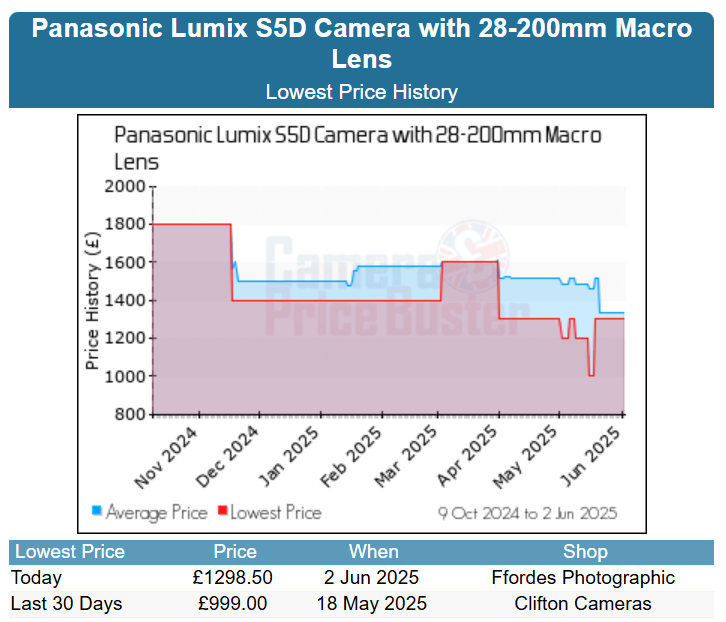

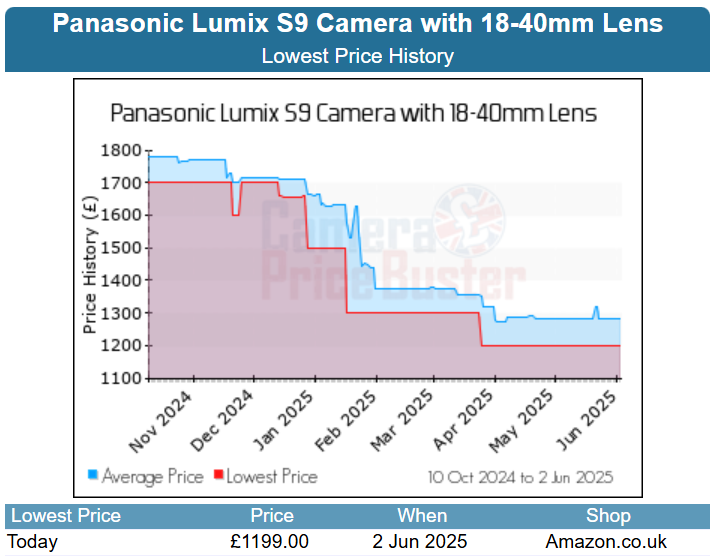

Good point 🙂 (it did go lower than £1299 recently from some sellers - see the red line below).

...and for the S9 + 18-40 kit over the same period - https://www.camerapricebuster.co.uk/Panasonic/Panasonic-Lumix-S-Cameras/Panasonic-Lumix-S9-Camera-with-18-40mm-Lens:

-

15 hours ago, John Matthews said:

I'm seeing deals in Germany and France for a S5D + 28-200 for 1299 euros- that's a great deal!

It's similar in the UK (£1299).

Personally I think Panasonic should offer the S9 + 28-200 as a reasonably compact FF travel cam kit (e.g. like Sony have the APS-C A6700 + 18-135mm kit).

-

9 hours ago, Andrew Reid said:

The best one out of all those is the 28-200mm.

It's been on my wish list for a while...

(I already own the 18-40 and 20-60, and I'm more likely to buy the more-compact APS-C Sigma 18-50mm F2.8 than the Pana 24-60).

-

Re. S9 lens options (and being a 'zoom' rather than 'prime' person), now the 24-60 F2.8 is out, the obvious hole in Panasonic's full-frame small/light zoom range is a longer-focal-length companion to the 18-40 collapsible zoom, e.g. a compact 35-90 (like the 12-32 plus 35-100 pair they introduced to go with the smaller M43 cameras years ago).

The current 'reasonably compact' FF zoom range mounted on the S9 (from the left, 18-40, 20-60, 28-200 and 24-60):

-

2 hours ago, Video Hummus said:

It involves their partner and I'm not talking about Leica. RED going down market and Sony's success with VENICE has stirred up some unlikely partnership collabs.

Arri?

-

I don't have a definitive answer, so this is an 'engineering opinion':

For UHD (3840 x 2160) the G7 crops a slightly larger area out of the centre of the sensor, around 4100 pixels horizontally. Someone on Reddit estimated it to be 4130 x 2323 - see https://www.reddit.com/r/PanasonicG7/comments/3zfu0f/comment/cym5c9j/ - which is very close to 4096 x 2320 (and both dimensions of that are divisible by 16).

If you take 4096 x 2320 and multiply each dimension by 15/16 you get 3840 x 2175. Because that scaling factor is so close to 1, I suspect it's most likely using 'nearest neighbour' re-sampling (line-skipping in camera speak) to generate UHD from it.

So my educated guess is that it's reading out a 4096 x 2320 region of the sensor, de-bayering it and then using line-skipping to down-sample it to 3840 x 2160.
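To make the guess concrete, here's a rough Python sketch of what nearest-neighbour down-sampling from the assumed 4096-pixel-wide readout to 3840 would do. The 4096 x 2320 region is the estimate above, not a confirmed G7 figure, and the pixel-centre mapping is just one common nearest-neighbour convention (the camera may well use another); with this one it drops 256 of the 4096 columns, one in every 16. How the camera gets from 2175 rows to 2160 isn't known, so the sketch only reports the leftover rows.

```python
from fractions import Fraction

# Assumed readout region (the educated guess above, not a confirmed G7 spec)
READOUT_W, READOUT_H = 4096, 2320
SCALE = Fraction(15, 16)
UHD_W, UHD_H = 3840, 2160

def nearest_neighbour_indices(src_len, dst_len):
    """Source column/row picked for each output pixel by nearest-neighbour.

    Output pixel i is centred at (i + 0.5) * src_len / dst_len in source
    coordinates; flooring that gives the source pixel it lands in.
    Integer arithmetic avoids floating-point rounding at the boundaries.
    """
    return [((2 * i + 1) * src_len) // (2 * dst_len) for i in range(dst_len)]

scaled_w, scaled_h = READOUT_W * SCALE, READOUT_H * SCALE
print(f"{READOUT_W} x {READOUT_H} x 15/16 -> {scaled_w!s} x {scaled_h!s}")

cols = nearest_neighbour_indices(READOUT_W, UHD_W)
skipped = sorted(set(range(READOUT_W)) - set(cols))
print(f"columns kept: {len(cols)}, skipped: {len(skipped)}")
print("first few skipped columns:", skipped[:4])

# How the extra rows are dropped isn't known; just report the excess
print(f"rows left over after scaling: {scaled_h - UHD_H!s}")
```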

-

The Olympus OM-1 goes full frame OM

In: Cameras

I agree with all of that.

(My OM-1 also gets used for wildlife video, where the extra reach due to the small sensor is very useful).