Everything posted by tupp

-

To me, the "thickness" of a film image is revealed by rich, complex color. That color is not necessarily saturated or dark. The "thickness" of film emulsion has nothing to do with lighting or with what is shown in the image. Certainly, for the thickness to be revealed, there has to be some object in the frame that reflects a complex color. An image of a white wall will not fully utilize the color depth of an imaging system. However, a small, single-color swatch within a mostly neutral image can certainly demonstrate "thickness."

I don't think that's how it works. Of course, there is also reversal film.

Agreed. Digital tends to make skin tones mushy (plastic?) compared to film. Look at the complex skin tones in some of these Kodachrome images. There is a lot going on in those skin tones that would be lost with most digital cameras. In addition, observe the richness and complexity of the colors on the inanimate objects.

Yes. Please note that most of the images in the above linked gallery are brightly lit and/or shot in broad daylight.

Agreed. I think that the quality that you seek is inherent in film emulsion, and that quality exists regardless of lighting and regardless of the overall brightness/darkness of an image. Because of the extensive color depth and the distinctive color rendering of normal film emulsion, variations in tone are often more apparent with film. I'm not sure if that should be considered more of a gradual transition in chroma/luma or just higher "color resolution."

Those images are nice, but they seem thinner than the Kodachrome images in the linked gallery above.

The image is nicely crafted, but I read that it was shot on Fuji Eterna stock. Nevertheless, to me its colors look "thinner" than those shown in this Kodachrome gallery.

Great site! Thanks for the link!

I disagree. I think that the "thickness" of film is inherent in how emulsion renders color. The cross-lighting in that "Grandmaster" image seems hard and contrasty to me (which can reveal texture more readily than a softer source). I don't see many smooth gradations/chiaroscuro. Evidently, OP seeks the "thickness" that is inherent in film emulsion, regardless of lighting and contrast.

Nice shots! Images from CCD cameras such as the Digital Bolex generally seem to have "thicker" color than their CMOS counterparts. However, even CCD cameras don't seem to have the same level of thickness as many film emulsions.

That certainly is a pretty image. Keep in mind that higher resolution begets more color depth in an image. Furthermore, if your image was shot with the Blackmagic URSA Mini Pro 12K, that sensor is supposedly RGBW (with perhaps a little too much "W"), which probably yields nicer colors.

-

Of course, a lot of home movies weren't properly exposed and showed scenes with a huge contrast range that the emulsion couldn't handle. However, I did find some examples that have decent exposure and aren't too faded. Here's one from the 1940s showing a fairly deep blue, red and yellow, and then a rich color on a car. Thick greens here, and later a brief moment showing solid reds, and some rich cyan and indigo. Unfortunately, someone added a fake gate with a big hair. A lot of contrast in these shots, but the substantial warm greens and warm skin and wood shine, plus one of the later shots with a better "white balance" shows a nice, complex blue on the eldest child's clothes.

Here is a musical gallery of Kodachrome stills. Much less fading here. I'd like to see these colors duplicated in digital imaging. Please note that Paul Simon's "Kodachrome" lyrics don't exactly refer to the emulsion!

OP's original question concerns getting a certain color richness that is inherent in most film stocks but absent from most digital systems. It doesn't involve lighting, per se, although there has to be enough light to get a good exposure and there can't be too much contrast in the scene.

We have no idea whether OP's simulated images are close to how they should actually appear, because 80%-90% of the pixels in those images fall outside of the values dictated by the simulated bit depth. No conclusions can be drawn from those images. By the way, I agree that banding is not the most crucial consideration here -- banding is just a posterization artifact to which lower bit depths are more susceptible. I maintain that color depth is the primary element of the film "thickness" in question.

-

Well, when I listed the film "thickness" property of "lower saturation in the brighter areas," naturally, that means that the lower values have more saturation. I think that one of those linked articles mentioned that film emulsions generally have more saturation at and below middle values. Thanks for posting the comparisons!

Then what explains the strong "thickness" of terribly framed and badly lit home movies that were shot on Kodachrome 64?

Unfortunately, @kye's images are significantly flawed, and they do not actually simulate the claimed bit depths. No conclusions can be drawn from them. By the way, bit depth is not color depth.

-

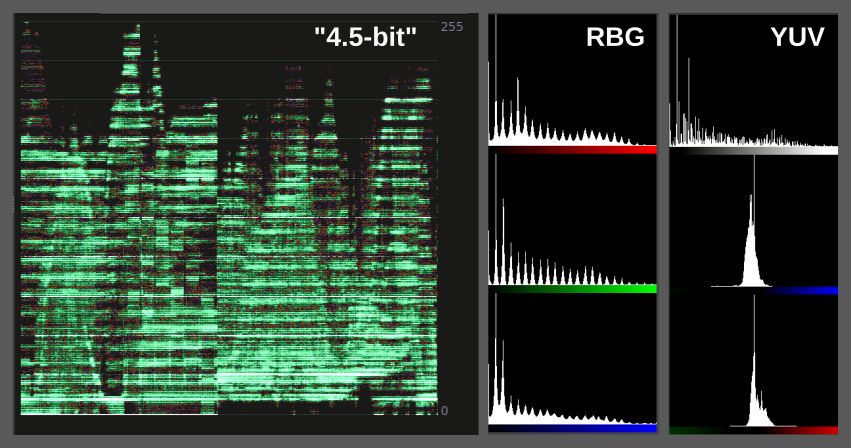

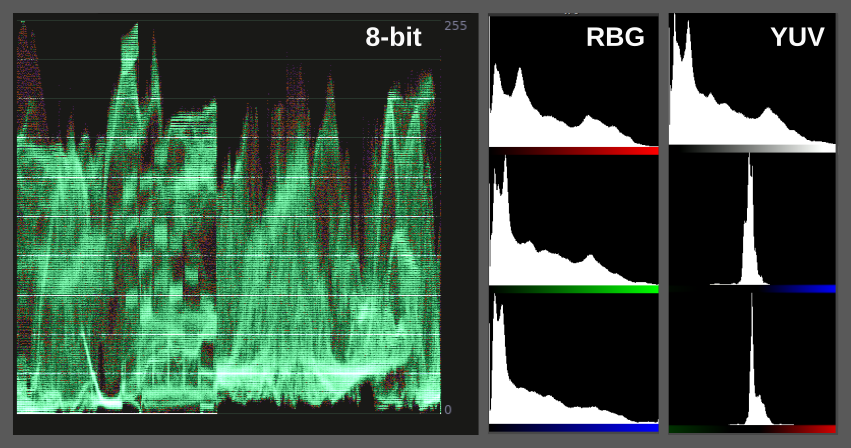

Thank you for posting these Truecolor tests, but these images are not relevant to the fact that the "4.5-bit" image that you posted earlier is flawed and is in no way conclusive proof that "4.5-bit" images can closely approximate 8-bit images.

On the other hand, examining your 2-bit Truecolor test indicates that there is a problem in your rounding code and/or your imaging pipeline. 2-bit RGB can produce 64 colors (4 levels per channel, 4 x 4 x 4 = 64), including black, white and two evenly spaced neutral grays. There seem to be significantly fewer than 64 colors. Furthermore, some of the adjacent color patches blend with each other in a somewhat irregular way, instead of forming the orderly, clearly defined and well-separated pattern of colors that a 2-bit RGB system should produce with that test chart. In addition, only one neutral gray shade is rendered, when there should be two different grays.

The histogram of the 2-bit Truecolor image shows three "spikes" when there should be four with a 2-bit image. Your 2-bit simulation is actually a 1.5-bit simulation (with other problems), so your "rounding" code could have a bug.

Well, something is going wrong, and I am not sure if it's compression. I think that PNG images can be exported without compression, so it might be good to post uncompressed PNGs from now on, to eliminate that variable. Another thing that would help is if you would stick to the bit depths in question -- 8-bit and "4.5-bit." All of this bouncing around to various bit depths just further complicates the comparisons.
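To see what a clean 2-bit result should contain, here is a small sketch in Python. It assumes the 2-bit levels are evenly spaced across 0-255 (0, 85, 170, 255) and uses a hypothetical `quantize` helper. It is not the rounding code used in the test.

```python
# Sketch: enumerate every color a clean 2-bit-per-channel RGB image can contain.
# Assumption: levels are evenly spaced over 0-255 (step = 255 / (levels - 1)).

def quantize(value, bits):
    """Round an 8-bit value (0-255) to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 255 / (levels - 1)
    return round(round(value / step) * step)

def palette(bits):
    """Per-channel levels and all RGB colors, expressed as 8-bit code values."""
    channel = sorted({quantize(v, bits) for v in range(256)})
    colors = [(r, g, b) for r in channel for g in channel for b in channel]
    return channel, colors

levels, colors = palette(2)
# Neutral grays other than pure black and pure white.
grays = [c for c in colors
         if c[0] == c[1] == c[2] and c not in ((0, 0, 0), (255, 255, 255))]

print(levels)       # [0, 85, 170, 255] -> four histogram spikes per channel
print(len(colors))  # 64
print(grays)        # [(85, 85, 85), (170, 170, 170)] -> two neutral grays
```

A chart rounded this way should show exactly four spikes per channel and two distinct grays. Three spikes means only three levels are in use, which is roughly log2(3) ≈ 1.6 bits.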

-

Not all additive color mixing works the same. Likewise, not all subtractive color mixing works the same. However, you might be correct generally in regards to film vs. digital. One has to allow for the boosted levels in each emulsion layer that counter the subtractive effects.

I don't think the scopes are mistaken, but your single-trace histogram makes it difficult to discern what exactly is happening (although close examination of your histogram reveals a lot of pixels where they shouldn't be). It's best to use a histogram with a column for every value increment. I estimate that around 80%-90% of the pixels fall in between the proper bit-depth increments -- the problem is too big to be "ringing artifacts." There is a significant problem... some uncontrolled variable(s), and the images do not simulate the reduced bit depths. No conclusions can be drawn until the problem is fixed.
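The estimate of pixels "in between the increments" can be checked numerically rather than by eye. Below is a rough sketch in Python. It assumes evenly spaced levels from 0 to 255. It also assumes (my choice, not necessarily what was used in the test) that the fractional 2^4.5 ≈ 22.6 levels is truncated to 22 whole levels. The function names are hypothetical.

```python
# Sketch: what fraction of pixel values fall between the levels of a lower bit depth?
# Assumptions (hypothetical, not necessarily how the test images were made):
#   - levels are evenly spaced from 0 to 255
#   - a fractional level count such as 2**4.5 ≈ 22.6 is truncated to 22 whole levels

def allowed_levels(bits):
    """8-bit code values that a 'bits'-per-channel image may legitimately use."""
    levels = int(2 ** bits)
    return {round(k * 255 / (levels - 1)) for k in range(levels)}

def off_grid_fraction(pixels, bits):
    """Fraction of pixel values that are NOT on one of the allowed levels."""
    allowed = allowed_levels(bits)
    off = sum(1 for p in pixels if p not in allowed)
    return off / len(pixels)

# A full 8-bit ramp uses every code value, so most of it sits off the "4.5-bit" grid.
ramp = list(range(256))
print(len(allowed_levels(4.5)))      # 22 levels
print(off_grid_fraction(ramp, 4.5))  # 234 of the 256 values are off-grid
```

A properly rounded "4.5-bit" image run through `off_grid_fraction` should return 0.0. Anything substantially above that means the pixels were altered after rounding.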

-

From the first linked article: So, the first linked article echoed what I said (except I left out that the print itself is also "subtractive" when projected). Is that excerpt from the article (and what I said) what you mean when you refer to "additive" and "subtractive" color?

Also from the first linked article: I'm not so sure about this. I think that this notion could contribute to the film look, but a lot of other things go into that look, such as progressive scan, no rolling shutter, grain actually forming the image, color depth, compressed highlight roll-off (as you mentioned), less saturated brighter tones (which I think is mentioned in the second article that you linked), etc. Of all of the elements that give film its "thickness," I would say that color depth, highlight compression, and the lower saturation in the brighter areas would be the most significant.

It might be possible to suggest the subtractive nature of a film print merely by separating the color channels and introducing a touch of subtractive overlay on the two appropriate color channels. A plug-in could be made that does this automatically. However, I don't know if such an effort would make a substantial difference.

Thank you for posting the images without added grain/noise/dithering. You only had to post the 8-bit image and the "4.5-bit" image. Unfortunately, most of the pixel values of the "4.5-bit" image still fall in between the 22.6 value increments prescribed by "4.5 bits." So, something is wrong somewhere in your imaging pipeline.

Your NLE's histogram is a single trace, rather than 256 separate columns. Is there a histogram that shows those 256 columns instead of a single trace? It's important, because your NLE histograms are showing 22 spikes with a substantial base that is difficult to discern with that single trace. Something might be going wrong during the "rounding" or at the "timeline" phase.
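If the NLE can't show one column per code value, such a histogram is easy to compute yourself. Here is a minimal sketch in plain Python. It assumes one channel's pixels are available as a flat list of 8-bit integers. The helper names and test data are hypothetical.

```python
from collections import Counter

def value_histogram(channel):
    """256-entry list: how many pixels sit at each 8-bit code value (0-255)."""
    counts = Counter(channel)
    return [counts.get(v, 0) for v in range(256)]

def occupied_columns(channel):
    """Code values that actually contain pixels, i.e. the visible 'vertical lines'."""
    return [v for v, n in enumerate(value_histogram(channel)) if n > 0]

# Hypothetical data: a clean 2-bit channel, then the same channel with a few
# pixels nudged just off the 2-bit levels (as stray processing might do).
clean = [0, 85, 170, 255] * 100
dirty = clean + [84, 86, 169, 171]

print(occupied_columns(clean))       # four isolated columns
print(len(occupied_columns(dirty)))  # extra columns appear beside the spikes
```

Printing or plotting the 256-entry list directly gives the one-column-per-value view. Any nonzero column that is not one of the expected levels indicates pixels between the increments.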

-

I am not sure what you mean. Are you referring to the concept of color emulsion layers subtracting from each other during the printing stage while a digital monitor "adds" adjacent pixels? Keep in mind that there is nothing inherently "subtractive" about "subtractive colors." Likewise, there is nothing inherently "additive" about "additive colors." Please explain what you mean.

Yes, but the histograms are not drawing the expected lines for the "4.5-bit" image nor for the "5-bit" image. Those images are full 8-bit images. On the other hand, the "2.5-bit" image shows the histogram lines as expected. Did you do something different when making the "2.5-bit" image? If the culprit is compression, then why is the "2.5-bit" image showing the histogram lines as expected, while the "4.5-bit" and "5-bit" images do not show the histogram lines? Please just post the 8-bit image and the "4.5-bit" image without the noise/grain/dithering. Thanks!

-

If you are referring to "additive" and "subtractive" colors in the typical imaging sense, I don't think that it applies here.

There are many different types of dithering. "Noise" dithering (or "random" dithering) is probably the worst type. One would think that a grain overlay that yields dithering would be random, but I am not sure that is what your grain filter is actually doing. Regardless, introducing the variable of grain/dithering is unnecessary for the comparison, and it is likely what skewed the results.

Small film formats have a lot of resolution with normal stocks and normal processing. If you reduce the resolution, you reduce the color depth, so that is probably not wise to do.

Too bad there's no mark-up/mark-down for <code> on this web forum.

The noise/grain/dithering that was introduced is likely what caused the problem -- not the rounding code. Also, I think that the images went through a YUV 4:2:0 pipeline at least once. I posted the histograms and waveforms that clearly show that the "4.5-bit" image is mostly an 8-bit image, but you can see for yourself. Just take your "4.5-bit" image and put it in your NLE and look at the histogram. Notice that there are spikes with bases that merge, instead of just vertical lines. That means that a vast majority of the image's pixels fall in between the 22 "rounded 4.5-bit" increments.

Yes. The histogram should show equally spaced vertical lines that represent the increments of the lower bit depth (2.5 bits) contained within a larger bit depth (8 bits). The vertical lines in the waveforms merely show the locations where each scan-line trace goes abruptly up and down to delineate a pool of a single color. More gradual and more varied scan-line slopes appear with images of a higher bit depth that do not contain large pools of a single color.

I checked the histogram of the "2.5-bit" image without the added noise/grain/dithering, and it shows the vertical histogram lines as expected. So, the grain/dithering is the likely culprit. An unnecessary element (noise/grain/dithering) was added to the "4.5-bit" image that made it a dirty 8-bit image, so we can't really conclude anything from the comparison. Post the "4.5-bit" image without grain/dithering, and we might get a good indication of how "4.5 bits" actually appears. Using extremes to compare dramatically different outcomes is a good testing method, but you have to control your variables and not introduce any elements that skew the results. Please post the "4.5-bit" image without any added artificial elements. Thanks!
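To show why grain added after the rounding step produces a "dirty 8-bit image," here is a small simulation. It is only a sketch under stated assumptions. The "grain" is plain additive Gaussian noise, not any particular NLE's grain filter. The "4.5-bit" levels are 22 evenly spaced levels (2^4.5 ≈ 22.6 truncated). The test image is a synthetic ramp.

```python
import random

def allowed_levels(bits):
    """Evenly spaced 8-bit levels; 2**4.5 ≈ 22.6 is truncated to 22 (assumption)."""
    levels = int(2 ** bits)
    return sorted({round(k * 255 / (levels - 1)) for k in range(levels)})

def snap(value, levels):
    """Round an 8-bit value to the nearest allowed level."""
    return min(levels, key=lambda level: abs(level - value))

def add_grain(value, sigma, rng):
    """Hypothetical grain overlay: additive Gaussian noise, rounded and clipped to 0-255."""
    return max(0, min(255, round(value + rng.gauss(0, sigma))))

def off_grid(pixels, levels):
    """Fraction of pixels that do not sit on one of the allowed levels."""
    allowed = set(levels)
    return sum(p not in allowed for p in pixels) / len(pixels)

rng = random.Random(0)                               # fixed seed for repeatability
levels = allowed_levels(4.5)
ramp = [v for v in range(256) for _ in range(10)]    # synthetic 8-bit ramp
rounded = [snap(v, levels) for v in ramp]            # a clean "4.5-bit" image
grainy = [add_grain(v, 4.0, rng) for v in rounded]   # grain added after rounding

print(off_grid(rounded, levels))  # 0.0: every pixel is on a level
print(off_grid(grainy, levels))   # most pixels now fall between the levels
```

Adding noise before the rounding (true dither) would still leave every pixel on one of the 22 levels. Adding grain after the rounding turns the result back into an ordinary 8-bit image.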

-

I think that the "thickness" comes primarily from emulsion's color depth and partially from the highlight compression that you mentioned in another thread, from the forgiving latitude of negative film and from film's texture (grain). Keep in mind that overlaying a "grain" screen on a digital image is not the same as the grain that is integral to forming an image on film emulsion. Grain actually provides the detail and contrast and much of the color depth of a film image. Home movies shot on Super8 film often have "thick" looking images, if they haven't faded.

You didn't create a 5-bit image nor a "4.5-bit" image, nor did you keep all of the shades within 22.6 shade increments ("4.5 bits") of the 255 increments in the final 8-bit image. Here are scopes of both the "4.5-bit" image and the 8-bit image:

If you had actually mapped the 22.6 tones per channel from a "4.5-bit" image onto roughly 22 of the 256 tones per channel of the 8-bit image, then all of the image's pixels would appear inside 22 vertical lines on the RGB histograms (with the other 234 histogram columns showing zero pixels). So, even though the histogram of the "4.5-bit" image shows spikes (compared to that of the 8-bit image), the vast majority of the "4.5-bit" image's pixels fall in between the 22.6 tones that would be inherent in an actual "4.5-bit" image.

To do this comparison properly, one should probably shoot an actual "4.5-bit" image, process it in a "4.5-bit" pipeline and display it on a "4.5-bit" monitor. By the way, there is a perceptible difference between the 8-bit image and the "4.5-bit" image.
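For reference, the 22.6 figure is simply 2 raised to the 4.5 power. The arithmetic behind the "22 vertical lines" below rests on two assumptions about how such a test would be built: the levels are evenly spaced from 0 to 255, and the fractional count is truncated to 22 whole levels.

$$
2^{4.5} = 2^{4}\sqrt{2} \approx 22.6 \text{ levels}, \qquad
\text{step} = \frac{255}{22 - 1} \approx 12.1 \text{ code values}, \qquad
256 - 22 = 234 \text{ empty columns}
$$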

-

What's today's digital version of the Éclair NRP 16mm Film Camera?

tupp replied to John Matthews's topic in Cameras

Most of us who shot film were working with a capture range of 7 1/2 to 8 stops, and that range was for normal stock with normal processing. If one "pulls" the processing (underdevelops) and overexposes the film, a significantly wider range of tones can be captured. Overexposing and underdeveloping also reduces grain and decreases color saturation. This practice was more common in still photography than in filmmaking, because a lot of light was already needed just to properly expose normal film stocks. Conversely, if one "pushes" the processing (overdevelops) while underexposing, a narrower range of tones is captured, and the image has more contrast. Underexposing and overdeveloping also increases grain and boosts color saturation.

Because of film's "highlight compression curve" that you mentioned, one can expose for the shadows and midtones, and use less of the film's 7 1/2 to 8 stop capture range for rendering highlights. In contrast, with digital one usually exposes for the highlights and bright tones, dedicating more stops just to keep the highlights from clipping and looking crappy.

I don't think @kye was referring to reversal film.

The BMPCC was already mentioned in this thread.

No. Because of its tiny size, the BMPCC is more ergonomically versatile than an NPR. For instance, the BMPCC can be rigged to have the same weight and balance as an NPR, plus it can also have a shoulder pad and a monitor -- two things that the NPR didn't/couldn't have. In addition, the BMPCC can be rigged on a gimbal, or with two outboard side handles, or with the aforementioned "Cowboy Studio" shoulder mount. It can also be rigged on a car dashboard. The NPR cannot be rigged in any of these ways.

To exactly which cameras do you refer? I have shot with many of them, including the NPR, and none of the cameras mentioned in this thread are as you describe.

-

I have watched some things captured on 16mm film, and I have shot one or two projects with the Eclair NPR. Additionally, I own an EOSM. The EOSM is comparable to the NPR because some of the ML crop modes for the EOSM allow the use of 16mm and S16 optics, and the ML crop modes enable raw recording at a higher resolution and higher bit depth.

By the way, there have been a few relevant threads on the EOSM. Here is a thread based on an EOSHD article about shooting 5K raw on the EOSM using one of the 16mm crop modes. Here is a thread that suggests the EOSM can make a good Super-8 camera.

Certainly, there are other digital cameras with 16 and S16 crops, and most of them have been mentioned in this thread. The Digital Bolex and the Ikonoskop A-cam dII are probably the closest digital cameras to a 16mm film camera, because not only do they shoot S16 raw/uncompressed with a higher bit depth, but they both utilize a CCD sensor.

Of course, one can rig any mirrorless camera set back and balanced on a weighted shoulder rig, in the same way as you show in the photo of your C100. You could even add a padded "head-pressing plate!" Just build a balanced shoulder rig and keep it built during the entire shoot. I've always wanted to try one of those!

-

The name of this S16 digital camera is the Ikonoskop A-cam dII. Of course, the BMPCC and the BMMCC would also be comparable to the NPR.

Well, since the NPR is a film camera, of course one had to be way more deliberate and prepared compared to today's digital cameras. If you had already loaded a film stock with the appropriate ISO and color temperature, and if you had already taken your light meter readings and set your aperture, then you could start manually focusing and shooting. Like many 16mm and S16 cameras of its size, the NPR could not shoot more than 10 minutes before you had to change magazines. One usually had to check the gate for hairs/debris before removing a magazine after a take. Processing, color timing and printing (or transferring) was a whole other ordeal, much more involved and complex (and more expensive) than color grading digital images.

On the other hand, the NPR did enable more "freedom" relative to its predecessors. The camera was "self-blimped" and could use a crystal-sync motor, so it was much more compact than other cameras that could be used when recording sound. Also, it used coaxial magazines instead of displacement magazines, so its center of gravity never changed, and with the magazines mounted in the rear of the camera body, it balanced better on one's shoulder than previous cameras. The magazines could also be changed instantly, with no threading through the camera body. In the video that you linked, that quick NPR turret-switching trick was impressive, and it had never occurred to me, as I was shooting narrative films with the NPR, mostly using a zoom on the turret's Cameflex mount. The NPR's shutter/film-advance movement was fairly solid for such a small camera, as well.

In regards to the image coming out of a film camera, a lot depends on the film stock used, but the look from a medium-fast color negative 16mm stock is probably comparable to 2K raw on current cameras that can shoot an S16 crop (with S16 lenses). By the way, film doesn't really have a rolling shutter problem. It is important to use a digital camera that has an S16 (or standard 16) crop to approximate the image of the NPR, because the S16 optics are important to the look.

The EOSM is a bit more "grab and go" than an Eclair NPR.

-

I shot with the NPR, and I would say that the EOSM with an ML crop mode and/or the Digital Bolex would be the obvious cameras to compare. I think Aaton (begotten from Eclair) had an S16 digital camera worth comparing, and there is also that shoulder-mount digital camera with the ergonomic thumb hold whose name I can never remember.

-

Most of the results of your Google search echo the common misconception that bit depth is color depth, but resolution's effect on color depth is easily demonstrated (I have already given one example above).

-

Nope. That is the basic equation for digital color depth.

-

Yeah. All of those photos by Richard Avedon, Irving Penn and Victor Skrebneski were terrible!

"Color sensitivity" applied to digital imaging just sounds like a misnomer for bit depth. Bit depth is not color depth. I have heard "color sensitivity" used in regard to human vision and perception, but I have never heard that term used in imaging. After a quick scan of DxO's explanation, it seems that they have factored in noise -- apparently, they are using "color sensitivity" as a term for the number of bit-depth increments that live above the noise.

That's a great camera, but it would have even more color depth if it had more resolution (while keeping bit depth and all else the same).

That is largely true, but I am not sure if "good" lighting is applicable here. Home movies shot on film with no controlled lighting have the "thickness" that OP seeks, while home movies shot on video usually don't have that thickness.

No. There is no gain of color depth with down-sampling. The color depth of an image can never be increased unless something artificial is introduced. On the other hand, resolution can be traded for bit depth. So, properly down-sampling (sum/average binning of adjacent pixels) can increase bit depth with no loss of color depth (and with no increase in color depth).

Such artifacts should be avoided, regardless. "Thick" film didn't have them.

There is no chroma subsampling in a B&W image.

I really think color depth is the primary imaging property involved in what you seek as "thickness." So, start with no chroma subsampling and with the highest bit depth and resolution. Of course, noise, artifacts and improper exposure/contrast can take away from the apparent "thickness," so those must also be kept to a minimum.
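The trade of resolution for bit depth works like this. Summing each 2x2 block of 8-bit pixels halves the resolution in each direction, but each summed value can range from 0 to 1020, which needs 10 bits. Below is a minimal sketch in Python. It assumes a single-channel image stored as a list of rows with even dimensions. The function names are hypothetical.

```python
import math

def sum_bin_2x2(image):
    """Sum each non-overlapping 2x2 block of a single-channel 8-bit image.
    'image' is a list of rows with even width and height (assumption)."""
    return [
        [image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]
         for x in range(0, len(image[0]), 2)]
        for y in range(0, len(image), 2)
    ]

def bits_needed(max_value):
    """Smallest bit depth that can represent the range 0..max_value."""
    return math.ceil(math.log2(max_value + 1))

img = [[255, 254, 0, 1],
       [253, 255, 2, 3]]     # 4x2 pixels, 8 bits each
binned = sum_bin_2x2(img)    # 2x1 pixels
print(binned)                                         # [[1017, 6]]
print(bits_needed(255), "->", bits_needed(4 * 255))  # 8 -> 10
```

Averaging instead of summing gives the same information scaled back to the 0-255 range, provided the result is kept at higher precision rather than rounded back to 8 bits.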

-

As others have suggested, the term "density" has a specific meaning in regards to film emulsions. I think that the property of "thickness" that you seek is mostly derived from color depth (not bit depth). Color depth in digital imaging is a product of resolution and bit depth (COLOR DEPTH = RESOLUTION x BIT DEPTH). The fact that resolution affects color depth in digital images becomes apparent when one considers chroma subsampling. Chroma subsampling (4:2:2, 4:2:0, etc.) reduces the color resolution and makes the images look "thinner" and "brittle," as you described. Film emulsions don't have chroma subsampling -- essentially film renders red, green and blue at equal resolutions. Almost all color emulsions have separate layers sensitive to blue, green and red. There is almost never a separate luminosity layer, unlike Bayer sensors or RGBW sensors which essentially have luminosity pixels. So, if you want to approximate the "thickness" of film, a good start would be to shoot 4:4:4 or raw, or shoot with a camera that uses an RGB striped sensor (some CCD cameras) or that utilizes a Foveon sensor. You could also use an RGB, three-sensor camera.
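Here is a rough illustration of how 4:2:0 subsampling reduces color resolution. In a 2x2 block, every pixel keeps its own luma sample, but all four pixels share one averaged Cb/Cr pair. This sketch assumes full-range BT.601 (JPEG-style) YCbCr coefficients and simple box averaging. Real cameras and codecs may use other matrices and filters.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG-style) RGB -> YCbCr, 8-bit scale."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_420(block):
    """'block' is a 2x2 group of four (r, g, b) pixels.
    Returns four luma samples but only one averaged chroma pair (4:2:0)."""
    ycc = [rgb_to_ycbcr(*p) for p in block]
    lumas = [round(y) for y, _, _ in ycc]
    cb = round(sum(c for _, c, _ in ycc) / 4)
    cr = round(sum(c for _, _, c in ycc) / 4)
    return lumas, (cb, cr)

# Red, green, blue and yellow pixels in one 2x2 block.
block = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 0)]
lumas, chroma = subsample_420(block)
print(lumas)   # four distinct luma values survive
print(chroma)  # but all four pixels now share this single chroma pair
```

With 4:4:4 or raw, each of the four pixels would keep its own chroma pair. That is the "equal resolution" for color described above.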

-

No need for ignorant bigotry. The notion that camera people got work in the 1980s by owning cameras couldn't be further from the truth. "Hiring for gear" didn't happen in a big way until digital cameras appeared, especially the over-hyped ones -- a lot of newbie kids got work from owning an early RED or Alexa. To this day, clueless producers still demand RED. Back in the 1980s (and prior), the camera gear was almost always rented for a 16mm or 35mm shoot. Sure, there were a few who owned a Bolex or a CP-16 or 16S, or even an NPR with decent glass, but it was not common. Owning such a camera had little bearing on getting work, as the folks who originated productions back then were usually savvy pros who understood the value of hiring someone who actually knew what they were doing. In addition, camera rentals were a standard line item in the budget. Of course, there was also video production, and Ikegami and Sony were the most sought-after brands by camera people in that decade. Likewise, not too many individuals owned high-end video cameras, although a small production company might have one or two. Today, any idiot who talks a good game can get a digital camera and an NLE and succeed by making passable videos. However, 99% of the digital shooters today couldn't reliably load a 100' daylight spool.

-

Yes. It might be best to run the leads outside of the adapter/speedbooster, and epoxy or hot-melt-glue the switch to the adapter housing. By the way, the pictured dip switch is tiny.

-

Why not just put a switch inline on the "hot" power lead of the adapter instead of powering with an external source? That way, OP can just enable and disable the electronics by merely flicking the switch. Incidentally, here is a small dip switch that might work:

-

The paragraph reads: "I have repeatedly suggested that it is not the sensor size itself that produces general differences in format looks -- it is the optics designed for a format size that produce general differences in format looks." Again, you somehow need to get that point through your head. Perhaps you should merely address my points individually and give a reasonable counterargument to each one. Unless, of course, you cannot give a reasonable counterargument.

-

This should read: "Once again, I have repeatedly suggested that it is NOT the sensor size itself that produces general differences in format looks..."

-

You certainly don't need to address each of my points, and, indeed, you have avoided almost all of them. In regard to your parenthetical insinuation, I would never claim that the number of individuals who agree/disagree with one's point has any bearing on the validity of that point. However, please note how this poster unequivocally agrees with me on the problems inherent in your comparison test, saying, "I certainly can see what you're talking about in the areas you've highlighted. It's very clear."

In regard to my not providing evidence, again, are you referring to evidence other than all the photos, video links, and references that I have already provided in this thread, which you have yet to directly address?

Additionally, you have misconstrued (perhaps willfully) my point regarding sensor size. I have continually maintained in this thread that it is the optics designed for a format size -- not the format size itself -- that produce general differences in format looks.

The paper that you linked does address points made in this thread, but a lot of the paper discusses properties which are irrelevant to DOF equivalency, as I pointed out in my previous post. Interestingly, the paper frequently suggests that larger-format optics have capabilities lacking in optics for smaller formats, which is what I and others have asserted. Not sure how you missed those statements in the paper that you referenced.

Regardless, I have more than once linked Shane Hurlbut's example of an exact focus match between two different lenses from two different manufacturers. So, there should be no problem getting such a close DOF/focus match from other lenses with the proper methods.

Once again, I have repeatedly suggested that it is NOT the sensor size itself that produces general differences in format looks -- it is the optics designed for a format size that produce general differences in format looks. Somehow, you need to get that point through your head.

Your thoughtful consideration and open-mindedness are admirable.

-

Well, I certainly appreciate your thoroughly addressing each one of my points and your giving a reasonable explanation of why you disagree. You mean, do I have any evidence other than all the photos, video links, and references that I have already provided in this thread, which you have largely avoided?

-

It's not a good read on this at all, as most of the information given is irrelevant. Furthermore, many of the conclusions of this paper are dubious.

How is "sensor quantum efficiency" relevant to optical equivalency? How is "read noise" relevant to optical equivalency?

Lens aberrations are absolutely relevant to optical equivalency and DOF/focus. According to Brian Caldwell, aberrations can affect DOF, and lenses for larger formats generally have fewer aberrations. Hence, the refractive optics of larger formats generally influence DOF differently than lenses for smaller formats. Keep in mind that the DOF/equivalency formulas do not account for any effects of refractive optical elements, yet optical elements can affect DOF.

Again, this property is not really relevant to optical equivalency and DOF/focus.

This property is not really relevant to optical equivalency and DOF/focus.

Only one of these six factors (aberrations) that you and the paper present is relevant to optical equivalency and DOF/focus. So, why is this paper quoted/linked? On the other hand, here is a choice sentence from the paper that immediately follows your excerpt:

There are other similar passages in that paper suggesting differences in image quality between different-sized formats. If the intention of quoting/linking that paper was to assert that it is difficult to get an exact match between two different lenses made by two different manufacturers, I once again direct you to Shane Hurlbut's test. He compared two different lenses made by two very different manufacturers (Panasonic and Voigtlander) that matched exactly in regard to the softness/bokeh of the background, with only a slight difference in exposure/color. So, a more exact match can be achieved than what we have seen so far in "equivalency tests." In addition, we can compare the actual DOF, instead of seeing how closely one can match an arbitrarily soft background set at some arbitrary distance, while relying on lens aperture markings and inaccurate math entries.
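For comparison, here are the standard thin-lens DOF formulas that the usual equivalency calculators rely on. They take no account of aberrations or of any other behavior of real refractive elements. The lens, f-number and circle-of-confusion values below are hypothetical.

```python
import math

def depth_of_field(f_mm, n, coc_mm, subject_mm):
    """Thin-lens DOF limits (near, far) in mm.
    Ignores aberrations and any other effect of real refractive elements."""
    hyperfocal = f_mm ** 2 / (n * coc_mm) + f_mm
    near = subject_mm * (hyperfocal - f_mm) / (hyperfocal + subject_mm - 2 * f_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is "in focus"
    else:
        far = subject_mm * (hyperfocal - f_mm) / (hyperfocal - subject_mm)
    return near, far

# Hypothetical "equivalent" pair: a full-frame 50mm f/2 versus a 2x-crop 25mm f/1,
# both focused at 3 m, with the circle of confusion scaled by the crop factor.
ff = depth_of_field(50, 2.0, 0.030, 3000)
crop = depth_of_field(25, 1.0, 0.015, 3000)
print([round(x) for x in ff], [round(x) for x in crop])
```

With these inputs, the two "equivalent" setups come out within a fraction of a percent of each other. That is why the formulas alone cannot reveal any differences that come from the lenses themselves.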