HockeyFan12

Members · 887 posts


Everything posted by HockeyFan12

-

Can you send me a link to Canon's white paper? If I can read what they've claimed in context, that would help. Given that you've mischaracterized and misquoted every source you've presented so far, except those that are obviously wrong, it makes sense that I'd ask. Twice you posted square-wave charts instead of sine-wave charts from sources that had both. There's a reason they had both.... And it is pretty good. But clearly not mellowing me out enough.

-

If you can find me an affordable 4k zone plate (SINUSOIDAL), I will. You're right that it's not about money, but I also don't have to prove it to myself. Like, how's $10,000? That doesn't make me any more right or wrong. This escalation is absurd and I'm sorry I got involved in it. Might makes right is generally wrong. You're right to the extent that it doesn't matter; it's about what's right, not about money or about which sources claim what (even if the reputable ones agree with my model). I apologize for that, but why should I spend money to prove to myself what I already know? It's not on me to prove you wrong; it's on you to stop spreading misinformation.

-

I have. (Again, read my post from a few days ago and see how those lines will cycle between high contrast and low contrast but never alias in that model, so long as they're sinusoidal and not square.) How's a $1000 wager?

-

Depends how confident you are that you're right. I'm 100% confident that I am. If you can find a 4k SINUSOIDAL zone plate to shoot and we can agree on what represents 2,000 horizontal line pairs, let's just make it a $500 wager. I'll bring my Foveon camera. Again, there will be some slight difference due to real-world factors like imperfectly aligned grids, quantization error, sharpening, etc. But the result will be far closer to the figure I cite than to the one you do. It doesn't matter how many people agree with Graeme. What matters is that he's the one doing the math, and the others are confusing vertical axes with horizontal axes and sine waves with square waves... those articles are poorly researched and scattershot in their methodology. They're clickbait. Truth is truth. It doesn't matter what the majority says. That's what I'm standing up for above all else.

-

Indica, at the moment. And I don't have to prove my point to anyone but you, because everyone else gets it. At least the people who matter, like Graeme and whoever put that test together. I just wish you wouldn't spread this kind of misinformation online. If you want to, you're free to, but there's already enough misinformation out there. Case in point: that article confuses vertical and horizontal lines of resolution. So did you. Buy me a 4k sinusoidal (not square-wave) zone plate. (Let's keep the budget at $500 or less.) I'll photograph it with a Foveon camera, center crop 4k. If the behavior correlates more closely with the model I correctly cite than with the one you made up, you eat the cost of the zone plate. If it correlates with your claims, I'll pay you back. We can accept a small margin of error either way due to other real-world factors (quantization error, things not being perfectly aligned). But our models are vastly different, so we should see one or the other clearly prevail. I'm dead serious. But keep the zone plate to $500 or less; I have rent to pay and am saving up for a house. :/

-

Even if that were the case, Graeme's model would still be correct in terms of the math and theory. (Nyquist requires >2 samples per sine wave, not >4 samples per square wave, which is what your version amounts to. If you look into how it's applied to audio, this will be clearer.) But, anyhow, it isn't the case: https://en.wikipedia.org/wiki/Television_lines Wikipedia agrees with you that TV lines are traditionally measured in horizontal lines, and they are, but it notes that the measurement is "alternatively known as Lines of Horizontal Resolution (LoHR)" (emphasis mine). Horizontal. Not vertical. That test was of vertical lines! So either Graeme is wrong, along with Wikipedia or whoever made that chart, or all three actual authorities on the subject are right and you're wrong. And given that your definition of Nyquist is inaccurate at a very basic level (when it cites "frequency" it refers to the highest-order frequency, which in the case of a sine wave is its fundamental but in the case of a square wave is the highest-order harmonic, and when it refers to a cycle it means a line pair, not a line), I have to go with them. And with reason. Read all of Graeme's replies. He really thought through his argument, and it makes sense.
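To make the audio analogy concrete, here is a minimal sketch in plain Python that lists the odd harmonics of an ideal square wave and where each one lands after sampling. The 44.1 kHz sample rate and the 5 kHz fundamental are illustrative assumptions, not figures from this thread; `alias_frequency` and `square_wave_harmonics` are hypothetical helper names.

```python
def alias_frequency(f, fs):
    """Frequency at which a pure sine of frequency f appears after sampling at fs.

    Anything above fs / 2 folds back into the band [0, fs / 2].
    """
    f_mod = f % fs
    return min(f_mod, fs - f_mod)


def square_wave_harmonics(f0, count):
    """First `count` components of an ideal square wave: the odd multiples of f0."""
    return [f0 * k for k in range(1, 2 * count, 2)]


fs = 44_100   # assumed sample rate (CD audio)
f0 = 5_000    # assumed square-wave fundamental, well below fs / 2

for h in square_wave_harmonics(f0, 5):
    status = "ok" if h < fs / 2 else "aliases"
    print(f"{h:>6} Hz -> {alias_frequency(h, fs):>6} Hz ({status})")
```

A pure 5 kHz sine passes untouched, but the square wave's 25, 35 and 45 kHz components fold back to 19.1 kHz, 9.1 kHz and 900 Hz, none of which are harmonics of 5 kHz. That is the sense in which the square wave's relevant frequency is its highest harmonic rather than its fundamental.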

-

Then why does the image you recently posted show 540 line pairs (1080 lines) in the VERTICAL (not horizontal) resolution for the F3? Exactly as my model would predict from a full-raster 3-megapixel sample downscaled to 1080p? And 848 line pairs for the Alexa in RAW, very close to the figure my model would predict (it would predict 1620 lines from a 2880X1620 sensor, or 810 line pairs; the difference between 810 and 848 could be aliasing reading as false detail). Edit: also, that wasn't long enough to read a four-page thread. Go back and read it all. I had my questions, too, at first, until I got into the difference between sinusoidal and binary zone plates. Anyhow, I'm done. Everything except online banter agrees with what I've posted, including repeatable real-world behavior (science) and numbers (math). If you want to take this up with Graeme, I encourage you to, but I just wish you wouldn't freely post misinformation like this.
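The arithmetic behind those two predictions is simple enough to sketch. This only encodes the lines-to-line-pairs conversion used in this post; the 848 figure is the one read off the posted chart, and whether the excess is aliasing is this post's hypothesis, which the code does not test. `line_pairs` is a hypothetical helper name.

```python
def line_pairs(lines):
    """Two lines (one light, one dark) make one line pair."""
    return lines / 2


# Figures quoted in the post, per picture height.
f3_lines = 1080
alexa_raw_lines = 1620      # from the 2880X1620 figure cited for the Alexa
alexa_measured_lp = 848     # read off the posted chart

alexa_predicted_lp = line_pairs(alexa_raw_lines)
excess = (alexa_measured_lp - alexa_predicted_lp) / alexa_predicted_lp

print(f"F3 predicted: {line_pairs(f3_lines):.0f} lp/ph")
print(f"Alexa predicted: {alexa_predicted_lp:.0f} lp/ph, "
      f"measured {alexa_measured_lp} ({excess:+.1%})")
```

The measured Alexa figure sits a little under 5% above the predicted 810 line pairs.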

-

Sure, here's the thread. It's a long one. I do think it's a bit presumptuous of you to think you understand this better than Red's chief imaging engineer, but I've been called presumptuous too. Graeme explains to someone (a somewhat presumptuous poster named Matt) why the same misunderstanding you have is, in fact, a misunderstanding: http://www.reduser.net/forum/showthread.php?157330-Is-this-chroma-aliasing There's a lot to get through, much of it noise and ad hominem bullshit, but it's all there. Posting this thread is a little embarrassing for me, since I clearly can't articulate how Nyquist works as well in a half dozen posts (in an earlier thread) as Graeme can in a few paragraphs, but hey. I can swallow my pride for a moment and let Red's chief engineer do his thing. And no, I don't have that test chart in front of me, but I assure you that a 4k sinusoidal zone plate would not alias on a 4k Foveon or monochrome camera, while a 4k binary (square-wave) zone plate would. And if you want to order and ship me a 4k sinusoidal zone plate (they're expensive as heck), I would be glad to prove it.

Fwiw, the image you posted above specifies line pairs per sensor height, not width. Height is the 1080-pixel axis, not the 1920-pixel axis (in 1920X1080). A pair is one white pixel and one black pixel. So if the F3 resolves 540 line pairs, then it resolves 1080 lines... at 1080p. Which makes sense. The F3 oversamples by about 1.5X on each axis (like the Alexa) and it has a Bayer sensor. Bayer interpolation is about 70% efficient, and 1.5 × 0.7 is about 1. So you get full resolution in each axis: 1080 lines in the vertical axis and 1920 in the horizontal axis. That is 540 line pairs per sensor height, which is exactly what that chart shows. Because the real world correlates with the real Nyquist theorem.
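The oversampling-times-Bayer-efficiency reasoning can be written down as a rough rule of thumb. This is a minimal sketch of the post's own estimate, not a sensor model: the 0.7 efficiency and the 1.5X oversampling factor are the approximate figures quoted above, and `effective_lines` is a hypothetical helper name.

```python
def effective_lines(photosites, bayer_efficiency=0.7, delivery_lines=None):
    """Rough resolvable lines along one axis under the post's rule of thumb.

    bayer_efficiency is the ~70% figure quoted in the post, not a measured
    constant; delivery_lines caps the result at the output raster (e.g. 1080p).
    """
    lines = photosites * bayer_efficiency
    if delivery_lines is not None:
        lines = min(lines, delivery_lines)
    return lines


oversample = 1.5                     # F3 photosites per output pixel, "about 1.5X"
f3_vertical = effective_lines(1080 * oversample, delivery_lines=1080)
print(f"{f3_vertical:.0f} lines -> {f3_vertical / 2:.0f} line pairs per picture height")
```

Since 1.5 × 0.7 ≈ 1.05, the Bayer loss roughly cancels the oversampling, and the 1080-line output raster becomes the limit: 1080 lines, or 540 line pairs per picture height.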

-

JCS, can you stop propagating that incorrect version of the Nyquist theorem? If you want I can link you to the original thread where Graeme Nattress at Red describes how it actually works. If you agree to take a look at it I'll leave you alone about it, since usually you're a valuable contributor, but I hate to see that kind of misinformation spread online in a technical forum.

-

I've never been that bullish on 4k video, or on it providing a significant or worthwhile improvement in real-world use. For UI elements on phones and computers I think a high-res display is advantageous, but 4k video doesn't look significantly better to me at normal viewing distances (except maybe for line art and 2D animation). I recall that the text from my PS4 on my projector looks pixelated but the video never does. For VR I think higher resolutions are going to be particularly important, for video and UI elements alike. The statistic you posted is misleading. Most of us are in the US or other developed countries that have at least a majority-HD infrastructure, and even if we're selling to markets that don't have HD, those markets are less important to our revenue stream. I think all of us benefit from shooting HD. I think most of us know whether our clients will pay more for 4k, and it's really just a cost-benefit analysis at that point. Or, as hobbyists, we make that cost-benefit analysis subjectively. Personally, I prefer working with 2k or 1080p media, both professionally and as a hobby. I hate doing the extra busywork or waiting on the extra renders, and I don't see any difference in real-world use. I just don't see the difference, but my eyes are now 20/30 or 20/20. When I was young and they were 20/15, I bet I could have told the difference even with video at a normal viewing distance. I do think VR video is too low-res now and will need to be acquired at 8k or 16k or beyond to look good. I don't personally expect that most TV networks will upgrade their infrastructure to 1080p in the US or abroad. Upgrading to HD was recent and very expensive. Their libraries are all 1080p anyway, and finished as such. But I do think TV will eventually be displaced by Netflix, YouTube, Amazon, etc., which already have 4k infrastructure.
So watching how that market changes and evolves, and tracing those viewership graphs, will probably give a pretty good idea of when 4k will become widely demanded. CBS won't push its shows to 4k for air. Not ever, I think. But Netflix might push them to shoot in 4k so it can license a 4k deliverable for its own distribution. When networks earn more money from licensing 4k to Netflix than they lose in added production cost shooting in that format (fwiw, the added cost is deceptively enormous for larger productions), we'll see a quick change. That won't be too soon. But it will happen soon enough. Probably sooner than we think! I bet Arri is targeting a true 4k Alexa for before that date. Personally, I'm in no rush at all to upgrade, but that's just me! I know a lot of people here are primarily targeting Netflix Original distribution (based on posts I've read). I still think 1080p is fine for that. They'll acquire 1080p movies as originals; they just won't produce 1080p series. So I wouldn't worry about that. TL;DR: People shooting 4k did a cost-benefit analysis, and either 4k provided more profit from their clients (which in that case are the only clients who matter) or they just want to because they're hobbyists, in which case their preferences (and bank account) are all that matter.

-

From my perspective, the only math you're doing that I'm not is dividing by two an unnecessary extra time. (That, and we disagree on how the frequency of square waves relates to the Nyquist theorem.) But I understand your reasoning, and most of what you've written closely mirrors what I believe to be a widespread misunderstanding, one that I used to share and one that I see online a lot. So I agree we can agree to disagree, which is fine. But I think we can also agree that our disagreement hinges on whether Nyquist applies to sine waves specifically or concerns only the fundamental frequency of the wave and ignores overtones. IMO, regardless of whether it's audio or an image, a square wave is of effectively infinite frequency at its rise and fall regardless of the fundamental, whereas a sine wave is of its fundamental frequency throughout its sweep. These are the frequencies I contend apply to Nyquist, and I think this is what our disagreement is mostly about. That said, I agree that in practice oversampling is very beneficial! There's no arguing with those test charts and the F65's performance! (Those charts are still binary, thus square wave, btw.) Fwiw, I did post an example (with images) a few posts above that shows how what I've written is fully consistent with real-world performance given a monochrome sensor and a sine wave in the input field. Again, we're back to the same fundamental (pun intended) disagreement.
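One way to see why a hard edge behaves like "effectively infinite frequency" is to build a square wave from its Fourier series, (4/π)·Σ sin(kt)/k over odd k, and watch the slope at the transition as harmonics are added. A minimal sketch in plain Python; the term counts are arbitrary and the helper names are hypothetical.

```python
import math


def square_partial_sum(t, n_terms):
    """Partial Fourier series of the unit square wave sign(sin t).

    Sums the first n_terms odd harmonics: (4 / pi) * sum(sin(k * t) / k), k = 1, 3, 5, ...
    """
    return (4 / math.pi) * sum(math.sin(k * t) / k for k in range(1, 2 * n_terms, 2))


def edge_slope(n_terms, h=1e-6):
    """Central-difference slope of the partial sum at the rising edge t = 0."""
    return (square_partial_sum(h, n_terms) - square_partial_sum(-h, n_terms)) / (2 * h)


for n in (1, 10, 100, 1000):
    plateau = square_partial_sum(math.pi / 2, n)   # middle of the "high" half-cycle
    print(f"{n:>5} harmonics: plateau {plateau:.3f}, edge slope {edge_slope(n):.1f}")
```

The plateau settles toward 1 while the edge slope keeps growing (it is 4n/π for n odd harmonics), so no finite set of sine components, and therefore no finite sample rate, reproduces the ideal edge exactly.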

-

There's no need to oversample beyond the 2X conversion from line pairs to lines. If we have N/2 line pairs (cycles), a sensor with >N photosites in that axis can resolve them all. And N/2 line pairs then translates to N lines. So we can resolve lines up to the limit of the sensor's resolution (not only up to half that limit). So a 4k sensor can indeed resolve up to 4k lines on a sinusoidal zone plate without aliasing. Practically, all that Nyquist really does is recognize that a sine wave has two humps: a positive hump that goes from 0 to A (amplitude) and back to 0, and a negative hump that goes from 0 to -A and back to 0. We need to sample twice per sine wave, picking up each of the two humps once, or else we will get aliasing. But if the samples land exactly on the zero crossings between the humps, we'll get a phase-cancelled signal (gray, or 0), so we need to sample at more than twice the frequency to leave a recoverable trace of the signal. Again, this entire discussion is predicated on whether or not you accept that by "frequency," the Nyquist theorem means "frequency of the highest-order sine wave." And it does. The Nyquist theorem refers specifically to sine waves. Nyquist applies to computer graphics, sound (yes, a sine wave through a synthesizer has a different frequency than a square wave of the same fundamental, at least so far as Nyquist is concerned), and images. But in each case Nyquist applies to sine waves specifically. If we can't accept that as a starting point, we'll get nowhere.
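The phase argument above is easy to check numerically. A minimal sketch, assuming ideal point sampling (no pixel area, lens, or noise), which is the same idealization the post uses; the 16-pixel width and the helper names are hypothetical.

```python
import math


def sample_sine(cycles, pixels, phase=0.0):
    """Point-sample a unit sine with `cycles` periods across `pixels` samples."""
    return [math.sin(2 * math.pi * cycles * n / pixels + phase) for n in range(pixels)]


def peak(samples):
    """Largest absolute sample value: the contrast left in the recording."""
    return max(abs(s) for s in samples)


PIXELS = 16

# Exactly two samples per cycle (cycles == PIXELS / 2):
on_the_humps = sample_sine(8, PIXELS, phase=math.pi / 2)   # samples hit +A and -A
on_the_zeros = sample_sine(8, PIXELS, phase=0.0)           # samples hit the zero crossings
print(peak(on_the_humps), peak(on_the_zeros))

# Slightly fewer cycles than PIXELS / 2: no phase wipes the signal out.
worst = min(peak(sample_sine(7, PIXELS, phase=2 * math.pi * k / 64)) for k in range(64))
print(worst)
```

At exactly two samples per cycle, the recorded contrast depends entirely on phase (full amplitude or essentially zero). At 7 cycles on 16 pixels, every phase tried leaves a strong trace. Real photosites integrate over their area, which lowers contrast further near the limit; this sketch ignores that.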

-

I don't know what more to say except that the Nyquist theorem specifically concerns sine waves. Always has, always will. It concerns other wave shapes only to the extent that they are sums of sine waves. By frequency, Nyquist means the frequency of the highest-order sine wave. (Which in the case of an unfiltered square wave is infinite.) I don't think we're going to make any progress in this discussion until we can agree on this point, and if we can't, we won't. Which is okay! Everyone will draw their own conclusions, and luckily this discussion is more academic than practical (to the extent that neither of us is designing sensors and both of us agree the F65 looks awesome). By "fairly arbitrary," I only mean tuned partially subjectively. That's my bad for articulating that poorly. And yes, Nyquist does apply to computer graphics, and to that extent the fact that all the graphics you present are of square-wave functions (infinite order so far as Nyquist is concerned) is very relevant. That's why they're aliasing like crazy when you downscale them. A few posts back I downsampled some sine-wave zone plates (quantized sine waves, granted, and I suppose a binary quantized sine wave resembles a square wave, which further complicates things...) and they didn't alias in the same way! Not nearly as much aliasing. Not any, in fact... until they hit the Nyquist limit. So yes, square waves and sine waves do have different frequencies as far as Nyquist is concerned.
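A one-dimensional stand-in for the sine-wave zone plate experiment: point-sample a sine of a given number of cycles onto 64 pixels and find where its energy lands in the spectrum. This is a plain-Python DFT; the 64-pixel width, the cycle counts and the helper name `dominant_bin` are arbitrary illustrative choices.

```python
import cmath
import math


def dominant_bin(cycles, pixels):
    """Index of the strongest DFT bin (0..pixels/2) after point-sampling a sine."""
    samples = [math.sin(2 * math.pi * cycles * n / pixels) for n in range(pixels)]

    def magnitude(k):
        return abs(sum(x * cmath.exp(-2j * math.pi * k * n / pixels)
                       for n, x in enumerate(samples)))

    return max(range(pixels // 2 + 1), key=magnitude)


for cycles in (10, 20, 30, 34, 40):
    print(cycles, "->", dominant_bin(cycles, 64))
```

Below 32 cycles (the Nyquist limit for 64 pixels) the peak stays at the true frequency. Above it, the peak folds back to 64 minus the cycle count (34 reads as 30, 40 as 24), which is false detail rather than a loss of contrast.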

-

No. Nyquist applies to sine waves, full stop. Once you put square waves (black and white lines) in the input domain, you are immediately adding infinitely many higher-order harmonics, and so, in the absence of a low-pass filter, you're increasing the input frequency to infinity (because of the overtones). Again, Nyquist concerns sine waves. Square waves have overtones of infinite order, so while Nyquist still concerns them, in theory it requires a sensor with infinite pixels to capture them. So, anyhow, it concerns them differently... When we discuss Nyquist, we have to use sine waves in the input domain, whether it's sound or image. Or, if we use square waves, we must accept that square waves in the input domain are of theoretically infinite frequency at certain localities. That said, in practical terms, oversampling by 2 with a Bayer sensor seems to work really well! And, in practical terms, the low-pass filter knocks away most of those higher-order harmonics. As does diffraction, etc. As do the limits of the printed image, etc. Yes, oversampling helps get a better image! But oversampling by an extra factor of 2 is fairly arbitrary, and not exclusively or specifically derived from Nyquist. IMO, it's probably more related to getting full raster in R and B and compensating a bit for the low-pass filter.
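In the idealized picture described here, a low-pass filter simply discards every harmonic above the sampling limit, leaving a band-limited wave that Nyquist can handle. A minimal sketch assuming an ideal (brick-wall) filter, which no real OLPF, lens or print achieves; the 5 kHz and 22.05 kHz figures are illustrative, and in image terms they would be cycles and half the pixel count per picture width.

```python
import math


def bandlimited_square(f0, cutoff):
    """Odd harmonics of an ideal square wave that pass an ideal low-pass at `cutoff`.

    Returns (frequency, amplitude) pairs; harmonic k has amplitude 4 / (pi * k).
    """
    if f0 <= 0:
        raise ValueError("fundamental must be positive")
    harmonics = []
    k = 1
    while f0 * k < cutoff:
        harmonics.append((f0 * k, 4 / (math.pi * k)))
        k += 2
    return harmonics


def retained_power(harmonics):
    """Fraction of a unit square wave's power kept (each sine carries amplitude**2 / 2)."""
    return sum(a * a / 2 for _, a in harmonics)


kept = bandlimited_square(5_000, 44_100 / 2)
print([f for f, _ in kept], f"{retained_power(kept):.1%} of the power")
```

With a 5 kHz fundamental and a 22.05 kHz cutoff, only the 5 kHz and 15 kHz components survive, carrying roughly 90% of the square wave's power; what reaches the sampler is a rounded, band-limited wave rather than a square one.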

-

To avoid aliasing and ensure a recoverable signal when recording a given frequency, you need to sample at more than twice that frequency. That, more or less, is the Nyquist theorem, I think we can agree?*
So you need ≤1/2 as many cycles in a given direction as you have pixels in that direction in order to record a signal without aliasing.
Cycles of a given frequency are equal to line pairs (sinusoidal line pairs).
So that's ≤(W/2) x (H/2) line pairs without aliasing.
Line pairs contain two lines (half sine waves).
So, at 2 lines per pair along each axis, that's ≤(W/2 x 2) x (H/2 x 2) = W x H lines without aliasing.
Thus, if you are recording a sinusoidal zone plate, a 4k sensor can record anything up to 4k sinusoidal lines (2k cycles) without aliasing. Your extra division by two comes out of nowhere. It's an attempt to apply Nyquist to Nyquist. The pictures you posted are again of straight lines, not of sinusoidal gradients. All of your visual examples so far are of straight lines, which have infinite higher-order harmonics and thus are of effectively infinite frequency (irrespective of the fundamental frequency), so they'll alias at their edges unless you apply a low-pass filter. Your latest example correctly demonstrates this, but that's all that it demonstrates. You keep posting these incredibly basic examples that don't relate to the math but just to the broad concept that things can alias. I'm not arguing that. I'm arguing that you're continuing to add an extra division by two and continuing to ignore that Nyquist applies to the highest harmonic frequency of a wave, not to its fundamental frequency (the two are only the same with sine waves).
*≥2 times will prevent aliasing. >2 further guarantees the signal doesn't gray out when it's exactly out of phase.
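The per-axis bookkeeping in this derivation fits in a few lines. A minimal sketch for a monochrome sensor and a sinusoidal target; taking "4k" as 4096 x 2160 photosites is an illustrative assumption, and the helper names are hypothetical.

```python
def axis_limits(pixels):
    """Upper bounds along one axis for a sinusoidal target.

    Fewer than pixels / 2 cycles (line pairs), i.e. fewer than `pixels` lines.
    """
    max_line_pairs = pixels / 2
    max_lines = 2 * max_line_pairs      # two lines (half sine waves) per line pair
    return max_line_pairs, max_lines


def recoverable(cycles, pixels):
    """True if `cycles` sinusoidal line pairs sit strictly under the Nyquist bound."""
    return 2 * cycles < pixels


def sensor_limits(width, height):
    """((line pairs across, line pairs down), (lines across, lines down))."""
    lp_w, lines_w = axis_limits(width)
    lp_h, lines_h = axis_limits(height)
    return (lp_w, lp_h), (lines_w, lines_h)


print(sensor_limits(4096, 2160))
print(recoverable(2047, 4096), recoverable(2048, 4096))
```

Only one division by two appears (pixels to line pairs), and multiplying back by two lines per pair returns the full pixel count. The strict `<` in `recoverable` mirrors the footnote: exactly 2048 cycles on 4096 pixels is the case that can gray out.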

-

I've already explained myself; I'm not going to explain further if it doesn't add up. I had this explained to me by a sensor engineer at one of the leading cinema camera manufacturers, so while I may be using layman's terms incorrectly, I'm sure the theory behind it is right, and the illustrations I offer prove it fairly simply. Maybe what I'm expressing isn't clear. But I used to think what you argue, and a lead engineer at a camera manufacturer corrected me. I don't want to refer to others' explanations so that people take things on faith, but I've already explained how it works, just maybe not as well as he can. If you want, I can refer you to his explanation via PM. Long story short, you're still dividing by two twice, once unnecessarily. Of course there are no harmonics of a pure sine wave beyond the fundamental, by definition. But Chapman's images show square waves, which do have higher-order harmonics. So what I contend is that if you take Alister Chapman's images (his explanation is wrong, btw) but swap sine waves for the square waves he places in the input domain, you'll find that any frequency equal to or less than 2k line pairs (4k lines) doesn't alias, and any frequency less than 2k line pairs (less than 4k lines) not only doesn't alias but leaves a recoverable trace, however low in contrast. Yes, the contrast of the recorded pattern varies based on alignment with the sensor, but no false detail is generated. I'm quoting the first website I found: "Nyquist rate -- For lossless digitization, the sampling rate should be at least twice the maximum frequency responses. Indeed many times more the better." You'd agree that fits the definition of Nyquist? So let's say we have 2k sinusoidal line pairs, cycling between black and white, and we want the minimum-resolution sensor that can record them without aliasing. A sine wave hits zero (gray) twice per cycle, and 1 (black) once and -1 (white) once per cycle.
So that's one black and one white line per cycle (really they'll show up as shades of gray). So if a 4k sensor can resolve up to 2k line pairs without aliasing, it can resolve up to 4k lines. Nyquist basically does nothing more than convert lines to line pairs. Yes, you still need a low-pass filter. And yes, misaligned by exactly 1/2 and sampled at the same frequency as the input, the image will resolve as gray, as Chapman illustrates. But that's where the < comes from. If it's < that number, then there will always be at least a trace in the recording, however faint, from which to recover the signal's frequency. So yes, let's think in sine waves and monochrome sensors exclusively. Refer to the images I posted above. As I illustrate, you don't need the second divisor under those circumstances. Maximum possible capture resolution without aliasing is: (W/2) x (H/2) line pairs in terms of frequency, or W x H in terms of pixels (although those pixels may be shades of gray, and almost certainly won't be black and white lines unless there's a lot of false detail or sharpening).

-

Lack of a wide-gamut BFA and a 10-bit, high-bitrate codec, for one. I'm sure they'd let you shoot the occasional insert on an iPhone, though, and would purchase an iPhone-derived project as an original on its other merits. They just likely wouldn't finance and produce one. So I wouldn't sweat it until you're in a position to discuss it with them personally.

-

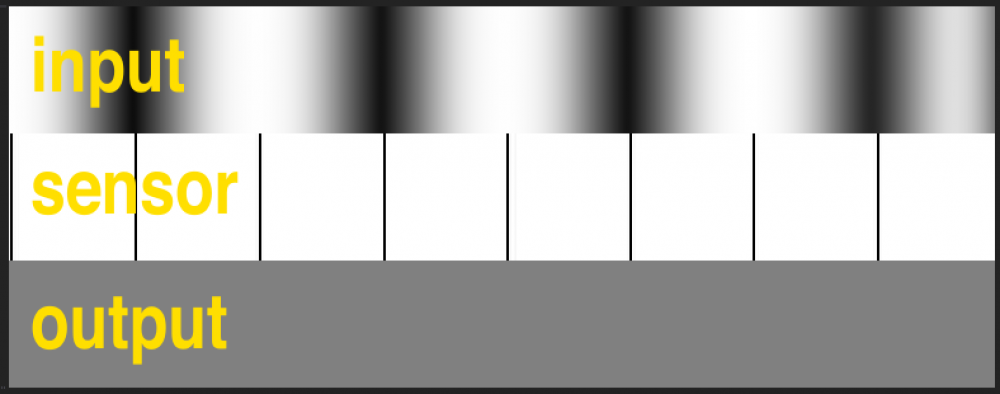

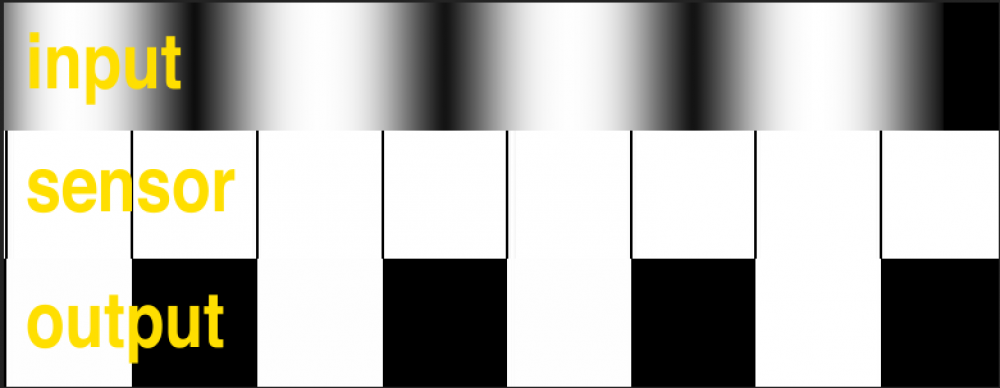

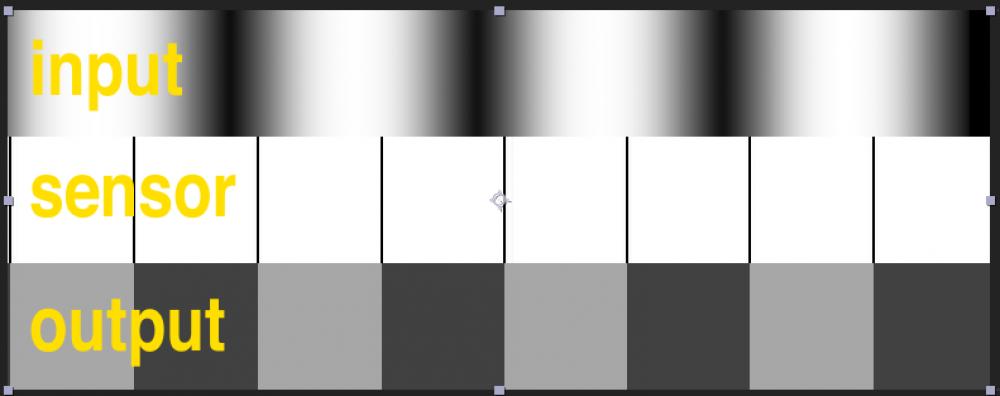

@jcs While the systems you identify function more or less as you claim, and most of what you’re writing is correct, you’re making a few mistakes, most significantly dividing by two an extra time. Rather than dissect every aspect of your arguments in this thread, I’ll focus on a few problematic points and we can go from there. IMO, Alister Chapman’s images are bad and misleading examples anyway, so I won't address that post in as much detail as your post before it. I struggle to be concise, but I’ll try. For now, let’s stay focused on monochrome sensors without AA filters, because Bayer interpolation just confuses things. You state:
1. "Maximum capture resolution possible for a monochrome sensor is W x H pixels, or W/2 x H/2 line pairs in terms of frequency"
2. "Maximum possible capture resolution for (1) without aliasing is: (W/2)/2 x (H/2)/2 line pairs in terms of frequency or W/2 x H/2 in terms of pixels"
Where did the second divisor in part 2 come from? Not from Nyquist. You already applied Nyquist in converting pixels to line pairs. What is correct is simpler and needs no part 2: maximum capture resolution possible without aliasing for a monochrome sensor is <W x H lines or <W/2 x H/2 line pairs (assuming sinusoidal cycles in the input frequency). Or, basically, just the Nyquist theorem. Alister Chapman’s first image is misleading. I suppose if you could map each pixel individually and perfectly, that is about what would happen. But the chance of those patterns being mapped exactly to one another is infinitely low (not to mention I think he’s wrong in terms of how a Bayer sensor would read that input based on how deBayering algorithms work, but that’s an unrelated tangent and why we’re focusing on monochrome sensors for now). Both of his example images rest on a vanishingly unlikely alignment. >99.9999999999% of the time, at a square-wave input frequency equal to the frequency of the sensor, you’ll just get crazy aliasing, as you correctly state.
Not that a true square wave could even hit the sensor once passed through a lens. So we see here only the exceptions that prove the rule, and they are only tangentially related to the actual rule, which concerns sine waves and not square waves (except to the extent that square waves contain infinite-order harmonics of sine waves). So let’s entirely forget Chapman’s confusing examples for now and start with more useful ones. Let’s map <N sine waves onto N pixels. (There are some caveats to the examples I’m posting, but we can discuss those later.) What we can see here is that when the frequency of the sensor and signal are aligned, we can resolve <2N lines with high contrast. When they’re offset by exactly 1/2, we can see that the signal approaches gray (it would be pure gray if exactly offset and if we had N pixels, but for this example we’re talking <N, and N needn’t be an integer, so instead it’s infinitely close to gray). And when the grid is offset by an arbitrary amount, we get… a lower-contrast image. Not aliasing! Nyquist holds true. We don't need to divide by two again to get rid of aliasing! We just need to think in terms of sine waves rather than square waves in the input domain. Let’s reexamine your claims:
1. "Maximum capture resolution possible for a monochrome sensor is W x H pixels, or W/2 x H/2 line pairs in terms of frequency"
Part 1 is basically true, except for the missing < symbol and some semantics relating to pixels and line pairs not quite being the same thing.
2. "Maximum possible capture resolution for (1) without aliasing is: (W/2)/2 x (H/2)/2 line pairs in terms of frequency or W/2 x H/2 in terms of pixels"
Part 2 contains an extra divisor. It should read: maximum possible capture resolution for (1) without aliasing is <(W/2) x (H/2) sinusoidal line pairs in terms of frequency, or <W x H in terms of pixels. Those sampled pixels may approach but will not reach zero contrast (in theory, not practice).
The reason there’s so much confusion is that most resolution charts and most zone plates are printed in square waves, which have infinite higher-order harmonics. I would also contend that oversampling by a factor of two guarantees a preservation of contrast that not only guarantees no aliasing but also guarantees that no signal is reduced to approaching gray (taking gray as zero) and every signal is allowed to reach its full amplitude. But that is separate from Nyquist, and I'm not even sure oversampling (itself a Nyquist-bound process) preserves that detail anyway. What’s really crazy to consider is that a high-resolution sensor is still prone to alias when shooting a single high-contrast edge, no matter the frequency of the fundamental pattern. It just usually doesn’t show up much. Furthermore, the < is a bit of a silly bugaboo, because even without the < you won't get aliasing. You just also won't get a recoverable signal, because it will be reduced to gray (zero) when misaligned perfectly with the grid. But since there's noise in any sensor and quantization isn't infinitely sensitive anyway, the <'s presence is very arbitrary in practice, and the exact limit of recoverable resolution is even lower than Nyquist predicts, promoting the practice of oversampling. Anyhow, you're dividing by two an extra time and using square waves and sine waves interchangeably, even though square waves are of infinite frequency regardless of their fundamental and sine waves are of their fundamental frequency and no higher.

-

Big Fancy Cameras, Professional Work, and "Industry Standard"

HockeyFan12 replied to Matt Kieley's topic in Cameras

I know of one or two DPs who own expensive kits like that, Reds or Alexas. It worked for them: they got work from bundling the camera in with the deal, and they gradually paid the camera off. But the majority of people I know who are working consistently and supporting themselves well don't own any camera except something like a T2i for personal use. Partially because different cameras are better for different shoots, mostly because they're getting hired for their ability and not their gear. Yes, if you're being hired mostly for bundling a cheap rental, then that cheap rental will open doors for you... but only with bad, shitty clients. So yeah, it will open doors for sure... IMO, the wrong ones. Most commercial sets cost $250k/day. Lower-end shoots still cost five figures a day. Is saving a few hundred dollars on a camera rental really that important to anyone but the most miserly client? Is the most miserly client the one you want? There is a middle ground of C300 and FS7 ops who work as wet hires for lower rates ($600-$800/day wet hire, maybe a lot more, but that seems to be the agreed-upon low end) and seem to do REALLY well, because they get tons of work for mostly documentary-style stuff, TV and web. Usually they can pay off their small camera (a $20k investment rather than a $200k investment) in the first six months while still making money, and after that it's just gravy. Talent helps there, but all you need to be able to do is operate competently and reliably. But when it comes to Alexas and Epics... I rarely see owner/ops unless they own their own production company, are independently wealthy, or are just crazy ambitious. The cost of the crew to support those cameras is thousands of dollars a day anyway, so most cheap professional clients don't want the hassle. A lot of student films do, though, and if you're in a city with a lot of film schools you can do okay with that alone, since you can recruit a free crew of film students and still ask a decent rate for yourself.
In my experience, the most important thing is who you know. You want to know people who are looking for DPs. You also have to be able to do the job reliably. That's about it. I've witnessed a number of DP hiring decisions, and it's usually just who's easiest to work with. What camera someone owns almost never matters at all. Having a good reel, of course, is very helpful. Edit: for narrative specifically, I can see a higher-end camera being a strong selling point. For breaking into indie films (where rates are low but passion is high), having a good camera could be a significant factor.

Which is better for green screen, GH5 10 bit 4K, or BMPCC RAW?

HockeyFan12 replied to Rank Amateur's topic in Cameras

I've found this to be the case, too. The Alexa's noise also responds better to noise reduction, and there's more separation between the green and red chromaticities. That said, Red footage seems to key fairly well, too.

I would try out the Arriscope before buying. I think optically it shares a basic design with the Iscoramas but it lacks a lot of the "look" people want with anamorphic lenses. It's low distortion, low flare, etc. Since these lenses are about look, not performance, I would DEFINITELY try before buying.

-

Which is better for green screen, GH5 10 bit 4K, or BMPCC RAW?

HockeyFan12 replied to Rank Amateur's topic in Cameras

The industry standard is Neat Video, which is $99. It will get rid of that noise, but it will also reduce the texture of the clip in a way you might find objectionable. In that case, just use the keyed layer as a track matte for another layer with only a spill killer on it and no noise reduction. Or regrain.
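The track-matte trick amounts to taking alpha from the denoised key while taking color from a plate that has only had spill suppression. A per-pixel sketch under stated assumptions: clamping green to max(R, B) is one common spill-suppression rule, not necessarily what any particular plugin or "spill killer" does, and the pixel values are made up for illustration.

```python
def suppress_green_spill(rgb):
    """Clamp green to the larger of red and blue (one common spill-suppression rule)."""
    r, g, b = rgb
    return (r, min(g, max(r, b)), b)


def composite(fg, bg, alpha):
    """Standard 'over' composite of one pixel: alpha * fg + (1 - alpha) * bg."""
    return tuple(alpha * f + (1 - alpha) * c for f, c in zip(fg, bg))


# Hypothetical pixel values in 0..1.
alpha_from_denoised_key = 0.8               # matte pulled from the noise-reduced plate
original_plate_pixel = (0.55, 0.80, 0.45)   # un-denoised foreground with green spill
background_pixel = (0.10, 0.20, 0.60)

fg = suppress_green_spill(original_plate_pixel)
out = composite(fg, background_pixel, alpha_from_denoised_key)
print(fg, out)
```

The grain in the foreground color survives because that layer never goes through noise reduction; only the matte does.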

Which is better for green screen, GH5 10 bit 4K, or BMPCC RAW?

HockeyFan12 replied to Rank Amateur's topic in Cameras

I haven't used either (at least not extensively), but RAW isn't 4:X:X in the traditional sense of the term. 4:X:X defines the color resolution of the recording codec following internal RAW conversion, whereas RAW has yet to be converted into anything. The GH5 should have more color information to start with due to its greater pixel density, and I find 4:2:2 more than good enough for keying anyway. I haven't noticed any real difference between ProRes 4444 XQ and 422 HQ for keying, tbh. Maybe in really challenging scenes? I generally denoise everything before keying anyway, and that recovers some lost color resolution from the temporal denoising and smooths everything out. It makes sense that the GH5 would key better, but I would definitely try both before buying either.
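For a rough sense of the color-resolution comparison, here is a sketch that counts chroma samples per plane for the usual subsampling schemes and red photosites in an RGGB Bayer mosaic. Treating a UHD 3840x2160 recording and a 1920x1080 Bayer RAW frame as the two cases is an illustrative assumption, and a Cb/Cr plane is not directly equivalent to a Bayer color channel, so this is only a ballpark count. The helper names are hypothetical.

```python
from fractions import Fraction

# Fraction of horizontal / vertical chroma resolution kept by each scheme.
SUBSAMPLING = {
    "4:4:4": (Fraction(1), Fraction(1)),
    "4:2:2": (Fraction(1, 2), Fraction(1)),
    "4:2:0": (Fraction(1, 2), Fraction(1, 2)),
}


def chroma_plane(width, height, scheme):
    """Width and height of each chroma (Cb or Cr) plane for a given scheme."""
    fx, fy = SUBSAMPLING[scheme]
    return int(width * fx), int(height * fy)


def bayer_red_sites(width, height):
    """Red photosites in an RGGB Bayer mosaic: one per 2x2 block."""
    return width // 2, height // 2


print("UHD 4:2:2 chroma plane:", chroma_plane(3840, 2160, "4:2:2"))
print("1080p Bayer red sites: ", bayer_red_sites(1920, 1080))
```

Under these assumptions, each chroma plane of the UHD 4:2:2 recording has about four times as many samples as the red channel of the 1080p Bayer frame, which is consistent with the pixel-density point above.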