HockeyFan12

Everything posted by HockeyFan12

-

I guess that's true. I find Netflix requirements strange (the F55 raw looks worse to my eye than "4k" Alexa prores, which Amazon and YouTube will accept, although both are good) but jumping early on the 4k HDR bandwagon is a big part of their business model and makes sense. Even if compression kills it now, in ten years they'll have a huge library they can re-encode. I do think they're pretty much the only "mid range" company that has a large portion of their content originating in raw. The rest is mostly limited to the very high end and one-man-bands.

-

The budgets aren't that high for their original series. Higher than most network tv, sure, but not Avengers level. But you're right. If any network can afford to shoot raw that network would be Netflix.

-

I suppose that's true. The wide gamut thing isn't true only for theaters, but also Netflix's 4k spec. Some Netflix shows are shot on raw, even though you wouldn't think they'd be that high end. It's a weird ecosystem that's split roughly between those wildly different audiences but they share the same needs. On the very high end a few features can easily afford to shoot raw (Skyfall shot raw, many blockbusters since have, features shot on Red or F65 are all raw) and they want the extra flexibility to get the last little bit out of the image. Even if it's like a 0.1% difference, the money is there so who cares. On the very low end, almost anyone can afford to shoot raw because the only infrastructure they need is a decently fast computer and they want the extra flexibility because they're mostly very inexperienced first-time buyers. In the middle range (network tv, Netflix, standard seven or eight figure features, most ads) the vast vast majority of cameras are shooting prores. 1080p or 2k, even. I think that's why the Alexa blew up so fast. At that level, workflows are expensive, but money isn't unlimited. So the article is sort of misleading but also sort of mostly true... raw is great if you screw up white balance. And that's harder to fix otherwise. There is a stench of marketing fluff there, but everyone has their angle. They're pushing affiliate links at most, I don't think they're shilling for Canon explicitly. Red has been using the same technique to market to rich amateurs and quirky pros. It's a weird angle but it's not wrong.

-

Has anyone tried these? How dissimilar are they from Arri fresnels? I rarely use my Arri lights anymore because everything is daylight balanced these days. I was going to install 12v 40w LEDs (150w tungsten equivalent) in them, but didn't want to ruin such nice lights with cheap LEDs. (I figured out how to re-wire them, but it would be somewhat destructive.) Has anyone tried these? They're about the price of a pair of Arri barn doors... and no need for scrims when you have a voltage dimmer. https://jet.com/product/detail/87e4eb77e4114e14a30f534b9fc23592?jcmp=pla:ggl:NJ_dur_Cwin_Electronics_a1:Electronics_Camera_Photo_Lighting_Studio_a1:na:PLA_785510854_39764707423_pla-292507316389:na:na:na:2&code=PLA15&pid=kenshoo_int&c=785510854&is_retargeting=true&clickid=056192a7-f632-47e9-a0a7-5c20fc483507 I already have some LEDs that fit the Arri fresnels. Anyone tried these out? Are they wired about the same? Are they real fresnels or something terrible? I'd buy real LED fresnels but these are for personal/personal promotional products... so I can't justify the cost. With the 15% off coupon and given that I own some 40w LEDs already I can definitely justify these. The option for battery power is very important to me.

-

From what I understand, industry rates for commercial/corporate dry hire non-union camera ops (not even good ones) run about $600/day, significantly more with a kit. Editing/vfx/color maybe $300-$500/day, more if you use your own computer. Heck, I know DITs making $1500/day regularly. Sound, $700/day wet hire. And that's before a 4-10X agency markup (what the agency or studio charges the client). But your potential client isn't hiring at industry rates for a reason. I'd just ask them roughly what their budget is. I wouldn't undersell yourself at $100/day. That'll make them suspicious that you don't value your own time, and they won't value it either. I wouldn't try to take home a full rate for your first job, but I also wouldn't undersell yourself. Ask them roughly what their budget is or bid a little high and let them negotiate down. At least then they will recognize up front that you respect yourself. But the reason it's a difficult question to answer is that there's absolutely no standard or good answer for this kind of work. Which is why I wouldn't feel bad asking them what their budget is.

-

I agree that the Alexa renders in a duller way than film, in just the way you mention. To that extent, the Sigma DP Merrills aren’t a great film replacement, either. I’m really not sold on how skin tones look, and the over/under is more similar to slide film than it is to color negative. But the image is about as detailed as (and much less grainy than) a good 6x7 Velvia scan, with good color rendering and highlight rendering and nice high frequency detail, too. For landscapes and still life it’s a viable alternative to a Mamiya 7 with slide film. For candids and street photography where you want more texture and character, I’m not at all sold. The LCD is really bad, unfortunately. Somewhat like large format slide film, the DP2 Merrill has a transparent look to its image. It doesn't look like film or digital, it looks like the scene you shot (if you expose carefully)... Given its size and price, that's reason enough to recommend it to landscape photographers in particular. But no, it's not a direct film replacement, and behaves more like slide film than color negative. Worth a try, though. I really like mine.

-

I just found this photo I took by accident on my DP2 Merrill while trying to adjust the settings. A bit underexposed but it shows that the limited DR looks fine at magic hour if you don't shoot straight at the sun. This is straight out of camera, default settings, not even any exposure adjustment I don't think. (It is a little underexposed, and there are some NR artifacts.) I think the sensor behaves more like slide film than color negative film (which more closely resembles the Alexa's look). And most photographers I know who shoot 4x5 slide film generally wait until just after sunset for a more even light, finding magic hour too harsh. So I can't vouch for the DR at all. That's just not why you'd buy this camera. For "organic" portraiture or a gritty look I wouldn't go with a DP Merrill. It's better for landscape and macro and studio type work IMO, where it excels. Don't judge the sharpness too harshly. I didn't adjust focus (I was on manual focus and took a photo by accident) and I shot handheld and the jpeg artifacts are softening it a lot.

-

It depends what film stock you're coming from and what your budget is. I don't think anything digital surpasses 8x10 film or even 4x5 film (Velvia 50 and Portra 400 were my favorites), but I can't afford to spend a few hundred dollars (with scans) per shot anymore. At least not often. The DP2 Merrill has more dynamic range than my favorite film stock (Velvia), but it has worse color rendering. It has much less dynamic range than Portra has, or black and white film. Its look is most similar to 4x5 Velvia scans without the same intense richness and wildly vibrant greens and of course less high frequency detail. And despite its initial strong impression, I find that the Sigma has banding, slight aliasing, and a low frequency green/magenta pattern that looks a lot like heavy noise reduction, all unique to it. It ruins the tonality of rock textures in a way that's wholly unique. On Bayer sensors they have a nice smooth look but no high frequency detail; on Foveon they have great high frequency detail with an ugly smoothed low frequency color noise reduction pattern. If you shoot at 50 ISO you can reduce this but it increases banding, too. I generally rate the camera no faster than 64 ISO. But I prefer the look of film stocks with less than five stops of dynamic range because the print itself has less than five stops of contrast and so you get a more accurate contrast in the print by limiting the dynamic range of your scene. So I don't find the Sigma's limited dynamic range to be a problem. For landscapes, I like it. I also find the clipping to look less digital than you'd expect. The lens is great, though. I guess what I can say is it's a good replacement for medium format Velvia, and brings roughly as well, but a poor replacement for medium format Portra. Drum scanned large format of course wins against almost anything and has a special look but at such a great cost.
If you want the look of 4x5 Velvia using a very modern lens, the DP2 Merrill is the closest thing there is that's cheap and digital. If you like older lenses, organic texture, grain, etc. it will disappoint a bit. I think you know your needs and your clients' expectations and that they're better met by shooting film, and I don't think you'll have your mind changed. But for landscape photographers I think it's quite viable. The screen is terrible, though, but 4x5 is even slower. Don't expect too much from the DP1X. It's only going to produce 4 megapixel images and while they will be nice 4 megapixel images, they'll still be less sharp than today's entry-level dSLRs. But it should give a good idea of the Foveon "look."

-

That's a good point. For some reason I always considered the 1 series and D series professional and the 5D and D800 prosumer, but maybe because I replaced my 5D III with an iPhone... and I'm a consumer. To that extent, the D850's awesome specs are encouraging. In fact the D850 plus a host of tilt/shift lenses might be the sub-$10k view camera replacement I've been looking for.... I agree that the high end will be the most resilient to change.

-

Those are really good points. I do think that pretty soon a "prosumer" camera will more closely resemble an iPhone, but the higher end pro lines (the D850 seems to be going in that direction) look like a healthy market, too. MF is exciting for sure, I wish there were a true view camera replacement under $10k, and maybe that's a possibility soon.

-

Nikon needs to address ergonomics, ecosystem, and UI, or their market share will continue to decline. Canon does, too, but less pressingly. Mirrorless cameras need to reach iPhone-level convenience before the iPhone reaches A7R-level image quality if the prosumer market is to survive. This focus on specs will only get Nikon so far. Which is sort of a shame because those specs are monstrous and the Nikon ecosystem is great. I'd love this camera for stills and video, assuming the video is decent in practice. (The D5 and 1DXII are fine for what they are, but they're pro tools specifically for sports. That's not going away, but it's a tiny market. The prosumer market is in danger of going the way of point and shoots though.) Anyhow, awesome specs. Likely an awesome camera. Hoping it keeps Nikon in the game a while longer (and that the tech trickles down to Canon's ecosystem five years later lol).

-

I don't know the first thing about lens design, either (well, maybe the first thing, but definitely not the second). I've just been playing around with wide angle adapters for a while. I also have one of these for my Iscorama: https://www.bhphotovideo.com/c/product/464579-REG/Canon_1724B001_WD_H72_72mm_0_8x_Wide.html It's 72mm and I think it's the very same adapter Chris Probst uses on his Kowa 40mm. The only problem is you might need to find a machinist to grind the mount down a bit. But optically it's really good. There's significant barrel distortion and CA but it's very sharp. There should be some on the used market for good prices; I got mine on eBay and then found a local machinist to grind the area in front of the threads down to fit. For some reason they protrude too much. It was around $100 total in but I got a good deal I think. The machinist only charged $20 but I was lucky to find someone so good who charged so little. Now you're making me want to buy an 18mm f3.5 Nikkor to use with it.... I'm going back to vintage lenses.

-

I don't think you're going to find an adequately high quality 0.5X wide angle adapter, and I suspect all of them will vignette (hard vignetting) when combined with a speed booster. I do think it's an intriguing idea, though. I have a 0.8x adapter for my 24mm f2 Nikkor. Lots of barrel distortion, but sharp. I think it's this one: https://www.bhphotovideo.com/bnh/controller/home?A=details&O=&Q=&ap=y&c3api=1876%2C{creative}%2C{keyword}&gclid=EAIaIQobChMI87WL_fLm1QIVEhuBCh3gTAAlEAQYASABEgJEevD_BwE&is=REG&m=Y&sku=751265 From what I understand the off-brand ones are worse but can be found even cheaper. The corner sharpness suffers with them. You can try something like this but I expect it will have hard vignetting and an atrocious image in the corners: https://www.amazon.com/Neewer-0-45x-Select-Camera-Models/dp/B002W4RPD2 I don't think a 0.5x adapter is going to do a good job, but it's not necessary anyway imo. The Speed Booster XL is 0.64X and has good performance. With a 0.8x adapter and the Speed Booster you're down to about 0.5 anyway. Might be worth getting a 24mm FD lens (if Canon makes one with 58mm threads) to eke out those last few degrees of FOV, though. That would bring you right around 12mm. Wide angle adapters are a viable option if you don't mind barrel distortion. I believe Chris Probst uses one on his 40mm Kowa. I think they look nice on anamorphic lenses in particular, where the distortion adds more character.
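For anyone who wants to check the stacking arithmetic, here's a rough sketch. It assumes the adapter and Speed Booster factors simply multiply, which ignores vignetting, distortion, and the sensor's own crop factor. The function name is just something I made up for illustration.

```python
def effective_focal_length(lens_mm, *multipliers):
    """Multiply a lens's focal length by each optical factor in turn.

    Assumption: the factors combine as a plain product.
    """
    result = lens_mm
    for factor in multipliers:
        result *= factor
    return result


# 0.8x wide angle adapter stacked with the 0.64x Speed Booster XL
combined_factor = 0.8 * 0.64
print(round(combined_factor, 3))

# 24mm lens behind both
print(round(effective_focal_length(24, 0.8, 0.64), 2))
```

So the pair works out to roughly 0.51x, and a 24mm lands a little over 12mm.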

-

I almost entirely agree with this, but you will see more aliasing in nearest neighbor downsampling than Nyquist predicts. The reason why is that Nyquist requires evenly-spaced sampling (per the definition of the theorem), but nearest neighbor downsampling cannot be evenly spaced unless you divide the resolution of the image by a whole integer. So for a 1080p image, the only Nyquist-applicable nearest neighbor downsampling resolutions would be 1p, 2p, 3p, 4p, 5p, 6p, 8p, 9p, 10p, 12p, 15p, 18p, 20p, 24p, 27p, 30p, 36p, 40p, 45p, 54p, 60p, 72p, 90p, 108p, 120p, 135p, 180p, 216p, 270p, 360p, and 540p. (Factors of 1080.) I still argue that anything >2 fulfills the theorem... in theory. In practice it is more complicated. 3 may be a decent rule of thumb. Some systems will get by with <3. None will get by with <2. But 3 may be a good rule of thumb in the real world.
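Here's a quick sketch of that list and of why non-integer ratios end up unevenly spaced. It assumes the simplest floor-based nearest neighbor mapping (real scalers may round or offset differently), and both function names are my own.

```python
def integer_downsample_heights(source_height):
    """Heights reachable by keeping every k-th row, for whole-number k >= 2."""
    return sorted(
        source_height // k
        for k in range(2, source_height + 1)
        if source_height % k == 0
    )


def nearest_neighbor_rows(source_height, target_height, count):
    """Source row picked for each of the first `count` output rows.

    Assumption: plain floor mapping, with no rounding or half-pixel offset.
    """
    return [(i * source_height) // target_height for i in range(count)]


print(integer_downsample_heights(1080))

# 1080 -> 540 keeps every second row, so the spacing is even.
print(nearest_neighbor_rows(1080, 540, 6))

# 1080 -> 720 is a 1.5x ratio, so the step between picked rows alternates.
print(nearest_neighbor_rows(1080, 720, 6))
```

With 720p as the target, the gaps between picked rows alternate between one and two source rows, which is the uneven sampling I mean.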

-

I have to get to bed, but I appreciate you answering directly. I apologize again if I get testy... I get really grumpy at night when I'm tired. Since I have to get to bed, I wanted to get to the point quickly. I'll have more time to discuss this weekend. As regards your first response, I would agree that Nyquist states >2. Which means, in theory, any number >2. (In theory; practice is more complicated because there are other systems involved, including quantization and Bayer CFAs and OLPFs etc.) 3>2 and so 3 fulfills the theorem, but anything between 2 and 3 does, too. (I would agree that 3 is a particularly good choice for Bayer sensors because Bayer interpolation is just over 2/3X efficient.) I disagree that >2 samples will alias when you have sine waves in the input domain on a monochrome sensor, though. While you might see aliasing with a square wave (as we see with the FS100 chart), if you look at the examples of sine waves in the input domain I posted a few posts above (granted, they aren't the clearest; I can try to put together a clearer animation this weekend), a sine wave can approach phase cancellation and even possibly reach pure gray at exactly 2x (and will go in and out of phase depending on how the domains line up), but it will never alias. It might turn gray or extremely low contrast. It won't induce false detail (aliasing). I agree with you on the second point, completely. But while a high frequency square wave passing through an OLPF does eliminate the highest order harmonics, the OLPF isn't always going to be perfectly matched with the frequency of the pixel grid, nor is it a perfect low pass filter... it blurs all frequencies, so most manufacturers tend to make OLPFs a bit too weak. So, for academic purposes, it is worth discussing sine waves specifically and sensors without OLPF specifically because we don't introduce those extra variables. And they are variable.
Which is why I'm still confident that if we take a 4k zone plate and photograph it with a >4k center crop of a Foveon or monochrome sensor (with no OLPF) we'd get no aliasing at all. That said, I agree the 3X rule of thumb works well for Bayer sensors. The Alexa, for instance, samples 1620 pixels vertically to resolve 540 (1620/3) cycles, or 1080p vertical resolution.
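To make the sine wave point concrete, here's a minimal sketch that samples an ideal sinusoid on an evenly spaced grid. It's a 1D toy model with no OLPF, no quantization, and no Bayer CFA, and the function names and chosen rates are just assumptions for illustration.

```python
import math


def sample_sine(freq, sample_rate, phase, count):
    """Evenly spaced samples of sin(2*pi*freq*t + phase), t = n / sample_rate."""
    return [
        math.sin(2 * math.pi * freq * n / sample_rate + phase)
        for n in range(count)
    ]


def peak(samples):
    """Largest absolute sample value (zero means the signal grayed out)."""
    return max(abs(s) for s in samples)


# Exactly 2 samples per cycle: the result depends entirely on phase.
grayed_out = sample_sine(1.0, 2.0, 0.0, 64)          # every sample lands on a zero crossing
full_contrast = sample_sine(1.0, 2.0, math.pi / 2, 64)

# Slightly more than 2 samples per cycle: some trace always survives.
above_two = sample_sine(1.0, 2.5, 0.0, 64)

# Fewer than 2 samples per cycle: the samples match a lower frequency exactly.
under_sampled = sample_sine(1.0, 1.5, 0.3, 64)
impostor = sample_sine(-0.5, 1.5, 0.3, 64)           # 1.0 - 1.5 = -0.5 cycles

print(peak(grayed_out), peak(full_contrast), peak(above_two))
print(max(abs(a - b) for a, b in zip(under_sampled, impostor)))
```

In this toy model, sampling at exactly 2x out of phase gives flat gray rather than false detail. Sampling below 2x gives samples that are indistinguishable from a lower frequency, which is the aliasing case.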

-

Your model works fairly well in real world practice. I don't dispute that. Both of our models provide fairly similar results in most cases, actually. And no one disputes that oversampling is beneficial. I'm not arguing that 1/0.58X or 2x or 3x isn't a good rule of thumb for a sampling rate in a specific complex system. It's just that we still disagree how you get there. I have little doubt the programs you wrote work well. But the basic math you're doing is sketchy. I have to return to that. I've answered your questions (not always to your liking, but as honestly and analytically as I could). Why haven't you answered mine: Direct answers this time, please. Not "I don't care about the actual theorem because I have a rule of thumb I guessed on and seems to work most of the time." Lastly, if you're so evidence-focused, why did you cite 1000 LPPW as the FS100's resolution in support of your argument that it resolved about 1000 lines, and then when I pointed out it was 1000 LPPH you insisted that's what you meant all along but that there's aliasing (which both of our models predict, btw)? Evidence can be read subjectively. That's the problem. Math is simpler. Let's start with the math. We're debating the Nyquist theorem. Not the results. They speak for themselves. For the most part I agree with your statements on oversampling and its benefits. Just not how you got there. To me, that matters. Truth matters.

-

I apologize for any ad hominem attacks. That said, we’re aiming to discuss the Nyquist theorem, which explains how to sample cycles of sine waves in (discrete, evenly-spaced) samples without aliasing or phase cancellation. I’m starting my argument with the simplest variable possible: the frequency of a sinusoidal cycle. And then building my argument from there to the necessary result: the required frequency of the sampling system to record the input frequency with a recoverable trace and with no aliasing. You’re making interesting arguments, but frequently throwing wrenches in that do exist in the real world, but only factor into this discussion to confuse a very simple fundamental principle, from which we should build an understanding bottom up if we intend to delve further. You're throwing in square waves, Bayer interpolation, OLPFs, a rotated pixel grid, a bunch of confusing (and confused) blog posts, differences between digital downsampling methods, 0.58 for some reason, perceived and purported differences between two equally Nyquist-bound systems (digital and physical, and yes, there are differences in the downsampling methods and quantization if not the laws that govern them)... it's all obfuscating the point, and frankly points to little understanding of it. Yes, those factors matter in the real world. As I said, both of our models return fairly similar results in real-world practice. And yes, all the factors that are responsible for that result are beholden to the Nyquist theorem. Yes, I understand what you’re arguing each time. But before we get into calculus we have to understand that 2+2=4. So let's address the basics first... Your arguments don't change the fact that you’re ignoring the fundamental disagreement we have and that you aren't answering my questions. I've been consistently responding to yours, and repeatedly posting images that show how my model works and yet you've simply ignored them each time. 
It's disrespectful to me that I keep asking you to accept simple statements that should be givens and instead you post links to irrelevant and often misinformed blogs. Rather than dodging the questions, can you answer these two: Do you multiply by two (>two to promise a recoverable trace) once or twice per cycle (full sine wave, or line pair) the highest order sinusoidal signal to avoid aliasing when sampling in a Nyquist-bound system? Does Nyquist concern sine waves in the first place, or are the fundamental frequencies of square waves interchangeable in the theorem with sine waves? If they are, why are the higher order overtones irrelevant?

-

I haven't used an Alexa65 yet. But I agree fully with your 2x oversampling factor for Bayer sensors being ideal. If only so we can guarantee full raster in R and B. But that's obfuscating by adding additional factors (Bayer interpolation) instead of starting bottom up and following Gall's Law (the engineer's Bible, I always assumed?) to get to the root of the issue. Can we agree that:

-

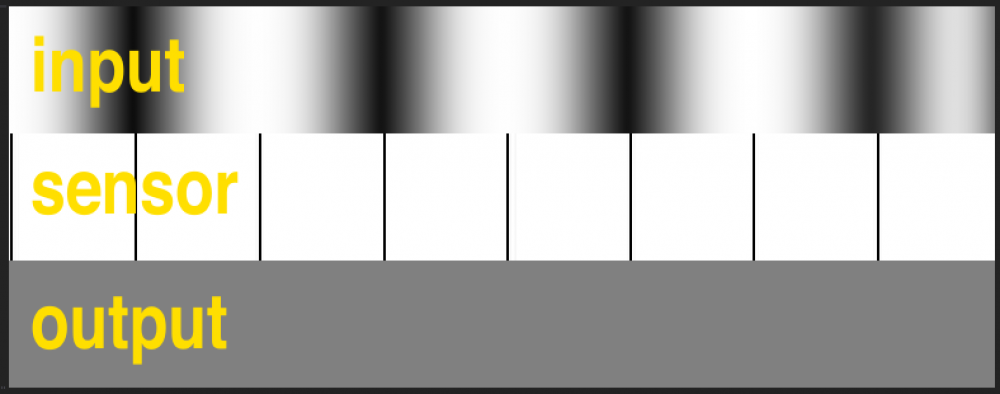

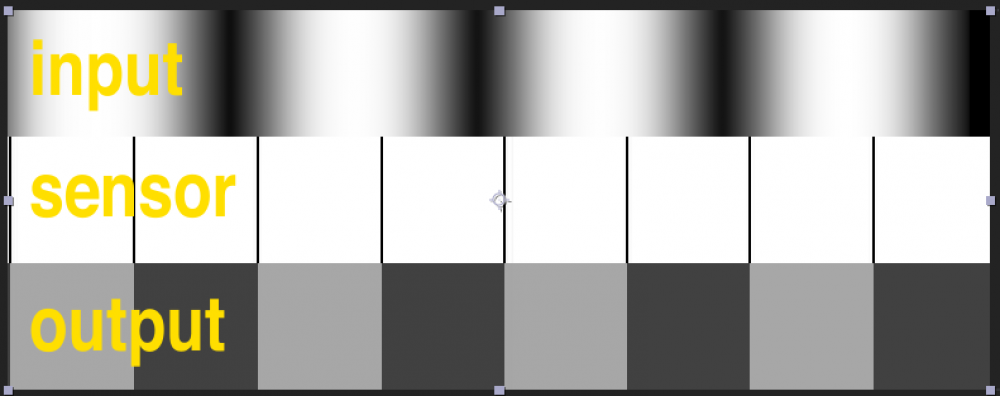

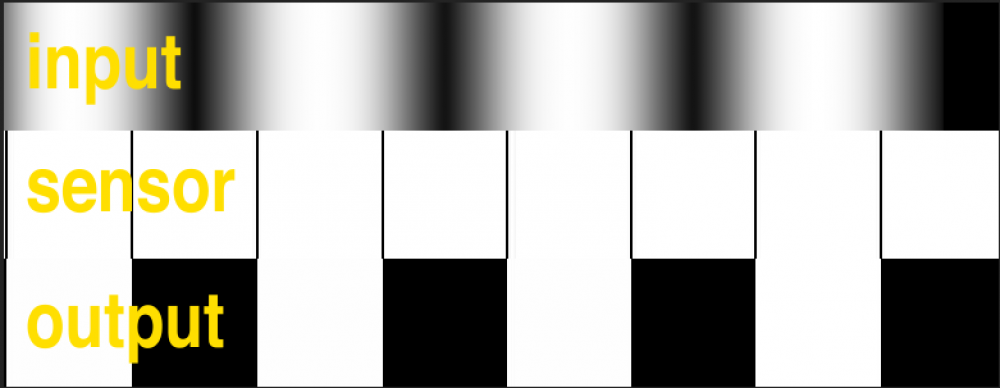

I totally agree about the confusing terminology being a big issue. There are so many technical committees trying to clarify resolution in obscure technical terms and marketing departments trying to obfuscate resolution in clear marketing terms that it’s a wonder anyone can agree on anything. Bloggers and academics who usually don't know what they’re talking about in the first place aren't helping either! But Graeme’s model actually doesn’t incite any aliasing, so long as we use sine waves, which he does, and which is what Nyquist definitively concerns. I hope my post above clarifies why using sine waves and not binary/square wave charts is essential if we're following the Nyquist theorem! See this example, which I posted before, modeled as a more accurate and relevant version of Alister Chapman’s claims: Yes, a sine wave signal can get grayed out almost completely if it is exactly out of phase. And no, you’re not going to get “tack sharp” black and white lines from a high frequency sine wave chart as you would with square waves (I fudged that a bit in the above graphic; the first chart should probably have a slightly lower contrast output). You’ll only get a reasonably sharp reproduction of it when it’s nearly exactly in phase. And a fuzzy near-gray one when it's not. But Nyquist concerns avoiding aliasing and maintaining a recoverable signal; it doesn’t say a thing about MTF or amplitude except that it doesn’t hit zero as long as you keep that ever-important “greater than” symbol intact. As I illustrate above, there’s no aliasing anywhere under Graeme’s model. The signal can get grayed out when it's out of phase and we're sampling at 2X exactly... But then the “greater than” symbol guarantees that at least a trace of the original signal is preserved, however faint. So while I agree that if you sample at twice the frequency and sample perfectly out of phase you will get a completely gray image... I maintain that it will still have no aliasing. (As Nyquist predicts.)
Yes, also with no signal. (As Nyquist predicts. We need that "greater than" sign to guarantee a signal.) But if you do sample at greater than twice the frequency you will guarantee at least a very faint trace of a signal. So if you're sampling sine waves, there won't be any aliasing so long as you sample at 2x the frequency. And there will be a recoverable signal so long as it's >2x the frequency. And yes, that's two samples per sine wave (line pair or cycle), not two samples per line (half of a sine wave or half of a cycle). (Admittedly, that faint trace might be obscured to zero by quantization error and read noise in real world use. Look at ultra-high ISO images–the resolution suffers! I never said oversampling wasn’t beneficial in real world use. Just that the math holds up with Graeme’s model. As I've illustrated, it does! No aliasing. Some trace of a signal.) I maintain that the “1000 line” portion of the chart is repeating in approximately single-pixel lines–and if you pixel peep, you'll see that it incontestably is. I also maintain that the FS100 chart specifically concerns vertical lines–and it does. That’s why it’s labeled as LPPH (line pairs per height) and why the frequency of lines is the same in both axes. The frequency of those lines would be stretched 1.78X horizontally to match the 16:9 aspect ratio if it were measuring each axis separately. It isn’t. We’re seeing roughly 1920X1080 of resolution out of the FS100 (as my model predicts). The resolution looks pretty similar to my eye on both axes. Do you really think this is a 1080X1080 pixel image? Or do both axes look roughly equally sharp? They do to me. It looks like a 1920X1080 image to me, equally sharp in each axis. But I have to admit that your second claim is incontestably true. There’s a very significant amount of aliasing on the FS100 chart at 1000 vertical lines. In both axes. I’m in full agreement there. I won’t deny what the image shows.
Only, I will contend that the aliasing is there mostly because it’s a square wave chart. As I keep repeating, there are infinite higher order harmonics on square wave/binary charts and Nyquist concerns the highest order frequency of the system. If it were a sine wave chart, it would be softer, but with no aliasing. If you have any doubt, read my post above this one, and you can see why a square wave chart is so sure to incite aliasing in these instances. With square waves of any fundamental, there are infinite higher order harmonics (at admittedly decreasing amplitude), and in real-world use, those are usually more than a standard OLPF will filter out at higher fundamental frequencies. I maintain, with complete confidence, that if they had used a sine wave plate, the results would be fuzzier in the higher frequencies, perhaps approaching gray mush at the 1000 LPPH wedge and certainly reaching total mush at 1200 LPPH... But there wouldn’t be any aliasing. Thus, fulfilling Nyquist. It is confusing. The model I suggest (not dividing by two the extra time, only using sine wave charts) and the model you suggest (dividing by two the additional time, using square wave charts) actually result in rather similar real-world results. But my model is mathematically sound and correlates closely with real-world examples. Yours divides by two an extra time, assumes harmonics don't count (of course they do), and predicts a fuzzier image than we see. Your computer graphics examples are interesting… but I don’t see how they’re relevant. Let’s focus on what's simple for now, rather than throwing obfuscating wrenches in as a way to distract from a very simple concept. (What's the frequency of a rotated sine wave anyway? Can you provide an equation for it? I'm guessing it might be something to do with root 2, but I don't know for sure. 
It's not the same as the original wave, or else the F65's sensor grid wouldn't be rotated 45 degrees to reduce aliasing, and you wouldn't be getting aliasing in your example.) Furthermore, those sine waves look suspiciously high contrast (like anti-aliased square waves, or with sharpening or contrast added beyond the quantization limit of the system). Whether that's the case or not, I suspect you introduced this example just to confuse things and deflect your uncertainty. It complicates rather than clarifies. Not the goal. Let's focus on simple examples and take a bottom up approach, rather than throwing in extra variables to obfuscate (that's what marketing departments do; engineers follow Gall's Law). Furthermore, both real life and computer graphics are Nyquist-bound in the same way. Both are sampling systems. So choosing different oversampling factors for each seems... very wrong. Unfortunately I’m busy with work for the rest of the week and won’t be able to return to this discussion until the weekend. But I would be interested in continuing it and will have time to then. Before then, can we agree on two things? They shouldn't be controversial: Nyquist concerns the highest frequency sine wave in the system. Not the fundamental frequency of square waves. Square waves are of infinite frequency at their rise and fall and thus their highest frequency as concerns Nyquist isn't their fundamental, but whatever the highest frequency is that the OLPF lets through. See my prior post. Simply stated, let's talk sine waves, not square waves. Or at least accept that square waves are of infinite frequency at their rise and fall, or are at the frequency cut off of the OLPF. Nyquist theorem concerns sine waves by definition. Can we at least agree on this? It should be a given in this discussion. Secondly, if we take a 4k sinusoidal zone plate and photograph it with a 4k center crop of a Foveon sensor and there’s no aliasing horizontally or vertically, my model is correct.
Can we agree on that? (I believe you stated this as your condition for agreeing with my model earlier, so this shouldn't be controversial.)

-

Here's an interesting layman's description of higher order harmonics in square waves (one that even I could understand and I'm not very good at math): http://www.slack.net/~ant/bl-synth/4.harmonics.html Let's agree on a common definition of Nyquist if we can. I propose this one, though I want to correct one minor error in it: https://www.its.bldrdoc.gov/fs-1037/dir-025/_3621.htm (even there they publish an error; it should not read equal to or greater than; it is equal to if you want to guarantee no aliasing, but must be greater than to preserve a recoverable signal, however potentially faint, because otherwise the frequencies can theoretically be aligned exactly out of phase, resulting in a gray signal) So, looking at the highest frequency components of a system: If we have a 1hz sine wave then we need >2 samples per second to represent it. (>2 samples per cycle) But what if we have a 1hz sine wave with a 3hz overtone at 1/3 the amplitude? We need >6 samples per second. (3hz is the highest order frequency; >2X3=>6) What if we have a 1hz sine wave with a 3hz overtone playing at 1/3 the amplitude and a 5hz overtone playing at 1/5 the amplitude? We now need >10 samples. How about 1hz + 3hz (1/3 volume) + 5hz (1/5 volume) + 7hz (1/7th volume)? >14 samples... How about 1hz + 3hz (1/3 volume) + 5hz (1/5 volume) + 7hz (1/7th volume) + 9hz (1/9th the volume)? >18 samples... How about 1hz + 3hz (1/3 volume) + 5hz (1/5 volume) + 7hz (1/7th volume) + 9hz (1/9th the volume)..... We need infinite samples, because we've hit an infinite frequency (granted, at an infinitely low amplitude). We've also just constructed a 1hz square wave. Hence my contention that we must discuss sine waves, not square waves. I contend that the effective frequency of a square wave is infinite, at least as concerns Nyquist.
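The harmonic build-up above can be sketched in a few lines. This uses the standard odd-harmonic series for a square wave (1/n amplitudes, overall scale left unnormalized), and the helper names are my own. Treat it as an illustration of the counting argument and not as a full signal model.

```python
import math


def highest_harmonic_hz(fundamental_hz, num_harmonics):
    """Frequency of the last odd harmonic when summing 1st, 3rd, 5th, ..."""
    return (2 * num_harmonics - 1) * fundamental_hz


def nyquist_limit(fundamental_hz, num_harmonics):
    """Sample rate that must be exceeded: twice the highest harmonic."""
    return 2 * highest_harmonic_hz(fundamental_hz, num_harmonics)


def square_wave_partial(t, fundamental_hz, num_harmonics):
    """Partial sum of sin(2*pi*n*f*t)/n over odd n (unnormalized square wave)."""
    total = 0.0
    for k in range(num_harmonics):
        n = 2 * k + 1
        total += math.sin(2 * math.pi * n * fundamental_hz * t) / n
    return total


# 1hz alone, then adding 3hz, 5hz, 7hz, 9hz overtones
for count in range(1, 6):
    print(count, "harmonic(s): need more than", nyquist_limit(1, count), "samples per second")

# The partial sums flatten toward a constant plateau (pi/4 before normalizing)
print(square_wave_partial(0.25, 1, 2000), math.pi / 4)
```

Each added overtone raises the limit by another 4 samples per second, and the series never ends. That's why I say the requirement is unbounded for an ideal square wave.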

-

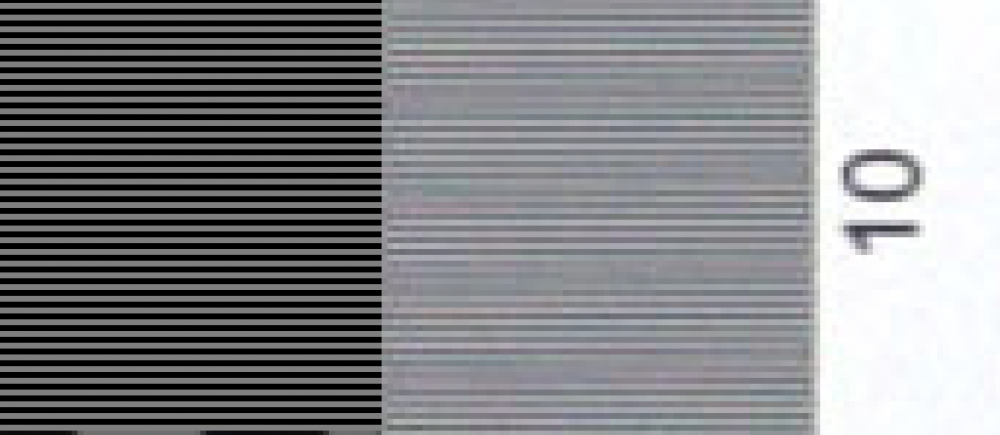

As I mentioned above, that FS100 chart specifically says lines per picture height (LPPH). Yes (I think) in the SDTV days horizontal lines of resolution was the standard measure (LoHR) but that chart SPECIFICALLY says lines per picture height. So does the graph you posted above state 540 vertical line pairs from the F3 (aka 1080 lines, aka full 1080p resolution). Just out of curiosity, I aligned the 1000 line chip in that FS100 chart you posted with a set of alternating one-pixel width lines. They should line up closely but not perfectly (1000 lines vs 1080 lines). And they do: Clearly this image shows that the chart represents alternating lines of approximately 1 pixel frequency on the sensor. Yes there's some aliasing, which is expected. Yes it's approximate (1000 vs 1080p and not perfectly aligned). But it's clear that these lines are of closer to one-pixel height than two-pixel height. Thus, we're seeing about 1000 lines per picture height, as the chart plainly reads (LPPH). Which would mean the camera is resolving close to full 1080p resolution, not half that. Just as my model would predict! I do agree with you that the OLPF gets rid of most of the higher order harmonics, but we both know very well it doesn't get rid of all of them. OLPFs are not always strong enough (another argument for over-sampling). That's why I suggested a center crop from a Foveon sensor (full raster RGB with no OLPF) and a 4k sine zone plate as a good real-world test case without the messy variables of Bayer interpolation and an OLPF. As for why Graeme's explanation is not more widely-circulated online, I don't know. I'm genuinely a bit confused about that. But I've learned not to trust everything I read posted by armchair experts on blogs.

-

Sorry again about being pissy yesterday, I was having a rough day for unrelated reasons. Anyhow, a few things: 1) That chart still looks like square waves to me, so you will see more aliasing but also more resolution being resolved (false detail). Can we at least agree on using a sinusoidal plate, as I asked above? I keep coming back to your insistence on using binary plates being the biggest source of your misunderstanding. Let's address that first. Can we agree on using a sinusoidal zone plate? (Read Graeme's post again where he discusses why a sine wave plate correlates properly with Nyquist, although I've mentioned why a dozen times before: square waves have higher frequency overtones and Nyquist concerns the highest frequency.) As I said, I'd buy a sine plate, because I am curious to try this on my own, but they're really expensive! If I could rent one I would give it a try, but I'm not inclined to spend $700 to prove what I already know: https://www.bhphotovideo.com/c/product/979167-REG/dsc_labs_szs_sinezone_test_chart_for.html (Plus proper lighting and a stand... anyhow, more than I'd like to spend.) 2) I agree, that chart shows that the camera resolves about 1000 lines per picture height. Yes, tv lines are normally measured in picture width unless otherwise specified, or at least that's what I grew up thinking during the SDTV days, but that chart clearly specifies lines per picture height. The confusing thing is that there is some minor and expected aliasing due to the test chart's lines being binary (square waves). If we wanted a truer result, as I mentioned above, we'd use a sinusoidal zone plate, and we should see a softer image, perhaps mostly gray mush if the sensor and chart are out of phase, but without even a trace of aliasing at 1000 LPPH, no matter how the two are aligned–exactly as the Nyquist theorem predicts. 3) That article on sensor design doesn't even mention Nyquist. 
What it mentions is that Bayer sensors are only about 70-80% efficient in each axis because of the Bayer CFA and interpolation. No one disagrees with this. Assuming a Bayer sensor is 70% efficient (0.7X) in each axis, and given that 0.7 X 0.7 is about 0.5, we can indeed say a Bayer sensor resolves about "half the megapixel count," or a little better if the algorithm is more efficient, as recent ones are. (It's an old article.) Just as the article states. And that's consistent with Red's claims of "3.2k" of real resolution for a 4k chip, which would be 80% linear efficiency or 64% of the stated megapixels. All of that is due to Bayer interpolation. Your claim is that a monochrome sensor resolves about a quarter the megapixel count (0.5 X 0.5), which does not follow from anything mentioned in that article.
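The arithmetic in point 3 is easy to check. A minimal sketch, with a made-up helper name, that just squares the per-axis efficiency to get the fraction of the stated pixel count:

```python
def effective_pixel_fraction(linear_efficiency):
    """Fraction of the stated pixel count resolved when each axis
    resolves `linear_efficiency` of its nominal resolution."""
    return linear_efficiency * linear_efficiency

cases = [
    ("Bayer, 70% per axis", 0.7),
    ("Bayer, 80% per axis (the '3.2k from 4k' figure)", 0.8),
    ("the disputed 50% per axis claim", 0.5),
]
for label, eff in cases:
    print(f"{label}: {effective_pixel_fraction(eff):.2f} of the stated megapixels")

# Linear resolution of a nominal 4000-pixel-wide chip at 80% efficiency
print(f"4000 px wide at 80%: {4000 * 0.8:.0f} px")
```

So 70% per axis gives roughly half the megapixels, 80% gives 64%, and only a 50% per-axis figure would get you down to a quarter.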

-

I'll look into it. I actually would be curious to give this a try and I apologize for getting worked up earlier, it's been a rough week, and that's made me a little touchy. I'm as convinced as ever I'm right, but I do feel foolish for getting heated. Anytime anyone mentions money online it's as dumb as mentioning Hitler or resorting to ad hominem attacks. Pointless escalation. My bad on escalating that one. Maybe I was just trying to add a little more to my down payment. But that wouldn't be fair. Now that I see Beverly Hills in your profile, I'm starting to think you could escalate the wager a lot higher than I could without suffering the consequences, but it's still just a shitty way of escalating an argument. Sorry about all that. That said, I'll see if I can rent a 4k zone plate or visit Red headquarters or something. I don't want to spend hundreds of dollars to win an argument online when I only have to prove something to you (those actually designing sensors are already following my model), but on the other hand I'm curious. Can we agree to use a sine wave plate rather than a square wave plate? And can we agree that a blurry image doesn't count as aliasing, only false detail does, and that slight false detail specifically from sharpening isn't aliasing, either? The real difficulty is that the best thing I have is a Foveon camera (don't own an M monochrome or anything) and Sigma's zero sharpening setting still has some sharpening, and one-pixel radius sharpening looks like slight false detail at one pixel. So the result will be a little funky due to real world variables. But I still contend that the result will correlate far more closely with my model: a properly framed 4k sinusoidal zone plate won't exhibit significant aliasing when shot with the 4k crop portion of a Foveon camera, even if the full resolution isn't clearly resolved when the two are out of phase. 
But we have to go with a sinusoidal zone plate (which is unfortunately the really expensive and scarce one; binary is cheaper and far more common) and recognize that if it's fully out of phase the result will be near-gray. That aside, I would be genuinely curious to put a 4k zone plate in front of a 4k Foveon crop. But we'd have to agree on SINUSOIDAL lines (halves of full sinusoidal cycles). Even if it's just a gentleman's bet. Let's agree on a sinusoidal zone plate first. And I apologize again for getting worked up. That was really childish of me. It's been a bad week and I'm sorry about that.
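To illustrate the "near-gray when fully out of phase" point, here is a rough sketch of a sine pattern sampled at exactly two samples per cycle (one sample per line, i.e. a 4k-line plate on a 4k-wide crop). It assumes ideal point sampling, which is a simplification: real pixels integrate light over their area, and the function names are just for this example.

```python
import math

def sample_sine_two_per_cycle(phase, n_samples=8):
    """Point-sample a 0..1 sine pattern at exactly 2 samples per cycle.
    `phase` (radians) is the offset between the pattern and the sample grid."""
    return [0.5 + 0.5 * math.sin(math.pi * n + phase) for n in range(n_samples)]

def contrast(samples):
    """Difference between the brightest and darkest sample."""
    return max(samples) - min(samples)

for label, phase in [("in phase", math.pi / 2),
                     ("halfway", math.pi / 4),
                     ("fully out of phase", 0.0)]:
    s = sample_sine_two_per_cycle(phase)
    print(f"{label:>18}: contrast {contrast(s):.3f}, "
          f"samples {[round(v, 3) for v in s[:4]]}")
```

The contrast drops from full to zero depending only on alignment, and at every phase the samples still alternate at the true line frequency or go flat gray. Under these assumptions there is no lower-frequency false pattern, only lost contrast.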