Posts: 1,839

Everything posted by jcs

-

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

That's right especially if you're doing it wrong and you know it! -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Mattias, you are so close, if you set ISO correctly you've got equivalence down. What's the purpose of your videos? Is it to create drama or controversy to get views and negative engagement by doing things wrong on purpose, or is it to share your work, to help people make decisions and to help them learn how to shoot? Why not demonstrate equivalence correctly so other people can learn too? -

An example of excellent RED skintones and overall color. Not up to ARRI (especially highlights), however very saturated, pleasing color that works well for the story. If you're into martial arts in a dystopian future with excellent acting (for the most part :)), great camera work, and cool music, check it out. Shane Hurlbut is DP; he also sells tutorials based on camera/lighting for the show. Warning: don't start watching if you have anything important to do, it's pretty awesome!

-

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

The term 'equivalence' is just a tool to match cameras and lenses, not lenses by themselves. Once the concept is understood, sure, for stills you can use shutter, ND, and ISO as needed to match EV (for film/video need to match shutter). -

'Light at end of the day' is Golden Hour. That's low color temp (orange/golden), super-diffuse light. Is this for a separate shot? That's the opposite of a point light source (hard light). If they want hard orange light, that's easy- use a point source and set WB (or change in post).

-

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

It's physics and math. Multiplying ISO by crop factor squared works, you can see for yourself, why won't you try it? What were the two cameras you used for your 'equivalence' test? Did you watch the video, specifically at 4:02? My understanding was no focal reducer was used. The cameras were set up for equivalence: focal length and aperture, but not ISO (which is the error and reason the FF image is darker. Setting the ISO correctly will match apparent brightness). Again, the formula is multiply ISO by crop factor squared (each f-stop lets in 1/2 the amount of light, that's why ISO must be multiplied by the crop factor squared- to compensate for the loss in light). It works- how can you deny the real-world result? -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

In the video you stated 'equivalence', which means you try to set both cameras to match per the equivalence math, right? Are you saying that the full frame camera you used couldn't set the ISO ~4x what you set the M43 camera? What were the two cameras? -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Referring to the Equivalence test here at 4:02: For example, if the crop factor is 2 and the ISO for the crop camera is 800, the FF camera should be set to 2^2 * 800 = 4 * 800 = ISO 3200. Since that wasn't done, the full frame image is darker. Mattias states he doesn't believe in equivalence (including in the video), then does the test wrong- what's the point? -
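The ISO arithmetic above can be sketched in a few lines of Python. This is only an illustration of the formula from the post; the function names `equivalent_iso` and `stops_lost` are made up for this example. It assumes the f-number on the full frame camera has been multiplied by the crop factor, so the light reaching the sensor per unit area drops by the crop factor squared.

```python
import math


def equivalent_iso(crop_iso: float, crop_factor: float) -> float:
    """ISO to set on the full frame camera to match the crop camera's brightness."""
    return crop_iso * crop_factor ** 2


def stops_lost(crop_factor: float) -> float:
    """Stops of light lost when the f-number is multiplied by the crop factor.

    Light per unit area scales with 1 / f_number**2, so multiplying the
    f-number by the crop factor costs 2 * log2(crop_factor) stops.
    """
    return 2 * math.log2(crop_factor)


print(equivalent_iso(800, 2))  # 3200
print(stops_lost(2))           # 2.0
```

With a crop factor of 2 the aperture change costs two stops, and two stops of ISO is a factor of 4, which is where the ISO 3200 figure comes from.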

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Why didn't you adjust the ISO for full frame with the crop factor squared? That's required math and physics for equivalence. If you do that brightness will match. Good points showing improved image quality with the adapter. -

The difference between hard and soft lighting is simple: diffusion, or not, and how much (shadow edges hard or soft/diffuse). A point light source is a perfect hard light with little or no visible diffusion: very crisp shadow edges. Being inside a glowing perfect sphere is perfect diffusion (actually, if the sphere was filled with glowing plasma, that would eliminate all shadows :)). A formula: a single point source is hard, add more sources to get more diffusion (or bounce/shoot-through (fabric etc.) to make a larger source), also known as increasing light wrap. http://www.canvaspress.com/focal-point/article/2014/06/19/hard-light-vs-soft-light/

-

It's all relative, right? Aputure Lightstorms, for example are in the high end of consumer/prosumer lights. While there are now lots of good options for LED panels at all price ranges, I don't see many options for high CRI LED Fresnels (I have one Fiilex P360EX with a drop in Fresnel. Would love to see some variable Fresnels with more light output in the same size or slightly larger and priced to compete with Aputure (perhaps best bang for buck re:CRI and cost)). A powerful LED Fresnel is a lot more versatile than flat LED panels (beam width and modifiers, everything from tight hard light to large area soft diffusion).

-

While I've seen RED and Sony content that looks great and with excellent skintones, many times the color and highlights don't look very good (looked up on IMDB etc. to see what camera was used). ARRI just looks more pleasing, and I figure that it's also creating footage that's easier to make look good with less effort. For what we do (pretty basic stuff at this point), the C300 II does everything we need including creating skintones that need little or no work in post when lit well. The A7S II for example can also do good skintones, but needs work in post. I think ARRI's experience with digital film scanners has given them great insight into building a camera system that produces film-like capture which ends up being very easy to make look good in post, and thus why most of the ARRI content looks really good. Guessing that ARRI will announce a true 4K(+) (Super35) camera at NAB.

-

12. Use FOV/focal length for dramatic effect: a wide angle lens up close to the actor is distorted and 'crazy', a long lens from far away to give compressed isolation, etc.

-

Here's a basic formula: When possible, take away all the light and carefully add light tuned for the story and emotion of the scene. Look at how often everything is dark, or overall not very bright in classic films. Paint with light. There are tons of books on this subject. Shoot at night and wet down the street. Set the frame rate to 24 (or 23.976), shutter 1/48 (or 1/50). That's for normal shots, you can go all over the place for emotional effect of the scene. Protect highlights so they don't clip and pay careful attention to exposure to keep skin tones in the sweet spot for your camera. Study film behavior for highlights and adjust your look in post to match the highlight and color response of film. Film generally never gets super white. Use a diffusion filter of some kind. Blur a little in post if necessary and add full resolution film grain (blur may not be needed if diffusion is used). Make sure there are no digital artifacts such as aliasing or Moire. Use depth of field to help tell the story by helping the viewer focus on what's important in the scene. If your camera has rolling shutter, make sure to move the camera in a way to minimize it. Try to make the scene a little 'unreal', in a way you would not see in normal everyday life. Like in a dream.

-

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

@tupp the equivalence equations and test images don't claim to be pixel perfect, only a tool to set up cameras and lenses as equivalent as possible. You've looked at them all and proclaimed, "ha HA! The two images aren't perfect so it's invalid!", right? Then when shown pixel perfect computer simulations (which can in fact model any defect/transfer functions you'd like) you proclaimed, "simulations aren't reality so it's invalid!". I was providing information I thought would be helpful. If it doesn't work for you, no worries. If anyone can show that any specific lens has special properties only available for the format the lens was designed for, I look forward to seeing the results. -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

@tupp I know you say you're serious however I've been saying it's the optics, and only the optics for this entire thread (this is a quote of what I wrote from your post quoting me): Are you even reading my posts lol? So now we're in agreement that it's not sensor size, great! Let's get on with debunking the idea that lenses designed for a certain sensor size format have special properties not present in lenses designed for other formats. You're going to need a large format camera vs. Super 16 in order to demonstrate the effect you propose is real? Why not use a cellphone vs. the World's Largest Camera? Yes I'm joking, to demonstrate the absurdity of all this. There are no significant looks or special properties for lenses designed for a specific format either. With the lens somewhat close to the subject I suppose size could matter, however there are full frame lenses bigger than medium format lenses so that's not it. What is it about medium format lenses (or large format lenses) that make them produce a unique look only available to those lenses? Can you show us examples demonstrating these unique qualities? That also means strapping a focal reducer to a medium format lens captures these special properties and makes them available to a full frame sensor? Any examples to share? (swirly bokeh as shown in this thread is also available with full frame lenses). As with the sub-debate with @tupp, I've been saying it's the optics, and only the optics, for this entire thread. The debate was sensor size having an effect, or not. Now the debate has moved to whether lenses designed for a specific format have some kind of unique properties only available to those lenses. What are these special properties, and where is the proof supporting this claim? -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Regarding the comments on perspective, in computer graphics, perspective projection in its simplest form is:

    s = 1.0;
    o = ObjectPoints(i) // the object in this example is a bunch of 3D points
    p.x = o.x*s/o.z
    p.y = o.y*s/o.z

You can see that if we increase s, the object will appear bigger on screen, and the FOV will get narrower (same as increasing the focal length on a camera) and we'll 'compress' the image (less distortion). If we move the object closer to the camera (make o.z smaller), we have to reduce s (same as lowering the focal length) if we want the object to be roughly the same size on screen. This increases the FOV and increases perspective distortion because each o.z has a greater effect (in Orthographic projection, we just drop o.z and don't divide at all- zero distortion). If we move away from the camera, we must increase s to keep it about the same size on screen, and this reduces perspective distortion and 'compresses' the image. -
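Here is a small runnable Python sketch of the projection described above, for anyone who wants to try the numbers. The helper names (`project`, `near_far_ratio`) and the sample points are assumptions made for this illustration; only the divide-by-z formula comes from the post. It compares how much bigger a near point appears than a far point, first close up with a small s, then from further away with a larger s chosen so the near point stays the same size on screen.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def project(points: List[Point3], s: float = 1.0) -> List[Point2]:
    """Simplest perspective projection: scale by s and divide by depth z."""
    return [(x * s / z, y * s / z) for x, y, z in points]


def near_far_ratio(points: List[Point3], s: float) -> float:
    """On-screen x of the first (near) point divided by that of the second (far) point."""
    (near_x, _), (far_x, _) = project(points, s)
    return near_x / far_x


# Two points with the same real-world offset (x = 1), two units apart in depth.
close_up = [(1.0, 0.0, 2.0), (1.0, 0.0, 4.0)]    # camera near the object, s = 1 ("wide")
far_away = [(1.0, 0.0, 8.0), (1.0, 0.0, 10.0)]   # camera moved back 6 units, s = 4 ("long")

# The near point lands at x = 0.5 on screen in both setups.
print(project(close_up, 1.0)[0], project(far_away, 4.0)[0])

# Close up, the near point is twice the size of the far one; from far away
# the ratio drops to roughly 1.25, which is the 'compressed' look.
print(near_far_ratio(close_up, 1.0), near_far_ratio(far_away, 4.0))
```

The object fills the same part of the frame in both cases, but the depth differences inside it matter much less from far away, which is all that 'compression' is.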

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

70mm film (actually 65mm, 70mm is marketing) provides a lot more resolution, so the projected image can be much bigger. Nothing special about the look other than marketing :P I watched Interstellar in 70mm and it was kind of a mess (Chinese theater in Hollywood), with lots of blurry shots and overall not very sharp (+ sound was way too high and distorted). The 35mm shots were glaringly too sharp when they cut in. A while later I watched Interstellar again in 4K digital (AMC Century City) and it looked more consistent (maybe it was a new edit- they had fixed the sound problem). 70mm is a marketing term, generally telling us to expect a giant, high resolution screen (they may imply a special magical look, however that's just marketing going after your money). The ARRI 65 is 3 ALEV III sensors rotated 90 degrees (A3X). This also provides a major boost in resolution. The ARRI look is at least partly due to the ALEV III sensor, and making one higher resolution with smaller pixels while maintaining the same look is likely challenging (though they may have solved it by NAB for a (true) 4K+ Alexa announcement). -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

'Look' works as it captures any possible effect at all. The argument has been: does sensor size, by itself, create any specific visual effect or look, whatsoever, or not. Do lenses made for different formats have any special characteristics related to the intended capture format? One could argue size, however some full frame lenses are bigger than some medium format lenses. I had asked Brian Caldwell if he'd be making a medium format Speed Booster and he said no. There are now many very high quality full frame lenses and medium format lenses have no unique properties, so there was no point. A lens is defined by its optical transfer function, that's it. In this thread we learned that some medium format lenses can be found for very low cost. Combined with a focal reducer for full frame bodies that provides a cost effective way to get shallow depth of field, swirly bokeh, or other desired artistic looks. That's cool and useful info, thanks again @Mattias Burling! In summary, what we have been discussing is the notion that any format has any special and unique look or characteristic: 'full frame look' and 'medium format look' really mean a 'shallow depth of field look' or in some cases 'swirly bokeh' or other lens artifacts, which aren't specific to any sensor size or any lenses designed for a specific format. -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Threads go all over the place with many sub ideas. The people I engaged in this debate were talking about exactly the same thing, sensor size having a real and specific look, or not. Is the 'full frame look' or 'medium format look' real, or not. -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

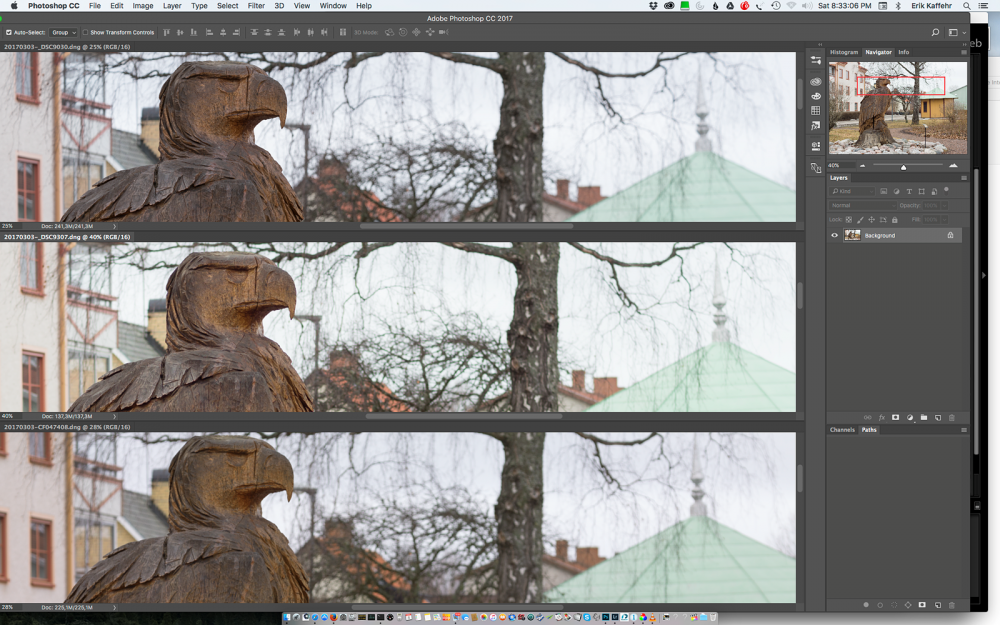

We set everything up as equivalent as possible so we can see if there is a real effect, or not. In this case we're testing to see if sensor size alone creates a specific look, or not. For example, the recent image where 3 formats are compared with the cameras set up for equivalence, there is no specific look or looks which can be attributed to sensor size. That's the point of all these tests and debates. -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Very specifically, does sensor size create a specific look regardless of any other variable, or not. Equivalence is a useful tool to help answer this question. -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

The debate has been sensor size and look. That's it. Folks added other elements such as lens effects which were never contradicted. Haha it's all good :) -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

Yeah it's been pretty civil this time around and only a few holdouts left regarding sensor size alone having any special look. Feels kinda like being on a hung jury- we're so close! Is there a prize for being the first forum on the internet to agree on this subject? -

My thoughts on the Kipon Medium Format "Speedbooster"

jcs replied to Mattias Burling's topic in Cameras

The concept of equivalence is a technical one, math and physics. The perception of specific looks is an artistic one. Here's APS-C, full frame, and medium format compared with equivalent settings (thanks @BTM_Pix, from https://***URL removed***/forums/thread/4125975):

Are the 3 shots pixel perfect? That's not possible 'in the real world' (via simulation it is easy if we don't simulate truly random noise). Consider that if you take two consecutive shots 'in the real world' with the same camera and lens and change nothing, the two shots can't be pixel perfect because of noise alone. The Earth rotates, clouds move, wind blows, etc. However as the photographer who did the test states, the images look more alike than different, and share the same look or character.

The argument has been that sensor size alone gives rise to special looks, such as 'the full frame look', 'the medium format look', and 'the large format look', that are specific and real, where the sensor size alone gives images a unique, identifiable character and specific look. The actual looks or characters that people are really referring to are:

1. Shallow depth of field
2. Lens artifacts, including bokeh 'style', highlight behavior, contrast, sharpness, color, distortion, defects, etc., as the lens is a kind of optical transfer function or filter, ranging from clinical and accurate such as Zeiss, to something wild like a Helios or Cyclop

That's it, there is nothing more. Even with different cameras and lenses, when set up for equivalence, as in the above example, the average person can't tell which camera shot which image as they all have a very similar look or character when using lenses with similar characteristics. The fact that a focal reducer (Speed Booster) works as expected should be sufficient evidence that sensor size is not responsible for any specific look or character. Photography and filmmaking are very technical forms of creating art.

Everyone on this forum has the ability to do the simple math and set up their cameras for equivalence. To get the full frame camera equivalent to the crop sensor camera:

1. Multiply the focal length of the lens by the crop factor
2. Multiply the aperture by the crop factor
3. Multiply the ISO by the crop factor squared

Example from my website http://brightland.com/w/the-full-frame-look-is-a-myth-heres-how-to-prove-it-for-yourself/:

Let’s do one using the A7S in FF and APS-C (Super 35) crop mode. The crop factor is 1.5. We’ll set up the camera as follows using the Canon 70-200 F2.8L II and the Metabones IV Smart Adapter:

Super 35 (APS-C mode on): 70mm, F2.8, ISO 800
Full frame (APS-C mode off): 70mm*1.5 = 105mm, F2.8*1.5 = F4.2, we’ll use F4, ISO 800*(1.5*1.5) = 1800, we’ll use ISO 1600

Can you tell which is full frame and which is crop without cheating or using a blink test? At the pixel level the images are different, however the overall look or character is considered the same.

Here's a friendly challenge to @tupp, @Mattias Burling, @Andrew Reid, and anyone else who feels that each format has a specific look which can be characterized:

1. Do your own equivalence tests
2. Share the results online
3. Do not label the images or filenames (so people can't cheat)
4. See if anyone can identify which images are what format, and what are the specific characteristics which allow them to tell the formats apart

If anyone needs assistance with the math or settings I can help and I'm sure there are others here who can as well. Remember I used to believe in the full frame look too until I did these tests. Anyone wanting to continue arguing without doing these tests for themselves is either lazy, blocked by their ego, or just enjoys arguing. Nothing wrong with being any of those things, it's part of being human, however we won't be able to take you seriously in this scientific debate.
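For anyone who would rather not do the equivalence math by hand, here is a minimal Python sketch of the three multiplication rules. The `Settings` class and `full_frame_equivalent` function are hypothetical names invented for this example. It returns the exact computed values; picking the nearest setting the camera actually offers (F4 and ISO 1600 in the A7S example) is still done by eye.

```python
from dataclasses import dataclass


@dataclass
class Settings:
    focal_length_mm: float
    f_number: float
    iso: float


def full_frame_equivalent(crop: Settings, crop_factor: float) -> Settings:
    """Apply the three equivalence rules to crop-camera settings."""
    return Settings(
        focal_length_mm=crop.focal_length_mm * crop_factor,  # same field of view
        f_number=crop.f_number * crop_factor,                # same depth of field
        iso=crop.iso * crop_factor ** 2,                     # same apparent brightness
    )


# The A7S example: Super 35 mode at 70mm, F2.8, ISO 800, crop factor 1.5.
super35 = Settings(focal_length_mm=70, f_number=2.8, iso=800)
ff = full_frame_equivalent(super35, crop_factor=1.5)

# Rounded for display: 105mm, about F4.2, ISO 1800.
print(f"{ff.focal_length_mm:.0f}mm, F{ff.f_number:.1f}, ISO {ff.iso:.0f}")
```

Going the other way (full frame settings to crop) is the same thing with division instead of multiplication.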