Posts posted by androidlad

-

Yes I invented this 12K sensor, which is precisely the same size as RED Komodo at 27.03mm x 14.25mm, and exactly double the horizontal and vertical resolution.

And who made the RED Komodo sensor?? 😉

Ok fine, this 12K sensor is a custom version of Canon's 120MXSC, operating in an ROI mode https://canon-cmos-sensors.com/canon-120mxs-cmos-sensor/

-

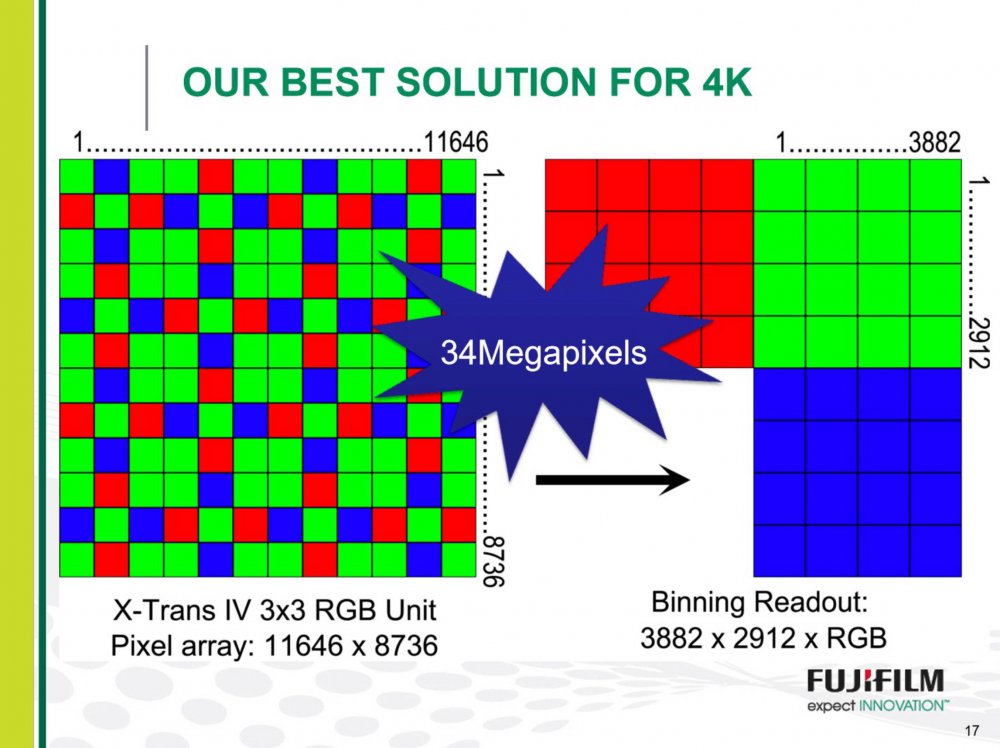

I can confirm that 4K HFR modes (50 - 120p) are achieved using 2 x 2 pixel binning. It's a relatively elegant solution because there's no line-skipping involved. The image will be very soft with lower moire and lower noise compared to other subsampling methods.

-

Free download of F-log ACES IDT DCTL for use in Resolve:

https://blog.dehancer.com/articles/fuji-f-log-aces-idt-for-davinci-resolve-download-dctl/

-

R5/R6 both have 10bit internal recording.

BTW the new look of the forum, especially the new font, is obnoxious.

-

The actual resolution in pixels is 2048 x 1536, versus the current high-end EVF with 1600 x 1200.

-

4 hours ago, Andrew Reid said:

What 100MP quad bayer capable sensor were Fuji referring to in this document (if not the GFX 100)?

It doesn't look like the GFX 100 actually employs this readout method.

So is this something they are saving for a future camera?

That's their 100MP X-Trans project that has since been shelved.

-

4 hours ago, Caleb Genheimer said:

The complete non-starter issue for me on all these external RAW updates over HDMI is the fact that it’s always C4K or 16:9. What’s the heckin point of a “medium format” or “full frame” RAW camera if I can’t even use the FULL frame?

#anamorphicproblems

Seriously though, I want to use the full sensor height. Anything else is just infuriating. I assume it’s some limitation of the HDMI hardware, either the camera’s output, the signal format, or the Atomos’ input, but that doesn’t make it less frustrating.

When there’s a camera that can shoot RAW and be speedboosted into IMAX 15/70 territory, I’ll be there with bells on. Until then, my GH5S and Blackmagic Pocket 4K will tide me over just fine.

It requires far more aggressive line-skipping to read out the full height of the sensor, which is 8736 pixels.

Currently the GFX100 uses 2/3 vertical subsampling to derive a 4352-pixel-high Bayer image from a 6528-pixel height, and that already saturates the maximum readout time of 32ms required to achieve a 30fps video frame rate.
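
To put rough numbers on that, here is a minimal Python sketch using only the figures quoted above. It assumes readout time scales linearly with the number of lines read, which is a simplification and not a published spec; the function names are made up for illustration.

```python
# Figures quoted in the post above.
FRAME_BUDGET_MS = 32.0   # maximum readout time for 30fps video
LINES_READ_NOW = 4352    # lines read out in the current video mode
CROP_HEIGHT = 6528       # sensor rows covered by the current video mode
FULL_HEIGHT = 8736       # full sensor height in pixels


def subsample_ratio(lines_read, rows_covered):
    """Fraction of the covered sensor rows that are actually read out."""
    return lines_read / rows_covered


def max_ratio_for_height(rows_covered, line_budget=LINES_READ_NOW):
    """Largest vertical subsampling ratio that stays within the line budget.

    Assumption: readout time is proportional to lines read, so the 32ms
    budget allows at most `line_budget` lines regardless of which rows
    they come from.
    """
    return min(1.0, line_budget / rows_covered)


current_ratio = subsample_ratio(LINES_READ_NOW, CROP_HEIGHT)
full_height_ratio = max_ratio_for_height(FULL_HEIGHT)
ms_per_line = FRAME_BUDGET_MS / LINES_READ_NOW

print(f"current ratio:     {current_ratio:.3f}")
print(f"full-height ratio: {full_height_ratio:.3f}")
print(f"time per line:     {ms_per_line * 1000:.2f} microseconds")
```

Under that assumption, covering the full height would mean reading roughly every second line instead of two out of every three.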

-

1 hour ago, thebrothersthre3 said:

Don't dynamic range and latitude directly correlate to each other tho? A sensor with more dynamic range will have more latitude and vice versa?

Related, but not really directly correlated.

Dynamic range is a measure of a camera system - how far it can see into the shadows and how far it can see into the highlights. Dynamic range can be measured objectively, but even then there's a subjective component as each and every viewer will have their own noise tolerance threshold. This governs how much of the shadow part of the dynamic range they find actually usable.

Latitude is related to dynamic range, but it is also scene dependent. Latitude is the degree to which you can over or under expose a scene and be able to bring it back to a usable exposure value after recording. It is dependent upon dynamic range, which is going to set the overall boundary of by how much you can over and under expose, but it's limited by the scene too, and how bright and dark the scene itself goes.

Say a scene has a range of brightness of 5 stops (a typical Macbeth chart for instance), and let's use a camera that has a 12 stop dynamic range. If we place the scene in the middle of that camera's recordable range, we have 7 stops to play with, so we could over or under expose by 3.5 stops and still recover the scene.

But if the scene was a real world scene of an actor against a sunlit window, with a range of brightness of 15 stops, you don't have any latitude at all - no matter how you expose that scene you're going to lose shadow or highlight information.

So yes, latitude and dynamic range are related, but different. Latitude can't really be used to infer how much total dynamic range a camera has.
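
The two examples above can be written out as a tiny Python sketch. It treats latitude as simply the camera's dynamic range minus the scene's brightness range, which is the simplified model used in this post and not a formal measurement method; the function names are illustrative.

```python
def latitude_stops(camera_dr, scene_range):
    """Total exposure latitude in stops under the simple model above.

    Latitude is whatever dynamic range is left over once the scene has
    been fitted inside it; it cannot go below zero.
    """
    return max(camera_dr - scene_range, 0.0)


def latitude_per_side(camera_dr, scene_range):
    """Over/under exposure margin when the scene sits mid-range."""
    return latitude_stops(camera_dr, scene_range) / 2


# 5-stop chart on a 12-stop camera.
print(latitude_stops(12, 5), latitude_per_side(12, 5))

# 15-stop window scene on the same camera: no latitude left.
print(latitude_stops(12, 15), latitude_per_side(12, 15))
```

The same 12-stop camera gives very different latitude figures depending on the scene, which is why a latitude test alone doesn't reveal the total dynamic range.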

-

Great, this aligns nicely with what C5D did with their over/under tests.

But, this is testing the latitude, not dynamic range.

-

1 hour ago, Andrew Reid said:

I'd have nothing against it having an OLPF but...

6K is low pixel density?

No OLPF is the modern standard on almost all cameras in 2020.

Moire and aliasing are trumped up today by chart tests.

The real world situation is what matters more.

The 5D Mark II was an example of where moire issue impacted real world shooting regularly. Even the surface of rivers and water had moire.

Compare the Fp to that and you'll see the moire issues are virtually non-existent outside of pixel peeping.

The AF is better on the Fp than the Blackmagic Pocket 4K & 6K but you don't see Pocket users complaining about AF! Why is this?

The Sigma FP and the Panasonic S1, neither of which has an OLPF, are indeed very prone to moire in real world shooting scenarios:

This is exactly the reason why S1H has OLPF.

-

It's a shame the FP doesn't have an OLPF, with such a low pixel density it's very prone to moire/aliasing.

-

ProRes HQ cannot compare to ProRes RAW for adjusting white balance or ISO. Because RAW is linear and scene-referred, the results are much better than with gamma-encoded color spaces. You can linearise, but it adds quite a few additional steps.

-

"Supports HDR in movie shooting"

Anyone tested this? I wonder what it does.

-

If this were true, there would be accompanying empirical evidence to support it.

For now, it's only your subjective opinion.

Also in BRAW, 6K scored 11.8 stops and URSA Mini G2 scored 12.1.

-

Is it difficult to make a 0.65x speedbooster? That way full frame lenses would have a true and precise full frame equivalent field of view on APS-C cameras.

With the current 0.71x speedbooster there's still a crop factor of 1.09x, so a 24mm lens would have the field of view of a 26mm lens.
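
A quick Python sketch of that arithmetic. The sensor crop factor of 1.54 is my assumption (it is the value that reproduces the 1.09x figure above; other APS-C sensors differ slightly), and the function names are just for illustration.

```python
SENSOR_CROP = 1.54  # assumed APS-C / Super 35 crop factor, not from a spec sheet


def effective_crop(reducer, sensor_crop=SENSOR_CROP):
    """Net crop factor relative to full frame with a focal reducer fitted."""
    return sensor_crop * reducer


def equivalent_focal_length(focal_mm, reducer, sensor_crop=SENSOR_CROP):
    """Full frame equivalent focal length for a lens used with the reducer."""
    return focal_mm * effective_crop(reducer, sensor_crop)


for reducer in (0.71, 0.65):
    crop = effective_crop(reducer)
    eq = equivalent_focal_length(24, reducer)
    print(f"{reducer}x reducer: crop {crop:.2f}x, 24mm behaves like {eq:.1f}mm")
```

With the assumed 1.54 sensor crop, a 0.65x reducer lands almost exactly on 1.00x, while 0.71x leaves about 1.09x.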

-

16 minutes ago, TheBoogieKnight said:

Hmm maybe I'm confused. As far as I saw it, if it's 2X skipped and binned, 1/4 of the light is reaching the same sensor area (fewer photo sites are used) compared to oversampling from the full sensor. I realise that each individual pixel is getting the same amount of light with skipping/binning, but you're losing the oversampling which would be taking 4 pixels and combining them into one, effectively giving two stops lower noise.

I can get that exact same lower noise benefit by shooting with a 2 stop wider aperture. If I do this with skipping/binning, I'd reduce my DOF 2 stops. If I do this with a crop, I have to step back (or use a wider focal length) which means I get that DOF back to where it was.

Of course oversampling has other advantages, but you're going to lose them with both binning/skipping and a crop.

I know what you meant, but it's worded incorrectly. What you wanted to say is that it would lose SNR.

Note that pure pixel-binning actually increases SNR (2x2, 3x3 etc. you see on smartphone sensors).
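
For anyone who wants to see the binning effect in numbers, here is a small Python simulation. It is only a sketch under simplifying assumptions: noise is Gaussian and uncorrelated between pixels, binning is modelled as a plain average, and read noise behaviour of real sensors is ignored. The signal and noise values are arbitrary.

```python
import random
import statistics


def snr(samples):
    """Signal-to-noise ratio estimated as mean over standard deviation."""
    return statistics.mean(samples) / statistics.pstdev(samples)


def simulate(signal=100.0, sigma=10.0, n_outputs=20000, bin_size=4, seed=0):
    """Compare SNR of single pixels against bins of `bin_size` pixels.

    bin_size=4 corresponds to 2x2 binning, bin_size=9 to 3x3.
    """
    rng = random.Random(seed)
    single = [rng.gauss(signal, sigma) for _ in range(n_outputs)]
    binned = [
        sum(rng.gauss(signal, sigma) for _ in range(bin_size)) / bin_size
        for _ in range(n_outputs)
    ]
    return snr(single), snr(binned)


single_snr, binned_snr = simulate()
gain = binned_snr / single_snr
print(f"single pixel SNR: {single_snr:.1f}")
print(f"2x2 binned SNR:   {binned_snr:.1f}")
print(f"gain:             {gain:.2f}x")
```

Under these assumptions the gain comes out close to the square root of the number of pixels combined, so roughly 2x for 2x2 binning.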

-

59 minutes ago, TheBoogieKnight said:

I don't think anyone has even touched one yet. Well maybe the peeps at Canon....

Oh yeah it's already in the hands of many influencers/industry pros, who are anxiously awaiting the NDA lift.

-

48 minutes ago, Anaconda_ said:

But then why can't you take a camera that could record external ProRes raw, plug it into the VA and record Braw? If you can, then why is their EVA1 support even a thing? If that's correct, by default BMD also support any other camera that can output raw over HDMI.

I'm under the impression that the VA can't wrap any raw signal into Braw. BMD need to know the sensor data for their partial de-mosaic stuff. With that said, I still feel that Braw is sensor specific.

Of course, please correct anything that's wrong here. Would love to understand it better.

Most cameras that output ProRes RAW at the moment are mirrorless cameras with HDMI output. Atomos developed the RAW over HDMI protocol, which they license to camera manufacturers for free.

For those that output RAW over SDI, BMD need to develop support for their RAW spec (EVA1 outputs 10bit Log-encoded RAW, Sony CineAlta outputs 16bit linear RAW). The same applies to Atomos, but Atomos has its RAW over HDMI protocol and it's being widely adopted, so they pretty much have full control over the RAW spec.

So instead of saying BRAW is sensor specific, you can say it's brand specific.

-

12 hours ago, Anaconda_ said:

The codec is built individually for each sensor, so Braw for the Pocket 4k will be different to Braw for the Pocket 6k and every other camera that currently records it.

Nope.

BRAW is just a codec, it has nothing to do with sensors or camera models. It requires BMD's FPGA for the encoding.

Same for ProRes RAW, Apple has licensed the encoder to Atomos and DJI, it can encode any incoming RAW signal.

-

A9/A9 II uses 12-parallel ADC and DRAM to achieve 162fps full sensor readout at 14bit (internal speed, subject to I/O limitation).

Obviously due to power and thermal requirements, DRAM is disabled and only 2-parallel ADC are used in video mode.

-

RED Komodo

In: Cameras

30 minutes ago, Alt Shoo said:

They addressed the issue with the lower DR and global shutter.

They did their best to optimise the DR. For a charge domain based global shutter, it's doing ok, but it's poor compared to conventional rolling shutter sensors.

It's positioned primarily as a high-end crash cam, only global shutter can guarantee zero skew and zero flash banding.

-

According to a source, X-H2 is likely to have a conventional Bayer CFA.

-

1 hour ago, Anaconda_ said:

It could be that the shots with these custom lenses didn’t make it to the trailer.

She speaks about wanting Mulan to have a magical quality, but the trailer is action packed, so I think the more ethereal style scenes haven’t been shown yet.

34 minutes ago, KnightsFan said:

There's definitely some hefty CA in the trailer I just watched, example:

Speaking of which, that's a pretty awesome shot. I haven't paid any attention to Mulan based on the quality of the other live action remakes, but that is a great shot, both for content and the optical quality. It reminds me of showdown with the Wild Bunch at the end of My Name is Nobody. It also shows the impact that vintage styling can make.

It was a custom made Petzval 85mm lens, and used sparingly in the film only for some portrait shots of Mulan. For all other shots, they were Panavision Sphero 65 lenses, very vintage but with some modern touches, CA is noticeable.

Mandy Walker simplified it a bit too much in the interview. It's not really about the CA, it's the distinctive field curvature and sharpness roll-off from centre to edge. It's another way of isolating the subject instead of just using very shallow depth of field.

This is one of the shots with the Petzval 85mm lens:

-

Blackmagic casually announces 12K URSA Mini Pro Camera

In: Cameras


This is the CFA pattern of the URSA 12K sensor:

6x6 block instead of traditional 2x2, 18 RGB pixels and 18 white/transparent pixels, which improves SNR a bit but reduces resolution.

So the optimal shooting mode will be 4K (full RGB info without interpolation from 3x3 pixel-binning), 8K will be softer, 12K 1:1 will be very very soft.
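
The mode arithmetic can be sketched in Python. The 12288 x 6480 full resolution and the 8192 and 4096 mode widths are my assumptions about the published figures, not something stated in this post, and the function names are illustrative.

```python
FULL_RES = (12288, 6480)  # assumed full sensor resolution of the 12K mode


def binned_resolution(full_res, factor):
    """Output resolution after combining factor x factor pixel blocks."""
    width, height = full_res
    return (width // factor, height // factor)


def scale_factor(output_width, full_width=FULL_RES[0]):
    """Linear ratio of sensor pixels to output pixels for a given mode."""
    return full_width / output_width


print(binned_resolution(FULL_RES, 3))   # 3x3 binning, the 4K mode
print(scale_factor(4096))               # sensor pixels per output pixel, 4K
print(scale_factor(8192))               # same ratio for the 8K mode
```

With these assumed figures, the 4K mode maps a whole 3x3 group of sensor pixels onto each output pixel, while the 8K mode works out to a non-integer 1.5x ratio.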