Everything posted by maxotics

-

Everything you say is right. It's important information for a filmmaker to know. However, when you post such things it suggests to some people that the issue I'm raising is not valid. So why get off-topic like this? How many times do I have to ask the question? In a scene whose DR is NOT OUTSIDE THE CAMERA'S default settings, what is the DIFFERENCE in quality between Rec709, say, and a LOG capture? Have I ever once, in this post, talked about how to use LOG in physical scenes of extended dynamic range? That's a subject covered in excruciating detail all over the internet.

-

Couldn't agree more. I just wish the company didn't have its head so far up its a___ marketing wise. They believe "normal" people are going to buy these cameras. They believe us camera geeks just don't get it. That's my impression. For example, on the site they boast "over 5x optical zoom". Really? OPTICAL ZOOM? I thought it couldn't be done. They say "Massive 52 MP resolution". Pardon me while I sound sexist but most women would read that and say, "um, maybe not". Glad to see they're not fazed by all those Canon 5DR owners dumping their cameras on CL. They should be selling to people like you and me. If they're not on Andrew's radar then that says it all about their communication skills. Anyway, if they can do the computations at 24 fps, or even 15 fps, it will be one seriously cool camera that can get looks currently impossible. HDR video with deep focus would rip through the product photography domain. My worry is that, like Lytro, they over-promised to their investors and will die before the technology takes root. We'll have to see. I certainly want one!

-

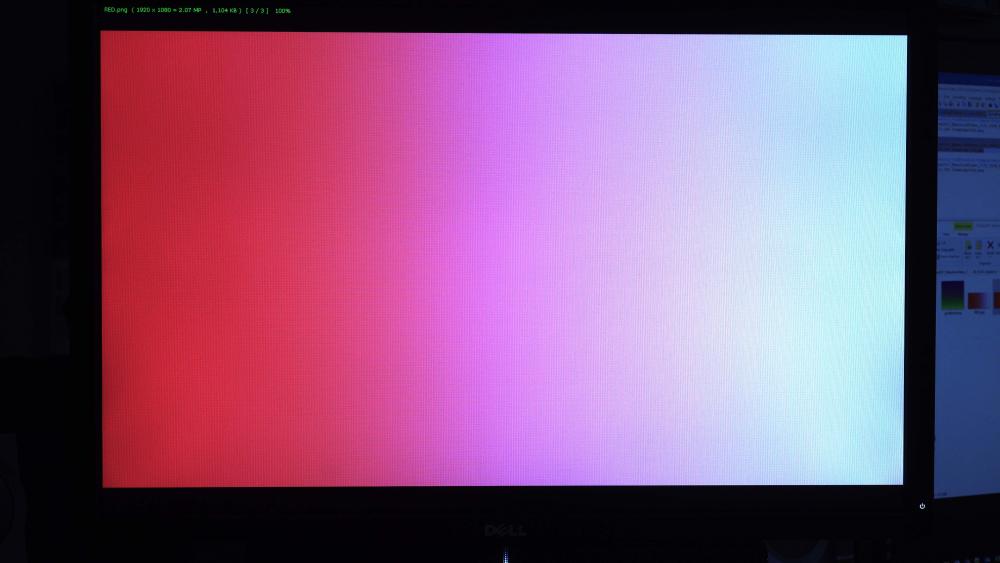

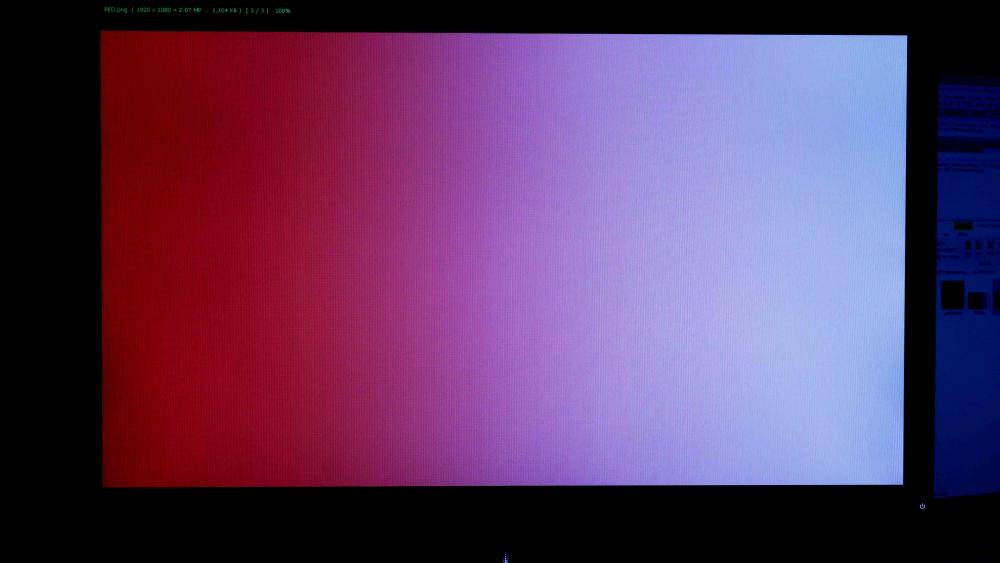

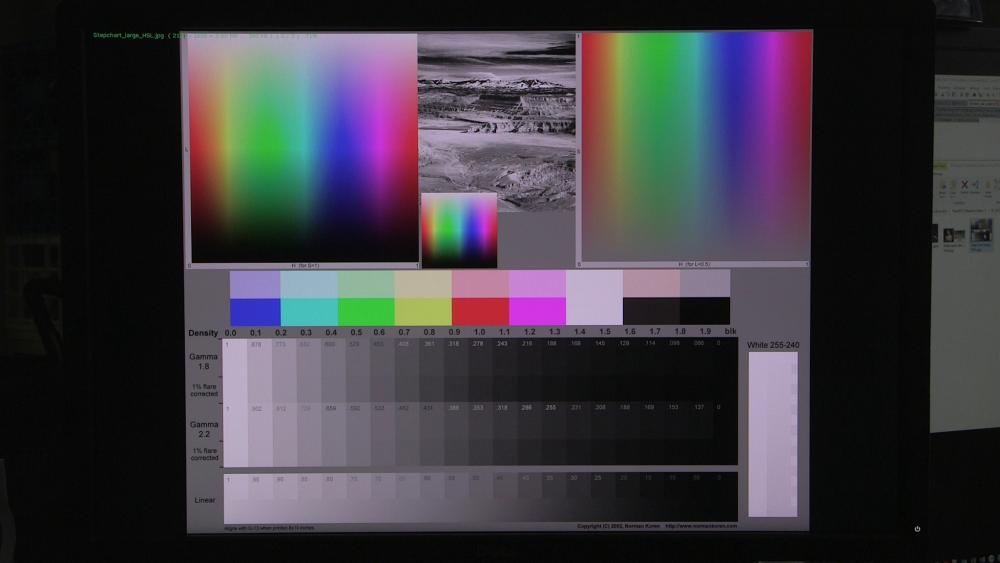

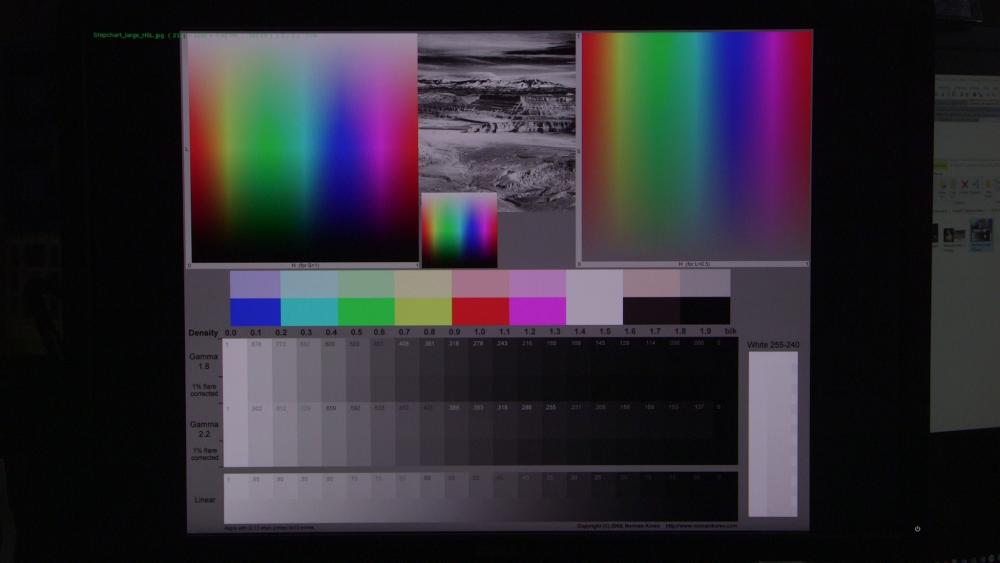

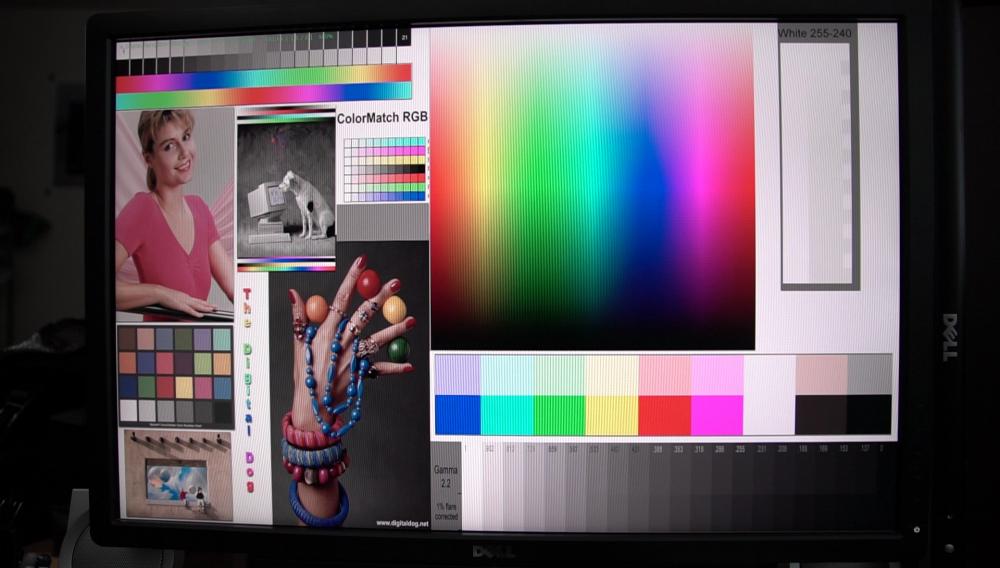

Here are two images shot with an a6300 in 4K at a red gradient on my monitor. One is PP1, the other PP7. This is a quick and dirty test where I shot the screen, then curved/exposed in PS to match as best I could, red to white. I then saved as JPG at quality 1 (lowest) to bring out the banding issues. In my opinion, it would be impossible to shoot LOG with the a6300/a6500 without getting banding issues if the scene has a subject where it would be noticeable. My current guess is that PP1 in the a6300 has 500% more color than PP7. I'll know more later. Anyway, I don't see how monochrome would have any improvements if the camera can't get a fully saturated "red" patch together.
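One rough way to put a number on banding in a gradient like this is to take a single row of the red channel and count how many distinct levels survive and how wide the widest flat band is. The sketch below is only an illustration: `band_stats` is a made-up helper name, and the two rows are synthetic stand-ins, not data from the a6300 captures.

```python
def band_stats(row):
    """Return (distinct_levels, widest_band_in_pixels) for a row of 8-bit values."""
    distinct = len(set(row))
    widest = run = 1
    for prev, cur in zip(row, row[1:]):
        # Extend the current flat run, or start a new one when the value changes.
        run = run + 1 if cur == prev else 1
        widest = max(widest, run)
    return distinct, widest

# Synthetic rows (assumptions, not measurements): a 1024-pixel-wide ramp.
width = 1024
smooth = [x * 256 // width for x in range(width)]  # all 256 levels, 4 px each
coarse = [(v // 8) * 8 for v in smooth]            # same ramp collapsed to 32 levels

print("smooth:", band_stats(smooth))
print("coarse:", band_stats(coarse))
```

Fewer distinct levels and wider flat bands mean more visible banding. A real comparison would feed in rows taken from the PP1 and PP7 frames.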

-

That's EXACTLY the confusion I'm trying to remove. What is the camera's best shot at a perfect image in DR that does not make our pupils dilate? Wouldn't have found it without you! Thanks!

-

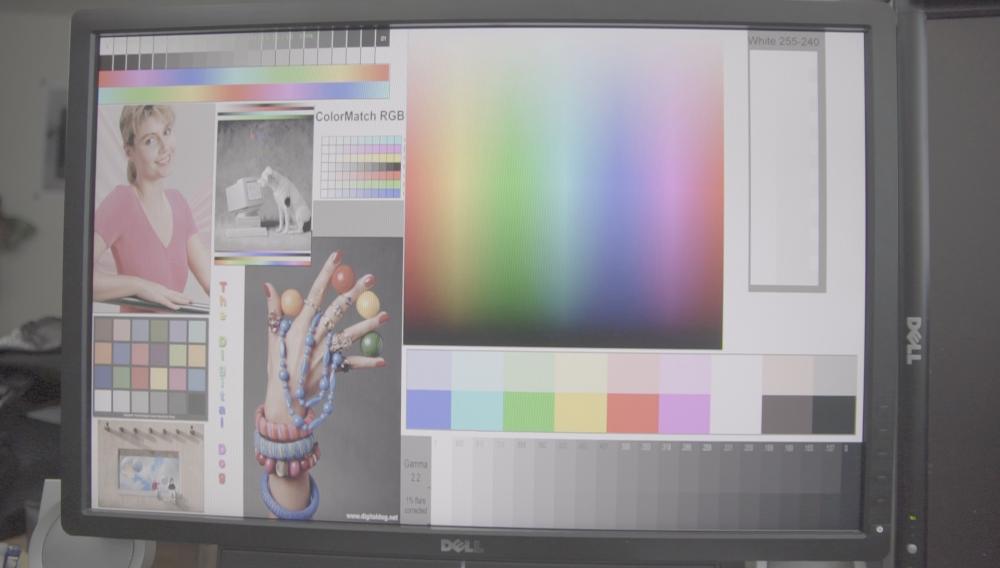

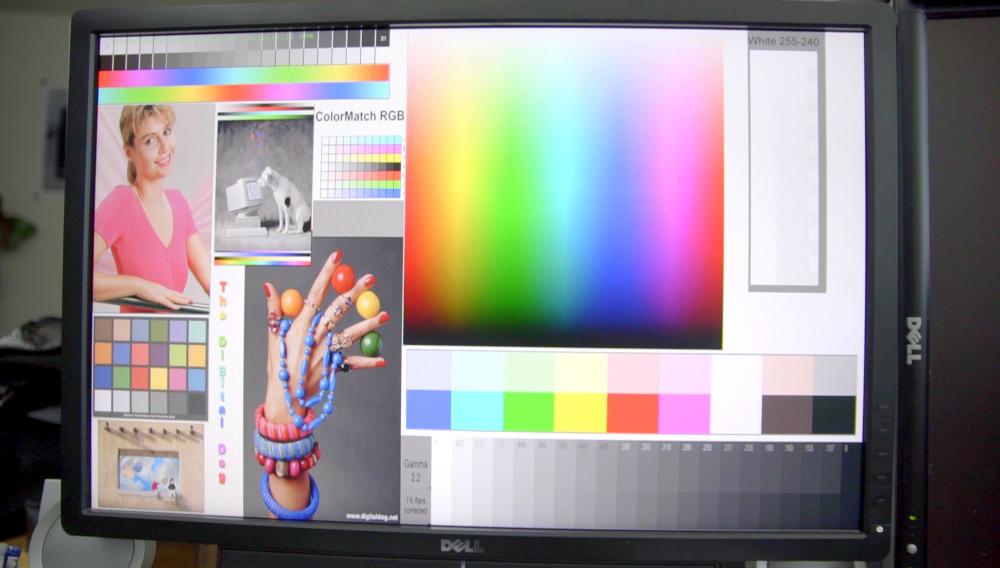

Thanks for the question. Yes, it is biased towards the display's DR. My reasoning is that the viewer only experiences the world through a display (which is probably of less quality than mine). Therefore, we should expect the camera to be able to record whatever is displayed on the monitor. My test won't be to see how much of the monitor's limited dynamic range the camera can record (it will be less for a variety of reasons), but what the difference is in fidelity between the camera's default settings and a LOG profile. If that simple initial test holds true, if I shot both standard profile and Cinema in my office I should expect a 35% color loss from the Cinema profile. Again, I understand what many have pointed out, that most people would NOT shoot LOG in my office. One of my goals is to quantify the quality loss of LOG profiles in standard DR situations. In order to do that, I need to capture the whole 24-bit color space, which so far, is going to be 32 screens of 1920x1080 colors, where each color will get 4 pixels. Make sense?
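A quick check of the screen count, assuming plain 8-bit RGB (256 levels per channel) and exactly 4 pixels per color on 1920x1080 frames. The variable names are only for illustration.

```python
import math

COLORS = 256 ** 3              # 16,777,216 values in a 24-bit RGB space
PIXELS_PER_COLOR = 4           # from the post
FRAME_W, FRAME_H = 1920, 1080  # from the post

pixels_needed = COLORS * PIXELS_PER_COLOR
frame_pixels = FRAME_W * FRAME_H
frames_exact = pixels_needed / frame_pixels
frames_needed = math.ceil(frames_exact)

print(f"{pixels_needed:,} pixels / {frame_pixels:,} per frame = {frames_exact:.2f} frames")
print("whole frames needed:", frames_needed)
```

Under those assumptions the division comes out a little over 32, so the last few hundred thousand colors would spill onto a partly filled 33rd screen.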

-

I'm sorry too. I'm learning a lot from you. THANKS! It just tweaks me when people (not you) explain the difference between different sandboxes, buckets, whatever, as if improvements can't be made in deciding whether or not to choose a LOG profile. I want to find metrics that are more actionable. As I say in the beginning, my feeling is there is too much hype and misunderstanding surrounding these LOG profiles, and it is hurting filmmakers. I'm trying to figure out scientific tests to explore that further.

-

Hi @cantsin, don't get mad at me, but you're doing exactly what drives me crazy. The difference in LOG, or any other measure of IQ, between an F5 with 9 Megapixels (made only to shoot video) and a Sony A6300 with 24 Megapixels (made to take photographs) has more to do with the physics of the sensors than with optimization and CODECs. To say that LOG is "implemented in the consumer cameras only as a compatibility function" implies that they COULD have matched the F5 if they wanted to, when I'd call it impossible due to the different sensor types. Yes, no? I don't know why you're bringing F5s into this question. Sorry!

Here are four screen captures from a Canon C100. These are the color counts for each:

- 71,685 cinema.png
- 227,536 eos_std.png
- 162,560 no prfile.png
- 148,903 wide_dr.png

So if you shoot "wide dr", you're only getting 65% of the tonality you'd get from the standard profile. I understand that in the real world, grading can make a pleasing image out of all these profiles. One question everyone seems to aggressively ignore is HOW MUCH would a wide dr profile hurt a scene shot within 5 stops of dynamic range?
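The 65% figure is just the wide_dr count divided by the eos_std count. A small sketch that works out the same ratio for all four captures, using the counts listed above and treating eos_std.png as the baseline (that choice of baseline follows the post; the variable names are only for illustration):

```python
# Unique-color counts from the four C100 screen captures in the post.
counts = {
    "cinema.png": 71_685,
    "eos_std.png": 227_536,
    "no prfile.png": 162_560,
    "wide_dr.png": 148_903,
}

baseline = counts["eos_std.png"]  # standard profile used as the reference
retained = {name: n / baseline for name, n in counts.items()}

for name, frac in retained.items():
    print(f"{name:15s} {counts[name]:>8,}  {frac:6.1%} of eos_std")
```

This only compares counts of distinct colors. It says nothing about where in the tonal range the missing values fall.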

-

Okay, so on the A6300 or C100 I should see a huge difference in color capture between internal 8bit 4:2:0 video and HDMI to ProRes HQ? What do you predict it will be? That is, the percentage difference in color details. So if the internal captures 50 colors and the HDMI 100 colors, then a 100% difference. BTW, I understand this is the point Eddie is making. My question has always been a simple one between LOG and standard profile in any camera, so not looking at the theoretical difference IF the camera had 4:4:4 compression, say. However, happy to explore that!
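To pin down what "percentage difference" means here, this sketch measures the gain relative to the internal recording. `percent_difference` is a hypothetical helper name, and the 50 and 100 are the made-up numbers from the post.

```python
def percent_difference(internal, external):
    """Percent by which the external count exceeds the internal count."""
    return (external - internal) / internal * 100

print(percent_difference(50, 100))  # the post's example: 50 vs 100 colors
```

Measured the other way round (relative to the HDMI capture), the same pair would read as a 50% loss, so it helps to say which recording is the baseline.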

-

One of the posts recommended in that article really nails a lot of issues (and I learned something too!). https://prolost.com/blog/rawvslog That guy knows his stuff. However, as I understand it, there is a big difference between the kind of LOG you get on a consumer camera and the kind of LOG he's talking about, which is how LOG can reduce RAW data through a type of perceptual compression. In Magic Lantern RAW, for example, you get each pixel's value in a 14-bit single channel space. What he's saying is that the numerical distance between those values does not match the distance we perceive. Therefore, you can re-map the values onto a LOG curve and retain the same amount of information. I don't see a similarity between consumer LOG and the RAW LOG they're talking about in those articles. Maybe I'm missing something.

Are we "all in option 3"? I don't believe we are. I believe many people are confused about how LOG works and believe it to be a superior profile for shooting everything. I believe this because people who should know better do tests on YouTube where they're shooting LOG in low DR scenes.

Your Option 1. Do you really believe I am making an argument that LOG is useless? Please, I've written so much that for you to say you don't understand my questions is a bit disheartening. My question is yours: "If the costs of log in a particular situation outweigh the benefits, then don't use it." What are the costs? Can you point me to objective guidance that doesn't resort to subjective feel?

-

Thanks @cantsin. I wasn't clear on the names, at all! @iaremrsir I have been building a test-bed for these issues this past week. I am breaking up all 16 million colors into 32 screens worth of data (4 pixels per color) and will video them and compare each profile's color-count and histogram against what the camera should be able to duplicate from a monitor. I will shoot both in-camera AVCHD and ProRes on a NinjaBlade with a C100, and will shoot the profiles on the A6300. Hopefully I can determine the percentage of color lost in the 5 stops surrounding an exposure when shooting LOG, and the effects of compression. I'm needing to do a fair amount of ffmpeg, imagemagick and Python/PIL stuff to get things set up. That suggests to me that few have really studied this issue outside the manufacturers' facilities.

The GoPro articles are very interesting, and I'll have to read deeper later, but the author's goal is how to maximize image fidelity in their CODEC, the real world of RAW to compressed video. I have no idea what compression is applied, or where, in the data stream for Sony, Canon or Panasonic. (I'm only looking into the internal-compression vs HDMI feed because you said it is important.)

My question is a practical one, believe it or not! If you're shooting a person under an umbrella on a beach, how many shades/color values do you lose when you shoot a LOG curve? I want some real numbers. Without real numbers many people assume LOG is okay because there's so much written about how to shoot it and grade it. It's in no one's financial interest to make LOG look bad. And I'm not trying to make it look bad either. I just want to put it in its place.
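A minimal sketch of how the colors could be laid out across screens. It assumes each color fills a 2x2 block (one way to get "4 pixels per color") and that colors are numbered 0 to 16,777,215 with red in the high byte. Both the layout and the helper names `index_to_rgb` and `block_location` are assumptions for illustration, not the actual test-bed.

```python
FRAME_W, FRAME_H = 1920, 1080
BLOCK = 2                                   # assumed 2x2 block = 4 pixels per color
COLS, ROWS = FRAME_W // BLOCK, FRAME_H // BLOCK
PER_FRAME = COLS * ROWS                     # colors that fit on one screen

def index_to_rgb(i):
    """Split a 24-bit color index into (r, g, b), red in the high byte."""
    return (i >> 16) & 0xFF, (i >> 8) & 0xFF, i & 0xFF

def block_location(i):
    """Return (frame, x, y) of the top-left pixel of color i's 2x2 block."""
    frame, slot = divmod(i, PER_FRAME)
    row, col = divmod(slot, COLS)
    return frame, col * BLOCK, row * BLOCK

last = 256 ** 3 - 1
print("colors per frame:", PER_FRAME)
print("first color:", index_to_rgb(0), "at", block_location(0))
print("last color: ", index_to_rgb(last), "at", block_location(last))
```

With this layout the last color lands on frame index 32 (counting from 0), so the set runs slightly past 32 full screens. The same mapping can be run in reverse when analyzing the recorded video to find which block of a captured frame corresponds to which source color.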

-

Yes, and it is significant. The A7S has 12 million pixels over a 24x36mm sensor; the A6300 has 24 million pixels over a 16x24mm (smaller) sensor. You want the best 1920 x 1080 pixels in your video frame, so you'd want the largest sensor pixels filling that frame, because the larger they are, the more sensitive they are to light. The A6300 will need something like 2 pixels to capture the light one pixel can in the A7S. Because there is space between pixels, you lose sensitivity there, plus there's all the electronic work needed to combine those values. In really good light, the A6300 will probably match the A7S. HOWEVER, as soon as you start moving away from 400 ISO exposure the A7S will begin to crush the A6300. As others have pointed out, if one was going to only use the camera for video (or 12 megapixel photographs) there really is no competition. I have an A6300 and A6000. If it wasn't for the external mic and USB powering of the A6300 I'm not sure I'd keep it. Yes, the 4K is really nice in the right situation, but my wife wouldn't notice the difference between the A6000 and A6300 4K downscaled video. For YouTube stuff, the A6000 is still a monster (especially when the new firmware gives you XAVC-S at 50 Mbps). If I could trade the A6300 for the A7S 1 I would in a heartbeat.
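A back-of-the-envelope estimate of pixel size, assuming nominal sensor dimensions (about 36x24mm for full frame, about 23.5x15.6mm for APS-C) and the 12 and 24 megapixel counts from the post. It divides sensor area evenly among pixels and ignores gaps, microlenses and readout design, so treat it as a rough sketch only. `pixel_area_um2` is a made-up helper name.

```python
def pixel_area_um2(width_mm, height_mm, megapixels):
    """Average sensor area per pixel in square micrometres (gaps ignored)."""
    area_um2 = (width_mm * 1000) * (height_mm * 1000)
    return area_um2 / (megapixels * 1_000_000)

# Nominal dimensions are assumptions; pixel counts are from the post.
a7s = pixel_area_um2(36.0, 24.0, 12)
a6300 = pixel_area_um2(23.5, 15.6, 24)

print(f"A7S:   {a7s:.1f} um^2 per pixel")
print(f"A6300: {a6300:.1f} um^2 per pixel")
print(f"area ratio: {a7s / a6300:.1f}x")
```

On these nominal figures the per-pixel area gap comes out larger than 2 to 1, which only strengthens the point about low-light performance.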

-

I agree with all your posts. Many people don't want to think about the science part of photography. They don't understand why many photographers lust after a D810 just to get a base ISO of 64! Most people only think about high ISO performance. Many people who shoot video have never shot photography in a real way, where they can see how cameras behave under different circumstances from working with their RAW files. It's only by working with RAW files that one can understand just how much degradation the video chain does to the image. Few work hard to separate the effects of what the sensor/camera is doing from what the video compression system is doing. They confuse which is the cart and which the horse. As you know, a sensor is just the start of image acquisition. The mathematics, and secret-sauce matrix transformations, to make the data usable are beyond most people, especially me. I wish I understood more about how Nikon gets such great images. @jcs might have speculated a long time ago that Canon gets its warm effect by using redder Bayer filters over their sensors. That makes sense to me. Of course, if you're a photo purist, that's not cricket, which is why so many end up with Nikon, which has the most neutral image (to me at least). To expect Samsung to deliver the same still quality as Nikon, which lives, breathes, and eats pure photography every day--nope, not going to happen. Like you say, spend enough time with a Nikon camera and you'll appreciate those subtleties. All that said, I now shoot Sony.

-

You can't sell papers if you don't annoy people--Andrew certainly has that down. But his main point is valid: much of the technology development that Panasonic, Sony and Samsung have done is still years ahead of Nikon, with Canon struggling to keep up. He's been saying that for years about video. Yes, anyone shooting photography stills would always consider the Nikon D810 or D5 first--for good reason--but mirrorless technology is so compelling in other areas, like video, legacy glass, size and sound, that it becomes more and more difficult to just use a Nikon. Want a cool looking, old school camera? Why get a super expensive Nikon DF when Fuji has so many affordable options? Want to shoot old lenses? Sony allows you to in full-frame or crop. Want crystal clear video that runs all day? Panasonic has a camera for you (which can also work as a 6K 30 fps still shooter). Then you have Fuji working to make medium format affordable. Nikon full-frame or Fuji Medium format? Scary times for Nikon? I love Nikon products. But Andrew has a point. They still haven't caught up to Samsung in many regards. If the economy for digital cameras hadn't fallen, and Samsung was still innovating, where would Nikon be?

-

Hi Ethan, couldn't agree with you more! As soon as I get a break from my "real" work I'm going to fire up the C100, A6300 and a Ninja Blade so I can analyze un-compressed HDMI data, as Eddie suggests. We all want the same thing. We all agree high dynamic range shooting in 8 bit has issues. How much? That's the question. Again, for all I knew when I started this post, I'm completely off. But I think the issue remains so I will continue work on it with everyone's help here.

-

I think we all want to be agreed with. That said, why is it I who is not listening? What am I wrong about? He has said himself he doesn't dispute much of what I've said. He says that compression has more to do with noise than shooting S-LOG does, fine, but that's his opinion! He hasn't shown me any of his tests. Why should I take his word for it over my experience? Anyway, I've had to challenge him (us) to get that articulated. We still have a ways to go because so far there are no facts about that which we can both agree on. We're getting there. A little piss never hurt nobody. If I have to play the bad guy in this thread, so be it. I'm sure he's happy to continue playing the good guy!

-

Hi Eddie, a simple question: when an EOSHD reader shoots an aggressive S-LOG on their A6300/A6500, say, are they getting more noise and less color than what they would get shooting a standard profile? You keep bringing up situations that are not consumer S-LOG, like uncompressed HDMI or RAW. You keep looking for holes in my arguments instead of asking if they are correct in real-world shooting for consumer S-LOG. You say you have designed log profiles for the Bolex. COOL!!!! What was the difference in noise when you grade with LOG in 10 stop dynamic range vs 6 stop dynamic range? Putting aside the fact that a Bolex is hardly consumer. You wrote "You're speaking as if the main reason detail is lost in 8-bit is that it's the log curve, when in reality, the main loss of detail has been heavy compression." Fine. How much? I calculated 30% based on the number of unique colors I could count in each. YES, they're H.264 compressed, but since they're BOTH compressed shouldn't the effect of compression be equal for each? What are your statistics of noise for your LOG and linear profiles? Nice job on the image, yes, better than I could get!
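For anyone who wants to repeat the unique-color comparison, here is a minimal sketch of the idea in plain Python. It assumes the frames have already been decoded into sequences of (r, g, b) tuples (with an image library such as Pillow, for instance). `count_unique_colors` and `percent_fewer` are hypothetical helper names, and the pixel lists are synthetic placeholders, not camera data.

```python
def count_unique_colors(pixels):
    """Number of distinct (r, g, b) triples in an iterable of pixels."""
    return len(set(pixels))

def percent_fewer(standard_pixels, log_pixels):
    """How many percent fewer unique colors the LOG frame has than the standard one."""
    std = count_unique_colors(standard_pixels)
    log = count_unique_colors(log_pixels)
    return (std - log) / std * 100

# Synthetic placeholder frames (assumptions): 100 vs 70 distinct reds.
standard_frame = [(r, 0, 0) for r in range(100)] * 3
log_frame = [(r, 0, 0) for r in range(70)] * 3

print(count_unique_colors(standard_frame), count_unique_colors(log_frame))
print(f"{percent_fewer(standard_frame, log_frame):.0f}% fewer unique colors")
```

A unique-color count is sensitive to noise as well as to tonal resolution, since noise can create extra distinct values, so it is best read alongside a histogram rather than on its own.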

-

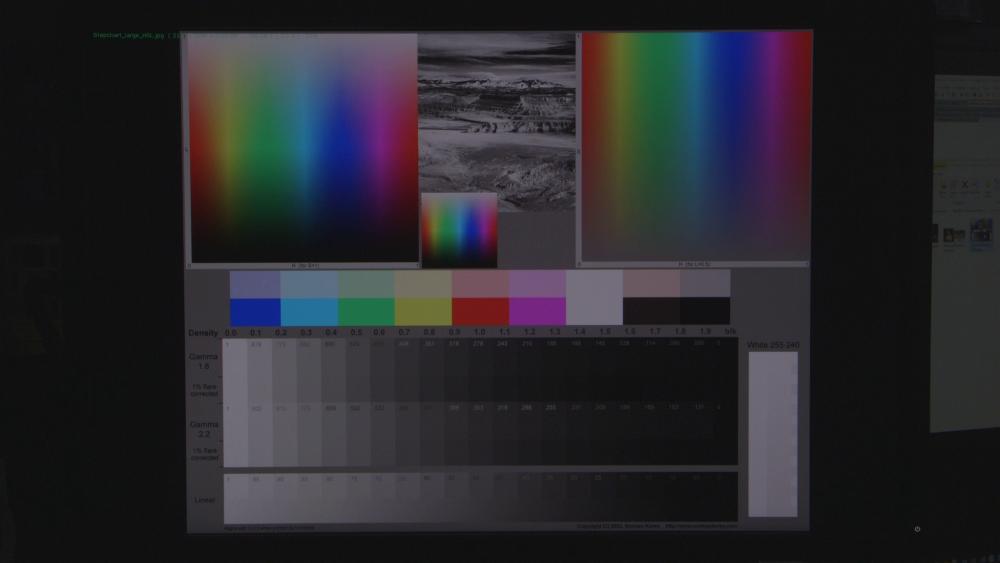

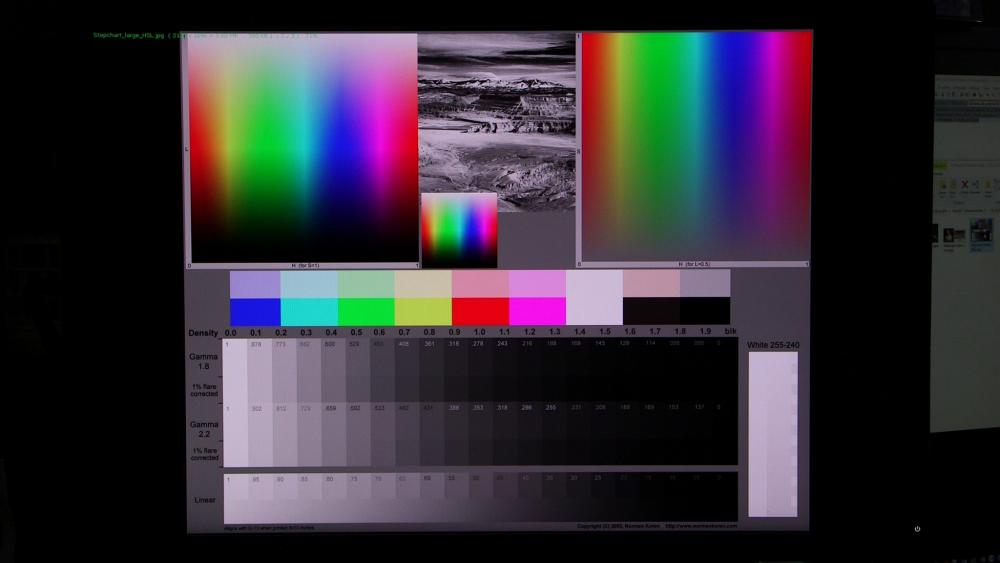

Hi @cantsin, I still don't see what I don't get. I understand that compression has many different meanings (like in audio and anamorphics), but isn't the core issue HERE how the S-LOG setting in a camera affects image quality? I'll focus on "dynamic range compression through log profiles". That way of phrasing it misleads many people. Most people have the idea that the right compression can improve video because, well, in general, it can! So they read "A LOG profile allows you to get more dynamic range through your camera than you could using the camera's default settings (standard profile)." Many people believe S-LOG is some new technology invented by Panasonic and Sony to get more dynamic range. The fact is, it's been around professional 8-bit video for a long time. As I discuss in my video, Canon and Nikon don't include S-LOG because they still don't believe the trade-off is worth it for most people. That trade-off, again, is that you get the extra dynamic range (through compression, if you want to call it that) by substituting high noise visual data for low noise data. There is no free lunch here. If you come from a photographic background, you try to avoid that trade-off unless you absolutely can't help it. Because most video has a washed out look these days people don't notice this. But it's there. And one day, when tastes change, and people try to re-grade their S-LOG video to new, fully saturated, noise-free tastes, they'll see exactly what I'm talking about.

I can't stress this enough. The world around us is in 20 stops of dynamic range. But we watch ALL video in 6 stops of dynamic range. Even if you could capture the physical world in all its 20 stops of dynamic range, with NO noise, you still have to fit your data into 6 stops of DR. So again, if the end result is 6 stops, why wouldn't you want those 6 stops to be as fully saturated and noise-free as possible?
When one separates DR into those two worlds, physical OR perceptual (viewing), then one can understand the limitations/trade-offs of how technology translates one into the other. In these three screen captures you can see an illustration of the issues. The first is S-LOG interpreted under a linear (or standard gamut) curve, so it looks washed out. The second is that S-LOG frame "graded" so that it matches our visual expectations of full-color saturation. The last image is a standard profile screenshot where you can see the benefits of fully saturated colors, both in color depth and in less-noise sharpness (in fact, you can see the aliasing from the monitor pixels!). If you want to capture the monitor in full fidelity, why wouldn't you shoot a standard profile? Who would honestly say the graded S-LOG is superior? If you want to get my office out of the darkness (DR), see some detail there, then yes, the S-LOG does that! I'll say again, why would any DP use S-LOG to get the monitor and my office in the DR he/she wants when, if they had lighting, they could bring up my office into the DR of the monitor and get EVERYTHING noise-free and fully saturated? And, if they did want a scrubby look for my office, noise is easy to add in post.

-

@cantsin and @iaremrsir you keep trying to explain this to me as if I just stumbled upon video technology, as if I haven't written software that works with RAW data. I can't force you to think through the ramifications of S-LOG. I feel you keep bringing up issues that obfuscate the core question. What does compression have to do with the amount of noise in sensor data or 8-bit color spaces? Nothing! You wrote, "You're applying a line of thought that suggests these log specifications are applied at the analog stage before digitization" YES I AM! Though not in the way you say, because "log specifications" are not a thing you apply in one place or another. ALL DATA must be married to a curve in the end. Where you think that curve should be applied is a philosophical question. You talk about it as if it is some tool. What I AM SAYING is that S-LOG pulls noisy data from the sensor outside the bounds of what it is engineered to do best and what its physical limitations are. It has nothing to do with whether it is before or after digitization. In a sense, you keep arguing that S-LOG can fix noisy data. I say it can't. I never said Cinema/TV doesn't use S-LOG. Where did I say that? And I certainly NEVER, EVER said it has been used in RAW because that is flat-out nonsensical. I would also never say "graded in log" because that is the same as saying "graded in graded", because you cannot do much grading without changing a curve. Again, curves, LOG or linear, are all arbitrary applications of sizing on brightness values to create a subjective perception of contrast. Don't you see that when you work with curves in your NLE all day it's easy to believe it's a tool? I understand where you're coming from.
Which is why you need to work harder in thinking about how the image data gets to your NLE, and how what you tell the camera to do when capturing the data, whether what I'm calling its default "standard profile" or S-LOG, has different effects on the image than what curve you choose in post. If the camera manufacturers had called S-LOG what it really is, mixed ISO shooting, there might not be so much confusion. Again, if you get the results you want using your workflows, great! In the end, that is ALL that matters. But that doesn't mean what you do will work for the next guy. Arguing that the other guy doesn't need mid-tone values because S-LOG can do this or that is, to me, artistically arrogant and indicative of a half-baked understanding of photography. I am NOT saying you're a bad filmmaker because you use LOG, far from it! But I will call anyone, even Oliver, on an argument that one can make S-LOG do anything if they know what they're doing. If that is true, I want to know how! But you have to tell me how you get around the physics of image capturing as it exists today. I have only heard rationales for the trade-offs, not reasons why S-LOG is always preferable. The only thing I have learned so far is that S-LOG has become sort of a lowest common denominator of image quality that prioritizes capturing wide DR in the real world over the better color saturation that the camera is capable of in scenes lit to maximize the camera's potential.

-

I never said a small gamut equals more data, did I? I don't understand your analogy, though others seem to. I'll try to explain what I'm saying using your metaphors. Yes, the sandbox equals our data space; we can fit 16 million colors into our sandbox. Our gamut is essentially how far apart the colors are, from one piece of sand to another, arranged from darkest to brightest. Perceptually, the gamut MUST match the sandbox's distribution or it will look wrong to us. I want to point out something I haven't made clear in other posts: there are no real colors in our sandbox. There are only grayscale readings (brightness) from sensor pixels with known color filters in front. The Gamut (and other matrix calculations) is what translates those readings into a brightness/contrast/saturation whatever scale that makes sense to us. Because sensors have their own oddities, and our vision also has idiosyncrasies, we don't just make a red with a value of 20 double the redness of a value of 10, or if we do, it doesn't mean that we make the value of 80 a double of 40. If you take sand from outside your sandbox then yes, you MUST change the gamut to match what we expect visually. Unfortunately, S-LOG introduces another way of thinking about gamut, not as a way of making visual sense of image data, but as a way of taking full-color data outside a 24-bit space and re-jiggering it AS IF it fit in the sandbox in the first place. THE PROBLEM is that data outside the 6 stops of expected gamut, which are put into our sandbox, are at higher noise than those from our original sandbox. Noise is what makes us get less color, which again is ONLY RELATIVE BRIGHTNESS values that the sensor picks up. Put another way, let's talk about red only. In a standard profile you're getting 256 shades of red (0 to 255). The values at the low and high end are noisier, more uncertain, than the values in the middle, say at 127, because that is the nature of all scientific sensors.
The actual red you make out of those values is up to you. You can get someone's LUT, for example, and assign them accordingly. When you shoot S-LOG you're getting sand outside your sandbox, as you say, but what you're not recognizing is that THE QUALITY OF THAT SAND is poor. Wide gamut, HDR, all these ideas will not change that very simple fact. You can test this yourself as I did (at the end of my video). The quality of your data is the number of distinct colors you get because, again, they have no real color; that is assigned afterwards based on your assumptions of how the data is distributed. You get 16 million distinct colors in a standard profile and only 10 million distinct colors in an aggressive S-LOG. You can distribute those 10 million colors to make an image that more closely matches the wide dynamic range of the original scene, in basic contrast only, BUT, and I say this again, AT THE EXPENSE of color information. You may be reading this and thinking, "well, if I follow your argument why would anyone use S-LOG?" Because in lighting they can't control, S-LOG can give them contrast they can't get in a standard profile. Again, I HAVE NOTHING against S-LOG. I'm just pointing out that a lot of people don't seem to understand WHAT THE TRADEOFFS are. So I don't understand what you're saying on the level of how you think you can extend the sandbox without real consequences, and I say that the first goal should always be to light your scenes to fit a 6-stop sandbox. When people suggest to new filmmakers that their footage looks bad because "they don't know how to use S-LOG" I disagree strongly. Even when used perfectly, S-LOG is NOT BETTER than a 6-stop scene lit to get EXACTLY the look you want in a 6 stop range, which is what EVERYTHING ENDS UP IN. Prove to me it is "literally the opposite." Wide gamut stores wider values in a physical scene but those wide values are of poor quality. Because you always end up in a 6-stop space, why not get those values in a standard gamut?
That's what you're not thinking about. CAN YOU GET any look you want IF you could control the light in every situation to 6 stops? Yes, you can, which is why big-budget film crews have huge trucks of light modification equipment. THEY KNOW a film shot in 6-stop light will look better than wide gamut in 10 stop light. S-LOG is for when you CANNOT afford to light everything perfectly. Or you're run-and-gunning and you don't have the time, etc.
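One simplified way to put numbers on the sandbox trade-off is to look at how many 8-bit code values each stop gets. The sketch below assumes an idealized pure-log encoding that spreads the captured stops evenly across all 256 levels of a channel. Real S-LOG curves and standard profiles are not shaped like this, and it models quantization only, not sensor noise, so it is an illustration rather than a measurement. `codes_per_stop` is a made-up helper name.

```python
def codes_per_stop(stops, bits=8):
    """Code values available per stop under an idealized, evenly spaced log curve."""
    return (2 ** bits) / stops

for stops in (6, 10, 14):
    print(f"{stops:2d} stops in 8 bits: {codes_per_stop(stops):5.1f} code values per stop")
```

Under that assumption, stretching the container from 6 stops to 10 or 14 leaves each stop of the scene with far fewer levels, which is the cost paid even when the scene itself only spans 6 stops.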

-

I watched the videos @jcs mentioned. Although I could hear the difference between the Zoom (digital limiter) and Sound Devices (analogue), I doubt my audience would hear it for the stuff I do. Just like imaging equipment, the physical sensor loses fidelity at the extreme ends of its dynamic range, making any sort of improvement difficult; that is, the old garbage in, garbage out. Probably just as well to spend a few hours learning post processing techniques rather than depend on the device's real-time limiter, which has no look-ahead capabilities (AFAIK). Also, for the purposes of a podcast, I'm leaning toward a USB interface where I can get 16 channels of separate audio into the computer for under $300.

-

As much as I want to divert the thread in a discussion of annoying my family with FX .... I recorded another podcast last night on the Tascam 70D. No matter how many times I told them to regulate their voices, by how they sounded in their headphones, they still often talked too loud, or too softly. A technically proficient friend of mine who was there then became annoyed with me that I didn't keep changing the levels. My response was it was hopeless and the podcast is just a family thing anyway (doubt anyone would find it very entertaining). As I mentioned above, my response to JCS's recommendation of the Sound Devices is to taser people, but that's clearly not possible. So if I was going to do a professional podcast I can't see how I wouldn't want the analogue limiters of the Sound Devices, or other professional gear. So I'd like to hear from @jcs, or others, just how valuable, or not, those analogue limiters are in their work.

-

It would be more annoying to them in real-time.

-

Again, the last thing I mean to do here is question your creative skills, which are immense. I can understand why BBC might want S-LOG if "the variants in location was staggering", but does that change the fact that IF you were shooting in all controlled 6-stop environments you wouldn't touch S-LOG with a 15 foot pole? Nor would they want you to? What you're talking about is quite fascinating to me, all the variables that go into choosing your profile. If you say the loss in color fidelity is worth the extra dynamic range, then I believe you! But how does another filmmaker on the forum, like me, put that into practical use? I don't shoot every day like you do. I can't eyeball the scene and know from hours of camera use what profile will get me the look I want. Which brings me back to my point. Professionals use S-LOG, which is very tricky, to tame high DR scenes which they CANNOT control. Why would anyone, in control, light a scene beyond 6 stops? Even if they wanted noise, they could simply underexpose! So shouldn't the first goal of anyone be to get a scene as tightly lit as possible? I understand that S-LOG is fun, and I understand why one wants it. Again, I'm not here to pick a fight; I sincerely struggle with this issue myself. I want to find a solution for me. But when I see people on YouTube who show graded S-LOG in scenes under 6 stops of light I just don't get it. It proves nothing because S-LOG isn't made for that environment--again, it's misleading! The image data from a standard profile in those scenes would have up to 30% MORE COLOR!

Let me say again how I understand things. Digital sensors have a sweet spot of light sensitivity at a certain stop, at a certain ISO. So if your first stop is 100 ISO, your second stop, in DR, is at 200 ISO, your 3rd stop at 400 ISO, 4th, 800, 5th, 1600, 6th, 3200. There IS noise in light that hits the sensor at its 6th stop, it's just not very noticeable.
If, like you mention, you have some 5K Arri coming through the window and you don't want it too overexposed, you might shoot an S-LOG that is 10 stops out. That means you're moving the lowest stop to account for the Arri, putting your top stop at 48,000 or something like that. It really doesn't matter if you're shooting 8, 10 bit H.264 or RAW, THE PHYSICAL LIGHTING drives your decision in having to use S-LOG and it means, ultimately, that you have to use noisy image data to get contrast. Many people on the Internet talk about S-LOG as if it is a fix-all for all lighting. That's what drives me bonkers. Is it? Yes, it fixes certain high DR physical scenes into a look one may want, but it would also DESTROY the quality of a scene in 6-DR lighting. To me, the FIRST GOAL is a noise-free, fully saturated image, in the DR that will eventually reach the viewer. Again, they never see anything beyond 6 DR, period. So I don't understand why you don't say, I only shoot S-LOG when I have to. Why isn't your first goal shooting a scene that optimizes the image capabilities of your camera, which, no matter how good, still struggles a little bit at 6 stops out? Just want to repeat, not questioning your skills, but what are your "first principles" as a cinematographer? I know it is to get the best look for your client, but before that, don't you want the best image? That's part of my OP, that S-LOG has made many people lazy about how they approach the photography aspect and it may hurt them in the future. For example, someone shoots S-LOG in a 6 DR scene, then on monitors in the future that are more color rich, the footage looks very washed out and noisy compared to other cinematographers who shot the profile that maximizes the camera's capabilities in a 6 DR range.
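The stop-to-ISO ladder described above is a simple doubling per stop. A short sketch, assuming a base of ISO 100 as in the post (`iso_ladder` is a hypothetical helper name):

```python
def iso_ladder(base_iso, stops):
    """ISO-equivalent value for each stop, doubling from the base."""
    return [base_iso * 2 ** n for n in range(stops)]

six = iso_ladder(100, 6)
ten = iso_ladder(100, 10)

print("6 stops: ", six)
print("10 stops:", ten)
print("top of a 10-stop ladder:", ten[-1])
```

By strict doubling the tenth step comes out at 51,200, in the same neighborhood as the "48,000 or something like that" mentioned above.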

-

If someone set up a studio and paid professionals to light it all within 6 stops (like a real TV set) and asked you to bid for the camera work and you said you shoot everything in S-LOG, what should they conclude? What if they say, "Oliver, the way I understand it, an 8-bit camera's normal gamut captures 16 million colors in 6 stops of dynamic range with the noise expected within those 6 stops. If you shoot LOG, you're bringing in values above those 6 stops, which don't actually exist on our stage, which will be noisy. Why would you trade any of those 16 million colors on our stage for colors (brightness) that are not on our stage?" You're using "science" as a reason not to really think about this stuff. Again, maybe I'm wrong, but I haven't heard any argument against what I see. Another way of looking at it is that when you say you shoot everything LOG it's like a photographer saying they shoot everything at 1600 ISO and when you challenge them on it they say they really know how to "use it and apply noise reduction expertly." Would you want photographs from such a photographer? Of course not (unless they're shooting a scene where deep shadows are more important than the general exposure). When you shoot S-LOG you are also shooting high ISO in a way. Why would you ever want to do that in a scene lit to perfectly fit the gamut of people's displays? If you want to see for yourself, light a scene within 6 stops, shoot it in both a normal profile and S-LOG, and compare.

-

I don't fully understand the USB audio part of these devices. Could you explain more about the reason behind your "abandon ship" (which cracked me up)? Indeed, expand on it. What I want to be able to do is record 4 to 8 separate mic tracks on my laptop. (BTW, what attracted me to the Yamaha was the voice effects--yep, the 13-year-old in me was like "yeah, I'm going to add Darth Vader to the podcast!" I'm sure my family would rather I didn't!)