Everything posted by maxotics

-

From the Sony technical document that you claim has "no mention of pushing and pulling":

3.1 Changing the Sensitivity (Push Process / Pull Process)

The most common method is to adjust the MASTER GAIN of the camera as shown in Table 4. The image contrast that appears on camera viewfinders and the on-set displays will remain consistent, hence it is easier to monitor.

3.1.2 Push Process

Increasing camera gain will improve camera sensitivity but will increase the camera noise floor. When extra dynamic range is required, the exposure value should be defined according to the light meter readings as in film.

3.1.3 Pull Process

Reducing the camera gain will improve the signal to noise ratio performance. This technique is suited for blue/green screen effects shots where pulling a clean key is of prime importance. It should be noted that the camera dynamic range will be reduced.

Chart 1. Comparing the Differences in Effects of Push/Pull Processing between S-Log and film

| | Push (+ve): Contrast | Push (+ve): Latitude | Push (+ve): Graininess | Pull (-ve): Contrast | Pull (-ve): Latitude | Pull (-ve): Graininess |
|---|---|---|---|---|---|---|
| Film | Increases | Narrows | Increases | Reduces | Widens | Decreases |
| S-Log | No changes | No changes | Increases | No changes | Narrows | Decreases |

Thanks @IronFilm!

-

Maybe all of us who have SOMEHOW lived without a talking refrigerator will now be rewarded. I strongly predicted that Samsung would not give up on their cameras. I was wrong, but not completely wrong, because they still sell them, and we must keep that in mind. It's still speculation what's going on with their R&D and factory. If Samsung is listening to all the passion for HDR around here (@jonpais, I'm talking to you), then the NX2 is on the way! 6K would greatly improve color texture in 4K HDR!

-

The dynamic range may be more perceptible, but that doesn't mean it gives the filmmaker more quality than the gain in color information, no matter how small. You accept above that the trade-off exists, and then try to argue with me again. I don't get it. It's always up to the filmmaker's subjective decision. I've said that from day one and will keep saying it. I only start arguing again when someone says the trade-off doesn't exist. Again, I don't know why we're arguing. Small, big, it's up to the filmmaker. As for @HockeyFan12's second article, which is from the beginning of Sony's S-LOG development, Sony writes, "S-Log is a gamma function applied to Sony’s electronic cinematography cameras, in a manner that digitally originated images can be post-processed with similar techniques as those employed for film originated materials". They weren't trying to replace rec.709. They were ONLY giving the filmmaker a way of shooting where he could push or pull the "digital negative" like a film negative. S-LOG was designed for filmmakers who were used to being able to push or pull their FILM! They didn't design it to provide a "better" image than what the camera already recorded in rec.709 with a LOG curve already applied, as @webrunner5 pointed out. Just a different one. In the exposure guide Sony gives these specs:

Table 1. F35 Dynamic Range in Cine Mode

| ISO | S/N | Exposure latitude over 18% | Exposure latitude below 18% | Total latitude (Dmin to Dmax) |
|---|---|---|---|---|
| 450 | 54.5 dB | +5.3 stops | -6.8 stops | 12.1 stops |
| 500 | 53.6 dB | +5.5 stops | -6.6 stops | 12.1 stops |
| 640 | 51.5 dB | +5.6 stops | -6.3 stops | 11.9 stops |

Notice how the signal-to-noise ratio is 54.5 dB at ISO 450 and 51.5 dB at ISO 640. That tells the filmmaker that the harder he "pushes" his digital negative, the more noise he will get. So think of the noise difference between shooting at ISO 400 on your Sony/Panasonic camera and ISO 800 in LOG gammas. The reason Sony didn't go into color loss is that any filmmaker who uses film is ACUTELY AWARE of color loss when pushing negative stock.

Now again, Sony isn't going to tell the users of their cameras how to shoot. But I am going to tell any young filmmaker who has only known digital video to be careful about jumping to conclusions about what 8-bit LOG gammas can and cannot do.
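A quick sketch (my own illustration, not from Sony's guide) of how to read the S/N column: a dB figure converts to a linear amplitude ratio as 10^(dB/20), and each stop of gain is a factor of 2, about 6.02 dB. The only data used are the three rows quoted from Table 1. The "gain alone" comparison assumes, as a simplification, that raising ISO scales the noise by the gain factor while the reference signal stays fixed.

```python
import math

# Rows quoted from Sony's Table 1 (F35, Cine mode):
# ISO -> (S/N in dB, stops over 18% grey, stops below 18% grey, total latitude in stops)
F35_TABLE = {
    450: (54.5, 5.3, 6.8, 12.1),
    500: (53.6, 5.5, 6.6, 12.1),
    640: (51.5, 5.6, 6.3, 11.9),
}

def db_to_amplitude_ratio(db: float) -> float:
    """Convert a signal-to-noise figure in dB to a linear amplitude ratio."""
    return 10 ** (db / 20)

def iso_change_in_stops(iso_from: float, iso_to: float) -> float:
    """Stops of gain separating two ISO settings (each stop doubles the gain)."""
    return math.log2(iso_to / iso_from)

# Sanity check: the over + under latitude columns should add up to the total column.
for iso, (_snr_db, over, under, total) in F35_TABLE.items():
    assert abs((over + under) - total) < 1e-9, iso

snr_450 = db_to_amplitude_ratio(F35_TABLE[450][0])
snr_640 = db_to_amplitude_ratio(F35_TABLE[640][0])
noise_increase = snr_450 / snr_640             # relative noise amplitude at ISO 640
stops = iso_change_in_stops(450, 640)          # roughly half a stop of extra gain
expected_db_drop = stops * 20 * math.log10(2)  # ~6.02 dB per stop, assuming noise scales with gain

print(f"ISO 450 -> 640: {stops:.2f} stops of gain")
print(f"measured S/N drop: {F35_TABLE[450][0] - F35_TABLE[640][0]:.1f} dB")
print(f"drop expected from gain alone: {expected_db_drop:.2f} dB")
print(f"noise amplitude rises by about {noise_increase:.2f}x")
```

On these numbers, the roughly half stop from ISO 450 to 640 lines up with the 3 dB drop in S/N, or about 1.4x more noise amplitude.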

-

Thanks, I think you've finally given me the information for my report, when I finish it! THANKS!!!! LOG makes sense for a 16-bit linear scan in old scanners because the data DOES need to be on a LOG scale to work with displays. That makes total sense to me. However, I believe some people assume that camera manufacturers don't already pack data into a LOG distribution in their cameras. That is, they believe LOG is a trick that gives more DR, which the camera manufacturers didn't "notice" until they put LOG gammas into their shooting profiles. I believe that is totally false. The gamma adjustments needed for electronic visual data in cameras have been understood from day one. Scanners are a whole other story. The links you gave appear to me as broken graphics. Can you try again? Thanks! @IronFilm What is distortion? My understanding is that it can result either from sound pressure overloading the microphone's diaphragm or from a data stream that has more dynamic range than the data containers can hold. The reason some don't notice a relationship between dynamic range and bit depth is that in audio, the equipment is more than powerful enough to capture all the electronic data produced by the microphone, at a resolution where people do not notice distortion. This is not the case in video. Current sensors produce way more data than consumer cameras and memory cards can handle. One can consult the table values I attached above. In any recording of physical measurements, the dynamic range must have enough bits to record whatever resolution of data is required to give a continuous signal. As you know, there are people who argue that we can discern distortion unconsciously even at a 44.1 kHz sample rate. What do they believe one needs to reduce that distortion? More samples, which means more data. Ultimate dynamic range = resolution / range-of-values.
If I can sum up my whole point about dynamic range: one can't think of range only (5-14 stops, whatever); one must factor in the resolution (the "dynamic") needed to give continuous color visually or continuous tone in sound.
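For the audio side of the question, the textbook relationship for ideal linear PCM is that each bit adds about 6.02 dB of signal-to-quantization-noise ratio (plus 1.76 dB for a full-scale sine wave). The sketch below just evaluates that standard formula; it is not a model of any real recorder, and it ignores dither, microphone self-noise and preamp noise, which usually set the practical floor.

```python
import math

def ideal_pcm_snr_db(bits: int) -> float:
    """Theoretical signal-to-quantization-noise ratio of ideal N-bit PCM for a
    full-scale sine wave: 20*log10(2**N) + 10*log10(1.5), i.e. ~6.02 dB/bit + 1.76 dB."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

for bits in (8, 16, 24):
    print(f"{bits:2d}-bit PCM: ~{ideal_pcm_snr_db(bits):.1f} dB")
```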

-

Yes, it's better in "10-bit" because it's shooting 4:2:2 chroma subsampling, but calling it "10-bit"... well, I'll leave that alone. I don't dispute that I'd rather grade 4:2:2 than 4:2:0. What I question is whether the 10-bit from the GH5 is the same as the 10-bit ProRes from the BMPCC, as @Damphousse. Anyway, like you, I don't have any real problems with 8-bit. Will the C-LOG from my Canon C100 look better on an HDR TV? Most likely! But there are reasons for that other than dynamic range. The whole "15 stops of DR in 8-bit" claim is beyond ignorant to me, but again, I'll say no more. The C100 gives a super beautiful image in 8-bit. I just started this thread hoping that some people who have worked with professional equipment for the past 15 years were reading and could shed light on the question of when LOG began, etc. I love all this technology, the cameras from Panasonic, Sony, BM, Canon, Nikon, etc. However, this is supposed to be a forum for filmmakers. I hope to help them understand what is going on under the hood so they can make better decisions. I hope to learn from them what they experience in their shooting. It's sad I can't give opinions about what HDR can, or cannot, do. I should probably leave this forum for a bit.

-

I'm not going to go that far, Mark. What I said about the limitations of HDR I still believe is true. If you believe I have given incorrect information, please post it right here. Please quote me verbatim and give technical proof of any technical inaccuracy. I have given technical data above to show the difficulties inherent in providing increased dynamic range. I am the closest person here to a real engineer, as I have worked with RAW data at a very low level. For example, when you can tell me you understand this, then let's talk: https://bitbucket.org/maxotics/focuspixelfixer/src/016f599a8c708fd0762bfac5cd13a15bbe3ef7ff/Program.cs?at=master&fileviewer=file-view-default "Those people who know more than you on HDR" are who? Sorry, but just because you can go out and buy a $5,000 camera and TV doesn't mean you know anything about how it is built, how it works, or how it measures up SCIENTIFICALLY against other TVs. Unlike you, I don't just post clips from expensive cameras of walking around in parks and train stations. I build software and experiments to test what cameras do. That IS my thing. I build gadgets to help with technology. I've designed and built cameras that take 1+ gigapixel images through a single optic (http://maxotics.com/service/). If you don't value what I've learned, fine, but I don't see why you needed to leave the nastiest comment I've ever read here on EOSHD.

-

I never said it wouldn't be more pleasing to me. I said I doubted it would solve the DR problem inherent in 8-bit equipment. It can be better for a lot of other reasons that have nothing to do with DR! I've said this a lot but feel my statements have been taken out of context. If I could do it all over again, I wouldn't have said or speculated anything about HDR. It just wasn't appropriate, because some people are just getting into HDR and it dilutes the worth of what they're doing (which is the last thing I want to do). For that, I am sorry.

-

You guys are killing me! You know, I want to be as liked as the next guy. When I first started this stuff years ago, I got into a huge fight with someone on the Magic Lantern forum. I insisted each pixel captures a full color. I went on and on and on, much like you guys are doing to me. I feel shame just thinking about it. In the end, I learned two things: 1) what a CFA is and what demosaicing does, and 2) always consider the possibility that I might be not just wrong, but horrendously, embarrassingly wrong. It's what we do after learning of our errors that defines us (hint, hint, @IronFilm). Anyway, after the Magic Lantern episode I try to be like the guy who educated me. He didn't give up on me, and I'm glad he didn't... but it's hard.

-

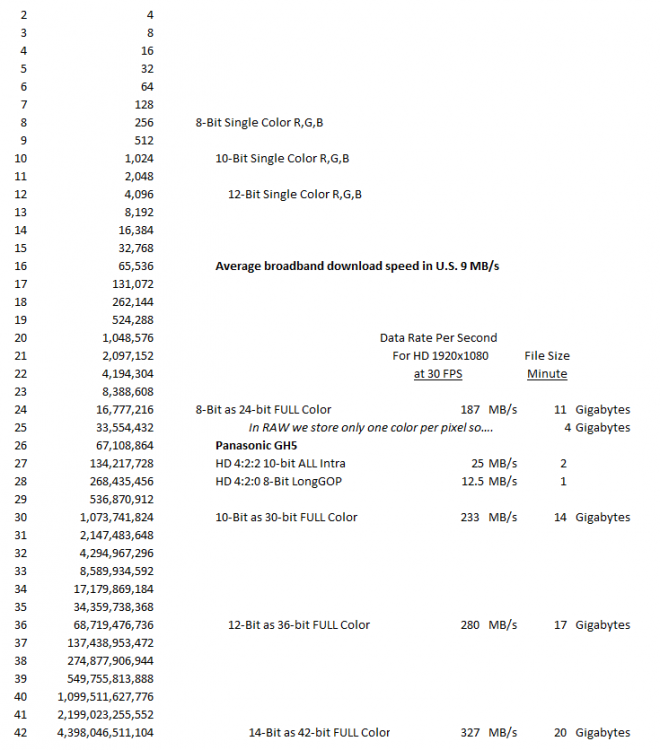

Yes, it is. The question is how 10 bits are measured. In RAW, 10 bits would mean 1,024 values for each of R, G and B. That is certainly more dynamic range than storing 256 values (8-bit). If we're talking about 10-bit in that first sense, we need 1,024 x 1,024 x 1,024 = 1,073,741,824 full-color values. How much memory do you need to store a pixel's color in that range? I'm attaching a table of data that I suggest studying and thinking about. The truth is, 10-bit is not 10-bit the way you (and I) would like to think about it. The extra 2 bits go into reducing chroma subsampling, or the amount of color compression the camera does across macroblocks in an 8-bit dynamic range. It does not increase a pixel's dynamic range! You can see this in the Panasonic GH5 specs I included.
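To check the arithmetic on color counts and storage, here is a small sketch assuming the standard Y'CbCr chroma-subsampling definitions (4:4:4 keeps full chroma, 4:2:2 halves it horizontally, 4:2:0 halves it both ways). The figures are uncompressed bits per pixel before any codec; they don't describe how any particular camera, including the GH5, processes data internally.

```python
# Count representable RGB colors, and average stored bits per pixel for
# Y'CbCr video at a given bit depth and chroma-subsampling scheme.

def rgb_color_count(bits_per_channel: int) -> int:
    """Number of distinct colors when each of R, G, B gets `bits_per_channel` bits."""
    return (2 ** bits_per_channel) ** 3

# Fraction of full-resolution samples kept for EACH chroma channel (Cb and Cr).
CHROMA_FRACTION = {
    "4:4:4": 1.0,   # no subsampling
    "4:2:2": 0.5,   # half horizontal chroma resolution
    "4:2:0": 0.25,  # half horizontal and half vertical chroma resolution
}

def bits_per_pixel(bit_depth: int, subsampling: str) -> float:
    """Uncompressed bits per pixel: one luma sample plus two (possibly subsampled) chroma samples."""
    return bit_depth * (1 + 2 * CHROMA_FRACTION[subsampling])

print(f"8-bit RGB colors:  {rgb_color_count(8):,}")
print(f"10-bit RGB colors: {rgb_color_count(10):,}")
for depth in (8, 10):
    for scheme in ("4:2:0", "4:2:2", "4:4:4"):
        print(f"{depth}-bit {scheme}: {bits_per_pixel(depth, scheme):g} bits/pixel")
```

In this accounting, bit depth multiplies every stored sample, while the subsampling scheme sets how many chroma samples there are, so 10-bit 4:2:2 comes to 20 bits per pixel against 12 for 8-bit 4:2:0.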

-

Sorry, I'm just frustrated. I believe you get everything I'm saying. My guess is that you have the reverse of Jon's blind spot. Sorry! You obviously shoot with high-end equipment, so your cameras have fat-pixel sensors and powerful electronics. LOG IS useful to you. But I believe that sometimes, when you think about LOG, you forget that you're thinking about LOG in high-bit-depth or cinema-sensor contexts. Many people on this forum have never shot RAW or used high-bit-depth cameras. All they know, and have, is 8-bit. That's always what I'm focused on. Anyway, THANK YOU SO MUCH for your observations. I haven't used the equipment you have, and I certainly don't have your experience, so I find your comments extremely interesting. Now I hope we're getting somewhere. I didn't say that Sony and Panasonic were lying. But I do question what they mean by 10-bit video. @HockeyFan12, do you find that Sony consumer and Panasonic cameras have true 10-bit, like an Arri? I don't believe they do. I believe they are 10-bit in the sense of adding a couple of decimal places to the internal 24-bit color values, but I don't believe they are 10-bit in saving 1,024 levels per color channel. Let me know if my question doesn't make sense.

-

Yes! Because C-Log has been tuned to give the biggest increase in the DR "look" without badly compromising color. It's a beautiful look, but it also comes from a sensor made for video. Anyway, I shoot LOG, and I never said anyone shouldn't. All that said, C-Log isn't my first choice.

-

Oh, I could pull my hair out! Those Alexa files (HD) are at around 42 MB/s; in other words, pretty close to what you need for a BMPCC or ML RAW. In 4K it would be 4x that amount. 10-bit isn't the same for all cameras. That is, 10-bit on an Alexa isn't the same as 10-bit on a GH5, because the former is, in the case above, recording 4:4:4, which is essentially full-color compressed RAW (no chroma subsampling). It's not a "tiny fraction of the size" in my book. It's more like half the size of RAW, which, don't get me wrong, is nice! No matter how many ways or times I try, some people don't want to read the fine print or, again, figure out whether they're really getting 10 bits of DR with no color loss. @EthanAlexander I don't question your viewing experience. Again, I've never said HDR can't be good. The disconnect here is that you and Jon are looking at images where the brain doesn't care if mid-tone color is reduced. If I'm shooting a beach, or a landscape, with no one in it, I would shoot LOG. Again, I never said one shouldn't use LOG. However, if a person were the subject on a beach, I might not shoot LOG, to maintain the best skin tones. This is a subjective judgment! I might shoot LOG anyway. However, if I did, I would not expect the LOG footage to give me skin tones as nice as I would have gotten shooting a standard profile and letting the sky blow out. Your example above demonstrates my point. In the non-HDR version of the truck, the red headlights are nice and saturated. The image is contrasty, not because a standard profile is naturally contrasty, but because most of the image data is OUTSIDE the sensor's ability to detect it without a lot of noise. In your second image, you have more detail in the street, and if that's what you're going for, good! But look at the truck's red headlight and the yellow paint. It's all washed out.
And if you go back to your editor and try to saturate them, you will get close to the other image, but this time with high contrast and crudely saturated colors, a LOSS against your original image, which at least had nicely saturated colors around your center exposure. It's the same with your helicopter shot. If you look for rich colors, you won't find them in the HDR. Again, it all depends on which look you're going for. If you don't like nuanced saturated colors, then your HDR is great. I do favor saturated colors. We should respect each other's values, right? For anyone to say I can get what I want in HDR is fine, but they need to prove it to me. The images you posted just confirm my experience. But thanks for posting them!!!!!!! We all learn by looking at evidence! This is why I wish Jon would be more careful about accusing me of things. I never said the manufacturers are covering anything up. I said they have no incentive to release tests or specifications of their consumer equipment. No professional gives an arse about any of the stuff I'm talking about, because they just go out and get a camera that gives them what they want. If Netflix gave me money for a show, do you think I'd shoot it on a DSLR? Arri, here I come! I'm confining myself to the subject of this blog, which is understanding and getting the most out of consumer equipment.
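For a sense of scale behind the "half the size of RAW" comparison, this sketch computes uncompressed data rates from frame size, bit depth, chroma subsampling and frame rate. The 24 fps frame rate and the 10-bit 4:4:4 format for the Alexa HD clips are assumptions for illustration, and MB here means 10^6 bytes.

```python
# Uncompressed video data rate from frame size, bit depth, chroma subsampling and frame rate.

CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}  # per chroma channel

def uncompressed_mb_per_s(width: int, height: int, bit_depth: int,
                          subsampling: str, fps: float) -> float:
    """Uncompressed data rate in megabytes (10**6 bytes) per second."""
    bits_per_pixel = bit_depth * (1 + 2 * CHROMA_FRACTION[subsampling])
    return width * height * bits_per_pixel * fps / 8 / 1e6

# Assumed formats for illustration: 24 fps throughout.
hd = uncompressed_mb_per_s(1920, 1080, 10, "4:4:4", 24)
uhd = uncompressed_mb_per_s(3840, 2160, 10, "4:4:4", 24)
hd_420_8bit = uncompressed_mb_per_s(1920, 1080, 8, "4:2:0", 24)

print(f"HD 10-bit 4:4:4 @24p:  {hd:.1f} MB/s uncompressed")
print(f"UHD 10-bit 4:4:4 @24p: {uhd:.1f} MB/s uncompressed ({uhd / hd:.0f}x HD)")
print(f"HD 8-bit 4:2:0 @24p:   {hd_420_8bit:.1f} MB/s uncompressed")
# Compression ratio implied by the ~42 MB/s figure quoted above for the HD files
print(f"~42 MB/s implies roughly {hd / 42:.1f}:1 compression of HD 10-bit 4:4:4")
```

UHD has exactly four times the pixels of 1920x1080 HD, which is where the 4x figure comes from.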

-

HDR is a scheme to sell more television sets. They are not artists living off free love. How much HDR technology can, or will, deliver is the question. I NEVER said manufacturers were lying about bit depth. Please, if you're going to put words in my mouth, quote me. I thought I answered all your questions. I don't know exactly what the eye can take in. I only explained my experience. You said it looks fantastic. I said, 'great, I look forward to it'. Yes, you can't see the difference between TRUE 8-bit and 10-bit image data, but that was NOT what we were talking about. We're talking about 8- and 10-bit consumer video. And I don't understand why you fight me so much on these technical issues when you say you're not interested in math. I was never good at math, but I have taught myself what I need to understand these issues, I believe. I made that effort. You don't have to make the effort if you don't want to. But to fight me on it when I have done the work, and you haven't, is, well, disrespectful. You may think I have disparaged what you said about HDR. If you read it again, you will see I have not done that. I have only pointed out technical problems that I would think they're up against. Because, as I've said repeatedly, I have not seen good HDR, I can only speculate. And for the umpteenth time, if LOG can't really fit good color AND extended DR into 8-bit, how will HDR make an end-run around that? Again, I'm not saying there isn't more to the technology. I'll just have to see, literally.

-

Am I speaking English? I don't disapprove of HDR or LOG or anything. I only DISAGREE with claims that LOG can fit more DR into a fixed data depth without, essentially, overwriting data. And I'm not making a judgment on you, or anyone else, who believes they can grade 10-bit footage better than 8-bit. This stuff is esoteric and, in the scheme of things, completely pointless. One shoots with what one can afford. Even if I approved, who gives a sh_t? I've gone to great pains to figure some of this arcane stuff out. If you say, "I don't care what you say, Max, I love my image," I have no answer to that! If you say, 'this isn't working as well as I thought it would' (which is what happened to me and got me on this whole, long, thankless trip), then I have some answers for you. The short answer is that the manufacturers have already maximized video quality in 8-bit, and there is no way of recording increased DR unless you give up color fidelity. Therefore, if color fidelity is your prime goal, don't shoot LOG. In 10-bit, theoretically, there should be more DR, but 10-bit isn't true 10-bit from 10 bits of RGB values; it is 10-bit in the sense that when three 8-bit values are averaged, say 128, 129 and 131, it saves that as 129.33 instead of 129. If you want me to explain this in greater depth, let me know. Though it doesn't seem you trust anything I say.
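The 129.33 example can be made concrete with a toy model. This is a hypothetical illustration of the averaging scenario described above, not a documented camera pipeline: three 8-bit samples are averaged, and the mean is stored either as an 8-bit code or as a 10-bit code with four steps per 8-bit step (a simplifying x4 scale rather than 1023/255).

```python
# Hypothetical toy model, not any camera's documented pipeline: average three
# 8-bit samples, then store the mean as an 8-bit code (whole steps) or a
# 10-bit code (quarter steps, using a simple x4 scale).

def average_to_code(samples_8bit, out_bits):
    """Average 8-bit samples and quantize the mean to an `out_bits`-bit code (out_bits >= 8)."""
    mean = sum(samples_8bit) / len(samples_8bit)
    scale = 2 ** (out_bits - 8)   # 4 finer steps per 8-bit step when out_bits == 10
    return round(mean * scale)

def code_in_8bit_units(code, out_bits):
    """Express an `out_bits`-bit code on the 0-255 scale for comparison."""
    return code / 2 ** (out_bits - 8)

samples = [128, 129, 131]
code8 = average_to_code(samples, 8)
code10 = average_to_code(samples, 10)
print(f"mean of samples: {sum(samples) / len(samples):.2f}")
print(f"8-bit code:  {code8} -> {code_in_8bit_units(code8, 8):.2f}")
print(f"10-bit code: {code10} -> {code_in_8bit_units(code10, 10):.2f}")
```

With four sub-steps per 8-bit step, the nearest 10-bit value to 129.33 is 129.25, so in this toy model the stored value is finer than 129 but not exactly 129.33.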

-

98% false. You might be enjoying a placebo effect. But I'm not going to beat a dead horse here. In order to display a continuous color gradient, say, each step (code value) must contain a color that will blend in with the next color. If it doesn't, we notice banding. I'm just using banding because it is the easiest way to visualize when the bit depth is great enough to spread a color out evenly through the data space. So let's assume that we never see banding in 5 stops of DR with 256 shades of red (8-bit). If we reduce it to 200 shades, we notice banding (and if we increase it to 512, we don't notice any difference). Okay, so let's say we shoot 10 stops: how many bits do we need to maintain smooth color, assuming, again, that we accept that we needed 256 values for 5 stops? Please think this out, @IronFilm, and explain what bit depth you came up with and why. Then explain why bit depth and DR are not joined at the hip. To put this in audio terms, which you should know more about than I do, why don't you record your audio in 8 bits, which, I believe, is closer to what is distributed than the 16-bit you probably use? Is DR in audio not connected to bit depth? If I told you I had a new "Audio LOG" technology that would give you the same quality you get from your mics at 8-bit, what would you say to me?
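One way to work the banding question under the premise stated above (256 codes spread over 5 stops is just enough) is to hold the number of codes per stop constant and ask how many bits a larger range needs. This sketch assumes an idealized log encoding that gives every stop the same number of codes; real camera curves are not perfectly even, and noise and compression also affect visible banding.

```python
import math

# Premise from the post: 256 codes (8-bit) spread evenly over 5 stops is just
# enough to avoid visible banding.
BASELINE_CODES = 256
BASELINE_STOPS = 5

def codes_needed(stops: float) -> float:
    """Codes required to keep the baseline density of codes per stop."""
    return BASELINE_CODES * stops / BASELINE_STOPS

def bits_needed(stops: float) -> int:
    """Smallest bit depth holding that many codes, assuming an idealized log
    encoding that gives every stop the same number of codes."""
    return math.ceil(math.log2(codes_needed(stops)))

for stops in (5, 10, 12, 14):
    print(f"{stops:2d} stops -> {codes_needed(stops):6.1f} codes -> {bits_needed(stops)} bits")
```

Under that premise, 10 stops need 512 codes (9 bits), and 14 stops need about 717 codes, which rounds up to 10 bits.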

-

Me too. I'm bored with video/photography lately!!!!! Again, Jon, I appreciate that you're reporting back from HDR land! I can definitely see how dual ISO, or rather dual-voltage tech, could fit right in!

-

Again, I recorded 10-bit video using the Sony X70. I could find no real difference in DR compared to 8-bit. I have no idea whether the GH5 and Atomos Ninja fully "saturate" the 10-bit space with visual data. I haven't seen anything online that leads me to believe there is a huge difference. In theory, I'm with you! 10-bit video should be serious competition for RAW. I just haven't seen it. Jon, really, I'm not trying to claim there is any conspiracy or that 10-bit or HDR can't deliver real improvements. I just haven't seen them in camera recording. So, my opinion on the benefits each new technology delivered: large sensors with fat pixels (C100/Sony A7S, etc.): HUGE. 4K: HUGE. LOG: very useful, but overused. 10-bit shooting: SKEPTICAL with existing tech.

-

Please, Jon. I'm not accusing them of deceiving anyone. It's NOT their job to educate people in how to use cameras. They are completely right to let the user decide when, or if, to use LOG. From my experience, users can't be educated anyway. I'm only using VW as an example that many companies are desperate for market share and aren't looking at things the way others are. And I'm only pointing out that the data is available and one should at least ask why it isn't given out. As for whether it's 9-1/2 or 10-bit, you can do that experiment yourself (it's what I'm trying to do, but it's taking me time to build up the test setup). Go out and shoot a high-DR scene in 8-bit, 10-bit and RAW (if possible), then show how much more DR and color is in the 10-bit footage than in the 8-bit. My first experiments, last year, indicated that except for some very small improvements in banding, there is no real extra DR in 10-bit.
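Before shooting the real test, the pure quantization part of it can be simulated: quantize a smooth synthetic ramp at 8 and 10 bits and count how many distinct code values it lands on. Fewer levels over the same ramp means wider bands. This only models an ideal quantizer over an arbitrary ramp (40% to 50% of full scale); it says nothing about sensor noise, in-camera processing or the codec, which is exactly what the real-world comparison is for.

```python
# Count how many distinct code values a smooth gradient lands on at different bit depths.
# Fewer distinct values across the same gradient means wider, more visible bands.

def distinct_levels(start: float, end: float, bits: int, samples: int = 10_000) -> int:
    """Quantize a linear ramp from `start` to `end` (0.0-1.0 of full scale) and count unique codes."""
    max_code = 2 ** bits - 1
    codes = set()
    for i in range(samples):
        value = start + (end - start) * i / (samples - 1)
        codes.add(round(value * max_code))
    return len(codes)

# A gentle gradient, e.g. a patch of sky covering 10% of the signal range (arbitrary example)
for bits in (8, 10):
    print(f"{bits}-bit: {distinct_levels(0.4, 0.5, bits)} distinct levels")
```

An ideal 10-bit quantizer gives roughly four times as many levels over the same ramp; whether a given camera actually delivers that after processing and compression is the open question.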

-

My latest video covered this subject. The short answer is that the sensor is helpless. When making video, the manufacturer will try to maximize visual information in its 8-bit space. I don't believe 10-bit really exists at the consumer level because camera chips, AFAIK, are 8-bit. I am NOT AN EXPERT, in that I don't work for a camera company and never have. I don't have an engineering degree. I'm completely self-taught. My conclusions are based on personal experiments for which I have to build a lot of the tests. I'm happy to help anyone find an excuse to ignore me. The question you want to ask yourself is how much the manufacturer knows that it isn't telling you, and that no one else has an economic incentive to test. This isn't conspiracy stuff; this is business as usual. To see this on a trillion-dollar scale, watch the latest Netflix documentary on VW, in the series "Dirty Money". Anyway, Sony and Panasonic know exactly how much color information is lost for every LOG "pulling" of higher-DR data and how noisy that data is. Since they sell many cameras based on users believing they're getting "professional" tools, why should they point out their weaknesses? Same for the cottage industry of color profiles. Why don't those people do tests to tell you how much color information you lose in LOG? There is no economic incentive here. I'm only interested because I'm one of those hyper-curious people. A case in point is Jon's comment. No offense, Jon, but think about what this implies. It implies that 8-bit LOG can capture a fully saturated HDR image. I don't believe it can, compared to a RAW-based source. Again, the reason is that LOG in 8-bit must sacrifice accurate color data (mid-tones) for inaccurate color data (extended DR). This is gobsmackingly obvious when one looks at the higher ISO that LOG shoots at. If you're going for the lowest-noise image, will you end up with a better image shooting HDR LOG at ISO 1600 or rec.709 at ISO 400?
Again, everyone is constrained by current bandwidth and 8-bit processors. All the companies Jon mentioned want to sell TVs, cameras, etc. They need something new. The question is, are they going to deliver real benefits, or will they, like VW, just plain lie about what's really happening when you take your equipment out? If you don't know, VW's TDI diesel was supposed to be very clean. It was, when on a dyno. As soon as one turned the steering wheel, the software dropped out of its test mode and the car spewed out 40x more pollutants than the driver thought when they bought it.

-

Hi Mark. We move slowly between dark and bright, inside to outside, etc. On screen, scenes can jump between inside and out every 3 seconds. Too much flickering brightness would give one a headache, like neon signs or strobes. If I can expose well, I can't see much of a difference either. However, I can improve exposure after the fact better in RAW. Also, there is something about video compression, especially 4:2:0, that adds a lot of unnatural noise (lack of chroma) to the image. RAW provides a natural "grain"-like look to the footage, and allows me to set the amount of motion compression, etc. I have a C100 now, which gives me a good enough image that I don't feel the need to shoot RAW. Also, the A6300's downsampled 4K is excellent. In short, I agree: with 4K pretty standard, many of the problems of 8-bit have been eliminated. I would be the last to accuse you of delusion. The last time I was in Best Buy I couldn't see a good demo. Maybe I'll try Micro Center. I'm glad to hear you do see an improvement. Something to look forward to! I never want anyone to think I'm against any technology. FAR FROM IT! Quite the opposite. That new 8K Dell monitor? I want it!

-

That supports my findings that standard profiles maintain the most color information (white balance solves the equation where R+G+B equals white; the more RGB values you have, the better), while LOG trades color data for what is essentially gray-scale DR. Many people confuse our brain's ability to composite 20 stops of DR (from multiple views) with the 5 stops of DR we can discern in a single view, where our pupils remain the same size. When one is outdoors, say, one doesn't see the bright color of a beach ball and the clouds in the sky at the same time; the brain creates that image from looking at the sky with one pupil size, then at the ball with another. Even so, we too have limitations, which is why HDR photography can quickly look fake. We expect a certain amount of detail-less brightness. Indeed, if you look at shows like Suits or Nashville, they use a blown-out background style on purpose. No one there is saying, "we need more detail in the clouds outside the window". My monitor can go brighter. For more DR, that is what you do. Again, brightness is not encoded in your video; it is assumed you'll set your display's physical brightness range to match. You want 8 stops of DR? If your monitor can do it, just turn up the brightness! I don't, because IT IS UNCOMFORTABLE. My eyes can't tolerate it. To anyone who says they want more DR, I say: take out your spot meter, set your display to 7 stops of brightness, and determine what that REALLY means to you. Don't just read marketing crap. See for yourself (I'm talking to "you" as in anyone). As I mentioned in one of those videos, by the time we watch anything on Netflix we're down to 5% of the original image data captured by our camera. People could go out and buy higher-bit-depth Blu-ray videos, but they don't. Unless you're shooting for a digital projector in a movie theater, video quality is hamstrung by technological limitations in bandwidth, power consumption, etc., NOT by software, FIRMWARE or video CODECs.
None of that matters anyway; everyone is happy with current display technology. For me, the bottom line is simple. Last-mile tech can't change much. If you want the best image, you need to shoot 36-42-bit color (RAW or ProRes) and have powerful computers to grade and edit it. After that, everyone must drink through the same fizzy straw.
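To turn "set your display to 7 stops of brightness" into something a spot meter or luminance reading can check: each stop is a doubling of luminance, so N stops is a 2^N:1 contrast ratio, and a pair of white/black readings converts back with log2. The nit values in the example are hypothetical.

```python
import math

def stops_to_contrast_ratio(stops: float) -> float:
    """Each stop doubles luminance, so N stops is a 2**N : 1 contrast ratio."""
    return 2 ** stops

def contrast_ratio_to_stops(white_nits: float, black_nits: float) -> float:
    """Stops of dynamic range between a display's measured white and black levels."""
    return math.log2(white_nits / black_nits)

for stops in (5, 7, 8):
    print(f"{stops} stops = {stops_to_contrast_ratio(stops):.0f}:1")

# Example: a display measured at 100 nits white over a 0.78 nit black (hypothetical readings)
print(f"{contrast_ratio_to_stops(100, 0.78):.1f} stops")
```

Spot-meter readings in EV can simply be subtracted, since 1 EV is one stop.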

-

Excellent point! Exactly the kind of question that fascinates me and for which there is no data to be found. With 4K, one gets better color in post-compression highlights by reducing color distortions that pass through from the CFA. So has 4K cured that problem? How much of one, or the other? I don't know what anyone here thinks, but I've noticed a shift in filmmaking philosophy about color. For example, I've been watching the "Dirty Money" docs on Netflix (highly recommended). Each scene has its own color model. One person might be interviewed and look flat, like a LOG gamma. Another scene might be rich in saturated colors. There's something to be said for a change of look to keep things fresh. So even though, in a perfect world, an old fogey producer might want everyone to shoot with the same gamma and recording tech, it seems as if the issues I'm pointing out are becoming less of an aesthetic problem, not because people favor LOG over rec.709, say, but because they want some variety in their viewing experience. I'm certainly getting used to it.

-

Yes, that article, from 2009, goes into the trade-offs of LOG gammas! My theory is that many young filmmakers identified LOG with professional work, so when LOG appeared on their cameras they began to shoot with it, forgetting the fine points of data capture mentioned in technical papers like that. Also, the cottage industry of color profile makers set out to "fix" LOG footage, conveniently forgetting to educate their customers that sometimes what a filmmaker needs, to get the colors they want, is to shoot in a normal rec.709 gamma.

-

I like to think of myself as an advocate of understanding how one's camera works. Linear vs LOG is exhibit A in many filmmakers' choice to skip math class and watch cartoons all day. I've done a couple of videos recently on my YT Maxotics channel, which will be the foundation for my new video on LOG. Anyway, the short answer is that a camera records a set of data (pixel) points. Each value is meant to convert into a color. The manufacturer gives you a few options with color profiles, like neutral, portrait, landscape. What's the technical difference between linear and LOG? Simply a difference in equation, NOT a difference in data. Before anyone jumps down my throat saying there is a difference in data because a LOG gamma will pull different data points from the sensor: yes, that's true, AND IT IS THE REAL DIFFERENCE. The difference between linear and LOG as it applies to a shooting gamma is the choice of data. It is NOT linear vs LOG. So what IS that difference? Yes, "'linear' profiles (which end up in a LOG distribution no matter what) .... have less highlight information", BUT one can't claim that without the data trade-off on the other end, which is "LOG profiles have more highlight information than linear but less color information in the mid-tones". So my question to you, or anyone, is: why would you always want to decrease the quality of your skin tones, or mid-tones, to get more highlights in your image? If that's your "look", as I've said a gazillion times, ACES! But to believe you're shooting better images than your friend with the non-LOG camera is simply false if you're both shooting to maximize mid-tone saturation. Part of the confusion is due to various studies about how sensitive we are to light at the high and low end. Again, some have erroneously concluded that LOG can "fix" a problem with linear video data, as if the people making the cameras are complete idiots. No, all h.264 video ALREADY applies a gamma curve to correct for that biological truth.
LOG solves a very small problem: what to do if you want to shoot a very desaturated style (like a zombie movie), or if you don't want your sky completely blowing out in an outdoor shot where skin tones aren't important. I'll put it bluntly. Anyone who shoots LOG for everything, and does not do so to get a specific flat style, is not getting the best data out of their camera. Anyone who believes a LUT will put back the mid-tone color that a LOG gamma removes is also expecting the impossible. Finally, there is the question of 10-bit video. Theoretically, it should be able to pull in those highlights and keep the same mid-tone saturation you'd get in 8-bit. My preliminary tests with Sony cameras show that it doesn't happen. I don't know why. I haven't seen any comparison video of 10-bit vs 8-bit where there is a significant improvement in DR without a loss of saturation. Some suggest it is video compression getting in the way. It's something I will be testing as soon as I'm finished with "work-work".
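To illustrate the "difference in equation" point, this sketch counts how many 8-bit code values fall inside each stop under a purely linear mapping versus a toy log mapping that spaces codes evenly over 10 stops. The log curve is hypothetical (not Sony S-Log, Canon C-Log or a rec.709 gamma), the 10-stop range is arbitrary, and the sketch models only code allocation, not sensor noise or color.

```python
import math

BITS = 8
MAX_CODE = 2 ** BITS - 1
STOPS = 10  # scene range to encode (an arbitrary example)

def linear_encode(x: float) -> int:
    """Linear: code proportional to scene light (x in (0, 1], 1.0 = clipping point)."""
    return round(x * MAX_CODE)

def log_encode(x: float) -> int:
    """Toy log curve: equal code spacing per stop across STOPS stops below clipping.
    A hypothetical curve, NOT Sony S-Log, Canon C-Log or rec.709."""
    stops_below_clip = -math.log2(x)
    position = max(0.0, 1 - stops_below_clip / STOPS)  # 1.0 at clip, 0.0 at the bottom
    return round(position * MAX_CODE)

# Codes available inside each stop, from the brightest stop (1) downward
for stop in range(1, STOPS + 1):
    top, bottom = 2.0 ** -(stop - 1), 2.0 ** -stop
    lin_codes = linear_encode(top) - linear_encode(bottom)
    log_codes = log_encode(top) - log_encode(bottom)
    print(f"stop {stop:2d}: linear {lin_codes:3d} codes, log {log_codes:3d} codes")
```

Linear spends half its codes on the brightest stop and has almost nothing left for the bottom stops; the toy log curve gives each stop about 25 codes. Both use the same 255 steps, so the log curve gains coverage lower down by giving the top three stops fewer codes than linear does.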

-

Does anyone know when the first video cameras, both professional and consumer, that recorded a LOG gamma were introduced? I assume something like the Sony F35? Anyway, each manufacturer and an approximate date would be helpful. Also, does anyone have an opinion on when most professional filmmakers moved from LOG on professional 8-bit cameras to RAW formats (like on RED, etc.)? THANKS! I want to use this information for the next video I do on the subject.