-

Posts

160 -

Everything posted by joema

-

There are several recent trends that make this difficult to assess. Past experience may not be a reliable indicator. Historically most use of RAW video has been proprietary formats which were expensive and complicated in both acquisition and post. By contrast both Blackmagic's BRAW and ProRes RAW are cheap to acquire and easy to handle in post. However they are both fairly recent developments, especially the rapidly-growing inexpensive availability of ProRes RAW via HDMI from various mirrorless cameras. If a given technology has only been widely available for 1-2 years, you won't immediately see great penetration in any segment of the video production community. There is institutional inertia and lag. However, long before BRAW and ProRes RAW, we had regular ProRes acquisition, either internally or via external recorders. Lots of shops have used ProRes acquisition because it avoids time-consuming transcoding and gives good quality in post. BRAW or ProRes RAW are no more complex or difficult to use than ProRes. This implies in the future, those RAW formats may grow and become somewhat more widely used, even in lower end productions. Conflicting with this is the more widespread recent availability of good-quality internal 10-bit 4:2:2 codecs on mirrorless cameras. I recently did a color correction test comparing 12-bit ProRes RAW from a Sony FS5 via Atomos Inferno to 10-bit 4:2:2 All-Intra from an A7SIII, and even when doing aggressive HSL masking, the A7SIII internal codec looked really good. So the idea is not accurate that the C70 is somehow debilitated because of not shipping with RAW capability on day 1. OTOH Sony will also face this same issue when the FX6 is shortly released. If it doesn't at least have ProRes RAW via HDMI to Atomos, that will be a perceptual problem because the A7SIII and S1H have it. It's also not just about RAW -- regular ProRes is widely used, e.g. 
various cameras incl. Blackmagic record this internally or with an inexpensive external recorder. The S1, S1H and A7SIII can record regular 10-bit 4:2:2 ProRes to a Ninja V, the BMPCC4k can record that internally or via USB-C to a Samsung T5, etc. With a good quality 10-bit internal codec you may have less need for either RAW or ProRes acquisition. OTOH I believe some camera mfgs have an internal perceptual problem which is reflected externally in their products and marketing. E.g., I recently asked a senior Sony marketing guy what the strategy is for getting regular ProRes from the FX9. His response was why would I want that, why not use the internal codecs. There is some kind of disconnect, worsened by the new mirrorless cameras. Maybe the C70 lack of RAW is another manifestation. This general issue is discussed in the FX9 review starting at 06:25. While about the FX9 specifically, in broader terms the same issue (to varying degrees) affects the C70 and other cameras:

-

I can confirm a top-spec 2019 MBP 16 using Resolve Studio 16.2.5 has greatly improved playback performance on 400 mbps UHD 4k/29.97 10-bit 4:2:2 All-Intra material from a Panasonic GH5, whereas FCPX 10.4.8 under Catalina 10.15.6 on the same hardware is much slower. Also Resolve export of UHD 4k 10-bit HEVC is very fast on that platform, whereas FCPX 10.4.8 is very slow (for 10-bit HEVC export; 8-bit is OK). In these two cases for some reason FCPX is not using hardware acceleration properly but Resolve is. This only changed in fairly recent versions of Resolve, and I expect the next version of FCPX will be similarly improved. Beyond those codecs it seems the latest 2020 iMac 27 has improved hardware acceleration for some codecs. Of course this will vary based on whether the NLE leverages this, as shown above. Max Yuryev will probably post those results next week. This may be due to the Radeon Pro 5700 XT having AMD's new VCN acceleration logic. VCN is the successor to VCE. It's a confusing area since there are three separate acceleration hardware blocks: Intel's Quick Sync, Apple's T2 and AMD's UVD/VCE (and now VCN). The apps apparently just call the macOS VideoToolbox framework and request acceleration. Why that works on Resolve 16.2.5 and not FCPX 10.4.8 for the above two cases is unknown.

-

On Mac you can use Invisor which also enables spreadsheet-like side-by-side comparison of several codecs. You can also drag/drop additional files from Finder to the comparison window, or select a bunch of files to compare using right-click>Services>Analyze with Invisor. I think it internally uses MediaInfo to get the data. It cannot extract as much as ffprobe or ExifTool but it's much easier to use and usually sufficient. https://apps.apple.com/us/app/invisor-media-file-inspector/id442947586?mt=12
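If you want the ffprobe route for the handful of fields that matter most for editing (codec, profile, bit depth, chroma subsampling, bit rate), here is a minimal Python sketch. It assumes ffprobe is installed and on your PATH. The function names and the sample values are made up for illustration, and the bit depth and chroma figures are inferred from the pixel format name (e.g. yuv422p10le), which covers the common YUV formats but not every case.

```python
import json
import re
import subprocess


def summarize_stream(stream: dict) -> dict:
    """Reduce one ffprobe stream entry to the fields that matter for editing."""
    pix_fmt = stream.get("pix_fmt", "")
    # Names like yuv422p10le carry chroma subsampling and bit depth;
    # names without a depth suffix (e.g. yuv420p) are 8-bit.
    depth = re.search(r"p(\d+)(le|be)$", pix_fmt)
    chroma = re.search(r"yuvj?(\d{3})", pix_fmt)
    bit_rate = stream.get("bit_rate")
    return {
        "codec": stream.get("codec_name"),
        "profile": stream.get("profile"),
        "bit_depth": int(depth.group(1)) if depth else (8 if pix_fmt else None),
        "chroma": ":".join(chroma.group(1)) if chroma else None,
        "mbps": round(int(bit_rate) / 1_000_000, 1) if bit_rate else None,
    }


def probe_video(path: str) -> dict:
    """Run ffprobe on the first video stream of a file and summarize it."""
    cmd = [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",
        "-show_entries", "stream=codec_name,profile,pix_fmt,bit_rate",
        "-of", "json",
        path,
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    streams = json.loads(out).get("streams", [])
    if not streams:
        raise ValueError(f"no video stream found in {path}")
    return summarize_stream(streams[0])


# Illustrative values only, not taken from a real camera file.
sample = {
    "codec_name": "h264",
    "profile": "High 4:2:2 Intra",
    "pix_fmt": "yuv422p10le",
    "bit_rate": "400000000",
}
print(summarize_stream(sample))
```

Calling probe_video() on each clip in a folder gives a quick text version of the side-by-side comparison, though Invisor is still easier if you just want to eyeball a few files.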

-

Most commonly H264 is 8-bit Long GOP, sometimes called IBP. This may date to the original H264 standard, but you can also have All-Intra H264 and/or 10-bit H264, it's just less common. I don't have the references at hand but if you crank up the bit rate sufficiently, H264 10-bit can produce very good quality, I think even the IBP variant. The problem is by that point you're burning so much data that you may as well use ProRes. In post production there can be huge differences in hardware-accelerated decode and encode performance between various flavors of a given general type. E.g., the 300 mbps UHD 4k/29.97 10-bit 4:2:2 All-Intra material from a Canon XC15 was very fast and smooth in FCPX on a 2015 iMac 27 when I tested it, but similar material from a Panasonic GH5 or S1 was very sluggish. Even on a specific hardware and OS platform, a mere NLE update can make a big difference. E.g., Resolve has had some big improvements on certain "difficult" codecs, even within the past few months, at least on Mac. Since HEVC is a newer codec, it seems that 10-bit versions are more common than with H264 (especially as an NLE export format), but maybe that's only my impression. I think YouTube and Vimeo will accept and process 10-bit Long GOP HEVC OK, but I tend to doubt they'd accept 10-bit All-Intra H264. There are some cameras that do 10-bit All-Intra HEVC such as the Fuji X-T3. I think some of these clips include that format: https://www.imaging-resource.com/PRODS/fuji-x-t3/fuji-x-t3VIDEO.HTM But there's a lot more involved than just perceptual quality, data rate or file size. Once you expand post production beyond a very small group, you have an entire ecosystem that tends to be reliant on a certain codec; DNxHD or ProRes are good examples. It almost doesn't matter if another codec is a little smaller or very slightly different in perceptual quality on certain scene types, or can accommodate a few more transcode cycles with slightly less generational loss. 
Current codecs like DNxHD and ProRes work very well, are widely supported and not tied to any specific hardware manufacturer. There's also ease of use in post production. Can the codec be played with common utilities or does it require a special player just to examine the material? If a camera codec, is it a tree-like hierarchical structure or is it a simple flat file with all needed metadata in the single file? Testing perceptual quality on codecs is a very laborious complex process, so thanks for spending time on this and posting your results. Each codec variant may react differently to certain scene types. E.g, one might do well on trees but not water or fireworks. Below are some scenes used in academic research. https://media.xiph.org/video/derf/ http://medialab.sjtu.edu.cn/web4k/index.html http://ultravideo.cs.tut.fi/#testsequences

-

There is no one version of H.265. Like H.264 there are many, many different adjustable internal parameters. AMD's GPUs have bundled on them totally separate hardware called UVD/VCE which is similar to nVidia's NVDEC/NVENC. Over the years there have been multiple versions of *each* of those, just like Quick Sync has had multiple versions. Each version of each hardware accelerator has varying features and capability on varying flavors of H.264 and later H.265. Even if a particular version of a hardware video accelerator works on a certain flavor of one codec, using it is not automatic. Both application and system layer software must use development frameworks (often provided by the h/w vendor) to harness that support and provide it in the app. You can easily have a case where h/w acceleration support has existed for years and a certain app just doesn't use it. That was the case with Premiere Pro, which for years did not use Intel's Quick Sync. There are some cases now where DaVinci Resolve supports some accelerators for some codec flavors which FCPX does not. There is also a difference between the decode side and encode side. It is common on many platforms that 10-bit HEVC decoding is hardware accelerated for some codec variants but not 10-bit HEVC *encoding*. It is a complex, bewildering, multi-dimensional matrix of hardware versions, software versions, codec versions, sprinkled with bugs, limitations and poor documentation. In general Intel's Quick Sync has been more widely supported because it is not dependent on a specific brand or version of the video acceleration logic bundled on a certain GPU. However Xeon does not have Quick Sync so workstation-class machines must use the accelerator on GPUs or else create their own. That is what Apple did with their iMac Pro and new Mac Pro - they integrated that acceleration logic into their T2 chip.

-

I don't have R5 All-I or IPB material but I've seen similar behavior on certain 10-bit All-Intra codecs using both H.264 and HEVC. This is on macOS, FCPX 10.4.8 and Resolve Studio 16.2.4 on both iMac Pro with 10-core Xeon W-2150B and Vega 64, and 2019 MacBook Pro 16" with 8-core "Coffee Lake" i9-9980HK and Radeon Pro 5500M. Xeon doesn't have Quick Sync so Apple uses custom logic in the T2 chip for hardware acceleration. The MBP 16 apparently uses Quick Sync, although either machine could use AMD's UVD/VCE. In theory All-I should be easy to decode, and for some codecs it is. The 300 mbps 4k 10-bit All-I from a Canon XC15 is very fast and smooth. By contrast, similar material from a Panasonic GH5 or S1 is very sluggish on FCPX but recent versions of Resolve are much faster. In that one case Resolve is better leveraging the available hardware acceleration, whether the T2 or Quick Sync. For 10-bit IPB material from a Fuji X-T3, it is slow on both machines and both NLEs. Likewise the Fuji 10-bit HEVC material is super slow. Test material from that camera using various codecs: https://www.imaging-resource.com/PRODS/fuji-x-t3/fuji-x-t3VIDEO.HTM There is no one version of 10-bit H264 or HEVC. Internally there are many different encoding parameters and only some of those are supported by certain *versions* of hardware acceleration. There are many different versions of Quick Sync, many versions of nVidia's NVDEC/NVENC and many versions of AMD's UVD/VCE - all with varying capabilities. Each of those has a different software development framework. It would be nice if the people writing camera codecs coordinated their work with CPU, GPU and NLE people to ensure it could be edited smoothly. Unfortunately it's like the wild west - a chaotic "zoo" of codec variants, hardware accelerators and NLE capabilities. The general tendency is people buy a camera because they like the features, and then discover its codec cannot be smoothly edited in their favorite NLE (or any NLE). 
Then they complain the NLE vendors let them down. While there's an element of truth to that, the current reality is compressed codecs produce unreliable decode performance due to lack of standardization between the various technical stakeholders. This situation has gotten much worse with 4k and more widespread use of 10-bit and HEVC. Back around 2010 it seemed Adobe's Mercury Playback engine handled DVI and 8-bit 1080p H264 from various cameras fairly well on most Windows hardware platforms. That was before Quick Sync debuted in 2011, so it must have used software decoding. Today we have much more advanced CPUs and hardware accelerators but these developments have not been well coordinated with codec producers -- at the very time when hardware acceleration is essential. If you plan on editing a new camera's internal codec, that really must be tested on your current hardware and NLE -- before committing to the camera. The alternative is to either transcode everything to a mezzanine codec or use ProRes acquisition. However, except for Blackmagic, most cameras cannot do that without an external recorder. I'm not sure there is adequate sensitivity to this by most camera manufacturers. I just asked a senior Sony marketing person about external ProRes from the FX9 and he seemed unclear why I'd want that.

-

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

joema replied to Andrew - EOSHD's topic in Cameras

Those are good points and help us documentary/event people see that perspective. OTOH I'm not sure we have complete info on the camera. Is the thermal issue *only* when rolling or partially when it's powered up? Does it never happen when using an external recorder, or just not as quickly? I did a lot of narrative work last year and (like you) never rolled more than about 90 seconds. I see that point. But my old Sony A7R2 would partially heat up just from being powered on, which gave the heat buildup a "head start" when rolling commenced, making a shutdown happen faster. Is the R5 like that? Also unknown is the cumulative heat buildup based on shooting duty cycle. Even if you never shoot a 30 min. interview, if you do numerous 2-min b-roll shots in hot weather, will the R5 hit the thermal limit? More than the initial thermal time limit, the cooldown times seem troubling, esp. if hot ambient conditions amplify this.

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

joema replied to Andrew - EOSHD's topic in Cameras

The R5 has zebras, but does it have a waveform monitor?

Excellent point, and that would be the other possible pathway to pursue. It avoids the mechanical and form factor issues. Maybe someone knowledgeable about sensor development could comment. Given a high development priority, what are the practical limits on low ISO? Of course ISOs are mainly digital so going far below the native ISO would have negative image impact. In theory you could have triple native ISO, with one super low to satisfy the "pseudo ND" requirement, and the other two native ISOs for normal range. But that would likely require six stops below normal range and a separate chain of analog amplifiers per pixel. You wouldn't want any hitch in image quality as it rises from (say) ISO 4 or 8 up to normal levels. It just sounds difficult and expensive.
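To put rough numbers on the "six stops" figure: each stop is a factor of two, so the arithmetic is just powers and logs of 2. A quick Python sketch follows. The base ISO of 400 is only an assumed example value, not the spec of any particular camera, and the function names are made up for illustration.

```python
import math


def iso_after_stops(iso: float, stops_down: float) -> float:
    """ISO value reached by going a given number of stops below a starting ISO."""
    return iso / 2 ** stops_down


def stops_between(iso_high: float, iso_low: float) -> float:
    """Number of stops separating two ISO values."""
    return math.log2(iso_high / iso_low)


BASE_ISO = 400  # assumed example value for the low end of the normal range

print(iso_after_stops(BASE_ISO, 6))          # six stops below the assumed base
print(round(stops_between(BASE_ISO, 8), 2))  # how far ISO 8 sits below it
print(round(stops_between(BASE_ISO, 4), 2))  # how far ISO 4 sits below it
```

With that assumed base, six stops down lands between ISO 4 and ISO 8, which is the same 1/64 light reduction a 6-stop ND gives.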

-

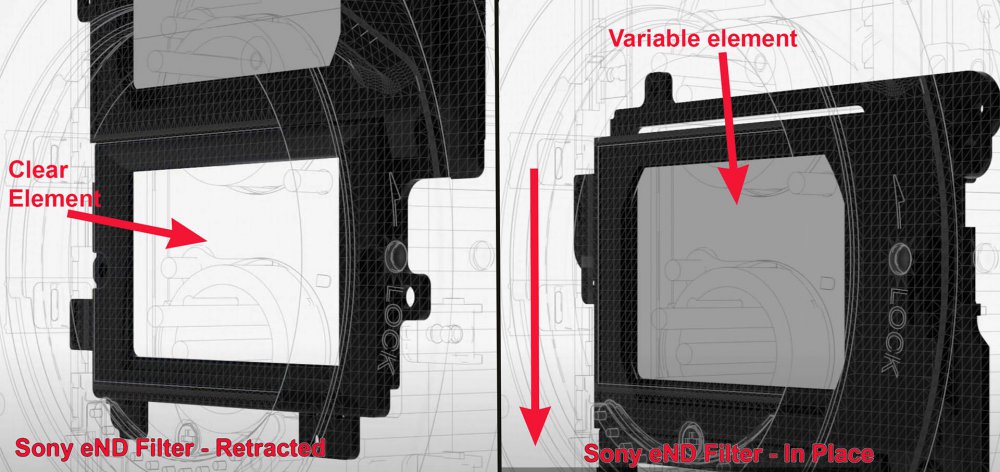

See attached for Sony internal eND mechanism. You are right there are various approaches, just none really good that fit a typical large-sensor, small mirrorless camera. This is a very important area, but it's a significant development and manufacturing cost whose benefit (from customer standpoint) is isolated mostly to upper-end, hard-core video-centric cameras like the S1H or A7SIII. It's not impossible, just really hard. Maybe someone will eventually do it. The Dave Dugdale drawing (which I can't find) was kind of like a reflex mirror rather than vertical/horizontal sliding. It may have required similar volume to a mirror box, which would be costly. For a typical short-flange mirrorless design, I can't see physically where the retracted eND element would fit.

-

I think the Ricoh GR is limited to 2 stops and the XT100 is limited to 3 stops. This is likely because there's no physical space in the camera to move the ND element out of the light path when disabled. This is due to the large sensor size and small camera body. I don't know the specs but a 2-3 stop variable ND can probably get closer to 0 stops attenuation when disabled. A more practical 6 stop variable ND can't go to zero attenuation but will have at least 1 stop on the low end. Nobody wants to give away 1 stop in low light conditions. A boxy camera like the FS5 has a flat front which allows an internal mechanism to vertically slide the variable ND out of the light path when disabled. A typical mirrorless camera doesn't have this space. I agree an internal electronic variable ND would be a highly valuable feature. Sony has the technology. If the A7SIII features, price and video orientation are similar to the S1H, maybe somehow they could do it. Some time ago Dave Dugdale drew a rough cutaway diagram of a theoretical large-sensor mirrorless camera which he thought could house a variable ND with an in-camera mechanism to move it out of the light path. I can't remember what video that was in.

-

On the post-production side, the problem I see is poor or inconsistent NLE performance on the compressed codecs. The 400 mbps HEVC from Fuji is a good example of that -- on a 10-core Vega 64 iMac Pro running FCPX 10.4.8 or Premiere 14.3.0 it is almost impossible to edit. Likewise Sony XAVC-S and XAVC-L, also Panasonic's 10-bit 400 mbps 4:2:2 All-I H264. Resolve Studio 16.2.3 is a bit better on some of those but even it struggles. Of course you can transcode to ProRes but then why not just use ProRes acquisition via Atomos. The problem is there are many different flavors of HEVC and H264, and the currently-available hardware accelerations (Quick Sync, NVENC/NVDEC, UVD/VCE) are in many different versions, each with unique limitations. On the acquisition side it's nice to have a high quality Long GOP or compressed All-I codec - it fits on a little card, data offload rate is very high due to compression, archiving is easy due to smaller file size, etc. But it eventually must be edited and that's the problem.

-

I hope that is not a consumerish focus on something like 8k, or yet another proprietary raw format, at the cost of actual real-world features wanted by videographers. I'd like to see 10-bit or 12-bit ProRes acquisition from day one (if only to a Ninja V), improved IBIS, or simultaneous dual-gain capture like on the C300 Mark III: https://www.newsshooter.com/2020/06/05/canons-dual-gain-output-sensor-explained/ Obviously internal ND would be great but I just can't see mirrorless manufacturers doing that due to cost and space issues.

-

All good points. Maybe the lack of ProRes on Japanese *mirrorless* cameras is a storage issue coupled with lack of design priority on higher-end video features. They have little SD-type cards which cannot hold enough data, esp at the high rate needed. I think you'd need UHS-II which are relatively small and expensive. The BMPCC4k sends data out USB-C so a Samsung T5 can record that at up to 500 MB/sec. The little mirrorless cameras could theoretically do that but (as a class) they just aren't as video-centric. The S1H has USB-C data output, is video-centric, but it doesn't do ProRes encoding. Why not? It also doesn't explain why larger higher-end cameras like the Canon C-series, Sony FS-series and EVA-1 don't have a ProRes option. Maybe it's because their data processing is based on ASICs and they don't have the general-purpose CPU horsepower to encode ProRes. I think Blackmagic cameras all use FPGAs which burn a lot more power but can be field-programmed for almost anything, in fact that's how they added BRAW. But the DJI Inspire 2's X5S camera has a ProRes option, so I can't explain that. For a little mirrorless camera, it's not that big a deal -- those can do external ProRes recording via HDMI to Atomos. Even given in-camera ProRes encoding, they'd likely need a USB-C-connected SSD to store that. Many people would use at least an external 5" monitor, which means you'd have two cable-connected external devices. A Ninja V is both a monitor and an external ProRes recorder - just one device. So in hindsight it seems the only user group benefiting from internal ProRes on a small camera would be those not using an external monitor, and they'd likely need external SSD storage due to the data rate of 4k ProRes.

-

That might be a grey area. The RED patent describes the recorder as either internal to the camera or physically attached. Maybe you could argue the hypothetical future iPhone is not recording compressed raw video and there is no recorder physically inside or attached outside (as described in the patent), rather it's sending data via 5G wireless to somewhere else. Theoretically you could send it to a beige box file server having a 5G card, which is concurrently ingesting many diverse data streams from various clients. Next year you could probably send that unrecorded raw data stream halfway across the planet using SpaceX StarLink satellites. However it seems more likely Apple will not use that approach, but rather attack the "broad patent" issue in a better prepared manner.

-

That seems to be the case. Other issue: despite the hardware-oriented camera/device aspect, it would seem the RED patent is more akin to a software patent. You can patent a highly-specific software algorithmic implementation such as HEVC, but not the broad concept of high efficiency long GOP compression. E.g, the HEVC patent does not preclude Google from developing the functionally-similar AV1 codec. However the RED patent seems to preclude any non-licensed use of the broad, fundamental concept of raw video compression, at least in regard to a camera and recorder. Hypothetically it would cover a future iPhone recording ProRes RAW, even if streaming it for recording via tethered 5G wireless link to a computer. In RF telecommunications there are now "software defined radios" where the entire signal path is implemented in general-purpose software. Similar to that, we are starting to see the term "software-defined camera". It would seem RED would want their patent enforced whether the camera internally used discrete chips or a general-purpose CPU fast enough to execute the entire signal chain and data path. If the RED patent can be interpreted as a software-type patent, this might be affected by recent legal rulings on software patents such as Alice Corp. vs CLS Bank: https://en.wikipedia.org/wiki/Alice_Corp._v._CLS_Bank_International

-

Apparently correct. While the RED patent has sometimes been described as internal only, the patent clearly states: "In some embodiments, the storage device can be mounted on an exterior of the housing...the storage device can be connected to the housing with a flexible cable, thus allowing the storage device to be moved somewhat independently from the housing". The confusion may arise because most implementations of compressed raw recording use external storage devices. Hence it might appear these are evading the patent. But it's possible external recorders merely isolate the license fee and associated hardware to that additional (optional) device so as not to burden the camera itself, when a significant % of purchasers won't use raw recording. Edit/add: Blackmagic RAW avoids this since their cameras partially debayer the data before internal recording, allegedly to facilitate downstream NLE performance. BRAW is now supported externally on a few non-Blackmagic cameras, but presumably licensing is handled by Atomos or whoever makes the recorder.

-

The XT3 can use H264 or H265 video codecs, plus it can do H264 "All Intra" (IOW no interframe compression) which might be easier to edit, but the bitrate is higher. The key for all those except maybe All Intra is you need hardware accelerated decode/encode, plus editing software that supports that. The most common and widely-adopted version is Intel's Quick Sync. AMD CPUs do not have that. Premiere Pro started supporting Quick Sync relatively recently, so if you have an updated subscription that should help. Normal GPU acceleration doesn't help for this due to the sequential nature of the compression algorithm. It cannot be meaningfully parallelized to harness hundreds of lightweight GPU threads. In theory both nVidia and AMD GPUs have separate fixed-function video acceleration hardware similar to Quick Sync which is bundled on the same die but functionally totally separate. However each has had many versions and require their own software frameworks for the developer to harness those. For these reasons Quick Sync is much more widely used. The i7-2600 (Sandy Bridge) has Quick Sync but that was the first version and I'm not sure how well it worked. Starting with Kaby Lake it was greatly improved from a performance standpoint. In general, editing a 4k H264 or H265 acquisition codec is very CPU-bound due to the compute-intensive decode/encode operations. The I/O rate is not that high, e.g, 200 mbps is only 25 megabytes per sec. As previously stated you can transcode to proxies but that is a separate (possibly time consuming) step.
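For anyone who wants to check the I/O arithmetic at other bit rates, a small Python sketch is below. The function names are made up for illustration, and it uses decimal units (1 megabyte = 1,000,000 bytes, 1 GB = 1000 MB), which is the convention the 25 megabytes per sec figure above implies.

```python
def megabytes_per_sec(mbps: float) -> float:
    """Convert a video bit rate in megabits/sec to megabytes/sec (8 bits per byte)."""
    return mbps / 8


def gigabytes_per_hour(mbps: float) -> float:
    """Storage consumed by one hour of footage at a given bit rate, in decimal GB."""
    return megabytes_per_sec(mbps) * 3600 / 1000


for rate in (100, 200, 400):
    print(f"{rate} mbps = {megabytes_per_sec(rate):.1f} MB/sec, "
          f"{gigabytes_per_hour(rate):.0f} GB per hour")
```

Even the 400 mbps case is only 50 MB/sec, so a single spinning drive can usually keep up; the bottleneck is decode, not I/O.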

-

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

joema replied to Andrew - EOSHD's topic in Cameras

Thanks for posting that. It appears that file is UHD 4k/25 10-bit 4:2:2 encoded by Resolve using Avid's DNxHR HQX codec in a QuickTime container. I see the smearing effect on movement. Was this also in the original camera file? The filename states 180 deg. shutter. Can you switch the camera to another display mode and verify it is 1/50th?

Panasonic S1H review / hands-on - a true 6K full frame cinema camera

joema replied to Andrew - EOSHD's topic in Cameras

I have shot lots of field documentary material and I basically agree. We use Ursas, rigged Sony Alpha, DVX200, rigged BMPCC4K, etc. I am disappointed the video-centric S1H does not allow punch-in to check focus while recording. The BMPCC4K and even my old A7R2 did that. An external EVF or monitor/recorder can provide that on the S1H, but if the goal is retaining a highly functional minimal configuration, lack of focus punch-in while recording is unfortunate. Panasonic's Matt Frazer said this was a limitation of their current imaging pipeline and would likely not be fixable via firmware. The Blackmagic battery grip allows the BMPCC6K to run for 2 hrs. If Blackmagic produced a BMPCC6K "Pro" version which had IBIS, a brighter tilting screen, waveform, and maybe 4k 12-bit ProRes or 6k ProRes priced at $4k, that would be compelling.

I worked on a collaborative team editing a large documentary consisting of 8,500 4k H264 clips, 220 camera hours, and 20 terabytes. It included about 120 multi-camera interviews. The final product was 22 min. In this case we used FCPX which has extensive database features such as range-based (vs. clip-based) keywording and rating. Before touching a timeline, there was a heavy organizational phase where a consistent keyword dictionary and rating criteria were devised and proxy-only media distributed among several geographically distributed assistant editors. All multicam material and content with external audio was first synchronized. FCPX was used to apply range-based keywords and ratings. The ratings included rejecting all unusable or low-quality material which FCPX thereafter suppresses from display. We used XML files including the 3rd-party utility MergeX to interchange library metadata for the assigned media: http://www.merge.software Before timeline editing started, by these methods the material was culled down to a more manageable size with all content organized by a consistent keyword system. The material was shot at 12 different locations over two years so it was crucial to thoroughly organize the content before starting the timeline editing phase. Once the timeline phase began, a preliminary brief demo version was produced to evaluate overall concept and feel. This worked out well and the final version was a more fleshed out version of the demo version. It is true that in documentary, the true story is often discovered during the editorial process. However during preproduction planning there should be some idea of possible story directions, otherwise you can't shoot for proper coverage, and the production phase is inefficient. Before using FCPX I edited large documentaries using Premiere Pro CS6, and used an Excel spreadsheet to keep track of clips and metadata. Editor Walter Murch has described using a FileMaker Pro database for this purpose. 
There are 3rd party media asset managers such as CatDV: http://www.squarebox.com/products/desktop/ and KeyFlow Pro: http://www.keyflowpro.com Kyno is a simpler screening and media management app which you could use as a front end, esp for NLEs that don't have good built-in organizing features: https://lesspain.software/kyno/ However it is not always necessary to use spreadsheets, databases or other tools. In the above-mentioned video about "Process of a Pro Editor", he just uses Avid's bin system and a bunch of small timelines. That was an excellent video, thanks to BTM_Pix for posting that.

-

In the mirrorless ILC form factor at APS-C size and above, it is a difficult technical and price problem. Technical: No ND can go to zero attenuation while in place. To avoid losing a stop in low light, the ND must mechanically retract, slide or rotate out of the optical path. This is easier with small sensors since the optical path is smaller, so the mechanism is smaller. A box-shaped camcorder has space for a large, even APS-C-size ND to slide in and out of the optical path. On the FS7 it slides vertically: https://photos.smugmug.com/photos/i-k7Zrm9Z/0/135323b7/L/i-k7Zrm9Z-L.jpg There is no place for such machinery in the typical mirrorless ILC camera. That said, in theory if a DSLR was modified for mirrorless operation the space occupied by the former pentaprism might contain a variable ND mechanism. I think Dave Dugdale did a video speculating on this. Economic: hybrid cameras are intended for both still and video use, and typically tilted toward stills. A high-quality, large-diameter internal variable ND would be expensive, yet mostly the video users would benefit. In theory the mfg could make two different versions, one with ND and one without, but that would reduce economy of scale in manufacturing. Yet another option is an OEM designed variable ND "throttle" adapter. It would eliminate the ND-per-lens issue and avoid problems with the lens hood fitting over the screw-in ND filter. But this still has the issue of requiring removal every time you switch to high ISO shooting.

-

RED and Foxconn to create range of affordable 8K prosumer cinema cameras

joema replied to Andrew - EOSHD's topic in Cameras

Given a finite development budget, a lower-end 8K camera will have to trade off other features. Compare this 8K "affordable" prosumer camera to, say, a revised 4K Sony FS5 II, and assume they are about the same price for a "ready to use" configuration. I think it's reasonable to expect the FS5 II may have a greatly improved EVF and LCD, improved high ISO, sensor-based stabilization, internal 4k 10-bit 4:2:2 XAVC-L codec, maybe 4k internal at 60 fps (or above), maybe even optional internal ProRes recording. It already has electronic variable ND. Let's say the 8K RED/Foxconn camera does nice-looking 8K and has good dynamic range but isn't great at low light, has no sensor-based stabilization, and doesn't have built-in variable ND. These are just guesses, but I think they are legitimate possibilities. As a documentary filmmaker, I know which one I'd rather use. What about needing 8K for "professional" work? In 2015 the Oscar for best documentary went to CITIZENFOUR by Laura Poitras. It was shot in 1080p on a Sony FS100. -

As a documentary editor with 200 terabytes of archival 4k H264 material, I've extensively tested the 12-core D700 nMP vs a top-spec 2017 iMac 27. In FCPX the iMac is about 2x faster at importing and creating ProRes proxies and 2x faster at exporting to 4k or 1080p H264. There is a major and generally unreported performance increase between the 2015 and 2017 iMac 27 on H264 material in FCPX. I don't know why the 2017 model is so much faster; maybe it's the Kaby Lake Quick Sync. This is mostly 4k 8-bit 4:2:0 material; I haven't tested 10-bit 4:2:2 or HEVC. Obviously performance in Premiere or Resolve may be different. On ProRes it's a different story. If you do ProRes acquisition and have an end-to-end ProRes workflow, the nMP is pretty fast, at least from my tests. However if you acquire H264 then transcode to ProRes for editing, the 12-core D700 nMP transcodes only 1/2 as fast as the 2017 iMac (using FCPX). That said the nMP is very quiet, whereas the iMac fans spin up under sustained load. The nMP has lots of ports and multiple Thunderbolt 2 controllers feeding those ports. However I have multiple 32TB Thunderbolt 2 arrays simultaneously on my 2017 iMac and they work OK. Regarding acoustic noise, these spinning Thunderbolt RAID arrays also make noise, so the iMac fan noise under load is just one more thing. But I can understand people who don't like the noise. What about compute-intensive plugins such as Neat Video, Imagenomic Portraiture and Digital Anarchy Flicker Free? I tested all those and the nMP wasn't much faster than the 2017 iMac. Based on this, if you're using FCPX I'd definitely recommend the 2017 iMac over even a good deal 12-core nMP -- unless you have an all-ProRes workflow. If you are using Resolve and Premiere those are each unique workloads, even when processing the same material. They each must be evaluated separately, and performance results in one NLE don't necessarily apply to another. 
I've also done preliminary FCPX performance testing on both 8-core and 10-core iMac Pros. The iMac Pro is much faster than the nMP, especially on H264, because FCPX is apparently calling the UVD/VCE transcoding hardware on the Vega GPU. However even the 10-core Vega 64 iMac Pro isn't vastly faster on H264 than the 2017 top-spec iMac. I'm still testing it, but on complex real-world H264 timelines with lots of edits and many effects, the iMac Pro rendering and encoding performance to H264 is only about 15-20% faster than the 2017 iMac. That's not much improvement for an $8,000 computer. On some specific effects such as sharpen and aged film, the iMac Pro is about 2x faster whether the codec is ProRes or H264. The iMac Pro remains very quiet under heavy load, more like the nMP. In your situation I'd be tempted to either get a top-spec 2017 iMac or that $4000 deal on the base-model iMac Pro or wait for the modular Mac Pro. However Resolve and Premiere are both cross-platform so you also have the option of going Windows which gives you many hardware choices -- a blessing and a curse.

-

That is a perceptive comment. When we first heard of the iMac Pro and upcoming "modular" Mac Pro, a key question was how those Xeon-powered machines would handle the world's most common codec, H264. The previous "new" Mac Pro doesn't handle it well, and the 2017 i7 iMac 27 is about 2x faster at ingesting H264 and transcoding to ProRes proxy or exporting to H264 (using FCPX). This includes most H264 variants such as Sony's XAVC-S. For people on the high end, it appears the iMac Pro handles RED RAW very well (at least using FCPX). For people with all-ProRes acquisition it seems pretty fast. For the currently-specialized case of H265/HEVC it seems fast. However for the common case of H264, the iMac Pro doesn't seem faster than the 2017 i7 iMac and in fact it may be slower, at least using Apple's own FCPX. Before the iMac Pro's release there was speculation it might use a customized Xeon with Quick Sync or maybe Apple would write to AMD's UVD and VCE transcoding hardware. This now appears not to be the case. If further testing corroborates the iMac Pro is weak on H264, it might well prove to be the codec equivalent of the MacBook Pro's USB-C design: doesn't work well with current technology but years in the future it might do better -- assuming of course customers have not abandoned it in the meantime.