KnightsFan

Members

Posts: 1,352

Everything posted by KnightsFan

-

Is Panasonic rethinking high-end full frame mirrorless line-up?

KnightsFan replied to Andrew Reid's topic in Cameras

I wonder if the main issue that Panasonic faces now is that they simply don't have access to a new sensor that exceeds the one they've been using, at an affordable price. There's only so much you can do if your sensor supplier doesn't have a new model. Remember that the current 24 MP sensor is used by many companies, and is really good. There are very few features that would get me to upgrade from an S5 that don't involve a new sensor (such as 32 bit audio or a decent app)--and they are so niche to small-crew videographers that the market must be minuscule.

The market share numbers are really a shame. Panasonic killed it with the GH and S series feature sets for a long time. I wonder what percent of sales are from people who do basic research like available lenses, upgrade paths, etc. As @Phil A said, lack of lenses is a big downside for L mount for enthusiasts--but what percent of buyers ever get more than the kit lens? How about more than 2 lenses? What percent think, "L mount has no lenses" but never buy more than 2 lenses anyway? I really have no idea, I just wonder about the true impact.

There is some hope on that front, though. Sigma's lenses are wildly good for their prices. And with Samyang joining in, there might be more low end lenses in the next few years.

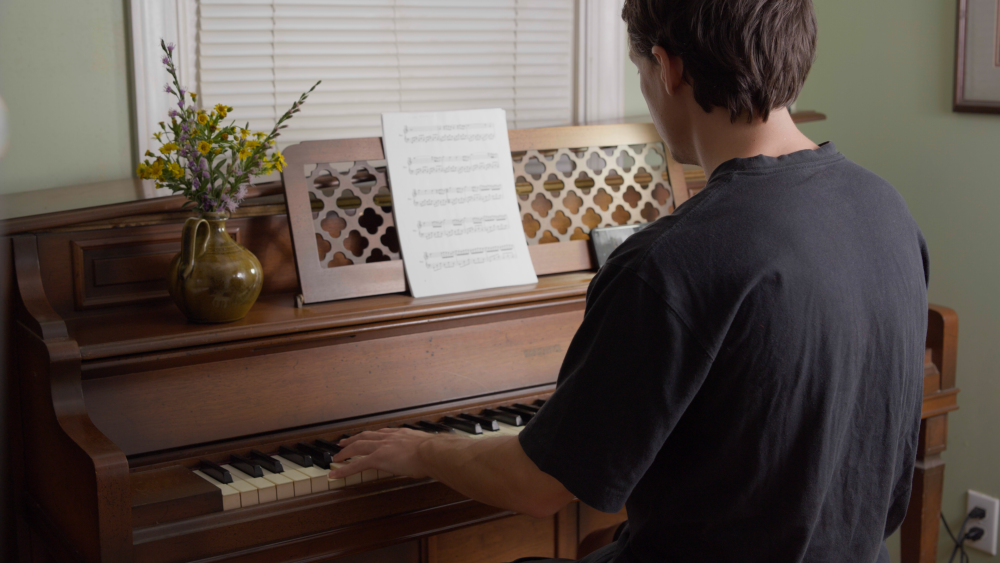

On the S5, the difference between 8 and 10 bit is very obvious. I shot a scene twice, once with 8 bit 100 Mbps (93 Mbps actual), and once with 10 bit 75 Mbps (66 Mbps actual). Both clips have a color space transformation to Rec709, but no other adjustments. The amount of color noise and weird color in 8 bit is very high, and is almost nonexistent in 10 bit. Viewed at 100% scale in motion under my normal viewing conditions, it's apparent that "something is off," particularly in skin tones (I did tests facing the camera, too, which I'm not posting). To me, this is part of the "thick" color that people talk about: pure tones without posterized splotches of color noise, which get worse with saturation. Now clearly this has something to do with the encoder, since the output images that I am posting here are both 8 bit PNGs. So I'm not going to make universal statements, but I will say that on every camera that I have ever used, I get nearly the same result. You can see the difference everywhere in the image, but particularly notice the evenness of the skin on my arm, the black on the t-shirt, the plain green wall, and the deepness of the brown on the wood on the darker parts of the piano. Is this enough of a difference for average audience members to notice, even subconsciously? Probably not. However, I do notice it consciously, and considering the 10 bit file looks better AND is 25% smaller, I would never choose 8 bit on this camera.
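For anyone who wants to check the size claim, here is a rough sketch of the arithmetic in Python. It assumes both clips are the same length, so file size scales directly with bitrate; the helper name `percent_smaller` is made up for illustration and is not from any tool.

```python
def percent_smaller(reference_mbps: float, candidate_mbps: float) -> float:
    """Return how much smaller the candidate stream is, as a percentage of the reference.

    Assumes equal clip duration, so size is proportional to bitrate.
    """
    return (1 - candidate_mbps / reference_mbps) * 100


# Nominal (menu) bitrates quoted in the post: 8 bit 100 Mbps vs 10 bit 75 Mbps.
nominal = percent_smaller(100, 75)

# Measured bitrates quoted in the post: 93 Mbps vs 66 Mbps.
measured = percent_smaller(93, 66)

print(f"nominal:  {nominal:.1f}% smaller")
print(f"measured: {measured:.1f}% smaller")
```

Going by the nominal settings, the saving is 25%; going by the measured bitrates, it comes out slightly larger, at roughly 29%.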

-

Oh, this is a good chance to test some lenses I just got. I haven't done many format comparisons on my S5 yet. As you show in your blog post, there's a decent difference between 10 and 8 bit at equivalent total bitrate in the form of color splotchiness/posterization. To me it's pretty visible under normal viewing conditions (which, in my case, is a fairly nice 4k PC screen with controlled ambient lighting). I would consider your example shots of the sky to be a significant difference in overall effect. That said, I wouldn't be upset using 8 bit, but since there's no downside to 10 bit, I can't imagine picking 8 bit instead, no matter how small the difference. Something to keep in mind is that very few of us ever see anything as actual 10 bit. On Windows, even Resolve only outputs 10 bit with a Blackmagic card hooked up to a dedicated monitor--so even if your screen is 10 bit, you need to do some homework to figure out which software will output that kind of signal. And obviously most image formats are 8 bit, so as soon as you take a screenshot and put it on the web as a comparison, it's all 8 bit. There is of course HEIF in 10 bit, and then there are flavors of PNG and TIFF in 16 bit.
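As a rough illustration of why a smooth area like a sky is where posterization shows up first, here is a small Python sketch that counts how many distinct code values a narrow tonal gradient gets at each bit depth. It only models quantization, and it assumes a linear full-range mapping. It ignores the encoder, chroma subsampling, and log curves entirely, so it is not a model of what the S5 actually does. The function name `distinct_levels` and the 0.60 to 0.65 range are arbitrary choices for the example.

```python
def distinct_levels(bits: int, low: float, high: float, samples: int = 4096) -> int:
    """Count the distinct integer code values used by a gradient from low to high.

    low and high are normalized brightness values in [0, 1]; the mapping to
    code values is a simple linear full-range one (an assumption).
    """
    max_code = 2**bits - 1
    codes = {
        round((low + (high - low) * i / (samples - 1)) * max_code)
        for i in range(samples)
    }
    return len(codes)


# A gradient covering 5% of the tonal range, e.g. a patch of clear sky.
levels_8 = distinct_levels(8, 0.60, 0.65)
levels_10 = distinct_levels(10, 0.60, 0.65)

print(f"8 bit:  {levels_8} distinct levels")
print(f"10 bit: {levels_10} distinct levels")
```

The 10 bit gradient has roughly four times as many steps to work with, which gives the encoder and the grade more room before banding becomes visible.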

-

Is Panasonic rethinking high-end full frame mirrorless line-up?

KnightsFan replied to Andrew Reid's topic in Cameras

...aren't all camera rumors written by children?

Agreed--if I had the production budget to make the backgrounds beautiful, I would never have anything out of focus! (Small exaggeration) Some of my favorite scenes and shots, for example:

Once Upon a Time in the West https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fbcb910b2-4302-4b6b-b8e1-c52220adeaa4_1200x500.jpeg

Barry Lyndon https://sbiff.org/wp-content/uploads/barry-lyndon-1080x675.jpg

It's very genre dependent, too. I love sci fi and fantasy, both of which have spectacle and world building that is often best accomplished with very wide establishing shots.

Lord of the Rings - The wide shots of Helm's Deep not only serve as beautiful spectacle in their own right, but also display the layout of the fortress in a way that makes the ensuing battle comprehensible. There's this one I linked, but if I recall there are also a few beautiful crane shots right over the keep leading into conversations about the defenses. https://static.wikia.nocookie.net/lotr/images/d/d3/Helm's_Deep_-_TtT.png/revision/latest/scale-to-width-down/1000?cb=20190705232343

Star Wars - Our first glimpse of Coruscant (not sure if this screenshot is the first time we see it) communicates so much about the planet. https://lumiere-a.akamaihd.net/v1/images/Coruscant-Gallery-1_d654d3d0.jpeg?region=100%2C91%2C1000%2C563

On the other hand, personal dramas sometimes don't need as much pure visual setting, or the setting is obvious enough that it's not needed. If our hero lives in an apartment, do we need to see the outside first, or is it very well understood what type of space it is without it?

-

I don't see how the external recorder market can be sustained. I used a Ninja Star around 2015 with the 5D3 and whatever A7 version we were on, and even back then with the clear visual benefit of ProRes, the ridiculousness of using an HDMI cable and bolting a box on the camera was apparent. At that time, anyone could tell that external recorders were a short term investment--never more than one or two generations ahead of internal recording specs. If I worked at Atomos, I'd push leadership to build some new products. Edit: I suppose Atomos does make decent monitors which will continue to be a market. However, I wouldn't call them the top of the tech game, even for that, compared to a lot of the monitors with built-in wireless these days.

-

Interesting. Well, I guess depending when I need that 2nd boom setup and the release dates, both will be in the running. One thing that I do like about Tascam which I almost wrote in my original post, is that their app supports connecting to multiple devices, so you can rec/stop on a bunch of units with 1 phone. This is nice if you use any kind of plant mic or bodypack on a talent. I also think that, just judging from pics, the body design and button layout here is better than the F3. So even if Zoom adds timecode and wireless monitoring, Tascam has a decent advantage imo. Either way, competition is good for consumers.

-

Tascam did a great job with the feature set on this. I'll definitely buy one if reviewers don't find anything silly, and the sound quality is comparable to the F3. Timecode is great, plus built-in wireless monitoring eliminates one more device to mount and power. If it weren't for the FR-AV2, I would have considered a Sennheiser EW-DP SKP, but one of these is way cheaper than a transmitter/receiver setup. My goal is to have two boom mics, where each has a recorder directly on the boom pole, and the op uses wireless headphones (one will continue to use my F3). What I have found on amateur sets is that:

A. boom ops are not skillful enough to consistently swap back and forth during dialog. Training an extra person on the day of the shoot and having independent booms does actually sound better than an intermediate-skill person booming 2 people.

B. lavs don't work perfectly--we simply don't have the skill or resources to hide them securely and properly.

C. it's impossible to overstate the efficiency value of each boom mic being completely wireless. Letting a boom op put their mic down and quickly help with something else speeds us up. Wireless headphones are an important part of that!

D. gain staging--wireless in particular--is difficult for beginners to nail every time. Wired 32 bit is easy to nail every time.

I know all the audio pros are going to squirm reading that, lol. But for my triangle of price/required skill/output quality, this might be it.

-

Even worse: a manager used ChatGPT instead of hiring an intern to do it.

-

Metabones Canon EF to Sony E CINE eND Smart Adapter

KnightsFan replied to BTM_Pix's topic in Cameras

This is great. I'm surprised it took this long for anyone to make a mount adapter with integrated swinging filter, to be honest. Lucky E mount users, haha--too bad there's no version for the Pyxis (yet?)!

Duh... Forgot about the F6 Pro!

-

I wasn't even talking about current, nor that they necessarily ship together in the same box. I can't think of any 5+ inch monitors that also have camera control and power over a single cable outside of maybe some studio broadcast equipment.

-

Well, whether it's rebranding or 1st party or partnership, the fact is that various RED packages used to include larger RED branded monitors that integrated by design into their DSMC lineup. For all I know, Blackmagic's is a modified version of something else. All I meant is that Blackmagic has a nice history of using big screens, all the way back to the old BMCC 2.5k. It's nice that they've now moved to something detachable that still has single cable, 1st party support. Sony FX or Canon C models usually have smaller monitors. Personally I don't want any screen built onto the camera. It's not a huge deal, I just can't see myself ever using it. When I shot with Z Cam I never used the screen, always a monitor or phone. It's not that I'd avoid the Pyxis because of it, but I'd prefer it weren't there.

-

These cameras seem more marketed and designed for sports or industrial uses, than for cinema or content creation. They are obviously the same design as the previous box cameras, but Panasonic went so far as to rename them to market them even farther away from the GH and S lines. That's my take anyway.

-

Looks great! Lots of extremely nice details. I love that Blackmagic makes large monitors. Unless I'm forgetting someone, RED is the only other company that makes first party monitors (integrated OR detachable) that are 5" or more? Locking USB C cables are great, and I would love to see them get standardized as the single power/signal/control cable on all cameras. The mounting looks well designed as well. It does highlight, though, that the fixed screen on the body is sort of redundant. It's so hard to imagine using it often at all. If I was using a Pyxis, I'd rather have mounting on the left side for a handle or whatever, instead of a big piece of scratchable glass which will inevitably remain off while I use the proper monitor they just announced.

-

RED cuts prices of Komodo and Komodo-X by up to 30%

KnightsFan replied to eatstoomuchjam's topic in Cameras

I wonder if they ran the numbers and figured it would be too expensive to take existing Komodo inventories and change them to Z mount without redesigning a brand new camera?

@Ninpo33 What exactly is your point? Is it that the DJI Ronin 4D is a good camera for me, individually (responding to my 2nd post here)? Or are you saying that DJI should market and sell a hybrid primarily on image quality instead of their cool focus and wireless tech (responding to my first post)? Because all I'm saying is that I consider as many options as I can with the info I have, and that the Ronin 4D is not among the top viable choices that I have for the projects that I am writing. My group and I discuss every option, from renting an Alexa 35, to buying a 4D, to getting a ton of mirrorless cameras and saving time by shooting multicam. Hybrids are on the table, but generally lower than a Ronin 4D in terms of pros/cons. I'm only saying what makes sense for my wallet and projects, which I don't think is being unnecessarily skeptical, nor am I disrespecting people who use DJI cameras. Outside of my personal purchasing, I don't believe that the wider film community currently flocks to DJI as a leader in "color science" in the numbers that rally to "Canon color" or "Nikon color." So my opinion is that a DJI-brand photo camera will gain more traction if they include and advertise innovative accessories, rather than their image quality. Specifically regarding the video that you shared, I do find it a bit monotone and flat colored for my taste, which can obviously be grading. But like I said, the nuance is not whether the image is "good," it's "how good" vs. the competition vs. ease of use (in many scenarios) vs. cost vs. upgradeability. My comparisons are all over the place because I try to consider every angle, and I don't have the money to do all of them! It's like deciding whether to hire a CGI artist, or a craftsman to build a miniature, or plane tickets to shoot on location. Totally different options with almost incomparable pros and cons, but ultimately they have to be compared.

-

I thought it was obvious reasoning, I was clarifying my stance on the image as being "meh" compared to cameras half that cost. I'm not saying it looks bad, just that you really have to need the compact gimbal for it to be worth buying over cheaper options with better images. I'm not talking about hybrids. Considering used prices for all models referenced, C70's, Komodos, and 4.6K G2's are considerably cheaper than 4D 6K's. C300 MkIII's are slightly cheaper. Z Cam F6 (which imo is a similar kind of good-but-not-great-image) is a fraction of the cost, and that one in particular is high value since it includes wireless monitoring and control. I do disagree that after rigging it "won't be as modular," since I think it's more straightforward to rig a Komodo on a gimbal than it is to rig a Ronin 4D on a traditional tripod setup with heavy lenses and matte box. You can also go fairly extreme and get a I totally agree with this statement. It's just not the right bargain for me.

-

RED cuts prices of Komodo and Komodo-X by up to 30%

KnightsFan replied to eatstoomuchjam's topic in Cameras

I had an E2 which I traded for an M4 when the flagship came out. Unfortunately my film group fell apart shortly after, so I didn't get to use it much. I always wanted an F6 but I just haven't shot much the last few years. I'm getting back into it this year though! Absolutely, and I've watched a couple sell used for under $1800. Wild value. My next few projects are very action and VFX heavy. A global shutter would make our virtual sets a little easier. Maybe if used Komodo prices drop a bit more I'll get that. But I'm in no hurry, no big projects are imminent yet unfortunately.

That's what I mean. The 6k is a $6800 camera that to my eyes doesn't offer an image above cameras half its price. And I fully understand that it's valid to look at it like a $2k camera with a $4.8k lidar, gimbal, and wireless kit, and that it's a great deal in that sense. For some people, this is the best camera for their job. It will absolutely make good images, same as everything that uses that sensor and has decent codecs and log recording. In my opinion, the footage I have seen out of several of Canon and Blackmagic's cameras looks better or is easier to work with than DJI's. So if I need stabilized shots, I'd rather spend less on the camera, put extra cash into rigging, and retain my non-vendor-locked upgrade path than commit fully to DJI and hope that someday they have a different camera (for example, one with faster readout). Downside is of course that my rigs are less integrated and convenient, but that's a tradeoff I'll take. Now if DJI's sensor and image pipeline was closer to the top of my list already, then the pros/cons would change. That is of course a personal weighing regarding my budget and shoots. Others will land in a different place.

-

RED cuts prices of Komodo and Komodo-X by up to 30%

KnightsFan replied to eatstoomuchjam's topic in Cameras

For sure, and I definitely agree about applying that to every company. I never buy new products anyway, so they're pretty visible before I shop haha. With Z Cam I'm not worried about dishonest vaporware, so much as the company going under or being bought up and discarded. I really hope they make the M5G and that it sells well, I do like that company a lot. A used F6 is still high on my radar, for sheer price/performance value. I hope they continue the Flagship style body and don't go all in on the Pro.

RED cuts prices of Komodo and Komodo-X by up to 30%

KnightsFan replied to eatstoomuchjam's topic in Cameras

Interesting. I don't check the FB group anymore. Considering it was announced a year ago, and then Z Cam quietly stopped talking about it, and made no update or announcement, I'm definitely in the "believe it when I see it" category.

RED cuts prices of Komodo and Komodo-X by up to 30%

KnightsFan replied to eatstoomuchjam's topic in Cameras

Not surprising. It's hard to sell cameras at long-term-investment prices when no one knows what the new owners will do with that product and there are so many other brands on the horizon. As much as I would love a global shutter box camera, I don't know that I would spend half those prices on RED cameras, unless Nikon releases a product or two that proves their commitment to those lines. Also, I made a mental note early this year that 2024 would be a uniquely poor time to buy a camera. At that time we knew Nikon had bought RED, and there were rumors of a new Canon C-camera. Here we are a few months later, and on top of both of those, Blackmagic announced a truckload of cameras, Z Cam has presumably cancelled the M5G, and rumors are swirling about successors to the FX6 and S1H. It's quite the year for low-to-mid cinema cams.

I meant the visual image quality coming out of the camera, specifically the Ronin 4D's. I totally understand that you're paying for the unique gimbal, focus, and monitoring solution, but for $6800 I'm not taken with the image quality. Without having done any research, it seems like the 6K uses roughly the same sensor found in all the modern 24MP consumer cameras. So what I meant was that if that's the level of their image in a high end camera, they might find it difficult to enter the hybrid market unless they're (once again) leveraging unique peripherals. Which is not a bad thing. I'm just musing out loud that if they make a generic Panasonic-S5-clone with DJI branding, I don't know that I see the point. For comparison, I think the C70/C300III/C500II, anything recent from Blackmagic, and the Komodo have good images. I'm not unfairly comparing to really expensive images.

-

I started researching the 4D camera a little closer and I have to say it really hits a lot of the points I want in a camera. I can't see how they are possibly breaking even on this stuff, but I really hope they continue to make more of those. I'd have to assume that if they enter the hybrid market, their angle will be these cool accessories, like wireless video and Lidar autofocus. Because the one thing they don't really have is a great image. I mean there's nothing wrong with their image, there's just nothing special about it.